Cloud-native development has emerged as a transformative approach to software development, promising enhanced agility and efficiency. This methodology, centered around cloud computing principles, allows organizations to build and run applications that are inherently designed to take full advantage of the cloud’s capabilities. From accelerated deployment cycles to improved scalability and cost optimization, cloud-native practices offer a compelling value proposition for businesses seeking to stay competitive in today’s fast-paced digital landscape.

This discussion delves into the multifaceted benefits of cloud-native development for agility. We will explore how these practices empower developers, streamline operations, and ultimately drive business success. The objective is to provide a comprehensive understanding of how cloud-native approaches can help organizations achieve greater flexibility, faster time to market, and a more responsive approach to evolving customer needs.

Enhanced Speed of Deployment and Release Cycles

Cloud-native development fundamentally alters how software is deployed and updated. By embracing principles like microservices, containerization, and automation, organizations can significantly accelerate their deployment and release cycles, leading to faster time-to-market, improved responsiveness to user needs, and increased overall agility. This section explores how cloud-native architectures achieve this acceleration.

Impact on Feature Deployment Time

Cloud-native architectures dramatically reduce the time it takes to deploy new features. This acceleration stems from several key characteristics.

- Microservices Architecture: Cloud-native applications are often built using a microservices architecture, where an application is broken down into small, independent services. Each service can be deployed, updated, and scaled independently of others. This means that changes to one feature only require deploying the relevant microservice, rather than the entire application.

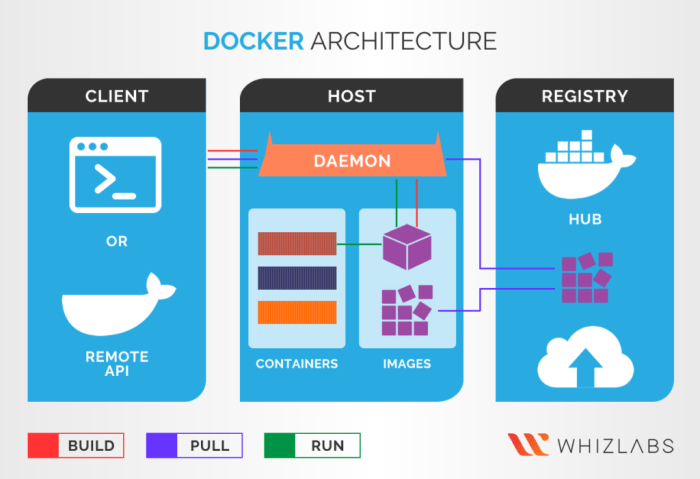

- Containerization: Technologies like Docker package each microservice and its dependencies into a container. Containers provide a consistent environment across different infrastructure platforms, making deployment more predictable and repeatable. This eliminates the “it works on my machine” problem and simplifies the deployment process.

- Orchestration Platforms: Platforms like Kubernetes automate the deployment, scaling, and management of containerized applications. Kubernetes can automatically handle tasks such as service discovery, load balancing, and rolling updates, further streamlining the deployment process.
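The rolling updates mentioned above can be pictured as replacing instances in small batches so that most of the fleet keeps serving traffic. The following is a minimal sketch of that idea, not Kubernetes' actual algorithm; the function `rolling_update` and its `max_unavailable` parameter are hypothetical names chosen for illustration.

```python
from typing import List


def rolling_update(
    instances: List[str], new_version: str, max_unavailable: int = 1
) -> List[List[str]]:
    """Replace instances batch by batch; return the fleet state after each batch."""
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    fleet = list(instances)
    history = []
    for start in range(0, len(fleet), max_unavailable):
        # Only this slice is taken out of service; the rest keeps serving traffic.
        for i in range(start, min(start + max_unavailable, len(fleet))):
            fleet[i] = new_version
        history.append(list(fleet))
    return history


steps = rolling_update(["v1", "v1", "v1"], "v2", max_unavailable=1)
for step in steps:
    print(step)
```

A larger `max_unavailable` finishes the rollout in fewer batches at the cost of less serving capacity during each step.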

Acceleration of Software Update Releases

Cloud-native practices accelerate the release of software updates through several mechanisms.

- Continuous Integration and Continuous Delivery (CI/CD): CI/CD pipelines automate the build, test, and deployment processes. This allows developers to frequently integrate code changes and quickly release updates. With automated testing, developers can catch bugs early in the development cycle, reducing the risk of deployment failures.

- Blue/Green Deployments: This deployment strategy involves running two identical environments: one live (blue) and one staging (green). Updates are deployed to the green environment, tested, and then traffic is switched from the blue to the green environment. This minimizes downtime and allows for quick rollbacks if issues arise.

- Canary Releases: In a canary release, a small subset of users is directed to the new version of an application. This allows developers to test the update in a production environment with minimal risk. If the canary release is successful, the update is rolled out to the remaining users.
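One common way to pick the "small subset of users" for a canary release is to hash a stable user identifier into a bucket, so the same user always sees the same version. The sketch below assumes that approach; the function names and the version labels `v1-stable` and `v2-canary` are hypothetical, and real routing would usually live in a load balancer or service mesh rather than application code.

```python
import hashlib


def in_canary(user_id: str, canary_percent: int) -> bool:
    """Return True if this user should be routed to the new version."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


def route(user_id: str, canary_percent: int) -> str:
    """Pick a version label for the request (labels are illustrative)."""
    return "v2-canary" if in_canary(user_id, canary_percent) else "v1-stable"


print(route("user-42", 10))
```

Because the bucket depends only on the user ID, raising `canary_percent` widens the canary group without moving anyone who was already in it back to the old version.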

Comparison of Deployment Methods

Traditional deployment methods often involve manual processes, long lead times, and significant downtime. Cloud-native deployments, on the other hand, are characterized by automation, speed, and minimal disruption.

| Feature | Traditional Deployment | Cloud-Native Deployment |
|---|---|---|
| Deployment Frequency | Infrequent (e.g., monthly, quarterly) | Frequent (e.g., daily, hourly) |
| Deployment Time | Hours or days | Minutes or seconds |
| Downtime | Significant | Minimal or zero |
| Rollback Process | Complex and time-consuming | Simple and automated |
| Automation | Limited | High |

Role of Automation in Speeding Up Deployment

Automation is the cornerstone of accelerating deployment in cloud-native environments. CI/CD pipelines are the primary tool for achieving this.

- CI/CD Pipelines: CI/CD pipelines automate the entire software delivery lifecycle, from code commit to production deployment. A typical CI/CD pipeline includes the following stages:

  - Code Commit: Developers commit code changes to a version control system (e.g., Git).

  - Build: The code is automatically built, and dependencies are resolved.

  - Test: Automated tests (unit tests, integration tests, etc.) are executed.

  - Package: The application is packaged into a container image (e.g., Docker image).

  - Deploy: The container image is deployed to the target environment (e.g., Kubernetes cluster).

  - Monitor: The deployed application is monitored for performance and errors.

- Infrastructure as Code (IaC): IaC allows infrastructure to be defined and managed as code. This enables automated provisioning and configuration of infrastructure resources, further streamlining the deployment process.

- Automated Testing: Comprehensive automated testing is crucial for ensuring the quality and stability of software updates. Automated tests can be executed as part of the CI/CD pipeline, catching bugs early in the development cycle.
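The stage ordering described above matters mostly because of its fail-fast behavior: a failed test run should stop the pipeline before anything is packaged or deployed. Here is a minimal sketch of that control flow. The stage bodies are hypothetical placeholders, and a real pipeline would be defined in the CI system's own configuration rather than in Python.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure; return a log of outcomes."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages (e.g. deploy) never run on a broken build
    return log


# Hypothetical stage bodies; a real pipeline would invoke build and test tools here.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),  # simulate a failing test suite
    ("package", lambda: True),
    ("deploy", lambda: True),
]
result = run_pipeline(stages)
print(result)
```

In this run the log ends at the failed test stage, so the package and deploy stages are never attempted.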

Increased Scalability and Resource Utilization

Cloud-native development fundamentally transforms how applications are built and deployed, offering significant advantages in terms of scalability and resource efficiency. This approach allows applications to dynamically adapt to changing demands, ensuring optimal performance and cost-effectiveness. By leveraging the inherent capabilities of cloud platforms, cloud-native applications can scale seamlessly, providing a superior user experience while minimizing infrastructure overhead.

Enabling Applications to Scale on Demand

Cloud-native architectures are designed to be inherently scalable. This means that applications can automatically adjust their resource consumption based on real-time needs. This contrasts sharply with traditional monolithic applications, which often require manual intervention and significant downtime to scale. The ability to scale on demand is crucial for handling fluctuating workloads and unexpected traffic spikes.

Automatic scaling in a cloud-native environment is primarily achieved through the following mechanisms:

- Horizontal Scaling: This involves adding or removing instances of an application component to handle changes in demand. For example, if a web application experiences a surge in traffic, the system can automatically launch additional web server instances to distribute the load. This is often implemented using load balancers that distribute incoming requests across multiple instances.

- Vertical Scaling: This involves increasing the resources (e.g., CPU, memory) allocated to an individual application instance. While less common in cloud-native environments due to the preference for horizontal scaling, it can be used to optimize the performance of individual components.

- Auto-scaling Groups: Cloud platforms provide auto-scaling groups that monitor application performance metrics (e.g., CPU utilization, request latency) and automatically adjust the number of instances based on pre-defined rules. These rules can be configured to trigger scaling actions when specific thresholds are met.

- Container Orchestration: Tools like Kubernetes automate the deployment, scaling, and management of containerized applications. Kubernetes can dynamically allocate resources to containers based on their resource requests and limits, ensuring efficient resource utilization.
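The threshold-based scaling rules described above usually reduce to a simple proportional calculation. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, where the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. The function name, the default bounds, and the omission of the autoscaler's tolerance and stabilization behavior are simplifications made for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to metric / target, within bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike cannot exceed the budgeted maximum,
    # and an idle period cannot scale below the configured floor.
    return max(min_replicas, min(max_replicas, raw))


# 4 instances at 90% average CPU against a 60% target
print(desired_replicas(4, 90, 60))
```

With 4 instances at 90% CPU against a 60% target, the calculation asks for 6 instances; at 30% it would shrink the group to 2.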

Optimizing Resource Usage

Cloud-native development promotes efficient resource utilization through several key strategies. Containerization, microservices architecture, and serverless computing all contribute to this optimization.

- Containerization: Container technologies like Docker package applications and their dependencies into isolated units. Because containers share the host operating system kernel instead of each running a full guest operating system, they carry less overhead than virtual machines, so more workloads fit on the same hardware. Containers also enable consistent deployments across different environments.

- Microservices Architecture: Breaking down applications into smaller, independent services allows for more granular resource allocation. Each microservice can be scaled independently based on its specific needs, preventing the need to scale the entire application.

- Serverless Computing: Serverless platforms allow developers to execute code without managing servers. This eliminates the need to provision and maintain infrastructure, leading to significant cost savings and improved resource utilization. Developers pay only for the compute time their code consumes.

Differences in Resource Allocation: Traditional vs. Cloud-Native

The following table illustrates the key differences in resource allocation between traditional and cloud-native setups. It highlights how cloud-native approaches provide superior flexibility and efficiency.

| Feature | Traditional Setup | Cloud-Native Setup | Benefits |
|---|---|---|---|
| Resource Provisioning | Manual, often involves over-provisioning to handle peak loads. | Automated, dynamic scaling based on real-time demand. | Reduced infrastructure costs, improved resource utilization. |
| Scaling Capabilities | Limited, requires significant downtime and manual intervention. | Highly scalable, horizontal scaling is easily implemented. | Improved application performance, ability to handle fluctuating workloads. |
| Resource Utilization | Often underutilized, leading to wasted resources and higher costs. | Optimized, resources are allocated dynamically based on actual needs. | Cost savings, improved efficiency, and reduced environmental impact. |
| Deployment Frequency | Infrequent, deployments are complex and time-consuming. | Frequent, automated deployments enabled by containerization and CI/CD pipelines. | Faster time-to-market, ability to quickly respond to market changes. |

Improved Resilience and Fault Tolerance

Cloud-native development inherently focuses on building applications that can withstand failures and maintain availability. This is achieved through a combination of architectural principles, design patterns, and operational practices that prioritize resilience. Cloud-native applications are designed to anticipate and gracefully handle disruptions, ensuring a consistent user experience even in the face of adversity.

Application Resilience in Cloud-Native Architectures

Cloud-native architectures enhance application resilience by embracing several key principles. These include:

- Microservices: Decomposing applications into independent, small, and manageable services. This isolation limits the impact of failures; if one service fails, it doesn’t necessarily bring down the entire application.

- Automation: Automating deployment, scaling, and recovery processes. Automation minimizes human error and speeds up the response to failures.

- Immutable Infrastructure: Defining infrastructure as code and replacing servers or containers with freshly built ones instead of modifying them in place. This approach reduces the risk of configuration drift and ensures consistency.

- Observability: Implementing comprehensive monitoring, logging, and tracing to gain insights into application behavior and identify potential issues proactively.

- Decentralization: Distributing application components across multiple availability zones or regions to minimize the impact of regional outages.

Fault-Tolerant Design Patterns

Several design patterns are commonly used in cloud-native applications to enhance fault tolerance. These patterns help to isolate failures and ensure that the application can continue to function even when parts of it are unavailable.

- Circuit Breaker: This pattern prevents cascading failures by monitoring the health of downstream services. If a service becomes unavailable or slow, the circuit breaker “opens,” preventing further requests from being sent to the failing service and returning a fallback response instead. This protects the calling service from being overwhelmed.

- Retry Mechanism: Automatically retries failed requests to a service. This can help to overcome transient errors, such as temporary network issues or service overload. Implementations typically include exponential backoff to avoid overwhelming the service.

- Bulkhead: Isolates critical resources to prevent a failure in one part of the application from affecting others. For example, limiting the number of concurrent requests to a particular service.

- Leader Election: Used in distributed systems to determine a single leader node responsible for coordinating tasks. If the leader fails, a new leader is automatically elected, ensuring continued operation.

- Health Checks: Regularly checks the health of application components and services. These checks can be used to automatically detect and recover from failures.
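To make the circuit breaker pattern from the list above concrete, here is a minimal single-threaded sketch. The class name, the thresholds, and the injectable `clock` parameter (used to make the behavior testable) are assumptions for illustration; production code would more likely rely on an established resilience library or a service mesh.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Fail fast after repeated errors, then allow a trial call after a cool-down."""

    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, func: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                return fallback()  # open: do not touch the failing service
            # Cool-down elapsed: half-open, let one trial call through.
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            return fallback()
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


breaker = CircuitBreaker(failure_threshold=2, reset_timeout=5.0)
print(breaker.call(lambda: "live response", lambda: "cached response"))
```

The fallback is returned both when the downstream call fails and when the circuit is open, so callers see one consistent degraded path.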

Strategies for Handling Failures and Ensuring Service Availability

Cloud-native applications employ various strategies to handle failures and maintain service availability. These strategies are crucial for ensuring a positive user experience and minimizing downtime.

- Automated Rollbacks: If a new deployment introduces errors, automated rollbacks can quickly revert to the previous working version, minimizing the impact on users.

- Blue/Green Deployments: Running the new version of the application (green) alongside the current version (blue). Once the new version is validated, traffic is switched to it; the old version (blue) is decommissioned only after the switch proves stable, which keeps rollback easy. This minimizes downtime during updates.

- Canary Releases: Releasing a new version of the application to a small subset of users (canary) to test it in production before a full rollout. This allows for early detection of issues and reduces the risk of widespread impact.

- Chaos Engineering: Proactively introducing failures into the system to identify weaknesses and improve resilience. This can involve simulating network outages, service failures, and other disruptive events.

- Load Balancing: Distributing traffic across multiple instances of a service to prevent overload and ensure high availability.
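Load balancing and health checks work together: traffic should rotate across instances but skip any that are currently failing their checks. The following sketch shows one simple way to combine the two with round-robin selection. The class and method names are hypothetical, and a real deployment would normally delegate this to a managed load balancer or the orchestrator.

```python
from typing import Iterable, List, Set


class RoundRobinBalancer:
    """Rotate through instances in order, skipping any marked unhealthy."""

    def __init__(self, instances: Iterable[str]) -> None:
        self._instances: List[str] = list(instances)
        self._healthy: Set[str] = set(self._instances)
        self._next = 0

    def mark_unhealthy(self, instance: str) -> None:
        self._healthy.discard(instance)

    def mark_healthy(self, instance: str) -> None:
        if instance in self._instances:
            self._healthy.add(instance)

    def pick(self) -> str:
        # Try each instance at most once per call before giving up.
        for _ in range(len(self._instances)):
            candidate = self._instances[self._next % len(self._instances)]
            self._next += 1
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy instances available")


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
balancer.mark_unhealthy("app-2")  # e.g. after a failed health check
print([balancer.pick() for _ in range(4)])
```

Marking an instance healthy again returns it to the rotation without restarting the balancer.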

Common Failure Scenarios and Mitigation Strategies

The following table outlines common failure scenarios encountered in cloud-native environments and their corresponding mitigation strategies.

| Failure Scenario | Mitigation Strategy |
|---|---|
| Service Outage | Circuit Breaker, Retry Mechanism, Load Balancing, Automated Failover |
| Network Connectivity Issues | Retry Mechanism, Circuit Breaker, Load Balancing, Service Mesh |
| Database Failure | Database Replication, Automated Failover, Connection Pooling |
| Application Deployment Errors | Automated Rollbacks, Blue/Green Deployments, Canary Releases |
| Resource Exhaustion (CPU, Memory) | Horizontal Scaling, Auto-Scaling, Resource Limits |
| Configuration Errors | Immutable Infrastructure, Infrastructure as Code, Configuration Management Tools |
| Security Breaches | Regular Security Audits, Intrusion Detection Systems, Role-Based Access Control (RBAC) |
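The retry mechanism that appears in several rows of the table is typically paired with exponential backoff so that retries do not pile onto a struggling service. The sketch below is one possible shape for it, assuming a doubling delay with a cap and random jitter. The function names, default values, and the injectable `sleep` and `rng` parameters are illustrative choices, not a standard API.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(retries: int, base: float = 0.5, cap: float = 30.0) -> List[float]:
    """Upper bound of the wait before each retry: base * 2**attempt, capped."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


def call_with_retry(
    func: Callable[[], T],
    retries: int = 3,
    base: float = 0.5,
    cap: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Callable[[], float] = random.random,
) -> T:
    """Call func, retrying on failure with jittered exponential backoff."""
    delays = backoff_delays(retries, base, cap)
    for attempt in range(retries + 1):
        try:
            return func()
        except Exception:  # real code should catch only errors known to be transient
            if attempt == retries:
                raise  # out of retries: surface the last error
            # Jitter: wait a random fraction of the backoff bound so that
            # many clients do not retry in lockstep.
            sleep(delays[attempt] * rng())
    raise AssertionError("unreachable")


print(backoff_delays(4))
```

Retries only help with transient faults; for a service that stays down, a circuit breaker is what stops the repeated calls.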

Enhanced Developer Productivity

Cloud-native development significantly boosts developer productivity by streamlining workflows, providing powerful tools, and automating repetitive tasks. This translates to faster development cycles, reduced error rates, and increased focus on innovation rather than infrastructure management. Ultimately, it empowers developers to deliver higher-quality software more efficiently.

Improved Developer Efficiency

Cloud-native practices contribute to developer efficiency in several key ways. By abstracting away infrastructure complexities, developers can concentrate on writing code and building features. This focus leads to faster development times and improved code quality.

- Reduced Infrastructure Management: Cloud-native platforms automate infrastructure provisioning, scaling, and management. This reduces the time developers spend on these tasks, allowing them to focus on coding.

- Faster Feedback Loops: Continuous integration and continuous delivery (CI/CD) pipelines, integral to cloud-native development, provide rapid feedback on code changes. This enables developers to identify and fix issues quickly.

- Increased Automation: Cloud-native tools automate many development processes, such as testing, deployment, and monitoring. Automation frees up developers from manual tasks, enabling them to be more productive.

- Enhanced Collaboration: Cloud-native platforms often provide tools that improve collaboration among developers, such as shared environments, version control systems, and integrated communication channels.

Tools and Technologies for Productivity

A variety of tools and technologies are specifically designed to enhance developer productivity in a cloud-native environment. These tools streamline development, testing, and deployment processes, making it easier for developers to build and deploy applications.

- Containerization (Docker, Kubernetes): Containerization allows developers to package applications and their dependencies into portable units, simplifying deployment and ensuring consistency across different environments. Kubernetes automates the deployment, scaling, and management of containerized applications.

- CI/CD Pipelines (Jenkins, GitLab CI, CircleCI): CI/CD pipelines automate the build, test, and deployment processes. They enable developers to integrate code changes frequently and deploy them to production environments quickly.

- Serverless Computing (AWS Lambda, Azure Functions, Google Cloud Functions): Serverless computing allows developers to write and deploy code without managing servers. This reduces operational overhead and allows developers to focus on application logic.

- Infrastructure as Code (IaC) (Terraform, AWS CloudFormation): IaC allows developers to define and manage infrastructure using code. This enables developers to automate infrastructure provisioning and ensure consistency across environments.

- Observability Tools (Prometheus, Grafana, Datadog): Observability tools provide insights into application performance and behavior. They enable developers to monitor applications, identify performance bottlenecks, and troubleshoot issues.

Simplified Development Workflows

Cloud-native approaches significantly reduce the complexity of development workflows. This simplification is achieved through automation, standardized processes, and the use of pre-built components and services. The result is a more efficient and streamlined development process.

- Automated Testing: Automated testing is a cornerstone of cloud-native development. Testing is integrated into the CI/CD pipeline, ensuring that code changes are automatically tested before deployment. This approach reduces the risk of errors and speeds up the development cycle.

- Simplified Deployment: Cloud-native platforms provide tools and services that simplify the deployment process. Containerization and orchestration tools automate the deployment of applications, making it easier to deploy and manage applications across different environments.

- Microservices Architecture: Microservices architecture breaks down applications into small, independent services. This approach makes it easier to develop, deploy, and scale individual services, improving overall development efficiency.

- Reusable Components: Cloud-native development encourages the use of reusable components and services. This approach reduces the amount of code that developers need to write, improving development speed and reducing the risk of errors.

Simplified Development Workflow Diagram

This diagram illustrates a simplified development workflow enabled by cloud-native approaches. It highlights the key stages of the development process, from code commit to deployment, and the tools and technologies that support each stage.

Diagram Description:

The diagram is a flowchart depicting a simplified cloud-native development workflow. It starts with the “Developer Commits Code” box, which leads to a CI/CD pipeline. This pipeline consists of several stages:

- Build: The code is compiled and dependencies are resolved.

- Test: Automated tests are run (unit, integration, and potentially end-to-end tests).

- Package: The application is packaged, often into a container image (e.g., Docker).

- Deploy: The container image is deployed to a cloud environment (e.g., Kubernetes).

After the deployment stage, there is a “Monitoring & Feedback” loop. This includes monitoring the application’s performance and collecting feedback from users, which feeds back into the “Developer Commits Code” stage, creating a continuous cycle of improvement.

The diagram also shows key technologies and tools used at each stage:

- Code Repository: Git (or similar)

- CI/CD Pipeline: Jenkins, GitLab CI, or CircleCI

- Containerization: Docker

- Orchestration: Kubernetes

- Monitoring: Prometheus, Grafana, or Datadog

This streamlined workflow, facilitated by automation and cloud-native tools, allows for faster release cycles, reduced errors, and improved developer productivity.

Cost Optimization

Cloud-native development offers significant opportunities for cost optimization, a crucial benefit for businesses aiming to maximize their return on investment. By leveraging the inherent characteristics of cloud environments, organizations can reduce operational expenses and allocate resources more efficiently. This section will delve into the specifics of how cloud-native practices contribute to cost savings, providing concrete examples and illustrating the advantages over traditional infrastructure.

Cost Savings Through Cloud-Native Development

Cloud-native development enables cost savings through several key mechanisms. These include optimized resource utilization, reduced infrastructure management overhead, and the adoption of pay-as-you-go models. The dynamic nature of cloud environments allows for scaling resources up or down based on actual demand, eliminating the need to provision for peak loads and the associated costs of idle resources. This leads to a more efficient use of capital and operational expenditures.

Furthermore, automation, a core tenet of cloud-native practices, minimizes the need for manual intervention, thereby reducing labor costs and the potential for human error.

Cost Optimization Strategies in Cloud-Native Environments

Cloud-native environments provide a range of strategies for cost optimization. Implementing these strategies requires a proactive approach to resource management and a deep understanding of cloud services.

- Right-Sizing Resources: Accurately matching the size of compute instances, storage, and other resources to the actual workload requirements. This prevents over-provisioning and minimizes unnecessary costs. For example, a company might use monitoring tools to identify instances that are consistently underutilized and then scale them down to a more appropriate size.

- Automated Scaling: Configuring automated scaling policies to adjust resources dynamically based on real-time demand. This ensures that resources are available when needed without overspending during periods of low activity. A news website, for instance, could automatically scale up its web servers during peak traffic hours and scale them down during off-peak hours.

- Serverless Computing: Utilizing serverless functions for specific tasks, such as image processing or data transformation. This eliminates the need to manage underlying infrastructure and allows you to pay only for the compute time consumed by the function. For intermittent or bursty workloads, this approach can be significantly cheaper than running a dedicated server.

- Cost Monitoring and Analysis: Implementing robust cost monitoring tools to track resource usage and identify areas where costs can be reduced. This allows you to make data-driven decisions about resource allocation and optimization. For instance, using cloud provider dashboards to monitor the cost of different services and then optimizing their usage.

- Choosing the Right Pricing Models: Selecting the most appropriate pricing model for your needs, such as reserved instances, spot instances, or on-demand pricing. Reserved instances offer significant discounts for long-term commitments, while spot instances provide substantial savings for workloads that can tolerate interruptions. A company with predictable workloads might opt for reserved instances, while a batch processing job might use spot instances to reduce costs.
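The savings from automated scaling can be estimated with simple arithmetic: compare paying for peak capacity around the clock with paying only for the instances each hour actually needs. The sketch below does that for a made-up demand curve. The demand figures, the per-instance capacity, and the price of 10 cents per instance-hour are all hypothetical values, not real provider pricing.

```python
from typing import List


def instances_needed(demand: int, capacity_per_instance: int, min_instances: int = 1) -> int:
    """Smallest instance count that covers the demand (integer ceiling division)."""
    needed = (demand + capacity_per_instance - 1) // capacity_per_instance
    return max(min_instances, needed)


def fixed_cost_cents(hourly_demand: List[int], capacity: int, price_cents: int) -> int:
    """Cost of provisioning for the peak hour and running that fleet all the time."""
    peak = instances_needed(max(hourly_demand), capacity)
    return peak * price_cents * len(hourly_demand)


def autoscaled_cost_cents(hourly_demand: List[int], capacity: int, price_cents: int) -> int:
    """Cost when the fleet is resized every hour to match that hour's demand."""
    return sum(instances_needed(d, capacity) * price_cents for d in hourly_demand)


# Hypothetical six-hour window of requests per second, 100 req/s per instance.
demand = [100, 100, 400, 900, 400, 100]
fixed = fixed_cost_cents(demand, capacity=100, price_cents=10)
scaled = autoscaled_cost_cents(demand, capacity=100, price_cents=10)
print(fixed, scaled)  # 540 200
```

The gap between the two figures grows with how spiky the demand is; a flat demand curve would show no difference at all.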

Pay-as-You-Go Models and Cost Efficiency

Pay-as-you-go models are a fundamental aspect of cloud-native cost efficiency. They allow organizations to pay only for the resources they consume, providing a significant advantage over traditional infrastructure where resources are often purchased upfront and remain idle during periods of low utilization. This model enables a shift from a capital expenditure (CapEx) model to an operational expenditure (OpEx) model, which can improve cash flow and reduce the financial burden of owning and maintaining infrastructure.

The flexibility of pay-as-you-go models also allows organizations to experiment with new technologies and services without significant upfront investments.

Cost Comparison: Traditional vs. Cloud-Native Infrastructure

This table provides a simplified comparison, and actual costs will vary based on specific configurations and usage.

| Aspect | Traditional Infrastructure | Cloud-Native Infrastructure |
|---|---|---|
| Hardware Costs | Significant upfront investment in servers, storage, and networking equipment. | Pay-as-you-go model; costs scale with usage. No upfront hardware costs. |
| Operating System and Software Licenses | Upfront and ongoing licensing fees for operating systems, databases, and other software. | Often included in cloud service pricing; pay-as-you-go for specific software instances. |
| Data Center Costs | Costs associated with data center space, power, cooling, and physical security. | Included in cloud provider’s pricing model; no direct responsibility for data center management. |
| IT Staffing Costs | Salaries and benefits for IT staff to manage hardware, software, and infrastructure. | Reduced need for dedicated IT staff; cloud provider handles infrastructure management. Focus shifts to application development and optimization. |
| Maintenance and Upgrades | Costs associated with hardware maintenance, software updates, and infrastructure upgrades. | Handled by the cloud provider; automatic updates and upgrades. |
| Scalability | Limited scalability; requires manual provisioning and hardware purchases. | Highly scalable; resources can be scaled up or down automatically based on demand. |
| Resource Utilization | Often underutilized resources; leads to wasted costs. | Optimized resource utilization; pay only for what you use. |
| Overall Cost | Generally higher upfront and ongoing costs. | Potentially lower overall costs due to optimized resource utilization and pay-as-you-go models. |

Greater Flexibility and Innovation

Cloud-native development fundamentally reshapes how applications are built and deployed, creating an environment that prioritizes agility and adaptability. This approach unlocks significant opportunities for innovation, allowing organizations to respond rapidly to market changes, experiment with new technologies, and ultimately deliver superior value to their customers. The inherent flexibility of cloud-native architectures is a key driver of this innovative potential.

Fostering Innovation through Cloud-Native Development

Cloud-native development inherently promotes a culture of innovation by empowering developers and enabling rapid experimentation. The modular nature of cloud-native applications, built as microservices, allows for independent development and deployment of features. This autonomy accelerates the development lifecycle, enabling teams to test and iterate on new ideas quickly. This iterative process reduces risk and accelerates the discovery of valuable solutions.

Architectural Flexibility in Cloud-Native Environments

Cloud-native architectures offer unparalleled flexibility, largely due to their decoupling of services and reliance on containerization and orchestration. This flexibility manifests in several key areas:

- Microservices Architecture: Breaking down applications into small, independent services allows for independent scaling and updates. This means changes to one part of the application don’t require redeploying the entire system, reducing downtime and accelerating the release cycle.

- Containerization: Technologies like Docker package applications and their dependencies into containers, ensuring consistent behavior across different environments. This eliminates the “it works on my machine” problem and simplifies deployment.

- Orchestration: Platforms like Kubernetes automate the deployment, scaling, and management of containerized applications. This frees up developers to focus on writing code rather than managing infrastructure.

- API-First Design: Cloud-native applications often rely on APIs for communication between services. This promotes interoperability and allows for easy integration with third-party services and data sources.

Supporting Experimentation with New Technologies

Cloud-native development facilitates the seamless integration of new technologies. The ability to quickly deploy and test new services, coupled with the modular nature of microservices, allows organizations to experiment with cutting-edge technologies without disrupting existing systems. This ability to experiment is crucial for staying ahead of the curve in a rapidly evolving technological landscape. Consider the adoption of serverless functions or the integration of machine learning models. These technologies can be incorporated as individual services, tested, and deployed with minimal impact on the core application.

Visual Representation: Integrating New Technologies

Imagine a visual representation depicting a cloud-native application architecture. The core application is composed of several microservices, each responsible for a specific function (e.g., user authentication, product catalog, order processing). These microservices are deployed in containers and managed by an orchestration platform.

To integrate a new technology, such as a recommendation engine powered by machine learning, a new microservice is created. This service, packaged in its own container, can be developed and deployed independently of the existing services. The new service interacts with other services through well-defined APIs. The visual representation would show a clear distinction between the existing core services and the newly integrated recommendation engine, highlighting its independent nature and seamless integration through API connections. This visual emphasizes the ease with which new technologies can be incorporated without significant disruption to the core application’s functionality.

This approach, based on the principles of microservices and API-driven communication, allows for a swift and efficient integration process. This contrasts sharply with the monolithic architectures of the past, where such changes were complex and time-consuming.
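The recommendation engine scenario can be sketched in code as two implementations behind one agreed interface, so the calling service does not change when the new one is introduced. Everything below is hypothetical: the `Recommender` interface, the two classes, and the in-memory data stand in for what would be separate services communicating over HTTP or gRPC.

```python
from typing import Dict, List, Protocol


class Recommender(Protocol):
    """The API contract that callers depend on."""

    def recommend(self, user_id: str, limit: int) -> List[str]: ...


class PopularityRecommender:
    """Stand-in for the existing behavior: most popular products first."""

    def __init__(self, popularity: Dict[str, int]) -> None:
        self._popularity = popularity

    def recommend(self, user_id: str, limit: int) -> List[str]:
        ranked = sorted(self._popularity, key=lambda p: (-self._popularity[p], p))
        return ranked[:limit]


class MLRecommender:
    """Stand-in for the new ML-backed service, with a fallback for unknown users."""

    def __init__(self, predictions: Dict[str, List[str]], fallback: Recommender) -> None:
        self._predictions = predictions
        self._fallback = fallback

    def recommend(self, user_id: str, limit: int) -> List[str]:
        items = self._predictions.get(user_id)
        if not items:
            return self._fallback.recommend(user_id, limit)
        return items[:limit]


def render_home_page(user_id: str, recommender: Recommender) -> str:
    """An existing caller; it only knows the Recommender contract."""
    return ", ".join(recommender.recommend(user_id, 2))


popular = PopularityRecommender({"mug": 5, "lamp": 9, "desk": 7})
ml = MLRecommender({"alice": ["chair", "rug", "vase"]}, fallback=popular)
print(render_home_page("alice", ml))
print(render_home_page("bob", ml))
```

Swapping `popular` for `ml` in the call is the only change the caller sees, which is the property that API-driven integration is meant to provide.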

Improved Observability and Monitoring

Cloud-native development emphasizes the importance of comprehensive observability and monitoring. This is critical for maintaining application health, identifying performance bottlenecks, and ensuring a smooth user experience. Observability provides deep insights into the internal states of the application, enabling developers and operations teams to understand and troubleshoot issues effectively. It allows proactive identification and resolution of problems before they impact users.

Benefits of Enhanced Observability

Cloud-native applications, due to their distributed and dynamic nature, benefit immensely from enhanced observability. The ability to monitor and understand application behavior across a complex infrastructure supports rapid troubleshooting, informed decision-making, and continuous improvement.

Monitoring Tools and Techniques

Cloud-native setups leverage various monitoring tools and techniques to achieve comprehensive observability. These tools collect, analyze, and visualize data from different sources within the application and infrastructure.

- Metrics: Metrics represent numerical data that tracks application performance over time. These include CPU usage, memory consumption, request latency, and error rates. Tools like Prometheus and Datadog are commonly used for collecting and storing metrics. Prometheus, for example, uses a pull-based model to scrape metrics from configured endpoints, while Datadog provides a comprehensive platform for metric collection, visualization, and alerting.

- Logs: Logs capture events and messages generated by the application and infrastructure components. They provide valuable context for troubleshooting issues and understanding application behavior. Tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Splunk are popular for log aggregation, analysis, and visualization. The ELK Stack allows for the centralized storage and analysis of logs, enabling developers to search, filter, and visualize log data effectively.

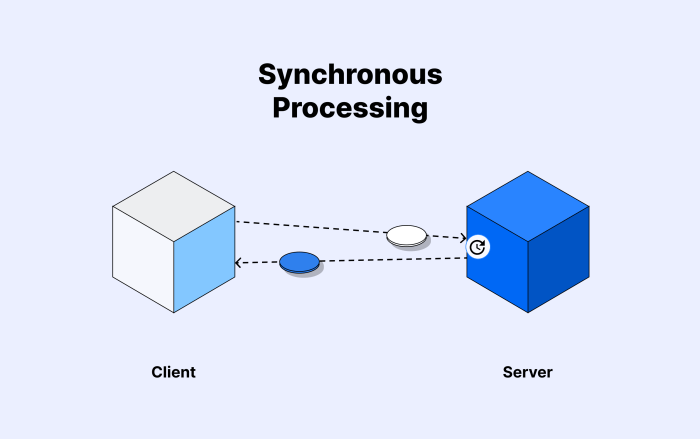

- Tracing: Distributed tracing helps track requests as they flow through multiple services in a cloud-native application. This provides insights into the performance of individual services and the dependencies between them. Tools like Jaeger and Zipkin are widely used for distributed tracing. Jaeger, for example, visualizes traces, enabling developers to identify performance bottlenecks and understand the flow of requests across microservices.
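To make the three signal types concrete, here is a minimal standard-library sketch of a request wrapper that records a metric, emits a structured log line, and carries a trace ID. The in-memory containers are assumptions standing in for a real metrics backend, log shipper, and tracer; production code would normally use the client libraries of the tools named above.

```python
import json
import time
import uuid
from collections import defaultdict

# In-process stand-ins for a metrics backend and a log shipper (hypothetical).
REQUEST_COUNT = defaultdict(int)      # metric: requests per (route, status)
LATENCY_SECONDS = defaultdict(list)   # metric: observed latencies per route
LOG_LINES = []                        # structured log records as JSON strings


def handle_request(route, handler, trace_id=None):
    """Run a handler while recording a metric, a log line, and a trace ID."""
    trace_id = trace_id or uuid.uuid4().hex  # reuse the caller's ID if present
    start = time.perf_counter()
    status = "ok"
    try:
        return handler()
    except Exception:
        status = "error"
        raise
    finally:
        elapsed = time.perf_counter() - start
        REQUEST_COUNT[(route, status)] += 1
        LATENCY_SECONDS[route].append(elapsed)
        LOG_LINES.append(json.dumps({
            "trace_id": trace_id,
            "route": route,
            "status": status,
            "duration_ms": round(elapsed * 1000, 3),
        }))
```

Passing the same `trace_id` to downstream calls is what lets a tracing tool stitch the individual log lines and spans into a single request path.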

Data Gathering and Analysis for Performance Improvement

Effective data gathering and analysis are crucial for improving application performance. The process involves collecting data from various sources, analyzing it to identify patterns and anomalies, and using the insights to optimize the application.

- Data Collection: Data collection involves gathering metrics, logs, and traces from the application and infrastructure. This can be achieved using various tools and techniques, such as agents, collectors, and APIs.

- Data Analysis: Data analysis involves analyzing the collected data to identify performance bottlenecks, errors, and other issues. This can be done using various techniques, such as data aggregation, filtering, and visualization. For example, analyzing request latency data can reveal slow-performing services or database queries.

- Performance Optimization: Based on the insights gained from data analysis, performance optimization involves making changes to the application and infrastructure to improve performance. This may include code optimization, resource allocation adjustments, and infrastructure scaling.
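The analysis step can be sketched as a small function that computes a 95th-percentile latency per service and flags the slow ones. The service names, sample values, and the 200 ms threshold in the usage note are illustrative assumptions, not figures from any real system.

```python
from statistics import quantiles


def p95(samples_ms):
    """95th percentile of the samples (needs at least two data points)."""
    # quantiles(..., n=100) returns 99 cut points; index 94 is the 95th.
    return quantiles(samples_ms, n=100, method="inclusive")[94]


def slow_services(latencies_by_service, threshold_ms):
    """Return (service, p95) pairs above the threshold, slowest first."""
    scored = {name: p95(samples) for name, samples in latencies_by_service.items()}
    slow = [(name, value) for name, value in scored.items() if value > threshold_ms]
    return sorted(slow, key=lambda item: item[1], reverse=True)
```

For example, calling `slow_services({"catalog": [10] * 100, "checkout": [10] * 90 + [900] * 10}, threshold_ms=200)` flags only `checkout`, even though its median latency looks healthy. This is why percentile views tend to be more useful than averages when looking for bottlenecks.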

Setting Up a Monitoring Dashboard

A well-designed monitoring dashboard provides a centralized view of the application’s health and performance. It allows teams to quickly identify issues and track progress over time. The dashboard should be customized to display specific metrics and alerts relevant to the application.

- Metric Selection: Select key metrics that are critical to the application’s performance and user experience. Examples include request latency, error rates, CPU usage, and memory consumption.

- Alert Configuration: Configure alerts based on predefined thresholds for the selected metrics. Alerts should notify the appropriate teams when a metric exceeds a certain threshold, indicating a potential issue. For example, an alert can be triggered if the error rate exceeds 1% over a 5-minute period.

- Dashboard Design: Design a dashboard that is easy to understand and navigate. Use clear visualizations, such as graphs and charts, to display the selected metrics. Group related metrics together to provide a comprehensive view of the application’s health.

- Dashboard Tools: Use tools like Grafana or Datadog to build the monitoring dashboard, with a metrics store such as Prometheus as a data source. Grafana, for instance, is a popular choice for creating customizable dashboards, allowing users to visualize data from various sources and configure alerts.
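The alert rule mentioned above (error rate above 1% over a 5-minute period) can be expressed as a sliding-window check. This is only a sketch of the logic; the class name and interface are hypothetical, and real alerting is normally configured in the monitoring tool rather than in application code.

```python
from collections import deque


class ErrorRateAlert:
    """Fires when errors / requests over a sliding window exceeds a threshold."""

    def __init__(self, threshold=0.01, window_seconds=300):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, is_error), assumed in time order

    def record(self, timestamp, is_error):
        self._events.append((timestamp, is_error))

    def firing(self, now):
        # Drop events that have fallen out of the window.
        while self._events and self._events[0][0] <= now - self.window_seconds:
            self._events.popleft()
        total = len(self._events)
        if total == 0:
            return False  # no traffic, nothing to alert on
        errors = sum(1 for _, is_error in self._events if is_error)
        return errors / total > self.threshold
```

Evaluating a ratio over a window, rather than reacting to individual errors, keeps a single failed request from paging anyone while still catching sustained degradation.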

Faster Time to Market

Cloud-native development significantly reduces the time it takes to bring new products and features to market. This acceleration stems from the inherent characteristics of cloud-native architectures and development practices, allowing businesses to respond rapidly to market demands and gain a competitive edge.

Factors Contributing to Faster Time to Market

Several key factors within a cloud-native environment contribute to the speed at which products and services are released. These elements work in concert to streamline the development lifecycle.

- Automation: Automation is a cornerstone of cloud-native development. Automated processes, from infrastructure provisioning to testing and deployment, eliminate manual steps and reduce the potential for human error. This results in faster release cycles and quicker time to market. For instance, automated CI/CD pipelines can deploy code changes multiple times a day, as opposed to manual deployments that might occur only once a month.

- Microservices Architecture: The microservices approach allows for the independent development, deployment, and scaling of individual application components. This modularity means that changes can be made to one service without affecting others, reducing the risk and impact of deployments and enabling faster iterations. This is a significant advantage compared to monolithic applications, where even minor changes require a full redeployment.

- Containerization (e.g., Docker) and Orchestration (e.g., Kubernetes): Containerization packages applications and their dependencies into portable units, ensuring consistent behavior across different environments. Orchestration tools automate the deployment, scaling, and management of these containers. This combination simplifies and accelerates the deployment process. A containerized application can be deployed to any cloud or on-premise infrastructure that supports the container runtime, providing flexibility and speed.

- DevOps Practices: Cloud-native development embraces DevOps principles, fostering collaboration between development and operations teams. This collaboration promotes continuous integration and continuous delivery (CI/CD), leading to shorter feedback loops, faster bug fixes, and more frequent releases. The emphasis on shared responsibility and automated processes reduces friction and speeds up the entire software delivery pipeline.
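The core behavior of an automated pipeline, running stages in order and stopping at the first failure, can be sketched in a few lines. The stage names and callables are placeholders; a real CI/CD system would run these steps on build agents and add caching, artifacts, and approvals.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[tuple[str, str]]:
    """Run stages in order; skip everything after the first failure."""
    results = []
    failed = False
    for name, step in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = step()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results
```

Because a failing test stage prevents the deploy stage from running at all, the pipeline itself enforces the quality gate that a manual process would rely on people to remember.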

Impact of Cloud-Native on Business Agility

Cloud-native development dramatically enhances business agility, enabling organizations to adapt quickly to changing market conditions and customer needs. This responsiveness is a crucial advantage in today’s dynamic business environment.

- Rapid Experimentation: Cloud-native environments allow for quick experimentation with new features and products. The ability to rapidly deploy, test, and iterate on new ideas enables businesses to validate concepts and gather user feedback quickly. This reduces the risk of investing heavily in products that don’t resonate with the market.

- Faster Innovation Cycles: The streamlined development processes and modular architecture of cloud-native systems accelerate innovation. Teams can experiment with new technologies and approaches more readily, leading to faster development of innovative solutions and a quicker path to market.

- Increased Responsiveness to Market Demands: Cloud-native development enables businesses to respond quickly to market trends and customer feedback. The ability to rapidly deploy new features and updates allows companies to adapt to changing customer needs and stay ahead of the competition.

Timeline Illustrating Reduction in Time-to-Market

The shift to cloud-native development often leads to a significant reduction in the time it takes to bring a product or feature to market. This is best illustrated through a comparative timeline, showing the differences between traditional and cloud-native approaches.

| Traditional Development (Example: Monolithic Application) | Cloud-Native Development (Example: Microservices Application) |
|---|---|
| Phase 1: Planning & Requirements Gathering (4-8 weeks): Detailed requirements documentation, long planning cycles, and extensive stakeholder approvals. | Phase 1: Planning & Requirements Gathering (1-2 weeks): Agile methodologies, shorter planning cycles, and iterative requirements gathering. |
| Phase 2: Development & Testing (12-24 weeks): Long development cycles, extensive manual testing, and complex integration testing. | Phase 2: Development & Testing (4-8 weeks): Smaller, independent services, automated testing, and CI/CD pipelines. |
| Phase 3: Deployment & Release (2-4 weeks): Manual deployment processes, significant downtime, and potential for errors. | Phase 3: Deployment & Release (Hours to Days): Automated deployments, zero-downtime deployments, and continuous delivery. |
| Total Time-to-Market: 18-36 weeks | Total Time-to-Market: 5-11 weeks |

This timeline demonstrates a substantial reduction in time-to-market, which translates to a significant competitive advantage. A company that can release new features and products in weeks, instead of months, can capture market share faster and respond more effectively to customer demands.
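The totals in the timeline follow from summing the per-phase ranges. The short check below reproduces them, under the assumption that the "Hours to Days" deployment phase is approximated as 0 to 1 week; the phase figures themselves are the illustrative ones from the table.

```python
TRADITIONAL = {"planning": (4, 8), "development": (12, 24), "deployment": (2, 4)}
# "Hours to days" is approximated as 0 to 1 week (an assumption for this sketch).
CLOUD_NATIVE = {"planning": (1, 2), "development": (4, 8), "deployment": (0, 1)}


def total_weeks(phases):
    """Sum the low and high estimates across phases."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


if __name__ == "__main__":
    print("traditional:", total_weeks(TRADITIONAL))
    print("cloud-native:", total_weeks(CLOUD_NATIVE))
```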

Enhanced Portability and Vendor Neutrality

Cloud-native development emphasizes building applications that are portable and can run seamlessly across different environments, including various cloud providers. This flexibility is a key benefit, allowing organizations to avoid vendor lock-in, optimize costs, and choose the best platform for their specific needs. This section explores how cloud-native principles facilitate portability and vendor neutrality, providing practical strategies and examples.

Promoting Application Portability

Cloud-native development promotes application portability through several key practices. These practices ensure applications are not tightly coupled with any specific cloud provider’s infrastructure.

- Containerization: Containerization, using technologies like Docker, packages applications and their dependencies into isolated units. These containers can then be deployed consistently across different cloud platforms, which removes most environment-specific compatibility issues. This ‘build once, run anywhere’ approach significantly enhances portability.

- Microservices Architecture: Breaking down applications into small, independent services allows each service to be deployed and managed independently. Each microservice can be written in the most suitable technology and deployed on the cloud platform that best meets its requirements.

- Declarative Configuration: Cloud-native applications often use declarative configuration files (e.g., YAML, JSON) to define infrastructure and application deployments. This approach allows for infrastructure-as-code, enabling the same configuration to be used across different environments.

- Abstraction Layers: Utilizing abstraction layers, such as Kubernetes, provides a consistent interface for managing and orchestrating applications, regardless of the underlying cloud provider. Kubernetes abstracts away the differences between cloud platforms, simplifying deployment and management.
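Declarative configuration is ultimately just data. The sketch below builds a Kubernetes `apps/v1` Deployment as a plain dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The service name and image path are placeholders, and the manifest is deliberately minimal (no resource limits, probes, or namespaces).

```python
import json


def deployment_manifest(name, image, replicas=2):
    """Build a minimal apps/v1 Deployment as plain data."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # The image name is a placeholder, not a real registry path.
    manifest = deployment_manifest("catalog", "registry.example.com/catalog:1.0")
    print(json.dumps(manifest, indent=2))
```

The same output can be applied with `kubectl apply -f` to a managed or self-hosted cluster, since the manifest describes the desired state without reference to a specific provider.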

Deploying Applications Across Different Cloud Providers

Cloud-native applications can be deployed across various cloud providers, leveraging the portability features described above. Here are some examples:

- Multi-Cloud Deployments: An organization might deploy different microservices of an application on different cloud providers (e.g., AWS, Azure, Google Cloud) based on cost, performance, or specific features offered by each provider. For instance, a database might be deployed on a cloud provider offering specialized database services, while the application’s frontend is deployed on a different provider closer to the end-users.

- Hybrid Cloud Deployments: Organizations can deploy applications across a combination of public cloud and on-premises infrastructure. This approach allows for leveraging the scalability and cost-effectiveness of public cloud while maintaining control over sensitive data or meeting regulatory requirements.

- Cloud Migration: Cloud-native applications are easier to migrate between cloud providers. If an organization decides to switch cloud providers, the containerized application can be redeployed on the new platform with minimal changes.

Achieving Vendor Neutrality

Vendor neutrality in a cloud-native environment can be achieved through several strategies.

- Using Open Standards: Adhering to open standards and open APIs (e.g., the Kubernetes API, gRPC, REST) ensures that applications are not tied to proprietary technologies. This allows for interoperability and easier migration between platforms.

- Choosing Cloud-Agnostic Services: Opting for cloud-agnostic services and tools that can be deployed across different cloud providers minimizes vendor lock-in. Examples include container registries, monitoring tools, and CI/CD pipelines.

- Avoiding Vendor-Specific Features: Minimize the use of vendor-specific features or services that are not available across all cloud providers. If a vendor-specific service is required, consider using a cloud-agnostic alternative or implementing an abstraction layer to provide a consistent interface.

- Implementing Infrastructure as Code (IaC): Using IaC tools like Terraform allows for defining and managing infrastructure across multiple cloud providers with a single codebase. This approach reduces the effort required to deploy and manage applications on different platforms.
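One way to implement the abstraction layer mentioned above is to have application code depend on a small interface and keep provider-specific details in adapters. The sketch below uses object storage as the example; `ObjectStore`, `InMemoryStore`, and `archive_invoice` are hypothetical names, and the in-memory adapter stands in for adapters that would wrap a provider's SDK.

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Provider-neutral contract used by application code (hypothetical)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Adapter for tests and local runs; cloud adapters would wrap an SDK."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_invoice(store: ObjectStore, invoice_id: str, pdf: bytes) -> str:
    """Business logic that never imports a provider SDK directly."""
    key = f"invoices/{invoice_id}.pdf"
    store.put(key, pdf)
    return key
```

Switching providers then means writing one new adapter rather than touching every call site. The trade-off is that the interface can only expose features that all target providers share.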

Comparing Portability Features of Different Cloud Platforms

The following table provides a comparison of the portability features offered by several major cloud platforms. Note that feature availability and maturity may vary.

| Feature | AWS | Azure | Google Cloud | Other (e.g., DigitalOcean, IBM Cloud) |
|---|---|---|---|---|
| Containerization Support | Excellent (ECS, EKS, Fargate) | Excellent (AKS, ACI) | Excellent (GKE, Cloud Run) | Varies (e.g., Docker support, Kubernetes) |
| Kubernetes Support | Managed Kubernetes (EKS) | Managed Kubernetes (AKS) | Managed Kubernetes (GKE) | Varies (e.g., self-managed, managed Kubernetes) |
| Cloud-Agnostic Services | Wide range (e.g., databases, messaging) | Wide range (e.g., databases, messaging) | Wide range (e.g., databases, messaging) | Varies (availability of cloud-agnostic services) |
| Infrastructure as Code (IaC) Support | Excellent (CloudFormation, Terraform) | Excellent (Azure Resource Manager, Terraform) | Excellent (Cloud Deployment Manager, Terraform) | Varies (e.g., Terraform support) |

Better Alignment with Business Goals

Cloud-native development offers a powerful framework for aligning technology initiatives with overarching business objectives. By embracing cloud-native principles, organizations can significantly enhance their ability to respond to market dynamics, drive innovation, and achieve strategic advantages. This alignment translates into tangible benefits, from faster time-to-market to improved operational efficiency and a more agile business model.

Supporting Business Objectives

Cloud-native architectures are inherently designed to support and accelerate the achievement of core business goals. This support manifests in several key areas, leading to improved performance and a stronger competitive position. Cloud-native approaches prioritize business needs by enabling faster feature releases, more efficient resource allocation, and a greater ability to adapt to evolving customer demands.

Rapid Response to Changing Market Demands

Cloud-native development provides a crucial advantage in responding swiftly to market shifts. The agility inherent in cloud-native architectures allows businesses to quickly adapt to new opportunities and challenges. This adaptability is particularly valuable in today’s rapidly evolving business landscape. For instance, consider a retail company leveraging cloud-native services to launch a new online promotion. If the promotion proves unexpectedly popular, the cloud-native infrastructure can automatically scale to handle the increased traffic, preventing website crashes and ensuring a positive customer experience. This dynamic scalability is a key enabler of rapid response.
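The scaling decision in a scenario like this usually reduces to a simple proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), clamped to configured bounds. It omits the tolerance and stabilization behavior of the real controller, and the default bounds are arbitrary assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas averaging 90% CPU against a 60% target, the rule asks for 6 replicas; when load drops to 30%, it scales back to 2.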

Strategic Advantages for Organizations

Cloud-native adoption offers a multitude of strategic advantages that contribute to long-term organizational success. They extend beyond immediate operational improvements and often translate into improved market share, increased customer satisfaction, and enhanced brand reputation.

- Enhanced Innovation: Cloud-native environments foster a culture of experimentation and rapid prototyping. The ease of deploying and testing new features allows organizations to quickly iterate on ideas and bring innovative products and services to market. This is particularly important for staying ahead of the competition.

- Increased Market Agility: The ability to quickly respond to market changes is a critical strategic advantage. Cloud-native architectures enable organizations to adapt to new customer demands, emerging trends, and competitive pressures with greater speed and flexibility.

- Improved Customer Experience: Cloud-native applications are often more reliable, responsive, and scalable, leading to a better customer experience. This can translate into increased customer loyalty and positive word-of-mouth referrals.

- Cost Efficiency: While initial investments might be required, cloud-native development can lead to significant cost savings over time. The pay-as-you-go model of cloud services, coupled with optimized resource utilization, can reduce infrastructure costs and operational expenses.

Key Performance Indicators (KPIs) Positively Impacted by Cloud-Native Development

Cloud-native development directly impacts a range of key performance indicators (KPIs) that are critical to business success. Tracking these KPIs provides a clear measure of the benefits derived from cloud-native adoption. Monitoring these metrics allows organizations to gauge the effectiveness of their cloud-native strategy and make data-driven decisions.

- Time-to-Market: Cloud-native development significantly reduces the time it takes to bring new products and features to market. The faster release cycles and streamlined deployment processes contribute to this acceleration. For example, a software company might use cloud-native technologies to release updates to its mobile app on a weekly basis instead of monthly, providing users with new features and improvements faster than competitors.

- Application Performance: Cloud-native applications often exhibit superior performance due to optimized resource allocation and scalability. Faster response times and improved reliability contribute to a better user experience.

- Customer Satisfaction: Improved application performance, faster feature releases, and increased reliability directly translate into higher customer satisfaction levels. Cloud-native architectures help create a more positive and engaging customer experience.

- Operational Costs: Cloud-native environments often lead to reduced operational costs through optimized resource utilization, automation, and the pay-as-you-go model of cloud services. This can include reduced spending on infrastructure maintenance, energy consumption, and IT staff.

- Development Productivity: Cloud-native tools and frameworks enhance developer productivity by automating tasks, simplifying deployments, and providing access to a wider range of services. Developers can focus on writing code instead of managing infrastructure.

- Revenue Growth: Faster time-to-market, improved customer satisfaction, and increased market agility can all contribute to revenue growth. Cloud-native development empowers businesses to capitalize on market opportunities and expand their customer base.

End of Discussion

In conclusion, cloud-native development is not just a technological shift; it’s a strategic imperative for organizations aiming to thrive in the modern digital economy. By embracing cloud-native principles, businesses can unlock significant advantages in terms of agility, scalability, and cost efficiency. As the adoption of cloud-native practices continues to grow, those who embrace this approach will be best positioned to innovate, adapt, and ultimately, succeed in the years to come.

This transformation promises to reshape how we build, deploy, and manage applications, paving the way for a more dynamic and responsive future.

FAQ Resource

What are the primary differences between cloud-native and traditional application development?

Cloud-native development focuses on designing applications specifically for the cloud, emphasizing microservices, containers, and automation. Traditional development often builds applications that are then migrated to the cloud, potentially missing out on the cloud’s full benefits.

How does cloud-native development improve security?

Cloud-native approaches often incorporate robust security practices from the start, including automated security testing, containerization, and microsegmentation. This proactive approach can lead to improved security posture compared to traditional methods.

What skills are essential for cloud-native developers?

Cloud-native developers need expertise in containerization and orchestration (e.g., Docker, Kubernetes), CI/CD pipelines, microservices architecture, and cloud platforms. They also need a strong understanding of automation and DevOps principles.

What are the potential challenges of adopting cloud-native development?

Challenges can include the need for a cultural shift, the complexity of managing distributed systems, and the requirement for specialized skills. However, the benefits often outweigh these hurdles.

How can organizations measure the success of their cloud-native initiatives?

Success can be measured by metrics such as deployment frequency, lead time for changes, mean time to recovery, and the number of defects found in production. Cost savings and increased customer satisfaction are also important indicators.
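Several of these metrics can be computed directly from deployment and incident records. The sketch below assumes each record is a pair of `datetime` values (commit and deploy times for lead time, start and resolution times for recovery). The function names and data shapes are illustrative, not taken from any particular tool.

```python
from datetime import datetime, timedelta


def deployment_frequency(deploy_times, period_days):
    """Average number of deployments per day over the period."""
    return len(deploy_times) / period_days


def mean_hours(intervals):
    """Average length in hours of (start, end) datetime pairs."""
    seconds = [(end - start).total_seconds() for start, end in intervals]
    return sum(seconds) / len(seconds) / 3600


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    # Lead time for changes: commit time to deploy time (sample data).
    changes = [(t0, t0 + timedelta(hours=2)), (t0, t0 + timedelta(hours=4))]
    # Mean time to recovery: incident start to resolution (sample data).
    incidents = [(t0, t0 + timedelta(minutes=30))]
    print(deployment_frequency([end for _, end in changes], period_days=7))
    print(mean_hours(changes), mean_hours(incidents))
```

Tracking these values over time, rather than as one-off numbers, shows whether a cloud-native initiative is actually shortening delivery and recovery cycles.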