Designing for scalability and elasticity in the cloud is a critical aspect of modern application development, focusing on the ability of systems to adapt and perform under fluctuating workloads. This necessitates a deep understanding of cloud computing fundamentals, encompassing various service models and the pivotal roles of virtualization and automation. The following discourse explores the architectural strategies, technical considerations, and practical implementations necessary for building robust, adaptable, and cost-effective cloud-based solutions.

The presented material will systematically dissect the key components of scalable and elastic architectures, from database design and application architecture to load balancing and performance optimization. Furthermore, it addresses crucial aspects like Infrastructure as Code (IaC), security best practices, cost optimization strategies, and disaster recovery planning. Real-world case studies and practical examples will illustrate the successful application of these principles, offering actionable insights for architects and developers alike.

Understanding Scalability and Elasticity

Cloud computing offers unprecedented opportunities for businesses to adapt to fluctuating demands. Understanding the core concepts of scalability and elasticity is crucial for designing efficient and cost-effective cloud architectures. These concepts, while related, represent distinct approaches to managing resource allocation and application performance.

Fundamental Differences Between Scalability and Elasticity

Scalability and elasticity, though often used interchangeably, describe different characteristics of a system’s ability to handle increased workloads. Scalability refers to the capacity of a system to handle increased load. Elasticity, on the other hand, refers to the ability of a system to automatically adapt to changes in demand by dynamically provisioning and de-provisioning resources.

Scalability can be categorized into two primary types:

- Vertical Scaling (Scale Up): This involves increasing the resources of a single instance, such as adding more CPU, RAM, or storage to a server. This approach is limited by the physical capabilities of the hardware. A common example is upgrading a database server from 16GB to 32GB of RAM. Vertical scaling is often simpler to implement initially, but it can become expensive and has inherent limitations.

- Horizontal Scaling (Scale Out): This involves adding more instances of a system to handle the load. This is typically achieved by deploying additional servers or virtual machines. For instance, additional web servers can be deployed behind a load balancer to handle increased traffic. Horizontal scaling is generally more flexible and has a much higher capacity ceiling than a single machine, but it requires careful design to ensure data consistency and manage the distributed environment.

Elasticity builds upon scalability by automating the scaling process. An elastic system automatically adjusts its resources based on real-time demand. This automation is typically driven by monitoring metrics such as CPU utilization, memory usage, or queue depth. For example, an application deployed on a cloud platform can automatically launch new virtual machine instances when CPU utilization exceeds a predefined threshold, and shut down instances when the load decreases.

The benefits include cost optimization and improved responsiveness to changing workloads.
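
The threshold-driven behavior described above can be made concrete with a proportional sizing rule of the kind documented for the Kubernetes Horizontal Pod Autoscaler. The sketch below is illustrative only: the function name, the default limits, and the sample numbers are assumptions, not part of any provider's API.

```python
import math


def desired_instance_count(current_instances, current_metric, target_metric,
                           min_instances=1, max_instances=10):
    """Proportional rule: size the fleet so the average metric returns to
    the target, then clamp the result to the allowed range."""
    if current_instances < 1 or target_metric <= 0:
        raise ValueError("need at least one instance and a positive target")
    raw = math.ceil(current_instances * current_metric / target_metric)
    return max(min_instances, min(max_instances, raw))


# 4 instances averaging 90% CPU against a 60% target: scale out
print(desired_instance_count(4, 90.0, 60.0))  # 6
# 6 instances averaging 20% CPU against a 60% target: scale in
print(desired_instance_count(6, 20.0, 60.0))  # 2
```

Clamping to a minimum and maximum keeps a noisy metric from shrinking the fleet to zero or growing it without bound.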

Horizontal and Vertical Scaling Methods

The choice between horizontal and vertical scaling depends on the specific application and its requirements.

- Vertical Scaling Example: A database server initially provisioned with 8 vCPUs and 32 GB of RAM experiences performance degradation during peak hours. To address this, the administrator upgrades the server to 16 vCPUs and 64 GB of RAM. This increases the capacity of the single instance, enabling it to handle the increased load. This is a straightforward approach for immediate relief, but it is limited by the hardware’s maximum capacity.

- Horizontal Scaling Example: An e-commerce website experiences a surge in traffic during a holiday sale. To handle the increased load on the web servers, the system automatically provisions additional instances of the web application behind a load balancer. As traffic subsides after the sale, the system automatically de-provisions the extra instances, optimizing resource utilization and cost. This approach offers greater scalability and resilience.

The implementation of horizontal scaling often involves the use of load balancers to distribute traffic across multiple instances, and auto-scaling groups to automatically manage the number of instances based on predefined metrics.

Benefits of Implementing Scalable and Elastic Architectures

Implementing scalable and elastic architectures provides several significant advantages.

- Improved Performance: By scaling resources as needed, applications can maintain optimal performance even under heavy loads. This results in faster response times and a better user experience.

- Cost Optimization: Elasticity allows for dynamic resource allocation, ensuring that resources are only provisioned when required. This prevents over-provisioning and reduces operational costs. For example, a system that automatically scales down during off-peak hours can significantly reduce infrastructure expenses.

- Increased Availability: Horizontal scaling and redundancy mechanisms, such as load balancing and replication, enhance system availability. If one instance fails, the load balancer can automatically redirect traffic to other healthy instances, minimizing downtime.

- Enhanced Fault Tolerance: Distributing workloads across multiple instances increases fault tolerance. If one instance fails, the remaining instances can continue to operate, preventing a complete system outage.

- Rapid Deployment and Iteration: Cloud platforms often provide tools and services that simplify the deployment and scaling of applications. This allows for faster development cycles and quicker time to market.

Core Cloud Computing Concepts

The ability to design for scalability and elasticity in the cloud is fundamentally reliant on understanding the core cloud computing service models, the role of virtualization, and the power of automation. These concepts form the bedrock upon which resilient, efficient, and cost-effective cloud infrastructure is built. A grasp of these elements is crucial for effectively leveraging the cloud’s inherent advantages.

Cloud Service Models and Scalability

Cloud computing offers various service models, each providing a different level of control and management responsibility. These models, Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), are intrinsically linked to the scalability characteristics of cloud environments. The choice of service model significantly impacts how easily an application can scale to meet fluctuating demands.

- Infrastructure as a Service (IaaS): IaaS provides access to fundamental computing resources—virtual machines, storage, and networking—over the internet. Users manage the operating systems, middleware, and applications. Scalability in IaaS is primarily achieved by provisioning more resources as needed. For instance, a company experiencing a sudden surge in website traffic can quickly spin up additional virtual machines to handle the increased load. The scalability in IaaS is typically horizontal (adding more instances) and relies heavily on the user’s ability to automate resource allocation.

With IaaS, most of the management responsibility, including scaling automation, rests with the user.

- Platform as a Service (PaaS): PaaS offers a platform for developing, running, and managing applications, including the underlying infrastructure. Users manage the applications and data, while the provider manages the operating systems, middleware, and runtime environment. PaaS inherently supports scalability by abstracting away the complexities of infrastructure management. Applications deployed on PaaS platforms can often scale automatically based on predefined rules or dynamic load balancing.

A good example is a web application on Google App Engine, which can automatically add or remove instances based on user traffic.

- Software as a Service (SaaS): SaaS delivers software applications over the internet, on-demand. Users access the software through a web browser or a client application, without managing any of the underlying infrastructure. SaaS providers handle all aspects of the application, including infrastructure, middleware, and the application itself. Scalability in SaaS is largely managed by the provider. SaaS applications are designed to scale to accommodate a large user base.

A well-known example is Salesforce; it scales to accommodate millions of users and vast amounts of data without any user intervention in infrastructure scaling.

Virtualization and Elasticity

Virtualization is a cornerstone technology that enables elasticity in cloud environments. It allows multiple virtual machines (VMs) to run on a single physical server, effectively abstracting the underlying hardware. This abstraction is crucial for resource pooling and dynamic allocation.

- Resource Pooling: Virtualization allows cloud providers to pool physical resources (CPU, memory, storage) and allocate them to VMs as needed. This pooling maximizes resource utilization and efficiency. A physical server with unused resources can be quickly reallocated to a VM experiencing increased demand.

- Dynamic Allocation: VMs can be created, resized, or terminated on demand, providing the flexibility to scale resources up or down rapidly. Elasticity, the ability to automatically scale resources based on demand, is a direct consequence of this dynamic allocation. For example, an e-commerce website can automatically provision more VMs during peak shopping seasons and scale them down during off-peak hours.

- Hypervisors: Hypervisors are the software components that manage the VMs. They allow the sharing of hardware resources among multiple VMs. The two main types are: Type 1 (bare-metal) hypervisors that run directly on the hardware, and Type 2 (hosted) hypervisors that run on top of an operating system. Examples of hypervisors include VMware ESXi (Type 1) and VirtualBox (Type 2).

Automation in Cloud Resource Management

Automation is critical for managing cloud resources efficiently and achieving scalability. It involves using software to automate tasks such as provisioning, configuration, and scaling of resources. Automation reduces manual effort, minimizes errors, and allows for faster response to changes in demand.

- Infrastructure as Code (IaC): IaC allows you to manage and provision infrastructure through code, treating infrastructure like software. Tools like Terraform and AWS CloudFormation enable users to define infrastructure configurations in code and automate the deployment of resources. This approach promotes consistency, repeatability, and version control.

- Configuration Management: Configuration management tools, such as Ansible, Chef, and Puppet, automate the configuration and management of servers. These tools ensure that servers are configured consistently and according to defined standards, reducing the risk of misconfigurations and ensuring scalability.

- Orchestration: Orchestration tools automate the deployment, management, and scaling of complex applications. Kubernetes is a prime example, automating the deployment, scaling, and management of containerized applications.

- Auto-Scaling: Auto-scaling services automatically adjust the number of running instances of a service based on demand. These services monitor metrics, such as CPU utilization or network traffic, and automatically scale resources up or down. Examples include Amazon EC2 Auto Scaling and Azure Virtual Machine Scale Sets.

Designing for Scalability

Designing scalable systems in the cloud necessitates careful consideration of various components, including the database. Databases often become the bottleneck in high-traffic applications, making their scalability a critical aspect of overall system performance. This section delves into database considerations for building scalable applications, particularly within the context of an e-commerce platform.

Designing for Scalability: Database Considerations

A scalable database architecture is crucial for handling the fluctuating demands of an e-commerce platform. The design must accommodate increasing user traffic, product catalogs, and transaction volumes without compromising performance or availability. The following table outlines a proposed database architecture for such a platform, highlighting key components and their roles.

| Component | Description | Technology (Example) | Scalability Strategy |
|---|---|---|---|
| Database Server | Stores and manages the e-commerce platform’s data, including product information, user accounts, orders, and transactions. | PostgreSQL, MySQL, Amazon RDS | Vertical Scaling (limited), Horizontal Scaling (sharding, replication) |
| Caching Layer | Reduces database load by storing frequently accessed data in memory. Improves read performance. | Redis, Memcached | Horizontal Scaling (clustering) |
| Load Balancer | Distributes database queries across multiple database instances (replicas). Ensures high availability and improved read performance. | HAProxy, AWS Elastic Load Balancing | Automatic scaling based on traffic |
| Database Monitoring & Management Tools | Provides insights into database performance, identifies bottlenecks, and facilitates proactive scaling decisions. | Prometheus, Grafana, AWS CloudWatch | Alerting and automated scaling based on metrics |

To achieve scalability, several database scaling strategies can be employed, each with its own set of trade-offs.

- Sharding: This involves partitioning the database into smaller, more manageable parts (shards). Each shard holds a subset of the data. This allows for horizontal scaling, where new shards can be added to accommodate growing data volumes and traffic.

- Trade-offs: Sharding introduces complexity in data management, including data distribution, query routing, and potential for data skew (uneven distribution of data across shards). It requires careful planning to determine the sharding key and data partitioning strategy.

- Replication: This involves creating multiple copies (replicas) of the database. Read operations can be distributed across the replicas, improving read performance and providing high availability. Write operations typically go to the primary database and are replicated to the secondary replicas.

- Trade-offs: Replication can introduce eventual consistency issues, where data might not be immediately consistent across all replicas.

It also adds complexity in managing data synchronization and failover scenarios. Replication lag, the delay between a write to the primary and its propagation to the replicas, needs careful monitoring.

- Vertical Scaling: This involves increasing the resources (CPU, memory, storage) of a single database server. It’s a simpler approach but has limitations.

- Trade-offs: Vertical scaling has practical limits. Eventually, a single server’s resources will be exhausted. It can also lead to downtime during upgrades or maintenance.

Cost is another factor, as the largest instance sizes can cost disproportionately more than the capacity they add.

- Caching: Implementing a caching layer, such as Redis or Memcached, can significantly reduce the load on the database by storing frequently accessed data in memory.

- Trade-offs: Cache invalidation is a key challenge. Ensuring that the cache is updated when the underlying data changes is critical. Cache consistency and managing cache eviction policies are also important considerations.

Cache misses (when the requested data is not in the cache) still result in database queries.
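
Of the strategies above, sharding is the one where query routing is easiest to show in code. The following is a minimal sketch of hash-based routing, assuming a hypothetical `customer-N` sharding key and a fixed count of four shards. It also counts rows per shard, which is one simple way to watch for the data skew mentioned in the trade-offs.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 4  # assumed shard count, for illustration only


def shard_for(key: str, num_shards: int) -> int:
    """Map a sharding key to a shard index using a stable hash.

    hashlib is used instead of the built-in hash(), whose string output
    changes between Python processes.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Route hypothetical customer IDs and count rows per shard to spot skew.
customer_ids = [f"customer-{i}" for i in range(10_000)]
rows_per_shard = Counter(shard_for(cid, NUM_SHARDS) for cid in customer_ids)

for shard, rows in sorted(rows_per_shard.items()):
    print(f"shard {shard}: {rows} rows")
```

Simple modulo routing like this remaps most keys whenever the shard count changes. Consistent hashing or a lookup directory are common alternatives when shards are added often.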

Implementing database connection pooling is a critical step in improving database performance, especially in high-traffic applications. Connection pooling manages a pool of database connections, reusing existing connections rather than establishing new ones for each database request. This reduces the overhead of connection creation and destruction, leading to faster response times and improved resource utilization. The following procedure outlines the steps for implementing database connection pooling:

- Choose a Connection Pooling Library: Select a suitable connection pooling library for the chosen programming language and database technology. Examples include HikariCP (Java), the connection pool built into SQLAlchemy (Python), and generic-pool (Node.js, developed in the node-pool repository). These libraries provide efficient connection management and configuration options.

- Configure the Pool: Configure the connection pool with parameters such as the maximum number of connections, minimum idle connections, connection timeout, and connection validation query. These parameters should be tuned based on the application’s workload and database server capacity. A common starting point is to set the maximum pool size to the number of CPU cores multiplied by a factor (e.g., 2x or 3x) to allow for concurrent operations.

- Initialize the Pool: Initialize the connection pool during application startup. This involves providing the connection details (database URL, username, password) to the pooling library. This step establishes the initial set of connections.

- Obtain Connections from the Pool: When the application needs to interact with the database, it requests a connection from the pool. The pool either provides an existing idle connection or creates a new one if necessary (up to the maximum pool size).

- Use the Connection: Use the acquired connection to execute database queries. Ensure that database operations are properly encapsulated within transactions to maintain data consistency.

- Return Connections to the Pool: After completing database operations, the application must return the connection to the pool. This is typically done using a `finally` block or a context manager to ensure that connections are always released, even in the event of errors.

- Monitor and Tune: Regularly monitor the connection pool’s performance metrics, such as connection usage, wait times, and connection creation/destruction rates. Adjust the pool configuration parameters (e.g., maximum pool size) as needed to optimize performance and resource utilization. Tools like Prometheus and Grafana can be used to visualize these metrics.
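
The steps above can be sketched with the standard library alone. This is a deliberately small illustration, not a substitute for a production pooling library: the `ConnectionPool` class and its parameters are hypothetical, and SQLite stands in for the real database so the example runs anywhere.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """A small fixed-size pool: connections are created once and reused."""

    def __init__(self, database: str, max_size: int = 4, timeout: float = 5.0):
        self._timeout = timeout
        self._idle = queue.Queue(maxsize=max_size)
        for _ in range(max_size):
            # check_same_thread=False lets pooled connections move between threads
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self):
        # Blocks until a connection is free; raises queue.Empty on timeout.
        conn = self._idle.get(timeout=self._timeout)
        try:
            yield conn
            conn.commit()
        except Exception:
            conn.rollback()
            raise
        finally:
            self._idle.put(conn)  # always return the connection to the pool

    def idle_count(self) -> int:
        return self._idle.qsize()

    def close(self):
        while not self._idle.empty():
            self._idle.get_nowait().close()


pool = ConnectionPool(":memory:", max_size=2)

with pool.connection() as conn:
    value = conn.execute("SELECT 1").fetchone()[0]
    print(value, pool.idle_count())  # one connection is checked out here

print(pool.idle_count())  # the connection is back in the pool
```

The context manager covers the "return connections" step: the `finally` clause runs whether the query succeeds or raises, so a failed request cannot leak a connection.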

By implementing these strategies, an e-commerce platform can be designed to handle significant traffic volume and data growth, ensuring a positive user experience and business continuity.

Designing for Elasticity

Elasticity, a cornerstone of cloud computing, enables applications to dynamically adjust resources based on fluctuating demand. This responsiveness ensures optimal performance and cost efficiency by scaling resources up or down as needed. Effectively designing for elasticity requires careful consideration of application architecture, infrastructure, and automation strategies.

Application Architecture for Autoscaling

Autoscaling relies heavily on an application’s architecture. A well-designed architecture facilitates the dynamic allocation and deallocation of resources without disrupting service availability. This involves breaking down the application into independent, scalable components.

- Microservices Architecture: Decomposing an application into small, independent services, each responsible for a specific business function. This allows for independent scaling of individual services based on their specific resource needs. For example, an e-commerce platform might have separate microservices for product catalog, shopping cart, and payment processing. If the payment processing service experiences a surge in demand, it can be scaled independently without affecting other services.

- Stateless Applications: Designing applications to be stateless, meaning they do not store client-specific data on the server. All necessary information for a request is contained within the request itself or is stored in a shared, persistent data store. This allows for easy scaling as any instance of the application can handle any request. Stateless applications are crucial for horizontal scaling, where new instances can be added or removed without impacting user sessions.

- Decoupling Components: Employing message queues and event-driven architectures to decouple application components. This allows for asynchronous communication and processing, reducing the impact of load spikes on any single component. A message queue, like Apache Kafka or RabbitMQ, acts as an intermediary, buffering requests and enabling components to process them at their own pace. This approach is particularly useful for handling tasks that are not time-critical.

- Load Balancing: Utilizing load balancers to distribute incoming traffic across multiple instances of an application. Load balancers monitor the health of each instance and automatically route traffic away from unhealthy instances. This ensures high availability and optimal resource utilization. Popular load balancing solutions include AWS Elastic Load Balancing (ELB), Google Cloud Load Balancing, and Azure Load Balancer.
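
To illustrate the decoupling idea, the sketch below uses Python's in-process `queue.Queue` as a stand-in for a broker such as RabbitMQ or Kafka. The producer enqueues work and moves on while a small pool of workers drains the queue at its own pace. The function name, the order IDs, and the worker count are illustrative assumptions.

```python
import queue
import threading


def run_pipeline(order_ids, num_workers=3):
    """Producer enqueues work; a pool of workers drains it asynchronously."""
    tasks = queue.Queue()
    processed = []
    lock = threading.Lock()

    def worker():
        while True:
            order_id = tasks.get()
            if order_id is None:        # sentinel: no more work for this worker
                tasks.task_done()
                return
            with lock:
                processed.append(f"receipt-for-{order_id}")
            tasks.task_done()

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()

    for order_id in order_ids:          # put() returns without waiting for a worker
        tasks.put(order_id)
    for _ in workers:                   # one sentinel per worker
        tasks.put(None)

    tasks.join()                        # wait until every queued item is handled
    for w in workers:
        w.join()
    return processed


receipts = run_pipeline(range(20))
print(len(receipts))
```

Because producers and consumers only share the queue, the number of workers can change without touching the producer, which is what makes this pattern friendly to autoscaling.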

Implementing Autoscaling Rules

Autoscaling rules are the mechanism by which cloud environments automatically adjust resources. These rules are typically based on metrics such as CPU utilization, memory usage, network traffic, or custom application-specific metrics. The specific implementation varies depending on the cloud provider.

For instance, in Amazon Web Services (AWS), autoscaling groups (ASGs) are used to manage the scaling of Amazon EC2 instances. The following is a simplified example of how autoscaling rules might be configured using AWS CloudWatch and an ASG:

- Define Metrics: Monitor relevant metrics, such as CPU utilization, using CloudWatch.

- Create Alarm: Set up CloudWatch alarms to trigger scaling actions based on predefined thresholds. For example, an alarm could be configured to trigger when CPU utilization exceeds 70% for a sustained period.

- Configure Autoscaling Group: Define the minimum, maximum, and desired capacity of the ASG. The ASG will launch or terminate instances based on the alarms triggered by CloudWatch.

- Scaling Policies: Configure scaling policies that define how the ASG should respond to the alarms. This includes specifying the number of instances to add or remove when an alarm is triggered. For example, a scaling policy might specify adding two instances when the CPU utilization alarm is triggered.

Similar implementations exist in other cloud providers, such as Google Cloud’s managed instance groups and Azure’s virtual machine scale sets, which also utilize metrics, alarms, and scaling policies to achieve autoscaling.
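
The alarm-plus-policy flow can be simulated in a few lines. The sketch below does not call any cloud API. The `ScalingGroup` class, the 70% and 30% thresholds, the three-sample window, and the step size are all assumptions chosen to mirror the example in the text.

```python
from dataclasses import dataclass


@dataclass
class ScalingGroup:
    min_size: int
    max_size: int
    desired: int


def evaluate(group, cpu_samples, high=70.0, low=30.0, periods=3, step=2):
    """Apply a simple step policy to the most recent CPU samples.

    Scale out by `step` when the last `periods` samples all exceed `high`;
    scale in by one when they are all below `low`. Capacity always stays
    within the group's minimum and maximum.
    """
    recent = cpu_samples[-periods:]
    if len(recent) < periods:
        return group.desired            # not enough data to raise an alarm
    if all(s > high for s in recent):
        group.desired = min(group.max_size, group.desired + step)
    elif all(s < low for s in recent):
        group.desired = max(group.min_size, group.desired - 1)
    return group.desired


group = ScalingGroup(min_size=2, max_size=6, desired=2)
print(evaluate(group, [55, 72, 81, 90]))   # sustained breach: 2 -> 4
print(evaluate(group, [90, 85, 65]))       # breach not sustained: stays at 4
print(evaluate(group, [25, 20, 15]))       # sustained low load: 4 -> 3
```

Requiring several consecutive samples is a rough stand-in for the "sustained period" in the alarm definition. Managed services typically add cooldown periods as well, so that a fleet does not oscillate between scaling out and scaling in.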

Stateless Application Design for Enhanced Elasticity

Stateless applications are fundamental to achieving true elasticity. By eliminating the need to store session-specific data on individual instances, they allow for seamless scaling and improved resilience.

- Session Management: Instead of storing session data locally, utilize a shared session store, such as Redis or Memcached. This allows any instance to access session information.

- Data Storage: Store all application data in a shared, persistent data store, such as a relational database (e.g., PostgreSQL, MySQL) or a NoSQL database (e.g., MongoDB, Cassandra). This ensures data consistency and accessibility regardless of which instance handles a request.

- Request Handling: Each request should contain all the information needed for processing. Avoid relying on server-side session data to retrieve user information or other context.

- Caching: Implement caching mechanisms to reduce the load on the database and improve performance. Caching frequently accessed data in a distributed cache, such as Redis, can significantly improve response times.
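
A short sketch shows why a shared session store makes instances interchangeable. Here `SessionStore` is an in-memory stand-in for a store such as Redis, and `AppInstance` is a hypothetical web instance that keeps no per-user data of its own. Both names are illustrative.

```python
import copy
import uuid


class SessionStore:
    """In-memory stand-in for a shared session store such as Redis."""

    def __init__(self):
        self._data = {}

    def save(self, session_id, session):
        # deepcopy mimics the serialization a networked store would perform
        self._data[session_id] = copy.deepcopy(session)

    def load(self, session_id):
        return copy.deepcopy(self._data.get(session_id, {}))


class AppInstance:
    """A stateless instance: all session data lives in the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def login(self, user):
        session_id = uuid.uuid4().hex
        self.store.save(session_id, {"user": user, "cart": []})
        return session_id

    def add_to_cart(self, session_id, item):
        session = self.store.load(session_id)
        if not session:
            raise KeyError("unknown session")
        session["cart"].append(item)
        self.store.save(session_id, session)
        return f"{self.name} updated the cart for {session['user']}"

    def view_cart(self, session_id):
        return self.store.load(session_id).get("cart", [])


store = SessionStore()
a, b = AppInstance("instance-a", store), AppInstance("instance-b", store)

sid = a.login("alice")              # the session is created via instance A
print(b.add_to_cart(sid, "book"))   # a later request lands on instance B
print(a.view_cart(sid))             # either instance sees the same cart
```

Since neither instance holds the session, a load balancer can send each request anywhere, and an instance can be terminated during scale-in without logging users out.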

The adoption of stateless applications has been instrumental in the success of numerous high-traffic websites and services. For example, the architecture of many large-scale social media platforms is heavily reliant on stateless principles, enabling them to handle millions of concurrent users without significant performance degradation.

Load Balancing and Traffic Management

Effective load balancing and traffic management are crucial for ensuring application scalability and elasticity in the cloud. These techniques distribute incoming network traffic across multiple server instances, preventing any single instance from becoming overloaded and thus improving application performance, availability, and resilience. This section explores the role of load balancers, different load balancing algorithms, and the implementation of Content Delivery Networks (CDNs) to optimize application delivery.

Load Balancers in Traffic Distribution

Load balancers act as intermediaries between clients and backend server instances. Their primary function is to distribute incoming client requests across a pool of available servers, optimizing resource utilization and minimizing response times.

- Architecture: Load balancers typically sit in front of a group of server instances. When a client request arrives, the load balancer selects a server from the pool based on a pre-defined algorithm and forwards the request.

- Health Checks: Load balancers continuously monitor the health of the backend server instances. If a server fails a health check, the load balancer automatically removes it from the pool, preventing traffic from being routed to an unhealthy instance. This automated failover mechanism ensures high availability.

- Types of Load Balancers: There are various types of load balancers, including hardware load balancers (dedicated physical appliances), software load balancers (applications running on virtual machines), and cloud-based load balancers (managed services offered by cloud providers). Each type has its own advantages and disadvantages in terms of cost, performance, and features.

- Benefits: Load balancers offer several key benefits, including improved application performance by distributing traffic, enhanced availability through automatic failover, increased scalability by allowing the addition of more server instances as demand grows, and improved security by acting as a single point of entry for client requests.

Load Balancing Algorithms

The choice of load balancing algorithm significantly impacts the efficiency and effectiveness of traffic distribution. Different algorithms are suited for different application needs and traffic patterns.

- Round Robin: This is the simplest algorithm, where requests are distributed sequentially to each server in the pool. It is easy to implement and works well for scenarios where all servers have similar processing capabilities and requests are relatively uniform.

- Least Connections: This algorithm directs traffic to the server with the fewest active connections. It is useful when server processing times vary, as it attempts to balance the load based on the current workload of each server.

- IP Hash: This algorithm uses the client’s IP address to generate a hash, which determines which server receives the request. This ensures that requests from the same client are consistently routed to the same server, which can be beneficial for session persistence.

- Weighted Round Robin/Least Connections: These algorithms allow administrators to assign weights to servers, reflecting their processing capacity. Servers with higher weights receive a larger proportion of the traffic. This is useful when servers have different hardware specifications.

- Performance Considerations: The selection of the optimal algorithm depends on the specific application’s requirements, the server infrastructure, and the expected traffic patterns. Factors to consider include server capacity, the need for session persistence, and the potential for uneven load distribution.
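
Three of the algorithms above are compact enough to sketch directly. The classes below are simplified illustrations with hypothetical names, not the implementation used by any particular load balancer. Weights, health checks, and thread safety are left out.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Prefer the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


class IPHash:
    """Send each client IP to the same server via a stable hash."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        return self._servers[int.from_bytes(digest[:4], "big") % len(self._servers)]


servers = ["web-1", "web-2", "web-3"]

rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])   # wraps around after the third server

lc = LeastConnections(servers)
first, second = lc.pick(), lc.pick()
lc.release(first)                      # the first request finishes
print(lc.pick())                       # an idle server is chosen again

ih = IPHash(servers)
print(ih.pick("203.0.113.7") == ih.pick("203.0.113.7"))  # same client, same server
```

Note that the IP-hash mapping shifts for many clients when the server list changes, which matters for session persistence in an autoscaled pool.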

Content Delivery Networks (CDNs) for Application Performance

Content Delivery Networks (CDNs) are geographically distributed networks of servers that cache content closer to users, improving application performance and reducing latency. They are particularly effective for serving static content such as images, videos, and CSS/JavaScript files.

- How CDNs Work: When a user requests content, the CDN identifies the closest server to the user and delivers the content from its cache. If the content is not cached, the CDN retrieves it from the origin server and caches it for future requests.

- Benefits of Using CDNs: CDNs significantly improve application performance by reducing latency, improving load times, and reducing the load on the origin server. They also enhance application availability by providing redundancy and protection against distributed denial-of-service (DDoS) attacks.

- CDN Implementation: Implementing a CDN typically involves selecting a CDN provider, configuring the CDN to cache content from the origin server, and updating DNS records to direct user requests to the CDN.

- CDN Use Cases: CDNs are widely used by websites and applications that serve content to a global audience, such as e-commerce platforms, media streaming services, and social media networks. For example, a global e-commerce platform could use a CDN to ensure fast loading times for product images and videos, regardless of the user’s geographic location.

- Performance Metrics: The effectiveness of a CDN can be measured by metrics such as reduced latency (measured in milliseconds), improved page load times, and reduced origin server load (measured in percentage of CPU utilization).
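
The pull-through behavior described under "How CDNs Work" can be modeled as a cache with a time-to-live in front of an origin fetch function. The sketch below is a toy model of a single edge node. The `EdgeCache` class, the 60-second TTL, and the hit-ratio counter are assumptions for illustration, not a provider API.

```python
import time


class EdgeCache:
    """Pull-through cache: serve from the edge, fall back to the origin."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}              # path -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path):
        now = self._clock()
        entry = self._entries.get(path)
        if entry and entry[0] > now:    # cached and not yet expired
            self.hits += 1
            return entry[1]
        self.misses += 1
        body = self._fetch(path)        # go back to the origin server
        self._entries[path] = (now + self._ttl, body)
        return body

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


origin_requests = []


def origin(path):
    origin_requests.append(path)
    return f"<contents of {path}>"


edge = EdgeCache(origin, ttl_seconds=60.0)
for _ in range(5):
    edge.get("/img/product-1.png")

print(len(origin_requests), edge.hit_ratio())  # 1 origin fetch, hit ratio 0.8
```

Five requests produce a single origin fetch, which is the origin offload the text describes. The TTL bounds how long stale content can be served after the origin changes.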

Monitoring and Performance Optimization

Effective monitoring and performance optimization are crucial for maintaining the scalability and elasticity of cloud applications. Continuous observation of key metrics allows for proactive identification of bottlenecks and inefficiencies, enabling timely adjustments to ensure optimal resource utilization and application responsiveness. A well-defined monitoring strategy provides the necessary data to understand application behavior under varying loads, facilitating informed decisions regarding resource allocation and system architecture.

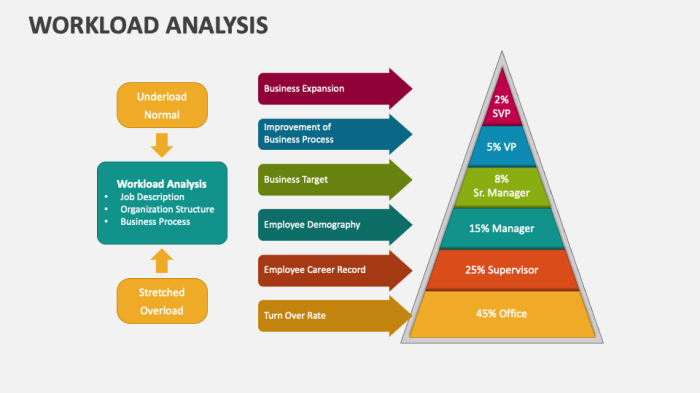

Identifying Key Performance Indicators (KPIs) for Monitoring Scalability and Elasticity

KPIs serve as quantifiable measures to assess the performance of a cloud application and its ability to scale and adapt to changing demands. Selecting the appropriate KPIs is essential for gaining insights into system behavior and identifying areas for improvement. These metrics should be carefully chosen to reflect both the application’s internal workings and its external interactions.

- CPU Utilization: Monitors the percentage of time the CPU is busy processing tasks. High CPU utilization can indicate a bottleneck and a need for more capacity. For instance, an e-commerce platform experiencing a surge in traffic might see CPU utilization on its web servers increase dramatically. If CPU utilization consistently exceeds 80% during peak hours, it’s a clear indicator that additional resources (e.g., more virtual machines) are needed to handle the load.

- Memory Utilization: Tracks the amount of memory being used by the application. Insufficient memory can lead to performance degradation and application crashes. An application consistently using over 90% of its allocated memory might benefit from either vertical scaling (increasing the memory of the existing instance) or horizontal scaling (adding more instances).

- Network Throughput: Measures the amount of data transferred over the network per unit of time. High network latency or low throughput can impact application responsiveness. Monitoring network throughput is especially critical for applications that handle large data transfers, such as video streaming services. A sudden drop in throughput might indicate network congestion or a problem with the underlying infrastructure.

- Disk I/O: Monitors the rate at which data is read from and written to disk. High disk I/O can indicate bottlenecks, especially for applications that perform a lot of disk operations. Databases, for example, are often heavily reliant on disk I/O. Slow disk I/O can lead to increased query times and overall performance degradation.

- Response Time: Measures the time it takes for the application to respond to user requests. Slow response times directly impact user experience. Monitoring response times across different application components is crucial for identifying performance bottlenecks. For example, a significant increase in response time for database queries might indicate a need for database optimization or scaling.

- Error Rate: Tracks the percentage of requests that result in errors. A high error rate can indicate problems with the application’s code, infrastructure, or dependencies. Monitoring error rates is crucial for identifying and resolving issues that affect application stability.

- Request Rate (Throughput): Measures the number of requests processed per unit of time. This metric helps assess the application’s ability to handle load. Monitoring request rates allows you to see how the system performs under various load conditions.

- Queue Length: Measures the number of pending tasks waiting to be processed. A long queue length can indicate that the application is not able to process requests as quickly as they are arriving. For example, if a message queue used by an application has a consistently high queue length, it could indicate that the consumers are not keeping up with the rate at which messages are being produced.
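To make one of these KPIs concrete, the following is a minimal sketch of an alarm on the 80% CPU threshold mentioned above, written in Terraform for AWS CloudWatch. The variable `asg_name`, the topic name `ops-alerts`, and the 15-minute evaluation window are illustrative assumptions, not values from a real deployment.

```terraform
# Hypothetical input: the Auto Scaling Group whose instances are monitored.
variable "asg_name" {
  description = "Name of the Auto Scaling Group to watch"
  type        = string
}

# Assumed notification channel for the alarm.
resource "aws_sns_topic" "ops_alerts" {
  name = "ops-alerts"
}

# Fires when average CPU across the group stays above 80% for three
# consecutive 5-minute periods.
resource "aws_cloudwatch_metric_alarm" "cpu_high" {
  alarm_name          = "web-cpu-high"
  alarm_description   = "Average CPU above 80% for 15 minutes"
  namespace           = "AWS/EC2"
  metric_name         = "CPUUtilization"
  statistic           = "Average"
  comparison_operator = "GreaterThanThreshold"
  threshold           = 80
  period              = 300
  evaluation_periods  = 3
  alarm_actions       = [aws_sns_topic.ops_alerts.arn]

  dimensions = {
    AutoScalingGroupName = var.asg_name
  }
}
```

Requiring several consecutive periods before the alarm fires is one way to avoid reacting to short spikes; the right window depends on how quickly the workload changes.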

Designing a System for Automatically Collecting and Analyzing Performance Metrics

Automated collection and analysis of performance metrics are fundamental to effective monitoring. This system should gather data from various sources, process it, and present it in a readily understandable format. The design must consider the volume, velocity, and variety of the data generated by a cloud application.

- Data Collection: Utilize monitoring agents, application instrumentation, and cloud provider services to gather metrics.

- Metric Aggregation: Aggregate raw metrics to calculate averages, percentiles, and other useful statistics. For instance, the 95th percentile response time is the latency that 95% of requests stay under, so it exposes the slow tail of requests that an average would hide.

- Data Storage: Choose a suitable storage solution for the metrics data. Time-series databases are specifically designed for storing and querying time-stamped data. Popular options include Prometheus and InfluxDB; managed monitoring services such as Amazon CloudWatch fill the same role without a database to operate.

- Data Visualization: Use dashboards and visualizations to present the metrics data in a clear and concise manner. Tools like Grafana, Kibana, and the monitoring dashboards provided by cloud providers allow for the creation of interactive dashboards.

- Alerting and Notification: Configure alerts based on predefined thresholds and anomalies. When a metric exceeds a threshold, the system should trigger notifications to relevant stakeholders.

- Automated Analysis: Implement automated analysis techniques, such as anomaly detection and trend analysis, to identify potential issues. Machine learning algorithms can be used to predict future performance and proactively identify potential problems.
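As an illustration of the aggregation and alerting steps, the sketch below defines a CloudWatch alarm on the 95th percentile of load balancer response time. It assumes an Application Load Balancer on AWS; the variables `alb_arn_suffix` and `alert_topic_arn` and the 0.5-second target are hypothetical placeholders.

```terraform
# Hypothetical inputs: the load balancer to monitor and where to send alerts.
variable "alb_arn_suffix" {
  description = "ARN suffix of the Application Load Balancer"
  type        = string
}

variable "alert_topic_arn" {
  description = "ARN of the SNS topic that receives notifications"
  type        = string
}

# Alerts when p95 response time exceeds the assumed 0.5 s target for
# five consecutive one-minute periods.
resource "aws_cloudwatch_metric_alarm" "latency_p95" {
  alarm_name          = "web-latency-p95-high"
  namespace           = "AWS/ApplicationELB"
  metric_name         = "TargetResponseTime" # Reported in seconds
  extended_statistic  = "p95"
  comparison_operator = "GreaterThanThreshold"
  threshold           = 0.5
  period              = 60
  evaluation_periods  = 5
  treat_missing_data  = "notBreaching"
  alarm_actions       = [var.alert_topic_arn]

  dimensions = {
    LoadBalancer = var.alb_arn_suffix
  }
}
```

Alerting on a percentile rather than the average means a small share of very slow requests can trigger the alarm even when most traffic looks healthy.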

Creating a Detailed Procedure for Optimizing Application Performance in a Scalable Environment

Optimizing application performance in a scalable environment requires a systematic approach that includes identifying bottlenecks, implementing improvements, and continuously monitoring the results. This procedure should be iterative and adaptable to changing workloads and application requirements.

- Baseline Performance Assessment: Establish a baseline of application performance under normal load conditions. This involves measuring key performance indicators and documenting the application’s behavior. This baseline serves as a reference point for evaluating the impact of optimization efforts.

- Bottleneck Identification: Use monitoring data to identify performance bottlenecks. Common bottlenecks include CPU saturation, memory pressure, network latency, and slow database queries. Analyze the data to pinpoint the specific components or processes that are causing performance issues.

- Optimization Strategies: Implement appropriate optimization strategies based on the identified bottlenecks. These strategies may include:

  - Code Optimization: Optimize application code to improve efficiency. This includes techniques such as code profiling, refactoring, and algorithm optimization. For example, replacing a repeated linear search inside a loop with a hash-based lookup.

  - Database Optimization: Optimize database queries, indexes, and schema. This can significantly improve the performance of database-driven applications. Examples include adding indexes to frequently queried columns or optimizing slow queries using query explain plans.

  - Caching: Implement caching mechanisms to reduce the load on backend systems. Caching can be applied at various levels, including web server caching, database query caching, and content delivery networks (CDNs).

  - Resource Scaling: Scale application resources (e.g., CPU, memory, storage) based on demand. This may involve both vertical scaling (increasing the resources of an existing instance) and horizontal scaling (adding more instances).

  - Load Balancing: Distribute traffic across multiple application instances using load balancers. This helps to ensure that no single instance is overloaded.

  - Network Optimization: Optimize network configuration and settings to reduce latency and improve throughput. This includes techniques such as optimizing TCP settings and using content delivery networks (CDNs) for static content.

Infrastructure as Code (IaC) for Scalability

Infrastructure as Code (IaC) is a paradigm shift in how cloud infrastructure is provisioned and managed. It treats infrastructure, such as servers, networks, and databases, as code, allowing for automated, repeatable, and version-controlled deployments. This approach is critical for building scalable and elastic cloud environments.

Benefits of Using IaC for Cloud Infrastructure

Using IaC offers significant advantages for managing cloud infrastructure, particularly in the context of scalability. It enhances efficiency, reduces errors, and improves the overall reliability of deployments.

- Automation: IaC automates infrastructure provisioning, eliminating manual configuration and reducing the risk of human error. This automation allows for rapid and consistent deployments, which is crucial for scaling up or down quickly.

- Repeatability: IaC allows for the creation of reusable infrastructure templates. These templates can be used to deploy identical environments repeatedly, ensuring consistency across different environments (e.g., development, testing, production). This repeatability is vital for scaling out resources when demand increases.

- Version Control: Infrastructure code can be stored in version control systems (e.g., Git), allowing for tracking changes, rollbacks, and collaboration. This ensures that infrastructure configurations are auditable and that changes can be managed effectively, preventing unexpected issues during scaling operations.

- Consistency: IaC enforces consistent infrastructure configurations across all environments. This reduces configuration drift and ensures that all resources are provisioned and configured in the same way, regardless of the deployment environment.

- Faster Deployment: IaC significantly speeds up infrastructure deployments. Automated deployments are much faster than manual processes, enabling organizations to respond quickly to changing demands and scale resources on-demand.

- Cost Optimization: IaC facilitates cost optimization by allowing for the automated deprovisioning of unused resources. This helps prevent unnecessary spending and ensures that resources are only consumed when needed.

Examples of IaC Tools and Their Use in Scalable Architectures

Several IaC tools are available, each with its strengths and weaknesses. Selecting the right tool depends on the specific requirements of the project and the cloud provider being used. These tools are instrumental in building scalable architectures by enabling automated provisioning and management of resources.

- Terraform: Terraform, developed by HashiCorp, is a popular IaC tool that supports multiple cloud providers (AWS, Azure, Google Cloud, etc.). It uses a declarative approach, where users define the desired state of the infrastructure, and Terraform automatically provisions and manages the resources to achieve that state.

For example, using Terraform, one can define a scalable architecture that includes an auto-scaling group for web servers, a load balancer to distribute traffic, and a database. Terraform can then automatically create and manage these resources, ensuring that the infrastructure scales up or down based on demand.

- AWS CloudFormation: CloudFormation is an IaC service provided by Amazon Web Services (AWS). It uses templates (written in YAML or JSON) to define and manage AWS resources. CloudFormation is well-integrated with other AWS services, making it easy to deploy and manage resources within the AWS ecosystem.

For instance, a CloudFormation template can be used to create an auto-scaling group for EC2 instances, along with an Elastic Load Balancer (ELB) and a relational database service (RDS) instance. The template ensures that these resources are created and configured correctly, and that the EC2 instances scale automatically based on predefined metrics.

- Azure Resource Manager (ARM) Templates: ARM templates are used to define and deploy Azure resources. They use JSON format to describe the infrastructure, including virtual machines, virtual networks, storage accounts, and other Azure services. ARM templates enable the creation of complex, scalable architectures within the Azure cloud.

Consider the scenario where an organization needs to deploy a scalable web application on Azure. Using an ARM template, they can define the necessary resources, such as virtual machines, virtual networks, load balancers, and databases. The template can then automatically deploy and configure these resources, enabling the web application to scale as needed.

- Google Cloud Deployment Manager: Deployment Manager is Google Cloud’s IaC tool. It uses YAML configuration files, optionally combined with Jinja2 or Python templates, to define and deploy Google Cloud resources. Deployment Manager supports a wide range of Google Cloud services and allows for the creation of complex, scalable infrastructure configurations.

For a scalable web application, Deployment Manager could be used to define resources such as Compute Engine instances, Cloud Load Balancing, and Cloud SQL databases. The deployment can then be automated, allowing for easy scaling and management of the application’s infrastructure.

Designing a Script Using IaC to Automatically Deploy a Scalable Web Application

Designing a script using IaC involves defining the desired state of the infrastructure in code. This code is then used to automatically provision and configure the resources needed to run the web application. The following example uses Terraform to illustrate the process.

Example: Deploying a Scalable Web Application using Terraform

This example outlines a simplified Terraform script to deploy a scalable web application on AWS. It includes an Auto Scaling Group (ASG) for web servers, a load balancer, and a security group.

Step 1: Define the provider

The script begins by specifying the AWS provider, including the region.

```terraform
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
  }
}

provider "aws" {
  region = "us-east-1"
}
```

Step 2: Create a Security Group

This section defines a security group to allow inbound traffic on ports 80 and 22 (for HTTP and SSH, respectively).

```terraform
resource "aws_security_group" "web_sg" {
  name        = "web-sg"
  description = "Allow HTTP and SSH inbound traffic"
  vpc_id      = "vpc-zzzzzzzzzzzzzzzzzzz" # Replace with your VPC ID (same VPC as the target group)

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"] # Narrow this to a trusted range outside of demos
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1" # Allow all outbound traffic
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Step 3: Create an Application Load Balancer (ALB)

This section sets up an ALB to distribute traffic across the web servers.

```terraform
resource "aws_lb" "app_lb" {
  name               = "app-lb"
  internal           = false # Publicly accessible
  load_balancer_type = "application"
  security_groups    = [aws_security_group.web_sg.id]
  subnets            = ["subnet-xxxxxxxxxxxxxxxxx", "subnet-yyyyyyyyyyyyyyyyy"] # Replace with your subnet IDs
}

resource "aws_lb_listener" "http_listener" {
  load_balancer_arn = aws_lb.app_lb.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.web_tg.arn
  }
}
```

Step 4: Create a Target Group

This defines a target group for the web servers.

```terraform
resource "aws_lb_target_group" "web_tg" {
  name     = "web-tg"
  port     = 80
  protocol = "HTTP"
  vpc_id   = "vpc-zzzzzzzzzzzzzzzzzzz" # Replace with your VPC ID
}
```

Step 5: Create an Auto Scaling Group (ASG)

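This section creates the launch template used by an ASG that launches EC2 instances and automatically scales them based on demand.

```terraform
resource "aws_launch_template" "web_lt" {
  name_prefix            = "web-lt-"
  image_id               = "ami-xxxxxxxxxxxxxxxxx" # Replace with your AMI ID
  instance_type          = "t2.micro"
  vpc_security_group_ids = [aws_security_group.web_sg.id]

  # Assumed placeholder: a minimal web server bootstrap for an Amazon Linux AMI.
  user_data = base64encode(<<-EOF
    #!/bin/bash
    yum install -y httpd
    systemctl enable --now httpd
    EOF
  )
}
```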

Explanation of the Script:

The Terraform script first defines the AWS provider and the region. It then creates a security group that allows inbound HTTP and SSH traffic. An Application Load Balancer (ALB) is configured to distribute traffic, with a listener on port 80. A target group is defined to register the EC2 instances with the load balancer. Finally, an Auto Scaling Group (ASG) is created using a launch template, which specifies the AMI, instance type, and user data (a simple web server configuration).

The ASG automatically scales the number of EC2 instances between the defined minimum and maximum sizes based on demand. The `target_group_arns` attribute in the ASG configuration ensures that instances launched by the ASG are registered with the load balancer.
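The Auto Scaling Group resource described above is not shown in full, so the following is a minimal sketch of what it might look like. It reuses the `web_lt` launch template, the `web_tg` target group, and the subnet placeholders from the earlier steps; the resource names `web_asg` and `cpu_target`, the minimum and maximum sizes, and the 60% CPU target are assumptions chosen for illustration.

```terraform
resource "aws_autoscaling_group" "web_asg" {
  name_prefix         = "web-asg-"
  min_size            = 2 # Assumed value
  max_size            = 6 # Assumed value
  desired_capacity    = 2 # Assumed value
  vpc_zone_identifier = ["subnet-xxxxxxxxxxxxxxxxx", "subnet-yyyyyyyyyyyyyyyyy"] # Replace with your subnet IDs
  target_group_arns   = [aws_lb_target_group.web_tg.arn]
  health_check_type   = "ELB" # Replace instances that fail load balancer health checks

  launch_template {
    id      = aws_launch_template.web_lt.id
    version = "$Latest"
  }
}

# Target tracking keeps average CPU across the group near the assumed 60% target
# by adding or removing instances within the min/max bounds.
resource "aws_autoscaling_policy" "cpu_target" {
  name                   = "cpu-target-tracking"
  autoscaling_group_name = aws_autoscaling_group.web_asg.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 60
  }
}
```

Without a scaling policy, an ASG only maintains its desired capacity; the policy is what makes the group respond to load.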

Deployment Process:

The deployment process involves the following steps:

- Initialization: Initialize Terraform by running `terraform init`. This downloads the necessary provider plugins.

- Planning: Create an execution plan by running `terraform plan`. This shows the changes that Terraform will make to your infrastructure.

- Application: Apply the changes by running `terraform apply`. Terraform will provision the resources defined in the script.

- Verification: After the deployment, verify that the web application is accessible by navigating to the DNS name of the load balancer in a web browser.

This example provides a basic framework for deploying a scalable web application. In a real-world scenario, the script would be expanded to include additional resources, such as a database, caching mechanisms, and more sophisticated scaling policies. The use of IaC allows for easy modification and redeployment of the infrastructure as requirements evolve.

Security Considerations in Scalable Architectures

Designing scalable and elastic cloud applications necessitates a robust security posture. Security is not an afterthought but an integral component of the architecture, ensuring the confidentiality, integrity, and availability of data and resources. A failure to adequately address security vulnerabilities can undermine the benefits of scalability and elasticity, exposing systems to attacks that could lead to data breaches, service disruptions, and financial losses.

Security Best Practices for Scalable Cloud Applications

Implementing security best practices is paramount when designing scalable cloud applications. This involves a multi-layered approach that encompasses various aspects of the application lifecycle, from design and development to deployment and ongoing operations.

- Principle of Least Privilege: Grant users and services only the minimum necessary permissions to perform their tasks. This limits the impact of a compromised account. For instance, database access should be restricted to only the specific tables and operations required by the application.

- Defense in Depth: Employ multiple layers of security controls to protect against various threats. This includes network segmentation, intrusion detection systems (IDS), web application firewalls (WAFs), and data encryption. If one layer fails, others remain to provide protection.

- Automated Security Testing: Integrate security testing into the CI/CD pipeline. This includes static code analysis, dynamic application security testing (DAST), and vulnerability scanning to identify and address security flaws early in the development process.

- Regular Security Audits and Assessments: Conduct periodic security audits and penetration testing to identify vulnerabilities and assess the effectiveness of security controls. This helps to proactively address potential weaknesses.

- Security Information and Event Management (SIEM): Utilize SIEM systems to collect, analyze, and correlate security logs from various sources. This enables early detection of security incidents and provides valuable insights for incident response.

- Patch Management: Implement a robust patch management process to promptly apply security updates to operating systems, software, and libraries. Unpatched systems are vulnerable to known exploits.

- Configuration Management: Securely configure cloud resources and services according to security best practices. This includes setting up network security groups, configuring access controls, and enabling logging and monitoring.

- Data Encryption: Employ encryption both in transit and at rest to protect sensitive data. This includes using TLS (the successor to SSL) for secure communication and encrypting data stored in databases and storage services.

- Compliance: Adhere to relevant industry regulations and compliance standards (e.g., GDPR, HIPAA, PCI DSS). This ensures that the application meets the necessary security requirements.

Implementing Robust Authentication and Authorization Mechanisms

Securely verifying the identity of users and controlling their access to resources are crucial aspects of a scalable architecture. Effective authentication and authorization mechanisms are essential to prevent unauthorized access and protect sensitive data.

- Multi-Factor Authentication (MFA): Implement MFA to add an extra layer of security beyond passwords. This typically involves requiring users to provide a second factor of authentication, such as a code from a mobile app or a biometric scan. MFA significantly reduces the risk of account compromise.

- Centralized Identity Management: Utilize a centralized identity management system (e.g., Active Directory, Microsoft Entra ID (formerly Azure Active Directory), Okta) to manage user identities and access controls. This simplifies user provisioning, deprovisioning, and access management across multiple applications and services.

- Role-Based Access Control (RBAC): Implement RBAC to define user roles and assign permissions based on those roles. This simplifies access management and ensures that users only have access to the resources they need.

- OAuth 2.0 and OpenID Connect (OIDC): Leverage OAuth 2.0 and OIDC for secure authentication and authorization, particularly for web and mobile applications. OAuth 2.0 lets users grant an application limited access to their data without sharing their credentials, and OIDC adds an identity layer on top of it for sign-in. For example, users can log in to an application using their Google or Facebook accounts.

- API Key Management: Securely manage API keys and tokens to control access to APIs. Implement rate limiting and other security measures to prevent abuse and protect API resources.

- Regular Password Policies: Enforce strong password policies, including password complexity requirements, regular password changes, and protection against password reuse.

- Monitoring and Auditing of Authentication and Authorization Events: Implement comprehensive logging and monitoring of authentication and authorization events. This allows for the detection of suspicious activities and security incidents. Regularly review audit logs to identify potential security threats.
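Some of these controls can themselves be expressed as code. As a small sketch, the Terraform resource below sets an account-wide password policy for AWS IAM users, mirroring the complexity, rotation, and reuse rules listed above. The specific numbers (14 characters, 90 days, 24 remembered passwords) are assumed values, not recommendations from this article.

```terraform
resource "aws_iam_account_password_policy" "strict" {
  minimum_password_length        = 14 # Assumed value
  require_lowercase_characters   = true
  require_uppercase_characters   = true
  require_numbers                = true
  require_symbols                = true
  password_reuse_prevention      = 24 # Number of previous passwords that cannot be reused
  max_password_age               = 90 # Days before a password must be changed
  allow_users_to_change_password = true
}
```

Keeping the policy in version control gives the same audit trail and review process for identity settings as for the rest of the infrastructure.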

Securing Data Storage and Transmission in a Scalable Environment

Protecting data during storage and transmission is critical in a scalable cloud environment. Data breaches can have severe consequences, including financial losses, reputational damage, and legal liabilities.

- Data Encryption at Rest: Encrypt data stored in databases, object storage, and other storage services. This protects data from unauthorized access even if the storage infrastructure is compromised. Use strong encryption algorithms and manage encryption keys securely.

- Data Encryption in Transit: Utilize TLS (the successor to SSL) to encrypt data transmitted between clients and servers and between services within the cloud environment. This prevents eavesdropping and protects data confidentiality.

- Secure Storage Services: Utilize cloud-provider-specific storage services with built-in security features. These services often offer features like encryption, access controls, and data replication for high availability and durability.

- Database Security: Secure databases by implementing access controls, encrypting sensitive data, and regularly patching database software. Consider using database-specific security features like auditing and intrusion detection.

- Object Storage Security: Secure object storage by implementing access controls, enabling versioning, and using object lifecycle policies. Regularly review object storage configurations to ensure data protection.

- Network Segmentation: Segment the network to isolate sensitive data and services from less secure areas. This limits the impact of a security breach. For instance, place databases in a private subnet accessible only to authorized application servers.

- Data Loss Prevention (DLP): Implement DLP solutions to identify and prevent sensitive data from leaving the organization’s control. DLP can monitor data in transit and at rest and prevent unauthorized data transfers.

- Regular Backups and Disaster Recovery: Implement a robust backup and disaster recovery strategy to protect against data loss. Regularly back up data and test the recovery process to ensure data can be restored in case of a failure or security incident.
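Two of the measures above, network segmentation and encryption at rest, can be sketched in Terraform as follows. The variables `vpc_id` and `app_sg_id`, the PostgreSQL port, and the bucket name prefix are hypothetical and only illustrate the pattern.

```terraform
# Hypothetical inputs: the VPC and the application tier's security group.
variable "vpc_id" {
  type = string
}

variable "app_sg_id" {
  type = string
}

# Network segmentation: the database accepts connections only from the
# application tier's security group, not from arbitrary addresses.
resource "aws_security_group" "db_sg" {
  name        = "db-sg"
  description = "Allow PostgreSQL only from the application tier"
  vpc_id      = var.vpc_id

  ingress {
    from_port       = 5432
    to_port         = 5432
    protocol        = "tcp"
    security_groups = [var.app_sg_id]
  }
}

# Encryption at rest: objects in this bucket are encrypted with a KMS key by default.
resource "aws_s3_bucket" "app_data" {
  bucket_prefix = "app-data-"
}

resource "aws_s3_bucket_server_side_encryption_configuration" "app_data" {
  bucket = aws_s3_bucket.app_data.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "aws:kms"
    }
  }
}

# Block every form of public access to the bucket.
resource "aws_s3_bucket_public_access_block" "app_data" {
  bucket                  = aws_s3_bucket.app_data.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```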

Cost Optimization Strategies

Effective cost optimization is crucial for realizing the full benefits of cloud scalability and elasticity. While the cloud offers unprecedented flexibility, unchecked spending can quickly erode its advantages. This section delves into strategies for minimizing cloud expenses, leveraging provider pricing models, and implementing robust monitoring and control mechanisms.

Right-Sizing Resources

Determining the appropriate size of resources is paramount for cost efficiency. Over-provisioning leads to wasted resources and unnecessary costs, while under-provisioning can compromise performance and availability.

The process involves a thorough analysis of resource utilization, including CPU, memory, storage, and network bandwidth. This analysis informs the selection of instance types, storage tiers, and other cloud services that best match the workload’s requirements. For example, a web server experiencing predictable traffic patterns might benefit from a smaller, more cost-effective instance type during off-peak hours.

- Continuous Monitoring: Implement monitoring tools to track resource utilization metrics in real-time. This data is essential for identifying underutilized or overutilized resources.

- Performance Testing: Conduct performance tests under realistic load conditions to determine the resource requirements of applications. This helps to avoid over-provisioning.

- Automated Scaling: Utilize auto-scaling features to dynamically adjust resources based on demand. This ensures that resources are scaled up during peak periods and scaled down during off-peak periods, optimizing costs.

- Instance Type Selection: Choose instance types that align with the workload’s specific needs. For example, memory-intensive applications benefit from memory-optimized instances, while compute-intensive applications benefit from compute-optimized instances.

Leveraging Cloud Provider Pricing Models

Cloud providers offer a variety of pricing models designed to cater to different usage patterns and commitment levels. Understanding and utilizing these models is key to cost optimization.

Each pricing model has its own advantages and disadvantages, and the optimal choice depends on the specific workload characteristics and predictability. Strategic selection can lead to significant cost savings. For instance, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer several pricing options.

- On-Demand Instances: Ideal for workloads with unpredictable usage patterns or short-term needs. Pay-as-you-go pricing provides flexibility but can be more expensive than other options.

- Reserved Instances/Committed Use Discounts: Provide significant discounts for committing to a specific instance type for a fixed period (e.g., one or three years). Suitable for steady-state workloads with predictable resource requirements. AWS Reserved Instances, Azure Reserved Virtual Machine Instances, and GCP Committed Use Discounts all function similarly.

- Spot Instances/Preemptible VMs: Offer substantial discounts for utilizing spare cloud capacity. However, these instances can be terminated with short notice if the provider needs the capacity back. Best suited for fault-tolerant workloads.

- Savings Plans: AWS Savings Plans, the Azure savings plan for compute, and GCP’s spend-based (flexible) committed use discounts offer a flexible approach to reducing compute costs. These plans provide discounts in exchange for a commitment to a consistent amount of compute usage (measured in dollars per hour) over a one- or three-year term. The most flexible variants, such as AWS Compute Savings Plans, are not tied to a specific instance type or region, providing greater flexibility than Reserved Instances or resource-based Committed Use Discounts.

- Tiered Pricing: Some services, such as storage and data transfer, offer tiered pricing, where the cost per unit decreases as usage volume increases.
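Pricing models can also be combined within a single workload. The sketch below shows one way to do this on AWS: an Auto Scaling Group that keeps a small On-Demand baseline and fills the rest of its capacity mostly with Spot Instances. The variables, group name, instance types, and the 25% On-Demand share are assumptions for illustration, and the pattern only suits fault-tolerant workloads.

```terraform
# Hypothetical inputs: an existing launch template and the subnets to use.
variable "launch_template_id" {
  type = string
}

variable "subnet_ids" {
  type = list(string)
}

resource "aws_autoscaling_group" "batch_workers" {
  name_prefix         = "batch-workers-"
  min_size            = 1
  max_size            = 10
  vpc_zone_identifier = var.subnet_ids

  mixed_instances_policy {
    instances_distribution {
      on_demand_base_capacity                  = 1  # Always keep one On-Demand instance
      on_demand_percentage_above_base_capacity = 25 # Remaining capacity: 25% On-Demand, 75% Spot
      spot_allocation_strategy                 = "capacity-optimized"
    }

    launch_template {
      launch_template_specification {
        launch_template_id = var.launch_template_id
        version            = "$Latest"
      }

      # Offering several instance types improves the odds of obtaining Spot capacity.
      override {
        instance_type = "m5.large"
      }

      override {
        instance_type = "m5a.large"
      }
    }
  }
}
```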

Implementing Cost Monitoring and Control

Proactive monitoring and control are essential for preventing unexpected cloud spending and identifying areas for optimization.

Effective cost management involves establishing clear budgets, setting up alerts, and regularly analyzing spending patterns. The goal is to gain visibility into cloud costs and proactively address any anomalies or inefficiencies.

- Budgeting: Set up budgets to track cloud spending and receive alerts when spending approaches or exceeds predefined thresholds. Cloud providers offer budgeting tools that allow for defining budgets based on various criteria, such as service, region, or tag.

- Cost Allocation Tags: Utilize cost allocation tags to categorize and track spending by department, project, or application. This provides granular insights into cost distribution and facilitates chargeback or showback processes.

- Cost Reporting: Generate regular cost reports to analyze spending trends, identify cost drivers, and evaluate the effectiveness of cost optimization efforts. Cloud providers offer built-in reporting tools and allow integration with third-party cost management platforms.

- Anomaly Detection: Implement anomaly detection to identify unexpected spikes in spending. Many cloud providers and third-party tools offer machine-learning-based anomaly detection capabilities.

- Right-sizing and Resource Optimization Recommendations: Many cloud providers provide recommendations on how to optimize resources based on their utilization. Following these recommendations can help reduce costs. For example, AWS Cost Explorer, Azure Cost Management + Billing, and GCP Cost Management provide such recommendations.

- Automated Cost Optimization: Leverage automated tools and scripts to identify and implement cost-saving measures, such as deleting unused resources or scaling down underutilized instances.
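The budgeting and alerting steps above can be captured in code as well. The following sketch defines a monthly AWS budget with two notifications; the budget name, the 1000 USD limit, the thresholds, and the e-mail address are placeholder assumptions.

```terraform
resource "aws_budgets_budget" "monthly" {
  name         = "monthly-cloud-budget"
  budget_type  = "COST"
  limit_amount = "1000" # Assumed monthly limit
  limit_unit   = "USD"
  time_unit    = "MONTHLY"

  # Notify when actual spend passes 80% of the limit.
  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 80
    threshold_type             = "PERCENTAGE"
    notification_type          = "ACTUAL"
    subscriber_email_addresses = ["finops@example.com"] # Placeholder address
  }

  # Notify when forecasted spend is expected to exceed the limit.
  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 100
    threshold_type             = "PERCENTAGE"
    notification_type          = "FORECASTED"
    subscriber_email_addresses = ["finops@example.com"] # Placeholder address
  }
}
```

The forecast-based notification gives earlier warning than the actual-spend one, since it fires before the money has been spent.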

Storage Optimization

Storage costs can contribute significantly to overall cloud expenses. Optimizing storage usage involves choosing the appropriate storage tiers, managing data lifecycle, and employing data compression techniques.

Storage costs are often determined by the amount of data stored, the frequency of access, and the level of data redundancy. Effective storage management involves a combination of technical and strategic approaches.

- Storage Tiering: Utilize different storage tiers based on data access frequency. For example, frequently accessed data can reside in a high-performance tier, while less frequently accessed data can be stored in a lower-cost tier, such as archive storage. AWS S3 provides options like Standard, Intelligent-Tiering, Glacier, and Glacier Deep Archive. Azure offers Blob Storage tiers (Hot, Cool, Archive), and GCP offers Storage classes (Standard, Nearline, Coldline, Archive).

- Data Lifecycle Management: Implement data lifecycle policies to automatically move data between storage tiers based on age or access patterns. This ensures that data is stored in the most cost-effective tier at any given time.

- Data Compression: Compress data to reduce storage space and data transfer costs. Compression can be implemented at the application level or using cloud provider-specific features.

- Data Deduplication: Deduplicate data to eliminate redundant copies of data, thereby reducing storage consumption. This is particularly effective for large datasets with a high degree of data redundancy.

- Object Storage Optimization: Utilize object storage features, such as object lifecycle policies, to automatically manage object storage costs. These policies can be configured to transition objects to cheaper storage tiers or delete them based on predefined criteria.
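As an illustration of tiering and lifecycle management working together, the sketch below moves log objects in an S3 bucket to cheaper storage classes as they age and deletes them after a year. The variable `bucket_name`, the `logs/` prefix, and the 30/90/365-day schedule are assumed values.

```terraform
# Hypothetical input: the name of an existing bucket.
variable "bucket_name" {
  type = string
}

resource "aws_s3_bucket_lifecycle_configuration" "logs" {
  bucket = var.bucket_name

  rule {
    id     = "tier-and-expire-logs"
    status = "Enabled"

    # Apply the rule only to objects under the logs/ prefix.
    filter {
      prefix = "logs/"
    }

    # After 30 days, move to the infrequent-access tier.
    transition {
      days          = 30
      storage_class = "STANDARD_IA"
    }

    # After 90 days, move to archive storage.
    transition {
      days          = 90
      storage_class = "GLACIER"
    }

    # After one year, delete the object.
    expiration {
      days = 365
    }
  }
}
```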

Network Optimization

Network costs, including data transfer and inter-region traffic, can also be significant. Optimizing network usage involves strategies such as content delivery networks (CDNs), data transfer optimization, and private network configurations.

Network costs are influenced by factors such as data transfer volume, data transfer location, and network bandwidth utilization. Network optimization is essential for controlling these costs.

- Content Delivery Networks (CDNs): Utilize CDNs to cache content closer to users, reducing data transfer costs and improving performance. CDNs cache static content, such as images, videos, and JavaScript files, at edge locations around the world.

- Data Transfer Optimization: Optimize data transfer by compressing data, using efficient data transfer protocols, and minimizing inter-region data transfer.

- Private Network Configurations: Utilize private networks (e.g., AWS VPC, Azure VNet, GCP VPC) to keep data transfer within the same region, minimizing data transfer costs. Transferring data within a private network is typically less expensive than transferring data over the public internet.

- Data Transfer Pricing Models: Understand and leverage the cloud provider’s data transfer pricing models. Some providers offer free data transfer within a region or to specific services.

- Network Monitoring: Monitor network traffic to identify bottlenecks and optimize network performance. Tools such as network performance monitoring (NPM) and network traffic analysis (NTA) can provide insights into network utilization.
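To make the cost effect of a CDN concrete, here is a back-of-the-envelope sketch. The function names, the pricing model (every byte billed at the CDN rate, with cache misses additionally billed for the origin fetch), and any prices passed in are assumptions for illustration only; actual pricing differs by provider and should be taken from the provider's published rates.

```python
def monthly_cost_without_cdn(total_gb: float, origin_price_per_gb: float) -> float:
    """All traffic is served directly from the origin at the origin egress price."""
    return total_gb * origin_price_per_gb


def monthly_cost_with_cdn(
    total_gb: float,
    cache_hit_ratio: float,
    cdn_price_per_gb: float,
    origin_fetch_price_per_gb: float,
) -> float:
    """Estimate cost when a CDN fronts the origin (simplified, assumed model).

    Every delivered gigabyte is billed at the CDN price; only cache misses
    additionally incur a transfer charge from the origin to the CDN.
    """
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    miss_gb = total_gb * (1.0 - cache_hit_ratio)
    return total_gb * cdn_price_per_gb + miss_gb * origin_fetch_price_per_gb
```

With hypothetical inputs of 10,000 GB per month, a 90% cache hit ratio, 0.05 per GB for CDN delivery, and 0.02 per GB for origin fetches, the estimate comes to roughly 520, compared with 900 for serving everything from an origin priced at 0.09 per GB. The point of the sketch is the shape of the calculation, not the specific numbers.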

Disaster Recovery and Business Continuity

Designing for scalability and elasticity in the cloud necessitates robust strategies for disaster recovery (DR) and business continuity (BC). These are not merely optional add-ons; they are critical components that ensure application availability, data integrity, and business resilience in the face of unforeseen events, ranging from natural disasters to infrastructure failures. A well-defined DR and BC plan minimizes downtime, reduces data loss, and protects the organization’s reputation.

Design a Disaster Recovery Plan for a Scalable Cloud Application

A comprehensive disaster recovery plan for a scalable cloud application is a multi-faceted strategy encompassing data replication, failover mechanisms, and recovery procedures. The design must consider the application’s architecture, Recovery Time Objectives (RTOs), and Recovery Point Objectives (RPOs).

Data replication strategies are paramount.

- Active-Active Replication: This involves replicating data in real-time across multiple regions. Both regions serve live traffic, and in the event of a disaster in one region, traffic is automatically routed to the other. This minimizes RTO and RPO. However, it requires careful consideration of data consistency and potential conflicts. For example, a global e-commerce platform might use this strategy, ensuring users can access the platform even if one region experiences an outage.

- Active-Passive Replication: Data is replicated to a standby region, but only the primary region serves traffic. In a disaster, the standby region is activated. This approach is typically less expensive than active-active, but results in a longer RTO. A financial institution might use this, prioritizing data integrity over immediate availability, where a few minutes of downtime is acceptable.

- Asynchronous Replication: Data is replicated with a delay. This can be cost-effective but increases the RPO. This is commonly used for backup and archival purposes, where immediate recovery isn’t critical.

Failover mechanisms are critical to automated recovery.

- Automated Failover: The system automatically detects failures and initiates a failover to the backup infrastructure. This requires monitoring systems, health checks, and automated scripts. Cloud providers offer services like Amazon Route 53, Google Cloud DNS, and Azure Traffic Manager that facilitate automated failover based on health checks.

- Manual Failover: Requires human intervention to initiate the failover process. This is typically used for less critical applications or where complex decision-making is required.
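The automated failover logic described above can be sketched as a small state machine driven by health-check results. This is a simplified model, not how any particular DNS or traffic-management service is implemented; the `FailoverController` class, the region names in the usage note, and the threshold of three consecutive failures are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class FailoverController:
    """Switch traffic to a standby after N consecutive failed health checks."""

    primary: str
    standby: str
    failure_threshold: int = 3  # assumed value; tune per application
    active: str = field(init=False)
    _consecutive_failures: int = field(default=0, init=False)

    def __post_init__(self) -> None:
        self.active = self.primary

    def observe(self, primary_healthy: bool) -> str:
        """Record one health-check result and return the endpoint to route to."""
        if primary_healthy:
            # A single success resets the failure streak.
            self._consecutive_failures = 0
        else:
            self._consecutive_failures += 1
            if self._consecutive_failures >= self.failure_threshold:
                self.active = self.standby
        # Note: this sketch never fails back automatically; returning to the
        # primary is left as a deliberate (possibly manual) decision.
        return self.active
```

For example, a controller created as `FailoverController("region-a", "region-b")` keeps routing to `region-a` through isolated failures and only switches to `region-b` once three checks in a row have failed. Requiring a streak rather than a single failure is a common way to avoid failing over on transient network blips.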

Recovery procedures must be documented and tested.

- Recovery Steps: Documented steps for restoring the application, including infrastructure provisioning, data restoration, and DNS updates.

- Roles and Responsibilities: Clearly defined roles for individuals or teams responsible for different aspects of the recovery process.

- Communication Plan: A communication plan to inform stakeholders about the disaster and recovery progress.

RTO and RPO are key metrics.

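- RTO (Recovery Time Objective): The maximum acceptable downtime after a disaster, i.e., how quickly service must be restored.

- RPO (Recovery Point Objective): The maximum acceptable data loss, expressed as a span of time before the disaster (for example, the last five minutes of writes).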

The choice of replication strategy and failover mechanism is heavily influenced by the RTO and RPO requirements. For instance, a mission-critical application might demand an RTO of minutes and an RPO of seconds, requiring active-active replication.
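The relationship between these objectives and the replication strategy can be sketched as a simple decision function. The cut-off values below (five minutes, one minute, one hour, fifteen minutes) are illustrative assumptions rather than industry standards, and the function name is hypothetical; real decisions also weigh cost, consistency requirements, and operational complexity.

```python
from datetime import timedelta


def suggest_replication_strategy(rto: timedelta, rpo: timedelta) -> str:
    """Map RTO/RPO targets to one of the strategies discussed above.

    The thresholds are assumed values for illustration only. The stricter of
    the two objectives decides the outcome.
    """
    if rto <= timedelta(minutes=5) or rpo <= timedelta(minutes=1):
        return "active-active"
    if rto <= timedelta(hours=1) or rpo <= timedelta(minutes=15):
        return "active-passive"
    return "asynchronous"
```

Under these assumed thresholds, a mission-critical service with an RTO of two minutes and an RPO of ten seconds maps to active-active, while an archival workload that tolerates twelve hours of downtime and four hours of data loss maps to asynchronous replication.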

Organize the Steps Involved in Implementing a Business Continuity Strategy

Implementing a robust business continuity strategy involves a series of structured steps, from initial assessment to ongoing maintenance. This strategy ensures the continuation of critical business functions during disruptions.

The process starts with business impact analysis.

- Identify Critical Business Functions: Determine the essential functions required to maintain business operations. This involves identifying the applications, data, and infrastructure that are vital to the business.

- Assess Potential Threats: Evaluate potential threats, including natural disasters, cyberattacks, and human error. This includes understanding the likelihood and impact of each threat.

- Determine RTO and RPO: Define the acceptable downtime and data loss for each critical business function. These objectives drive the selection of recovery strategies.
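One common way to turn the threat assessment above into a prioritized list is to score each threat as likelihood multiplied by impact. The sketch below assumes a probability-style likelihood between 0 and 1 and an impact rating from 1 to 5; both scales, the `Threat` type, and the example values in the usage note are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: float  # assumed scale: estimated probability per year, 0.0 to 1.0
    impact: int        # assumed scale: 1 (minor) to 5 (severe)

    @property
    def risk_score(self) -> float:
        """Simple risk score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def rank_threats(threats: list[Threat]) -> list[Threat]:
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk_score, reverse=True)
```

For instance, with made-up inputs of human error (0.6, impact 3), cyberattack (0.3, impact 5), and natural disaster (0.05, impact 5), human error ranks first because its higher likelihood outweighs its lower impact. The ranking is only as good as the estimates fed into it, so the inputs deserve periodic review.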

Develop a business continuity plan.

- Develop Recovery Strategies: Design recovery strategies for each critical business function, considering different scenarios and their associated RTO and RPO.

- Select Recovery Solutions: Choose appropriate recovery solutions, such as data replication, backup and restore, and failover mechanisms.

- Document the Plan: Create a comprehensive business continuity plan that documents all recovery procedures, roles and responsibilities, and communication protocols.

Implement and test the plan.

- Implement Recovery Solutions: Implement the chosen recovery solutions in the cloud environment. This includes configuring infrastructure, setting up data replication, and automating failover mechanisms.

- Train Personnel: Train personnel on the business continuity plan, including their roles and responsibilities during a disaster.

- Conduct Regular Testing: Conduct regular tests of the business continuity plan to ensure its effectiveness and identify areas for improvement.

Maintain and update the plan.

- Monitor and Evaluate: Continuously monitor the effectiveness of the business continuity plan and evaluate its performance.

- Update the Plan: Regularly update the plan to reflect changes in the business environment, technology, and infrastructure. This includes reviewing and updating recovery strategies, roles and responsibilities, and contact information.

Create a Procedure for Testing and Validating Disaster Recovery Plans

Testing and validating disaster recovery plans is an iterative process. Regular testing ensures the plan’s effectiveness, identifies potential weaknesses, and provides opportunities for improvement. The procedure should encompass different testing levels and scenarios.

Testing types are essential.

- Tabletop Exercises: A discussion-based exercise where the team reviews the disaster recovery plan and discusses their roles and responsibilities in a simulated disaster scenario. This is a low-cost, low-impact way to familiarize the team with the plan.

- Walkthrough Tests: Participants walk through the steps of the disaster recovery plan, without actually executing them. This allows the team to identify potential issues and gaps in the plan.

- Failover Tests: Simulate a real disaster by initiating a failover to the backup infrastructure. This tests the effectiveness of the failover mechanisms and the recovery procedures.

Test frequency and scope are crucial.

- Test Frequency: Regular testing, ideally quarterly or semi-annually, depending on the criticality of the application and the frequency of changes to the infrastructure.

- Test Scope: Testing should cover all critical business functions and infrastructure components. This includes data replication, failover mechanisms, and recovery procedures.

Validation and improvement are ongoing.

- Document Test Results: Document the results of each test, including any issues encountered and the steps taken to resolve them.

- Analyze Test Results: Analyze the test results to identify areas for improvement in the disaster recovery plan.

- Update the Plan: Update the disaster recovery plan based on the test results and any changes in the business environment.
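Documenting and analyzing test results becomes easier when each failover test is recorded in a structured form and compared against the agreed objectives. The following is a minimal sketch under that assumption; the `DrTestResult` type, its field names, and the `evaluate` function are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class DrTestResult:
    scenario: str
    measured_rto: timedelta  # time from simulated failure to restored service
    measured_rpo: timedelta  # age of the most recent data that was recovered


def evaluate(result: DrTestResult, rto_target: timedelta, rpo_target: timedelta) -> list[str]:
    """Return a list of findings; an empty list means both objectives were met."""
    findings = []
    if result.measured_rto > rto_target:
        findings.append(
            f"{result.scenario}: RTO missed "
            f"({result.measured_rto} measured, {rto_target} target)"
        )
    if result.measured_rpo > rpo_target:
        findings.append(
            f"{result.scenario}: RPO missed "
            f"({result.measured_rpo} measured, {rpo_target} target)"
        )
    return findings
```

Each non-empty findings list is a natural input to the plan update step: it names the scenario, the objective that was missed, and by how much.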

Regular testing matters in practice. Consider a scenario in which a provider suffers a significant outage caused by a configuration error: a disaster recovery plan exists, but because it has not been exercised regularly, recovery is delayed and customers are significantly affected. Conversely, organizations that regularly test their disaster recovery plans are better prepared to handle unforeseen events and minimize their impact on business operations.

For instance, a large e-commerce company successfully recovered from a major outage by rapidly failing over to its backup region, thanks to its well-tested disaster recovery plan.

Case Studies: Real-World Examples

Understanding how scalable and elastic cloud architectures translate into tangible benefits requires examining real-world implementations. Analyzing successful case studies provides valuable insights into the challenges, solutions, and outcomes associated with cloud adoption. This section focuses on a prominent example, illustrating the practical application of the concepts discussed previously.

E-commerce Platform: Scaling for Peak Demand

This case study examines a leading e-commerce platform that successfully scaled its cloud infrastructure to manage significant fluctuations in traffic, particularly during peak shopping seasons like Black Friday and Cyber Monday. The platform’s previous on-premise infrastructure struggled to handle these surges, leading to performance degradation and lost revenue.

To address these issues, the e-commerce company migrated its applications and data to a public cloud provider, leveraging its scalability and elasticity features.

The architecture employed the following components:

- Application Tier: Deployed across multiple availability zones, utilizing auto-scaling groups to automatically provision and de-provision compute instances (e.g., virtual machines or containers) based on real-time demand. Monitoring metrics, such as CPU utilization, memory usage, and request latency, triggered scaling events.

- Database Tier: Utilized a managed database service (e.g., a relational database like PostgreSQL or MySQL or a NoSQL database like MongoDB) with features like read replicas and automatic failover to ensure high availability and performance. The database was designed to handle increased read and write operations during peak periods.

- Caching Layer: Implemented a distributed caching system (e.g., Redis or Memcached) to reduce the load on the database and improve response times. Frequently accessed data, such as product catalogs and user session information, was cached to serve requests more efficiently.

- Load Balancing: Employed a load balancer to distribute incoming traffic across the application instances, ensuring even resource utilization and preventing any single instance from becoming overloaded. The load balancer also provided health checks to automatically route traffic away from unhealthy instances.

- Content Delivery Network (CDN): Leveraged a CDN to cache static content (e.g., images, videos, and JavaScript files) closer to users, reducing latency and improving the overall user experience. The CDN also helped to offload traffic from the origin servers.
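The metric-driven scaling in the application tier can be illustrated with a proportional rule: scale the instance count by the ratio of the observed metric to its target, then clamp to configured bounds. This is a sketch of the general idea, not the case-study platform's actual policy; the function name, parameters, and default bounds are assumptions.

```python
import math


def desired_instances(
    current_instances: int,
    observed_metric: float,
    target_metric: float,
    min_instances: int = 2,
    max_instances: int = 20,
) -> int:
    """Proportional scaling: keep the per-instance metric near its target.

    Example metric: average CPU utilization in percent across the group.
    The min/max defaults are assumed values for illustration.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_instances * observed_metric / target_metric)
    # Clamp so the group never scales below or above its configured bounds.
    return max(min_instances, min(max_instances, raw))
```

With four instances averaging 90% CPU against a 60% target, the rule asks for six instances; when load falls well below the target, the same rule shrinks the group, which is what makes the tier elastic rather than merely scalable. Production autoscalers typically add cooldown periods and smoothing on top of a rule like this to avoid oscillation.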

The challenges faced by the e-commerce platform included:

- Predicting Demand: Accurately forecasting peak traffic periods was crucial for pre-emptively scaling the infrastructure. The company used historical data, seasonal trends, and marketing campaign forecasts to anticipate demand.

- Database Performance: The database was a potential bottleneck during peak periods. The company optimized database queries, implemented database sharding (partitioning data across multiple database instances), and scaled the database resources to handle the increased load.

- Cost Optimization: Managing cloud costs effectively was essential. The company used reserved instances (for stable workloads), spot instances (for fault-tolerant tasks), and auto-scaling policies to optimize resource utilization and minimize costs.
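The database sharding mentioned above needs a deterministic way to map a record to a database instance. A minimal sketch, assuming simple hash-based partitioning on a string key, is shown below; the function name and the example key in the usage note are hypothetical, and the case study does not say which partitioning scheme was actually used.

```python
import hashlib


def shard_for_key(key: str, shard_count: int) -> int:
    """Map a key to a shard index in [0, shard_count) using a stable hash.

    SHA-256 is used instead of Python's built-in hash(), which is randomized
    per process for strings and therefore unsuitable for routing.
    """
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce modulo N.
    return int.from_bytes(digest[:8], "big") % shard_count
```

Calling `shard_for_key("customer-42", 4)` always returns the same index, so every application instance routes that customer to the same shard. One known drawback of plain modulo hashing is that changing the shard count remaps most keys; schemes such as consistent hashing are often used when shards are added or removed frequently.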

The solutions implemented included:

- Automated Scaling: Implementing auto-scaling groups to automatically adjust the number of application instances based on real-time demand, allowing the platform to handle traffic spikes without manual intervention.

- Database Optimization: Optimizing database queries, implementing caching mechanisms, and scaling database resources to handle the increased load.

- Performance Monitoring: Establishing comprehensive monitoring and alerting systems to track key performance indicators (KPIs) such as response times, error rates, and resource utilization. This allowed the team to proactively identify and address performance bottlenecks.

- Infrastructure as Code (IaC): Using IaC tools (e.g., Terraform or AWS CloudFormation) to automate the provisioning and management of the infrastructure, enabling faster deployments and consistent configurations.
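The caching mechanisms referred to above are often implemented with the cache-aside pattern: read from the cache first, and on a miss load from the database and populate the cache with an expiry. The sketch below uses an in-memory dictionary as a stand-in for a distributed cache such as Redis or Memcached; the `CacheAside` class, the `loader` callback, and the injectable `clock` are assumptions made for this example.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Cache-aside with a time-to-live; a dict stands in for a real cache."""

    def __init__(
        self,
        loader: Callable[[str], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader  # e.g., a function that queries the database
        self._ttl = ttl_seconds
        self._clock = clock    # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the database is not touched
        value = self._loader(key)  # cache miss or expired entry
        self._entries[key] = (now + self._ttl, value)
        return value
```

Repeated reads of a hot key, such as a popular product page, reach the loader only once per TTL window, which is how the caching layer reduces database load during peaks. The TTL bounds how stale a cached value can become; data that must always be current needs explicit invalidation on write instead.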

The benefits realized by the e-commerce platform were significant:

- Improved Performance: The platform experienced a substantial reduction in response times and improved overall performance, even during peak traffic periods.

- Enhanced Scalability: The ability to automatically scale the infrastructure allowed the platform to handle massive traffic spikes without performance degradation.

- Increased Availability: The use of multiple availability zones, automatic failover mechanisms, and health checks ensured high availability and minimized downtime.

- Reduced Costs: The implementation of cost optimization strategies helped to reduce overall cloud spending.

- Increased Revenue: The improved performance and scalability contributed to increased sales and revenue.

Visual Representation of the Architecture: Imagine a diagram illustrating the e-commerce platform’s cloud architecture. The diagram shows the following key components and their interactions: