Cloud computing offers incredible flexibility and scalability, but it also presents the challenge of managing costs effectively. One of the most impactful strategies for optimizing cloud spending is automating cloud resource shutdown schedules. Imagine a world where your development environments automatically power down when not in use, or your testing resources are gracefully decommissioned outside of peak hours. This guide will explore how to make this vision a reality, transforming your cloud infrastructure into a lean, efficient, and cost-effective powerhouse.

This document will delve into the intricacies of automating cloud resource shutdowns. We will cover everything from understanding the core benefits and identifying the right tools to implementing schedules, monitoring performance, and ensuring robust security. Whether you are a seasoned cloud architect or just starting your cloud journey, this guide provides the knowledge and practical steps you need to significantly reduce your cloud bills and maximize your return on investment.

Understanding the Need for Automated Cloud Resource Shutdown

Cloud computing offers unprecedented flexibility and scalability, but these benefits come with the responsibility of efficient resource management. A critical aspect of this management is the strategic shutdown of cloud resources when they are not actively in use. Automating this process can significantly impact operational costs and resource utilization.

Cost-Saving Benefits of Shutting Down Unused Cloud Resources

Automated shutdowns directly translate to financial savings. Cloud providers typically charge based on resource consumption, which includes compute time, storage, and network bandwidth. By shutting down resources when they are idle, organizations avoid unnecessary compute charges. A stopped resource is not always free, however: attached storage, for example, generally continues to bill.

- Reduced Operational Expenses: Eliminating wasted compute time for development environments during nights and weekends, for instance, leads to tangible cost reductions.

- Optimized Resource Allocation: Shutdown schedules free up resources, allowing for more efficient allocation during peak demand periods. This can prevent the need for over-provisioning, further reducing costs.

- Improved Return on Investment (ROI): By optimizing cloud spending, the overall ROI on cloud investments improves. The cost savings can be reinvested in other areas, such as innovation or new product development.

Common Scenarios Where Automated Shutdowns Are Beneficial

Several use cases benefit greatly from automated shutdown schedules. These scenarios typically involve resources that are not required 24/7.

- Development and Testing Environments: Development and testing environments are frequently used during business hours and often idle outside of those times, so automated shutdowns can cut their costs considerably. For example, a company might schedule its test servers to shut down at 6 PM each evening and restart at 8 AM the following day, which removes 14 of every 24 compute hours from the bill.

- Staging Environments: Staging environments, used for pre-production testing, often have periods of inactivity. Scheduling shutdowns during these inactive periods can prevent unnecessary charges.

- Batch Processing Jobs: Resources dedicated to batch processing jobs can be automatically shut down after the jobs are completed. This prevents the waste of compute resources when no processing is required.

- Seasonal Applications: Applications with seasonal demand can be scaled down and shut down during off-peak periods. For instance, an e-commerce site might scale down its resources significantly after the holiday shopping season.
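To make the savings from a schedule concrete, the following minimal sketch estimates the share of compute hours removed by an on/off schedule. The function names are illustrative, and the figures cover compute hours only; storage and other charges that continue while a resource is stopped are not modeled.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def weekly_running_hours(start_hour, stop_hour, days_per_week=7):
    """Hours per week a resource runs when started at start_hour and stopped at stop_hour."""
    if not 0 <= start_hour < stop_hour <= 24:
        raise ValueError("expected 0 <= start_hour < stop_hour <= 24")
    if not 0 <= days_per_week <= 7:
        raise ValueError("days_per_week must be between 0 and 7")
    return (stop_hour - start_hour) * days_per_week


def compute_savings_fraction(start_hour, stop_hour, days_per_week=7):
    """Fraction of always-on compute hours avoided by the schedule."""
    running = weekly_running_hours(start_hour, stop_hour, days_per_week)
    return 1 - running / HOURS_PER_WEEK


# On at 8 AM, off at 6 PM, every day: 70 of 168 hours running.
every_day = compute_savings_fraction(8, 18)
# Same hours, Monday to Friday only: 50 of 168 hours running.
weekdays_only = compute_savings_fraction(8, 18, days_per_week=5)

print(f"Every day: {every_day:.0%} fewer compute hours")          # 58%
print(f"Weekdays only: {weekdays_only:.0%} fewer compute hours")  # 70%
```

Multiplying the resulting fraction by the hourly rate of the resources in question gives a rough upper bound on the compute savings.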

Potential Risks of Not Implementing Shutdown Schedules

Failing to implement automated shutdown schedules can lead to several risks, impacting both financial performance and operational efficiency.

- Increased Cloud Costs: The most direct risk is the accumulation of unnecessary cloud expenses. Unused resources continue to accrue charges, impacting the overall budget.

- Inefficient Resource Utilization: Resources are left idle, reducing the overall efficiency of the cloud infrastructure. This can lead to wasted capacity and potentially impact performance during peak times.

- Security Vulnerabilities: Unattended resources may be vulnerable to security threats. Shutdown schedules can limit the attack surface and reduce the risk of unauthorized access.

- Operational Overhead: Manual shutdown and startup processes are time-consuming and prone to errors. Automated schedules eliminate this overhead and ensure consistent resource management.

Impact of Cloud Resource Uptime Versus Downtime on Different Business Areas

The impact of cloud resource uptime and downtime varies significantly across different business areas. Understanding these impacts is crucial for establishing appropriate shutdown schedules and ensuring business continuity.

| Business Area | Impact of Uptime | Impact of Downtime | Examples |
|---|---|---|---|
| Development | Faster development cycles, improved collaboration, and efficient testing. | Delayed releases, impaired developer productivity, and increased debugging time. | Development teams relying on testing servers that are consistently available for code testing and debugging. |
| Testing | Thorough and timely testing, efficient identification of bugs, and increased software quality. | Incomplete testing, missed bugs, and compromised software quality. | Testing environments that are regularly available, allowing for comprehensive testing before deployment. |
| Production | Seamless user experience, increased customer satisfaction, and continuous revenue generation. | Service outages, loss of revenue, damage to brand reputation, and customer dissatisfaction. | E-commerce websites ensuring 24/7 availability to avoid missed sales opportunities. |
| Marketing | Effective campaign execution, timely data analysis, and accurate performance measurement. | Delayed campaign launches, inaccurate performance data, and inefficient marketing spending. | Marketing teams using data analytics tools for real-time insights and campaign optimization. |

Identifying Cloud Providers and Their Automation Tools

Automating cloud resource shutdown schedules requires understanding the capabilities of different cloud providers and their respective automation tools. This section delves into the built-in features offered by major cloud providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) – comparing their scheduling capabilities and identifying suitable services for automation. We will also examine the advantages and disadvantages of using native tools versus third-party solutions for each provider.

Cloud Provider Automation Tools

Each major cloud provider offers a suite of native tools designed to automate various tasks, including resource shutdown schedules. These tools leverage the provider’s infrastructure and services to offer seamless integration and potentially reduce operational overhead. The choice between native and third-party solutions often depends on factors like complexity, cost, and specific requirements.

Amazon Web Services (AWS) Automation

AWS provides a robust set of tools for automating resource shutdown schedules. Key services include:

- AWS CloudWatch Events (now Amazon EventBridge): This service allows you to schedule events based on a cron expression, enabling the triggering of actions at specific times. For example, you can schedule an event to stop an EC2 instance at 6 PM every weekday.

- AWS Systems Manager (SSM): SSM provides a feature called “Run Command,” which can be used to execute commands on EC2 instances or other managed instances. You can combine Run Command with CloudWatch Events to schedule instance shutdowns.

- AWS Lambda: A serverless compute service that can be triggered by CloudWatch Events or other events. Lambda functions can be written to stop or start resources based on a schedule.

The scheduling capabilities within AWS are highly flexible, allowing for precise control over resource shutdown times. You can use cron expressions to define schedules that meet various needs, from simple daily shutdowns to more complex recurring schedules.

Here’s a breakdown of the pros and cons.

Pros of Native AWS Tools

- Integration: Seamlessly integrates with other AWS services.

- Cost-Effective: Often included as part of the AWS service offering, reducing additional costs.

- Scalability: Designed to handle large-scale deployments.

- Security: Leverages AWS’s robust security infrastructure.

- Simplified Management: Reduces the need for external tools.

Cons of Native AWS Tools

- Complexity: Can become complex to manage for intricate scheduling scenarios.

- Vendor Lock-in: Tightly coupled with the AWS ecosystem.

- Limited Features: May lack some advanced features found in third-party solutions.

- Debugging: Debugging can be more challenging when issues arise.

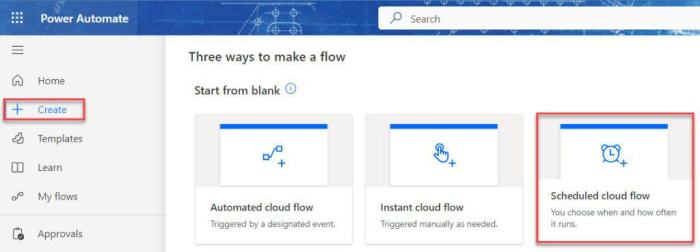

Microsoft Azure Automation

Azure offers several tools for automating resource shutdown schedules:

- Azure Automation: Provides a service for automating tasks using runbooks. Runbooks can be written in PowerShell or Python to stop or start Azure resources based on schedules.

- Azure Logic Apps: A cloud service that allows you to create automated workflows and integrate various services. Logic Apps can be used to schedule resource shutdowns by triggering actions based on schedules.

- Azure Functions: A serverless compute service, similar to AWS Lambda, that can be triggered by timers or other events to perform actions like stopping or starting resources.

- Azure Resource Manager (ARM) Templates: Although not directly for scheduling, ARM templates can be used to deploy resources with defined schedules, often in conjunction with Azure Automation or Logic Apps.

Azure’s scheduling capabilities are versatile, with options for both simple and complex scheduling requirements. Azure Automation and Logic Apps offer robust features for managing schedules and integrating with other Azure services.

Here’s a comparison of pros and cons.

Pros of Native Azure Tools

- Integration: Strong integration with other Azure services.

- Cost-Effective: Can be included in existing Azure subscriptions.

- Automation: Provides robust automation capabilities.

- Security: Leverages Azure’s security features.

- Centralized Management: Simplifies resource management.

Cons of Native Azure Tools

- Complexity: Runbooks and Logic Apps can become complex to design and maintain.

- Vendor Lock-in: Tightly integrated with the Azure ecosystem.

- Limited Feature Sets: May lack some advanced scheduling features.

- Learning Curve: Requires familiarity with Azure services and scripting languages.

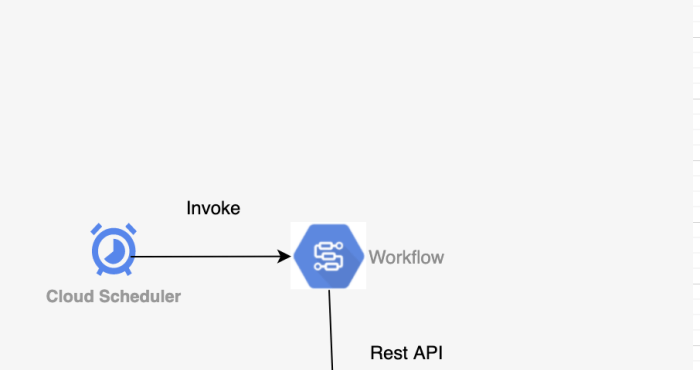

Google Cloud Platform (GCP) Automation

GCP provides tools for automating resource shutdown schedules:

- Cloud Scheduler: A fully managed cron job scheduler that allows you to schedule tasks at specific times or intervals. It can trigger Cloud Functions (over HTTP or Pub/Sub), call App Engine apps, or send HTTP requests.

- Cloud Functions: A serverless compute service that can be triggered by Cloud Scheduler or other events to perform actions like stopping or starting resources.

- Cloud Pub/Sub: A messaging service that can be used to trigger Cloud Functions or other services based on messages, enabling event-driven scheduling.

- Cloud Monitoring: Allows for monitoring resource usage and can be integrated with other services for automated actions, such as shutting down resources based on performance metrics.

GCP offers flexible scheduling options, including cron-based scheduling with Cloud Scheduler and event-driven scheduling using Cloud Functions and Pub/Sub.

Here’s a comparison of pros and cons.

Pros of Native GCP Tools

- Integration: Seamless integration with other GCP services.

- Cost-Effective: Can be included in existing GCP subscriptions.

- Scalability: Designed to handle large-scale deployments.

- Security: Leverages GCP’s security infrastructure.

- Ease of Use: Cloud Scheduler offers a user-friendly interface.

Cons of Native GCP Tools

- Limited Features: May lack advanced features found in third-party solutions.

- Vendor Lock-in: Tightly coupled with the GCP ecosystem.

- Debugging: Debugging can be more challenging.

- Dependency: Requires familiarity with GCP services and architecture.

Planning and Designing Shutdown Schedules

Planning and designing effective cloud resource shutdown schedules is crucial for optimizing costs and ensuring resource availability when needed. This involves a systematic approach to determine the optimal shutdown times, considering resource dependencies, business requirements, and the capabilities of the chosen cloud provider’s automation tools. Careful planning prevents disruptions and maximizes the benefits of automated shutdowns.

Identifying Resource Dependencies

Before implementing any shutdown schedule, it’s essential to identify and understand the dependencies between cloud resources. These dependencies determine the order in which resources must be shut down and started to avoid application failures or data loss. Failing to account for dependencies can lead to significant operational issues.

Identifying resource dependencies involves the following:

- Application Architecture Review: Analyze the application’s architecture to understand how different components interact. Identify which resources rely on others. For example, a database server is likely a dependency for web servers and application servers.

- Resource Mapping: Create a detailed map of all cloud resources, including their types (e.g., virtual machines, databases, storage) and their relationships. This can be achieved using cloud provider management consoles, infrastructure-as-code tools (e.g., Terraform, CloudFormation), or third-party monitoring and dependency mapping tools.

- Dependency Testing: Conduct thorough testing to validate the identified dependencies. Simulate shutdown and startup scenarios to verify that resources are shut down and started in the correct order, and that applications function as expected. This testing phase helps uncover any unforeseen dependencies.

- Documentation: Document all identified dependencies, including the order in which resources should be shut down and started. This documentation should be easily accessible to the operations team.
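Once dependencies are documented, the shutdown and startup order can be derived mechanically rather than maintained by hand. The sketch below uses Python’s standard `graphlib` module (Python 3.9+) for a topological sort; the resource names are hypothetical placeholders for a simple three-tier application.

```python
from graphlib import TopologicalSorter

# Hypothetical resources: each key depends on the resources in its set.
dependencies = {
    "web-server": {"app-server"},
    "app-server": {"database"},
    "database": set(),
}


def startup_order(deps):
    """Dependencies first: a resource starts only after everything it relies on."""
    return list(TopologicalSorter(deps).static_order())


def shutdown_order(deps):
    """Reverse of startup: dependents stop before the resources they rely on."""
    return list(reversed(startup_order(deps)))


print("Start:", startup_order(dependencies))
print("Stop: ", shutdown_order(dependencies))
```

If the map contains a circular dependency, `static_order()` raises `graphlib.CycleError`, which is a useful early warning that the documented dependencies need another look.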

Designing a Workflow for Determining Optimal Shutdown Times

Determining the optimal shutdown times for various resource types requires a structured workflow that considers business hours, usage patterns, and service level agreements (SLAs). This workflow ensures that resources are available when needed while minimizing operational costs.

The workflow includes the following steps:

- Usage Pattern Analysis: Analyze historical resource usage data to identify periods of low activity. This can be achieved by reviewing CPU utilization, network traffic, and storage I/O metrics. Cloud providers offer tools for monitoring and analyzing resource usage.

- Business Hour Definition: Define the standard business hours during which the application or service needs to be fully operational. This information is crucial for determining when resources should be available.

- SLA Consideration: Review service level agreements (SLAs) to identify any requirements for uptime and availability. Ensure that shutdown schedules do not violate any SLA commitments.

- Resource Grouping: Group resources based on their function and dependencies. This helps streamline the scheduling process. For example, all web servers can be grouped together.

- Shutdown Time Determination: Based on usage patterns, business hours, and SLAs, determine the optimal shutdown time for each resource group. This is typically after business hours and before the next scheduled start time.

- Startup Time Determination: Determine the optimal startup time for each resource group, typically before the start of business hours or the expected peak usage period.

- Schedule Implementation: Implement the shutdown and startup schedules using the chosen cloud provider’s automation tools.

- Monitoring and Optimization: Continuously monitor resource usage and adjust the schedules as needed. This iterative process ensures that the schedules remain optimal over time.
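The usage-pattern step of this workflow can be approximated with a small helper that finds the longest quiet period in a day. This is a minimal sketch: it assumes you have already exported 24 hourly average CPU percentages from your provider’s monitoring tool, and the 5% threshold and sample data are illustrative only.

```python
def longest_idle_window(hourly_cpu, threshold=5.0):
    """Return (start_hour, length_in_hours) of the longest run below threshold.

    hourly_cpu holds 24 average CPU percentages, index 0 covering 00:00-01:00.
    The search wraps around midnight. Returns None when no hour is idle.
    """
    if len(hourly_cpu) != 24:
        raise ValueError("expected exactly 24 hourly values")
    idle = [value < threshold for value in hourly_cpu]
    if all(idle):
        return (0, 24)

    best_start, best_len = None, 0
    run_start, run_len = None, 0
    # Scan two days' worth of hours so a run crossing midnight is seen whole.
    for i in range(48):
        if idle[i % 24]:
            if run_len == 0:
                run_start = i % 24
            run_len += 1
            if run_len > best_len:
                best_start, best_len = run_start, run_len
        else:
            run_len = 0
    return (best_start, best_len) if best_len else None


# Hypothetical profile: busy from 08:00 to 19:00, nearly idle otherwise.
usage = [2.0] * 8 + [40.0] * 11 + [2.0] * 5
print(longest_idle_window(usage))  # (19, 13): idle from 19:00 for 13 hours
```

The result is only a candidate window; business hours and SLA commitments from the other steps still decide whether the resource may actually be shut down during it.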

Creating Different Schedules Based on Business Needs

Different business needs require different shutdown schedules. Flexibility is key, allowing for various schedule types to accommodate development, testing, and production environments. This approach ensures that resources are used efficiently across different stages of the software development lifecycle.

The following table illustrates different schedule types and their applications:

| Schedule Type | Application | Description |
|---|---|---|
| Daily | Development Environments | Resources are shut down after business hours and started before the next workday. This is the most common type, optimizing costs during non-working hours. |
| Weekly | Testing Environments | Resources are shut down over the weekend and started on Monday morning. This is suitable for environments where testing is primarily done during the work week. |
| Custom | Production (with care) or Specific Projects | Resources are shut down and started based on specific project needs or seasonal variations. This schedule requires careful planning and monitoring to avoid disruptions. For example, a seasonal e-commerce site might increase resource availability before peak shopping seasons. |
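The daily and weekly schedule types can be expressed as a small decision function that an automation job calls to check whether a resource should currently be up. This is a sketch under assumed defaults (8 AM start, 6 PM stop, with `now` already in the team’s local time); custom schedules are left out because their rules are project-specific.

```python
from datetime import datetime


def should_be_running(schedule, now, start_hour=8, stop_hour=18):
    """Return True if a resource on the given schedule type should be up at `now`.

    "daily":  up between start_hour and stop_hour every day.
    "weekly": up continuously from Monday start_hour until Friday stop_hour.
    """
    weekday = now.weekday()  # Monday == 0 ... Sunday == 6
    if schedule == "daily":
        return start_hour <= now.hour < stop_hour
    if schedule == "weekly":
        if weekday >= 5:  # Saturday or Sunday
            return False
        if weekday == 0 and now.hour < start_hour:  # Monday before startup
            return False
        if weekday == 4 and now.hour >= stop_hour:  # Friday after shutdown
            return False
        return True
    raise ValueError(f"unsupported schedule type: {schedule!r}")


wednesday_night = datetime(2024, 1, 3, 23, 0)
print(should_be_running("daily", wednesday_night))   # False: outside 08:00-18:00
print(should_be_running("weekly", wednesday_night))  # True: mid-week, stays up
```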

Implementing Shutdown Automation with Native Cloud Tools

Now, let’s delve into the practical implementation of automated cloud resource shutdown using the native tools provided by the major cloud providers. This section will walk you through the specific steps for configuring automation within AWS, Azure, and Google Cloud, accompanied by code snippets to illustrate the underlying logic. This approach leverages the providers’ built-in services, minimizing the need for external dependencies and simplifying management.

Implementing Shutdown Automation with AWS CloudWatch Events and Lambda Functions

AWS offers a powerful combination of CloudWatch Events (now EventBridge) and Lambda functions for automating resource shutdowns. CloudWatch Events acts as the scheduler, triggering events at specified times, while Lambda functions execute the shutdown logic.

To implement automated shutdowns using AWS CloudWatch Events and Lambda functions, follow these steps:

- Create an IAM Role for the Lambda Function: This role grants the Lambda function the necessary permissions to interact with AWS resources, such as EC2 instances. The role should include permissions to list instances (e.g., `ec2:DescribeInstances`) and to stop them (e.g., `ec2:StopInstances`).

- Write the Lambda Function (Python Example): This function retrieves a list of instances based on tags or other criteria and then stops them.

```python
import os

import boto3


def lambda_handler(event, context):
    # Lambda sets AWS_REGION automatically; hardcode a region here if you prefer.
    region = os.environ['AWS_REGION']
    ec2 = boto3.client('ec2', region_name=region)

    # Retrieve instances to stop (e.g., by tag).
    # Replace 'ShutdownSchedule' with your tag key.
    filters = [
        {'Name': 'tag:ShutdownSchedule', 'Values': ['true']},
        # Only running instances need to be stopped.
        {'Name': 'instance-state-name', 'Values': ['running']},
    ]
    response = ec2.describe_instances(Filters=filters)

    instance_ids = []
    for reservation in response['Reservations']:
        for instance in reservation['Instances']:
            instance_ids.append(instance['InstanceId'])

    if instance_ids:
        ec2.stop_instances(InstanceIds=instance_ids)
        print(f"Stopped instances: {instance_ids}")
    else:
        print("No instances found to stop.")

    return {'statusCode': 200, 'body': 'Shutdown initiated'}
```

In this Python code:

- The code imports the `boto3` library, the AWS SDK for Python.

- It defines a `lambda_handler` function, which is the entry point for the Lambda function.

- It creates an EC2 client.

- It retrieves a list of instance IDs based on a tag named “ShutdownSchedule” with a value of “true”. You’ll need to replace this tag key and value with your desired criteria.

- It stops the instances if any are found.

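- Create a CloudWatch Events (EventBridge) Rule: Create a scheduled rule with a cron expression. For example, an expression such as `cron(0 18 * * ? *)` will trigger the function every day at 6 PM UTC. Select “Lambda function” as the target and choose the Lambda function created in the previous step.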
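AWS schedule expressions use a six-field cron format (minutes, hours, day-of-month, month, day-of-week, year) in which either the day-of-month or the day-of-week field must be `?`. The helper below is a hypothetical convenience function, not part of any AWS SDK, that builds the two most common shutdown expressions. Times are in UTC, which is how EventBridge rule schedules are evaluated.

```python
def aws_cron(hour_utc, minute=0, weekdays_only=False):
    """Build an AWS schedule expression that fires once a day at the given UTC time."""
    if not (0 <= hour_utc <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    if weekdays_only:
        # Specifying a day-of-week requires "?" in the day-of-month field.
        return f"cron({minute} {hour_utc} ? * MON-FRI *)"
    # Every day of the month; day-of-week is left unspecified with "?".
    return f"cron({minute} {hour_utc} * * ? *)"


print(aws_cron(18))                      # cron(0 18 * * ? *)
print(aws_cron(18, weekdays_only=True))  # cron(0 18 ? * MON-FRI *)
```

The resulting string is what you would paste into the rule’s schedule expression field. If your business hours are defined in local time, remember to convert them to UTC first.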

Setting Up Automated Shutdowns Using Azure Automation

Azure Automation provides a comprehensive platform for automating tasks within Azure. This includes the ability to create runbooks that can stop virtual machines (VMs) based on a schedule.

To set up automated shutdowns using Azure Automation, consider the following:

- Create an Azure Automation Account: This account is the container for your runbooks, schedules, and other automation assets.

- Create a Runbook (PowerShell Example): A runbook contains the PowerShell script that performs the shutdown operation.

```powershell
# Authenticate first. This assumes the Automation account has a managed
# identity that is allowed to stop VMs; adapt it to your authentication method.
Connect-AzAccount -Identity | Out-Null

# Get the VMs to stop (e.g., by resource group)
$resourceGroupName = "your-resource-group-name"  # Replace with your resource group
$vms = Get-AzVM -ResourceGroupName $resourceGroupName

foreach ($vm in $vms) {
    # Check if the VM is tagged for shutdown.
    # Replace 'ShutdownSchedule' with your tag key.
    if ($vm.Tags -and $vm.Tags.ContainsKey("ShutdownSchedule") -and $vm.Tags["ShutdownSchedule"] -eq "true") {
        Write-Output "Stopping VM: $($vm.Name)"
        Stop-AzVM -Name $vm.Name -ResourceGroupName $resourceGroupName -Force
    }
}
```

In this PowerShell script:

- The code retrieves the VMs within a specified resource group. Replace `your-resource-group-name` with the actual name of your resource group.

- It iterates through each VM and checks for a tag named “ShutdownSchedule” with a value of “true.”

- If the tag exists and has the correct value, the script stops the VM using `Stop-AzVM`.

Using Google Cloud Scheduler and Cloud Functions for Resource Shutdown

Google Cloud Platform (GCP) offers a robust solution for automated shutdowns using Cloud Scheduler and Cloud Functions. Cloud Scheduler provides the scheduling capabilities, while Cloud Functions executes the shutdown logic.

To implement automated shutdowns using Google Cloud Scheduler and Cloud Functions, follow these steps:

- Create a Service Account: Create a service account with the necessary permissions to stop Compute Engine instances (e.g., `roles/compute.instanceAdmin.v1`). This service account will be used by the Cloud Function.

- Write the Cloud Function (Python Example): This function retrieves a list of instances and stops them based on criteria, such as labels.

```python
import os

from google.cloud import compute_v1


def shutdown_instances(event, context):
    # Set GCP_PROJECT and ZONE as environment variables on the function,
    # or hardcode your project ID and the zone where your instances are located.
    project = os.environ.get('GCP_PROJECT')
    zone = os.environ.get('ZONE')

    client = compute_v1.InstancesClient()

    # Select instances by label. Replace with your label key and value.
    label_key = "shutdown-schedule"
    label_value = "true"

    instances_to_stop = []
    for instance in client.list(project=project, zone=zone):
        if instance.labels.get(label_key) == label_value:
            instances_to_stop.append(instance.name)

    for instance_name in instances_to_stop:
        print(f"Stopping instance: {instance_name}")
        operation = client.stop(project=project, zone=zone, instance=instance_name)
        operation.result()  # Wait for the operation to complete
```

In this Python code:

- The code imports `compute_v1` from the `google-cloud-compute` library.

- It retrieves the GCP project ID and the zone from environment variables.

- It retrieves a list of instances within the specified zone.

- It checks for a label named “shutdown-schedule” with a value of “true” on each instance. Labels are used because Compute Engine network tags are plain strings without values. Replace the key and value with your desired label configuration.

- It stops the instances that match the label.

- Create a Cloud Scheduler Job: Create a job with a cron schedule. For example, a schedule such as `0 18 * * *` with the time zone set to UTC will trigger the function every day at 6 PM UTC. Set the target to be the Cloud Function you deployed (for example, through the Pub/Sub topic or HTTP endpoint that triggers it).

Scripting and Coding for Custom Automation

Custom scripting offers unparalleled flexibility and control when automating cloud resource shutdown schedules. While native cloud tools provide a solid foundation, scripting allows for complex logic, integration with other systems, and tailored solutions that precisely meet your specific requirements. This section delves into utilizing scripting languages to create robust and efficient automation solutions.

Scripting Languages for Custom Automation

Scripting languages like Python and Bash are instrumental in building custom automation for cloud resource shutdowns. Python, with its extensive libraries and readability, excels at interacting with cloud provider APIs. Bash, a powerful shell scripting language, is well-suited for system-level tasks and command-line interactions. Choosing the right language depends on the specific needs of the automation task.

Python, for instance, simplifies API interactions through libraries like `boto3` (for AWS), `google-api-python-client` (for Google Cloud), and the Azure SDK for Python (for Azure; for example, the `azure-mgmt-compute` package). These libraries abstract away the complexities of API calls, enabling developers to focus on the automation logic. Bash, on the other hand, can directly execute cloud provider CLI commands, allowing for straightforward task execution.

Code Examples for Automating Shutdowns

Let’s explore code examples for automating shutdowns using Python and Bash, focusing on AWS as an example.

Python Example (AWS):

```python
import datetime

import boto3

# Create a session for the target region (credentials come from the environment)
session = boto3.Session(region_name='us-east-1')  # Replace with your desired region
ec2 = session.client('ec2')


def stop_instance(instance_id):
    """Stops an EC2 instance."""
    try:
        ec2.stop_instances(InstanceIds=[instance_id])
        print(f"Stopping instance: {instance_id}")
        return True
    except Exception as e:
        print(f"Error stopping instance {instance_id}: {e}")
        return False


def main():
    # Replace with your instance IDs
    instance_ids_to_stop = ['i-xxxxxxxxxxxxxxxxx', 'i-yyyyyyyyyyyyyyyyy']

    now = datetime.datetime.now()
    # Shutdown time for today, e.g., 18:00 (6 PM) in the machine's local time
    shutdown_time = now.replace(hour=18, minute=0, second=0, microsecond=0)

    if now >= shutdown_time:
        for instance_id in instance_ids_to_stop:
            stop_instance(instance_id)
    else:
        print(f"Shutdown scheduled for {shutdown_time.strftime('%Y-%m-%d %H:%M:%S')} (not yet)")


if __name__ == "__main__":
    main()
```

This Python script uses the `boto3` library to interact with the AWS EC2 service. The `stop_instance` function handles the actual stopping of the instance, and the `main` function stops the instances only if the current time is at or past the configured shutdown time, so the script is meant to be run periodically (for example, from cron). This example also includes basic error handling.

Bash Example (AWS CLI):

```bash
#!/bin/bash

# Replace with your instance IDs
INSTANCE_IDS=("i-xxxxxxxxxxxxxxxxx" "i-yyyyyyyyyyyyyyyyy")

# Loop through the instance IDs and stop each instance
for instance_id in "${INSTANCE_IDS[@]}"
do
    echo "Stopping instance: $instance_id"
    aws ec2 stop-instances --instance-ids "$instance_id" --region us-east-1  # Replace with your region
    if [ $? -eq 0 ]; then
        echo "Instance $instance_id stopped successfully."
    else
        echo "Error stopping instance $instance_id."
    fi
done
```

This Bash script uses the AWS CLI to stop EC2 instances. The script iterates through a list of instance IDs and executes the `aws ec2 stop-instances` command for each one. It also includes basic error checking using `$?`.

Handling Errors and Implementing Retry Mechanisms

Robust scripts should include error handling and retry mechanisms to ensure reliability. Cloud provider APIs can sometimes experience transient issues (e.g., temporary network problems or API throttling).

Error Handling: Both Python and Bash offer ways to handle errors. In Python, a `try`/`except` block is used to catch exceptions that may occur during API calls. In Bash, the `$?` variable holds the exit status of the last command, with a non-zero value generally indicating an error.

Retry Mechanisms: Retry mechanisms are essential for handling transient errors. These mechanisms involve attempting an operation multiple times if it fails, with a delay between each attempt.

Python Retry Example:

```python
import time

import boto3


def stop_instance_with_retry(instance_id, max_retries=3, delay=5):
    """Stops an EC2 instance with retry mechanism."""
    ec2 = boto3.client('ec2', region_name='us-east-1')  # Replace with your region
    for attempt in range(max_retries):
        try:
            ec2.stop_instances(InstanceIds=[instance_id])
            print(f"Instance {instance_id} stopped successfully.")
            return True
        except Exception as e:
            print(f"Attempt {attempt + 1} failed to stop instance {instance_id}: {e}")
            if attempt < max_retries - 1:
                print(f"Retrying in {delay} seconds...")
                time.sleep(delay)
    print(f"Failed to stop instance {instance_id} after {max_retries} attempts.")
    return False
```

This Python example attempts to stop an instance up to `max_retries` times, with a `delay` between each attempt.

Bash Retry Example:

```bash
#!/bin/bash

stop_instance() {
    local instance_id="$1"
    local max_retries=3
    local attempt=1

    while [ "$attempt" -le "$max_retries" ]; do
        echo "Attempt $attempt to stop instance $instance_id"
        aws ec2 stop-instances --instance-ids "$instance_id" --region us-east-1  # Replace with your region
        if [ $? -eq 0 ]; then
            echo "Instance $instance_id stopped successfully."
            return 0  # Exit the function successfully
        else
            echo "Error stopping instance $instance_id. Attempt $attempt failed."
            if [ "$attempt" -lt "$max_retries" ]; then
                echo "Retrying in 5 seconds..."
                sleep 5
            fi
        fi
        attempt=$((attempt + 1))
    done

    echo "Failed to stop instance $instance_id after $max_retries attempts."
    return 1  # Indicate failure
}

# Example Usage:
INSTANCE_ID="i-xxxxxxxxxxxxxxxxx"
stop_instance "$INSTANCE_ID"
```

This Bash script defines a function `stop_instance` that includes a retry loop. It attempts to stop the instance up to three times, waiting five seconds between attempts.
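A fixed delay works for occasional failures, but when an API is throttling requests, many clients retrying on the same interval can keep colliding. A common refinement is exponential backoff with random jitter. The sketch below is a generic helper, not a replacement for any SDK’s built-in retry logic. `retry_with_backoff` and `flaky_stop` are illustrative names, and the demo operation only simulates a failing call.

```python
import random
import time


def retry_with_backoff(operation, max_retries=3, base_delay=1.0, max_delay=30.0,
                       sleep=time.sleep):
    """Call operation(); on failure wait a random, growing delay and try again."""
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    for attempt in range(1, max_retries + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_retries:
                raise  # out of attempts: surface the last error to the caller
            # The cap doubles each attempt: base, 2*base, 4*base, ... up to max_delay.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # "full jitter": random wait up to the cap


# Demo with a hypothetical operation that fails twice before succeeding.
attempts = {"count": 0}


def flaky_stop():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("simulated throttling")
    return "stop requested"


# The demo passes a no-op sleep so it finishes immediately.
result = retry_with_backoff(flaky_stop, sleep=lambda seconds: None)
print(result, "after", attempts["count"], "attempts")
```

Catching every `Exception` keeps the sketch short, but it also retries errors that will never succeed, such as missing permissions. In real scripts it is usually better to retry only the error types known to be transient.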

Troubleshooting Tips for Script-Based Automation

When encountering issues with script-based automation, consider the following troubleshooting tips:

- Verify Credentials: Ensure that the scripts are configured with the correct cloud provider credentials (e.g., AWS access keys, Google Cloud service account keys, Azure service principal credentials) and that these credentials have the necessary permissions to perform the desired actions (e.g., stopping instances).

- Check API Rate Limits: Cloud providers often have API rate limits. If your script is making too many API calls in a short period, it might be throttled. Implement delays or batch operations to avoid exceeding these limits.

- Examine Error Messages: Carefully review any error messages generated by the scripts. These messages often provide valuable clues about the root cause of the problem. Look for specific error codes, error descriptions, and timestamps.

- Test Scripts Locally: Before deploying scripts to a production environment, test them locally. This allows you to identify and fix any syntax errors or logical flaws without affecting live resources.

- Use Logging: Implement comprehensive logging within your scripts. Log important events, such as the start and end of operations, the values of variables, and any errors that occur. Logging makes it easier to track down issues and understand the script’s behavior.

- Check Network Connectivity: Ensure that the system running the script has network connectivity to the cloud provider’s API endpoints. Firewalls or other network restrictions could be blocking API requests.

- Review Cloud Provider Documentation: Refer to the cloud provider’s official documentation for the specific APIs and commands you are using. The documentation provides detailed information about the parameters, error codes, and best practices.

- Verify Instance States: Confirm the state of the cloud resources before and after the script execution. This can help identify if the resources are already in the desired state or if the shutdown was successful.

- Check for Dependencies: Make sure all necessary dependencies (e.g., Python libraries, the AWS CLI) are installed and configured correctly on the system running the script.

- Use Version Control: Employ version control systems (e.g., Git) to manage your scripts. This allows you to track changes, revert to previous versions, and collaborate with others.
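Two of the tips above, batching API calls and logging, combine naturally in one helper. The following is a minimal sketch: `stop_batch` stands in for whatever call actually stops a group of instances (for example, a wrapper around your provider’s SDK), and the batch size of 50 is an arbitrary illustrative default rather than a documented provider limit.

```python
import logging

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("shutdown")


def batched(ids, size):
    """Yield consecutive chunks of at most `size` IDs."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(ids), size):
        yield list(ids[start:start + size])


def stop_in_batches(ids, stop_batch, size=50):
    """Call stop_batch once per chunk, log each outcome, return IDs that failed."""
    failed = []
    for chunk in batched(ids, size):
        try:
            stop_batch(chunk)
            log.info("stop requested for %d instance(s): %s", len(chunk), chunk)
        except Exception:
            # log.exception records the traceback alongside the message.
            log.exception("stop failed for batch: %s", chunk)
            failed.extend(chunk)
    return failed


# Demo with a stand-in that only records what it was asked to stop.
requested = []
leftover = stop_in_batches(["i-1", "i-2", "i-3", "i-4", "i-5"],
                           requested.append, size=2)
print(requested)  # [['i-1', 'i-2'], ['i-3', 'i-4'], ['i-5']]
print(leftover)   # []
```

Returning the failed IDs rather than raising lets the caller decide whether to retry them, alert someone, or simply report them in the run summary.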

Monitoring and Logging Shutdown Activities

Effective monitoring and logging are crucial for the successful implementation and maintenance of automated cloud resource shutdown schedules. They provide visibility into the operation of the schedules, enabling administrators to identify and resolve issues, optimize performance, and ensure compliance. Without robust monitoring and logging, it becomes challenging to verify that resources are shutting down as planned, diagnose problems when they arise, and track the overall effectiveness of the automation strategy.

Importance of Monitoring Shutdown Schedule Execution

Monitoring the execution of shutdown schedules is essential for several reasons. It ensures the schedules are functioning correctly, verifies that resources are being shut down at the intended times, and helps in identifying and troubleshooting any failures or unexpected behavior. Comprehensive monitoring allows for proactive identification of potential problems, minimizing downtime and optimizing resource utilization.

Setting Up Logging for Audit Trails and Troubleshooting

Implementing detailed logging is critical for creating an audit trail and facilitating effective troubleshooting. Logs provide a historical record of all shutdown activities, including start times, end times, the resources affected, and any errors or warnings encountered. This information is invaluable for auditing purposes, compliance requirements, and pinpointing the root cause of issues.
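One way to make such an audit trail easy to search is to emit one structured record per shutdown attempt. The sketch below assumes JSON lines as the log format; the field names and the `audit_record` helper are illustrative choices, not a standard.

```python
import json
from datetime import datetime, timedelta, timezone


def audit_record(resource_id, action, outcome, started_at, finished_at, error=None):
    """Build one JSON line describing a shutdown attempt (field names are illustrative)."""
    return json.dumps(
        {
            "resource_id": resource_id,
            "action": action,
            "outcome": outcome,
            "started_at": started_at.isoformat(),
            "finished_at": finished_at.isoformat(),
            "duration_seconds": (finished_at - started_at).total_seconds(),
            "error": error,
        },
        sort_keys=True,
    )


if __name__ == "__main__":
    start = datetime(2024, 1, 8, 19, 0, tzinfo=timezone.utc)
    print(audit_record("vm-example-1", "stop", "success", start, start + timedelta(seconds=42)))
```

Writing timestamps in UTC with an explicit offset avoids ambiguity when schedules and log readers sit in different time zones.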

Metrics to Monitor

Several key metrics should be monitored to assess the performance and effectiveness of shutdown schedules. These metrics provide insights into the success of the automation process and help in identifying areas for improvement.

- Shutdown Success Rates: Tracking the percentage of successful shutdowns is fundamental. A low success rate indicates potential issues with the automation scripts, the resources themselves, or the underlying cloud infrastructure. For instance, if a schedule is designed to shut down 100 instances and only 80 shut down successfully, the success rate is 80%.

- Resource Utilization Before Shutdown: Monitoring resource utilization metrics, such as CPU usage, memory consumption, and network traffic, immediately before shutdown, can reveal whether resources are being used efficiently. This information can help determine if resources are being shut down while they are still active, or if they are idle, confirming the schedule’s efficiency.

- Shutdown Duration: Measuring the time it takes for resources to shut down provides insights into the efficiency of the shutdown process. Excessive shutdown times could indicate performance bottlenecks or issues with the resource configuration.

- Error Rates: Tracking the frequency and types of errors encountered during shutdown attempts is crucial for troubleshooting. Analyzing error logs helps identify the root causes of failures and implement corrective actions.

- Schedule Execution Times: Monitoring the start and end times of the shutdown schedules allows administrators to ensure they are running as planned. Deviations from the scheduled times can indicate scheduling issues or system delays.

- Resource States: Regularly checking the states of the resources (e.g., running, stopped, terminated) confirms whether they have successfully transitioned to the desired state after the shutdown.
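Several of these metrics can be derived from the same per-resource results. The following sketch assumes each shutdown attempt is captured as a small record; `ShutdownResult` and `summarize` are hypothetical names introduced only for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShutdownResult:
    resource_id: str
    succeeded: bool
    duration_seconds: float
    error_type: Optional[str] = None


def summarize(results):
    """Compute success rate, average shutdown duration, and error counts by type."""
    total = len(results)
    ok = [r for r in results if r.succeeded]
    return {
        "success_rate_pct": 100.0 * len(ok) / total if total else 0.0,
        "avg_duration_seconds": sum(r.duration_seconds for r in ok) / len(ok) if ok else 0.0,
        "errors_by_type": dict(Counter(r.error_type for r in results if not r.succeeded)),
    }
```

Fed with 100 results of which 80 succeeded, `summarize` reports a success rate of 80%, matching the example in the list above.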

Monitoring Tools and Their Features

Several monitoring tools are available for tracking and analyzing the performance of cloud resource shutdown schedules. Each tool offers different features and capabilities, allowing administrators to choose the solution that best fits their needs.

| Monitoring Tool | Features | Cloud Provider Compatibility | Use Cases |
|---|---|---|---|
| CloudWatch (AWS) | | AWS | Monitoring EC2 instances, creating custom dashboards for shutdown schedules, setting up alerts for failed shutdowns. |
| Azure Monitor (Azure) | | Azure | Monitoring Azure virtual machines, analyzing logs for shutdown events, and setting up alerts for resource states. |
| Cloud Monitoring (GCP) | | GCP | Monitoring Compute Engine instances, creating custom dashboards to track shutdown success rates, and setting up alerts for resource utilization. |
| Prometheus and Grafana | | Multi-cloud, on-premises | Monitoring resource utilization across different cloud providers, creating custom dashboards for shutdown schedules, and setting up alerts. |

Security Considerations for Automated Shutdowns

Automating cloud resource shutdowns introduces significant security considerations that must be addressed to prevent unauthorized access, data breaches, and service disruptions. Properly securing the automation process is crucial to maintaining the confidentiality, integrity, and availability of your cloud infrastructure. Neglecting security best practices can expose your environment to various threats, including credential compromise, privilege escalation, and denial-of-service attacks.

Security Implications of Automated Shutdown Scripts and Configurations

Automated shutdown scripts and configurations, if not properly secured, can become attack vectors. Attackers may exploit vulnerabilities within these scripts or configurations to gain unauthorized access to cloud resources, manipulate shutdown schedules, or execute malicious code.

- Credential Exposure: Hardcoded credentials (API keys, passwords) within scripts or configuration files represent a significant risk. If an attacker gains access to these scripts, they can directly use the credentials to access and control cloud resources.

- Privilege Escalation: Automation scripts often run with elevated privileges to manage cloud resources. If an attacker compromises a script, they could potentially use these elevated privileges to escalate their access and perform unauthorized actions, such as deleting data or modifying configurations.

- Data Breaches: Compromised shutdown automation can be used to shut down security controls or data protection mechanisms, leading to data breaches. An attacker might shut down firewalls, intrusion detection systems, or data encryption services to facilitate data exfiltration.

- Service Disruptions: Malicious actors could manipulate shutdown schedules to stop resources that should be running, effectively causing a denial of service. This could cause significant downtime and financial losses.

- Compliance Violations: Failure to secure shutdown automation can lead to violations of compliance regulations, such as GDPR or HIPAA, which require strict controls over data access and security. This can result in hefty fines and legal repercussions.

Securing API Keys and Credentials Used for Automation

Protecting API keys and credentials is paramount when implementing automated cloud resource shutdowns. Compromised credentials are a common entry point for attackers.

- Avoid Hardcoding Credentials: Never hardcode API keys, passwords, or other sensitive information directly into your scripts or configuration files. This makes them easily accessible to anyone who can view the script.

- Use Secure Secret Management: Employ a dedicated secret management service, such as AWS Secrets Manager, Azure Key Vault, or Google Cloud Secret Manager. These services provide secure storage, retrieval, and rotation of secrets.

- Implement Key Rotation: Regularly rotate your API keys and passwords. This limits the window of opportunity for attackers if a key is compromised. Secret management services often provide automated key rotation capabilities.

- Restrict Key Permissions: Grant API keys only the minimum necessary permissions (least privilege) to perform their intended tasks. Avoid granting broad permissions that could allow attackers to access more resources than needed.

- Monitor Key Usage: Implement monitoring and logging to track the usage of API keys. This can help you detect suspicious activity or unauthorized access attempts. Alerting on unusual key usage patterns can help identify potential security breaches.

- Encrypt Configuration Files: If you must store configuration files containing sensitive information, encrypt them using strong encryption algorithms. This protects the data from unauthorized access if the files are compromised.
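As a small illustration of keeping secrets out of scripts, the sketch below reads a credential from the process environment and fails fast when it is missing. It assumes the secret has already been injected into the environment (for example by a secret manager integration); `load_credential`, `redact`, and `MissingCredentialError` are hypothetical helper names.

```python
import os


class MissingCredentialError(RuntimeError):
    """Raised when an expected secret is not present in the environment."""


def load_credential(name, environ=os.environ):
    """Return the secret stored in environment variable `name`, failing fast if absent."""
    value = environ.get(name, "").strip()
    if not value:
        raise MissingCredentialError(f"environment variable {name} is not set")
    return value


def redact(secret, visible=4):
    """Mask a secret for logging, keeping only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

Logging only the redacted form lets operators confirm which key was used without writing the key itself to the logs.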

Implementing Least-Privilege Access Control

The principle of least privilege dictates that users and processes should be granted only the minimum necessary permissions to perform their tasks. Applying this principle to your automation infrastructure significantly reduces the potential impact of a security breach.

- Define Roles and Permissions: Create specific roles with clearly defined permissions based on the tasks performed by your automation scripts. Avoid using overly permissive roles.

- Assign Permissions to Roles, Not Users: Assign permissions to roles rather than directly to individual users or service accounts. This simplifies management and ensures consistency.

- Use Service Accounts: Utilize service accounts specifically designed for automation tasks. Avoid using personal user accounts for automation purposes.

- Regularly Review Permissions: Periodically review and audit the permissions granted to roles and service accounts. Remove any unnecessary permissions and ensure that permissions are still appropriate for the current tasks.

- Implement Role-Based Access Control (RBAC): Utilize RBAC features offered by your cloud provider to enforce fine-grained access control. This allows you to define specific permissions for each role based on the principle of least privilege.

- Monitor Access Activity: Continuously monitor access activity to identify any unauthorized attempts or unusual behavior. Use cloud provider logging and monitoring services to track access events and alert on suspicious activities.
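To make least privilege concrete, the sketch below builds an AWS IAM policy document that allows starting and stopping only instances carrying a given tag, plus the read-only describe call. The tag key and value are placeholders you would choose yourself, and `stop_start_policy` is an illustrative helper; other providers express the same idea through their own role definitions.

```python
import json


def stop_start_policy(tag_key, tag_value):
    """Return an IAM policy document limited to start/stop on tagged EC2 instances."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "StopStartTaggedInstances",
                "Effect": "Allow",
                "Action": ["ec2:StartInstances", "ec2:StopInstances"],
                "Resource": "arn:aws:ec2:*:*:instance/*",
                # Only instances whose tag matches may be started or stopped.
                "Condition": {"StringEquals": {f"aws:ResourceTag/{tag_key}": tag_value}},
            },
            {
                "Sid": "DescribeInstances",
                "Effect": "Allow",
                "Action": "ec2:DescribeInstances",
                # Describe calls do not support resource-level restrictions.
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(stop_start_policy("Environment", "dev"), indent=2))
```

Note that the policy deliberately omits terminate, modify, and IAM actions, so a compromised automation role cannot delete instances or widen its own access.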

Best Practices for Securing Your Automation Infrastructure

Adhering to these best practices will enhance the security posture of your automated cloud resource shutdown processes.

- Secure the Automation Environment: Ensure that the environment where your automation scripts run (e.g., virtual machines, containers) is properly secured. This includes applying security patches, configuring firewalls, and implementing intrusion detection systems.

- Use Secure Communication Channels: When interacting with cloud APIs, use secure communication channels such as HTTPS. This encrypts the data transmitted between your automation scripts and the cloud provider’s services.

- Validate Input Data: Implement input validation to prevent injection attacks. Sanitize all user inputs and configuration data to ensure that malicious code cannot be injected into your scripts.

- Implement Code Reviews: Conduct regular code reviews to identify and address security vulnerabilities in your automation scripts. This can help catch errors and potential security flaws before they are deployed.

- Test Your Automation: Regularly test your automation scripts and configurations to ensure they are functioning correctly and that security controls are working as expected.

- Monitor and Log Everything: Implement comprehensive monitoring and logging to track all activities related to your automation infrastructure. This includes script execution, API calls, and any changes to configurations. This allows for rapid detection and response to security incidents.

- Implement an Incident Response Plan: Develop and maintain an incident response plan that outlines the steps to be taken in the event of a security breach or incident. This plan should include procedures for containing the breach, investigating the cause, and recovering from the incident.

- Stay Updated: Keep your automation tools, scripts, and libraries up-to-date with the latest security patches and updates. This helps to protect against known vulnerabilities.
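For the input-validation practice above, a simple allow-list check is often enough. This sketch assumes the script receives EC2 instance IDs (the `i-` prefix followed by 8 or 17 lowercase hexadecimal characters) and rejects anything else before it reaches an API call or a shell; `validate_instance_ids` is an illustrative name.

```python
import re

# EC2 instance IDs: "i-" followed by 8 (older) or 17 (current) hex characters.
INSTANCE_ID = re.compile(r"i-(?:[0-9a-f]{8}|[0-9a-f]{17})")


def validate_instance_ids(raw_ids):
    """Return cleaned IDs, raising ValueError on anything that is not a well-formed ID."""
    cleaned = []
    for raw in raw_ids:
        candidate = raw.strip()
        if not INSTANCE_ID.fullmatch(candidate):
            raise ValueError(f"rejected malformed instance ID: {candidate!r}")
        cleaned.append(candidate)
    return cleaned
```

Rejecting the whole batch on the first bad value is a conservative choice; it prevents a partially tampered configuration from being acted on at all.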

Testing and Validation of Shutdown Schedules

Before deploying automated cloud resource shutdown schedules, rigorous testing and validation are crucial to ensure they function as intended and do not inadvertently disrupt critical services or cause data loss. Thorough testing minimizes risks, identifies potential issues early on, and builds confidence in the reliability of the automation. A well-tested shutdown schedule protects against unforeseen consequences and contributes to the overall stability and efficiency of the cloud environment.

Importance of Testing Shutdown Schedules

Testing shutdown schedules is paramount for several key reasons. It’s a fundamental step in preventing unintended outages and data corruption.

- Preventing Service Disruptions: Testing verifies that resources shut down and restart in the correct order, preventing service interruptions. For example, the application servers that depend on a database should be shut down before the database server itself, and the database should be restarted before them. Failure to do so could lead to data inconsistencies or application errors.

- Ensuring Data Integrity: Shutdown schedules must be tested to ensure that data is properly saved and flushed before resources are terminated. This prevents data loss and ensures data consistency. Consider the scenario where a web server’s logs are not saved before the server is terminated; the logs would be lost.

- Validating Configuration and Dependencies: Testing validates that the automation correctly identifies and handles resource dependencies. This ensures that all dependent resources are shut down and restarted in the correct sequence. A misconfigured dependency can lead to cascading failures.

- Identifying and Resolving Errors: Testing allows for the identification and resolution of errors in the automation scripts or configurations. These errors could be related to incorrect resource IDs, access permissions, or timing issues.

- Building Confidence and Trust: Comprehensive testing builds confidence in the automation and ensures that it functions as expected, providing peace of mind to the operations team.

Testing Strategies

Several testing strategies can be employed to validate shutdown schedules effectively. The choice of strategy depends on the complexity of the environment and the criticality of the resources.

- Dry Runs: Dry runs simulate the shutdown process without actually terminating any resources. This is a safe way to verify the configuration and the logic of the automation. The automation script is executed, but instead of taking actions, it logs the actions it *would* take.

- Staged Deployments: Staged deployments involve deploying the shutdown schedule to a non-production environment (e.g., a staging environment) before deploying it to production. This allows for testing in a controlled environment without affecting live services. The staging environment should mirror the production environment as closely as possible.

- Canary Deployments: Canary deployments involve gradually rolling out the shutdown schedule to a small subset of resources in the production environment. This allows for monitoring the behavior of the schedule in a real-world environment and identifying any issues before they impact a large number of resources. For example, shutting down only one out of ten web servers at a time.

- Full Environment Testing: This involves testing the shutdown schedule across the entire environment, including all dependent resources and services. This is the most comprehensive form of testing but also the most time-consuming.

- Automated Testing: Automating the testing process, using tools and scripts, can significantly improve the efficiency and repeatability of the tests. These automated tests can be integrated into a CI/CD pipeline.
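The dry-run strategy from the list above is usually implemented as a flag that short-circuits the real action. The sketch below assumes the actual stop call is passed in as `stop_resource`; both that parameter and the `run_shutdown` function are illustrative names, not part of any provider SDK.

```python
def run_shutdown(resource_ids, stop_resource, dry_run=True, log=print):
    """Stop each resource, or only report what would be stopped when dry_run is True."""
    acted_on = []
    for resource_id in resource_ids:
        if dry_run:
            log(f"[dry-run] would stop {resource_id}")
            continue
        stop_resource(resource_id)
        log(f"stopped {resource_id}")
        acted_on.append(resource_id)
    return acted_on
```

Defaulting `dry_run` to `True` means a caller has to opt in explicitly before anything is actually stopped, which is a safer default for a new schedule.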

Checklist for Validating Shutdown Schedules

A comprehensive checklist ensures all aspects of the shutdown schedule are tested and validated.

- Resource Identification: Verify that all resources targeted for shutdown are correctly identified. This includes checking resource IDs, names, and tags.

- Shutdown Order: Confirm that resources are shut down in the correct order, considering dependencies.

- Shutdown Timing: Ensure that the shutdown process allows sufficient time for resources to gracefully shut down and for data to be saved.

- Restart Order: Verify that resources are restarted in the correct order.

- Restart Timing: Ensure that the restart process allows sufficient time for resources to fully initialize and become available.

- Data Integrity: Validate that data is not lost or corrupted during the shutdown and restart process.

- Application Functionality: Verify that applications and services continue to function correctly after resources are restarted.

- Monitoring and Logging: Confirm that monitoring and logging are functioning correctly and that all shutdown and restart events are captured.

- Security: Verify that all security considerations, such as access controls and encryption, are maintained during the shutdown and restart process.

- Notifications: Confirm that notifications are sent out as expected.
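The shutdown-order and restart-order items in this checklist can be checked against a dependency map rather than by eye. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the resource names and the `depends_on` mapping are hypothetical examples.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def start_order(depends_on):
    """Order in which to start resources: dependencies come before their dependents."""
    return list(TopologicalSorter(depends_on).static_order())


def shutdown_order(depends_on):
    """Order in which to stop resources: dependents go down before their dependencies."""
    return list(reversed(start_order(depends_on)))


if __name__ == "__main__":
    # Each key depends on the resources in its value set (hypothetical names).
    depends_on = {"web": {"app"}, "app": {"db"}, "db": set()}
    print("start:", start_order(depends_on))
    print("stop: ", shutdown_order(depends_on))
```

A circular dependency makes `static_order()` raise `graphlib.CycleError`, which is a useful early signal that the configured dependencies need to be corrected.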

Process for Performing a Test Shutdown and Verifying Resource States

A structured process ensures that test shutdowns are performed effectively and that resource states are verified accurately.

- Preparation:

  - Environment Selection: Choose a non-production environment for testing (e.g., staging).

  - Backup: Create backups of all resources before testing. This allows for easy restoration in case of issues.

  - Test Plan: Develop a detailed test plan outlining the scope, objectives, and expected outcomes of the test.

- Initiate Shutdown: Execute the shutdown schedule in the test environment.

- Monitor Progress: Monitor the shutdown process in real-time, observing the logs and monitoring dashboards.

- Check Shutdown Status: Verify that all resources are successfully shut down.

- Resource State Verification: Check the status of each resource to ensure it is in the expected state (e.g., stopped, terminated). Use cloud provider consoles or APIs to verify the state.

- Data Integrity Verification: Verify that data has been saved and that there is no data loss or corruption. This may involve checking database logs or application data.

- Application Functionality Testing: Test the functionality of applications and services after the resources have been restarted. Ensure that all services are available and functioning as expected.

- Log Analysis: Review logs to identify any errors or warnings that occurred during the shutdown and restart process.

- Test Results: Document the results of the test, including any issues encountered and how they were resolved.

- Lessons Learned: Identify any lessons learned from the test and update the shutdown schedule or automation accordingly.

- Sign-off: Obtain sign-off from relevant stakeholders to indicate that the shutdown schedule has been successfully tested and validated.
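The resource state verification step can be automated by polling until every resource reports the expected state or a timeout expires. This is a sketch under the assumption that `get_state` wraps your provider's describe call and returns a state string; `wait_for_state` and its parameters are illustrative.

```python
import time


def wait_for_state(resource_ids, get_state, expected="stopped", timeout=300,
                   interval=10, sleep=time.sleep, clock=time.monotonic):
    """Poll until every resource reports `expected`; return the IDs that never got there."""
    deadline = clock() + timeout
    pending = set(resource_ids)
    while pending:
        # Keep only resources that have not yet reached the expected state.
        pending = {r for r in pending if get_state(r) != expected}
        if not pending or clock() >= deadline:
            break
        sleep(interval)
    return sorted(pending)
```

An empty return value means every resource reached the expected state; any IDs returned are candidates for the log analysis and follow-up steps in the process above.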

Advanced Automation Techniques

Moving beyond basic scheduling, advanced automation techniques allow for dynamic and intelligent cloud resource management. These techniques leverage real-time data and integrate with other automation processes to optimize resource utilization and cost savings. This section delves into conditional shutdowns, resource scaling, and integration strategies to enhance the efficiency of your cloud environment.

Conditional Shutdowns Based on Resource Utilization

Conditional shutdowns respond dynamically to resource usage, providing a more efficient approach than fixed schedules. This method ensures resources are only shut down when truly idle, maximizing uptime during peak periods. Implementing conditional shutdowns typically involves monitoring key metrics.

- Monitoring Key Metrics: Monitor metrics like CPU utilization, memory usage, network I/O, and active connections. Cloud providers offer monitoring services such as AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring.

- Setting Thresholds: Define thresholds for each metric. For instance, a server might be considered idle if CPU utilization is below 5% for 15 minutes. The thresholds should be tailored to the application’s expected behavior.

- Triggering Shutdowns: When the defined conditions are met, trigger the shutdown process. This can be done using automation tools or custom scripts.

- Example: Consider a web server. If CPU utilization remains below 10% and there are no active connections for 30 minutes, the system can automatically shut down. This prevents unnecessary costs during off-peak hours.
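The web server example can be expressed as a small predicate. The sketch below assumes CPU samples arrive at a fixed interval (for instance, six 5-minute samples cover 30 minutes) and that a separate source provides the current connection count; `is_idle` and its defaults are illustrative.

```python
def is_idle(cpu_samples, active_connections, cpu_threshold=10.0, window=6):
    """True when the last `window` CPU samples are all below the threshold
    and there are no active connections."""
    if len(cpu_samples) < window:
        return False  # not enough history to decide safely
    recent = cpu_samples[-window:]
    return active_connections == 0 and all(s < cpu_threshold for s in recent)
```

Requiring a full window of samples before declaring a resource idle avoids shutting down a server that has only just started and has little history yet.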

Designing a System for Automating Resource Scaling Before Shutdowns

Before shutting down resources, consider scaling them down to reduce waste and ensure optimal performance during active periods. This approach allows resources to scale down gracefully before shutdown, preventing abrupt interruptions and potential data loss.

- Defining Scaling Rules: Establish rules for scaling down resources. These rules should be based on predicted or observed demand.

- Triggering Scaling Actions: Before initiating the shutdown, trigger scaling actions. For example, scale down the number of instances in an Auto Scaling group or reduce the size of a database instance.

- Implementing Pre-Shutdown Checks: Implement checks to ensure that scaling operations have completed successfully before proceeding with the shutdown. This helps to prevent disruptions.

- Example: A database server might scale down to a smaller instance size one hour before the scheduled shutdown. This minimizes the resources consumed during the idle period while allowing for a quick scale-up if needed.

Integrating Shutdown Schedules with Other Automation Processes

Integrating shutdown schedules with other automation processes can significantly improve efficiency and responsiveness. This integration allows for a coordinated approach to resource management, streamlining workflows and reducing operational overhead.

- Event-Driven Automation: Use event-driven automation to trigger shutdowns based on specific events. For instance, if a build process completes, shut down the build servers.

- Integration with Configuration Management: Integrate shutdown schedules with configuration management tools. When a resource is shut down, automatically update its configuration to reflect its new state.

- Orchestration Tools: Employ orchestration tools like AWS Step Functions, Azure Logic Apps, or Google Cloud Workflows to manage complex workflows, including shutdown processes.

- Example: When an application deployment is finished, the orchestration tool can automatically shut down the staging environment after a set period.

Advanced Use Cases for Automated Shutdown

Automated shutdown strategies can be applied to numerous scenarios, optimizing resource usage and cost efficiency.

- Development and Testing Environments: Automatically shut down development and testing environments outside of business hours or when not in use.

- Non-Production Databases: Schedule shutdowns for non-production database instances to save on compute costs during off-peak times. Note that storage is typically still billed while an instance is stopped.

- Batch Processing Jobs: Shut down compute resources after batch processing jobs are completed.

- Idle Virtual Machines: Identify and shut down virtual machines that have been idle for an extended period.

- Temporary Workloads: Shut down temporary workloads, such as those used for data analysis or short-term projects, once their tasks are completed.

- Compliance and Security: Implement automated shutdowns for resources that are no longer required, ensuring compliance with security policies.
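For the business-hours use case in the list above, the scheduling decision reduces to a simple time check. The sketch below assumes a Monday to Friday, 08:00 to 19:00 working window in the local time of the `now` value passed in; the function name and defaults are illustrative.

```python
from datetime import datetime, time as dtime


def should_be_running(now, start=dtime(8, 0), end=dtime(19, 0), workdays=range(0, 5)):
    """True if `now` falls on a workday (Monday=0) within [start, end)."""
    return now.weekday() in workdays and start <= now.time() < end
```

A scheduler can call this periodically and stop any tagged development resource for which it returns `False`, or start it again when it returns `True`.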

Cost Optimization Strategies Beyond Shutdowns

While automating cloud resource shutdowns is a powerful cost-saving technique, it’s just one piece of a larger cost optimization puzzle. A comprehensive approach involves several strategies that, when combined, can significantly reduce cloud spending and improve resource efficiency. This section explores various methods to optimize cloud costs beyond simply shutting down resources, offering practical examples and guidance on leveraging cloud cost management tools.

Right-Sizing Cloud Resources

Right-sizing involves ensuring that cloud resources are appropriately sized to meet their actual workload demands. Over-provisioning leads to wasted resources and unnecessary costs, while under-provisioning can result in performance issues and a poor user experience.

- Analyzing Resource Utilization: Regularly monitor resource utilization metrics such as CPU usage, memory consumption, network I/O, and disk I/O. Cloud providers offer various monitoring tools to collect and visualize these metrics.

- Identifying Over-Provisioned Resources: Identify instances where resources are consistently underutilized. For example, if a virtual machine consistently uses only 10% of its CPU capacity, it’s likely over-provisioned.

- Resizing Resources: Resize over-provisioned resources to smaller instances or configurations that better match the actual workload demands. This can involve scaling down virtual machines, reducing the size of database instances, or adjusting storage capacity.

- Automating Right-Sizing: Complement manual resizing with autoscaling tools such as AWS Auto Scaling, Azure Autoscale, or the Google Cloud autoscaler. These tools dynamically adjust the number of running instances based on predefined thresholds and policies, so capacity follows demand rather than being provisioned for peak load.

Leveraging Reserved Instances and Savings Plans

Cloud providers often offer discounted pricing for resources that are reserved or committed to for a specific period. These discounts can significantly reduce costs compared to on-demand pricing.

- Reserved Instances (RIs): Purchase reserved instances for compute resources that have a predictable and consistent workload. RIs provide significant discounts compared to on-demand pricing, but require a commitment to use the instance for a specific duration (typically one or three years).

- Savings Plans: On AWS, Savings Plans offer a flexible way to save on compute costs by committing to a consistent amount of spending over a one- or three-year period. Unlike RIs, Compute Savings Plans apply to a broader range of compute services, including EC2 instances, Fargate, and Lambda.

- Choosing the Right Option: Evaluate the predictability of your workloads and your willingness to commit to a specific duration to determine whether reserved instances or Savings Plans are the best fit.

- Monitoring Utilization: Regularly monitor the utilization of reserved instances and Savings Plans to ensure that you are maximizing the benefits of these discounts. Underutilized reservations can lead to wasted savings.
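Reservations and shutdown schedules interact: a resource that is stopped most of the week may be cheaper on demand than under a commitment that is billed for every hour. The arithmetic can be sketched as follows; the hourly prices used in the usage note are made-up placeholders, not real provider rates.

```python
def breakeven_utilization(on_demand_hourly, reserved_effective_hourly):
    """Fraction of hours an instance must run for a reservation to beat on-demand pricing."""
    return reserved_effective_hourly / on_demand_hourly


def monthly_cost(hours_running, on_demand_hourly, reserved_effective_hourly, hours_in_month=730):
    """Compare paying on demand for the hours actually used with a reservation
    that is billed for every hour of the month."""
    return {
        "on_demand": hours_running * on_demand_hourly,
        "reserved": hours_in_month * reserved_effective_hourly,
    }
```

With placeholder rates of 0.10 on demand and 0.06 reserved, the break-even point is about 60% utilization, so an instance that runs only 240 of roughly 730 hours per month would cost less on demand than under the reservation.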

Optimizing Storage Costs

Cloud storage can be a significant cost driver, and optimizing storage usage is crucial for cost efficiency.

- Choosing the Right Storage Class: Cloud providers offer different storage classes with varying pricing and performance characteristics. Choose the storage class that best suits your data access patterns and performance requirements. For example, use cheaper storage classes like Amazon S3 Glacier for infrequently accessed data.

- Data Lifecycle Management: Implement data lifecycle management policies to automatically move data between different storage classes based on its age and access frequency.

- Deleting Unused Data: Regularly identify and delete unused or obsolete data to reduce storage costs.

- Compressing Data: Compress data to reduce its storage footprint and lower storage costs.

Implementing Cost Allocation and Tagging

Accurate cost allocation and tagging enable you to track and understand cloud spending across different projects, departments, or applications.

- Tagging Resources: Tag all cloud resources with relevant metadata, such as project name, department, application, and cost center.

- Cost Allocation: Use cloud cost management tools to allocate costs based on resource tags. This allows you to track spending by specific business units or projects.

- Cost Reporting: Generate cost reports to analyze spending trends, identify cost drivers, and detect areas for optimization.

- Alerting: Set up cost alerts to be notified when spending exceeds predefined thresholds.
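Tag-based allocation and alerting can be illustrated with a few lines of aggregation. The sketch below assumes billing line items have already been exported as dictionaries with a `cost` value and an optional `tags` mapping; that shape, and the helper names, are assumptions for illustration rather than any provider's export format.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key, untagged="(untagged)"):
    """Sum cost per value of `tag_key`, grouping items without the tag separately."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item.get("tags", {}).get(tag_key, untagged)] += item["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return the groups whose spend exceeds their budget, sorted by name."""
    return sorted(name for name, spent in totals.items() if spent > budgets.get(name, float("inf")))
```

Surfacing the untagged bucket explicitly is useful in practice, because untagged resources are also the ones a tag-driven shutdown schedule will miss.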

Using Cloud Cost Management Tools

Cloud providers offer native cost management tools, and third-party tools are available to help you analyze and optimize cloud costs.

- Cloud Provider Tools: Utilize the cost management tools provided by your cloud provider, such as AWS Cost Explorer, Azure Cost Management + Billing, or Google Cloud Cost Management. These tools provide detailed cost analysis, budgeting, and reporting capabilities.

- Third-Party Tools: Explore third-party cost management tools that offer advanced features, such as automated recommendations, cost optimization insights, and cross-cloud visibility.

- Setting Budgets and Alerts: Establish budgets and set up alerts to monitor spending and receive notifications when costs exceed predefined thresholds.

- Generating Cost Optimization Recommendations: Leverage the recommendations provided by cloud cost management tools to identify opportunities for cost savings, such as right-sizing resources, purchasing reserved instances, and implementing data lifecycle management policies.

Example: A company utilizes a combination of cost optimization strategies. They implement automated resource shutdowns for non-production environments during off-peak hours, right-size their virtual machines based on utilization data, purchase reserved instances for their production database servers, and use data lifecycle management to move infrequently accessed data to a cheaper storage tier. Furthermore, they utilize cloud cost management tools to monitor their spending, set budgets, and receive alerts when costs exceed certain thresholds.

In this illustrative scenario, the multi-pronged approach yields a 30% reduction in overall cloud spending while maintaining application performance and availability.

Conclusion

In conclusion, automating cloud resource shutdown schedules is not just a cost-saving measure; it is a fundamental practice for responsible cloud management. By implementing the strategies and techniques outlined in this guide, you can gain greater control over your cloud environment, optimize resource utilization, and unlock significant cost savings. Embrace automation, monitor your progress, and continuously refine your schedules to ensure your cloud infrastructure remains efficient, secure, and tailored to your business needs.

The path to a more sustainable and cost-effective cloud future starts with automated shutdowns.

Key Questions Answered

What are the primary benefits of automating cloud shutdowns?

The main benefits include significant cost savings by eliminating unnecessary resource consumption, improved resource utilization, reduced environmental impact, and simplified cloud management by automating routine tasks.

What types of cloud resources are best suited for automated shutdowns?

Resources like development and testing environments, non-production databases, and virtual machines used for batch processing or infrequent tasks are ideal candidates for automation. Essentially, any resource that is not continuously needed can benefit from scheduled shutdowns.

How do I prevent data loss during a shutdown?

Implement proper data backup and recovery strategies before automating shutdowns. Ensure data is either saved to persistent storage or replicated to a secure location before a shutdown occurs. Regularly test your recovery procedures.

Can I automate shutdowns for production environments?

While less common, it’s possible to automate shutdowns in production environments, but it requires careful planning and consideration. This is typically used for non-critical resources or for disaster recovery purposes. Always thoroughly test such schedules and have a clear understanding of the impact on your applications.

What happens if a scheduled shutdown fails?

Implement robust monitoring and alerting to detect failed shutdowns. The specific actions to take depend on the failure, but it may involve manual intervention, retrying the shutdown, or investigating the underlying cause of the issue.