Database schema migrations are crucial for managing evolving data structures in software applications. Manual migrations, while straightforward for small changes, become cumbersome and error-prone as projects grow. This comprehensive guide dives into the world of automated database schema migrations, equipping you with the knowledge and tools to streamline this essential process. We’ll explore various techniques, tools, and best practices to ensure smooth, efficient, and secure migrations, minimizing downtime and maximizing application stability.

From understanding the fundamentals of database schema migrations to implementing robust CI/CD pipelines, this guide covers every aspect of automating the migration process. We’ll also discuss the importance of data modeling and security considerations to ensure your migrations are not only efficient but also secure and reliable.

Introduction to Database Schema Migrations

Database schema migrations represent a crucial aspect of software development, especially in applications involving persistent data. They meticulously track and manage changes to the database structure, ensuring that the database remains compatible with evolving application requirements. This process is vital for maintaining data integrity and application functionality as the software evolves.

Database Schema Migration Explained

Database schema migrations are the controlled process of modifying a database’s structure. This includes alterations to tables, columns, indexes, and other database objects. They provide a structured method for implementing these changes, enabling developers to track modifications over time. These migrations often utilize a dedicated tool or script to describe the desired modifications and apply them to the database.

This approach facilitates version control and rollback capabilities, safeguarding against unintended consequences.
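To make the tracking concrete, here is a minimal sketch of what a migration tool does under the hood. It is written in Python with the standard-library `sqlite3` module; the article does not prescribe a language, so that choice is an assumption. The `schema_migrations` history table and the in-code `MIGRATIONS` list are hypothetical. Flyway, Liquibase, and dbmate each keep their own history table and read migrations from files.

```python
import sqlite3

# Hypothetical, ordered migrations: (version, SQL statements to run).
MIGRATIONS = [
    (1, ["CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"]),
    (2, ["ALTER TABLE users ADD COLUMN created_at TEXT"]),
]


def migrate(conn: sqlite3.Connection) -> list[int]:
    """Apply every migration not yet recorded; return the versions applied now."""
    # History table: one row per migration that has already run on this database.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_migrations (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_migrations")}
    newly_applied = []
    for version, statements in sorted(MIGRATIONS):
        if version in applied:
            continue  # already applied here; skip it
        conn.execute("BEGIN")
        try:
            for sql in statements:
                conn.execute(sql)
            # Record the version in the same transaction as the change itself.
            conn.execute("INSERT INTO schema_migrations (version) VALUES (?)", (version,))
            conn.execute("COMMIT")
        except sqlite3.Error:
            if conn.in_transaction:
                conn.execute("ROLLBACK")  # neither the change nor its record persists
            raise
        newly_applied.append(version)
    return newly_applied


if __name__ == "__main__":
    # isolation_level=None disables implicit transactions so BEGIN/COMMIT are explicit.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    print(migrate(conn))  # [1, 2]
    print(migrate(conn))  # [] (nothing pending)
```

The sketch records each applied version in the same transaction as the schema change. Re-running `migrate` is therefore safe, because it only applies what is still pending.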

Importance of Automated Schema Migrations

Automated schema migrations significantly improve the software development process. Manual schema modifications are prone to errors that can corrupt data or leave the application and the database out of sync. Automated systems reduce these errors and apply changes consistently across environments. They also make collaboration easier, because every change is recorded in a shared, ordered history. They speed up deployments as well and keep complex, multi-step database changes manageable.

Common Challenges with Manual Schema Migrations

Manual schema migrations present numerous challenges. One key concern is the potential for errors, such as typos in SQL statements or inconsistencies in data structures. Managing multiple versions of the database schema also becomes complex and error-prone, especially in large-scale applications. Without a versioned record of schema changes, it is hard to tell which changes have been applied to which environment, and this can lead to significant issues during maintenance or updates.

The risk of human error is amplified in complex schema alterations, potentially compromising data integrity and application functionality.

Scenarios Requiring Automated Migrations

Automated migrations are critical in numerous scenarios. Rapidly evolving applications need frequent schema changes, such as adding new fields to a user table or creating a new table to track product reviews, and automated systems provide an efficient way to manage these updates. Automated migrations are also crucial for large-scale projects where multiple developers contribute to the database schema.

These scenarios necessitate a robust and efficient approach to managing changes to the database structure. Automated schema migrations are also vital for maintaining data integrity and application functionality in scenarios requiring frequent and complex database modifications.

Manual vs. Automated Database Migrations

| Feature | Manual Migrations | Automated Migrations |
|---|---|---|
| Implementation | Requires manual modification of SQL statements and database structure. | Utilizes tools and scripts to define and apply changes to the database. |
| Error Rate | Higher risk of errors due to manual intervention and potential inconsistencies. | Lower error rate due to automated verification and application of changes. |
| Version Control | Difficult to manage multiple versions of the database schema. | Facilitates version control, enabling easy rollback and tracking of changes. |
| Maintenance | Complex maintenance, especially with frequent updates. | Simplifies maintenance by automating updates and schema management. |
| Scalability | Less scalable for large-scale projects or frequent schema changes. | Highly scalable for managing complex database schemas and frequent updates. |

Automated migrations offer a more efficient and reliable way to manage schema changes, reducing the risk of errors and improving the overall development process.

Popular Migration Tools and Technologies

Database schema migrations keep data management consistent and reliable across development, testing, and production. Migration tools automate the tedious work of updating database structures while minimizing risk, and they give teams a standardized way to manage schema changes. Choosing the right tool depends on specific project needs and technical considerations.

These tools typically support several database systems, including relational databases such as PostgreSQL and MySQL, so the same workflow can be reused across projects.

Popular Database Migration Tools

Various tools facilitate database schema migrations, each with unique strengths and weaknesses. Commonly used tools include Flyway, Liquibase, and dbmate, each catering to distinct needs and technical preferences. Understanding the characteristics of each tool enables informed decisions about project suitability.

Flyway

Flyway is a lightweight, open-source database migration tool known for its simplicity. It handles straightforward schema changes well and suits smaller teams or projects that need an uncomplicated migration solution. Flyway migrations are usually plain SQL scripts (Java-based migrations are also supported), which keeps them easy to read and review.

Liquibase

Liquibase is a robust, feature-rich migration tool with extensive database support. Changes are organized into changesets within changelogs that can be written in SQL, XML, YAML, or JSON. Features such as preconditions, contexts, labels, and rollback support make it a good fit for complex projects.

dbmate

dbmate is a lightweight, framework-agnostic migration tool distributed as a single standalone binary. Migrations are plain SQL files, each containing an up section and a down section. This makes it a good fit for teams that work in several languages and want one migration tool that is not tied to any particular framework.

Installation and Configuration of Flyway

Flyway's installation and configuration are relatively straightforward. It can be run as a command-line tool, through its Maven or Gradle plugins, or as a Java library (a JAR added to the project's build path). Configuration involves setting up the database connection and the location of the migration scripts. This is typically done in a configuration file such as `flyway.conf`, with keys like `flyway.url`, `flyway.user`, `flyway.password`, and `flyway.locations`. The schema changes themselves are written as SQL scripts.
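Flyway identifies versioned migrations by file name, following the pattern `V<version>__<description>.sql` (for example `V1__Create_person_table.sql`), and applies them in version order. The sketch below approximates that convention in Python to show why the ordering must be numeric rather than alphabetical. The regular expression is a simplification, not Flyway's actual parser.

```python
import re

# Simplified approximation of Flyway's versioned-migration file names:
# V<version>__<description>.sql, where version parts are separated by "." or "_".
VERSIONED = re.compile(r"^V(?P<version>\d+(?:[._]\d+)*)__(?P<description>\w+)\.sql$")


def parse(filename: str) -> tuple[tuple[int, ...], str]:
    """Return (numeric version tuple, description) for a versioned migration file."""
    match = VERSIONED.match(filename)
    if match is None:
        raise ValueError(f"not a versioned migration: {filename}")
    # Treat underscores in the version like dots, so V1_1 and V1.1 compare equal.
    parts = match.group("version").replace("_", ".").split(".")
    return tuple(int(p) for p in parts), match.group("description").replace("_", " ")


def ordered(filenames: list[str]) -> list[str]:
    """Sort migration files numerically by version, i.e. in the order to apply them."""
    return sorted(filenames, key=lambda name: parse(name)[0])
```

With plain string sorting, `V1.10` would come before `V1.9`. Comparing tuples of integers puts 1.9 before 1.10.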

Comparison of Migration Tools

| Tool | Strengths | Weaknesses |
|---|---|---|
| Flyway | Lightweight, easy to use, plain SQL migrations; good for simple migrations. | Fewer advanced features than Liquibase; undo (rollback) migrations require a paid edition. |
| Liquibase | Robust; extensive database support; changelogs in SQL, XML, YAML, or JSON; preconditions, contexts, and rollback support; excellent for complex projects. | Steeper learning curve; larger footprint than Flyway. |
| dbmate | Single standalone binary; framework-agnostic; plain SQL files with up and down sections. | Smaller feature set; supports fewer database engines than Flyway or Liquibase. |

Integration with Development Environments

Migration tools usually integrate smoothly with common development ecosystems such as Java and Python. Flyway and Liquibase both provide Maven and Gradle plugins, so Java projects can run migrations as part of the build or deployment. In Python projects, dependency managers such as Pipenv or Poetry can pin the version of the migration tooling, so that every developer and pipeline runs the same one.

Data Modeling and Migration Design

Data modeling is fundamental to successful database schema migrations. A well-designed data model ensures that the database structure accurately reflects the application's data requirements and can absorb future changes without disrupting existing functionality. Proper data modeling practices significantly reduce the risk of errors and inconsistencies during migration. Careful attention to the relationships between data entities preserves data integrity throughout the migration process. Thorough data modeling should precede any migration effort, establishing a blueprint for the database structure.

This allows for a clear understanding of the data entities, their attributes, and relationships, enabling a controlled and predictable migration process.

Significance of Data Modeling in Database Schema Migrations

A robust data model is the cornerstone of any successful database schema migration. It provides a clear and unambiguous representation of the data entities, their attributes, and the relationships between them. This ensures that the database accurately reflects the application’s data requirements and facilitates future changes without disrupting existing functionality. A well-defined model allows for better planning and execution of migrations, minimizing potential conflicts and errors.

This meticulous planning minimizes risks associated with schema alterations and allows for greater predictability during the migration process.

Good Data Modeling Practices for Migration

Effective data modeling practices are critical for seamless database schema migrations. Normalization is paramount, reducing redundancy and ensuring data integrity. Careful consideration of data types and constraints ensures data accuracy and consistency. Thorough documentation, including entity relationships and attribute descriptions, is essential for understanding and maintaining the model throughout the migration process. Designing for future scalability and flexibility allows the database to adapt to evolving application requirements without requiring extensive restructuring.

Designing Database Schemas for Future Migrations

Designing schemas that accommodate future changes is a critical aspect of migration planning. Anticipating potential future needs and incorporating flexibility into the design is crucial for reducing the impact of subsequent migrations. Including future-proof attributes, such as timestamps for tracking modifications, is an excellent strategy for accommodating evolving data requirements. The design should be modular and decoupled, allowing for changes in one area without affecting others.

Employing a well-defined naming convention, consistent throughout the schema, helps with maintainability and understanding.

Sample Data Model for a Hypothetical E-commerce Application

This model illustrates relationships for a hypothetical e-commerce application.

- Customers: Includes attributes like customer ID, name, email, and address. This entity is crucial for tracking user interactions.

- Products: Stores product details such as product ID, name, description, price, and category.

- Orders: Captures order information, including order ID, customer ID, order date, and total amount. It represents transactions.

- Order Items: Links orders to individual products, including order item ID, order ID, product ID, and quantity.

These entities are interconnected through foreign keys, creating relationships. For example, an order is linked to a customer, and order items link orders to products. This structured approach ensures data integrity and traceability throughout the application.
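One way to express this model as DDL is sketched below. It runs against an in-memory SQLite database so it can be tried without a server. Column names follow the bullet list above. The types, the `NOT NULL` and `CHECK` constraints, and storing prices as integer cents (to avoid floating-point rounding) are assumptions for illustration.

```python
import sqlite3

# Illustrative DDL for the sample e-commerce model (SQLite dialect).
SCHEMA = [
    """CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        email       TEXT NOT NULL UNIQUE,
        address     TEXT
    )""",
    """CREATE TABLE products (
        product_id  INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        description TEXT,
        price_cents INTEGER NOT NULL CHECK (price_cents >= 0),
        category    TEXT
    )""",
    """CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
        order_date  TEXT NOT NULL,
        total_cents INTEGER NOT NULL
    )""",
    """CREATE TABLE order_items (
        order_item_id INTEGER PRIMARY KEY,
        order_id      INTEGER NOT NULL REFERENCES orders (order_id),
        product_id    INTEGER NOT NULL REFERENCES products (product_id),
        quantity      INTEGER NOT NULL CHECK (quantity > 0)
    )""",
]


def create_schema() -> sqlite3.Connection:
    """Create the sample schema in a fresh in-memory database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    for ddl in SCHEMA:
        conn.execute(ddl)
    return conn
```

With foreign keys enforced, an order that points at a non-existent customer is rejected. This is the traceability the paragraph above describes.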

Managing and Resolving Conflicts During Schema Migrations

Conflicts during schema migrations can arise from various factors. Careful planning, version control, and testing are vital in mitigating conflicts. A robust version control system for database schemas is essential. This allows for easy rollback to previous versions if issues arise. Thorough testing of the migration script in a staging environment, prior to deploying to production, helps in identifying and resolving potential conflicts early.

Clear communication and collaboration between development and operations teams are vital for a smooth migration.

Implementing and Testing Migrations

Implementing schema migrations involves translating design decisions into executable code that alters the database structure. Careful planning and meticulous implementation are crucial for maintaining data integrity and avoiding application downtime. This process typically involves writing SQL scripts that perform the desired modifications, and these scripts should be thoroughly tested to ensure they function as intended.

Implementing Schema Migration Scripts

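Schema migration scripts are typically written in SQL, although some migration tools offer specialized languages or DSLs. Scripts should be atomic and idempotent. Atomic means the desired change is applied in a single, self-contained operation that either fully succeeds or leaves the database untouched. Idempotent means the script can be run multiple times without unintended consequences. When a migration inserts or updates data using values that come from outside the script, bind those values with parameterized queries rather than concatenating them into the SQL, to prevent SQL injection vulnerabilities.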
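The sketch below shows all three properties on a hypothetical migration that creates a `product_reviews` table and seeds it:

- `CREATE TABLE IF NOT EXISTS` and `INSERT OR IGNORE` (SQLite syntax) make a second run harmless.
- An explicit transaction makes the change all-or-nothing. SQLite, like PostgreSQL, can roll back DDL inside a transaction, but not every database can.
- The seed values are bound as parameters instead of being spliced into the SQL text.

The table and function names are illustrative.

```python
import sqlite3


def add_reviews_table(conn: sqlite3.Connection, seed_reviews: list[tuple[int, str]]) -> None:
    """Hypothetical migration: create product_reviews and seed it.

    Idempotent: IF NOT EXISTS / INSERT OR IGNORE make re-runs harmless.
    Atomic: every statement commits together or not at all.
    """
    conn.execute("BEGIN")
    try:
        conn.execute(
            """CREATE TABLE IF NOT EXISTS product_reviews (
                   review_id INTEGER PRIMARY KEY,
                   body      TEXT NOT NULL
               )"""
        )
        # Values are passed as parameters, never concatenated into the SQL string.
        conn.executemany(
            "INSERT OR IGNORE INTO product_reviews (review_id, body) VALUES (?, ?)",
            seed_reviews,
        )
        conn.execute("COMMIT")
    except sqlite3.Error:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        raise


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)  # explicit transactions
    seed = [(1, "Great product"), (2, "Robert'); DROP TABLE product_reviews;--")]
    add_reviews_table(conn, seed)
    add_reviews_table(conn, seed)  # second run changes nothing
```

The second seed row is stored as ordinary text. Because it is bound as a parameter, the embedded `DROP TABLE` is never executed.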

Testing Procedures for Validating Migration Scripts

Thorough testing is essential to ensure that migration scripts accurately reflect the intended changes and do not introduce errors or inconsistencies. Test cases should cover various scenarios, including successful migrations, failures due to constraints, and rollback operations. A comprehensive testing strategy ensures that the migration process functions reliably and maintains data integrity.

Different Testing Approaches for Migration Scripts

Several testing approaches can be used to validate migration scripts. Unit tests focus on individual migration steps, isolating them from the database and application context. Integration tests verify the complete migration process, including interactions with the database and application code. End-to-end tests, while more complex, simulate a real-world scenario, confirming the overall functionality of the migration.

Testing Scenarios and Procedures

The following table outlines different testing scenarios and their corresponding procedures.

| Scenario | Description | Procedure |
|---|---|---|
| Successful Migration | Verifying a migration script executes correctly and produces the desired changes in the database. | Execute the migration script. Verify the database structure matches the expected schema. Check for data consistency. |
| Constraint Violation | Ensuring that the migration script handles constraints appropriately, preventing data corruption. | Execute the migration script. Verify the database reports expected errors related to constraints. Inspect the error messages and ensure they are meaningful. |
| Rollback Functionality | Validating the rollback process to revert the changes made by a migration script. | Execute the migration script. Execute the corresponding rollback script. Verify the database structure returns to its previous state. Validate data consistency after rollback. |
| Data Integrity | Confirming that the migration script does not introduce data inconsistencies. | Execute the migration script. Query the database for specific data points. Verify the data integrity against pre-migration values. Ensure data relationships remain valid. |
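The four scenarios in the table can be turned into automated tests. The sketch below runs a hypothetical migration, a unique index on `users.email`, and its rollback against throwaway in-memory SQLite databases. The functions follow pytest's `test_` naming convention, so pytest would collect them, but the plain loop at the bottom runs them too.

```python
import sqlite3

# Hypothetical migration under test: enforce unique e-mail addresses.
UP = ["CREATE UNIQUE INDEX users_email_uq ON users (email)"]
DOWN = ["DROP INDEX users_email_uq"]


def run(conn, statements):
    """Run statements in one transaction; undo everything on failure."""
    conn.execute("BEGIN")
    try:
        for sql in statements:
            conn.execute(sql)
        conn.execute("COMMIT")
    except sqlite3.Error:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        raise


def fresh_db(emails):
    """Pre-migration database seeded with the given e-mail addresses."""
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO users (email) VALUES (?)", [(e,) for e in emails])
    return conn


def schema(conn):
    """Snapshot of the schema as stored in sqlite_master."""
    return conn.execute("SELECT type, name, sql FROM sqlite_master ORDER BY type, name").fetchall()


def test_successful_migration():
    conn = fresh_db(["a@example.com", "b@example.com"])
    run(conn, UP)
    assert ("index", "users_email_uq") in [(t, n) for t, n, _ in schema(conn)]


def test_constraint_violation():
    conn = fresh_db(["dup@example.com", "dup@example.com"])
    before = schema(conn)
    try:
        run(conn, UP)
    except sqlite3.IntegrityError:
        pass
    else:
        raise AssertionError("duplicate e-mails should block the migration")
    assert schema(conn) == before  # the failed migration left no partial changes


def test_rollback():
    conn = fresh_db(["a@example.com"])
    before = schema(conn)
    run(conn, UP)
    run(conn, DOWN)
    assert schema(conn) == before


def test_data_integrity():
    conn = fresh_db(["a@example.com", "b@example.com"])
    rows_before = conn.execute("SELECT id, email FROM users ORDER BY id").fetchall()
    run(conn, UP)
    assert conn.execute("SELECT id, email FROM users ORDER BY id").fetchall() == rows_before


if __name__ == "__main__":
    for test in (test_successful_migration, test_constraint_violation,
                 test_rollback, test_data_integrity):
        test()
    print("all migration tests passed")
```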

Managing Rollback and Revert Functionality

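Migration scripts should include rollback procedures that revert their changes in case of errors or unforeseen issues. This ensures that the database can be restored to its previous state if a migration fails. A well-designed rollback script mirrors the original migration script, undoing its steps in reverse order, and is critical to maintaining database consistency. Some tools can generate rollbacks for certain change types; Liquibase, for example, can derive them automatically for changes such as createTable or addColumn. Rollbacks for raw SQL and for destructive changes, however, usually have to be written by hand.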

Security Considerations in Migrations

Database schema migrations, while essential for evolving database structures, introduce potential security risks if not handled carefully. Carefully designed and implemented migration procedures are critical to maintaining data integrity and preventing vulnerabilities. Security considerations must be integrated throughout the entire migration lifecycle, from initial design to final testing and deployment. Protecting sensitive data and keeping the database reliable requires a thorough understanding of the vulnerabilities and threats that arise during migrations, coupled with robust security practices.

Proactive measures for secure migration processes can prevent data breaches, unauthorized access, and other security incidents.

Security Implications of Database Schema Migrations

Database schema migrations, if not implemented securely, can expose sensitive data to various threats. Inadequate security controls during migration can lead to unauthorized access, data breaches, and compromised system integrity. For instance, if migration scripts are not properly vetted, they might inadvertently expose confidential data or create backdoors. This is especially critical when dealing with production systems.

Potential Vulnerabilities and Threats During Migrations

Several vulnerabilities and threats can emerge during schema migration processes. Improperly configured or unvalidated user inputs can lead to SQL injection attacks. This is particularly relevant when migration scripts interact with user-provided data. Insufficient access controls during migration can result in unauthorized access to sensitive data, and outdated or vulnerable libraries used in migration tools can introduce known exploits.

Best Practices for Securing Migration Scripts and Processes

Ensuring the security of migration scripts and processes requires a multi-layered approach. All migration scripts should be thoroughly reviewed and tested for vulnerabilities, ideally by a security team. Version control systems, like Git, should be used to track changes and maintain a history of migration scripts. Access controls must be implemented to limit who can execute migration scripts.

This should include a separate, dedicated user account with only the necessary permissions for the migration process.

Handling Sensitive Data During Migration

Sensitive data, such as Personally Identifiable Information (PII), must be handled with utmost care during migration. Encryption of sensitive data at rest and in transit is crucial. Implement data masking techniques to obscure sensitive data during migration testing and development phases. Consider using a secure database backup and restore process to minimize data exposure during migration.
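As one example of masking, the sketch below replaces the personal fields in a `customers` row (from the sample data model) with deterministic pseudonyms derived from a salted SHA-256 hash. The same input always maps to the same token, so uniqueness and joins on e-mail still work in test data, while the original value cannot be read back from the token. The field names, the salt parameter, and the `example.invalid` domain are assumptions. This is pseudonymization rather than anonymization, so the salt must be kept secret. Truncating the hash to 12 hex characters also makes collisions unlikely but not impossible.

```python
import hashlib


def pseudonym(value: str, salt: str) -> str:
    """Deterministic token for a value: salted SHA-256, truncated to 12 hex chars."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:12]


def mask_customer(row: dict, salt: str) -> dict:
    """Return a masked copy of a customers row for test and development databases."""
    token = pseudonym(row["email"].strip().lower(), salt)
    return {
        **row,  # keys such as customer_id stay intact, so foreign keys still line up
        "name": f"Customer {token[:6]}",
        "email": f"user_{token}@example.invalid",  # .invalid is a reserved TLD
        "address": None,  # drop fields the tests do not need
    }


if __name__ == "__main__":
    row = {"customer_id": 7, "name": "Ada Lovelace",
           "email": "Ada@Example.com", "address": "12 Main St"}
    print(mask_customer(row, salt="load-from-a-secret-store"))
```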

Checklist for Security Audits of Migration Processes

Regular security audits of migration processes are vital for identifying and mitigating potential vulnerabilities. A comprehensive checklist for these audits should include:

- Review of migration scripts for potential vulnerabilities (e.g., SQL injection, insecure data handling).

- Verification of access controls to ensure only authorized personnel can execute migration scripts.

- Assessment of the security of the migration environment (e.g., network configuration, firewall rules).

- Evaluation of data masking techniques to protect sensitive data during migration testing.

- Testing for data leaks and vulnerabilities through penetration testing and security scans.

- Regular review and update of security policies and procedures for database schema migrations.

A comprehensive security audit checklist is essential to maintain a secure and robust database migration process. The steps outlined above are fundamental for safeguarding sensitive data and preventing security breaches.

Performance Optimization during Migrations

Optimizing database performance during schema migrations is crucial for minimizing downtime and ensuring a smooth user experience. Efficient migration strategies are vital for maintaining application availability and preventing disruptions to ongoing operations. This section details techniques for maximizing performance and minimizing the impact on existing systems.

Database Optimization Strategies

Careful planning and execution are key to minimizing performance issues during migrations. Database-specific optimization strategies can be employed to streamline the migration process and ensure minimal disruption. Choosing the right approach depends on the size of the dataset, the complexity of the schema changes, and the available resources.

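- Using Transactions: Wrapping a migration in a transaction makes it atomic. If any part of the migration fails, the entire operation is rolled back, preventing inconsistencies in the database. For schema changes this only works on databases with transactional DDL, such as PostgreSQL and SQLite. MySQL, for example, implicitly commits most DDL statements, so a failed multi-step schema change there can leave partial changes behind. This approach is particularly valuable when dealing with complex schema alterations.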

- Indexing Strategies: Appropriate indexing is essential for efficient data retrieval during migrations. Ensure indexes are properly defined on columns frequently used in queries. This significantly improves the performance of data retrieval operations during migration tasks.

- Batch Processing: Breaking down large migration tasks into smaller, manageable batches can greatly enhance performance. Processing data in batches reduces the load on the database and minimizes the risk of overwhelming resources during the migration.

- Parallel Processing: Leveraging parallel processing, if the database allows, enables simultaneous execution of migration operations. This is especially beneficial for large-scale migrations, significantly reducing the overall migration time.
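Batch processing is often applied to data backfills. The sketch below fills a hypothetical `email_normalized` column in slices of `batch_size` rows. Each slice is committed in its own short transaction, so locks are held only briefly and a failure loses only the current batch. It pages with a key condition (`WHERE id > ?`) rather than `OFFSET`, so each batch query does not have to skip over rows already processed. Table and column names are illustrative, and parallel execution is not shown.

```python
import sqlite3


def backfill_in_batches(conn: sqlite3.Connection, batch_size: int = 1000) -> int:
    """Hypothetical data migration: set email_normalized = lower(trim(email)),
    one transaction per batch. Returns the number of batches processed."""
    batches = 0
    last_id = 0
    while True:
        # Keyset pagination: the next batch_size ids after the last one processed.
        ids = [row[0] for row in conn.execute(
            "SELECT id FROM users WHERE id > ? ORDER BY id LIMIT ?", (last_id, batch_size)
        )]
        if not ids:
            return batches
        conn.execute("BEGIN")
        try:
            # ids are the first batch_size ids above last_id, so this range covers exactly them.
            conn.execute(
                "UPDATE users SET email_normalized = lower(trim(email)) WHERE id BETWEEN ? AND ?",
                (ids[0], ids[-1]),
            )
            conn.execute("COMMIT")
        except sqlite3.Error:
            if conn.in_transaction:
                conn.execute("ROLLBACK")
            raise
        last_id = ids[-1]
        batches += 1
```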

Minimizing Downtime

Minimizing downtime during schema migrations is a primary concern. Employing strategies that allow continuous operation while the database undergoes modifications is critical for maintaining service availability.

- Schema Versioning: Schema versioning records which version of the schema each database is at. When each change stays backward compatible, the previous and the new application versions can run against the database at the same time while the migration is in progress. This can reduce the need for downtime.

- Read-Replica Approach: Utilizing a read replica of the database allows read operations to continue without impacting the primary database during the migration process. This ensures continuous availability for read-only requests while the migration occurs on the primary database.

- Incremental Migrations: Incremental migrations apply changes in smaller, manageable steps, reducing the risk of errors and enabling continuous operation. This phased approach allows for more frequent, smaller updates, minimizing potential disruptions.

Migrating Large Datasets Efficiently

Efficient migration of large datasets is critical for maintaining application performance and preventing disruptions. Strategies that minimize the load on the database are crucial during large-scale migrations.

- Data Export and Import: Exporting and importing large datasets in stages using specialized tools can be significantly faster than directly modifying the data within the database during the migration. This technique allows offloading data operations to dedicated tools designed for bulk data handling.

- Using Staging Environments: Employing staging environments allows for thorough testing of the migration process on a copy of the production data. This ensures the migration process works as expected before applying it to the live database, mitigating the risk of unexpected errors.

- Data Compression: Compressing exported data before transfer reduces storage space and network transfer time, at the cost of some CPU time to compress and decompress it. For large datasets moved between systems, this can shorten the overall migration.

Performance Optimization Strategies

Different strategies can be employed depending on the specifics of the migration. The best approach depends on the size of the dataset, the complexity of the changes, and available resources.

| Strategy | Description | Benefits |
|---|---|---|
| Transactions | Execute operations as a single unit | Data integrity, rollback on failure |
| Indexing | Optimize data retrieval | Faster queries, improved performance |
| Batch Processing | Process data in smaller chunks | Reduced load, minimized resource usage |
| Parallel Processing | Execute operations concurrently | Faster migration times |
| Schema Versioning | Manage different schema versions | Support concurrent access, reduced downtime |
| Read Replica | Use a copy for read operations | Maintain availability during migration |
| Incremental Migrations | Apply changes in smaller steps | Reduced risk of errors, continuous operation |

Measuring and Monitoring Performance

Monitoring performance during migrations is critical to ensure the process is running as expected.

- Monitoring Tools: Utilizing database monitoring tools allows for real-time tracking of resource usage, query performance, and other metrics during the migration process. This provides valuable insights into the progress and potential bottlenecks.

- Performance Metrics: Tracking key metrics such as transaction time, query response time, and resource utilization provides valuable data for evaluating the performance of the migration process. This data is crucial for identifying areas requiring optimization.

- Benchmarking: Establishing benchmarks before and after the migration allows for quantifiable comparisons of performance. This helps in evaluating the impact of the migration on the overall database performance.

Error Handling and Rollbacks

Database schema migrations, while crucial for evolving database structures, can fail. Robust error handling and rollback procedures are essential to maintain data integrity and application functionality. A well-designed system for managing errors during migrations minimizes downtime and ensures data recovery. Effective error handling involves anticipating potential problems, implementing mechanisms to detect and respond to them, and establishing clear rollback strategies.

This ensures the database can be restored to a consistent state if a migration fails, minimizing the impact on the application.

Strategies for Handling Errors During Database Schema Migrations

A comprehensive strategy for handling errors involves proactively identifying potential failure points. This requires careful planning, an understanding of the limitations of the migration tools and technologies used, and awareness of the types of errors that can arise, such as constraint violations, data type mismatches, or inconsistencies in data relationships. Each type can then be analyzed and paired with an appropriate recovery mechanism.

Techniques for Implementing Rollback Procedures

Rollback procedures are critical for recovering from migration failures. These procedures are designed to reverse the changes made by a failed migration, restoring the database to its previous state. A common approach involves creating a script for each migration step that includes both the forward and backward operations. This enables the migration system to revert to the previous state if an error is encountered.
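The forward and backward operations can be kept together in a single file. The sketch below borrows dbmate's convention of `-- migrate:up` and `-- migrate:down` markers and applies each direction atomically with SQLite. The parser is a simplified, hypothetical stand-in written for illustration, not dbmate's own code, and the migration content is made up.

```python
import sqlite3

# One migration file holding both directions, using dbmate-style section markers.
MIGRATION = """
-- migrate:up
CREATE TABLE product_reviews (review_id INTEGER PRIMARY KEY, body TEXT NOT NULL);
CREATE INDEX product_reviews_body ON product_reviews (body);

-- migrate:down
DROP INDEX product_reviews_body;
DROP TABLE product_reviews;
"""


def split_sections(text: str) -> dict[str, list[str]]:
    """Return {"up": [...], "down": [...]} lists of SQL statements.

    Naive: splits on ';', so semicolons inside string literals are not handled.
    """
    sections = {"up": [], "down": []}
    current = None
    for line in text.splitlines():
        marker = line.strip()
        if marker == "-- migrate:up":
            current = "up"
        elif marker == "-- migrate:down":
            current = "down"
        elif current is not None:
            sections[current].append(line)
    return {
        name: [s.strip() for s in "\n".join(lines).split(";") if s.strip()]
        for name, lines in sections.items()
    }


def run_direction(conn: sqlite3.Connection, statements: list[str]) -> None:
    """Run one direction (up or down) of the migration atomically."""
    conn.execute("BEGIN")
    try:
        for sql in statements:
            conn.execute(sql)
        conn.execute("COMMIT")
    except sqlite3.Error:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        raise
```

The down section undoes the up section in reverse order: the index is dropped before the table it belongs to.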

Demonstrating How to Recover from Migration Failures

Recovery from migration failures involves careful planning and execution of rollback procedures. The process typically involves executing the rollback script associated with the failed migration. This script will revert the database to its previous state, undoing the changes that led to the failure. If the error is detected early, it can be immediately reverted. This process often necessitates monitoring the migration process and implementing safeguards to prevent cascading failures.

Recovery Strategies for Different Error Types

| Error Type | Description | Recovery Strategy |
|---|---|---|
| Constraint Violation | A new column constraint is not met by existing data. | Roll back the migration, address the data issues, and re-attempt the migration with validated data. |
| Data Type Mismatch | Data being migrated is not compatible with the target data type. | Roll back the migration, and either modify the data to match the target type or adjust the migration script to accommodate the existing data. |
| Foreign Key Violation | A foreign key constraint is violated due to missing or inconsistent data. | Roll back the migration, fix the foreign key issues in the data, and re-attempt the migration. |
| Transaction Rollback | The database transaction for the migration fails. | Roll back to the previous state and address the root cause of the transaction failure. |
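For the foreign key row, SQLite offers a way to detect the problem before anything is committed: `PRAGMA foreign_key_check`, which lists rows whose references point at missing parent rows. The sketch below applies a data migration, runs the check inside the same transaction, and rolls back if violations exist. It returns the violations so the data can be fixed before re-attempting. This is SQLite-specific, and the table names and legacy-import scenario used with it are hypothetical.

```python
import sqlite3


def migrate_with_fk_check(conn: sqlite3.Connection, statements: list[str]) -> list[tuple]:
    """Apply statements in one transaction and verify foreign keys before committing.

    Returns [] on success. Otherwise rolls back and returns the violating rows as
    (child_table, rowid, parent_table, fk_index) tuples from PRAGMA foreign_key_check.
    """
    conn.execute("BEGIN")
    try:
        for sql in statements:
            conn.execute(sql)
        violations = conn.execute("PRAGMA foreign_key_check").fetchall()
        if violations:
            conn.execute("ROLLBACK")  # nothing from this attempt is kept
            return violations
        conn.execute("COMMIT")
        return []
    except sqlite3.Error:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        raise
```

The check matters most when data is loaded with foreign key enforcement switched off. With `PRAGMA foreign_keys = ON`, SQLite rejects the offending insert immediately, and the function rolls back and re-raises instead.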

Implementing Logging and Monitoring for Migration Processes

Logging and monitoring are essential components of a robust migration process. Detailed logging records every step of the migration, including successes, failures, and any errors encountered. Monitoring tools track the migration's progress in real time, providing insight into performance and potential issues. Together, this information makes it possible to spot problems early, respond to errors quickly, and make the adjustments needed to diagnose and fix them.

Detailed logs help pinpoint the exact cause of any failures.
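Here is a minimal sketch of such logging, using Python's standard `logging` module. Each migration logs its start, every statement at DEBUG level, and its duration. On failure, it logs the statement that failed together with the traceback. The logger name `migrations` and the function name are hypothetical. The recorded durations can also serve as per-migration performance metrics.

```python
import logging
import sqlite3
import time

log = logging.getLogger("migrations")


def run_logged(conn: sqlite3.Connection, version: str, statements: list[str]) -> None:
    """Apply one migration atomically, logging progress, duration, and failures."""
    log.info("migration %s: starting (%d statements)", version, len(statements))
    started = time.perf_counter()
    index, sql = 0, ""
    conn.execute("BEGIN")
    try:
        for index, sql in enumerate(statements, start=1):
            log.debug("migration %s: statement %d: %s", version, index, sql)
            conn.execute(sql)
        conn.execute("COMMIT")
    except sqlite3.Error:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        # log.exception also records the traceback, including the database error message.
        log.exception(
            "migration %s: statement %d failed (%s); rolled back after %.3fs",
            version, index, sql, time.perf_counter() - started,
        )
        raise
    log.info("migration %s: applied in %.3fs", version, time.perf_counter() - started)


if __name__ == "__main__":
    logging.basicConfig(level=logging.DEBUG, format="%(asctime)s %(levelname)s %(message)s")
    conn = sqlite3.connect(":memory:", isolation_level=None)
    run_logged(conn, "1", ["CREATE TABLE t (x INTEGER)"])
```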

Version Control and Collaboration

Effective database schema migrations rely heavily on version control and collaboration to ensure accuracy, consistency, and maintainability. Proper versioning allows for tracking changes, reverting to previous states, and facilitating collaboration among development teams. This section details the critical role of version control in managing schema migrations.

Importance of Version Control

Version control systems (VCS), such as Git, are essential for managing database schema migrations. They provide a central repository for all migration scripts, allowing developers to track changes, revert scripts to previous versions if necessary, and collaborate effectively. Keeping every migration under version control ensures that no script is lost or silently overwritten and that the history of the schema can be reconstructed at any point in the development lifecycle, which supports efficient debugging and rollback procedures.

Using Version Control Systems for Migration Scripts

Version control systems are ideally suited for managing migration scripts. They allow for branching, merging, and resolving conflicts, making it easier for multiple developers to work on the same database schema simultaneously. Each migration script can be treated as a commit in the VCS, allowing for a clear audit trail of changes made to the database schema. This approach enables precise tracking of who made what changes and when, facilitating the identification and resolution of any potential errors or inconsistencies.

Collaborative Migration Development

Collaborative development of database schema migrations is a common practice. Multiple developers can work on different parts of the schema concurrently, contributing to the overall improvement of the database design. A robust version control system facilitates this collaborative environment, ensuring that all changes are documented and tracked. This approach maximizes efficiency and ensures that everyone working on the project is aware of the latest modifications.

Strategies for Conflict Resolution

Conflicts can arise during collaborative migrations, especially when multiple developers modify the same parts of the schema simultaneously. Several strategies can be employed to resolve these conflicts effectively. One common strategy involves using a branching model, where developers work on separate branches and merge their changes back into the main branch. Clear communication and coordination among developers are essential to prevent conflicts.
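A conflict specific to migrations arises when two branches each add a migration with the same version number. Git merges both files without complaint because their names differ, but the migration tool cannot apply them in a well-defined order. The sketch below is a hypothetical pre-merge check that flags such collisions. It assumes Flyway-style `V<integer>__` file names.

```python
import re
from collections import defaultdict

VERSION = re.compile(r"^V(\d+)__")  # assumes integer versions, Flyway-style names


def version_conflicts(filenames: list[str]) -> dict[int, list[str]]:
    """Map each version claimed by more than one migration file to those files."""
    claimed = defaultdict(list)
    for name in filenames:
        match = VERSION.match(name)
        if match:
            claimed[int(match.group(1))].append(name)
    return {v: sorted(names) for v, names in claimed.items() if len(names) > 1}
```

Running a check like this in code review or CI turns a silent ordering problem into an explicit conflict. The developers can then resolve it by renumbering one of the migrations.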

Sample Workflow for Collaborative Migration Development

A typical workflow for collaborative migration development involves the following steps:

- Developers create new branches for their specific migration tasks.

- Changes are committed to their respective branches as the migration progresses.

- Regular code reviews are performed to ensure the quality and consistency of the migration scripts.

- Conflicts are resolved by merging changes from the different branches, using tools provided by the VCS.

- Finally, the updated migration scripts are integrated into the main branch, ensuring a cohesive and up-to-date database schema.
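Code review in this workflow should also catch edits to migrations that were already merged: once a script has run in a shared environment, changing it in place makes databases that ran the old version drift from those that run the new one. Tools such as Flyway guard against this by validating checksums of applied migrations. The sketch below applies the same idea at review time, assuming a hypothetical manifest that maps each merged migration's file name to a SHA-256 digest of its contents; both function names are hypothetical.

```python
import hashlib
from typing import Dict, List


def checksum(sql: str) -> str:
    """Return the SHA-256 hex digest of a migration script's contents."""
    return hashlib.sha256(sql.encode("utf-8")).hexdigest()


def altered_migrations(recorded: Dict[str, str], current: Dict[str, str]) -> List[str]:
    """List merged migrations that were edited or deleted since their checksum was recorded.

    recorded: file name -> checksum captured when the migration was merged (hypothetical manifest)
    current:  file name -> script contents on the branch under review
    New migrations (present in current but not in recorded) are deliberately ignored.
    """
    return sorted(
        name
        for name, digest in recorded.items()
        if name not in current or checksum(current[name]) != digest
    )
```

An empty result means every previously merged script is unchanged; otherwise the reviewer should ask for a new migration instead of an edit to an old one.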

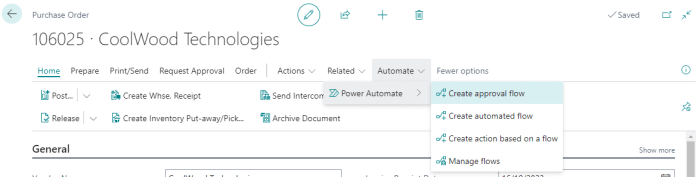

Continuous Integration/Continuous Deployment (CI/CD) for Migrations

Integrating database schema migrations into a Continuous Integration/Continuous Deployment (CI/CD) pipeline automates deployment and keeps database updates consistent across environments. It reduces manual intervention and lowers the risk of errors during deployments. A CI/CD pipeline turns the path from code change to database update into a repeatable workflow.

This automation allows developers to focus on building new features while ensuring that database schema changes are deployed correctly and reliably. The pipeline also allows for easy tracking of migration execution history and rollback capabilities, enhancing the overall management of database schema evolution.

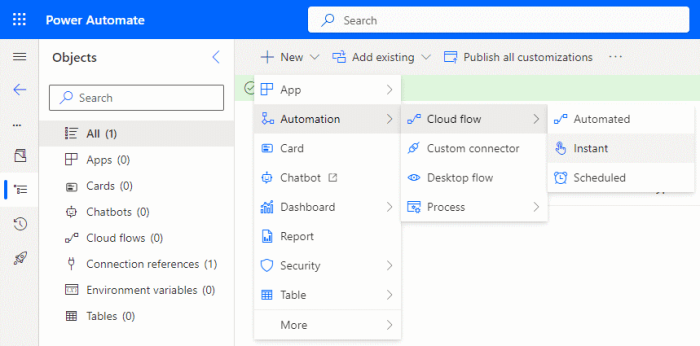

Integrating Migrations into CI/CD Workflows

Integrating database schema migrations into CI/CD workflows involves automating the execution of migration scripts within the pipeline. This ensures that any changes to the database schema are applied consistently and reliably across all environments. The workflow should include the stages of building, testing, and deploying the database changes.

- Building: The build stage compiles the application code and produces the artifacts for deployment, packaging the migration scripts alongside them. It often includes a unit test phase that validates the migration code and checks compatibility with the existing schema.

- Testing: This crucial stage validates the correctness of the database migrations. Comprehensive unit tests, integration tests, and potentially end-to-end tests should verify the integrity of the data and the application’s functionality after the schema changes. These tests are designed to identify any issues arising from the migrations and prevent unexpected problems during production deployment.

- Deploying: The deployment stage involves applying the verified migration scripts to the target database environment. This is typically handled by dedicated deployment tools that interact with the database management system. The deployment process should include rollback mechanisms in case issues arise.
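The testing stage can be as simple as applying migrations to a throwaway database and asserting on the result. The sketch below assumes SQLite (Python's built-in `sqlite3` module) as a stand-in; a real pipeline would usually test against the same database engine as production. The migration list and test name are hypothetical. It seeds data before the migration under test so the assertion covers existing rows, not just the new schema.

```python
import sqlite3
from typing import List, Set

# Hypothetical migration history for a "users" table, oldest first.
MIGRATIONS: List[str] = [
    "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL);",
    "ALTER TABLE users ADD COLUMN email TEXT;",
]


def apply_all(conn: sqlite3.Connection, scripts: List[str]) -> None:
    """Apply migration scripts in order; each script may contain several statements."""
    for sql in scripts:
        conn.executescript(sql)


def column_names(conn: sqlite3.Connection, table: str) -> Set[str]:
    """Return a table's column names using SQLite's PRAGMA table_info (name is field 1)."""
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}


def test_add_email_preserves_existing_rows() -> None:
    conn = sqlite3.connect(":memory:")  # throwaway database, discarded after the test
    try:
        apply_all(conn, MIGRATIONS[:1])  # schema as it was before the migration under test
        conn.execute("INSERT INTO users (name) VALUES ('ada')")  # seed existing data
        apply_all(conn, MIGRATIONS[1:])  # apply the migration under test
        assert column_names(conn, "users") == {"id", "name", "email"}
        # The pre-existing row survives, and the new column is NULL for it.
        assert conn.execute("SELECT name, email FROM users").fetchall() == [("ada", None)]
    finally:
        conn.close()
```

A test runner such as pytest would collect `test_add_email_preserves_existing_rows` automatically; a failing assertion stops the pipeline before the deployment stage.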

Automating Deployment of Database Schema Migrations

Automating the deployment of database schema migrations is vital for reducing human error and enabling faster deployment cycles. This automation is achievable by integrating the migration scripts into the CI/CD pipeline.

- Using Migration Tools: Tools such as Flyway and Liquibase automate migration tasks. They manage migration scripts, apply them in the correct order, and record which ones have already run; rollback support varies by tool and edition. They make the deployment process repeatable.

- Scripting the Process: Custom scripts can manage the deployment process, ensuring the steps run in the correct order. This approach can be tailored to specific needs and integrated with other tools in the pipeline.
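A custom script usually needs two things: a record of which migrations have already run, and a guarantee that a failed migration leaves no partial changes behind. The minimal runner below assumes SQLite via Python's `sqlite3` module, migrations supplied in code as hypothetical `(version, statements)` pairs rather than loaded from files, and a hypothetical `schema_migrations` bookkeeping table. Each migration and its bookkeeping row commit in a single transaction. That guarantee depends on SQLite supporting transactional DDL; some databases, such as MySQL, implicitly commit around most DDL statements, so the same approach would not be atomic there.

```python
import sqlite3
from typing import List, Sequence, Set, Tuple

# Hypothetical in-code migrations: (version, SQL statements). Real runners usually load files.
Migration = Tuple[int, Sequence[str]]


def applied_versions(conn: sqlite3.Connection) -> Set[int]:
    """Create the bookkeeping table if needed and return the versions already applied."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_migrations (version INTEGER PRIMARY KEY)")
    return {row[0] for row in conn.execute("SELECT version FROM schema_migrations")}


def migrate(conn: sqlite3.Connection, migrations: List[Migration]) -> List[int]:
    """Apply pending migrations in ascending version order; return the versions applied now.

    The connection must be opened with isolation_level=None so that the explicit
    BEGIN/COMMIT/ROLLBACK statements below control the transaction.
    """
    done = applied_versions(conn)
    newly_applied: List[int] = []
    for version, statements in sorted(migrations, key=lambda m: m[0]):
        if version in done:
            continue  # already applied in an earlier run
        conn.execute("BEGIN")
        try:
            for statement in statements:
                conn.execute(statement)
            # Record the version in the same transaction as the schema change.
            conn.execute("INSERT INTO schema_migrations (version) VALUES (?)", (version,))
            conn.execute("COMMIT")
        except sqlite3.Error:
            if conn.in_transaction:
                conn.execute("ROLLBACK")  # undo the partial migration, DDL included
            raise
        newly_applied.append(version)
    return newly_applied
```

For example, `migrate(sqlite3.connect("app.db", isolation_level=None), migrations)` applies only the pending entries, and running it a second time with the same list returns an empty list.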

Tracking Migration Execution History

Tracking migration execution history within a CI/CD pipeline provides valuable insights into database schema evolution. This includes logging the execution time, success or failure status, and any associated metadata.

- Logging Mechanisms: Employing logging mechanisms within the migration scripts is essential for capturing details about the execution process. This logging should include timestamps, details of the changes made, and any errors encountered during the migration process. The logs provide valuable data for troubleshooting and auditing.

- Database Tables: A dedicated database table can store migration history details, including the script applied, the timestamp of execution, the status (success or failure), and any associated errors. This table allows for comprehensive audit trails of schema changes.
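Both mechanisms can be combined in a few lines. The sketch below assumes SQLite via Python's `sqlite3` and `logging` modules; the `migration_history` table, its columns, and the `run_and_record` helper are hypothetical. Each attempt is logged and stored with its UTC timestamp, status, and error text. The failure row is written after the rollback, so the record of a failed migration survives even though its schema changes do not.

```python
import logging
import sqlite3
from datetime import datetime, timezone
from typing import Optional, Sequence

log = logging.getLogger("migrations")  # hypothetical logger name

HISTORY_DDL = """
CREATE TABLE IF NOT EXISTS migration_history (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    version     TEXT NOT NULL,
    executed_at TEXT NOT NULL,  -- ISO 8601 timestamp in UTC
    status      TEXT NOT NULL CHECK (status IN ('success', 'failure')),
    error       TEXT            -- NULL when the migration succeeded
)
"""


def record(conn: sqlite3.Connection, version: str, status: str, error: Optional[str] = None) -> None:
    """Append one row to the migration history table."""
    conn.execute(
        "INSERT INTO migration_history (version, executed_at, status, error) VALUES (?, ?, ?, ?)",
        (version, datetime.now(timezone.utc).isoformat(), status, error),
    )


def run_and_record(conn: sqlite3.Connection, version: str, statements: Sequence[str]) -> bool:
    """Run one migration in a transaction, log the outcome, and store it in migration_history.

    Assumes a connection opened with isolation_level=None. Returns True on success.
    """
    conn.execute(HISTORY_DDL)
    conn.execute("BEGIN")
    try:
        for statement in statements:
            conn.execute(statement)
        conn.execute("COMMIT")
    except sqlite3.Error as exc:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        log.error("migration %s failed: %s", version, exc)
        # Written after the rollback, so the failure record itself is kept.
        record(conn, version, "failure", str(exc))
        return False
    log.info("migration %s applied", version)
    record(conn, version, "success")
    return True
```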

Sample CI/CD Pipeline for Database Schema Migration

A sample CI/CD pipeline for database schema migration can be structured as follows:

| Stage | Action |
|---|---|
| Source Code Commit | Developers commit code changes, including migration scripts, to the version control system. |
| Build | The CI server builds the application and packages the migration scripts. Unit tests are executed to validate the scripts. |
| Test | Integration tests are run against a test database to ensure that the migrations don’t break existing functionality. |
| Deployment | The migration scripts are deployed to the staging environment, and a final round of tests is performed. |
| Staging | Database schema changes are verified in the staging environment. |
| Production | If staging verification succeeds, the changes are deployed to the production database. |
| Rollback | Rollback procedures are in place to revert changes if issues arise in production. |
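The gating logic in this table, where each stage runs only if the previous one succeeded and a production failure triggers the rollback procedure, can be sketched as a small Python function. The stage names and the `rollback` callback are hypothetical placeholders; in practice these stages are normally defined in the CI system's own configuration rather than in application code.

```python
from typing import Callable, List, Tuple

# (stage name, action returning True on success); both are hypothetical placeholders.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], rollback: Callable[[], None]) -> List[str]:
    """Run stages in order, stop at the first failure, and roll back if production failed.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            if name == "production":
                rollback()  # revert the production schema change
            break  # later stages never run after a failure
        completed.append(name)
    return completed
```

A failure in the test stage therefore stops the pipeline before anything reaches staging or production, and the rollback callback runs only when the production deployment itself fails.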

Ending Remarks

In conclusion, automating database schema migrations is paramount for modern software development. This guide has provided a comprehensive overview of the process, from foundational concepts to advanced techniques like CI/CD integration. By understanding the various migration strategies, tools, and security considerations, developers can build more robust, maintainable, and scalable applications. The key takeaway is that automating this process significantly reduces manual effort, minimizes errors, and ultimately enhances the overall development lifecycle.

FAQ Summary

What are the common challenges associated with manual database schema migrations?

Manual schema migrations can lead to inconsistencies, errors, and wasted development time. Tracking changes becomes difficult, potentially introducing bugs or data corruption. The risk of human error increases significantly with more complex migrations, and manual processes lack the inherent auditability and version control of automated solutions.

How do I choose the right migration tool?

Selecting the right tool depends on your specific database system, project needs, and team expertise. Consider factors like ease of use, features (e.g., rollback, versioning), and integration with your development environment. Thorough research and evaluation of different tools are crucial before making a decision.

What are some best practices for designing effective migration scripts?

Designing effective migration scripts involves meticulous planning and clear documentation. Use descriptive names, follow a structured approach (e.g., incremental changes), and include comprehensive comments. Thorough testing and validation are critical to ensuring the accuracy and stability of your scripts.
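As an illustration of these practices, here is a hypothetical migration that makes one small change, carries a descriptive name and a comment explaining why it exists, and pairs the change with a way to reverse it. The `upgrade`/`downgrade` split is loosely modeled on the pattern Alembic uses, but these functions take a plain `sqlite3` connection and are not any tool's actual API. `IF NOT EXISTS` and `IF EXISTS` make both directions safe to re-run.

```python
import sqlite3

# Migration: V3__add_users_email_index (hypothetical name following a descriptive convention)
# Why: speed up lookups of users by email. One small, reversible change per migration.

UP = "CREATE INDEX IF NOT EXISTS idx_users_email ON users (email)"
DOWN = "DROP INDEX IF EXISTS idx_users_email"


def upgrade(conn: sqlite3.Connection) -> None:
    """Apply the change: add the index on users.email."""
    conn.execute(UP)


def downgrade(conn: sqlite3.Connection) -> None:
    """Reverse the change: remove the index again."""
    conn.execute(DOWN)
```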

What is the role of version control in database schema migrations?

Version control systems are essential for tracking changes to your database schema over time. They allow you to revert to previous versions if necessary and facilitate collaboration among developers. Tools like Git provide an essential framework for managing schema changes in a structured and auditable manner.