The adoption of serverless computing has revolutionized application development, offering unparalleled scalability and agility. However, the “pay-per-use” model, while advantageous, introduces a critical need for vigilant cost management. Understanding the intricacies of serverless billing, from function duration to request counts, is paramount to avoiding financial surprises.

This guide provides a scientific and analytical framework for navigating the complexities of serverless cost optimization. It dissects the key components of serverless billing, identifies potential cost drivers, and offers actionable strategies to ensure efficient resource utilization. Through a detailed examination of cost management tools, coding best practices, and service selection, this document empowers developers and organizations to harness the full potential of serverless computing without incurring unnecessary expenses.

Understanding Serverless Billing Models

Serverless computing offers a compelling value proposition, but its “pay-per-use” billing model requires careful understanding to avoid unexpected costs. The inherent flexibility of serverless, where resources are provisioned and scaled automatically, necessitates a granular understanding of how usage translates to charges. This section dissects the core billing concepts and compares the pricing structures across major cloud providers, enabling informed cost management strategies.

Core Serverless Billing Concepts

The foundation of serverless billing rests on a few key metrics. Understanding these is critical to estimating and controlling your serverless expenses.

Pay-per-use

This is the cornerstone of serverless economics. You are charged only for the resources your code consumes. Unlike traditional infrastructure, where you pay for reserved capacity regardless of utilization, serverless providers bill you only for actual execution time, memory usage, and the number of requests.

Function Duration

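This represents the time your code runs, measured from the start of a function invocation until it returns or terminates. Billing granularity differs by provider: AWS Lambda, for example, bills duration in 1 ms increments, while Azure Functions on the Consumption plan applies a minimum of 100 ms per execution. Most providers also include a monthly free allowance of compute time. The duration directly impacts cost, so optimizing code for efficiency and minimizing execution time is crucial.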
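Rounding rules matter most for very short functions. The following sketch, a hypothetical helper rather than any provider’s API, computes billed duration under two assumed rules: 1 ms increments with no minimum (the AWS Lambda model) and 1 ms increments with a 100 ms floor (the Azure Functions Consumption plan model).

```python
import math


def billed_duration_ms(actual_ms: float, increment_ms: int = 1, minimum_ms: int = 0) -> int:
    """Round an execution time up to the billing increment, then apply any floor.

    Hypothetical helper for illustration; check each provider's current rules.
    """
    rounded = math.ceil(actual_ms / increment_ms) * increment_ms
    return max(minimum_ms, rounded)


if __name__ == "__main__":
    actual = 37.2  # measured duration of one invocation in ms (example value)
    print("1 ms increments, no minimum:", billed_duration_ms(actual))
    print("1 ms increments, 100 ms minimum:", billed_duration_ms(actual, 1, 100))
```

For a 37.2 ms function, the first rule bills 38 ms and the second bills 100 ms, more than 2.5 times the actual run time, so shaving milliseconds off such a function saves nothing under a floor.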

Request Count

This refers to the number of times a serverless function is invoked. Each invocation, whether successful or resulting in an error, typically incurs a charge. High request volumes can quickly escalate costs, so monitoring request patterns and implementing throttling mechanisms are essential for cost control.

Memory Allocation

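Serverless platforms allow you to specify the amount of memory allocated to a function. More memory raises the price of each unit of execution time, but it can also improve performance: on AWS Lambda, for example, CPU power is allocated in proportion to configured memory, so a larger allocation may shorten a CPU-bound function enough to offset some or all of the higher rate.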
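Duration charges are commonly expressed in GB-seconds: memory in GB multiplied by billed duration in seconds. The sketch below uses the AWS Lambda duration rate quoted in the comparison table; the function names and the sample timings are illustrative assumptions, not measurements. It shows why raising memory is not automatically more expensive: if doubling memory halves the duration, the duration charge stays the same.

```python
# Minimal sketch: the duration charge for one invocation, in GB-seconds.
# Assumption: the AWS Lambda rate from the comparison table
# (USD 0.0000166667 per GB-second); per-request fees are ignored here.
PRICE_PER_GB_SECOND = 0.0000166667


def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Memory in GB multiplied by duration in seconds for one invocation."""
    return (memory_mb / 1024) * (duration_ms / 1000)


def duration_cost(memory_mb: int, duration_ms: float) -> float:
    """Duration charge for one invocation, in USD."""
    return gb_seconds(memory_mb, duration_ms) * PRICE_PER_GB_SECOND


if __name__ == "__main__":
    # Hypothetical timings: more memory (and, on Lambda, more CPU) shortens the run.
    for memory_mb, duration_ms in [(512, 800), (1024, 400), (2048, 350)]:
        print(f"{memory_mb:>5} MB, {duration_ms:>4} ms -> "
              f"{gb_seconds(memory_mb, duration_ms):.2f} GB-s, "
              f"${duration_cost(memory_mb, duration_ms):.10f}")
```

With these assumed timings, 512 MB and 1,024 MB both consume 0.4 GB-seconds per invocation and cost the same, while 2,048 MB consumes 0.7 GB-seconds because the extra memory no longer buys a proportional speed-up.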

Storage

Serverless applications often interact with storage services like object storage or databases. The cost of these services, including storage volume, data transfer, and request costs, is separate from the function execution costs but contributes significantly to the overall bill.

Comparison of Serverless Billing Models Across Cloud Providers

The pricing structures for serverless functions vary across cloud providers. The following table provides a comparative overview of the core billing components for AWS Lambda, Azure Functions, and Google Cloud Functions, as of October 26, 2023. Note that pricing is subject to change; always refer to the provider’s official documentation for the most up-to-date information.

| Feature | AWS Lambda | Azure Functions (Consumption plan) | Google Cloud Functions (1st gen) |
|---|---|---|---|
| Invocation Pricing | First 1 million requests per month are free; after that, $0.0000002 per request ($0.20 per million). | First 1 million executions per month are free; after that, $0.20 per million executions. | First 2 million invocations per month are free; after that, $0.0000004 per invocation ($0.40 per million). |
| Duration Pricing | $0.0000166667 per GB-second. | $0.000016 per GB-second. Memory is measured from observed usage, rounded up to the nearest 128 MB, with a minimum of 100 ms and 128 MB per execution. | $0.0000025 per GB-second of memory plus $0.0000100 per GHz-second of CPU (Tier 1 pricing). |
| Memory Allocation | From 128 MB to 10 GB, in 1 MB increments. | Up to 1.5 GB per instance; measured from actual usage rather than configured per function. | From 128 MB to 8 GB, in fixed tiers. |
| Free Tier (per month) | 1 million requests and 400,000 GB-seconds of compute time. | 1 million executions and 400,000 GB-seconds of resource consumption. | 2 million invocations, 400,000 GB-seconds, and 200,000 GHz-seconds of compute time, plus 5 GB of outbound data. |
| Data Transfer (Outbound) | Varies by region; a monthly free allowance applies, then tiered pricing. | A monthly free allowance applies; subsequent GBs are priced. | First 5 GB per month is free; subsequent GBs are priced. |
| Concurrency | Configurable, with default limits that can be increased. | Configurable, with limits that can be increased. | Configurable, with limits that can be increased. |

The table illustrates meaningful differences in pricing. All three free tiers cover both invocations and compute time, but the allowances differ: Google Cloud Functions includes 2 million free invocations versus 1 million for AWS Lambda and Azure Functions, yet charges twice as much per invocation beyond the free tier and bills compute in two dimensions (memory and CPU). Choosing the most cost-effective provider depends on your application’s specific usage patterns, including the number of requests, execution time, and memory requirements.
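To see how the request and duration components combine, the sketch below estimates a monthly AWS Lambda bill from the figures in the table. It is a rough model under stated assumptions: `monthly_lambda_cost` is a hypothetical helper, the free tier is treated as fully available to this one workload (in reality it is shared across the account), and storage, data transfer, and logging charges are ignored.

```python
# Rough monthly estimate for AWS Lambda using the table's figures
# (as of October 26, 2023; verify against current pricing before relying on it).
# Assumptions: the account-wide free tier is fully available to this workload,
# and storage, data transfer, and logging charges are ignored.
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000
PRICE_PER_REQUEST = 0.0000002       # USD 0.20 per million requests
PRICE_PER_GB_SECOND = 0.0000166667  # USD per GB-second


def monthly_lambda_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> dict:
    """Return GB-seconds plus request, compute, and total charges in USD."""
    gb_seconds = requests * (memory_mb / 1024) * (avg_duration_ms / 1000)
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    request_cost = billable_requests * PRICE_PER_REQUEST
    compute_cost = billable_gb_seconds * PRICE_PER_GB_SECOND
    return {
        "gb_seconds": gb_seconds,
        "request_cost": round(request_cost, 2),
        "compute_cost": round(compute_cost, 2),
        "total": round(request_cost + compute_cost, 2),
    }


if __name__ == "__main__":
    # 10 million requests per month, 200 ms average, 512 MB allocated.
    print(monthly_lambda_cost(10_000_000, 200, 512))
```

For 10 million requests at 200 ms and 512 MB, the workload consumes 1,000,000 GB-seconds; after the free tier, that is about $1.80 in request charges and $10.00 in compute charges.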

Factors Influencing Serverless Costs Beyond Simple Execution Time

While function duration and request count are primary cost drivers, several other factors contribute to serverless expenses. These factors can significantly impact the overall cost of running serverless applications, and neglecting them can lead to unexpected bills.

Cold Starts

The time it takes for a serverless function to initialize before processing a request (a “cold start”) adds latency and, depending on the platform and runtime, may also add billed execution time. Frequent cold starts can therefore increase costs.

Network Costs

Data transfer in and out of the function, particularly to and from external services or across regions, incurs network charges. These costs can become significant, especially with high data volumes.

Logging and Monitoring

Implementing comprehensive logging and monitoring is crucial for debugging and performance optimization. However, logging services like CloudWatch (AWS), Application Insights (Azure), or Cloud Logging (GCP) have their own associated costs, typically based on the volume of log data ingested and stored and, for some services, the queries run against it.

Database Interactions

Serverless functions frequently interact with databases. Database costs are separate from function execution costs but are a significant part of the overall bill.

Dependencies and Package Size

Large function packages, including numerous dependencies, can increase cold start times and execution duration, thereby impacting costs. Optimizing package size is essential.

Provisioned Concurrency

Provisioned concurrency, available on some platforms, can reduce cold start times and improve performance, but it also incurs a cost for the provisioned capacity, even when the function is not actively processing requests.

Region Selection

Pricing can vary by region. Choosing the region closest to your users can minimize latency and potentially reduce data transfer costs.

Consider a hypothetical e-commerce application. A serverless function handles product searches. Initially, the function’s package size is large, resulting in slow cold starts and high execution times. The development team optimizes the code, reducing the package size and cutting execution time by 30%.

They also implement a caching mechanism to reduce database queries. The combined effect is a significant reduction in execution time and database interaction costs, leading to a 40% decrease in the overall monthly serverless bill. This example highlights the importance of considering all factors beyond just execution time.

Identifying Potential Cost Drivers

Understanding the mechanisms behind serverless cost accumulation is critical to preventing unexpected bills. Serverless architectures, while offering scalability and operational efficiency, introduce complexities in cost management. Without diligent monitoring and proactive optimization, seemingly minor inefficiencies can rapidly escalate into significant financial burdens. This section will delve into the key cost drivers in serverless environments, equipping you with the knowledge to identify and mitigate potential financial risks.

Inefficient Code Execution

The efficiency of your code directly impacts the duration and resources consumed during function invocations, translating into costs. Inefficient code leads to longer execution times and potentially increased memory usage, both of which are directly tied to the pricing models of most serverless providers. Optimizing code is therefore a crucial step in cost control.

- Prolonged Execution Times: Poorly optimized code, such as inefficient algorithms or excessive I/O operations, can significantly extend the execution time of a function. Serverless providers typically charge based on execution time, making prolonged durations a direct cost multiplier. For example, a function that should complete in 100 milliseconds but takes 500 milliseconds due to inefficient database queries will incur five times the duration charge per invocation (the per-request fee is unchanged).

- Memory Over-Allocation: Requesting more memory than a function actually requires can also inflate costs. While more memory can sometimes improve performance (especially for CPU-bound tasks), it also increases the per-invocation price. The optimal memory allocation balances performance needs with cost considerations. Monitoring memory usage metrics is essential to avoid over-provisioning.

- Unnecessary Operations: Redundant or inefficient code paths, such as performing unnecessary calculations or fetching data that is not used, add to execution time and cost. Code reviews and profiling tools can help identify and eliminate these inefficiencies.

- Cold Starts: While not directly related to code efficiency in terms of execution within a single invocation, cold starts can contribute to higher costs, especially for functions with infrequent invocations. Cold starts involve the initial loading of the function’s execution environment, adding latency and potentially increasing the total execution time and resources needed. Techniques like pre-warming or provisioned concurrency mitigate cold starts, but weigh their benefit against their own cost, since provisioned concurrency is billed even when idle.

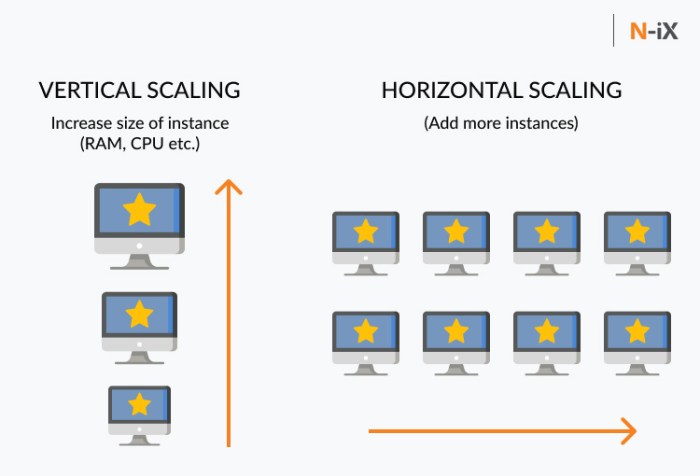

Over-Provisioning and Resource Consumption

Serverless platforms automatically scale resources based on demand. However, misconfigurations or unforeseen traffic patterns can lead to over-provisioning, where resources are allocated beyond what is actually needed, thereby increasing costs. Effective monitoring and capacity planning are vital for managing resource consumption.

- Excessive Concurrency: Serverless functions can handle multiple concurrent invocations. Setting concurrency limits too high, or leaving them unbounded, lets a traffic spike, retry storm, or bug fan out into many simultaneous executions, each of which is billed and which together can overload downstream services such as databases. Monitoring concurrency metrics and adjusting limits based on observed traffic patterns is crucial.

- Unoptimized Database Interactions: Serverless functions often interact with databases. Inefficient database queries, such as those lacking indexes or performing full table scans, can consume excessive database resources (e.g., CPU, I/O), leading to higher database costs, which are often a significant component of the overall bill.

- Unnecessary Data Transfer: Data transfer costs, particularly for egress (data leaving the cloud provider’s network), can be substantial. Functions that transfer large amounts of data, such as serving large files or processing high-volume data streams, will incur higher data transfer charges. Optimizing data transfer by compressing data, caching frequently accessed content, and choosing the appropriate data storage and retrieval methods is critical.

- Automated Scaling Misconfigurations: Auto-scaling configurations can be a double-edged sword. Incorrectly configured scaling policies can lead to over-provisioning during periods of low traffic or inadequate resource allocation during peak loads. Careful tuning of scaling parameters is essential.
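Rather than guessing a concurrency limit, you can estimate what a workload needs from two metrics you already monitor: request rate and average duration. This is Little’s law, the same relationship AWS uses in its Lambda concurrency documentation. The helper names and the 1.5x headroom factor below are assumptions; on AWS Lambda, the resulting value could be applied as the function’s reserved concurrency.

```python
import math


def estimated_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Little's law: concurrent executions ~= arrival rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def suggested_concurrency_limit(peak_rps: float, avg_duration_ms: float,
                                headroom: float = 1.5) -> int:
    """Hypothetical helper: observed peak concurrency plus a safety margin.

    The 1.5x headroom is an arbitrary illustrative default, not a recommendation.
    """
    return math.ceil(estimated_concurrency(peak_rps, avg_duration_ms) * headroom)


if __name__ == "__main__":
    # Example: a peak of 100 requests/s, each taking 250 ms on average.
    print(estimated_concurrency(100, 250))
    print(suggested_concurrency_limit(100, 250))
```

Here the workload needs about 25 concurrent executions at peak, so a limit of 38 leaves room for bursts while still capping runaway fan-out.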

Runaway Processes and Infinite Loops

Uncontrolled processes, such as infinite loops or resource leaks, can quickly exhaust resources and generate significant costs. These issues are often difficult to detect without proper monitoring and alerting.

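- Infinite Loops: Logic errors that result in infinite loops within a function keep it running until its configured timeout, so every affected invocation is billed for the maximum duration; when retries or recursive triggers are involved, the number of such invocations can multiply quickly. Robust testing and error handling are crucial to prevent these situations.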

- Resource Leaks: Resource leaks, such as failing to close database connections or release memory, can lead to the accumulation of unused resources, driving up costs over time. Regular code reviews and the use of memory profiling tools can help identify and address resource leaks.

- Uncontrolled Background Processes: Functions that spawn background processes without proper control mechanisms can lead to excessive resource consumption. Monitoring and limiting the resources allocated to these background processes are essential.

- Trigger-Related Issues: Issues with event triggers, such as misconfigured event sources or unintended recursive invocations, can lead to a function being invoked repeatedly, resulting in high costs. Monitoring trigger activity and implementing safeguards to prevent unintended invocations is crucial.
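Two cheap safeguards follow from the list above: bound every loop that depends on external data, and carry a hop counter through events so that a function which re-emits events cannot trigger itself forever. The sketch below is a hypothetical illustration; the `depth` field, the limits, and the paginated `fetch_page` callable are assumptions, not any platform’s built-in mechanism.

```python
from typing import Callable, List, Optional, Tuple

# Illustrative safety limits (assumptions; tune for your workload).
MAX_PAGES = 1_000
MAX_DEPTH = 3

Page = Tuple[List[int], Optional[str]]  # (items, next_token)


class RunawayGuardError(RuntimeError):
    """Raised when a safety limit trips, so the invocation fails fast."""


def fetch_all(fetch_page: Callable[[Optional[str]], Page],
              max_pages: int = MAX_PAGES) -> List[int]:
    """Follow a paginated source with a hard page cap and repeat detection.

    `fetch_page` stands in for a hypothetical paginated API call.
    """
    items: List[int] = []
    token: Optional[str] = None
    seen = set()
    for _ in range(max_pages):
        page, token = fetch_page(token)
        items.extend(page)
        if token is None:
            return items
        if token in seen:  # the source keeps returning the same cursor
            raise RunawayGuardError(f"pagination token {token!r} repeated")
        seen.add(token)
    raise RunawayGuardError("page cap reached")


def next_event(event: dict, payload: dict) -> Optional[dict]:
    """Build a follow-up event carrying a hypothetical `depth` counter.

    Returns None once MAX_DEPTH is exceeded, breaking a recursive trigger chain.
    """
    depth = event.get("depth", 0) + 1
    if depth > MAX_DEPTH:
        return None
    return {**payload, "depth": depth}
```

A tripped guard ends the invocation with an error that monitoring can alert on, instead of silently billing until the timeout.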

Real-World Examples of Unexpected Serverless Bills

Examining real-world examples can provide valuable insights into the potential pitfalls of serverless cost management.

- Example 1: Inefficient Database Queries: A company experienced a significant increase in its serverless bill after deploying a new feature. Investigation revealed that a function responsible for fetching data from a database was using inefficient queries lacking proper indexes. This resulted in longer execution times and increased database resource consumption, driving up the cost. The issue was resolved by adding indexes and optimizing the queries.

- Example 2: Uncontrolled Concurrency: A mobile application experienced a sudden surge in user traffic during a promotional event. The serverless functions handling user requests were configured with excessively high concurrency limits. This resulted in over-provisioning and significantly increased the bill, even though the application could have handled the traffic with lower concurrency settings.

- Example 3: Infinite Loop in Processing: A data processing pipeline encountered a bug that caused a function to enter an infinite loop. This function continuously processed the same data, consuming resources until the service was throttled, and the resulting bill was considerably higher than expected. The root cause was a data validation error that wasn’t properly handled.

- Example 4: Unoptimized Data Transfer: A media company serving video content from a serverless platform saw a sudden spike in its data transfer costs. This was traced to the fact that large video files were being served uncompressed, leading to higher egress charges. Implementing video compression reduced the data transfer volume and the associated costs.

Monitoring and Tracking Serverless Resource Usage

Proactive monitoring is essential for identifying and addressing cost anomalies in serverless environments. Establishing a robust monitoring strategy involves collecting and analyzing relevant metrics.

- Function Execution Metrics: Monitor key metrics like execution time, memory usage, and the number of invocations. Analyze these metrics over time to identify trends and anomalies.

- Concurrency Metrics: Track the number of concurrent function invocations. This metric helps identify over-provisioning or resource bottlenecks.

- Error Rates: Monitor error rates (e.g., 4xx and 5xx errors) to detect performance issues and potential resource consumption problems.

- Database Resource Usage: Monitor database query times, read/write operations, and storage consumption to identify inefficiencies.

- Data Transfer Metrics: Track data transfer volume (ingress and egress) to identify and optimize data transfer costs.

- Cost Dashboards and Alerts: Create custom dashboards that visualize key cost metrics. Set up alerts to notify you of unusual spikes in cost or resource usage. For example, set an alert if the cost of a function exceeds a predefined threshold.

- Log Analysis: Analyze function logs to identify performance bottlenecks, errors, and other issues that may be contributing to higher costs. Utilize log aggregation and analysis tools to search and analyze logs efficiently.

- Cost Allocation Tags: Use cost allocation tags to track the costs associated with different functions, services, or projects. This helps you pinpoint which components are driving the most costs.
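A simple statistical baseline can back the cost alerts described above. This sketch flags a day whose cost is well above recent history; the thresholds are assumptions, and the daily figures would come from your provider’s billing export or cost API, which is not shown.

```python
from statistics import mean, stdev
from typing import Sequence


def is_cost_anomaly(history: Sequence[float], today: float, k: float = 3.0,
                    min_ratio: float = 1.2, min_points: int = 7) -> bool:
    """Flag today's cost if it is far above the recent baseline.

    The cost must exceed both mean + k * stdev and min_ratio * mean; the
    second condition keeps a very flat history from triggering on tiny changes.
    All defaults are illustrative assumptions.
    """
    if len(history) < min_points:
        return False  # not enough data for a meaningful baseline
    baseline = mean(history)
    threshold = max(baseline + k * stdev(history), baseline * min_ratio)
    return today > threshold


if __name__ == "__main__":
    daily_costs = [10.0, 11.0, 9.0, 10.0, 10.0, 11.0, 9.0]  # hypothetical USD/day
    print(is_cost_anomaly(daily_costs, 12.0))
    print(is_cost_anomaly(daily_costs, 25.0))
```

With this history the threshold is roughly $12.45, so a $12 day passes quietly while a $25 day is flagged.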

Implementing Cost-Effective Coding Practices

Optimizing code for serverless environments is crucial for controlling costs. Serverless billing models are directly tied to resource consumption; therefore, the efficiency of the code directly impacts the expenses incurred. Employing strategic coding practices can significantly reduce function execution time, the amount of memory utilized, and the number of function invocations, thereby minimizing overall costs. This section explores specific strategies and techniques to achieve cost-effective serverless applications.

Code Optimization Strategies to Minimize Function Execution Time and Resource Consumption

Reducing execution time and resource consumption is paramount in serverless environments, where billing is often based on these metrics. Several optimization strategies can be employed to achieve this goal, leading to significant cost savings.

- Code Profiling and Performance Analysis: Employing profiling tools specific to the programming language and serverless platform is essential. These tools help identify performance bottlenecks within the code, such as slow database queries, inefficient algorithms, or excessive memory allocation. For example, in Python, tools like `cProfile` can be used to analyze function execution times and identify areas for improvement. In Node.js, `perf_hooks` can be used for similar analysis.

Analyzing the profiling results allows developers to pinpoint areas where optimization efforts should be concentrated.

- Algorithm Optimization: Choosing the most efficient algorithms for a given task is crucial. The time complexity of an algorithm directly impacts execution time. For instance, using a more efficient sorting algorithm (e.g., Merge Sort or QuickSort, which have an average time complexity of O(n log n)) instead of a less efficient one (e.g., Bubble Sort, which has a time complexity of O(n^2)) can lead to substantial performance improvements, especially when dealing with large datasets.

- Resource Allocation Management: Serverless platforms allow configuring the amount of memory allocated to a function. Allocating the appropriate amount of memory is critical; insufficient memory can lead to performance degradation or out-of-memory failures, while excessive memory wastes resources. Monitoring function performance and adjusting memory allocation accordingly is a continuous process. For example, if a function regularly approaches or exceeds its memory limit, increasing the allocated memory can prevent failures and improve performance, but it also increases costs.

Conversely, if a function uses only a fraction of the allocated memory, reducing the allocation can lead to cost savings.

- Code Minimization and Bundling: Reducing the size of the deployed code package can decrease cold start times, which can significantly impact latency and, consequently, costs. Techniques such as code minification (removing unnecessary characters and whitespace), dead code elimination (removing unused code), and bundling (combining multiple files into a single file) are beneficial. Tools such as Webpack (for JavaScript/Node.js) or `pyminify` (for Python) can be used to optimize code packages.

- Asynchronous Operations: Leveraging asynchronous operations can significantly improve performance, especially when dealing with I/O-bound tasks such as database interactions or API calls. Instead of waiting for each operation to complete sequentially, asynchronous programming allows functions to continue executing while waiting for these operations to finish. This can lead to a reduction in overall execution time. For instance, in Node.js, using `async/await` or Promises can make asynchronous code easier to write and manage.
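For I/O-bound work, the saving comes from overlapping waits. The Python sketch below uses `asyncio.sleep` to simulate three independent calls (the call names and latencies are hypothetical) and compares awaiting them one by one with `asyncio.gather`; in Node.js, `Promise.all` plays the same role.

```python
import asyncio
import time
from typing import List, Tuple

Call = Tuple[str, float]  # (name, simulated latency in seconds)


async def fake_io_call(name: str, delay_s: float) -> str:
    """Stand-in for a network or database call (hypothetical)."""
    await asyncio.sleep(delay_s)
    return name


async def run_sequentially(calls: List[Call]) -> List[str]:
    # Each call starts only after the previous one finishes.
    return [await fake_io_call(name, delay) for name, delay in calls]


async def run_concurrently(calls: List[Call]) -> List[str]:
    # All calls are in flight at once; gather preserves the input order.
    return list(await asyncio.gather(*(fake_io_call(n, d) for n, d in calls)))


def timed(runner, calls: List[Call]) -> Tuple[List[str], float]:
    """Run an async runner to completion and measure wall-clock time."""
    start = time.perf_counter()
    result = asyncio.run(runner(calls))
    return result, time.perf_counter() - start


if __name__ == "__main__":
    calls = [("users", 0.1), ("orders", 0.1), ("inventory", 0.1)]
    for runner in (run_sequentially, run_concurrently):
        names, elapsed = timed(runner, calls)
        print(f"{runner.__name__}: {names} in {elapsed:.2f} s")
```

With three 100 ms calls, the sequential version runs for roughly 0.3 s and the concurrent version for roughly 0.1 s, and billed duration shrinks accordingly.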

Using Caching and Other Techniques to Reduce the Number of Function Invocations

Reducing the number of function invocations is a direct way to minimize serverless costs, as each invocation typically incurs a charge. Implementing caching and other optimization techniques helps achieve this goal by reusing results and avoiding unnecessary computations.

- Caching Strategy: Caching involves storing the results of function executions or frequently accessed data to avoid recomputing them or retrieving them from external sources. Caching can be implemented at different levels, including:

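- In-memory Caching: Storing data in the function’s memory. Data held in global (module-level) scope survives across warm invocations that reuse the same execution environment, but it is lost on a cold start and is not shared between concurrent instances. This is suitable for small, frequently read data that can tolerate being slightly stale.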

- Distributed Caching: Using a distributed caching service (e.g., Redis, Memcached) to store data accessible across multiple function invocations. This is suitable for caching data that is accessed by multiple functions or across different invocations.

- Edge Caching: Caching content at the edge of a content delivery network (CDN) to reduce latency and function invocations.

- Event-Driven Architecture: Implementing an event-driven architecture can reduce the need for frequent function invocations. Instead of continuously polling for updates, functions can be triggered by specific events. This approach can minimize unnecessary invocations. For example, a function that processes customer orders can be triggered only when a new order event is received, rather than running periodically to check for new orders.

- Data Transformation and Pre-processing: Performing data transformation and pre-processing steps outside of the function, if possible, can reduce the amount of processing required within the function itself. This approach reduces execution time and resource consumption. For example, pre-calculating data aggregates or transforming data into a format suitable for the function’s needs can reduce the function’s workload.

- Batch Processing: Grouping multiple requests into a single function invocation (batch processing) can reduce the number of invocations and associated overhead. For instance, instead of invoking a function to process each individual record in a database, multiple records can be processed in a single batch.

- Rate Limiting: Implementing rate limiting can prevent excessive function invocations, especially when dealing with external APIs or services that have usage limits. This can be achieved by tracking the number of requests made within a specific time window and limiting the number of requests allowed.
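An in-memory cache with a time-to-live (TTL) is the simplest of these techniques to adopt. The sketch below keeps entries in module-level state; the cache key, TTL, and loader are hypothetical, and for data that must be shared across instances a distributed cache such as Redis is the better fit.

```python
import time
from typing import Any, Callable, Dict, Tuple

# Module-level state: on platforms that reuse execution environments (such as
# AWS Lambda), this survives between warm invocations of the same instance. It
# starts empty after every cold start and is not shared between instances.
_CACHE: Dict[str, Tuple[float, Any]] = {}


def cached(key: str, ttl_s: float, loader: Callable[[], Any],
           now: Callable[[], float] = time.monotonic) -> Any:
    """Return a cached value, calling `loader` only when the entry is missing or stale."""
    current = now()
    entry = _CACHE.get(key)
    if entry is not None and current - entry[0] < ttl_s:
        return entry[1]
    value = loader()
    _CACHE[key] = (current, value)
    return value
```

In a handler, a call such as `cached("price-list", 300, load_price_list)`, where `load_price_list` is a hypothetical database query, would reach the database at most once every five minutes per warm instance. The injectable `now` parameter exists only to make the expiry logic easy to test.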

Writing Efficient Serverless Code in Different Programming Languages (Python, Node.js, etc.)

Efficient serverless code varies slightly depending on the programming language used. Each language has its own best practices and optimization techniques. The following sections provide language-specific guidance.

- Python:

- Use optimized libraries: Utilize Python libraries optimized for performance, such as `NumPy` for numerical computations, `Pandas` for data manipulation, and `psycopg2` for PostgreSQL database interaction. These libraries are often written in C or other compiled languages and offer significant performance improvements over pure-Python implementations.

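- Optimize imports: Import only the modules you need, and consider deferring heavy imports to the code paths that actually use them. This can shorten initialization and therefore cold start times. Note that narrowing an import statement does not shrink the deployment package; the package only gets smaller when unused dependencies are removed.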

- Use context managers: Employ context managers (using the `with` statement) for resource management, such as file handling or database connections. This ensures that resources are properly released, even if errors occur, preventing resource leaks.

- Leverage asynchronous programming (asyncio): Use the `asyncio` library for asynchronous I/O operations to avoid blocking function execution, particularly for network requests or database interactions.

- Minimize dependencies: Reduce the number of external dependencies to keep the deployment package size small. Regularly review dependencies and remove any that are no longer required.

- Node.js:

- Use asynchronous programming: Node.js is inherently asynchronous, so use `async/await` or Promises for all I/O-bound operations to prevent blocking the event loop. This maximizes concurrency and performance.

- Optimize event loop usage: Avoid long-running synchronous operations that can block the event loop. If CPU-intensive tasks are necessary, consider offloading them to worker threads or child processes.

- Use efficient data structures: Choose appropriate data structures for specific tasks. For example, using a `Map` for key-value lookups can be more efficient than using an object with a large number of properties.

- Optimize memory usage: Avoid creating unnecessary objects and references. Use the garbage collector efficiently by releasing references to objects that are no longer needed.

- Use native modules: When performance is critical, consider using native modules written in C or C++ for computationally intensive tasks. These modules can offer significant performance advantages.

- Other Languages (e.g., Java, Go):

- Java:

- Use efficient data structures and algorithms: Java provides robust data structures and algorithms in its standard library. Choose the most efficient ones for the task at hand.

- Optimize object creation: Avoid creating unnecessary objects, as object creation can be a performance bottleneck. Consider using object pooling for frequently created objects.

- Use the right JVM settings: Configure the Java Virtual Machine (JVM) appropriately for the serverless environment. Adjust memory settings, garbage collection settings, and other parameters to optimize performance.

- Go:

- Optimize goroutines: Goroutines are lightweight, concurrent functions. Use them effectively to parallelize tasks and improve performance.

- Use channels for communication: Channels provide a safe and efficient way for goroutines to communicate and synchronize.

- Minimize allocations: Avoid unnecessary memory allocations, as allocations can impact performance. Use techniques like pre-allocating memory and reusing objects.

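The Python advice above on initialization and context managers often comes together in how a handler is structured. In this sketch, expensive setup runs at module scope so that platforms which reuse execution environments, such as AWS Lambda, pay for it once per cold start rather than on every invocation. An in-memory `sqlite3` database stands in for a real database client (an assumption to keep the example self-contained), and the `handler(event, context)` signature follows the AWS Lambda Python convention.

```python
import sqlite3

# Counts how often initialization runs; it should be once per environment.
INIT_COUNT = 0


def _create_connection() -> sqlite3.Connection:
    """Expensive setup, executed at import time (i.e. during a cold start)."""
    global INIT_COUNT
    INIT_COUNT += 1
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
    return conn


_CONN = _create_connection()


def handler(event: dict, context: object = None) -> dict:
    """Store one item per invocation, reusing the module-level connection."""
    # `with conn:` commits on success and rolls back on an exception; it does
    # not close the connection, which is intentionally reused across invocations.
    with _CONN:
        _CONN.execute("INSERT INTO items (name) VALUES (?)", (event["name"],))
    (count,) = _CONN.execute("SELECT COUNT(*) FROM items").fetchone()
    return {"stored": count}
```

Two consecutive calls to `handler` share one connection, and `INIT_COUNT` stays at 1. A real database client would be reused the same way, with the same caveat that state disappears on the next cold start.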

Leveraging Monitoring and Alerting

Effective monitoring and alerting are critical for managing serverless costs. They provide real-time visibility into resource utilization, identify potential cost anomalies, and enable proactive intervention to prevent unexpected bills. Implementing these practices allows for a data-driven approach to cost optimization, ensuring that resources are used efficiently and aligned with business needs.

Setting Up Comprehensive Monitoring Dashboards for Serverless Applications

Comprehensive monitoring dashboards are essential for visualizing the performance and cost characteristics of serverless applications. These dashboards aggregate data from various sources, providing a centralized view of application behavior.

To create effective monitoring dashboards, consider the following:

- Choosing the Right Monitoring Tools: Select tools that integrate seamlessly with your serverless platform. Popular options include:

- CloudWatch (AWS): Offers comprehensive monitoring, logging, and alerting capabilities for AWS services.

- Cloud Monitoring (Google Cloud, formerly Stackdriver): Provides similar functionalities for Google Cloud Platform (GCP) services.

- Azure Monitor (Azure): Enables monitoring and alerting for Azure services.

- Third-party tools: Datadog, New Relic, and Dynatrace offer cross-platform monitoring solutions with advanced features.

- Defining Key Metrics: Identify the critical metrics that reflect application performance and cost. These typically include:

- Invocation count: The number of times a function is executed.

- Execution time: The duration of function execution.

- Memory usage: The amount of memory consumed by a function.

- Errors: The number of function errors.

- Cold starts: The number of times a function is initialized.

- Concurrency: The number of concurrent function invocations.

- Cost per function: The cost associated with each function’s execution.

- Creating Visualizations: Use charts, graphs, and tables to present the data clearly.

- Line charts: To track metrics over time, such as invocation count and execution time.

- Bar charts: To compare metrics across different functions or regions.

- Pie charts: To visualize the distribution of costs across different services.

- Tables: To display detailed information about function invocations, errors, and costs.

- Customizing Dashboards: Tailor dashboards to your specific application and team needs. Organize metrics by function, service, or business unit to facilitate easy analysis and decision-making.

For example, a dashboard could display a line chart showing the daily invocation count of a specific Lambda function, a bar chart comparing the execution time of different functions, and a pie chart illustrating the cost distribution across various AWS services. This visual representation allows for quick identification of trends, anomalies, and potential areas for optimization.

Organizing an Alert System to Notify Users of Unusual Spending Patterns or Potential Cost Overruns

An effective alert system proactively identifies and communicates potential cost issues, enabling timely intervention. The system should be configured to monitor key metrics and trigger notifications when predefined thresholds are exceeded.

Implementing an alert system involves these steps:

- Setting Thresholds: Define clear thresholds for each metric based on historical data, budget constraints, and performance expectations. These thresholds trigger alerts when breached. For instance:

- Invocation count: Set an alert if the number of invocations exceeds a certain limit within a specific time frame.

- Execution time: Trigger an alert if the average execution time exceeds a defined threshold, indicating potential performance issues or inefficient code.

- Cost per function: Establish an alert if the cost of a function exceeds a predetermined value.

- Error rate: Set an alert if the error rate exceeds an acceptable level.

- Configuring Alert Channels: Choose appropriate notification channels for alerts. Common options include:

- Email: Sends notifications to designated recipients.

- Slack/Microsoft Teams: Integrates alerts into team communication channels.

- PagerDuty/Opsgenie: Sends alerts to on-call engineers.

- Automating Alert Actions: Configure automated actions to respond to alerts. These can include:

- Scaling resources: Automatically increase or decrease function concurrency based on demand.

- Triggering code deployments: Deploy optimized function versions.

- Disabling functions: Disable problematic functions to prevent further cost accumulation.

- Testing and Refining: Regularly test the alert system to ensure it functions correctly and provides accurate notifications. Continuously refine thresholds and alert configurations based on feedback and changing application behavior.

An example of an alert system in action: If a Lambda function’s cost exceeds a predefined threshold, the system sends an email notification to the engineering team, along with a link to the monitoring dashboard for further investigation. If the cost continues to rise, the system can automatically lower the function’s concurrency limit to cap further spending; on AWS Lambda, setting reserved concurrency to zero stops new invocations entirely.
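The threshold checks described in this section reduce to a handful of comparisons. The sketch below evaluates one hour of metrics against illustrative limits; the metric keys and default values are assumptions, and in practice the numbers would be populated from CloudWatch, Azure Monitor, or Cloud Monitoring queries, which are not shown.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Thresholds:
    """Illustrative hourly limits; derive real values from history and budgets."""
    max_invocations: int = 100_000
    max_avg_duration_ms: float = 1_000.0
    max_cost_usd: float = 5.0
    max_error_rate: float = 0.02


def evaluate(metrics: Dict[str, float], limits: Thresholds) -> List[str]:
    """Return a message for every threshold the hourly metrics exceed."""
    alerts: List[str] = []
    invocations = metrics["invocations"]
    if invocations > limits.max_invocations:
        alerts.append("invocation count above limit")
    if metrics["avg_duration_ms"] > limits.max_avg_duration_ms:
        alerts.append("average execution time above limit")
    if metrics["cost_usd"] > limits.max_cost_usd:
        alerts.append("cost above limit")
    # Guard against division by zero when a function was not invoked at all.
    error_rate = metrics["errors"] / invocations if invocations else 0.0
    if error_rate > limits.max_error_rate:
        alerts.append("error rate above limit")
    return alerts
```

Each returned message would then be routed to the configured channels (email, chat, or paging), and a cost alert could additionally trigger the concurrency reduction described above.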

Detailing the Different Metrics to Track to Understand Serverless Cost Drivers

Tracking specific metrics provides valuable insights into the factors that drive serverless costs. By analyzing these metrics, developers can pinpoint areas for optimization and reduce expenses. Key metrics to monitor include:

- Invocation Count: Represents the frequency of function executions. High invocation counts directly correlate with higher costs, particularly for functions with high per-invocation pricing.

- Example: A web application experiencing a sudden surge in user traffic will likely see an increase in Lambda function invocations, leading to higher costs.

- Execution Time: Measures the duration of function execution. Longer execution times result in higher costs, as you pay for the time your function consumes resources.

- Example: A function with inefficient code or resource-intensive operations will have longer execution times, driving up costs. Optimizing the code or utilizing more efficient libraries can reduce execution time.

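- Memory Usage: Compares the memory allocated to a function with the memory it actually uses. On platforms such as AWS Lambda, duration is billed in proportion to allocated memory rather than memory actually used, so allocating more memory than necessary increases costs.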

- Example: A function that processes large files might require more memory, increasing the cost. However, over-provisioning memory leads to unnecessary expenses. Carefully analyzing memory requirements helps in finding the right balance.

- Cold Starts: Indicates the time taken for a function to initialize. Cold starts can impact performance and indirectly increase costs due to longer execution times.

- Example: Functions experiencing frequent cold starts may require optimization of the function code, or increased provisioned concurrency to mitigate the impact.

- Concurrency: Represents the number of function instances running simultaneously. High concurrency can increase costs, particularly during peak load.

- Example: During a flash sale, a website might experience a surge in concurrent user requests, leading to increased function concurrency and associated costs. Managing concurrency limits can help control these costs.

- Error Rate: Measures the percentage of function invocations that result in errors. High error rates can indicate underlying problems and might result in increased costs.

- Example: A function with a high error rate might be retried multiple times, leading to increased invocations and costs. Debugging and resolving the root cause of errors can help to lower costs.

- Data Transfer Costs: In serverless applications, data transfer costs can accumulate when data is transferred between services or regions. Monitoring data transfer volumes helps in identifying and managing these costs.

- Example: A serverless application that processes large files and transfers them between different storage services can incur significant data transfer costs. Optimizing data transfer strategies can help minimize these costs.

By closely monitoring these metrics, developers can gain a comprehensive understanding of the cost drivers within their serverless applications, enabling informed decisions about optimization strategies and cost management.

Utilizing Cost Management Tools

Effective cost management is paramount in serverless environments, where resources are dynamically provisioned and billed based on consumption. Cloud providers offer a range of tools to help users track, analyze, and control their spending. Utilizing these tools, alongside third-party solutions, allows for proactive cost optimization and mitigation of unexpected charges.

Cloud Provider-Specific Cost Management Tools

Each major cloud provider offers its own suite of cost management tools designed to provide insights into resource consumption and spending patterns. These tools often provide visualizations, reporting capabilities, and the ability to set budgets and alerts.

- AWS Cost Explorer: AWS Cost Explorer enables users to visualize, understand, and manage their AWS costs over time. It allows filtering and grouping costs by various dimensions, such as service, region, and resource tags. The tool provides forecasts based on historical spending patterns, allowing users to anticipate future costs. Users can create custom reports and save them for recurring analysis. For example, by activating a cost allocation tag on Lambda functions and grouping by that tag, a user can identify the cost associated with a specific Lambda function and track its spending trends over time.

- Azure Cost Management + Billing: Azure Cost Management + Billing provides comprehensive cost management capabilities for Azure resources. It offers cost analysis, budgeting, and recommendations for optimizing resource usage. Users can analyze costs across various scopes, including subscriptions, resource groups, and resources. The tool also integrates with Azure Advisor, which provides cost optimization recommendations based on resource utilization and configuration. For instance, it can identify underutilized virtual machines that can be resized or shut down to reduce costs.

- Google Cloud Billing: Google Cloud Billing offers features to monitor and manage Google Cloud costs. It allows users to view detailed cost breakdowns, set budgets, and create billing alerts. The tool integrates with Google Cloud’s resource hierarchy, allowing cost allocation based on projects, folders, and organizations. Users can also utilize cost reports to analyze spending patterns and identify areas for optimization. For example, a user can track the cost of a specific Cloud Function and identify if it is exceeding its expected budget.
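The kind of breakdown Cost Explorer shows can also be scripted through its API. The sketch below builds parameters for the Cost Explorer `GetCostAndUsage` call, grouped by service. It then totals the per-service amounts in a response of that shape. Several details are assumptions:

- It assumes the boto3 `ce` client.
- The date range is hypothetical.
- Only a single page of results is handled; `NextPageToken` is not followed.

To attribute spend to individual functions, the `GroupBy` entry could use `{"Type": "TAG", "Key": <your tag key>}` instead.

```python
from typing import Any


def cost_by_service_request(start: str, end: str) -> dict[str, Any]:
    """Parameters for Cost Explorer GetCostAndUsage, grouped by service.
    start is inclusive and end is exclusive, both as YYYY-MM-DD strings."""
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "DIMENSION", "Key": "SERVICE"}],
    }


def summarize_costs(response: dict[str, Any]) -> dict[str, float]:
    """Sum each service's UnblendedCost across all returned periods,
    largest cost driver first."""
    totals: dict[str, float] = {}
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            # Cost Explorer returns amounts as decimal strings.
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[service] = totals.get(service, 0.0) + amount
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))
```

In practice the response would come from `boto3.client("ce").get_cost_and_usage(**cost_by_service_request("2024-01-01", "2024-02-01"))`.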

Setting Budgets and Spending Alerts

Setting budgets and spending alerts is a crucial proactive measure to prevent unexpected charges in serverless deployments. By defining spending thresholds and receiving notifications when those thresholds are approached or exceeded, users can take timely action to control costs.

- Budget Creation: Cloud providers allow users to create budgets for various scopes, such as accounts, services, or resource tags. Budgets define a spending limit over a specified period (e.g., monthly). When setting a budget, consider historical spending patterns, anticipated resource usage, and business requirements. For example, a development team might set a monthly budget for testing and development resources to prevent overspending.

- Alerting Configuration: Alerts are configured to trigger notifications when spending reaches a predefined percentage of the budget. These alerts can be sent via email, SMS, or other communication channels. The frequency and severity of alerts can be customized based on the budget and the user’s preferences. For example, an alert can be configured to notify the team when 80% of the budget is utilized and another one when the budget is exceeded.

- Alerting Actions: Upon receiving an alert, users can investigate the cause of the increased spending and take corrective actions. These actions might include optimizing code, scaling resources, or reconfiguring services. The ability to take prompt action based on alerts is critical to preventing unexpected costs.
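As a sketch of the 80% and 100% alert example, the helper below assembles a request for the AWS Budgets `CreateBudget` API. It describes a monthly cost budget with one email notification per threshold. The account ID, budget name, amount, and address are hypothetical. It also assumes alerts should fire on actual spend; `"FORECASTED"` is the alternative notification type, which warns before the spend happens.

```python
from typing import Any


def monthly_budget_request(
    account_id: str,
    budget_name: str,
    limit_usd: float,
    email: str,
    thresholds_pct: tuple[float, ...] = (80.0, 100.0),
) -> dict[str, Any]:
    """Keyword arguments for AWS Budgets CreateBudget: a monthly cost budget
    that emails `email` when actual spend crosses each percentage threshold."""
    notifications = [
        {
            "Notification": {
                "NotificationType": "ACTUAL",
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": pct,
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
        }
        for pct in thresholds_pct
    ]
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": budget_name,
            # The Budgets API expects the limit amount as a decimal string.
            "BudgetLimit": {"Amount": f"{limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": notifications,
    }
```

The result would be submitted with `boto3.client("budgets").create_budget(**params)`. Keeping the thresholds as a parameter makes it easy to add an earlier warning level, such as 50%, for development accounts.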

Benefits of Third-Party Cost Optimization Tools

While cloud provider-specific tools provide a foundational level of cost management, third-party cost optimization tools often offer more advanced features and capabilities. These tools can provide deeper insights, automate cost optimization recommendations, and integrate with various monitoring and alerting systems.

- Advanced Analytics and Reporting: Third-party tools often provide more sophisticated analytics and reporting capabilities than native cloud provider tools. They can analyze cost data from multiple cloud providers, identify cost drivers, and provide detailed reports on resource utilization and spending patterns.

- Automated Recommendations: Many third-party tools use machine learning algorithms to analyze resource usage and provide automated recommendations for cost optimization. These recommendations might include resizing resources, identifying idle resources, or suggesting code optimizations.

- Integration with Monitoring and Alerting: Third-party tools often integrate with existing monitoring and alerting systems, providing a unified view of cost, performance, and security data. This integration can simplify the process of identifying and responding to cost-related issues.

- Example: Consider a scenario where a company uses a third-party tool to analyze its serverless Lambda function costs. The tool identifies that a particular function is consistently over-provisioned, leading to higher costs. Based on this analysis, the tool recommends reducing the memory allocation for the function, resulting in cost savings. This demonstrates the value of third-party tools in providing actionable insights and automating cost optimization.

Optimizing Function Configuration

Function configuration is a critical lever for cost optimization in serverless architectures. Incorrectly configured functions can lead to significant overspending due to inefficient resource allocation and unnecessary scaling. Careful consideration of memory allocation, timeout settings, and concurrency limits, along with a proactive approach to managing function scaling, is essential for controlling costs and ensuring optimal performance.

Configuring Function Memory, Timeout, and Concurrency

The configuration of function memory, timeout settings, and concurrency limits directly impacts the cost and performance of serverless functions. Each setting influences resource consumption and, consequently, the billing incurred.

- Memory Allocation: On many platforms the amount of memory allocated to a function also determines the CPU power available; AWS Lambda, for example, allocates CPU in proportion to configured memory. Higher memory allocation generally results in faster execution times but also raises the price per millisecond of execution. The optimal memory setting is a balance between performance and cost.

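Consider the following scenario: a function resizes images.

Initially, it is configured with 128MB of memory and takes 5 seconds to process an image. Increasing the memory to 512MB cuts the processing time to 1 second. Because duration is billed in GB-seconds (allocated memory multiplied by execution time), the price per millisecond rises fourfold. However, the billed compute per invocation falls from 0.125 GB × 5 s = 0.625 GB-s to 0.5 GB × 1 s = 0.5 GB-s. The larger configuration is therefore both faster and about 20% cheaper per invocation, and the saving grows with invocation volume.

Conversely, if the extra memory had only halved the processing time to 2.5 seconds, each invocation would consume 0.5 GB × 2.5 s = 1.25 GB-s, twice the original cost. Increasing memory lowers cost only when execution time falls at least in proportion to the memory increase.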

To determine the optimal memory allocation, a trial-and-error approach, combined with performance testing and cost analysis, is often employed. Start with a low memory setting and gradually increase it, measuring the execution time and cost at each step.

Use monitoring tools to track CPU utilization to identify whether the function is CPU-bound or memory-bound. If the function is CPU-bound, increasing memory will likely improve performance.
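The arithmetic of the image-resizing scenario can be checked with a small calculator that compares measured configurations. The sketch assumes Lambda-style billing: compute is charged per GB-second of allocated memory, with duration rounded up to the nearest millisecond, plus a flat per-request fee. The two rates are illustrative x86 figures and are assumptions here; verify them against current pricing for your region and architecture. The durations passed in would come from your own tests at each memory setting.

```python
import math

# Illustrative rates (assumed; check your provider's current price list).
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def invocation_cost(memory_mb: int, duration_ms: float) -> float:
    """Approximate cost of one invocation: GB-seconds of allocated memory
    (duration rounded up to the next millisecond) plus the request charge."""
    gb_seconds = (memory_mb / 1024) * (math.ceil(duration_ms) / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND + PRICE_PER_MILLION_REQUESTS / 1_000_000


def cheapest_config(measurements: dict[int, float]) -> int:
    """Given {memory_mb: measured average duration_ms}, return the memory
    setting with the lowest cost per invocation."""
    return min(measurements, key=lambda mb: invocation_cost(mb, measurements[mb]))
```

With the figures from the scenario, `cheapest_config({128: 5000, 512: 1000})` selects 512. A hypothetical 2.5-second result at 512MB would make 128MB the cheaper choice. The open-source AWS Lambda Power Tuning project automates this kind of sweep against a deployed function.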

- Timeout Settings: The timeout setting defines the maximum execution time allowed for a function. Setting the timeout too high can lead to unnecessary costs if a function encounters an error or gets stuck in a loop, as it will continue to consume resources until the timeout is reached. Setting the timeout too low can result in failed invocations and re-attempts, which also increase costs.

For example, if a function is designed to process data from an external API that occasionally experiences delays, a higher timeout might be necessary to avoid premature termination. However, if the function primarily performs simple operations, a shorter timeout is appropriate. Monitoring function execution times and error rates helps to determine the appropriate timeout setting.

- Concurrency Limits: Concurrency limits control the number of function instances that can run concurrently. Setting these limits helps prevent a single function from consuming excessive resources and potentially impacting other services.

For example, a web application function processing user requests might benefit from a concurrency limit to prevent overload during peak traffic.

The concurrency limit should be set based on the expected traffic volume, the function’s execution time, and the available resources. Monitoring the function’s invocation rate and error rates is crucial to determine if the concurrency limit needs adjustment.
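The sizing rule above can be made concrete with Little's law. The average number of concurrent executions is roughly the request rate multiplied by the average duration in seconds, which is also how AWS describes Lambda concurrency. The sketch assumes AWS Lambda, where a per-function limit is set as reserved concurrency through the `PutFunctionConcurrency` API. The 20% default headroom, the function names, and the traffic figures are hypothetical.

```python
import math
from typing import Any


def required_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Estimate concurrent executions as arrival rate x average duration
    (Little's law), rounded up to a whole instance."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def concurrency_limit(requests_per_second: float, avg_duration_ms: float,
                      headroom: float = 1.2) -> int:
    """Expected concurrency plus a safety margin. The default headroom is an
    assumption; burstier traffic needs more."""
    return math.ceil(required_concurrency(requests_per_second, avg_duration_ms) * headroom)


def apply_limit(lambda_client: Any, function_name: str, limit: int) -> None:
    """Cap the function with reserved concurrency; lambda_client is expected
    to be a boto3 Lambda client."""
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=limit,
    )
```

For example, 200 requests per second at 250 ms each needs about 50 concurrent executions, and a 1.5× headroom gives a limit of 75. Reserved concurrency both caps the function and sets that capacity aside from the account's shared pool, so oversized reservations can starve other functions.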

Impact of Function Scaling and Effective Management

Serverless functions automatically scale based on demand, a key advantage of the architecture. However, this scaling behavior can also contribute significantly to costs if not managed effectively. Understanding how scaling affects billing and implementing strategies to control it is essential.

- Scaling and Billing Relationship: Serverless providers typically bill based on the number of invocations, the duration of execution, and the resources consumed. Function scaling directly influences these factors.

For example, a function processing a high volume of requests will automatically scale out, resulting in more invocations. If a shared downstream dependency such as a database slows under the load, execution times also lengthen.

This increased usage directly translates to higher billing.

The scaling behavior is often influenced by factors such as the invocation rate, the function’s memory allocation, and the availability of resources in the underlying infrastructure.

- Managing Scaling Effectively: Several strategies can be employed to manage function scaling and control costs.

- Concurrency Limits: Setting appropriate concurrency limits can prevent a single function from consuming excessive resources and driving up costs during peak traffic.

- Provisioned Concurrency: Some serverless platforms offer provisioned concurrency, which allows pre-warming function instances to handle anticipated traffic spikes. This can reduce cold start times and improve performance, but it also incurs a cost for the provisioned resources, even when the function is not actively processing requests.

- Autoscaling Configuration: Configure autoscaling rules based on metrics like invocation rate, error rate, and queue depth. This ensures that the function scales up or down dynamically based on the actual demand. Fine-tuning the autoscaling configuration is critical to avoid over-provisioning or under-provisioning.

- Rate Limiting: Implement rate limiting mechanisms to control the number of requests a function processes within a specific time window. This can help prevent a function from being overwhelmed by a sudden surge of traffic.
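Rate limiting is most effective when enforced before the function runs, because rejected requests are then never billed as invocations. API Gateway's throttling settings, for instance, are based on the token bucket algorithm. The class below is a generic token-bucket sketch. It is useful, for example, when a function fans out to a paid downstream API. Inside a serverless function its state is per instance, so a fleet-wide limit would need shared storage; that is an assumption this sketch does not address.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter: allows bursts of up to `capacity` requests
    and a sustained rate of `rate` requests per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False to reject the request."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

The injectable `clock` makes the limiter deterministic under test. In production the default monotonic clock avoids problems when the wall clock is adjusted.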

Checklist for Function Configuration Review

Regularly reviewing function configurations is a proactive measure to ensure cost-effectiveness and performance. A checklist provides a structured approach to identify and address potential issues.

- Memory Allocation:

  - Assess the function’s CPU utilization and execution time.

  - Experiment with different memory settings to find the optimal balance between performance and cost.

  - Monitor for memory leaks or inefficient memory usage within the function code.

- Timeout Settings:

  - Review function execution times and error rates.

  - Ensure the timeout setting is sufficient to handle typical workloads without being excessively long.

  - Monitor for timeout errors and adjust the setting accordingly.

- Concurrency Limits:

  - Evaluate the function’s invocation rate and the expected traffic volume.

  - Set concurrency limits to prevent excessive resource consumption during peak loads.

  - Monitor for concurrency-related errors or performance degradation.

- Scaling Configuration:

  - Review autoscaling rules and ensure they are configured based on relevant metrics.

  - Monitor the function’s scaling behavior and identify any patterns of over-scaling or under-scaling.

  - Evaluate the use of provisioned concurrency and its cost-effectiveness.

- Code Optimization:

  - Review the function’s code for inefficiencies, such as unnecessary computations or resource-intensive operations.

  - Optimize the code to reduce execution time and resource consumption.

  - Use code profiling tools to identify performance bottlenecks.

- Cost Monitoring:

  - Use cost management tools to track function-related costs.

  - Set up alerts to notify you of any unexpected cost increases.

  - Regularly analyze cost reports to identify areas for optimization.

Choosing the Right Serverless Services

Selecting the appropriate serverless services is critical for optimizing costs. The architectural choices made during the design phase directly impact the overall expenditure. This section will delve into comparing different serverless services, offering guidance on service selection based on use cases, and highlighting often-overlooked cost factors.

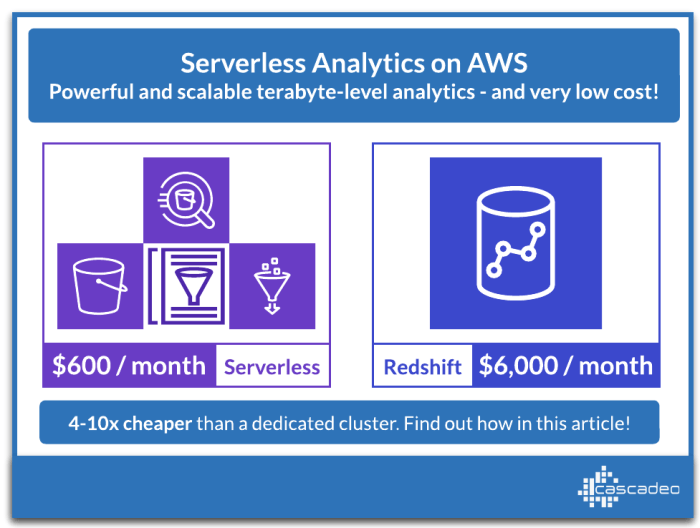

Comparing Serverless Service Cost Implications

Understanding the cost differences between various serverless services is paramount. This involves analyzing the pricing models and resource consumption patterns of each service to make informed decisions.

- AWS Lambda vs. Google Cloud Functions (or Azure Functions): These services provide compute resources for executing code in response to events. The cost is primarily driven by the number of invocations, the duration of execution (measured in milliseconds), and the memory allocated to the function.

  - AWS Lambda:

    - Pros: Mature ecosystem, extensive integration with other AWS services, wide community support.

    - Cons: Cold starts can impact latency, particularly for infrequently invoked functions.

  - Google Cloud Functions:

    - Pros: Cold starts may be faster than AWS Lambda’s for some runtimes and workloads; seamless integration with Google Cloud services.

    - Cons: Less mature ecosystem than AWS Lambda, can be more complex to integrate with non-Google services.

  - Azure Functions:

    - Pros: Deep integration with the Microsoft ecosystem, supports various programming languages and triggers.

    - Cons: Pricing can be less predictable depending on the consumption plan and underlying resources.

- API Gateway vs. Cloud Endpoints (or Azure API Management): These services manage access to backend APIs, including routing, authentication, and rate limiting. The cost depends on the number of API calls, the data transfer, and the features used.

  - API Gateway (AWS):

    - Pros: Highly scalable, integrates well with other AWS services, supports various authentication methods.

    - Cons: Can become complex to configure for advanced API management features, higher cost for certain features.

  - Cloud Endpoints (Google Cloud):

    - Pros: Integrated with Google Cloud’s security and monitoring features, simpler configuration for basic API management.

    - Cons: Less feature-rich than API Gateway, might require additional configuration for advanced use cases.

  - Azure API Management:

    - Pros: Comprehensive API management features (e.g., versioning, monitoring, security), supports various deployment options.

    - Cons: Can be more expensive than other options, complexity in configuration and setup.

- DynamoDB vs. Cloud Firestore (or Azure Cosmos DB): These are NoSQL databases used to store and retrieve data for serverless applications. The cost is determined by the storage used, the read/write operations, and the data transfer.

  - DynamoDB (AWS):

    - Pros: Highly scalable, offers on-demand and provisioned capacity modes, well-integrated with AWS services.

    - Cons: Requires careful capacity planning in provisioned mode to avoid overspending or performance issues.

  - Cloud Firestore (Google Cloud):

    - Pros: Easy to use, supports real-time updates, integrated with Google Cloud’s ecosystem.

    - Cons: Can be more expensive for large-scale read operations, potentially higher costs for complex queries.

  - Azure Cosmos DB:

    - Pros: Multi-model database (supports various data models), global distribution, high availability.

    - Cons: Can be complex to configure and manage; provisioned-throughput mode carries a minimum charge even when idle, although a serverless capacity mode is also available.

Guide to Selecting Cost-Effective Serverless Services for Specific Use Cases

The optimal choice of serverless services varies depending on the specific application requirements. Careful evaluation of workload characteristics is crucial.

- Compute-Intensive Tasks: For tasks that require significant compute resources, tune memory allocation and execution time together. If the workload is highly variable, an on-demand pricing model can be more cost-effective. For example, running an AWS Lambda function with a high memory allocation (e.g., 1024MB or more) can shorten execution time enough to lower the total cost compared with a smaller allocation that runs longer.

- Event-Driven Applications: Serverless functions are ideally suited for event-driven applications. Use services like AWS EventBridge or Google Cloud Pub/Sub to trigger functions based on events. Careful consideration of the event volume and the frequency of function invocations is essential. Implementing batch processing for high-volume events can often reduce costs by minimizing the number of individual function invocations.

- API Backends: For API backends, select API Gateway or Cloud Endpoints based on the features needed. If complex API management features are required, API Gateway might be the better choice. For simpler use cases, Cloud Endpoints could be more cost-effective. Implementing caching at the API gateway level can reduce the load on backend functions and decrease costs.

- Data Storage: For data storage, choose a NoSQL database based on the access patterns and data volume. DynamoDB is often suitable for high-volume, low-latency read/write operations. Cloud Firestore is better for applications that require real-time updates. Cosmos DB is well-suited for globally distributed applications with multi-model support. Choosing the correct read/write capacity units (RCUs/WCUs) or auto-scaling settings is essential to avoid overspending.
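For DynamoDB in provisioned mode, the capacity units mentioned above can be estimated from the access pattern. The sketch follows DynamoDB's sizing rules:

- One RCU covers one strongly consistent read per second of an item up to 4 KB, or two eventually consistent reads.
- One WCU covers one write per second of an item up to 1 KB.

The traffic figures are hypothetical. Transactional reads and writes, which consume twice the capacity, are not modeled.

```python
import math


def read_capacity_units(reads_per_second: float, item_size_kb: float,
                        strongly_consistent: bool = True) -> int:
    """RCUs for provisioned mode: each read consumes one RCU per 4 KB chunk
    (rounded up); eventually consistent reads consume half as much."""
    units_per_read = math.ceil(item_size_kb / 4)
    rcu = reads_per_second * units_per_read
    if not strongly_consistent:
        rcu /= 2
    return math.ceil(rcu)


def write_capacity_units(writes_per_second: float, item_size_kb: float) -> int:
    """WCUs for provisioned mode: one WCU per 1 KB chunk per write (rounded up)."""
    return math.ceil(writes_per_second * math.ceil(item_size_kb))
```

For example, 100 strongly consistent reads per second of 6 KB items need 200 RCUs; switching to eventually consistent reads halves that to 100. Comparing such estimates with actual consumption in monitoring shows whether the table is over-provisioned.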

Identifying and Mitigating Often-Overlooked Cost Factors

Several serverless services and configurations can contribute to unexpected costs if not carefully managed. Proactive monitoring and optimization are necessary.

- Data Transfer Costs: Data transfer between services in the same region is often free or inexpensive, but cross-region traffic is usually billed, and data transferred *out* of the cloud provider’s network to the internet incurs charges. This is particularly relevant for APIs that serve large amounts of data or for applications that transfer data to external services. Regularly monitor data egress and optimize data transfer patterns to reduce these costs. Using content delivery networks (CDNs) can reduce data transfer costs by caching content closer to end users.

- Idle Resources: Some serverless services might consume resources even when idle. For example, if a database connection pool is configured without proper limits, or if resources are over-provisioned, costs can accrue even when there is no active traffic. Implement automated scaling mechanisms and resource cleanup to prevent these costs.

- Monitoring and Logging Costs: While monitoring and logging are crucial for troubleshooting and performance analysis, they can also incur costs. Use the monitoring tools provided by the cloud provider (e.g., AWS CloudWatch, Google Cloud Monitoring, Azure Monitor) and configure them to collect only the necessary metrics and logs. Consider using log aggregation and analysis services to optimize storage costs. Implementing log sampling can help reduce the volume of logs collected.

- Network Costs: Network configuration can affect costs. For example, if a function is deployed in a region with high network costs, or if there is excessive data transfer between different regions, costs will increase. Optimize network configuration by deploying services in the same region as the end-users, and using appropriate network security groups and VPC configurations.

- Development and Testing Environments: Often, development and testing environments are overlooked when considering costs. These environments can consume resources if not properly managed. Implement automation to create and destroy these environments when they are not in use. Using cost allocation tags can help identify the cost of development and testing environments.
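Log sampling can be applied inside the function so that fewer lines reach the logging service in the first place. The sketch uses Python's standard `logging` module and assumes that warnings and errors must always be kept while routine DEBUG/INFO lines can be sampled. The `SamplingFilter` class, the `orders` logger name, and the 10% rate are hypothetical.

```python
import logging
import random
from typing import Optional


class SamplingFilter(logging.Filter):
    """Keep every WARNING-or-higher record but only a random fraction of
    lower-severity records, reducing log ingestion volume."""

    def __init__(self, sample_rate: float, rng: Optional[random.Random] = None) -> None:
        super().__init__()
        if not 0.0 <= sample_rate <= 1.0:
            raise ValueError("sample_rate must be between 0 and 1")
        self.sample_rate = sample_rate
        self.rng = rng or random.Random()

    def filter(self, record: logging.LogRecord) -> bool:
        if record.levelno >= logging.WARNING:
            return True
        return self.rng.random() < self.sample_rate


logger = logging.getLogger("orders")  # hypothetical application logger
# A logger-level filter runs before any handler, including handlers reached by
# propagation to the root logger, so sampled-out records are dropped entirely.
logger.addFilter(SamplingFilter(0.10))
logger.setLevel(logging.INFO)
```

Sampling reduces ingestion volume. Storage is a separate lever: CloudWatch Logs keeps data indefinitely unless a retention period is set on each log group.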

Implementing Infrastructure as Code (IaC) for Cost Control

Infrastructure as Code (IaC) represents a pivotal shift in cloud management, enabling the automation of infrastructure provisioning and management through code. This approach not only enhances operational efficiency but also provides a powerful mechanism for controlling and optimizing serverless costs. By codifying infrastructure configurations, organizations gain unprecedented visibility and control over resource allocation, allowing for proactive cost management and preventing unexpected billing surprises.

Using IaC for Serverless Cost Management

IaC tools, such as Terraform and AWS CloudFormation, facilitate the definition and management of serverless infrastructure in a declarative manner. This declarative approach contrasts with imperative methods, offering several advantages for cost control. IaC allows the infrastructure to be described as code, enabling version control, reusability, and automated deployment. This translates directly into improved cost management by:

- Predictable Resource Allocation: IaC enables precise definition of resources, eliminating guesswork and over-provisioning.

- Automated Scaling and Configuration: IaC can be used to define auto-scaling policies, ensuring resources are scaled up or down based on demand, optimizing resource utilization.

- Cost Tracking and Analysis: IaC tools often integrate with cost management platforms, providing detailed insights into resource consumption and associated costs.

- Reduced Human Error: Automation through IaC minimizes manual configuration errors that can lead to wasted resources and unexpected costs.

Defining Cost-Effective Infrastructure Configurations with IaC

Crafting cost-effective serverless infrastructure with IaC involves careful consideration of resource allocation, service selection, and configuration parameters. Here’s how to leverage IaC tools for optimal cost efficiency:

- Resource Sizing: Define function memory and timeout limits appropriately. Start with the minimum resources needed and monitor performance. Adjust configurations as necessary based on observed metrics, rather than making assumptions. For example, in AWS Lambda, configure the `memory_size` and `timeout` parameters within your IaC configuration (e.g., Terraform’s `aws_lambda_function` resource).

- Service Selection: Choose the most cost-effective serverless services for the specific workload. For instance, consider using Amazon SQS for asynchronous task processing, which can be cheaper than using a Lambda function directly triggered by a web request. Define these service integrations in your IaC, ensuring the correct service is deployed for the intended function.

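- Auto-Scaling Configuration: Implement auto-scaling policies to dynamically adjust resource capacity based on demand. This prevents over-provisioning during periods of low activity. Lambda scales on-demand invocations automatically, so in AWS the capacity settings to manage are concurrency limits and provisioned concurrency. Provisioned concurrency can be scaled with the `aws_appautoscaling_target` and `aws_appautoscaling_policy` resources in Terraform, with step-scaling policies driven by `aws_cloudwatch_metric_alarm` resources. CloudFormation offers the equivalent `AWS::ApplicationAutoScaling::ScalableTarget` and `AWS::ApplicationAutoScaling::ScalingPolicy` resources.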

- Monitoring and Logging: Integrate monitoring and logging into the IaC configuration. Define CloudWatch alarms to alert you to potential cost issues, such as excessive function durations or high error rates. This is achieved by defining `aws_cloudwatch_metric_alarm` resources within your IaC configuration.

- Region Selection: Deploy resources in regions with lower pricing if latency requirements allow. Your IaC can specify the AWS region (e.g., `us-east-1`, `eu-west-1`) for each service, enabling you to control deployment location.

Integrating Cost Management Best Practices into IaC Pipelines

Integrating cost management into IaC pipelines is crucial for proactive cost control. This involves incorporating checks and validations into the deployment process to ensure cost-effective configurations are deployed and maintained.

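- Cost Analysis Tools: Integrate cost analysis into your IaC pipeline. AWS Cost Explorer reports on spending that has already occurred, so pre-deployment estimates require a tool that reads the IaC definitions directly; for Terraform, Infracost is one such third-party option. These tools analyze the resources defined in your IaC configuration and provide estimated cost projections.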

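- Cost-Aware Linting and Validation: Implement linting and validation rules within your IaC code to enforce cost-related best practices. For example, a linting rule could flag functions with excessively high memory allocation or timeout values. Note that `terraform validate` only checks that a configuration is syntactically valid and internally consistent. Custom rules like these need a policy or linting tool such as TFLint, Checkov, or Open Policy Agent (via Conftest).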

- Automated Cost Checks: Implement automated cost checks as part of your CI/CD pipeline. Before deploying infrastructure changes, the pipeline should run cost analysis and validation steps. If potential cost issues are identified, the deployment should be blocked or flagged for review.

- Regular Cost Reviews: Schedule regular cost reviews of your serverless infrastructure. These reviews should involve analyzing cost reports, identifying areas for optimization, and updating your IaC configuration accordingly. This continuous improvement loop is essential for long-term cost control.

- Version Control and Rollbacks: Utilize version control (e.g., Git) for your IaC code, enabling you to track changes and easily revert to previous configurations if a deployment results in unexpected costs. This provides a safety net and minimizes the impact of costly mistakes.
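A cost-aware check of this kind can run against Terraform's machine-readable plan as a CI step. The sketch reads the JSON produced by `terraform show -json` on a saved plan file. It flags `aws_lambda_function` resources whose `memory_size` or `timeout` exceeds a limit. The limits are hypothetical team policy, not recommendations, and the `lint_plan` and `check_file` helpers are illustrative; dedicated policy tools offer richer versions of the same idea.

```python
import json
from typing import Any

# Hypothetical policy limits; adjust to your own workloads.
MAX_MEMORY_MB = 1024
MAX_TIMEOUT_S = 60


def lint_plan(plan: dict[str, Any]) -> list[str]:
    """Flag Lambda functions in a `terraform show -json` plan whose memory or
    timeout exceeds the policy limits."""
    findings = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_lambda_function":
            continue
        # "after" is null for resources being destroyed.
        after = (rc.get("change") or {}).get("after") or {}
        memory = after.get("memory_size")
        timeout = after.get("timeout")
        if memory is not None and memory > MAX_MEMORY_MB:
            findings.append(f"{rc['address']}: memory_size {memory} MB > {MAX_MEMORY_MB}")
        if timeout is not None and timeout > MAX_TIMEOUT_S:
            findings.append(f"{rc['address']}: timeout {timeout} s > {MAX_TIMEOUT_S}")
    return findings


def check_file(path: str) -> int:
    """Print findings for a plan JSON file; return a CI-style exit code."""
    with open(path) as f:
        problems = lint_plan(json.load(f))
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

In a pipeline, this would follow `terraform plan -out=tfplan` and `terraform show -json tfplan > plan.json`. A wrapper script then calls `sys.exit(check_file("plan.json"))`, and the non-zero exit code blocks or flags the deployment.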

Security Best Practices and Cost

Implementing robust security measures in serverless architectures is paramount, not only for protecting sensitive data and maintaining application integrity but also for managing and optimizing costs. While security often appears as an upfront investment, neglecting it can lead to significant financial ramifications. This section explores the interplay between security practices and cost efficiency in serverless environments.

Identity and Access Management (IAM) and Cost Implications

IAM is a critical component of serverless security, dictating who has access to what resources. Properly configured IAM policies can dramatically influence operational costs.

- Granular Permissions: Implementing the principle of least privilege, granting users and services only the necessary permissions, minimizes the attack surface and prevents unauthorized resource consumption. This directly translates to cost savings by limiting the scope of potential misuse. For example, if a function only needs to read data from a specific database table, it should not have permissions to write or delete data.

- IAM Roles and Resource-Based Policies: Using IAM roles and resource-based policies enables fine-grained control over access to serverless resources. This reduces the risk of unintended resource access and potential cost overruns. Overly broad policies can, for example, allow a buggy or compromised function to invoke other functions or create resources it was never meant to touch. Those charges are ones a tightly scoped policy would have prevented.

- Regular Auditing: Regularly reviewing and auditing IAM configurations is crucial. This includes identifying and removing unused or overly permissive policies, ensuring alignment with the principle of least privilege. Tools like AWS IAM Access Analyzer can assist in identifying overly permissive or unused policies, highlighting areas for optimization.

- Multi-Factor Authentication (MFA): Enforcing MFA for all users accessing the serverless environment adds an extra layer of security. While the direct cost of MFA may be minimal, the prevention of unauthorized access and resource consumption provides significant long-term cost benefits.
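The least-privilege example above, a function that only reads one table, can be written as an IAM policy document. The sketch builds that document in Python. The helper name, region, account ID, and table name are hypothetical. `dynamodb:Scan` is deliberately left out because full-table scans consume read capacity for every item examined. Queries against secondary indexes would also need the index ARN (`.../table/<name>/index/*`).

```python
from typing import Any


def read_only_table_policy(region: str, account_id: str, table: str) -> dict[str, Any]:
    """IAM policy letting a function read (but not write or delete) a single
    DynamoDB table. Scan is omitted on purpose to discourage full-table reads."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query", "dynamodb:BatchGetItem"],
                # Scoped to this one table; no wildcard resources.
                "Resource": table_arn,
            }
        ],
    }
```

Serialized with `json.dumps`, the document can be attached to the function's execution role. IAM Access Analyzer can later confirm that no broader permissions have crept in.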

Potential Cost Implications of Poor Security Configurations

Failing to prioritize security in serverless deployments can result in a range of costly consequences.

- Data Breaches: A data breach can lead to substantial financial losses, including fines, legal fees, and reputational damage. The cost of a data breach is often far greater than the investment in robust security measures. According to the 2023 IBM Cost of a Data Breach Report, the average total cost of a data breach reached $4.45 million globally.

- Denial-of-Service (DoS) Attacks: Serverless applications can be vulnerable to DoS attacks, where malicious actors flood the application with requests, consuming resources and driving up costs. Effective security measures, such as rate limiting and web application firewalls (WAFs), can mitigate the impact of such attacks.

- Cryptojacking: Attackers may exploit vulnerabilities to install malicious code that uses serverless resources for cryptocurrency mining. This unauthorized resource consumption can result in unexpected and significant charges.

- Unauthorized Resource Usage: Misconfigured IAM policies or vulnerabilities in application code can lead to unauthorized access and consumption of resources. This can result in unexpected bills and performance degradation.
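A back-of-the-envelope calculation shows how quickly unwanted invocations, whether from a request flood, cryptojacking, or misuse, turn into charges. The sketch below assumes AWS Lambda's published x86 on-demand rates in us-east-1 ($0.20 per million requests and $0.0000166667 per GB-second) and ignores the free tier; treat both prices as assumptions and check your provider's current pricing. The function name `estimate_invocation_cost` is hypothetical.

```python
# Assumed prices (USD), roughly AWS Lambda x86 on-demand in us-east-1; free tier ignored.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def estimate_invocation_cost(invocations: int, avg_duration_s: float, memory_mb: int,
                             price_per_million_requests: float = PRICE_PER_MILLION_REQUESTS,
                             price_per_gb_second: float = PRICE_PER_GB_SECOND) -> float:
    """Estimate request charges plus compute (GB-second) charges for a batch of invocations."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    gb_seconds = invocations * avg_duration_s * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    # Hypothetical flood: 10 million unwanted requests to a 512 MB function running ~1 s each.
    cost = estimate_invocation_cost(10_000_000, 1.0, 512)
    print(f"${cost:.2f}")  # $85.33
```

Here the compute term (5,000,000 GB-seconds) dominates the request term, so an attack that makes each invocation run longer is as costly as one that simply sends more requests.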

Secure and Cost-Efficient Serverless Deployment Architecture Illustrations

A secure and cost-efficient serverless architecture prioritizes both security and resource optimization.

Illustration 1: Secure API Gateway Integration

This illustration depicts a secure serverless API deployment using AWS services. An API Gateway sits at the front, receiving requests. Before routing requests to the backend functions, it applies security features such as API keys, authentication via Amazon Cognito, and rate limiting. AWS WAF is attached to the API to filter common web exploits, and its rate-based rules help reduce the impact of DoS attacks and malicious requests.

IAM roles associated with the backend Lambda functions are configured with least privilege, granting only the necessary permissions to access resources like databases and S3 buckets. Logging and monitoring are enabled to provide real-time insights into API usage and potential security threats.

Key components and their roles:

- API Gateway: Manages API traffic, handles authentication, authorization, and rate limiting.

- Cognito: Provides user authentication and authorization.

- WAF: Protects against common web exploits.

- Lambda Functions: Execute business logic.

- IAM Roles: Define permissions for Lambda functions.

- DynamoDB: A NoSQL database for storing data.

- CloudWatch: Monitors API usage and performance.
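AWS describes API Gateway throttling in terms of a token bucket, where each request consumes a token and tokens refill at a steady rate up to a burst limit. The sketch below is a generic, in-process token bucket meant only to illustrate that idea; it is not API Gateway's implementation, and the class name and parameters are hypothetical. The injectable `clock` exists so the behavior can be demonstrated deterministically.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: `rate` tokens refill per second, up to `capacity` (the burst size)."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the request should be throttled."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    now = [0.0]  # fake clock for a repeatable demonstration
    bucket = TokenBucket(rate=5, capacity=10, clock=lambda: now[0])
    burst = [bucket.allow() for _ in range(12)]
    print(burst.count(True))  # 10: the burst capacity; the other 2 requests are throttled
    now[0] += 1.0  # one second later, 5 tokens have refilled
    print(sum(bucket.allow() for _ in range(10)))  # 5
```

Throttled requests are rejected before they reach a backend function, so they do not add function execution charges.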

Illustration 2: Secure Data Processing Pipeline

This illustration showcases a secure and cost-efficient data processing pipeline. Data is ingested through an S3 bucket. An event trigger, such as an object creation event, invokes a Lambda function. This function performs data transformation, validation, and filtering. Another Lambda function, triggered by the first, then processes the data and stores it in a secure data warehouse, like Amazon Redshift or a similarly secured database.

IAM roles restrict access to data and resources. Encryption at rest (using KMS keys) and in transit (using TLS) are enabled to protect data confidentiality. Monitoring and logging are implemented to track pipeline performance and detect potential security issues.

Key components and their roles:

- S3 Bucket: Stores incoming data.

- Lambda Functions: Perform data processing and transformation.

- Event Triggers: Initiate Lambda function execution.

- IAM Roles: Define permissions for Lambda functions and S3 access.

- KMS Keys: Encrypt data at rest.

- Redshift: Secure data warehouse for storing processed data.

- CloudWatch: Monitors pipeline performance.
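The first function in such a pipeline typically starts by reading the bucket and key from the S3 event notification and discarding objects it should not process. The sketch below shows that step under stated assumptions: only `.csv` files are wanted, and the helper name `extract_objects` is hypothetical. Downloading and transforming the object (for example with boto3) is left as a comment rather than invented here.

```python
from urllib.parse import unquote_plus


def extract_objects(event: dict, allowed_suffixes: tuple = (".csv",)) -> list:
    """Return (bucket, key) pairs for newly created objects with an allowed file suffix."""
    objects = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue  # ignore deletions and other event types
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.endswith(allowed_suffixes):
            objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    objects = extract_objects(event)
    # Hypothetical next step: fetch, validate, and transform each object,
    # then pass the results on to the second function in the pipeline.
    return {"accepted": len(objects)}
```

Filtering inside the function still costs an invocation per object; configuring prefix and suffix filters on the bucket's event notification avoids invoking the function for unwanted objects at all.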

Conclusion

In conclusion, mastering serverless cost optimization is not merely about minimizing expenses; it is about maximizing the efficiency and effectiveness of your cloud infrastructure. By embracing proactive monitoring, implementing cost-effective coding practices, and leveraging the available cost management tools, organizations can unlock the full potential of serverless computing while maintaining budgetary control. The strategies outlined here provide a solid foundation for building and deploying serverless applications in a sustainable and financially responsible manner.

FAQ Overview

What is the primary difference between serverless and traditional cloud computing in terms of billing?

Serverless billing is based on actual resource consumption (e.g., function executions, memory usage, request counts), while traditional cloud computing often involves paying for provisioned resources regardless of utilization.

How can I monitor serverless costs effectively?

Implement comprehensive monitoring dashboards using cloud provider-specific tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) and third-party solutions. Track key metrics like function execution time, memory usage, and request counts, and set up alerts for unusual spending patterns.
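As an illustration of alerting on unusual spending, the sketch below flags any day whose cost exceeds the trailing seven-day mean by more than three standard deviations. The window, threshold, and the 5%-of-mean floor on the standard deviation are assumptions chosen for the example, and `flag_unusual_spend` is a hypothetical helper; managed options such as AWS Budgets and AWS Cost Anomaly Detection provide this kind of alerting without custom code.

```python
from statistics import mean, stdev


def flag_unusual_spend(daily_costs: list, window: int = 7, threshold_sigmas: float = 3.0) -> list:
    """Return indexes of days whose cost exceeds the trailing-window mean by > threshold_sigmas."""
    flagged = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu = mean(history)
        # Floor the standard deviation at 5% of the mean so a very flat history
        # does not turn tiny fluctuations into alerts.
        sigma = max(stdev(history), 0.05 * mu)
        if daily_costs[i] > mu + threshold_sigmas * sigma:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    costs = [10.0, 10.5, 9.8, 10.2, 10.1, 9.9, 10.3, 10.0, 42.0, 10.2]  # hypothetical USD per day
    print(flag_unusual_spend(costs))  # [8]
```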

What are the common pitfalls that lead to unexpected serverless bills?

Inefficient code (e.g., slow function execution), over-provisioned resources (e.g., allocating excessive memory), runaway processes (e.g., infinite loops), and misconfigured event triggers are common pitfalls.
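One defense against runaway processing is to check how much time an invocation has left and stop cleanly before the timeout, handing unfinished work back instead of running until the platform kills the function (time that is still billed). The sketch below relies on the `get_remaining_time_in_millis()` method of the AWS Lambda Python context object; the `process_batch` helper, the 2-second safety margin, and the `FakeContext` test stand-in are hypothetical.

```python
def process_batch(items: list, handle, context, safety_margin_ms: int = 2000) -> list:
    """Process items until done or until the invocation nears its timeout.

    Returns the unprocessed items so the caller can re-queue them.
    """
    for i, item in enumerate(items):
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            return items[i:]
        handle(item)
    return []


class FakeContext:
    """Stand-in for the Lambda context object, for local testing only."""

    def __init__(self, remaining_ms: int):
        self.remaining_ms = remaining_ms

    def get_remaining_time_in_millis(self) -> int:
        return self.remaining_ms


if __name__ == "__main__":
    ctx = FakeContext(remaining_ms=5000)

    def handle(item):
        ctx.remaining_ms -= 1000  # pretend each item takes about one second

    print(process_batch(list(range(10)), handle, ctx))  # [4, 5, 6, 7, 8, 9]
```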

How does function memory allocation impact serverless costs?

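Function memory allocation directly affects the price per execution: on platforms such as AWS Lambda, compute is billed in GB-seconds, so the per-millisecond rate scales with the memory you allocate. Allocating more memory than a function needs raises costs, but because AWS Lambda also allocates CPU in proportion to memory, a larger allocation can shorten execution time enough to lower the total. Find the optimal balance by benchmarking the function at several memory sizes.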
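Once you have measured average durations at a few memory sizes, comparing them is simple arithmetic: compute cost per invocation is proportional to memory (GB) multiplied by duration (seconds), while the request charge is the same at every size. The measurements in the sketch below are hypothetical, as is the helper name `cheapest_memory_size`; the open-source AWS Lambda Power Tuning project automates this kind of sweep against real functions.

```python
def cheapest_memory_size(measured_duration_s: dict) -> int:
    """Pick the memory size (MB) with the lowest compute cost per invocation.

    Cost is proportional to (memory_mb / 1024) * duration_s; request charges are
    identical at every size, so they are left out of the comparison.
    """
    return min(measured_duration_s, key=lambda mb: (mb / 1024) * measured_duration_s[mb])


if __name__ == "__main__":
    # Hypothetical average durations (seconds) measured at each memory size (MB).
    measurements = {128: 2.0, 512: 0.45, 1024: 0.25, 2048: 0.2}
    for mb, seconds in measurements.items():
        print(mb, "MB ->", (mb / 1024) * seconds, "GB-seconds per invocation")
    print(cheapest_memory_size(measurements))  # 512
```

In this made-up example, 512 MB beats 128 MB because the function runs more than four times faster, while 2048 MB no longer speeds it up enough to pay for the extra memory.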

Can I use IaC to control serverless costs?

Yes, Infrastructure as Code (IaC) tools like Terraform or CloudFormation allow you to define and manage your serverless infrastructure, including cost-related configurations, in a repeatable and auditable way. This enables you to set up budgets, enforce resource limits, and ensure consistent cost-effective deployments.
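Because IaC definitions are plain data, cost guardrails can be checked automatically before deployment. The sketch below scans a CloudFormation template (in JSON form) and reports `AWS::Lambda::Function` resources whose `MemorySize` or `Timeout` exceed team-defined limits or that set no `ReservedConcurrentExecutions` cap. The limits and the helper name `check_lambda_limits` are hypothetical, and the check assumes literal values; properties set through intrinsic functions such as `Ref` are not resolved.

```python
import json

MAX_MEMORY_MB = 1024  # hypothetical team limit
MAX_TIMEOUT_S = 30    # hypothetical team limit


def check_lambda_limits(template: dict, max_memory_mb: int = MAX_MEMORY_MB,
                        max_timeout_s: int = MAX_TIMEOUT_S) -> list:
    """Return human-readable problems found in Lambda function resources."""
    problems = []
    for name, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::Lambda::Function":
            continue
        props = resource.get("Properties", {})
        memory = props.get("MemorySize", 128)  # Lambda's default memory is 128 MB
        timeout = props.get("Timeout", 3)      # Lambda's default timeout is 3 seconds
        if memory > max_memory_mb:
            problems.append(f"{name}: MemorySize {memory} MB exceeds {max_memory_mb} MB")
        if timeout > max_timeout_s:
            problems.append(f"{name}: Timeout {timeout} s exceeds {max_timeout_s} s")
        if "ReservedConcurrentExecutions" not in props:
            problems.append(f"{name}: no ReservedConcurrentExecutions cap")
    return problems


if __name__ == "__main__":
    template_json = """{"Resources": {
        "Api": {"Type": "AWS::Lambda::Function",
                "Properties": {"MemorySize": 3008, "Timeout": 900}},
        "Bucket": {"Type": "AWS::S3::Bucket"}}}"""
    for problem in check_lambda_limits(json.loads(template_json)):
        print(problem)
```

A non-empty result can fail the CI job, so oversized or uncapped functions are caught in review rather than on the monthly bill.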