Building a serverless data processing pipeline changes how data engineering teams work: it offers elastic scalability, pay-per-use pricing, and lower maintenance effort. The approach uses cloud-based, event-driven compute to transform, analyze, and store data without managing the underlying infrastructure. By adopting serverless technologies, organizations can focus on their core business logic, reduce operational overhead, and shorten time-to-insight.

This guide examines the critical components of a serverless data processing pipeline: technology selection, data ingestion and transformation techniques, orchestration strategies, and essential considerations for error handling, security, and cost optimization. It compares several serverless platforms and offers practical guidance and best practices to help developers and data engineers design and deploy robust, scalable, and cost-effective data processing solutions.

Defining Serverless Data Processing Pipelines

Serverless data processing pipelines change how data is ingested, transformed, and analyzed. They rely on cloud services that manage the underlying infrastructure automatically, so developers can focus on the code and logic required for data processing tasks. Traditional methods, by contrast, require teams to manage servers, scale resources, and absorb the associated operational overhead.

Core Components of a Serverless Data Processing Pipeline

A serverless data processing pipeline typically comprises several key components that work together to handle data from ingestion to output. Understanding these components is crucial for designing and implementing effective pipelines.

- Data Ingestion: This is the entry point of the pipeline, responsible for receiving data from various sources. Common ingestion services include:

- Amazon Kinesis Data Streams: A fully managed, scalable service for real-time data streaming.

- Azure Event Hubs: A highly scalable event ingestion service for big data applications.

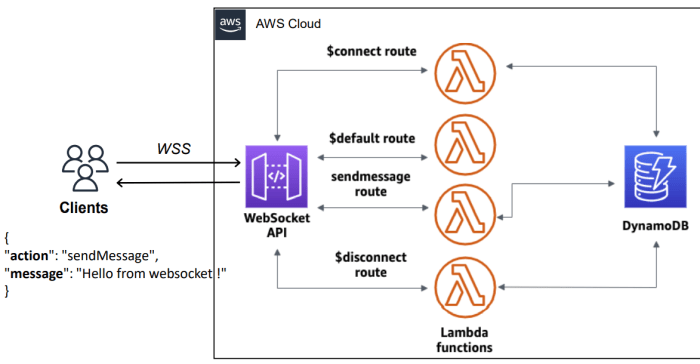

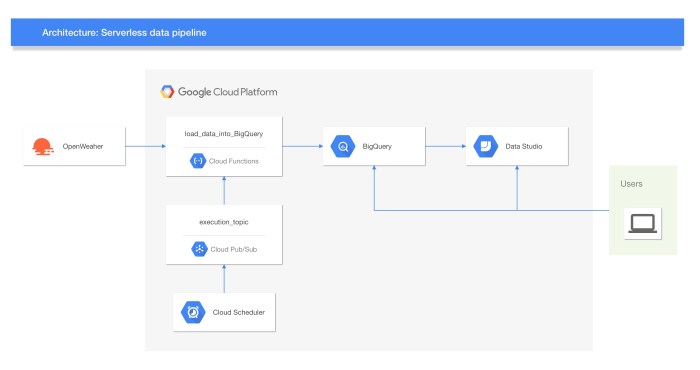

- Google Cloud Pub/Sub: A globally distributed messaging service for real-time data ingestion.

- Data Storage: Raw data is often stored in a durable and scalable data store. Choices include:

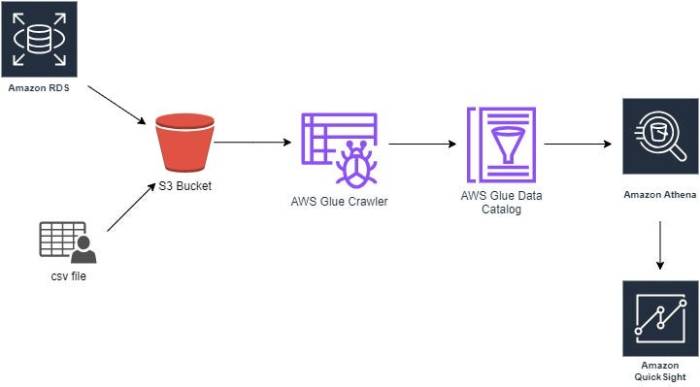

- Amazon S3: Object storage service offering high durability and availability.

- Azure Blob Storage: Object storage for storing unstructured data.

- Google Cloud Storage: Unified object storage for developers.

- Data Transformation: This component performs operations on the data, such as cleaning, filtering, and enriching. Serverless functions are commonly used for this purpose:

- AWS Lambda: Allows you to run code without provisioning or managing servers.

- Azure Functions: Event-driven, compute-on-demand experience.

- Google Cloud Functions: Enables you to run your code in response to events.

- Data Processing/Analysis: This involves applying analytics and transformations to derive insights. Services can include:

- AWS Glue: Serverless data integration service for discovering, preparing, and integrating data.

- Azure Data Factory: Cloud-based data integration service for orchestrating data movement and transformation.

- Google Cloud Dataflow: A fully managed service for stream and batch data processing.

- Data Output/Storage: The processed data is stored in a format suitable for analysis and consumption. This might include:

- Amazon Redshift: A fast, fully managed data warehouse.

- Azure Synapse Analytics: An analytics service that brings together data integration, enterprise data warehousing, and big data analytics.

- Google BigQuery: A fully managed, serverless data warehouse.

- Orchestration: Coordinates the different components of the pipeline so that data flows between them smoothly. Services include:

- AWS Step Functions: Enables you to coordinate multiple AWS services into serverless workflows.

- Azure Logic Apps: Provides a visual designer to build and automate workflows.

- Google Cloud Composer: A fully managed workflow orchestration service built on Apache Airflow.

Benefits of Using a Serverless Approach

Serverless data processing pipelines offer significant advantages over traditional, server-based approaches, leading to greater efficiency and reduced operational overhead. The key benefits are centered around scalability, cost optimization, and reduced maintenance.

- Scalability: Serverless platforms automatically scale resources based on demand.

- For instance, AWS Lambda automatically scales the number of function instances to handle incoming requests. This is in stark contrast to traditional approaches, where you would have to manually provision and manage servers to handle peak loads.

- Cost Optimization: You pay per request and for the compute time your code actually consumes, rather than for idle capacity.

- This “pay-per-use” model can significantly reduce costs compared to traditional approaches where resources are provisioned and paid for regardless of usage.

- Example: A data processing task that runs for only 10 seconds per day would incur compute charges for only those 10 seconds (plus a small per-request fee), as opposed to the constant cost of a server running 24/7.
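To make the pay-per-use comparison concrete, the sketch below computes a monthly bill for the 10-seconds-per-day task under both models. All three prices are placeholder assumptions, not any provider's current rates; the 1 GB memory size and single daily invocation are assumed as well, and free tiers are ignored.

```python
# Placeholder prices for illustration only; real rates vary by provider,
# region, CPU architecture, and over time.
PRICE_PER_GB_SECOND = 0.0000166667   # assumed function compute price (USD)
PRICE_PER_MILLION_REQUESTS = 0.20    # assumed per-request price (USD)
SERVER_PRICE_PER_HOUR = 0.10         # assumed always-on VM price (USD)


def function_cost_per_month(seconds_per_day: float, memory_gb: float,
                            invocations_per_day: int, days: int = 30) -> float:
    """Pay-per-use cost: billed compute (GB-seconds) plus per-request fees."""
    gb_seconds = seconds_per_day * memory_gb * days
    requests = invocations_per_day * days
    return (gb_seconds * PRICE_PER_GB_SECOND
            + requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS)


def server_cost_per_month(days: int = 30) -> float:
    """Always-on cost: the server is billed for every hour, busy or idle."""
    return SERVER_PRICE_PER_HOUR * 24 * days


# The 10-seconds-per-day task from the text, assuming 1 GB of memory and one run per day.
serverless = function_cost_per_month(seconds_per_day=10, memory_gb=1.0, invocations_per_day=1)
always_on = server_cost_per_month()
print(f"serverless: ${serverless:.4f}/month, always-on: ${always_on:.2f}/month")
```

The gap narrows as utilization rises: a workload that keeps functions busy most of the day can cost more than a right-sized server, so the comparison is worth redoing with real prices and real usage.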

- Reduced Maintenance: Serverless platforms abstract away infrastructure management.

- You do not need to manage servers, operating systems, or patching. This reduces operational overhead and allows developers to focus on the core business logic of data processing.

- Faster Development Cycles: The ease of deployment and the ability to quickly iterate on code contribute to faster development cycles.

- Developers can rapidly deploy and test new features without the complexities of server management.

Comparison of Serverless Computing Platforms

Choosing the right serverless computing platform is crucial for the success of a data processing pipeline. The primary contenders—AWS Lambda, Azure Functions, and Google Cloud Functions—each possess unique strengths and weaknesses that make them suitable for different use cases.

| Feature | AWS Lambda | Azure Functions | Google Cloud Functions |
|---|---|---|---|
| Programming Languages Supported | Node.js, Python, Java, Go, C#, Ruby, PowerShell, and more. | C#, F#, Node.js, Python, Java, PowerShell, and more. | Node.js, Python, Go, Java, .NET, Ruby, PHP, and more. |
| Trigger Support | Amazon S3, Amazon DynamoDB, Amazon Kinesis, Amazon API Gateway, etc. | Azure Blob Storage, Azure Event Hubs, Azure Cosmos DB, HTTP triggers, etc. | Cloud Storage, Pub/Sub, Firestore, HTTP triggers, etc. |
| Concurrency Limits | Account-level concurrency quota per region (adjustable); reserved concurrency can cap or guarantee capacity for a function. | Scale-out limits depend on the hosting plan (e.g., Consumption vs. Premium) and are adjustable. | Configurable maximum instances per function; project-level quotas apply. |
| Integration with Other Services | Deep integration with other AWS services. | Strong integration with other Azure services. | Strong integration with other Google Cloud services. |
| Pricing | Pay-per-invocation and duration. Free tier available. | Pay-per-execution and duration. Free grant available. | Pay-per-execution and duration. Free tier available. |
| Cold Starts | Can occur, especially for infrequently invoked functions; provisioned concurrency keeps instances initialized. | Can occur on the Consumption plan; the Premium plan keeps pre-warmed instances. | Can occur; configuring minimum instances keeps some instances warm. |

- AWS Lambda:

- Strengths: Mature platform with a wide range of integrations with other AWS services. Large community support and extensive documentation.

- Weaknesses: Cold starts can add latency to infrequently invoked functions, and mitigations such as provisioned concurrency add cost.

- Azure Functions:

- Strengths: Strong integration with the Azure ecosystem, including services like Azure Blob Storage and Azure Event Hubs. Easy to use and deploy.

- Weaknesses: Scaling behavior, cold-start mitigation, and networking options vary by hosting plan, so choosing a plan adds an extra design decision.

- Google Cloud Functions:

- Strengths: Excellent integration with other Google Cloud services like Cloud Storage, Pub/Sub, and BigQuery. Minimum instances can be configured to reduce cold starts.

- Weaknesses: Smaller community and less mature than AWS Lambda in terms of ecosystem size.

Choosing the Right Technologies

Selecting the appropriate serverless technologies is paramount for building an efficient and cost-effective data processing pipeline. The choices made at this stage directly influence the pipeline’s performance, scalability, and overall operational costs. This section will delve into the criteria for selecting serverless components, specifically focusing on data ingestion, storage, and database technologies.

Data Ingestion Technologies Selection Criteria

The choice of a data ingestion service significantly impacts the pipeline’s ability to handle real-time data streams. Considerations include throughput requirements, data format, event volume, and integration with other services. Several serverless options exist, each with specific strengths and weaknesses.

- API Gateway: Ideal for handling HTTP requests and acting as a front door for incoming data. Suitable for data submitted via APIs, webhooks, or mobile applications.

- Kinesis (AWS): Designed for real-time data streaming and capable of ingesting high volumes of data. Records are opaque byte payloads, so any data format can be carried, and streams can feed consumers such as AWS Lambda for transformation and stream processing.

- Event Hubs (Azure): A highly scalable event ingestion service capable of receiving millions of events per second. It integrates well with other Azure services and supports various protocols.
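As a minimal sketch of pushing events into Kinesis from Python, the function below serializes an event as JSON and calls the boto3 Kinesis client's `put_record`. The client is passed in as a parameter so the function can be exercised without AWS access; the stream name and event fields in the usage note are hypothetical.

```python
import json
from typing import Any


def send_event(kinesis_client: Any, stream_name: str, event: dict, partition_key: str) -> str:
    """Serialize one event as JSON and write it to a Kinesis data stream.

    Records that share a partition key are routed to the same shard, which
    keeps them in order relative to each other.
    """
    response = kinesis_client.put_record(
        StreamName=stream_name,
        Data=json.dumps(event).encode("utf-8"),  # Kinesis stores raw bytes
        PartitionKey=partition_key,
    )
    return response["SequenceNumber"]
```

In a deployed producer you would pass a real client and stream, for example `send_event(boto3.client("kinesis"), "clickstream-events", {"user_id": "u-123", "action": "page_view"}, "u-123")`, where `clickstream-events` is a placeholder name. Using a user ID as the partition key keeps each user's events ordered.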

The selection process involves evaluating these factors:

- Data Volume and Velocity: High-volume, high-velocity data streams necessitate services designed for real-time processing, such as Kinesis or Event Hubs. API Gateway is suitable for lower-volume, request-driven data.

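- Data Format and Structure: The data format (e.g., JSON, CSV, binary) and record size affect the choice of service. Kinesis and Event Hubs treat each record as an opaque byte payload, so they can carry any format, but both limit the size of an individual record; API Gateway is best suited to request-style payloads such as JSON.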

- Real-time Processing Requirements: If data must be transformed or analyzed while it is being ingested, choose a streaming service that feeds a stream processor, such as Kinesis with AWS Lambda or Event Hubs with Azure Functions.

- Integration with Existing Systems: Consider which service best integrates with the existing cloud ecosystem and other services used in the data pipeline.

- Cost Optimization: Each service has a different pricing model. Consider the anticipated data volume and processing requirements to choose the most cost-effective option.
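For Kinesis Data Streams in provisioned capacity mode, the data volume and velocity criteria translate directly into a shard count, because each shard accepts up to 1 MB/s or 1,000 records/s of writes. The helper below is a rough sizing sketch; the `headroom` safety factor is an assumption of this example, and on-demand capacity mode removes the need for this calculation altogether.

```python
import math

# Per-shard write limits for Kinesis Data Streams in provisioned mode.
SHARD_WRITE_MB_PER_SEC = 1.0
SHARD_WRITE_RECORDS_PER_SEC = 1000


def shards_needed(records_per_sec: float, avg_record_kb: float, headroom: float = 1.0) -> int:
    """Estimate shards from the peak write rate; headroom > 1 adds a safety margin."""
    mb_per_sec = records_per_sec * avg_record_kb / 1024  # treat 1 MB as 1,024 KB
    by_bytes = math.ceil(mb_per_sec * headroom / SHARD_WRITE_MB_PER_SEC)
    by_count = math.ceil(records_per_sec * headroom / SHARD_WRITE_RECORDS_PER_SEC)
    return max(1, by_bytes, by_count)  # whichever limit binds first decides


print(shards_needed(records_per_sec=5000, avg_record_kb=2))
```

For 5,000 records per second averaging 2 KB, byte throughput (about 9.8 MB/s) dominates the record count (5,000/s needs only 5 shards), so the estimate is 10 shards.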

Storage Options Suitability

Choosing the correct storage solution is critical for data accessibility, durability, and cost optimization. The characteristics of the data, including its type, size, and access patterns, should guide the selection process. The following table provides an overview of common serverless storage options and their suitability.

| Storage Option | Data Types | Access Patterns | Suitability |
|---|---|---|---|
| S3 (AWS) | Any object (files, images, videos, logs) | High-throughput object reads and writes; frequent, infrequent, and archival access via storage classes | Excellent for storing large datasets, data lakes, and backups. Infrequent-access and archive storage classes reduce the cost of rarely read data. |
| Azure Blob Storage | Any object (files, images, videos, logs) | High-throughput object reads and writes; hot, cool, cold, and archive access tiers | Similar to S3. Suitable for storing large datasets, backups, and archival data within the Azure ecosystem. |
| Google Cloud Storage | Any object (files, images, videos, logs) | High-throughput object reads and writes; Standard, Nearline, Coldline, and Archive storage classes | Similar to S3 and Azure Blob Storage. Ideal for storing large datasets and backups within the Google Cloud ecosystem. |
| DynamoDB (AWS) | Structured data (key-value and document data) | Low-latency, high-throughput reads and writes | Suitable for applications requiring fast data access, such as session management, gaming leaderboards, and real-time applications. |
| Cosmos DB (Azure) | Multi-model (key-value, document, graph, column-family) | Low-latency, global distribution | Ideal for globally distributed applications requiring low-latency access to data. Supports various data models. |
| Cloud Firestore (Google Cloud) | Document data | Real-time updates, scalable | Suitable for mobile and web applications requiring real-time data synchronization and scalability. |
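When object storage backs a data lake, a common convention is to encode partitions in the object key as Hive-style `name=value` path segments, so that query engines such as Amazon Athena or BigQuery external tables can skip partitions a date filter rules out. The helper below is a sketch under that assumption; the dataset prefix and file name are hypothetical, and callers are expected to pass timezone-aware datetimes.

```python
from datetime import datetime, timezone


def partitioned_key(dataset: str, event_time: datetime, filename: str) -> str:
    """Build an object key with Hive-style date partitions (year=/month=/day=).

    Times are normalized to UTC so that producers in different time zones
    write the same event to the same partition.
    """
    t = event_time.astimezone(timezone.utc)
    return (f"{dataset}/year={t.year:04d}/month={t.month:02d}/"
            f"day={t.day:02d}/{filename}")


key = partitioned_key("raw/orders", datetime(2024, 3, 7, 15, 30, tzinfo=timezone.utc),
                      "batch-0001.json")
print(key)  # raw/orders/year=2024/month=03/day=07/batch-0001.json
```

The same key works unchanged for S3, Azure Blob Storage, and Google Cloud Storage, since all three treat `/` as an ordinary character in object names.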

Serverless Database Decision Tree

Selecting the appropriate serverless database depends on several factors, including data model, read/write patterns, consistency requirements, and scalability needs. A decision tree can help guide the selection process.

- Data Model:

- Is the data primarily key-value or document-based? If yes, proceed to DynamoDB or Cloud Firestore.

- Is the data multi-model (key-value, document, graph, etc.)? If yes, consider Cosmos DB.

- Read/Write Patterns:

- Are low-latency reads and writes critical? If yes, consider DynamoDB or Cosmos DB.

- Are real-time updates and data synchronization required? If yes, Cloud Firestore is a good choice.

- Consistency Requirements:

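- Does the application require strong consistency? DynamoDB supports strongly consistent reads on request (reads are eventually consistent by default), Cloud Firestore provides strong consistency, and Cosmos DB offers a strong consistency level.

- Does the application tolerate eventual consistency? Cosmos DB lets you trade consistency for latency through five consistency levels ranging from strong to eventual, and DynamoDB's default eventually consistent reads cost less than strongly consistent ones.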

- Scalability Needs:

- Does the application require global distribution and low-latency access? Cosmos DB is well-suited for global scale.

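- Is the application primarily regional? DynamoDB and Cloud Firestore both scale well within a single region, and both offer multi-region options (DynamoDB global tables, Firestore multi-region locations) if requirements grow.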

This decision tree provides a structured approach for choosing the optimal serverless database based on the application’s specific needs. For instance, a mobile gaming application requiring real-time leaderboards and low-latency reads would likely benefit from using DynamoDB. Conversely, a globally distributed social media application requiring real-time data synchronization and supporting various data models might benefit from Cosmos DB.
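The decision tree can also be written as a small function, which makes the order of the questions explicit. This is a simplified, hypothetical encoding: it checks the most restrictive requirements first and ignores factors such as the team's existing cloud provider, which in practice often settles the question.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    multi_model: bool = False          # graph or column-family data alongside documents
    global_distribution: bool = False  # low-latency access from many regions
    realtime_sync: bool = False        # clients must receive live updates


def choose_database(req: Requirements) -> str:
    """Walk the decision tree, most restrictive requirement first."""
    if req.multi_model or req.global_distribution:
        return "Cosmos DB"
    if req.realtime_sync:
        return "Cloud Firestore"
    # Key-value/document data with low-latency regional reads and writes.
    return "DynamoDB"


# Gaming leaderboard: low-latency reads, no client-side sync, single region.
print(choose_database(Requirements()))  # DynamoDB
# Global social media app with several data models and live updates.
print(choose_database(Requirements(multi_model=True, global_distribution=True,
                                   realtime_sync=True)))  # Cosmos DB
```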

Data Ingestion and Transformation

Data ingestion and transformation are critical phases in a serverless data processing pipeline. These steps ensure data from various sources is reliably collected and prepared for subsequent analysis and storage. This involves handling diverse data formats, real-time data streams, and batch uploads, alongside cleaning, filtering, and enriching the data to improve its quality and usefulness.

Methods for Data Ingestion

Data ingestion strategies must accommodate diverse data sources and ingestion frequencies. These methods are essential for building robust and scalable data pipelines.

- Real-time Stream Ingestion: This involves capturing data as it’s generated, offering low-latency processing capabilities. This is vital for applications that demand immediate insights.

- Batch Uploads: This involves ingesting data in bulk, typically from files or databases. This method is suitable for periodic data updates and historical data processing.

Real-time stream ingestion often employs streaming and messaging services such as Amazon Kinesis, Azure Event Hubs, or Google Cloud Pub/Sub. These services facilitate the ingestion of high-volume, real-time data streams from sources like IoT devices, social media feeds, or application logs. Batch uploads typically leverage cloud storage services such as Amazon S3, Azure Blob Storage, or Google Cloud Storage, along with mechanisms like scheduled jobs or API endpoints to trigger data ingestion.

For example, a company that tracks customer interactions may ingest data in real-time using Amazon Kinesis, while it uses batch uploads to load historical sales data from a CRM system into the data processing pipeline.
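High-volume producers usually send records in batches rather than making one call per event. The Kinesis `PutRecords` API accepts at most 500 records and 5 MB per request, so a producer has to split its records accordingly. The generator below is a sketch of that splitting; it only groups `(data, partition_key)` pairs and leaves the API call to the caller.

```python
from typing import Iterable, Iterator, List, Tuple

MAX_RECORDS_PER_CALL = 500            # PutRecords limit on records per request
MAX_BYTES_PER_CALL = 5 * 1024 * 1024  # PutRecords limit on total request payload

Record = Tuple[bytes, str]            # (data, partition_key)


def batch_records(records: Iterable[Record]) -> Iterator[List[Record]]:
    """Group records into batches that respect both PutRecords limits."""
    batch: List[Record] = []
    batch_bytes = 0
    for data, key in records:
        # Partition keys count toward the request size as well as the data.
        size = len(data) + len(key.encode("utf-8"))
        if batch and (len(batch) == MAX_RECORDS_PER_CALL
                      or batch_bytes + size > MAX_BYTES_PER_CALL):
            yield batch
            batch, batch_bytes = [], 0
        batch.append((data, key))
        batch_bytes += size
    if batch:
        yield batch
```

Each batch can then be sent with `client.put_records(StreamName=..., Records=[{"Data": d, "PartitionKey": k} for d, k in batch])`. `PutRecords` can partially succeed, so check `FailedRecordCount` in the response and resend only the failed entries.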

Data Transformation with Serverless Functions

Serverless functions provide a flexible and scalable approach to data transformation. These functions can be triggered by events, such as the arrival of a new file in a cloud storage bucket or a new message in a message queue, allowing for automated data processing.

- Cleaning: This involves removing or correcting errors, inconsistencies, and missing values within the data.

- Filtering: This process involves selecting a subset of data based on specific criteria, such as removing irrelevant records or selecting data within a specific time range.

- Enrichment: This involves adding additional information to the data, such as looking up related data from other sources or performing calculations.

Serverless functions, such as AWS Lambda (Python, Node.js), Azure Functions (Python, Node.js), or Google Cloud Functions (Python, Node.js), provide the execution environment for data transformation logic. The choice of language and platform depends on factors like existing skill sets, performance requirements, and integration with other cloud services. For instance, cleaning a dataset might involve removing rows with missing values or correcting data type inconsistencies.

Filtering could involve selecting data within a specific date range or filtering out records based on specific criteria. Enrichment might include looking up customer information from a CRM system to add customer details to transaction data.
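Enrichment is often a lookup join. The sketch below attaches customer details to transactions, using an in-memory dictionary as a stand-in for the CRM lookup; the field names `txn_id`, `customer_id`, and `customer` are hypothetical. Keeping transformation logic in plain functions like this, separate from the function handler, also makes it easy to unit test.

```python
from typing import Dict, List, Optional


def enrich_transactions(transactions: List[dict],
                        customers: Dict[str, dict]) -> List[dict]:
    """Return copies of the transactions with a `customer` field added.

    `customers` maps customer_id -> details and stands in for a CRM lookup.
    Unknown customers get `customer: None`, so no transaction is silently dropped.
    """
    enriched = []
    for txn in transactions:
        details: Optional[dict] = customers.get(txn.get("customer_id"))
        enriched.append({**txn, "customer": details})  # copy; input is not mutated
    return enriched


transactions = [
    {"txn_id": "t1", "customer_id": "c1", "amount": 42.0},
    {"txn_id": "t2", "customer_id": "c9", "amount": 7.5},
]
customers = {"c1": {"name": "Ada", "segment": "enterprise"}}
for row in enrich_transactions(transactions, customers):
    print(row)
```

In a real pipeline the lookup would typically be a cached read from the CRM's API or a replicated table, fetched once per batch rather than once per record.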

Code Snippets for Data Transformation (Python and AWS Lambda)

The following Python code snippet demonstrates a simple data transformation using AWS Lambda, processing data from an Amazon S3 bucket. The code performs basic cleaning, filtering, and enrichment operations.

```python
import json
import urllib.parse

import boto3

s3 = boto3.client("s3")

OUTPUT_PREFIX = "transformed/"


def lambda_handler(event, context):
    # Retrieve the bucket name and file key from the S3 event notification.
    # Object keys arrive URL-encoded (e.g., spaces become "+"), so decode them.
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])

    # Skip this function's own output; otherwise writing back to the same
    # bucket could re-trigger the function in a loop.
    if key.startswith(OUTPUT_PREFIX):
        return {"statusCode": 200, "body": json.dumps("Skipped transformed output")}

    try:
        # Download the file from S3 and parse it as a JSON array of items
        response = s3.get_object(Bucket=bucket, Key=key)
        content = response["Body"].read().decode("utf-8")
        data = json.loads(content)

        # Data Cleaning (Example: removing items with a missing 'price' value)
        cleaned_data = [item for item in data if item.get("price") is not None]

        # Data Filtering (Example: selecting items with a price greater than 10)
        filtered_data = [item for item in cleaned_data if item["price"] > 10]

        # Data Enrichment (Example: adding a 'discount' field if the price is greater than 50)
        for item in filtered_data:
            if item["price"] > 50:
                item["discount"] = item["price"] * 0.1  # Apply a 10% discount

        # Convert the transformed data back to JSON
        transformed_data = json.dumps(filtered_data, indent=4)

        # Upload the transformed data under a separate prefix (or to a different bucket)
        s3.put_object(Bucket=bucket, Key=f"{OUTPUT_PREFIX}{key}", Body=transformed_data)

        return {"statusCode": 200, "body": json.dumps("Data transformation successful!")}
    except Exception as e:
        print(f"Error processing file: {key} from bucket: {bucket}. Error: {e}")
        raise  # Re-raise so the invocation is marked as failed
```

This code snippet illustrates the fundamental steps involved in data transformation within a serverless environment. It demonstrates the use of Python, AWS Lambda, and Amazon S3 for data ingestion, processing, and output. The function is triggered by an S3 event (e.g., a new file upload), downloads the data, performs cleaning, filtering, and enrichment operations, and then uploads the transformed data back to S3 under a `transformed/` prefix. Because the output lands in the same bucket, the S3 trigger should also be configured to exclude that prefix; the guard at the top of the handler is a second line of defense against the function re-triggering itself.

This approach provides scalability, cost-efficiency, and ease of maintenance.

Orchestration and Workflow Management

Serverless data processing pipelines, while offering scalability and cost-effectiveness, often involve multiple steps and services. Coordinating these individual components effectively is crucial for a reliable and efficient data flow. Orchestration services manage these workflows, ensuring that each step executes in the correct order, that errors are handled gracefully, and that executions can be monitored and audited. This section examines orchestration services and walks through building a basic data processing workflow.

Orchestration services act as the central nervous system of a serverless data pipeline, providing the logic to coordinate the execution of various functions and services.

They define the sequence of operations, manage dependencies, and handle error conditions. This approach simplifies the management of complex data processing tasks and allows for greater control and visibility over the pipeline’s operation.

Demonstration of Orchestration Services

Several cloud providers offer robust orchestration services, each with its own features and capabilities. The choice of orchestration service depends on factors such as the cloud platform in use, the complexity of the pipeline, and specific requirements. Prominent examples include:

- AWS Step Functions: A fully managed, serverless orchestration service from Amazon Web Services (AWS). Step Functions lets users define workflows as state machines, which execute according to predefined states and transitions. It supports patterns such as parallel execution, error handling, and conditional branching.

- Azure Logic Apps: A cloud service from Microsoft Azure that provides a visual designer for building automated workflows. Logic Apps integrates with many services through connectors, enabling workflows that connect different applications and data sources. Workflow logic is defined with triggers, actions, and conditions.

- Google Cloud Workflows: A fully managed workflow orchestration service from Google Cloud Platform (GCP). Workflows are defined in YAML or JSON files, providing flexibility and control over the workflow's structure and behavior, with support for error handling, retries, and monitoring.

These services share common functionality, including the ability to define workflows, manage dependencies, handle errors, and monitor execution. The choice between them depends on the cloud platform and the requirements of the data processing pipeline.
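To illustrate the state-machine model used by Step Functions, the sketch below builds an Amazon States Language definition for a three-step extract, transform, load workflow in Python and prints it as JSON. Each Task state invokes a Lambda function, retries transient Lambda service errors with exponential backoff, and routes any remaining error to a Fail state. The function names are hypothetical, and the retry settings are illustrative rather than recommended values.

```python
import json
from typing import Optional

# Retry transient Lambda service errors with exponential backoff (2s, 4s, 8s).
LAMBDA_RETRY = [{
    "ErrorEquals": [
        "Lambda.ServiceException",
        "Lambda.AWSLambdaException",
        "Lambda.SdkClientException",
        "Lambda.TooManyRequestsException",
    ],
    "IntervalSeconds": 2,
    "MaxAttempts": 3,
    "BackoffRate": 2.0,
}]
# Any error still unhandled after retries goes to the Fail state.
CATCH_ALL = [{"ErrorEquals": ["States.ALL"], "Next": "PipelineFailed"}]


def lambda_task(function_name: str, next_state: Optional[str]) -> dict:
    """Build a Task state that invokes a Lambda function with the state input."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",  # pass only the function's return value onward
        "Retry": LAMBDA_RETRY,
        "Catch": CATCH_ALL,
    }
    if next_state is None:
        state["End"] = True
    else:
        state["Next"] = next_state
    return state


definition = {
    "Comment": "Extract, transform, and load an uploaded CSV file",
    "StartAt": "Extract",
    "States": {
        # Hypothetical function names; substitute real names or ARNs.
        "Extract": lambda_task("extract-csv", "Transform"),
        "Transform": lambda_task("transform-records", "Load"),
        "Load": lambda_task("load-warehouse", None),
        "PipelineFailed": {"Type": "Fail", "Error": "PipelineError",
                           "Cause": "A step failed after all retries"},
    },
}

print(json.dumps(definition, indent=2))
```

The printed JSON could be supplied as the `definition` argument of the Step Functions `CreateStateMachine` API (for example through boto3's `create_state_machine`), together with an IAM role that is allowed to invoke the three functions.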

Building a Simple Data Processing Workflow

A fundamental data processing workflow involves receiving data, transforming it, and storing the processed results. The following steps illustrate a simple workflow that can be implemented using an orchestration service. This example uses a conceptual cloud environment, but the principles apply to any of the previously mentioned services.

To illustrate the process, consider a pipeline that ingests CSV files from a cloud storage bucket, transforms the data, and stores the processed results in a data warehouse.

The workflow can be broken down into the following steps:

- Trigger: The workflow is initiated when a new CSV file is uploaded to a designated cloud storage bucket. The orchestration service is configured to monitor the bucket for new file uploads.
  - Trigger type: Object creation in cloud storage (e.g., AWS S3, Azure Blob Storage, Google Cloud Storage).
  - Action: The orchestration service receives an event notification when a new file is uploaded.
  - Condition: The event triggers only for files matching a specific naming convention (e.g., files ending in ".csv").

- Step 1: Data Extraction: A serverless function (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) is invoked to extract the data from the uploaded CSV file. This function reads the file content and prepares it for transformation.
  - Action: The function reads the CSV file from the storage bucket.
  - Condition: The function executes after the trigger event is received.
  - Output: The function returns the data in a structured format, such as JSON.

- Step 2: Data Transformation: Another serverless function is invoked to transform the extracted data. This function performs operations such as data cleaning, type conversion, and aggregation.
  - Action: The function receives the structured data from the previous step.
  - Condition: The function executes after the data extraction step completes successfully.
  - Output: The function returns the transformed data.

- Step 3: Data Loading: A final serverless function is invoked to load the transformed data into a data warehouse or a data lake.
  - Action: The function receives the transformed data.
  - Condition: The function executes after the data transformation step completes successfully.
  - Output: The transformed data is stored in the data warehouse.

- Error Handling: If any step fails, the orchestration service can handle the error by retrying the failed step, sending notifications, or taking other corrective actions.
  - Action: The orchestration service monitors the execution of each step.
  - Condition: If a step fails, the service triggers error handling logic.
  - Output: Error notifications are sent, and the failed step may be retried.

This workflow demonstrates the basic principles of orchestrating a serverless data processing pipeline. Each step is executed in a defined sequence, with dependencies managed by the orchestration service. This approach ensures that data is processed reliably and efficiently, while the serverless nature of the underlying functions provides scalability and cost-effectiveness. The use of error handling mechanisms further enhances the robustness of the pipeline.
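The three processing steps can be sketched as plain Python functions that an orchestrator would run in sequence, passing each step's output to the next. This is a local simulation under stated assumptions: the CSV columns `region` and `amount` are hypothetical, the transform step aggregates totals per region, and an in-memory dictionary stands in for the data warehouse. In a deployed pipeline each function would be a separate serverless function, and the orchestration service would handle sequencing and retries.

```python
import csv
import io
from typing import Dict, List


def extract(csv_text: str) -> List[Dict[str, str]]:
    """Step 1: parse CSV text into a list of row dictionaries (JSON-friendly)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: List[Dict[str, str]]) -> Dict[str, float]:
    """Step 2: clean (skip rows without an amount), convert types, aggregate by region."""
    totals: Dict[str, float] = {}
    for row in rows:
        amount = (row.get("amount") or "").strip()
        if not amount:
            continue  # cleaning: drop incomplete rows
        region = row["region"].strip().lower()  # normalize "EU" and "eu"
        totals[region] = totals.get(region, 0.0) + float(amount)
    return totals


def load(totals: Dict[str, float], warehouse: Dict[str, float]) -> int:
    """Step 3: upsert totals into the stand-in warehouse; returns rows written."""
    warehouse.update(totals)
    return len(totals)


def run_pipeline(csv_text: str, warehouse: Dict[str, float]) -> int:
    """Run the steps in order, as the orchestrator would; an exception stops the run."""
    return load(transform(extract(csv_text)), warehouse)


sample = "region,amount\nEU,10.5\nUS,\neu,4.5\nUS,7\n"
warehouse: Dict[str, float] = {}
print(run_pipeline(sample, warehouse), warehouse)
```

Because `load` upserts totals by key, running it again after a retry leaves the warehouse in the same state, which is the idempotency property discussed under error handling.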

Error Handling and Monitoring

Robust error handling and comprehensive monitoring are critical components of a successful serverless data processing pipeline. The inherent distributed nature of serverless architectures introduces complexities in identifying, diagnosing, and resolving issues. Without proactive measures, even minor failures can cascade, leading to data loss, processing delays, and ultimately, business disruptions. Implementing effective error handling and monitoring strategies ensures the pipeline’s reliability, maintainability, and ability to meet Service Level Agreements (SLAs).

Identifying Common Failure Points

Serverless data processing pipelines are susceptible to failures across various stages. Understanding these potential failure points is the first step towards building a resilient system.

- Data Ingestion Failures: These can occur during the initial data capture and arrival in the pipeline. Potential causes include:

- Network connectivity issues between data sources and ingestion services (e.g., S3, Kinesis).

- Incorrectly formatted data or data schema violations.

- Rate limiting or throttling imposed by the data source or ingestion service.

- Insufficient capacity in the ingestion service to handle the incoming data volume.

- Transformation Failures: Data transformation logic, often implemented using functions (e.g., AWS Lambda, Azure Functions), can fail for a variety of reasons:

- Code errors within the transformation functions (e.g., null pointer exceptions, division by zero).

- Dependency issues, such as missing libraries or incorrect versions.

- Resource exhaustion, such as exceeding memory or execution time limits.

- Data corruption or inconsistencies during transformation.

- Orchestration Failures: The orchestration layer (e.g., AWS Step Functions, Azure Durable Functions) is responsible for managing the workflow. Failures can stem from:

- Configuration errors in the workflow definition.

- Service outages of the underlying orchestration platform.

- Exceeding the maximum execution time or state limits.

- Incorrect handling of task failures or timeouts.

- Data Storage Failures: Data storage services (e.g., S3, Azure Blob Storage, databases) can experience issues:

- Storage capacity limitations.

- Service outages or performance degradation.

- Data corruption during write operations.

- Incorrect permissions or access restrictions.

- External Service Failures: Pipelines often interact with external services (e.g., APIs, databases). Failures can arise from:

- Service outages or performance degradation of the external service.

- API rate limiting.

- Network connectivity problems between the pipeline and the external service.

- Authentication or authorization issues.

Implementing Robust Error Handling and Retry Mechanisms

Effective error handling and retry mechanisms are essential for mitigating failures and ensuring data processing continues uninterrupted. The approach should be tailored to the specific failure scenario.

- Idempotency: Ensure that operations can be executed multiple times without unintended side effects. This is crucial for retries. For example, writing a record to a database should be designed to handle duplicate writes gracefully.

- Retry Strategies: Implement retry logic with appropriate backoff strategies to handle transient failures.

- Exponential Backoff: Gradually increase the delay between retries, starting with a short delay and doubling it with each subsequent attempt. This helps to avoid overwhelming a failing service. The formula is often expressed as:

$$\text{RetryDelay} = \text{Base} \times 2^{\,\text{Attempt} - 1} + \text{Random}(0, \text{Jitter})$$

where `Base` is the initial delay, `Attempt` is the retry number (starting at 1), and `Jitter` introduces a small random delay to avoid retry storms.

- Fixed Delay: Retry after a fixed interval. This is suitable for situations where the issue is expected to resolve within a known timeframe.

- Circuit Breaker: Implement a circuit breaker pattern to stop retrying indefinitely when a service is unavailable. If a service fails repeatedly, the circuit breaker “opens,” and subsequent requests are rejected immediately without calling the service. After a cooldown period, the breaker becomes “half-open” and lets a trial request through; if that request succeeds, the breaker “closes” and normal traffic resumes, otherwise it opens again.


- Dead-Letter Queues (DLQs): Configure DLQs to store messages that still fail after multiple retries. This allows for manual inspection and reprocessing of problematic data. Common examples include Amazon SQS dead-letter queues and Azure Service Bus dead-letter queues.

- Exception Handling in Code: Within transformation functions, use try-catch blocks to handle exceptions gracefully. Log the errors with sufficient context to aid in debugging.

- Example (Python using AWS Lambda):

```python
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def process_data(event):
    # Hypothetical placeholder: your data transformation logic goes here.
    return event


def lambda_handler(event, context):
    try:
        result = process_data(event)
        return result
    except Exception as e:
        # Log the error with its traceback for debugging
        logger.error(f"An error occurred: {e}", exc_info=True)
        # Optionally, send the error to a DLQ or trigger an alert
        raise  # Re-raise the exception to trigger retries (if configured)
```

- Transaction Management: For operations involving multiple steps, consider using transactions (where supported by the services) to ensure atomicity and data consistency. If one step fails, the entire transaction rolls back.
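The sketch below implements exponential backoff with jitter following the retry-delay formula, plus a minimal circuit breaker. Assumptions of this example: the exponential part of the delay is capped at `cap` seconds (the formula itself has no cap), only `ConnectionError` and `TimeoutError` are treated as retryable, and the breaker allows one trial call after the cooldown (the “half-open” state). `sleep`, `rand`, and `clock` are injectable so the logic can be tested deterministically.

```python
import random
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def backoff_delay(attempt: int, base: float = 0.5, jitter: float = 0.1, cap: float = 30.0,
                  rand: Callable[[float, float], float] = random.uniform) -> float:
    """Base * 2**(attempt - 1), capped at `cap`, plus Random(0, jitter); attempt starts at 1."""
    return min(cap, base * 2 ** (attempt - 1)) + rand(0, jitter)


def retry(operation: Callable[[], T], max_attempts: int = 4,
          retryable: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying transient errors with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error (e.g., so the input lands in a DLQ)
            sleep(backoff_delay(attempt))
    raise RuntimeError("unreachable")


class CircuitBreaker:
    """Open after `threshold` consecutive failures; after `cooldown` seconds,
    let one trial call through (half-open) and close again if it succeeds."""

    def __init__(self, threshold: int = 5, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.cooldown:
            raise RuntimeError("circuit open: request rejected without calling the service")
        try:
            result = operation()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many consecutive failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```

Retries and the breaker compose naturally: wrapping `breaker.call(...)` inside `retry(...)` means that once the circuit opens, the remaining attempts fail fast with `RuntimeError`, which is not in the retryable set, instead of hammering the failing service.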

Monitoring Pipeline Performance and Logging

Comprehensive monitoring and logging are essential for gaining insights into pipeline performance, identifying bottlenecks, and troubleshooting issues.

- Metrics to Track: Monitor key performance indicators (KPIs) to assess the pipeline’s health and efficiency.

- Execution Time: Measure the duration of each stage and the overall pipeline execution time. Identify slow-running tasks.

- Success/Failure Rates: Track the percentage of successful and failed executions for each stage and the overall pipeline.

- Error Counts: Monitor the number of errors, categorized by type and source.

- Data Volume: Measure the amount of data processed at each stage. Track data ingestion rates and transformation throughput.

- Resource Utilization: Monitor resource consumption (e.g., CPU, memory, network) of the functions and services used by the pipeline.

- Queue Lengths: Monitor the number of messages in queues (e.g., SQS, Kafka) to identify backlogs and potential performance issues.

- Logging: Implement structured logging to capture relevant information for debugging and analysis.

- Log Levels: Use appropriate log levels (e.g., DEBUG, INFO, WARNING, ERROR) to categorize log messages.

- Contextual Information: Include relevant context in log messages, such as:

- Unique identifiers for each execution (e.g., request IDs).

- Timestamps.

- Input data.

- Output data.

- Function names and versions.

- Structured Logging Formats: Use structured logging formats (e.g., JSON) to facilitate parsing and analysis.

- Tools to Use: Leverage cloud provider-specific monitoring and logging services.

- CloudWatch (AWS): Provides logging, monitoring, and alerting capabilities. Use CloudWatch Logs to collect and analyze logs from functions and services. Use CloudWatch Metrics to create dashboards and track KPIs. Create alarms to notify you of critical events.

- Azure Monitor (Azure): Offers similar functionality to CloudWatch, including log collection, metric monitoring, and alerting. Use Azure Log Analytics to analyze logs. Use Azure dashboards to visualize performance.

- Google Cloud Monitoring (GCP): Provides comprehensive monitoring and logging services, including Cloud Logging and Cloud Monitoring. Use Cloud Logging to collect and analyze logs. Use Cloud Monitoring to create dashboards and set up alerts.

- Third-Party Tools: Consider using third-party monitoring and logging tools (e.g., Datadog, New Relic, Splunk) for advanced features, such as distributed tracing and application performance monitoring (APM).

- Alerting: Configure alerts to be notified of critical events, such as:

- High error rates.

- Long execution times.

- Resource exhaustion.

- Pipeline failures.

Alerts should be routed to appropriate channels (e.g., email, Slack, PagerDuty) and include sufficient context for rapid investigation and resolution.
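The metrics and alert conditions above can be sketched as a small aggregation step. This is a minimal illustration that assumes a hypothetical Execution record for each stage run and uses hand-picked thresholds. In practice, a managed service such as CloudWatch, Azure Monitor, or Google Cloud Monitoring computes these metrics and evaluates the alarms for you.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Execution:
    """One stage execution record (hypothetical shape)."""
    stage: str
    duration_ms: float
    records: int
    error_type: Optional[str] = None  # None means the execution succeeded


def summarize(executions: list[Execution]) -> dict:
    """Compute error rate, error counts by type, data volume, and p95 duration."""
    total = len(executions)
    failures = [e for e in executions if e.error_type is not None]
    durations = sorted(e.duration_ms for e in executions)
    # Nearest-rank 95th percentile
    p95 = durations[math.ceil(95 * total / 100) - 1] if total else 0.0
    return {
        "error_rate": len(failures) / total if total else 0.0,
        "errors_by_type": dict(Counter(e.error_type for e in failures)),
        "records_processed": sum(e.records for e in executions if e.error_type is None),
        "p95_duration_ms": p95,
    }


def evaluate_alerts(summary: dict, thresholds: dict[str, float]) -> list[str]:
    """Return one message for every metric that exceeds its threshold."""
    return [
        f"{name}={summary[name]} exceeds {limit}"
        for name, limit in thresholds.items()
        if name in summary and summary[name] > limit
    ]


runs = [Execution("transform", 100.0 + i, 10) for i in range(18)]
runs += [Execution("transform", 500.0, 0, "TimeoutError"), Execution("load", 50.0, 0, "ValueError")]
summary = summarize(runs)
print(summary)
print(evaluate_alerts(summary, {"error_rate": 0.05, "p95_duration_ms": 2000.0}))
```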

- Dashboards: Create dashboards to visualize pipeline performance and health. Dashboards should display key metrics and allow for drill-down analysis.
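Dashboards and alerts are only as useful as the logs behind them. The structured-logging practice described earlier (JSON output, log levels, and contextual fields) can be sketched with Python's standard logging module. The "context" key passed through extra, the logger name, and the field names are conventions assumed for this sketch, not a standard.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Contextual fields supplied via extra={"context": {...}} (a convention of this sketch)
        entry.update(getattr(record, "context", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


logger = logging.getLogger("pipeline.transform")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the root logger also has a handler

# In AWS Lambda, context.aws_request_id would supply the request ID
logger.info(
    "batch transformed",
    extra={"context": {"request_id": "req-123", "function_version": "7", "records": 42}},
)
```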

Security Considerations

Securing serverless data processing pipelines is paramount to protect sensitive data, maintain data integrity, and ensure the availability of the pipeline. Serverless architectures, while offering scalability and cost-effectiveness, introduce unique security challenges due to their distributed nature and reliance on third-party services. A robust security strategy is essential to mitigate these risks and safeguard the data processing pipeline.

Security Best Practices for Serverless Data Processing Pipelines

Implementing robust security measures is crucial for protecting data and maintaining the integrity of serverless data processing pipelines. These best practices encompass various aspects, from infrastructure security to application-level controls.

- Least Privilege Access: Grant only the necessary permissions to each function, service, and user. This principle minimizes the potential impact of a security breach. For example, a function that transforms data should only have access to the specific data storage locations it needs and should not have administrative privileges.

- Input Validation and Sanitization: Validate and sanitize all incoming data to prevent injection attacks, such as SQL injection or cross-site scripting (XSS). This involves checking the data type, format, and range of the input to ensure it conforms to expected values.

- Encryption at Rest and in Transit: Encrypt sensitive data both when it is stored (at rest) and when it is being transmitted (in transit). This protects the data from unauthorized access, even if the storage or network is compromised. Utilize encryption provided by the cloud provider, such as AWS KMS for data at rest and TLS/SSL for data in transit.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities and assess the effectiveness of security controls. These assessments should be performed by qualified security professionals.

- Monitoring and Logging: Implement comprehensive monitoring and logging to detect suspicious activities, such as unauthorized access attempts or unusual data access patterns. Use centralized logging and monitoring tools to analyze logs and generate alerts.

- Security Patching and Updates: Keep all dependencies, libraries, and runtime environments up to date with the latest security patches and updates. Automate the patching process to minimize the risk of vulnerabilities.

- Network Security: Implement network security controls, such as firewalls and network access control lists (ACLs), to restrict access to the serverless functions and services. Configure these controls to allow only necessary traffic.

- Secure Configuration Management: Securely configure the serverless functions and services, including environment variables, secrets, and other sensitive information. Avoid hardcoding secrets in the code. Utilize secure secret management services, such as AWS Secrets Manager or Azure Key Vault.
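Two of the practices above, input validation and parameterized queries, can be sketched together. The order schema, the allowed currencies, and the amount range are hypothetical. sqlite3 stands in for whatever data store the pipeline writes to.

```python
import sqlite3

# Hypothetical schema for an incoming order record
SCHEMA = {"order_id": str, "amount": float, "currency": str}
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}


def validate(record: dict) -> dict:
    """Check type, format, and range; raise ValueError for anything unexpected."""
    for field, expected_type in SCHEMA.items():
        if not isinstance(record.get(field), expected_type):
            raise ValueError(f"{field} must be a {expected_type.__name__}")
    if not (record["order_id"].isalnum() and len(record["order_id"]) <= 32):
        raise ValueError("order_id must be 1-32 alphanumeric characters")
    if not 0 < record["amount"] <= 1_000_000:
        raise ValueError("amount out of range")
    if record["currency"] not in ALLOWED_CURRENCIES:
        raise ValueError("unsupported currency")
    return record


def store(conn: sqlite3.Connection, record: dict) -> None:
    # Placeholders pass values separately from the SQL text, so input
    # is never interpreted as SQL (a defense against SQL injection).
    conn.execute(
        "INSERT INTO orders (order_id, amount, currency) VALUES (?, ?, ?)",
        (record["order_id"], record["amount"], record["currency"]),
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT, amount REAL, currency TEXT)")
store(conn, validate({"order_id": "A123", "amount": 19.99, "currency": "USD"}))
```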

Checklist for Securing Data at Rest and in Transit

Securing data at rest and in transit is critical for protecting sensitive information throughout the data processing pipeline. This checklist provides a structured approach to implement these security measures.

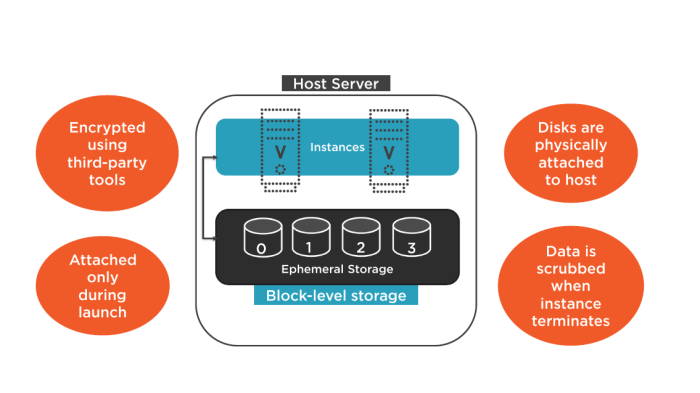

- Data at Rest Security:

- Encrypt data stored in databases, object storage (e.g., Amazon S3), and other data stores. Use encryption keys managed by a secure key management service.

- Implement access controls to restrict unauthorized access to data stores. Use IAM roles and policies to manage permissions.

- Regularly audit data storage configurations to ensure security best practices are followed.

- Data in Transit Security:

- Use TLS/SSL encryption for all data transmitted over the network. This ensures that data is encrypted during transit between components of the pipeline.

- Implement mutual TLS (mTLS) for enhanced security, requiring both the client and server to authenticate themselves.

- Use secure protocols for data transfer, such as HTTPS for API calls and SFTP for file transfers.

- Monitor network traffic for suspicious activity and potential security breaches.
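On the client side, the in-transit items in this checklist map onto Python's standard ssl module. The sketch below builds a context that does the following:

- verifies server certificates and hostnames,
- refuses protocol versions older than TLS 1.2,
- optionally presents a client certificate for mutual TLS.

The certificate and key paths are placeholders that you would supply.

```python
import ssl
from typing import Optional


def make_client_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that verifies the server and refuses old protocols."""
    # create_default_context() enables certificate and hostname verification
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        # For mutual TLS, also present a client certificate to the server
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


ctx = make_client_context()
```

You can pass the resulting context to standard-library clients, for example urllib.request.urlopen(url, context=ctx) or http.client.HTTPSConnection(host, context=ctx).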

IAM Roles and Permissions

Properly configuring IAM roles and permissions is fundamental to securing serverless data processing pipelines. An IAM role defines which actions a function or service can perform and which resources it can access. An incorrectly configured role can lead to unauthorized access and data breaches.

Apply the principle of least privilege: grant each function only the minimum permissions it needs, which limits the impact of a security compromise. For example, a data transformation function might need read access to an S3 bucket containing raw data and write access to an S3 bucket for processed data. The IAM role associated with this function should grant only those permissions, preventing it from accessing other resources or performing unauthorized actions. Regularly review and audit IAM role configurations to ensure they still follow least privilege and match the pipeline's current requirements.
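You can express the example role above as an IAM policy document. This sketch builds the policy as a Python dictionary so that it can be generated per environment. The bucket names are hypothetical.

```python
import json


def transform_function_policy(raw_bucket: str, processed_bucket: str) -> dict:
    """Least-privilege policy: read raw objects, write processed objects, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadRawData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{raw_bucket}/*"],
            },
            {
                "Sid": "WriteProcessedData",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{processed_bucket}/*"],
            },
        ],
    }


print(json.dumps(transform_function_policy("example-raw-data", "example-processed-data"), indent=2))
```

A real function role usually also needs permission to write its own logs, for example through the AWS managed policy AWSLambdaBasicExecutionRole. This sketch leaves that permission out.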

Cost Optimization Strategies

Optimizing the cost of a serverless data processing pipeline is crucial for achieving operational efficiency and maximizing the return on investment. Serverless platforms offer a pay-per-use model, making cost management a dynamic and ongoing process. Effective cost optimization requires a deep understanding of resource consumption patterns, pricing models, and available optimization techniques. This section will delve into the methods for estimating, reducing, and managing costs within a serverless data processing pipeline.

Estimating and Optimizing Pipeline Costs

Accurately estimating and subsequently optimizing the cost of a serverless data processing pipeline involves several key steps. These steps enable a data engineer to forecast expenses and proactively identify areas for cost reduction.

Initial cost estimation requires analyzing the anticipated workload characteristics and matching these to the pricing models of the serverless services employed. The following factors should be considered:

- Data Volume: The amount of data ingested, processed, and stored directly impacts costs. Higher data volumes typically lead to increased storage and processing charges.

- Processing Complexity: The intricacy of data transformations and calculations influences the compute time required, thereby affecting the cost of function invocations and related services.

- Concurrency: The number of concurrent function executions determines the resource provisioning needs and, consequently, the associated costs.

- Service-Specific Pricing: Each serverless service (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) has its pricing structure. Understanding these nuances is essential for accurate cost forecasting.

After initial estimation, continuous monitoring and refinement are essential. Cost optimization is not a one-time activity; it is an ongoing process of analysis and adjustment. To achieve this, implement the following:

- Resource Monitoring: Employ tools and services provided by the cloud provider to monitor resource utilization (CPU, memory, network) and function invocation metrics (duration, frequency).

- Cost Allocation Tags: Utilize cost allocation tags to categorize resources by project, team, or function. This enables granular cost tracking and attribution.

- Cost Forecasting: Leverage cost management tools to forecast future spending based on current consumption patterns and anticipated workload changes.

- Regular Audits: Conduct periodic audits of the pipeline to identify inefficiencies, unused resources, or areas where optimization can be applied.

Strategies for Reducing Costs

Several strategies can be employed to reduce the operational costs of a serverless data processing pipeline. These strategies encompass various aspects of the pipeline, from function configuration to data storage optimization.

Function memory allocation plays a significant role in cost management. Allocating the appropriate amount of memory to each function is crucial for optimizing performance and cost. Consider these aspects:

- Right-sizing Functions: Over-provisioning memory leads to unnecessary costs, while under-provisioning can result in performance degradation. The optimal memory allocation is determined by the function’s computational requirements.

- Performance Testing: Regularly benchmark functions with different memory configurations to identify the optimal balance between performance and cost.

- Monitoring Memory Utilization: Track memory utilization during function invocations. If memory usage is consistently low, consider reducing the allocated memory. Conversely, if functions are frequently hitting memory limits, increase the allocation.
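The right-sizing loop can be sketched as a small selection function. Given benchmark results for several memory sizes, it picks the cheapest configuration that still meets a latency target. The benchmark numbers and the price are hypothetical. Cost is estimated as memory in GB (MB / 1,024) × duration × price per GB-second, ignoring per-request charges.

```python
def pick_memory(benchmarks: dict[int, float], price_per_gb_second: float,
                max_duration_ms: float) -> int:
    """Return the cheapest memory size (MB) whose measured duration meets the target."""
    candidates = []
    for memory_mb, duration_ms in benchmarks.items():
        if duration_ms > max_duration_ms:
            continue  # too slow, regardless of cost
        # Cost per invocation = GB allocated x seconds run x price per GB-second
        cost = (memory_mb / 1024) * (duration_ms / 1000) * price_per_gb_second
        candidates.append((cost, memory_mb))
    if not candidates:
        raise ValueError("no memory size meets the latency target")
    return min(candidates)[1]


# Hypothetical average durations (ms) measured at each memory size (MB)
benchmarks = {128: 2400.0, 256: 1100.0, 512: 520.0, 1024: 300.0}
print(pick_memory(benchmarks, price_per_gb_second=0.0000002, max_duration_ms=1000))  # 512
```

Here, 512 MB wins because its shorter runtime more than offsets its larger allocation compared with 128 MB and 256 MB. Under this cost model, more memory is not automatically more expensive.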

Data storage optimization is another key area for cost reduction. The choice of storage technology and data management practices significantly impact storage costs. Consider the following:

- Data Compression: Compress data before storing it to reduce storage space and potentially lower the cost of data transfer.

- Data Tiering: Utilize different storage tiers (e.g., hot, cold, archive) based on data access frequency. Frequently accessed data can be stored in faster, more expensive tiers, while less frequently accessed data can be moved to lower-cost tiers.

- Data Lifecycle Management: Implement data lifecycle policies to automatically delete or archive data based on its age. This helps manage storage costs and ensures compliance with data retention policies.

- Storage Format: Choose storage formats optimized for serverless processing, such as Parquet or ORC, which are columnar formats and can lead to efficient data access and reduced storage footprint.
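Compression can be sketched with Python's gzip module: records are serialized as newline-delimited JSON and compressed before upload. The sample records are made up. Columnar formats such as Parquet or ORC require a third-party library (for example, pyarrow) and are not shown here.

```python
import gzip
import json


def to_gzipped_jsonl(records: list[dict]) -> bytes:
    """Serialize records as newline-delimited JSON and gzip-compress the result."""
    raw = "\n".join(json.dumps(r, separators=(",", ":")) for r in records).encode("utf-8")
    return gzip.compress(raw)


def from_gzipped_jsonl(blob: bytes) -> list[dict]:
    """Reverse of to_gzipped_jsonl."""
    return [json.loads(line) for line in gzip.decompress(blob).decode("utf-8").splitlines()]


# Hypothetical sample: repetitive event data like this typically compresses well
records = [{"order_id": f"A{i}", "status": "shipped", "amount": 19.99} for i in range(1000)]
blob = to_gzipped_jsonl(records)
uncompressed_size = len("\n".join(json.dumps(r, separators=(",", ":")) for r in records).encode("utf-8"))
print(uncompressed_size, len(blob))
```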

Furthermore, optimizing code and function execution is important for reducing costs. This includes:

- Code Optimization: Optimize function code for performance and efficiency. Minimize the execution time of functions to reduce compute costs.

- Efficient Libraries: Use optimized and lightweight libraries. Avoid loading unnecessary dependencies that can increase function size and execution time.

- Batch Processing: Whenever possible, process data in batches to reduce the number of function invocations and associated overhead.

- Idempotent Functions: Design functions to be idempotent, which means they can be safely executed multiple times without unintended side effects. This is crucial for handling retries and ensuring data consistency.
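Batching and idempotency work well together: each invocation handles a chunk of records, and a retried chunk must not be applied twice. The sketch below assumes that each event carries a unique id. The in-memory set stands in for a durable store, for example a table written with a conditional put keyed by event ID. A real pipeline would need such a store because function instances do not share memory.

```python
from typing import Iterator


def batches(items: list, size: int) -> Iterator[list]:
    """Yield fixed-size chunks so that one invocation handles many records."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


class IdempotentProcessor:
    """Skip events whose IDs have already been processed (in-memory stand-in)."""

    def __init__(self) -> None:
        self.seen: set[str] = set()
        self.results: list[int] = []

    def handle_batch(self, events: list[dict]) -> int:
        processed = 0
        for event in events:
            if event["id"] in self.seen:
                continue  # a retry delivered this event again; do nothing
            self.results.append(event["value"] * 2)  # placeholder transformation
            self.seen.add(event["id"])
            processed += 1
        return processed


events = [{"id": f"e{i}", "value": i} for i in range(10)]
proc = IdempotentProcessor()
print([proc.handle_batch(b) for b in batches(events, 4)])  # [4, 4, 2]
print(proc.handle_batch(events[:4]))  # 0, because the replayed batch is ignored
```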

Comparative Analysis of Pricing Models

Serverless platforms offer diverse pricing models, each with its characteristics. Understanding these models is essential for selecting the most cost-effective solution for a specific use case.

Common pricing models offered by serverless platforms include:

- Pay-per-Use: The most common model, where users pay for the actual resources consumed (e.g., compute time, data storage, network bandwidth). Pricing is typically based on factors such as function execution time, memory allocation, and the number of invocations.

- Free Tier: Most serverless platforms offer a free tier that provides a limited amount of free usage for certain services. This can be beneficial for development, testing, and low-volume production workloads.

- Reserved Instances/Capacity: Some platforms offer reserved instances or capacity options, allowing users to reserve resources at a discounted rate for a specific period. This can be cost-effective for predictable workloads.

- Provisioned Concurrency: This model allows users to pre-provision a certain number of concurrent function instances, ensuring low-latency performance and predictable costs.

The most appropriate pricing model depends on several factors, including workload characteristics, data volume, and performance requirements. To illustrate how a pay-per-use charge is calculated, consider the following scenario:

Scenario: A data processing pipeline processes 1 million events per day. Each event requires 100 milliseconds of compute time and 128 MB of memory (treated here as 0.128 GB). The pipeline is deployed on a serverless platform with a pay-per-use price of $0.0000002 per GB-second. The daily compute cost is calculated as follows:

$$\text{Total compute time} = 1{,}000{,}000 \text{ events} \times 0.1 \text{ s/event} = 100{,}000 \text{ s}$$

$$\text{Total GB-seconds} = 100{,}000 \text{ s} \times 0.128 \text{ GB} = 12{,}800 \text{ GB-s}$$

$$\text{Total cost} = 12{,}800 \text{ GB-s} \times \$0.0000002/\text{GB-s} = \$0.00256$$

In this scenario, the estimated daily compute cost is $0.00256. The actual bill can differ for several reasons:

- Many platforms also charge per invocation.
- Some platforms convert memory using 1 GB = 1,024 MB, so 128 MB counts as 0.125 GB.
- Other services in the pipeline are billed separately.

Evaluating these charges against the application's needs is what makes it possible to choose the most economical pricing model.
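The same arithmetic can be wrapped in a small helper for trying other workloads. This is a sketch under the scenario's assumptions. price_per_request is an extra optional parameter, not part of the scenario, for platforms that also bill per invocation; it defaults to zero.

```python
def daily_compute_cost(events_per_day: int, seconds_per_event: float, memory_gb: float,
                       price_per_gb_second: float, price_per_request: float = 0.0) -> float:
    """Pay-per-use cost: GB-seconds x unit price, plus an optional per-request charge."""
    gb_seconds = events_per_day * seconds_per_event * memory_gb
    return gb_seconds * price_per_gb_second + events_per_day * price_per_request


# Scenario inputs: 1M events/day, 0.1 s each, 0.128 GB, $0.0000002 per GB-second
cost = daily_compute_cost(1_000_000, 0.1, 0.128, 0.0000002)
print(f"${cost:.5f} per day")  # $0.00256 per day
```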

Scalability and Performance Tuning

Serverless architectures are inherently designed for scalability, adapting automatically to fluctuating workloads. However, optimizing performance within these environments requires a strategic approach, focusing on function design, resource allocation, and careful monitoring. Understanding the nuances of scaling and tuning is critical for building efficient and cost-effective serverless data processing pipelines.

Horizontal Scaling in Serverless Environments

Serverless platforms inherently scale horizontally. When the demand on a function increases, the platform automatically provisions more instances of that function to handle the load. This is a core benefit of serverless, eliminating the need for manual scaling and ensuring responsiveness. To illustrate horizontal scaling, consider the following conceptual model:

```
+-------------------+     +-------------------+     +------------+
| Incoming Requests |---->| Function Instance |---->| Processing |
+-------------------+     +-------------------+     +------------+
          |
          | (load increases)
          v
+-------------------+     +-------------------+     +------------+
| Incoming Requests |---->| Function Instance |---->| Processing |
+-------------------+     +-------------------+     +------------+
          |
          | (load increases)
          v
+-------------------+     +-------------------+     +------------+
| Incoming Requests |---->| Function Instance |---->| Processing |
+-------------------+     +-------------------+     +------------+
                                    ^
                                    |
                       +-----------------------+
                       | Platform Orchestrator |  (manages function instances)
                       +-----------------------+
```

This visual representation shows how a platform orchestrator manages multiple function instances.

As incoming requests increase, the orchestrator automatically spins up more function instances, and each instance independently processes a portion of the incoming requests. This parallel processing lets the system handle large request volumes without a corresponding slowdown, up to the platform's concurrency limits. However, newly started instances may add cold-start latency.
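A rough way to anticipate how far a function will scale out is Little's law: steady-state concurrency is approximately the request rate multiplied by the average duration. The load figures below are hypothetical. Compare the result with the platform's concurrency limit for your account or region.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Steady-state concurrent instances = arrival rate x average duration (Little's law)."""
    return math.ceil(requests_per_second * avg_duration_seconds)


# Hypothetical load: 200 requests/s, each taking 0.5 s on average
print(required_concurrency(200, 0.5))  # 100
```

Halving the average duration through the tuning techniques below halves the concurrency needed for the same load.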

Performance Tuning Strategies for Serverless Functions

Optimizing the performance of serverless functions involves several key strategies. These techniques aim to minimize latency, reduce execution time, and improve overall efficiency.

- Function Code Optimization: Writing efficient code is paramount. Minimizing code size, reducing dependencies, and optimizing algorithms directly impact function execution time. This includes using efficient data structures and algorithms, and avoiding unnecessary computations. For example, optimizing data transformations within a function can significantly reduce processing time.

- Memory and CPU Allocation: Serverless platforms let you configure the memory (and, on some platforms, the CPU) allocated to each function; AWS Lambda, for example, allocates CPU in proportion to the configured memory. Increasing memory can therefore improve performance, particularly for memory- or CPU-intensive operations. However, over-provisioning leads to higher costs without a corresponding performance gain. Monitor metrics such as memory utilization and CPU utilization to find the optimal balance.

- Cold Start Mitigation: Cold starts, where a function instance needs to be initialized, can introduce latency. Strategies to mitigate cold starts include:

- Provisioned Concurrency: Some platforms offer provisioned concurrency, which keeps a set number of function instances warm and ready to handle requests.

- Keep-alive Mechanisms: Implementing mechanisms to keep function instances active, such as scheduled invocations or pinging, can reduce the likelihood of cold starts.

- Efficient Data Access: Optimizing data access patterns is crucial for performance. This includes:

- Caching: Implementing caching mechanisms, such as using in-memory caches or content delivery networks (CDNs), can significantly reduce the need to repeatedly retrieve data from external sources.

- Database Optimization: Optimizing database queries and using appropriate indexing strategies can dramatically improve data retrieval performance.

- Asynchronous Processing: Employing asynchronous processing techniques, such as using message queues, can decouple tasks and improve responsiveness. Functions can publish tasks to a queue and return immediately, allowing other processes to handle the tasks in the background.

- Monitoring and Profiling: Continuous monitoring and profiling are essential for identifying performance bottlenecks. Tools that provide insights into function execution time, memory usage, and other key metrics are invaluable for optimization. Regularly reviewing function logs and traces helps to pinpoint areas for improvement.
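A common way to apply the caching advice is to keep expensive objects at module scope, because they persist across invocations while an instance stays warm. The sketch adds a time-to-live so that cached data is refreshed periodically. load_exchange_rates and handler are hypothetical stand-ins for a slow lookup and a real transformation.

```python
import time
from typing import Any, Callable

# Module-level state survives between invocations while an instance stays warm,
# so expensive setup (clients, connections, reference data) runs once per instance.
_CACHE: dict[str, tuple[float, Any]] = {}


def cached(key: str, loader: Callable[[], Any], ttl_seconds: float = 300.0) -> Any:
    """Return a cached value, reloading it once the TTL has expired."""
    now = time.monotonic()
    hit = _CACHE.get(key)
    if hit is not None and now - hit[0] < ttl_seconds:
        return hit[1]
    value = loader()
    _CACHE[key] = (now, value)
    return value


def load_exchange_rates() -> dict[str, float]:
    # Hypothetical stand-in for a slow call to a database or external API
    return {"EUR": 1.1, "GBP": 1.3}


def handler(event: dict, context: Any = None) -> float:
    rates = cached("exchange_rates", load_exchange_rates)
    return event["amount"] * rates[event["currency"]]


print(handler({"amount": 10.0, "currency": "EUR"}))
```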

Deployment and Versioning

Deploying a serverless data processing pipeline involves a series of orchestrated steps to move code from development to a production environment. Versioning is crucial for managing changes and ensuring stability, while a robust CI/CD pipeline automates the deployment process, minimizing errors and promoting efficient collaboration. This section details the key aspects of deployment and versioning within the context of serverless data processing.

Deployment Steps

Deploying a serverless data processing pipeline is a multi-stage process, starting with the packaging of code and configuration, followed by deployment to the cloud provider’s infrastructure, and concluding with verification and monitoring. Each step requires careful attention to detail to ensure a successful and reliable deployment.

- Code Packaging: This step involves preparing the code for deployment. Serverless functions, along with any dependencies (libraries, configuration files), are packaged into a deployable artifact, typically a ZIP file or container image. The specific method depends on the cloud provider and the programming language used. For example, in AWS, you might use the Serverless Application Model (SAM) to package your code.

- Infrastructure Provisioning: This involves defining and creating the necessary cloud resources, such as functions, triggers (e.g., S3 bucket events, API Gateway endpoints), databases, and monitoring tools. Infrastructure-as-Code (IaC) tools, like Terraform or AWS CloudFormation, are commonly used to automate this process, allowing for repeatable and consistent deployments.

- Configuration Management: Configuring environment-specific settings is a critical step. This includes setting environment variables, configuring security settings (e.g., IAM roles and permissions), and defining resource limits. Configuration management tools and services, such as AWS Systems Manager Parameter Store or Azure Key Vault, help manage sensitive data securely.

- Deployment to Cloud Provider: The packaged code and infrastructure configurations are deployed to the chosen cloud provider's platform, typically with the provider's CLI or SDK (or the IaC tool used for provisioning), which uploads the code and creates or updates the necessary resources. For instance, the AWS CLI command aws lambda update-function-code uploads a new deployment package to an existing AWS Lambda function.

- Testing and Verification: After deployment, rigorous testing is essential to ensure the pipeline functions correctly. This includes unit tests, integration tests, and end-to-end tests. Monitoring tools are used to track the pipeline’s performance, identify errors, and validate data transformations.

- Monitoring and Logging: Setting up comprehensive monitoring and logging is critical for the operational health of the pipeline. Cloud providers offer services like AWS CloudWatch, Azure Monitor, and Google Cloud Operations to collect and analyze logs, metrics, and traces. Alerts can be configured to notify operators of any issues or anomalies.
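The code-packaging step can be sketched with Python's zipfile module. The directory layout is assumed. The function places files at the archive root and skips __pycache__ directories; the ZIP should be written outside the source directory. Tools such as SAM (sam build) normally do this for you, including dependency installation.

```python
import tempfile
import zipfile
from pathlib import Path


def build_package(source_dir: str, output_zip: str) -> list[str]:
    """Zip every file under source_dir, with paths relative to the archive root."""
    src = Path(source_dir)
    names = []
    with zipfile.ZipFile(output_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(src.rglob("*")):
            if path.is_file() and "__pycache__" not in path.parts:
                arcname = path.relative_to(src).as_posix()
                zf.write(path, arcname)
                names.append(arcname)
    return names


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "src"
    src.mkdir()
    (src / "handler.py").write_text("def lambda_handler(event, context):\n    return event\n")
    print(build_package(str(src), str(Path(tmp) / "function.zip")))  # ['handler.py']
```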

Versioning Strategies for Serverless Functions

Versioning is vital for managing changes to serverless functions and allowing for rollback capabilities in case of issues. Several versioning strategies can be employed to manage function updates and maintain operational stability.

- Immutable Deployments: This approach involves deploying a new version of a function alongside the existing version. This ensures that the previous version remains available if the new version encounters problems. Traffic can then be gradually shifted to the new version.

- Traffic Splitting (Canary Releases): This strategy allows a small percentage of traffic to be directed to the new version of a function while the majority of traffic continues to use the existing version. If the new version performs well, the traffic is gradually increased. If issues arise, traffic can be quickly rolled back to the previous version. This is often implemented using routing features in API gateways or load balancers.

- Blue/Green Deployments: This involves maintaining two identical environments (blue and green). The green environment is the live production environment, and the blue environment is used for testing and deploying new versions. Once the new version in the blue environment is validated, traffic is switched to the blue environment, making it the new production environment.

- Semantic Versioning (SemVer): This is a widely adopted versioning scheme that uses a three-part version number (MAJOR.MINOR.PATCH) to indicate the nature of changes.

- MAJOR version increments indicate incompatible API changes.

- MINOR version increments indicate added functionality in a backward-compatible manner.

- PATCH version increments indicate backward-compatible bug fixes.
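The platform normally handles canary routing, for example through weighted aliases on AWS Lambda or canary settings on an API gateway. The underlying idea can still be sketched in a few lines. This hypothetical router hashes a request ID so that a stable percentage of requests goes to the new version, and the same ID always lands on the same version.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Send a stable subset of requests (0-100 percent) to the canary version."""
    # Hashing the ID rather than choosing at random keeps a given request
    # on the same version across retries.
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 100
    return "canary" if bucket < canary_percent else "stable"


# Start with 10% of traffic on the new version; raise the percentage as it proves healthy
print(route("order-42", 10))
```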

CI/CD Pipeline for Automated Deployments

A Continuous Integration/Continuous Deployment (CI/CD) pipeline automates the process of building, testing, and deploying code changes, which is essential for serverless data processing pipelines. It helps maintain code quality, reduce manual effort, and accelerate the release cycle.

- Code Repository: The CI/CD pipeline starts with a code repository (e.g., GitHub, GitLab, Bitbucket) where the source code is stored and version-controlled.

- Continuous Integration (CI): When code changes are pushed to the repository, the CI process is triggered. This typically involves:

- Build: Compiling the code and creating deployable artifacts.

- Testing: Running unit tests, integration tests, and other automated tests to verify code quality.

- Code Analysis: Static code analysis tools (e.g., SonarQube) can be used to check for code style violations, security vulnerabilities, and other issues.

- Continuous Deployment (CD): If the CI process is successful, the CD process is initiated. This involves:

- Deployment: Deploying the built artifacts and infrastructure configurations to the target environment (e.g., development, staging, production).

- Testing: Running end-to-end tests and performing other validation checks in the deployed environment.

- Monitoring and Alerting: Setting up monitoring and alerting to track the performance and health of the deployed pipeline.

- Automation Tools: CI/CD pipelines utilize various automation tools, such as Jenkins, GitLab CI, CircleCI, or AWS CodePipeline. These tools orchestrate the different stages of the pipeline and provide features like build automation, test execution, and deployment management.

- Benefits of CI/CD:

- Faster Release Cycles: Automating the deployment process allows for faster and more frequent releases.

- Improved Code Quality: Automated testing and code analysis help ensure code quality and reduce the risk of bugs.

- Reduced Risk: Automated deployments minimize the risk of human error.

- Increased Efficiency: Automating the deployment process reduces manual effort and frees up developers to focus on writing code.
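The unit-testing stage of CI can be illustrated with Python's built-in unittest module. The transform function is a hypothetical example of pipeline logic. In a CI job, the tests would run with a command such as python -m unittest, and a failing test would stop the pipeline before deployment.

```python
import unittest


def transform(record: dict) -> dict:
    """Hypothetical transformation under test: normalize currency and amount."""
    return {
        "order_id": record["order_id"],
        "currency": record["currency"].strip().upper(),
        "amount_cents": round(float(record["amount"]) * 100),
    }


class TransformTest(unittest.TestCase):
    def test_normalizes_fields(self):
        out = transform({"order_id": "A1", "currency": " usd ", "amount": "19.99"})
        self.assertEqual(out, {"order_id": "A1", "currency": "USD", "amount_cents": 1999})

    def test_missing_field_raises(self):
        with self.assertRaises(KeyError):
            transform({"order_id": "A1", "amount": "1.00"})
```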

Conclusion

In conclusion, the serverless data processing pipeline presents a compelling architecture for modern data workflows. By carefully considering the factors discussed – from technology selection and data transformation to security and cost optimization – organizations can build pipelines that are highly scalable, resilient, and cost-effective. The transition to serverless requires a shift in mindset, embracing event-driven architectures and the inherent benefits of cloud-native services.

As the landscape of serverless technologies continues to evolve, the ability to adapt and leverage these advancements will be critical for maintaining a competitive edge in the data-driven era.

Common Queries

What are the primary benefits of using a serverless approach for data processing?

Serverless offers significant advantages, including automatic scaling, reduced operational overhead (no server management), pay-per-use pricing, and increased agility, enabling faster development cycles and quicker time-to-market for data-driven applications.

How does serverless handle data security?

Serverless architectures rely on robust security features provided by cloud providers. This includes features like Identity and Access Management (IAM) roles, encryption at rest and in transit, and network security controls. Security best practices include using least-privilege access, regularly auditing access logs, and implementing data validation.

What are the main cost considerations when building a serverless data processing pipeline?

Cost optimization involves selecting the appropriate cloud services, optimizing function memory allocation, choosing the correct storage options, and monitoring resource usage. Pay-per-use pricing models and efficient resource utilization are key to controlling costs. Regularly review and optimize your pipeline’s resource consumption.

How can I monitor the performance of my serverless data processing pipeline?

Monitoring involves using logging, metrics, and tracing tools provided by the cloud provider. Key metrics include function execution time, invocation count, error rates, and data processing throughput. Implementing alerts for critical events and performance bottlenecks is crucial.

What is the role of CI/CD in serverless data processing pipeline deployments?

CI/CD (Continuous Integration/Continuous Deployment) automates the build, testing, and deployment of serverless functions and infrastructure. This ensures code quality, speeds up the release process, and enables faster iteration cycles. It helps maintain consistency and reduces the risk of errors in production.