Database migrations are a critical aspect of modern software development, especially when embracing the efficiency and automation of a Continuous Integration and Continuous Deployment (CI/CD) pipeline. This guide delves into the intricacies of managing database changes alongside your application code, ensuring smooth deployments, minimizing downtime, and fostering a robust development workflow. We’ll explore the core concepts, tools, and best practices necessary to seamlessly integrate database migrations into your CI/CD pipeline, transforming what can be a complex process into a streamlined and reliable operation.

From understanding the fundamental principles of database migrations to mastering advanced techniques for zero-downtime deployments, this document provides a comprehensive roadmap. We will cover selecting the right migration tools, version controlling your database changes, automating migrations within your CI/CD pipeline, and implementing robust testing and rollback strategies. Furthermore, we will delve into monitoring, logging, and advanced topics to equip you with the knowledge and skills to confidently manage database migrations in projects of all sizes.

Introduction to Database Migrations in CI/CD

Integrating database migrations into a Continuous Integration and Continuous Delivery (CI/CD) pipeline is crucial for maintaining a smooth and efficient software development lifecycle. This integration ensures that database schema changes are synchronized with application code deployments, minimizing the risk of errors and downtime. Properly managed migrations are essential for achieving automated, reliable, and repeatable deployments.

Database migrations are controlled, versioned changes to a database schema. They allow developers to evolve the database structure over time in a structured and predictable manner. These changes can include creating tables, adding columns, modifying data types, and more. Each migration is typically represented by a script or a set of scripts, and they are executed in a specific order to ensure consistency.
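To make the idea of ordered, versioned changes concrete, here is a minimal sketch of what a migration runner does. It uses Python's built-in `sqlite3` module; the `schema_version` table, the `MIGRATIONS` list, and the `apply_migrations` function are hypothetical names chosen for illustration, not the API of any particular tool.

```python
import sqlite3

# Hypothetical migrations: (version, SQL) pairs, applied in ascending version order.
MIGRATIONS = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL)"),
    (2, "ALTER TABLE users ADD COLUMN email TEXT"),
]


def apply_migrations(conn, migrations):
    """Apply pending migrations in order and return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, sql in sorted(migrations):
        if version in applied:
            continue  # already recorded in schema_version, so skip it
        conn.execute(sql)
        conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied


conn = sqlite3.connect(":memory:")
print(apply_migrations(conn, MIGRATIONS))  # first run applies every pending version
print(apply_migrations(conn, MIGRATIONS))  # second run finds nothing left to apply
```

Real migration tools follow the same basic loop but add locking, checksums, and rollback support on top of it.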

Benefits of Integrating Database Migrations into a CI/CD Pipeline

Integrating database migrations into a CI/CD pipeline offers several significant advantages, enhancing the overall development and deployment process. This integration promotes automation, reduces manual effort, and minimizes the potential for human error, resulting in more reliable and faster releases.

- Automated Schema Updates: Database migrations automate the process of applying schema changes to the database during deployments. This automation eliminates the need for manual intervention, reducing the risk of errors and speeding up the deployment process.

- Version Control for Database Schema: Migrations are typically versioned, allowing developers to track and manage changes to the database schema over time. This version control enables rollback capabilities and simplifies debugging and troubleshooting.

- Consistent Environments: Integrating migrations ensures that the database schema is consistent across all environments, including development, testing, staging, and production. This consistency reduces the likelihood of environment-specific issues and promotes more reliable testing.

- Reduced Downtime: Well-designed database migrations, especially those incorporating strategies like zero-downtime migrations, minimize or eliminate downtime during deployments. This is achieved by applying changes incrementally and carefully, avoiding locking the database for extended periods.

- Simplified Rollbacks: In case of deployment failures, migrations facilitate easy rollbacks to previous schema versions. This ability to revert to a known good state minimizes the impact of deployment issues and ensures business continuity.

- Improved Collaboration: By integrating migrations into the CI/CD pipeline, teams can collaborate more effectively on database changes. This promotes better communication and coordination between developers and database administrators.

For example, consider a scenario where a new feature requires a new column in a table. Without a CI/CD-integrated migration system, a developer might have to manually create the column in the production database, potentially leading to errors and downtime. With migrations, the developer creates a migration script, which is automatically executed as part of the deployment process. This ensures that the column is created correctly and consistently across all environments.

Choosing the Right Migration Tool

Selecting the appropriate database migration tool is crucial for a smooth and efficient CI/CD pipeline. The right tool simplifies the process of managing database schema changes, reducing the risk of errors and downtime. This section explores popular tools, compares their features, and provides a decision-making framework to guide your selection.

Popular Database Migration Tools

Several robust tools are available for managing database migrations, each with its strengths and weaknesses. Understanding these tools is the first step in making an informed decision.

- Flyway: A lightweight, open-source database migration tool that emphasizes simplicity. It supports a wide range of databases and uses SQL scripts for migrations.

- Liquibase: Another popular open-source tool that provides database-independent schema management. It supports both SQL and XML/YAML/JSON formats for defining changesets.

- Django Migrations: A built-in migration system for the Django web framework. It simplifies database schema management within Django projects.

- Entity Framework Core Migrations (for .NET): Provides a built-in migration system specifically for .NET applications using Entity Framework Core.

- Active Record Migrations (for Ruby on Rails): A migration system built into the Ruby on Rails framework, designed to manage database schema changes.

Feature Comparison of Migration Tools

Comparing the features of different migration tools helps determine which tool best aligns with your project’s requirements. The following table provides a comparative overview:

| Feature | Flyway | Liquibase | Django Migrations |
|---|---|---|---|
| Database Support | Extensive (PostgreSQL, MySQL, Oracle, etc.) | Extensive (PostgreSQL, MySQL, Oracle, etc.) | Primarily focused on Django’s supported databases (PostgreSQL, MySQL, SQLite, etc.) |
| Migration Definition | SQL scripts | SQL, XML, YAML, JSON | Python code |
| Change Tracking | Based on checksums and version numbers | Uses changeSets with unique IDs | Tracks migrations through a database table |
| Rollback Support | Via undo migrations (availability depends on the Flyway edition) | Supports rollback to previous states | Supports rollback through reverse migrations |
| Database-Independent Schema Management | Limited, relies on SQL | Yes, through changeSet definitions | Partially, through Django’s ORM |
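The "Change Tracking" row mentions checksums. The sketch below illustrates the general idea: record a checksum when a script is applied, then flag any applied script whose contents later change. It is an illustration only and does not reproduce the exact algorithm of Flyway or any other tool; `checksum` and `find_modified` are hypothetical helper names.

```python
import zlib


def checksum(script_text: str) -> int:
    """CRC32 of the script text with line endings normalized."""
    normalized = script_text.replace("\r\n", "\n").strip()
    return zlib.crc32(normalized.encode("utf-8"))


def find_modified(applied: dict, scripts: dict) -> list:
    """Return versions whose current script no longer matches the recorded checksum.

    `applied` maps version -> checksum recorded at apply time;
    `scripts` maps version -> current script text.
    """
    return sorted(
        version
        for version, recorded in applied.items()
        if version in scripts and checksum(scripts[version]) != recorded
    )
```

A pipeline could call `find_modified` before migrating and fail the build if the list is non-empty, since editing an already-applied script means environments may no longer agree on what that version contains.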

Decision-Making Process for Selecting a Migration Tool

Choosing the right migration tool involves evaluating several factors specific to your project. The following steps outline a decision-making process:

- Assess Project Requirements: Determine the specific needs of your project. This includes the supported databases, the complexity of schema changes, and the desired level of database independence.

- Evaluate Tool Features: Compare the features of different tools against your requirements. Consider factors such as database support, migration definition methods, rollback capabilities, and ease of use.

- Consider Team Expertise: Take into account the existing skills and experience of your development team. Some tools may have a steeper learning curve than others.

- Evaluate Community Support and Documentation: Consider the availability of community support, documentation, and examples. This can be crucial for troubleshooting and learning the tool.

- Test and Pilot: Before making a final decision, test the shortlisted tools in a pilot project or a non-production environment. This allows you to evaluate their performance and identify any potential issues.

- Consider Integration with CI/CD Pipeline: Ensure the chosen tool integrates seamlessly with your existing CI/CD pipeline. This involves automating the migration process as part of your build and deployment processes.

By following this decision-making process, you can select the database migration tool that best fits your project’s needs, ensuring a reliable and efficient CI/CD pipeline.

Version Control and Database Migrations

Version control is essential for managing database migrations within a CI/CD pipeline. It allows teams to track changes, collaborate effectively, and revert to previous states if necessary. Proper version control practices ensure the integrity and reliability of the database schema throughout the development lifecycle.

Version Control of Database Migration Scripts

Database migration scripts, like application code, must be version-controlled. This ensures a complete history of changes, facilitates collaboration, and enables rollback capabilities. Storing migration scripts in a version control system provides several key benefits.

- Change Tracking: Every modification to a migration script is tracked, including the author, date, and a descriptive commit message. This allows for easy auditing and understanding of schema evolution.

- Collaboration: Multiple developers can work on migration scripts concurrently, using branching and merging features to integrate their changes.

- Rollback Capabilities: In case of issues, version control allows for easy reversion to a previous, stable state of the database schema.

- Reproducibility: The exact state of the database schema at any point in time can be reproduced by applying the appropriate migration scripts from the version control system.

A common approach is to store migration scripts alongside the application code in the same repository. This practice maintains consistency and makes it easy to relate schema changes to application code changes. Each migration script should be uniquely identified, often with a timestamp or a sequential number, and should contain the necessary SQL commands to apply the schema changes.
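As a rough sketch of how uniquely identified scripts can be put in order, the snippet below parses a version number out of each filename and sorts numerically. The `V<version>__<description>.sql` naming scheme and the `ordered_migrations` helper are assumptions for illustration; use whatever convention your migration tool expects.

```python
import re

# Assumed naming scheme for this sketch: V<version>__<description>.sql
PATTERN = re.compile(r"^V(\d+)__(\w+)\.sql$")


def ordered_migrations(filenames):
    """Return (version, filename) pairs sorted by numeric version."""
    parsed = []
    for name in filenames:
        match = PATTERN.match(name)
        if match is None:
            raise ValueError(f"unexpected migration filename: {name}")
        parsed.append((int(match.group(1)), name))
    return sorted(parsed)
```

Sorting on the parsed integer rather than the raw filename matters: a plain string sort would place `V10__...` before `V2__...`.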

Branching and Merging Migration Changes

Branching and merging strategies are critical for managing database schema changes in a collaborative environment. Developers typically create branches for feature development or bug fixes. When changes are ready, they are merged into the main branch (e.g., `main` or `develop`).

- Feature Branches: Developers create feature branches to isolate changes related to a specific feature. They create migration scripts in their feature branch that correspond to the database changes needed for the feature.

- Merging: When a feature is complete, the feature branch is merged into the integration branch (e.g., `develop`). During the merge process, potential conflicts in the migration scripts must be resolved.

- Integration Branch: The integration branch acts as a staging area where all changes are combined and tested before being deployed to production.

- Release Branches: Before releasing to production, a release branch is created from the integration branch. This branch is used for final testing and preparing the release.

- Main/Production Branch: Once the release is verified, the release branch is merged into the main branch, and the changes are deployed to production.

This process mirrors the branching and merging strategy used for application code. The key is to ensure that the migration scripts are merged in a consistent and controlled manner to avoid conflicts and data integrity issues.

For example, consider two developers working on separate features. Developer A adds a new column to a table, and Developer B adds a new index to the same table. Both developers create migration scripts in their respective feature branches. When the branches are merged, the version control system may report no textual conflict, because each script lives in its own file, yet the scripts can still clash if they claim the same version number or depend on being applied in a particular order. The conflict must be resolved by renumbering or reordering the scripts, or by manually merging them, so that the new column and the new index are both applied correctly.

Handling Migration Conflicts in a CI/CD Environment

Migration conflicts are inevitable in a collaborative CI/CD environment. A well-defined procedure for handling these conflicts is crucial for maintaining database integrity and preventing deployment failures. The procedure typically involves the following steps.

- Conflict Detection: The CI/CD pipeline should automatically detect conflicts in migration scripts during the merge process. This can be achieved by using the version control system’s conflict detection features or by implementing custom scripts that analyze the scripts before deployment.

- Conflict Resolution: When a conflict is detected, the developers responsible for the conflicting changes must resolve it. This usually involves manually merging the conflicting migration scripts, ensuring that all changes are applied correctly and that the database schema remains consistent.

- Testing: After resolving the conflict, thorough testing is essential. This includes unit tests for individual migration scripts and integration tests to ensure that the changes work together correctly.

- Rollback Strategy: A rollback strategy must be in place to handle any unforeseen issues during deployment. This might involve reverting to a previous, stable state of the database schema.

- Communication: Clear communication between developers is critical throughout the conflict resolution process. Developers should communicate about the nature of the conflicts, the resolution steps, and the testing results.

In a CI/CD pipeline, conflicts can be handled through automated processes. For example, when a pull request is created, the CI system can run a script that compares the migration scripts in the pull request branch with the migration scripts in the target branch (e.g., `main`). If conflicts are detected, the CI system can automatically flag the pull request and notify the developers involved.

The developers can then resolve the conflicts and re-run the tests before merging the changes. This automation reduces the risk of human error and ensures that conflicts are resolved efficiently.
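A comparison script of the kind described above might look like the following sketch. It assumes the CI job has already collected `{version: filename}` mappings for the target branch and the pull request branch; `find_conflicts` is a hypothetical helper, not part of any CI system.

```python
def find_conflicts(target_versions, branch_versions):
    """Compare {version: filename} mappings and return human-readable problems."""
    problems = []
    # Migrations the branch adds or changes relative to the target branch.
    new_versions = {
        version: name
        for version, name in branch_versions.items()
        if target_versions.get(version) != name
    }
    latest_on_target = max(target_versions, default=0)
    for version, name in sorted(new_versions.items()):
        if version in target_versions:
            problems.append(
                f"version {version} used by both {target_versions[version]} and {name}"
            )
        elif version < latest_on_target:
            problems.append(
                f"{name} is older than the latest target version {latest_on_target}"
            )
    return problems
```

If the returned list is non-empty, the CI job can exit with a non-zero status and post the messages on the pull request.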

Implementing Migration Scripts

Writing effective migration scripts is crucial for the success of database changes within a CI/CD pipeline. These scripts act as the blueprints for modifying your database schema and data, ensuring that each deployment results in a consistent and predictable state. Proper script design minimizes downtime, prevents data loss, and simplifies rollback procedures. The choice of database system will influence the specific syntax and best practices employed.

Writing Effective Migration Scripts for Different Database Systems

Different database systems have their own unique SQL dialects and features. Understanding these differences is essential when writing migration scripts.

- PostgreSQL: PostgreSQL is known for its adherence to SQL standards and its extensive feature set. Migration scripts often involve `CREATE TABLE`, `ALTER TABLE`, `CREATE INDEX`, and `CREATE FUNCTION` statements. PostgreSQL also supports advanced features like triggers, stored procedures, and user-defined types, which can be incorporated into migrations. Ensure the use of transaction blocks (`BEGIN`, `COMMIT`, `ROLLBACK`) to maintain data consistency.

- MySQL: MySQL is a widely used open-source database system. Migration scripts in MySQL commonly use `CREATE TABLE`, `ALTER TABLE`, `CREATE INDEX`, and `INSERT` statements. MySQL also supports features like stored procedures and triggers, though their implementation might differ from PostgreSQL. Consider using the `ENGINE` option to specify the storage engine (e.g., InnoDB) and carefully manage data types.

- SQL Server: Microsoft SQL Server is a robust and feature-rich database system. Migration scripts in SQL Server utilize `CREATE TABLE`, `ALTER TABLE`, `CREATE INDEX`, and `CREATE PROCEDURE` statements. SQL Server also supports advanced features like Common Table Expressions (CTEs) and window functions. Be mindful of SQL Server’s specific syntax and data type conventions. Note that `GO` is a batch separator recognized by client tools such as sqlcmd and SSMS rather than a T-SQL statement; use it to split a script into batches where required, for example because `CREATE PROCEDURE` must be the first statement in its batch.

- SQLite: SQLite is a lightweight, file-based database system. Migration scripts in SQLite are often simpler due to its limited feature set compared to the other databases. Use `CREATE TABLE`, `ALTER TABLE`, and `INSERT` statements. SQLite has fewer data type options, so carefully choose appropriate data types. It’s important to note that SQLite has limitations on `ALTER TABLE` operations; for example, adding a `NOT NULL` constraint to a column can be more complex.

Common Migration Operations

Migration scripts perform various operations to modify the database schema and data. Here are examples of common migration operations.

- Creating Tables: This is the fundamental operation for setting up the database schema.

Example (PostgreSQL):

    CREATE TABLE users (
        id SERIAL PRIMARY KEY,
        username VARCHAR(255) NOT NULL,
        email VARCHAR(255) UNIQUE,
        created_at TIMESTAMP WITH TIME ZONE DEFAULT CURRENT_TIMESTAMP
    );

- Altering Columns: Modifying existing columns is a common task.

Example (MySQL):

    ALTER TABLE products ADD COLUMN description TEXT;

- Adding Indexes: Indexes improve query performance.

Example (SQL Server):

    CREATE INDEX idx_email ON users (email);

- Inserting Data: Populating tables with initial data or seed data.

Example (SQLite):

    INSERT INTO roles (name) VALUES ('admin');

- Renaming Tables/Columns: Refactoring the schema.

Example (PostgreSQL):

    ALTER TABLE old_table RENAME TO new_table;

- Dropping Tables/Columns: Removing obsolete elements.

Example (MySQL):

    ALTER TABLE products DROP COLUMN obsolete_column;

Handling Data Seeding and Initial Setup During Migrations

Data seeding and initial setup are essential steps in a database migration. They ensure that the database is populated with the necessary data and configurations after the schema changes are applied.

- Seed Data: Seed data provides initial values for lookup tables, default configurations, or sample data for testing. Consider the following aspects:

- Insert Statements: Use `INSERT` statements to populate tables with seed data.

- Data Format: Ensure that the data format matches the database’s data types.

- Idempotency: Design seed scripts to be idempotent. This means that running the script multiple times should have the same effect as running it once. One common technique is to check if the data already exists before inserting it (e.g., `SELECT COUNT(*) FROM … WHERE …`).

- Initial Setup: This includes creating initial users, setting up default configurations, or running any necessary setup scripts.

- Stored Procedures/Functions: Utilize stored procedures or functions to encapsulate complex setup logic.

- Configuration Settings: Store configuration settings in the database.

- User Creation: Create the initial set of users and grant them appropriate permissions.

- Best Practices for Data Seeding and Initial Setup:

- Separate Scripts: Keep seed data and setup scripts separate from schema migration scripts. This promotes organization and maintainability.

- Order of Execution: Determine the correct order of execution. Seed data should typically be applied after the tables have been created.

- Environment Variables: Use environment variables to configure the setup based on the environment (e.g., development, staging, production).

- Testing: Thoroughly test seed data and setup scripts to ensure that they function as expected.
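The idempotency point above can be sketched in a few lines. This example uses Python's `sqlite3` module and the check-before-insert technique mentioned in the list; the `roles` table matches the earlier SQLite example, while `SEED_ROLES` and `seed_roles` are hypothetical names.

```python
import sqlite3

SEED_ROLES = ["admin", "editor", "viewer"]  # hypothetical seed values


def seed_roles(conn, roles=SEED_ROLES):
    """Insert each role only if it is missing, so reruns create no duplicates."""
    for name in roles:
        exists = conn.execute(
            "SELECT COUNT(*) FROM roles WHERE name = ?", (name,)
        ).fetchone()[0]
        if not exists:
            conn.execute("INSERT INTO roles (name) VALUES (?)", (name,))
    conn.commit()


# The table is created by a schema migration, which runs before seeding.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE roles (id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL)")
seed_roles(conn)
seed_roles(conn)  # running it again leaves the table unchanged
```

Many databases also offer a single-statement equivalent (an upsert or an "insert if not exists" form), which avoids the separate lookup; the check-then-insert version is shown here because it is the technique the text describes.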

Automating Migrations in the CI/CD Pipeline

Integrating database migrations into a CI/CD pipeline is crucial for ensuring smooth, reliable, and automated deployments. This automation minimizes manual intervention, reduces the risk of human error, and accelerates the release cycle. By incorporating migrations into the pipeline, you guarantee that the database schema is synchronized with the application code at every deployment.

Integrating Migration Tools into a CI/CD Pipeline

The integration of migration tools into a CI/CD pipeline requires careful planning and execution. The goal is to automate the application of database schema changes as part of the build and deployment process. This generally involves configuring the CI/CD tool to execute the migration commands at specific stages, such as after the application code is built and before the application is deployed.

To achieve this, consider the following points:

- Choose the right tool: Select a migration tool that integrates well with your CI/CD system. Tools like Flyway, Liquibase, and Alembic offer robust command-line interfaces, which is essential for automation. Ensure the chosen tool supports your database platform and aligns with your team’s expertise.

- Define deployment stages: Determine the appropriate stages in your pipeline where the migrations should be executed. This might be after the code is built and tests have passed, but before the application servers are restarted or the application is deployed to production.

- Configure the environment: Ensure the CI/CD environment has the necessary access to the database. This includes providing database connection strings, credentials, and any other configuration parameters required by the migration tool. These parameters should be securely stored and managed, potentially using environment variables or secrets management tools.

- Implement error handling: Implement robust error handling around the migration step in the pipeline. This involves checking the exit code of the migration tool and taking appropriate action if a migration fails, such as stopping the deployment, rolling back, or sending notifications.

- Test thoroughly: Test the migration process thoroughly in a staging environment before deploying to production. This includes testing the migration scripts with various data scenarios to ensure they work as expected.
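The "Configure the environment" point can be illustrated with a small sketch that reads connection settings from environment variables and fails fast when one is missing. The variable names `DATABASE_URL`, `DATABASE_USER`, and `DATABASE_PASSWORD` are assumptions; `DatabaseSettings` and `load_settings` are hypothetical names for this example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseSettings:
    url: str
    user: str
    password: str


def load_settings(env=None):
    """Read connection settings from the environment; fail fast if any is missing."""
    env = os.environ if env is None else env
    required = ("DATABASE_URL", "DATABASE_USER", "DATABASE_PASSWORD")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing required settings: " + ", ".join(missing))
    return DatabaseSettings(
        env["DATABASE_URL"], env["DATABASE_USER"], env["DATABASE_PASSWORD"]
    )
```

Failing before the migration tool is invoked gives a clearer error than a connection failure halfway through a deployment, and it keeps credentials out of the repository.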

Scripts for Automating Database Migrations

Automating database migrations often involves creating scripts that can be executed by the CI/CD pipeline. These scripts typically include commands to run the migration tool, manage database connections, and handle potential errors. The exact implementation will depend on the chosen migration tool and CI/CD platform.

Here’s an example using Flyway and a hypothetical CI/CD pipeline:

    #!/bin/bash

    # Fail early if the connection settings were not provided by the CI/CD platform
    : "${DATABASE_URL:?DATABASE_URL is not set}"
    : "${DATABASE_USER:?DATABASE_USER is not set}"
    : "${DATABASE_PASSWORD:?DATABASE_PASSWORD is not set}"

    # Migrate the database and check the result
    if flyway migrate \
        -url="$DATABASE_URL" \
        -user="$DATABASE_USER" \
        -password="$DATABASE_PASSWORD"; then
        echo "Database migration successful"
    else
        echo "Database migration failed"
        exit 1
    fi

    echo "Deployment completed"

In this script:

- The script first checks that the environment variables for the database connection are set. These variables should be securely configured within the CI/CD platform.

- The `flyway migrate` command runs the database migrations.

- The script checks the exit code of the `flyway migrate` command to determine if the migration was successful.

- If the migration fails, the script exits with a non-zero exit code, which will cause the CI/CD pipeline to fail, preventing the deployment from proceeding.

For a more complex scenario, consider adding these features:

- Rollback capability: Implement a rollback strategy in case a migration fails.

- Dry run: Include a “dry run” option to test the migration without actually applying it to the database.

- Logging: Add detailed logging to capture the output of the migration tool for troubleshooting.
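The logging item above could be approached with a thin wrapper around the migration command. The sketch below captures the tool's output, writes it to a log, and reports success based on the exit code. `run_migration` is a hypothetical helper, and the command is passed in as a list so that no particular tool or flag is assumed.

```python
import logging
import subprocess
import sys

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("migrate")


def run_migration(command):
    """Run the migration command, log its output, and return True on exit code 0."""
    result = subprocess.run(command, capture_output=True, text=True)
    for line in result.stdout.splitlines():
        log.info(line)
    for line in result.stderr.splitlines():
        log.error(line)
    if result.returncode != 0:
        log.error("migration failed with exit code %d", result.returncode)
        return False
    log.info("migration succeeded")
    return True
```

A pipeline step would call it with the real command line, for example `run_migration(["flyway", "migrate"])`, and exit with a non-zero status when it returns `False`.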

Strategies for Handling Schema Changes During Deployments with Zero Downtime

Implementing zero-downtime deployments with schema changes is a complex challenge that requires careful planning and execution. Several strategies can be employed to minimize or eliminate downtime during deployments. These strategies often involve making schema changes in a backward-compatible manner, so that the previous application version keeps working against the new schema while the new version is rolled out.

Here are some key approaches:

- Backward compatibility: Ensure that new schema changes are backward compatible with the previous application version. This means that the old application version can keep running against the new schema while the new version is being rolled out. For example, when adding a new column, provide a default value or allow nulls to accommodate the older application version.

- Feature flags: Use feature flags to control the visibility of new features. This allows you to deploy the code with the new schema changes, but only enable the new features after the application code has been fully deployed.

- Blue/Green deployments: Deploy the new application version with the updated schema to a separate environment (the “green” environment) while the existing application version (the “blue” environment) continues to serve traffic. Once the new version is validated, switch traffic to the “green” environment. This minimizes downtime and allows for a rollback if needed.

- Canary deployments: Deploy the new application version to a small subset of users (the “canary” group) and monitor its performance. If the new version performs well, gradually roll it out to more users. This approach allows you to detect and address any issues before the full deployment.

- Database migrations as part of the application deployment: Integrate the database migration steps into the application deployment process. The migration tool can be executed before or after the application code deployment, depending on the strategy.

- Staged migrations: For large or complex schema changes, consider breaking the migration into smaller, staged steps. This reduces the risk of a single, large migration failing and makes it easier to roll back if necessary. Each stage should be backward compatible.

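- Transaction management: Where the database supports transactional DDL (PostgreSQL does, for example), execute schema changes within a transaction so that a failure leaves no partial update and the database remains in a consistent state. MySQL implicitly commits most DDL statements, so there each migration should be small enough to reverse on its own.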

Consider a real-world example: Adding a new column, `customer_email_verified`, to a `customers` table.

- Phase 1 (Deployment 1): Add the `customer_email_verified` column to the `customers` table, allowing null values. Update the application code to handle the new column, but the feature using it is hidden behind a feature flag. The application code can read both the old and new schema.

- Phase 2 (Deployment 2): Deploy the application code that uses the new column and enable the feature flag. The application now fully utilizes the new column.

- Phase 3 (Deployment 3 – Optional): Once you’re certain that the old application version is no longer in use, you could remove the feature flag and, after backfilling existing rows that still hold nulls, add a `NOT NULL` constraint to the `customer_email_verified` column.
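The database side of these phases can be sketched with `sqlite3`. The column and table names come from the example above; the function names, the integer column type, and the backfill default of `0` are assumptions made for illustration.

```python
import sqlite3


def phase_1_expand(conn):
    """Add the column as nullable so writers unaware of it keep working."""
    conn.execute("ALTER TABLE customers ADD COLUMN customer_email_verified INTEGER")
    conn.commit()


def phase_3_backfill(conn, default=0):
    """Fill remaining NULLs before any NOT NULL constraint is introduced."""
    cur = conn.execute(
        "UPDATE customers SET customer_email_verified = ? "
        "WHERE customer_email_verified IS NULL",
        (default,),
    )
    conn.commit()
    return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute("INSERT INTO customers (email) VALUES ('a@example.com')")
phase_1_expand(conn)
# An insert written by the old application version, unaware of the new column, still succeeds.
conn.execute("INSERT INTO customers (email) VALUES ('b@example.com')")
print(phase_3_backfill(conn))  # number of rows that were backfilled
```

Because the column starts out nullable, the old and new application versions can write to the table side by side; the constraint is tightened only after every row has a value.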

Testing Database Migrations


Testing database migrations is a critical step in ensuring the stability and reliability of your application. Thorough testing helps prevent data loss, application downtime, and unexpected behavior caused by flawed migration scripts. Implementing a robust testing strategy involves various techniques, from unit tests that validate individual migration steps to integration tests that verify the overall migration process in a realistic environment.

Testing Strategy

A comprehensive testing strategy should cover several key aspects of the migration process. It ensures that migrations function as expected across different environments and that data integrity is maintained.

- Unit Tests: Focus on individual migration operations, such as adding columns, creating indexes, or modifying data types. These tests isolate specific functionalities and verify their correctness.

- Integration Tests: Validate the complete migration process by running migrations against a test database that simulates the production environment. This includes testing the order of migrations and their interaction with existing data.

- Environment-Specific Testing: Run tests in environments that mirror the production environment, such as staging or pre-production. This helps identify environment-specific issues that might not be apparent in local development.

- Data Validation Tests: Verify the integrity of data after the migration. This can involve checking data types, constraints, and the accuracy of data transformations.

- Rollback Testing: Test the rollback functionality to ensure that migrations can be reverted safely without data loss or application disruption.

- Performance Testing: Measure the performance impact of migrations, especially for large datasets. This can help identify bottlenecks and optimize migration scripts.
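As one possible shape for the data validation tests in this list, the sketch below checks that expected columns exist and that the row count did not change across a migration. It uses `sqlite3` and its `PRAGMA table_info`; `validate_migration` is a hypothetical helper, and the table name is assumed to come from trusted test code because it is interpolated into the SQL.

```python
import sqlite3


def validate_migration(conn, table, required_columns, expected_rows):
    """Return a list of problems found after a migration; empty means all checks passed."""
    problems = []
    # Column 1 of each PRAGMA table_info row is the column name.
    columns = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for column in required_columns:
        if column not in columns:
            problems.append(f"missing column: {column}")
    actual_rows = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    if actual_rows != expected_rows:
        problems.append(
            f"row count changed: expected {expected_rows}, found {actual_rows}"
        )
    return problems
```

A test would record the row count before migrating, run the migration, then assert that `validate_migration(...)` returns an empty list.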

Creating Unit Tests for Migration Scripts

Unit tests are essential for verifying the correctness of individual migration steps. They should be designed to isolate and test specific functionalities, such as adding a column, creating an index, or modifying a data type.

Here’s how to create effective unit tests:

- Test Framework: Choose a suitable testing framework for your programming language and database. Popular choices include JUnit (Java), pytest (Python), and PHPUnit (PHP).

- Test Database: Use a dedicated test database for unit tests to avoid affecting the main application data. Consider using an in-memory database for faster test execution.

- Test Cases: Create test cases for each migration step, covering various scenarios and edge cases.

- Assertions: Use assertions to verify the expected outcome of each migration step. For example, assert that a column has been added or that an index exists.

Example (Python with pytest):

Assume a migration script adds a ‘status’ column to a ‘users’ table.

    # migration_script.py
    # (Alembic revision identifiers omitted for brevity)
    from alembic import op
    import sqlalchemy as sa


    def upgrade():
        # server_default lets the database fill existing rows, which NOT NULL requires
        op.add_column(
            'users',
            sa.Column('status', sa.String(50), nullable=False, server_default='active'),
        )


    def downgrade():
        op.drop_column('users', 'status')

Unit test:

    # test_migration_script.py
    import pytest
    from alembic import command
    from alembic.config import Config
    from sqlalchemy import create_engine, inspect


    @pytest.fixture()
    def db_url(tmp_path):
        # File-backed SQLite so Alembic and the test share one database;
        # a plain in-memory URL would give each connection its own empty database.
        return f"sqlite:///{tmp_path / 'test.db'}"


    @pytest.fixture()
    def engine(db_url):
        engine = create_engine(db_url)
        yield engine
        engine.dispose()


    @pytest.fixture()
    def alembic_config(db_url):
        config = Config("alembic.ini")  # Assuming alembic.ini is configured
        config.set_main_option("script_location", "migrations")  # Path to migrations
        # Assumes env.py reads sqlalchemy.url from this config (the generated default)
        config.set_main_option("sqlalchemy.url", db_url)
        return config


    def test_add_status_column(engine, alembic_config):
        # Apply the migrations (an earlier migration is assumed to create 'users')
        command.upgrade(alembic_config, "head")

        # Verify that the 'status' column exists with the expected properties
        columns = {c["name"]: c for c in inspect(engine).get_columns("users")}
        assert "status" in columns
        assert columns["status"]["nullable"] is False
        assert "active" in columns["status"]["default"]

        # Roll back only the latest migration; a fresh inspector avoids cached metadata
        command.downgrade(alembic_config, "-1")
        column_names = {c["name"] for c in inspect(engine).get_columns("users")}
        assert "status" not in column_names

Creating Integration Tests for Migration Scripts

Integration tests ensure that the entire migration process functions correctly. They simulate the production environment as closely as possible.

Key aspects of integration tests:

- Test Environment: Use a test database that mirrors the production environment, including database type, version, and configuration.

- Migration Order: Verify that migrations are applied in the correct order and that dependencies between migrations are handled correctly.

- Data Interaction: Test how migrations interact with existing data and ensure that data transformations are performed accurately.

- Rollback Functionality: Test the rollback process to verify that the database can be reverted to a previous state without data loss.

Example (Python with pytest and Alembic):

Assume we have two migrations: the first creates the ‘users’ table, and the second adds a ‘status’ column.

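```python
# Create the users table
# migrations/versions/20240101_create_users_table.py
# (Alembic's generated revision/down_revision identifiers are omitted here.)
from alembic import op
import sqlalchemy as sa


def upgrade():
    op.create_table(
        'users',
        sa.Column('id', sa.Integer, primary_key=True),
        sa.Column('name', sa.String(255), nullable=False),
    )


def downgrade():
    op.drop_table('users')
```

```python
# Add status column
# migrations/versions/20240102_add_status_column.py
# (Alembic's generated revision/down_revision identifiers are omitted here.)
from alembic import op
import sqlalchemy as sa


def upgrade():
    # server_default (rather than default) so that existing rows receive
    # a value when the NOT NULL column is added.
    op.add_column(
        'users',
        sa.Column('status', sa.String(50), nullable=False, server_default='active'),
    )


def downgrade():
    op.drop_column('users', 'status')
```

Integration Test: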

```python
# test_integration.py
import pytest
from alembic import command
from alembic.config import Config
from sqlalchemy import create_engine, inspect, text


@pytest.fixture(scope="module")
def db_url(tmp_path_factory):
    # File-based SQLite so that Alembic and the test see the same database
    return f"sqlite:///{tmp_path_factory.mktemp('db') / 'test.db'}"


@pytest.fixture(scope="module")
def engine(db_url):
    engine = create_engine(db_url)
    yield engine
    engine.dispose()


@pytest.fixture(scope="module")
def alembic_config(db_url):
    config = Config("alembic.ini")
    config.set_main_option("script_location", "migrations")
    # Assumes env.py builds its engine from the sqlalchemy.url option
    config.set_main_option("sqlalchemy.url", db_url)
    return config


def test_full_migration_and_rollback(engine, alembic_config):
    # Apply all migrations
    command.upgrade(alembic_config, "head")
    inspector = inspect(engine)
    assert "users" in inspector.get_table_names()

    # Verify that the 'status' column exists
    columns = inspector.get_columns("users")
    assert any(column["name"] == "status" for column in columns)

    # Add a row to the table and verify
    with engine.begin() as connection:  # commits on exit
        connection.execute(text("INSERT INTO users (name) VALUES ('testuser')"))
        rows = connection.execute(text("SELECT * FROM users")).fetchall()
    assert len(rows) == 1

    # Rollback all migrations
    command.downgrade(alembic_config, "base")

    # Verify that the users table (and with it the data) is gone. A fresh
    # inspector is needed because the old one caches reflection results.
    assert "users" not in inspect(engine).get_table_names()
```

Test Cases for Different Migration Scenarios

Creating diverse test cases that cover various migration scenarios is crucial for ensuring the robustness of the migration process.

These test cases should address different aspects of the database schema and data.

Examples of test cases:

- Adding a new column: Verify that the new column is created with the correct data type, constraints, and default values. Test cases should include adding columns with different data types (e.g., VARCHAR, INTEGER, BOOLEAN, DATE) and default values.

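- Removing a column: Ensure that the column is dropped and that data in the remaining columns is not lost or corrupted. The dropped column's own data is gone after the migration, so confirm beforehand that nothing still depends on it.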

- Changing a column’s data type: Validate that data is converted correctly when changing a column’s data type (e.g., from INTEGER to VARCHAR). This includes testing data type conversions and potential data loss if the new data type cannot accommodate existing data.

- Creating an index: Verify that the index is created and that it improves query performance. Performance testing can be included here to measure the impact of the index on query execution time.

- Renaming a table or column: Ensure that references to the renamed object are updated correctly and that the application continues to function as expected.

- Adding or removing a foreign key constraint: Test that the constraint is enforced correctly and that data integrity is maintained.

- Data migration: Test data transformations, such as updating data values, splitting or merging columns, or migrating data to a new table. These tests should verify that data is transformed accurately and that data consistency is maintained. For example, testing a migration that changes a user’s role from a string to an enum type would involve verifying that the existing string values are correctly mapped to the enum values.

- Rollback scenarios: Test rollback functionality after each of the above scenarios to ensure that the database can be reverted to its previous state without data loss or application downtime.

Example (adding a NOT NULL constraint):

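```python
# Migration script
from alembic import op
import sqlalchemy as sa


def upgrade():
    # Batch mode lets this run on SQLite too, which cannot alter a column in place
    with op.batch_alter_table('users') as batch_op:
        batch_op.alter_column('email', existing_type=sa.String(255), nullable=False)


def downgrade():
    with op.batch_alter_table('users') as batch_op:
        batch_op.alter_column('email', existing_type=sa.String(255), nullable=True)
```

Test case: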

```python
# Test case
import pytest
from alembic import command
from alembic.config import Config
from sqlalchemy import create_engine, text
from sqlalchemy.exc import IntegrityError


@pytest.fixture(scope="module")
def db_url(tmp_path_factory):
    # File-based SQLite so that Alembic and the test see the same database
    return f"sqlite:///{tmp_path_factory.mktemp('db') / 'test.db'}"


@pytest.fixture(scope="module")
def engine(db_url):
    engine = create_engine(db_url)
    yield engine
    engine.dispose()


@pytest.fixture(scope="module")
def alembic_config(db_url):
    config = Config("alembic.ini")
    config.set_main_option("script_location", "migrations")
    # Assumes env.py builds its engine from the sqlalchemy.url option
    config.set_main_option("sqlalchemy.url", db_url)
    return config


def test_add_not_null_constraint(engine, alembic_config):
    # Create a base table (assumes no migration creates 'users' itself)
    with engine.begin() as connection:
        connection.execute(text("CREATE TABLE users (id INTEGER PRIMARY KEY, email VARCHAR(255))"))
        connection.execute(text("INSERT INTO users (email) VALUES ('user@example.com')"))

    # Apply the migration
    command.upgrade(alembic_config, "head")

    # Verify that the constraint is enforced
    with pytest.raises(IntegrityError):
        with engine.begin() as connection:
            connection.execute(text("INSERT INTO users (email) VALUES (NULL)"))

    # Rollback the migration
    command.downgrade(alembic_config, "base")

    # Verify that the constraint is removed: this insert should now succeed
    with engine.begin() as connection:
        connection.execute(text("INSERT INTO users (email) VALUES (NULL)"))
```
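The data-migration scenario from the list above (mapping free-form role strings onto a fixed set of values) can also be tested without a migration tool. The following is a minimal sketch using Python's built-in `sqlite3` module; the `users.role` column, the `ROLE_MAP` table, and the `migrate_roles` helper are hypothetical names chosen for illustration.

```python
import sqlite3

# Hypothetical mapping from legacy free-form role strings to the new fixed set.
ROLE_MAP = {"Admin": "admin", "administrator": "admin", "Member": "member"}


def migrate_roles(conn):
    """Rewrite legacy role strings in place; return the values left unmapped."""
    for old, new in ROLE_MAP.items():
        conn.execute("UPDATE users SET role = ? WHERE role = ?", (new, old))
    allowed = sorted(set(ROLE_MAP.values()))
    placeholders = ", ".join("?" for _ in allowed)
    rows = conn.execute(
        f"SELECT DISTINCT role FROM users WHERE role NOT IN ({placeholders})",
        allowed,
    ).fetchall()
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, role TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO users (role) VALUES (?)",
    [("Admin",), ("administrator",), ("Member",), ("guest",)],
)

unmapped = migrate_roles(conn)
roles = [row[0] for row in conn.execute("SELECT role FROM users ORDER BY id")]
```

Returning the unmapped values gives the test something concrete to assert on: a data migration that silently leaves unknown values behind is usually the case worth catching.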

Rollback Strategies

Database migrations, while essential for evolving your application’s data model, can sometimes introduce errors.

These errors can lead to application downtime, data corruption, or other critical issues. Implementing robust rollback strategies is crucial for mitigating the risks associated with failed migrations and ensuring the stability and reliability of your application. A well-defined rollback plan allows you to revert to a known good state quickly, minimizing the impact of migration failures on your users.

Importance of Rollback Strategies

Rollback strategies are vital for several reasons. They act as a safety net, protecting your data and application from the adverse effects of faulty migrations.

- Data Integrity: Failed migrations can lead to data loss, corruption, or inconsistencies. Rollbacks allow you to restore the database to its pre-migration state, preserving data integrity.

- Application Availability: Migration errors can cause application downtime. Rollback strategies enable you to quickly revert to a working version, minimizing disruption to your users.

- Reduced Risk: Implementing rollback plans reduces the overall risk associated with database changes. This provides confidence when deploying new database schema versions.

- Faster Recovery: When a migration fails, a well-defined rollback strategy streamlines the recovery process, allowing for a swift return to a stable state.

Different Rollback Methods

Several rollback methods can be employed, each with its own advantages and disadvantages. The choice of method depends on factors such as the complexity of the migration, the database system, and the specific requirements of your application.

- Manual Rollback: This involves manually executing a set of SQL scripts or commands to revert the changes introduced by the failed migration. It provides flexibility but requires careful planning, documentation, and the ability to understand the impact of each migration step. This method is often time-consuming and prone to human error, especially for complex migrations.

- Automated Rollback: This approach automates the rollback process, often using scripts or tools to revert the database to a previous state. This can significantly reduce the time and effort required for rollback.

- Transaction-Based Rollback: If the entire migration is wrapped within a database transaction, the database system can automatically roll back all changes if any part of the migration fails. This is a simple and effective approach for atomic changes, but it depends on the database supporting transactional DDL: PostgreSQL does, whereas MySQL implicitly commits most DDL statements. It may also be unsuitable for long-running migrations or migrations that involve external dependencies.

- Rollback Scripts: These are pre-written SQL scripts designed to undo the changes made by a specific migration. When a migration fails, the rollback script is executed to revert the database to its previous state.

- Migration Tool Features: Some migration tools provide built-in rollback features that automatically handle the rollback process. These features often track the changes made during the migration and can revert them in a controlled manner.

- Point-in-Time Recovery (PITR): PITR allows you to restore the database to a specific point in time. This is useful for rolling back to a state before the failed migration was applied. PITR requires regular database backups and transaction logs.

- Blue/Green Deployments: In a blue/green deployment strategy, you maintain two identical database environments (blue and green). The green environment receives the new migration. If the migration fails in the green environment, you can switch back to the blue environment. This strategy offers zero-downtime rollbacks, but it requires additional infrastructure and resources.
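As an illustration of the transaction-based method, here is a minimal sketch using Python's built-in `sqlite3` module (SQLite supports transactional DDL). The helper name `run_migration_atomically` and the table names are assumptions made for this example, not part of any migration tool.

```python
import sqlite3


def run_migration_atomically(conn, statements):
    """Run all statements in one transaction; undo everything if any fails."""
    conn.execute("BEGIN")
    try:
        for statement in statements:
            conn.execute(statement)
    except sqlite3.Error:
        conn.execute("ROLLBACK")
        return False
    conn.execute("COMMIT")
    return True


# isolation_level=None: the sqlite3 module issues no implicit BEGIN/COMMIT,
# so the explicit transaction above is the only one in play.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

ok = run_migration_atomically(conn, [
    "ALTER TABLE users ADD COLUMN status TEXT NOT NULL DEFAULT 'active'",
    "ALTER TABLE missing_table ADD COLUMN x TEXT",  # fails: no such table
])
columns = [row[1] for row in conn.execute("PRAGMA table_info(users)")]
```

Because the second statement fails, the first one is undone as well, and `users` keeps its original two columns.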

Procedure for Implementing a Rollback Strategy in a CI/CD Pipeline

Implementing a robust rollback strategy within your CI/CD pipeline involves several key steps. This ensures that rollbacks are executed efficiently and effectively when migration failures occur.

- Design Rollback Scripts: For each migration, create a corresponding rollback script. These scripts should reverse the changes made by the migration. Thoroughly test the rollback scripts to ensure they function correctly.

- Automated Rollback Execution: Integrate the rollback process into your CI/CD pipeline. When a migration fails, the pipeline should automatically trigger the execution of the appropriate rollback script or use a migration tool’s built-in rollback functionality.

- Monitoring and Alerting: Implement monitoring to detect migration failures. Set up alerts to notify the relevant teams when a migration fails and a rollback is initiated.

- Testing Rollback Procedures: Regularly test your rollback procedures to ensure they work as expected. Simulate migration failures and verify that the rollback process successfully restores the database to a stable state.

- Version Control Integration: Store your migration scripts and rollback scripts in your version control system (e.g., Git). This allows you to track changes, manage versions, and easily deploy them with your application code.

- Logging and Auditing: Log all migration and rollback events, including the start and end times, the scripts executed, and any errors encountered. This information is crucial for troubleshooting and auditing purposes.

- Consider Dependencies: If your migration involves changes to application code, you might need to rollback both the database and the application code to maintain consistency. Coordinate these rollbacks carefully to avoid compatibility issues.
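The procedure above can be sketched as a small runner that pairs each migration with its rollback script, logs every event, and undoes the current run when a step fails. This is a simplified illustration built on `sqlite3`, not the behavior of any particular tool; the `Migration` class, the `migrate` function, and the version numbers are hypothetical.

```python
import logging
import sqlite3
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("migrations")


@dataclass(frozen=True)
class Migration:
    version: str
    up: str    # SQL that applies the change
    down: str  # SQL that reverses it


def migrate(conn, migrations):
    """Apply migrations in order; on failure, undo those applied in this run."""
    applied = []
    for migration in migrations:
        try:
            conn.execute(migration.up)
            applied.append(migration)
            log.info("applied %s", migration.version)
        except sqlite3.Error as exc:
            log.error("migration %s failed: %s", migration.version, exc)
            for done in reversed(applied):
                conn.execute(done.down)
                log.info("rolled back %s", done.version)
            return False
    return True


conn = sqlite3.connect(":memory:")
steps = [
    Migration("001", "CREATE TABLE users (id INTEGER PRIMARY KEY)", "DROP TABLE users"),
    Migration("002", "CREATE TABLE orders (id INTEGER PRIMARY KEY)", "DROP TABLE orders"),
    Migration("003", "CREATE INDEX idx ON missing_table (id)", "DROP INDEX idx"),  # fails
]
succeeded = migrate(conn, steps)
tables = [row[0] for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")]
```

In a pipeline, the boolean result would typically be turned into the job's exit status so that later stages, such as deployment, do not run after a rollback.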

Monitoring and Logging

Monitoring and logging are crucial components of a robust CI/CD pipeline for database migrations. They provide visibility into the migration process, enabling teams to identify and resolve issues promptly, ensuring data integrity, and maintaining application uptime. Implementing effective monitoring and logging strategies helps to build confidence in the migration process and allows for quick responses to any unexpected problems.

Monitoring Database Migration Progress and Identifying Potential Issues

Monitoring the progress of database migrations is essential for understanding their status and identifying potential problems. This proactive approach enables teams to address issues before they escalate, minimizing downtime and data corruption risks.

- Real-time Monitoring: Implement real-time monitoring dashboards that display the status of migration jobs. These dashboards should show the number of migrations completed, the number of migrations in progress, and any errors encountered. Tools like Prometheus, Grafana, and dedicated database monitoring solutions can be leveraged for this purpose. For example, a dashboard might display the percentage of tables migrated, the elapsed time, and the number of rows processed per second.

- Performance Metrics: Track key performance indicators (KPIs) to identify performance bottlenecks. Monitor metrics such as migration duration, CPU usage, memory consumption, and I/O operations. If a migration takes longer than expected or consumes excessive resources, it could indicate an issue with the migration script, database configuration, or underlying infrastructure.

- Error Tracking: Establish a system for tracking and alerting on errors during migrations. This could involve integrating with error tracking tools like Sentry or Rollbar, or configuring custom alerts based on log messages. Analyzing error patterns can help identify recurring issues and improve the robustness of migration scripts. For example, an alert might be triggered if a migration fails due to a constraint violation or a missing index.

- Transaction Monitoring: Monitor database transactions, especially during critical migration steps. Analyze transaction logs to identify long-running transactions, deadlocks, or other transaction-related issues that could impact the migration process. This level of monitoring helps in identifying potential data inconsistencies.

- Infrastructure Monitoring: Monitor the underlying infrastructure, including servers, network, and storage, to ensure they are not impacting the migration process. Check for resource constraints, network latency, and storage I/O bottlenecks. Tools like Nagios, Zabbix, and cloud-provider-specific monitoring services can be used for infrastructure monitoring.
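A small amount of instrumentation around the migration call is often enough to feed the duration and error metrics described above. The sketch below uses only the standard library; `timed_migration`, the 30-second budget, and the in-memory `metrics` list are illustrative assumptions, and in practice the record would be sent to whichever monitoring system you use.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed_migration(name, max_seconds, metrics):
    """Record how long a migration takes and whether it exceeded its budget."""
    start = time.perf_counter()
    status = "ok"
    try:
        yield
    except Exception:
        status = "failed"
        raise
    finally:
        elapsed = time.perf_counter() - start
        metrics.append({
            "migration": name,
            "status": status,
            "seconds": elapsed,
            "over_budget": elapsed > max_seconds,
        })


metrics = []
with timed_migration("add_status_column", max_seconds=30.0, metrics=metrics):
    time.sleep(0.01)  # stand-in for the real migration call
```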

Logging Migration Events and Errors

Comprehensive logging is vital for providing detailed information about the migration process. Logs should capture all relevant events, including the start and end times of migrations, the execution of individual scripts, and any errors encountered. Well-structured logs enable effective troubleshooting and facilitate auditing.

- Event Logging: Log all significant events during the migration process. This includes the start and end of each migration, the execution of individual SQL scripts, and the completion status of each step. Include timestamps, migration IDs, and the user or service that initiated the migration.

- Error Logging: Implement robust error logging to capture detailed information about any errors that occur during migrations. Include the error message, the SQL statement that caused the error, the database server, and the context in which the error occurred. Log the stack trace to facilitate debugging.

- Log Levels: Utilize different log levels (e.g., DEBUG, INFO, WARN, ERROR) to control the verbosity of the logs. Use DEBUG for detailed information useful for troubleshooting, INFO for general operational information, WARN for potential issues, and ERROR for critical failures.

- Structured Logging: Employ structured logging formats, such as JSON, to facilitate parsing and analysis of log data. Structured logs make it easier to search, filter, and aggregate log entries.

- Log Aggregation: Use a log aggregation tool, such as the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk, to collect, store, and analyze logs from multiple sources. This allows for centralized log management and provides powerful search and visualization capabilities.

- Sensitive Data Masking: Implement measures to protect sensitive data in logs, such as masking passwords, API keys, and other confidential information. This helps to prevent unauthorized access to sensitive data.
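Structured logging and sensitive-data masking can be combined in a single formatter. The following sketch uses Python's standard `logging` and `json` modules; the `JsonFormatter` class, the `context` attribute, and the field names in `SENSITIVE_KEYS` are assumptions made for this example.

```python
import json
import logging

SENSITIVE_KEYS = {"password", "api_key"}  # hypothetical field names


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object, masking sensitive fields."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
            "time": self.formatTime(record),
        }
        # Extra fields are passed via logger.info(..., extra={"context": {...}})
        for key, value in getattr(record, "context", {}).items():
            payload[key] = "***" if key in SENSITIVE_KEYS else value
        return json.dumps(payload)


logger = logging.getLogger("migrations.structured")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info(
    "migration finished",
    extra={"context": {"migration_id": "20240102", "password": "s3cret"}},
)
```

Each log line is then a single JSON object that a log aggregation tool can index by field, and the password value never reaches the output.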

Alerting and Notifications During Migration Deployments

Alerting and notifications are critical for proactively responding to issues during migration deployments. Timely alerts enable teams to address problems before they impact application availability or data integrity.

- Alerting Rules: Define clear alerting rules based on specific events and conditions. For example, set up alerts for failed migrations, slow migrations, high CPU usage during migrations, and database errors.

- Notification Channels: Configure multiple notification channels, such as email, Slack, and PagerDuty, to ensure that the appropriate team members are notified promptly. Consider using different channels for different severity levels.

- Severity Levels: Assign severity levels to alerts (e.g., critical, high, medium, low) to prioritize responses. Critical alerts should trigger immediate action, while lower-priority alerts can be addressed during regular working hours.

- Notification Content: Include relevant information in the notification messages, such as the migration ID, the error message, the affected database, and links to relevant logs and dashboards. This helps recipients quickly understand the issue and take appropriate action.

- Automation: Automate the alerting process to minimize manual intervention. Integrate alerting with the CI/CD pipeline to automatically trigger notifications when a migration fails or encounters an issue.

- Incident Management: Establish an incident management process to handle alerts and ensure that issues are resolved efficiently. This should include clear escalation paths and documented procedures for responding to different types of alerts.
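Alerting rules, severity levels, and notification channels fit together as a simple classification step followed by a routing step. The sketch below is purely illustrative: the `ROUTES` table, the channel names, and the thresholds are assumptions, and the actual delivery to email, Slack, or PagerDuty is left out.

```python
from dataclasses import dataclass

# Hypothetical routing table: which channels receive which severity.
ROUTES = {
    "critical": ["pagerduty", "slack"],
    "high": ["slack"],
    "medium": ["email"],
    "low": ["email"],
}


@dataclass(frozen=True)
class Alert:
    migration_id: str
    severity: str
    message: str


def classify(failed, duration_s, budget_s):
    """Derive a severity from the outcome of a migration run."""
    if failed:
        return "critical"
    if duration_s > budget_s:
        return "medium"
    return None  # nothing to report


def route(alert):
    """Return (channel, text) pairs; unknown severities fall back to email."""
    text = f"[{alert.severity.upper()}] migration {alert.migration_id}: {alert.message}"
    return [(channel, text) for channel in ROUTES.get(alert.severity, ["email"])]


severity = classify(failed=True, duration_s=12.0, budget_s=60.0)
notifications = route(Alert("20240102", severity, "constraint violation on users.email"))
```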

Advanced Topics and Best Practices

Managing database migrations in a CI/CD pipeline, while seemingly straightforward, can become complex in large-scale projects or when dealing with intricate database schemas. This section delves into advanced techniques and best practices to ensure smooth and efficient database management throughout your development lifecycle.

Database Refactoring During Migrations

Database refactoring is the process of restructuring the internal design of a database without changing its external behavior. It’s crucial for maintaining a healthy and scalable database, and it often needs to be performed in conjunction with schema migrations.

- Why Refactor? Over time, database schemas can become unwieldy and inefficient. Refactoring addresses issues such as:

  - Performance bottlenecks caused by poorly designed indexes or inefficient queries.

  - Data integrity problems due to inconsistent data types or missing constraints.

  - Difficulties in adding new features or modifying existing ones due to a complex schema.

- Refactoring Techniques: Common database refactoring techniques include:

  - Adding Indexes: Improves query performance by allowing the database to quickly locate specific data.

  - Changing Data Types: Optimizes storage and data integrity by selecting the most appropriate data type for each column.

  - Splitting Tables: Improves performance and organization by dividing a large table into smaller, more manageable ones.

  - Merging Tables: Consolidates similar tables to reduce redundancy and simplify the schema.

  - Renaming Columns or Tables: Improves clarity and maintainability by using more descriptive names.

  - Adding Constraints: Ensures data integrity by enforcing rules such as foreign keys, unique constraints, and check constraints.

- Migration Strategy: Integrating refactoring into migrations requires careful planning:

  - Incremental Changes: Make small, incremental changes rather than large, disruptive ones.

  - Backwards Compatibility: Ensure that new code is compatible with the old database schema during the transition period.

  - Testing: Thoroughly test refactored schemas to ensure they function correctly and do not introduce regressions.

  - Blue/Green Deployments: For complex refactorings, consider using blue/green deployments to minimize downtime. The “blue” environment is the current live database, and the “green” environment is a new, refactored database. Once testing is complete, traffic is switched to the “green” environment.
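Incremental, backwards-compatible refactoring is often done by expanding the schema first and contracting it later. The sketch below renames a column in that style using `sqlite3`; the `users` table and the `name` and `full_name` columns are hypothetical, and the final contract step is intentionally not executed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO users (name) VALUES ('Ada'), ('Grace')")

# Step 1 (expand): add the new column next to the old one.
conn.execute("ALTER TABLE users ADD COLUMN full_name TEXT")

# Step 2 (backfill): copy existing values so both columns agree.
conn.execute("UPDATE users SET full_name = name WHERE full_name IS NULL")

# Old code still reads `name`; new code can already read `full_name`.
old_view = [row[0] for row in conn.execute("SELECT name FROM users ORDER BY id")]
new_view = [row[0] for row in conn.execute("SELECT full_name FROM users ORDER BY id")]

# Step 3 (contract) would drop `name` in a later migration, once no deployed
# code reads it. It is deliberately left out of this sketch.
```

Splitting the rename across releases means that neither the old nor the new application version ever sees a schema it cannot work with.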

Best Practices for Large-Scale Projects

Large-scale projects have unique challenges when it comes to database migrations. These best practices will help ensure that your migrations are reliable, efficient, and maintainable.

- Database Migration as Code: Treat database migrations as code, just like application code. This means version control, code reviews, and automated testing.

- Automation is Key: Automate every step of the migration process, from script generation to deployment. Use CI/CD pipelines to execute migrations automatically.

- Idempotent Migrations: Write migrations that can be run multiple times without causing errors or data corruption. This is crucial for handling failures and retries.

- Batch Processing: For large datasets, use batch processing to avoid locking tables for extended periods. Break down large operations into smaller, more manageable chunks.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to detect and respond to migration failures or performance issues. Monitor migration execution time, success/failure rates, and database resource utilization.

- Database Schema Documentation: Maintain clear and up-to-date documentation of your database schema, including tables, columns, relationships, and indexes. This documentation should be version-controlled and updated alongside your migrations.

- Staging Environment: Use a staging environment that mirrors your production environment for testing migrations before deploying them to production. This helps identify potential issues early on.

- Database User Permissions: Grant the least privilege necessary to database users. Avoid using overly permissive accounts.

- Rollback Plan: Have a well-defined rollback plan in place for every migration. This includes a strategy for reverting changes and restoring the database to a previous state. Test your rollback plan regularly.

- Team Collaboration: Establish clear communication and collaboration processes between developers, database administrators, and operations teams. This ensures that everyone is aware of upcoming migrations and can contribute to their success.
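Two of the practices above, idempotent migrations and batch processing, can be shown together. This is a minimal sketch with `sqlite3`; the helper names `add_column_if_missing` and `backfill_in_batches`, the `status` column, and the batch size are assumptions for illustration, and the table and column names are trusted identifiers rather than user input.

```python
import sqlite3


def add_column_if_missing(conn, table, column, ddl_type):
    """Idempotent: adding the column a second time is a no-op."""
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    if column not in existing:
        conn.execute(f"ALTER TABLE {table} ADD COLUMN {column} {ddl_type}")


def backfill_in_batches(conn, batch_size):
    """Fill users.status in small chunks; return the number of batches run."""
    batches = 0
    while True:
        cursor = conn.execute(
            "UPDATE users SET status = 'active' WHERE id IN "
            "(SELECT id FROM users WHERE status IS NULL LIMIT ?)",
            (batch_size,),
        )
        conn.commit()  # end the transaction so locks are released between batches
        if cursor.rowcount == 0:
            return batches
        batches += 1


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [(f"u{i}",) for i in range(10)])

batch_counts = []
for _ in range(2):  # running the whole migration twice must be harmless
    add_column_if_missing(conn, "users", "status", "TEXT")
    batch_counts.append(backfill_in_batches(conn, batch_size=4))

remaining = conn.execute("SELECT COUNT(*) FROM users WHERE status IS NULL").fetchone()[0]
```

The first run needs three batches for ten rows; the second run finds nothing left to do, which is the property that makes retries after a partial failure safe.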

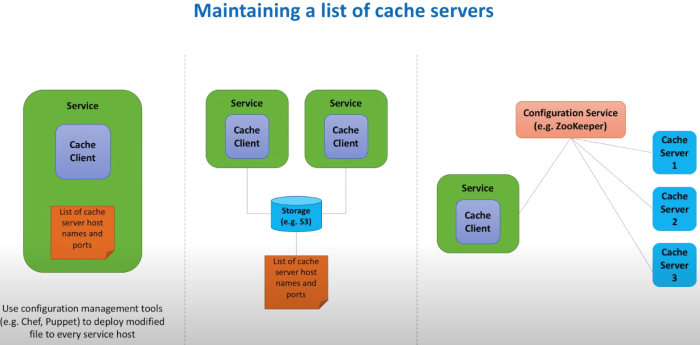

Illustration of a CI/CD Pipeline with Database Migration Steps

The following describes a typical CI/CD pipeline with database migration steps.

Imagine a horizontal flow diagram. At the far left is the “Developer’s Machine,” the starting point. An arrow indicates the code change process. Next is “Version Control (e.g., Git),” where the code, including migration scripts, is committed. A second arrow goes to “CI Server (e.g., Jenkins, GitLab CI, GitHub Actions).” This is the core of the pipeline, which includes these steps:

- Code Commit: The process begins with a developer committing code changes, including database migration scripts, to a version control system (e.g., Git).

- Trigger: The commit triggers the CI/CD pipeline.

- Build Phase:

  - Code Compilation (if applicable): The CI server compiles the application code (e.g., Java, C#).

  - Unit Tests: Unit tests are executed to verify individual components.

- Database Migration Phase:

  - Schema Validation: The CI server validates the database migration scripts.

  - Database Connection: The CI server connects to the target database (e.g., a staging or development database).

  - Migration Execution: The migration tool executes the migration scripts against the database.

  - Integration Tests: Integration tests are run to ensure the application and database work together correctly.

- Testing Phase:

  - Integration Tests: Further integration tests are performed to validate the application’s functionality with the updated database schema.

  - Performance Tests: Performance tests are conducted to assess the impact of the migration on database performance.

  - User Acceptance Testing (UAT): (Optional) UAT is performed in a staging environment to validate the changes.

- Deployment Phase:

  - Artifact Creation: The CI server creates deployable artifacts (e.g., container images, packages).

  - Deployment to Environment: The artifacts are deployed to the target environment (e.g., staging or production).

  - Database Migration in Production: The migration scripts are executed against the production database.

  - Health Checks: Post-deployment health checks are performed to verify the application and database are functioning correctly.

- Monitoring and Feedback:

  - Monitoring: Continuous monitoring of the application and database performance.

  - Alerting: Automated alerts are configured to notify the team of any issues.

  - Feedback Loop: Feedback is provided to developers to improve the code and migration scripts.

The pipeline is designed to be automated, ensuring that database migrations are executed consistently and reliably. The CI/CD server orchestrates each step, from code commit to deployment, including the critical database migration phase. The pipeline also incorporates testing and monitoring to validate changes and ensure a smooth transition. The cycle repeats with each code change, providing a continuous delivery process.
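The ordering guarantee described above, where each stage runs only if the previous one succeeded, can be sketched in a few lines. Real CI servers express this in their own configuration formats; the `run_pipeline` function and the stage names here are hypothetical stand-ins.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order; stop at the first failure."""
    completed = []
    for name, stage in stages:
        if not stage():
            return completed, name  # name of the failed stage
        completed.append(name)
    return completed, None


# Stand-ins for real steps; each returns True on success.
stages = [
    ("build", lambda: True),
    ("unit_tests", lambda: True),
    ("migrate_staging", lambda: True),
    ("integration_tests", lambda: False),  # simulated failure
    ("deploy", lambda: True),
    ("migrate_production", lambda: True),
]
completed, failed_stage = run_pipeline(stages)
```

Because the simulated integration tests fail, neither the deployment nor the production migration is attempted.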

Final Review

In conclusion, successfully managing database migrations within a CI/CD pipeline is crucial for achieving efficient and reliable software delivery. By adopting the strategies and best practices outlined in this guide, from selecting the right tools to implementing robust testing and rollback procedures, you can ensure a smooth and automated deployment process. Embracing these principles not only reduces the risk of errors and downtime but also empowers your development team to iterate faster and deliver value more effectively.

The journey towards optimized database migrations is a continuous one, and this guide provides a solid foundation for achieving excellence in this critical area.

Clarifying Questions

What is the difference between database migrations and schema updates?

Database migrations are a broader concept, encompassing all changes to a database schema, including table creation, modification, and data manipulation. Schema updates are a subset of migrations, focusing specifically on altering the structure of the database (e.g., adding columns, changing data types).

Why is version control important for database migrations?

Version control allows you to track changes to your database schema over time, collaborate effectively with your team, and easily roll back to previous states if necessary. It also enables you to maintain a history of all database changes, making it easier to understand and debug issues.

How can I handle database migrations in a multi-environment setup (e.g., development, staging, production)?

Use environment-specific configuration files or variables to manage settings like database connection strings. Implement conditional logic within your migration scripts to execute environment-specific changes, and ensure your CI/CD pipeline handles deployments to each environment appropriately.
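One common way to keep connection settings out of the migration scripts is to resolve them from environment variables when the pipeline runs. The sketch below assumes two hypothetical variables, `APP_ENV` and `DATABASE_URL`, and placeholder connection strings; adapt the names to whatever your pipeline already provides.

```python
import os

# Hypothetical defaults per environment; real credentials belong in a secret store.
DEFAULT_URLS = {
    "development": "sqlite:///dev.db",
    "staging": "postgresql://staging-db.internal/app",
}


def database_url(env=None):
    """Resolve the connection string for the current environment."""
    env = os.environ if env is None else env
    explicit = env.get("DATABASE_URL")
    if explicit:
        return explicit
    name = env.get("APP_ENV", "development")
    if name not in DEFAULT_URLS:
        raise RuntimeError(f"DATABASE_URL must be set for environment {name!r}")
    return DEFAULT_URLS[name]


dev_url = database_url({"APP_ENV": "development"})
prod_url = database_url({"APP_ENV": "production", "DATABASE_URL": "postgresql://prod/app"})
```

Refusing to fall back to a default for unknown environments is a deliberate choice here: a production run without an explicit connection string fails early instead of migrating the wrong database.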

What are the common challenges in database migrations?

Common challenges include managing data consistency during migrations, handling complex schema changes, ensuring zero-downtime deployments, and coordinating migrations across multiple environments. Careful planning, testing, and the right tools are crucial to mitigate these challenges.

How do I choose the right migration tool for my project?

Consider factors like your database system, project size, team familiarity, and desired features. Evaluate tools like Flyway, Liquibase, and Django migrations based on their ease of use, support for your database, and integration capabilities with your CI/CD pipeline. Choose the tool that best fits your project’s specific needs.