The transition from on-premise infrastructure to the cloud represents a significant architectural shift, demanding a methodical approach to ensure success. This transformation involves not only migrating existing systems but also rethinking operational strategies, security protocols, and cost management. The following sections will provide a detailed roadmap for organizations aiming to navigate this complex process effectively.

This document provides a comprehensive guide to the strategic, technical, and operational aspects of migrating from on-premise infrastructure to the cloud. It encompasses planning, provider selection, migration methods, security considerations, and ongoing management. Each section offers actionable insights and practical advice to help organizations optimize their cloud journey, minimizing risks and maximizing benefits.

Planning and Strategy

The successful migration from on-premise infrastructure to the cloud hinges on meticulous planning and a well-defined strategy. This phase lays the groundwork for a smooth transition, minimizing disruptions and maximizing the benefits of cloud adoption. Careful consideration of existing infrastructure, business needs, and potential risks is crucial for a successful cloud migration.

Assessing Current On-Premise Infrastructure

Understanding the current on-premise environment is the first step. This assessment provides a baseline for comparison and identifies dependencies, bottlenecks, and areas for optimization.

The following steps are involved in a thorough infrastructure assessment:

- Inventory and Documentation: A comprehensive inventory of all hardware, software, and network components is essential. This includes servers, storage devices, network devices, operating systems, applications, and databases. Detailed documentation should be created or updated, including configuration details, dependencies, and interconnections.

- Performance Analysis: Analyze the performance of existing infrastructure, including CPU utilization, memory usage, disk I/O, and network latency. Performance monitoring tools (e.g., Prometheus, Grafana, or vendor-specific monitoring solutions) should be employed to gather data over a representative period. This data is crucial for understanding resource consumption and identifying potential performance bottlenecks.

- Application Dependency Mapping: Identify and map the dependencies between applications and infrastructure components. This includes understanding how applications interact with each other, databases, and other services. This mapping is critical for planning the migration order and ensuring application functionality in the cloud. Network traffic analysis tools (e.g., Wireshark) and application performance monitoring (APM) tools can assist in this process.

- Security Assessment: Evaluate the security posture of the on-premise environment. This includes identifying vulnerabilities, assessing access controls, and reviewing security policies. Penetration testing and vulnerability scanning should be conducted to identify potential security risks.

- Cost Analysis: Determine the total cost of ownership (TCO) of the on-premise infrastructure. This includes hardware costs, software licensing fees, maintenance costs, energy consumption, and personnel costs. This analysis provides a baseline for comparing the costs of the on-premise environment with cloud-based solutions.
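The cost analysis step can be sketched as a small calculation. The example below is a minimal illustration, not a complete TCO model: the `OnPremCosts` fields mirror the cost categories listed above, every figure is a hypothetical placeholder, and spreading hardware cost evenly over its lifetime is an assumption made for simplicity.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    """Cost inputs for one environment; all figures are hypothetical."""
    hardware_purchase: float      # up-front hardware spend
    hardware_lifetime_years: int  # assumed straight-line depreciation period
    software_licenses: float      # per year
    maintenance: float            # per year
    energy: float                 # per year
    personnel: float              # per year


def annual_tco(c: OnPremCosts) -> float:
    """Return yearly TCO, spreading hardware cost evenly over its lifetime."""
    amortized_hardware = c.hardware_purchase / c.hardware_lifetime_years
    return (amortized_hardware + c.software_licenses + c.maintenance
            + c.energy + c.personnel)


costs = OnPremCosts(
    hardware_purchase=200_000, hardware_lifetime_years=5,
    software_licenses=30_000, maintenance=15_000,
    energy=12_000, personnel=120_000,
)
print(f"Estimated annual TCO: ${annual_tco(costs):,.0f}")
```

The resulting annual figure is the baseline against which a cloud cost estimate for the same workloads can be compared.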

Identifying Business Needs and Aligning Them with Cloud Solutions

Aligning business needs with cloud solutions ensures that the migration supports the organization’s strategic goals. This requires a deep understanding of the business requirements and a careful selection of cloud services that meet those needs.

The process of aligning business needs with cloud solutions involves the following:

- Requirements Gathering: Conduct interviews with key stakeholders across different business units to gather requirements. These requirements should include performance, scalability, security, compliance, and cost considerations.

- Cloud Service Selection: Evaluate different cloud service models (IaaS, PaaS, SaaS) and providers (AWS, Azure, GCP) to determine the best fit for the identified business needs. Consider factors such as cost, performance, security, and compliance.

- Solution Architecture Design: Design a cloud-based solution architecture that meets the business requirements. This includes selecting appropriate cloud services, designing network configurations, and defining security policies.

- Proof of Concept (POC): Implement a POC to validate the proposed solution architecture. This involves testing the performance, scalability, and security of the cloud-based solution.

- Cost Modeling: Develop a cost model to estimate the ongoing costs of the cloud-based solution. This should include the costs of cloud services, network bandwidth, and personnel.

Designing a Risk Assessment Framework for Potential Challenges During the Migration

A comprehensive risk assessment framework is essential to identify, assess, and mitigate potential challenges during the migration process. This framework helps to proactively address potential issues and minimize disruptions.

The following elements constitute a robust risk assessment framework:

- Risk Identification: Identify potential risks associated with the cloud migration. These risks can include data loss, security breaches, performance degradation, vendor lock-in, and cost overruns. Risk identification should involve a cross-functional team with expertise in various areas, including IT, security, and business operations.

- Risk Assessment: Assess the likelihood and impact of each identified risk. This can be done using a risk matrix, which plots the likelihood of a risk occurring against the potential impact. The impact can be quantified in terms of cost, time, and business disruption.

- Risk Mitigation Planning: Develop mitigation plans for each identified risk. These plans should outline the steps that will be taken to reduce the likelihood or impact of the risk. Mitigation strategies can include technical controls, process improvements, and insurance.

- Risk Monitoring and Review: Continuously monitor the risks throughout the migration process. This includes tracking the status of mitigation plans and identifying any new risks that may arise. The risk assessment framework should be reviewed and updated regularly to ensure its effectiveness.

- Contingency Planning: Develop contingency plans for high-impact risks. These plans should outline the steps that will be taken to recover from a major disruption. For example, if there’s a risk of data loss, the contingency plan should include a data backup and recovery strategy.
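The risk matrix described above can be approximated by scoring each risk as likelihood multiplied by impact. The sketch below is one possible illustration: the 1 to 5 scales, the band thresholds, and the example scores are all assumptions chosen for demonstration, not values prescribed by any standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    impact: int      # 1 (negligible) to 5 (severe); assumed scale

    @property
    def score(self) -> int:
        """Position in the risk matrix, expressed as a single number."""
        return self.likelihood * self.impact


def rating(risk: Risk) -> str:
    """Map a score to a band; the thresholds are illustrative assumptions."""
    if risk.score >= 15:
        return "high"
    if risk.score >= 6:
        return "medium"
    return "low"


# Hypothetical scores for risks named in the framework above.
risks = [
    Risk("Data loss during transfer", likelihood=2, impact=5),
    Risk("Cost overrun", likelihood=4, impact=3),
    Risk("Vendor lock-in", likelihood=3, impact=2),
    Risk("Security breach", likelihood=3, impact=5),
]

# Highest-scoring risks come first so mitigation effort goes there first.
ranked = sorted(risks, key=lambda r: r.score, reverse=True)
for r in ranked:
    print(f"{r.score:>2}  {rating(r):<6}  {r.name}")
```

Re-scoring the same list at each review gives a simple way to track whether mitigation plans are actually reducing exposure.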

Choosing the Right Cloud Provider

Selecting the appropriate cloud provider is a critical decision in the transition from on-premise infrastructure to the cloud. This choice significantly impacts cost, performance, security, and the overall success of the migration. The following sections provide a comparative analysis of leading cloud providers, guidance on cost optimization, and methods for aligning provider selection with specific business needs and technical expertise.

Comparing Cloud Provider Services

Choosing the right cloud provider necessitates a thorough understanding of the services offered by leading vendors. Each provider – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) – possesses unique strengths, weaknesses, and pricing models. The following table provides a comparative overview to aid in this evaluation:

| Provider | Strengths | Weaknesses | Pricing Models |
|---|---|---|---|
| AWS | | | |
| Azure | | | |
| GCP | | | |

Evaluating Cloud Provider Pricing Models

Effective cost optimization is crucial for successful cloud adoption. Understanding and leveraging cloud provider pricing models is key to controlling expenses.

- Pay-as-you-go: This model is suitable for fluctuating workloads and allows for scalability without upfront investment. However, it requires careful monitoring to avoid unexpected costs.

- Reserved Instances/Committed Use Discounts: These models offer significant discounts for committing to resource usage for a specific duration (e.g., one or three years). They are ideal for predictable workloads. For instance, AWS Reserved Instances can reduce the cost of an EC2 instance by up to 72% compared to On-Demand pricing, providing substantial savings for consistently running workloads.

- Spot Instances/Preemptible VMs: These provide the lowest cost but come with the risk of interruption. They are suitable for fault-tolerant workloads. Google Cloud’s Preemptible VMs can offer discounts of up to 80% compared to standard VMs, making them cost-effective for batch processing or non-critical tasks.

- Savings Plans: These flexible pricing models, available from AWS, allow you to commit to a certain amount of compute usage (measured in dollars per hour) in exchange for discounts. This can provide savings across different instance types and families.

Careful monitoring of resource utilization and regular cost analysis are essential for optimizing cloud spending. Utilize cloud provider cost management tools to identify areas for improvement and to track spending against budgets.
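The trade-off between pay-as-you-go and commitment-based pricing can be illustrated with a break-even calculation. This is a simplified sketch under stated assumptions: the hourly rate and discount are hypothetical, a month is approximated as 730 hours, and the commitment is assumed to be billed for every hour whether or not the instance runs.

```python
HOURS_PER_MONTH = 730  # common approximation for monthly billing


def monthly_cost_on_demand(hourly_rate: float, utilization: float) -> float:
    """Pay only for the fraction of the month the instance actually runs."""
    return hourly_rate * HOURS_PER_MONTH * utilization


def monthly_cost_committed(hourly_rate: float, discount: float) -> float:
    """A commitment is assumed to be billed for every hour, used or not."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def break_even_utilization(discount: float) -> float:
    """Utilization above which the commitment beats on-demand pricing."""
    return 1 - discount


rate, discount = 0.10, 0.40  # hypothetical hourly rate (USD) and discount
for utilization in (0.3, 0.6, 0.9):
    on_demand = monthly_cost_on_demand(rate, utilization)
    committed = monthly_cost_committed(rate, discount)
    cheaper = "committed" if committed < on_demand else "on-demand"
    print(f"utilization {utilization:.0%}: on-demand ${on_demand:.2f}, "
          f"committed ${committed:.2f} -> {cheaper}")
```

Under these assumptions, a workload that runs less than `1 - discount` of the time is cheaper on pay-as-you-go, which is why commitments suit steady workloads.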

Selecting a Provider Based on Business Requirements and IT Expertise

The optimal cloud provider selection hinges on aligning the provider’s capabilities with the specific business needs and the existing IT expertise within the organization.

- Business Requirements: Consider factors such as data residency requirements, industry-specific compliance standards (e.g., HIPAA, GDPR), and the geographic distribution of the user base. For example, a company operating in the European Union must ensure its cloud provider complies with GDPR regulations, which may influence the choice of provider and data center locations.

- IT Expertise: Assess the skills and experience of the IT team. If the team has extensive experience with Microsoft technologies, Azure might be a more natural fit. If the team is proficient in open-source technologies and data analytics, GCP could be a better choice. Training and upskilling may be necessary to bridge any skill gaps.

- Workload Characteristics: Analyze the nature of the workloads to be migrated. Compute-intensive applications may benefit from the performance and pricing of specific instance types offered by each provider. Data-intensive applications might favor providers with robust data analytics and storage solutions. For example, if an organization relies heavily on containerized applications, GCP’s Kubernetes service (GKE) may be a more suitable choice due to its advanced features and integration.

- Vendor Lock-in: Be aware of the potential for vendor lock-in. While it’s often beneficial to leverage a provider’s specialized services, avoid becoming overly dependent on a single provider’s proprietary offerings. Consider adopting a multi-cloud strategy or using open-source technologies to maintain flexibility.

A pilot project or proof-of-concept (POC) can be invaluable in evaluating different cloud providers. This allows the organization to test various services, assess performance, and gain hands-on experience before committing to a full-scale migration.

Assessing Current Infrastructure

A thorough assessment of the existing on-premise infrastructure is paramount for a successful cloud migration. This phase provides the foundational data necessary to plan, execute, and optimize the transition. It involves a comprehensive understanding of hardware, software, dependencies, and potential compatibility issues. Neglecting this step can lead to unforeseen challenges, cost overruns, and performance bottlenecks during and after the migration.

Inventorying Existing Hardware and Software

Creating a detailed inventory of the current infrastructure is the initial step. This process involves identifying all hardware and software components, their configurations, and their interdependencies. Several tools and techniques can be employed to achieve this objective.

- Automated Discovery Tools: These tools automatically scan the network and identify hardware and software assets. Examples include:

  - Network Scanners: Tools like Nmap, OpenVAS, and Nessus can identify active hosts, operating systems, open ports, and running services. These tools use techniques like TCP connect scans, SYN scans, and UDP scans to gather information.

  - Agent-Based Inventory Tools: Agents are installed on individual servers and workstations to collect detailed hardware and software information. Examples include:

    - System Center Configuration Manager (SCCM): Microsoft’s SCCM provides comprehensive hardware and software inventory capabilities, including hardware details, installed software, and software usage data. It operates by deploying agents to client devices that report back to a central management server.

    - Ansible: Ansible is agentless (it connects over SSH or WinRM rather than installing an agent) and is primarily a configuration management tool, but it can also be used to gather inventory data by running ad-hoc commands and gathering facts about managed hosts.

- Manual Documentation: While automated tools are essential, manual documentation is also crucial, especially for specialized systems or configurations. This involves:

  - Spreadsheets: Using spreadsheets (e.g., Microsoft Excel, Google Sheets) to record hardware specifications (CPU, RAM, storage), software versions, and licensing information.

  - Configuration Management Databases (CMDBs): CMDBs, such as ServiceNow or BMC Remedy, provide a centralized repository for storing and managing IT asset information. These databases allow for the tracking of relationships between assets and can be used to generate reports and visualizations.

- Software License Auditing: Accurate tracking of software licenses is critical to ensure compliance and avoid penalties. This involves:

  - License Management Tools: Tools like Flexera’s FlexNet Manager and Snow Software can automate license tracking, usage monitoring, and compliance reporting.

  - Manual Audits: Regularly reviewing software installations and license agreements to ensure that all software is properly licensed.
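To make the idea of an inventory record concrete, the sketch below collects a few basic facts about the host it runs on using only the Python standard library. It is a toy illustration and not a substitute for the discovery tools named above; the field names in the record are arbitrary choices.

```python
import json
import os
import platform
import socket


def collect_host_facts() -> dict:
    """Gather a minimal inventory record for the local machine."""
    return {
        "hostname": socket.gethostname(),
        "os": platform.system(),           # e.g. "Linux" or "Windows"
        "os_release": platform.release(),
        "architecture": platform.machine(),
        "cpu_count": os.cpu_count(),       # logical CPUs; may be None
        "python_version": platform.python_version(),
    }


facts = collect_host_facts()
print(json.dumps(facts, indent=2))
```

Records like this, gathered from every host and stored in a spreadsheet or CMDB, form the raw material for the dependency and compatibility analysis that follows.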

Identifying Dependencies and Compatibility Issues

Understanding the dependencies between applications and systems is crucial for planning the migration order and minimizing downtime. Compatibility issues can arise when migrating applications to the cloud due to differences in operating systems, databases, or network configurations.

- Dependency Mapping: This involves identifying how different applications and systems rely on each other.

  - Application Dependency Mapping Tools: Tools like AppDynamics and New Relic can automatically map application dependencies by analyzing network traffic and code execution.

  - Manual Analysis: Examining application configuration files, database schemas, and network diagrams to identify dependencies. This might involve using tools like `netstat` or `lsof` to determine network connections and file usage.

- Compatibility Assessment: Evaluating the compatibility of existing applications with the target cloud environment.

  - Operating System Compatibility: Ensuring that the operating systems used by the applications are supported by the cloud provider. For example, some cloud providers may not support older versions of Windows Server.

  - Database Compatibility: Verifying that the database systems are compatible with the cloud provider’s offerings. This might involve migrating to a cloud-native database service or reconfiguring the existing database.

  - Application Code Analysis: Analyzing the application code to identify any dependencies on on-premise infrastructure or libraries that are not available in the cloud.

- Network Configuration Analysis: Evaluating network configurations to identify potential issues.

  - Firewall Rules: Reviewing firewall rules to ensure that they allow the necessary traffic to and from the cloud environment.

  - DNS Configuration: Verifying that DNS records are correctly configured to resolve to the cloud resources.
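Once dependencies are mapped, a migration order can be derived from them. The sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to group components into waves. The component names are hypothetical, and it assumes that a component's dependencies should be migrated before the component itself, which may not hold for every migration plan.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each component lists what it depends on.
dependencies = {
    "web-frontend": {"orders-api", "auth-service"},
    "orders-api": {"orders-db", "auth-service"},
    "auth-service": {"users-db"},
    "orders-db": set(),
    "users-db": set(),
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()  # raises graphlib.CycleError on circular dependencies

# Each wave holds components whose dependencies were all migrated earlier,
# so everything inside one wave could be moved in parallel.
waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    waves.append(ready)
    sorter.done(*ready)

for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

A `CycleError` at the `prepare()` step is itself useful information: it signals components that depend on each other and probably need to move together.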

Documenting the Current State of the On-Premise Environment

Comprehensive documentation is essential for a smooth transition. This documentation serves as a reference point for planning, execution, and troubleshooting during the migration.

- Infrastructure Diagrams: Creating visual representations of the current infrastructure, including servers, network devices, and application dependencies. These diagrams can be created using tools like Lucidchart, draw.io, or Microsoft Visio. A well-structured diagram will clearly show the flow of data and interactions between different components.

- Configuration Documentation: Documenting the configuration of servers, applications, and network devices.

  - Server Configuration: Recording the operating system version, installed software, network settings, and security configurations.

  - Application Configuration: Documenting the configuration files, database connection strings, and other settings specific to each application.

  - Network Configuration: Documenting the IP addresses, subnet masks, gateway addresses, and other network settings.

- Performance Metrics: Collecting performance data to establish a baseline for comparison after the migration.

  - CPU Utilization: Monitoring the CPU usage of servers and applications.

  - Memory Usage: Monitoring the memory usage of servers and applications.

  - Disk I/O: Monitoring the disk input/output operations per second (IOPS).

  - Network Throughput: Monitoring the network traffic volume.

- Security Documentation: Documenting security configurations, including firewall rules, access controls, and security policies.
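A performance baseline is easier to compare after migration when it is reduced to a few summary statistics. The following sketch summarizes a series of CPU utilization samples. The sample values are invented, and the choice of mean, 95th percentile, and maximum is only one reasonable way to describe a baseline.

```python
import statistics


def summarize(samples: list[float]) -> dict:
    """Reduce raw metric samples to a small baseline summary."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(samples),
        "p95": cuts[94],
        "max": max(samples),
    }


# Hypothetical CPU utilization samples (percent) from one server.
cpu_samples = [22, 25, 31, 28, 35, 40, 38, 90, 33, 27]
baseline = summarize(cpu_samples)
for name, value in baseline.items():
    print(f"{name}: {value:.1f}%")
```

The 95th percentile is included because a single spike can dominate the maximum, while the mean alone hides peaks that matter for sizing cloud instances.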

Migration Methods and Approaches

Successfully transitioning from an on-premise infrastructure to a cloud environment hinges on selecting and executing the appropriate migration strategy. The chosen approach dictates the complexity, cost, and timeline of the migration, influencing the overall business impact. Understanding the various methods and their suitability for different application types is crucial for a successful cloud adoption journey.

Migration Strategies

Several distinct migration strategies exist, each with its own characteristics, advantages, and disadvantages. The selection process involves careful consideration of factors like application complexity, business requirements, budget constraints, and desired level of cloud optimization. The following outlines the primary migration strategies.

- Rehosting (Lift and Shift): This involves moving applications and their associated infrastructure to the cloud with minimal or no modifications. The existing virtual machines (VMs) or servers are essentially replicated in the cloud environment. This approach is often the quickest and simplest way to migrate, particularly for applications that are not heavily dependent on on-premise-specific configurations. The core advantage lies in its speed and lower initial cost. However, it may not fully leverage the benefits of the cloud, such as scalability and cost optimization, if the underlying architecture is not adapted.

- Replatforming (Lift, Tinker, and Shift): Replatforming involves making some modifications to the application to leverage cloud-native services, without fundamentally changing its core architecture. This might include migrating to a managed database service (e.g., Amazon RDS, Azure SQL Database) or using a cloud-based application server. This approach offers a balance between effort and cloud optimization. The application may gain some benefits from cloud-native services without requiring a complete rewrite.

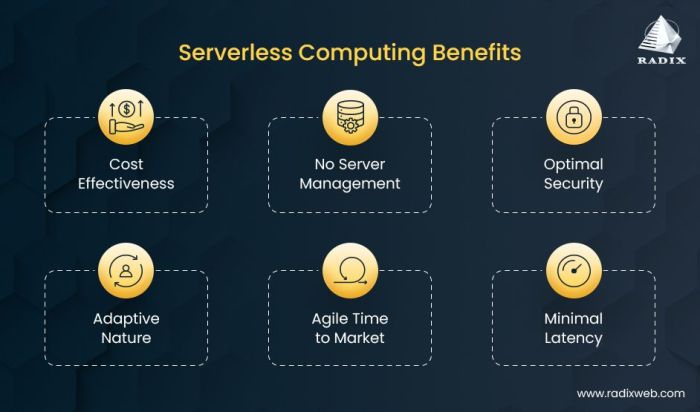

- Refactoring (Re-architecting): This involves redesigning and rewriting the application to take full advantage of cloud-native features and services. This strategy often leads to the most significant benefits in terms of scalability, performance, and cost optimization. It may involve breaking down a monolithic application into microservices, adopting serverless computing, or utilizing containerization technologies like Docker and Kubernetes. While offering the most significant long-term advantages, refactoring is the most time-consuming and resource-intensive approach.

- Repurchasing: This involves replacing the existing application with a cloud-native Software-as-a-Service (SaaS) solution. This is a common approach for applications like Customer Relationship Management (CRM), Enterprise Resource Planning (ERP), and email. The advantage is that the customer no longer needs to manage the underlying infrastructure, but the trade-off is often a loss of control over the application’s customization and data location.

- Retiring: Some applications may no longer be necessary or relevant in the cloud environment. Retiring these applications can reduce costs and simplify the migration process. This involves identifying and decommissioning applications that are no longer used or have become obsolete.

- Retaining: Some applications are best kept on-premise, for various reasons, such as compliance requirements, data residency needs, or specific performance demands. Hybrid cloud strategies often involve retaining some applications on-premise while migrating others to the cloud.

Step-by-Step Procedure for Lift and Shift Migration

The “lift and shift” strategy offers a straightforward path to cloud migration, primarily suited for applications with minimal dependencies on on-premise infrastructure. A well-defined procedure ensures a smooth and successful transition.

- Assessment and Planning: Conduct a thorough assessment of the existing on-premise environment. Identify all applications, their dependencies, resource requirements (CPU, memory, storage, network), and potential compatibility issues with the target cloud platform. Create a detailed migration plan, including timelines, resource allocation, and rollback strategies.

- Choose a Cloud Provider and Services: Select the appropriate cloud provider (e.g., AWS, Azure, Google Cloud) based on factors such as pricing, services offered, geographic regions, and existing expertise. Identify the cloud services needed to replicate the on-premise infrastructure, such as compute instances (VMs), storage, and networking.

- Prepare the Target Environment: Configure the cloud environment, including virtual networks, subnets, security groups, and access controls. Ensure the cloud infrastructure meets the application’s performance and security requirements. This step involves setting up the virtual machines, storage, and network configurations in the cloud.

- Data Migration: Transfer the application data to the cloud. The method of data migration depends on the volume of data, network bandwidth, and downtime tolerance. Options include using cloud-native data transfer services, virtual machine disk image transfer, or database replication tools. For large datasets, consider using offline data transfer methods (e.g., AWS Snowball, Azure Data Box) to minimize network transfer times.

- Application Migration: Deploy the application instances to the cloud environment. This involves creating virtual machine instances in the cloud and deploying the application components. Ensure that the application is configured correctly and that all dependencies are installed.

- Testing and Validation: Thoroughly test the migrated application in the cloud environment. Perform functional testing, performance testing, and security testing to ensure that the application behaves as expected and meets all service level agreements (SLAs).

- Cutover and Go-Live: Once the application has been successfully tested, perform the cutover to the cloud environment. This involves redirecting traffic to the cloud-based application. Minimize downtime by using techniques such as DNS cutover or load balancing.

- Post-Migration Optimization: After the migration is complete, optimize the cloud environment for performance, cost, and security. This may involve resizing instances, optimizing storage, and implementing auto-scaling. Continuously monitor the application’s performance and make adjustments as needed.

Determining the Most Appropriate Migration Approach for Different Applications

Selecting the right migration approach is critical for success. The best strategy varies based on the application’s characteristics, business objectives, and the resources available.

- Application Characteristics:

  - Monolithic Applications: For monolithic applications, a “lift and shift” or “replatforming” approach may be suitable for initial migration, with refactoring considered for future optimization.

  - Microservices: Microservices architectures are ideally suited for “refactoring” and leveraging cloud-native services like containerization (Docker, Kubernetes) and serverless functions.

  - Legacy Applications: Legacy applications often benefit from a “lift and shift” approach initially, followed by “replatforming” or “refactoring” as time and resources allow.

  - SaaS-Ready Applications: Applications that can be easily replaced with SaaS solutions are best suited for “repurchasing.”

- Business Objectives:

  - Speed of Migration: “Lift and shift” is the fastest approach.

  - Cost Optimization: “Refactoring” often yields the most significant cost savings in the long run by leveraging cloud-native features.

  - Minimize Downtime: “Lift and shift” or “replatforming” with careful planning can minimize downtime.

  - Innovation and Agility: “Refactoring” enables greater innovation and agility by leveraging cloud-native services and DevOps practices.

- Resource Availability:

  - Limited Resources: “Lift and shift” or “replatforming” require fewer resources and less specialized expertise.

  - Dedicated Team: “Refactoring” requires a dedicated team with cloud expertise and the ability to re-architect the application.
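The selection criteria above can be expressed as a simple rule-based helper. The sketch below is deliberately crude: the `AppProfile` fields and the order in which the rules are checked are assumptions made for illustration, and a real decision would weigh these factors rather than apply them as strict rules.

```python
from dataclasses import dataclass


@dataclass
class AppProfile:
    """Hypothetical attributes describing one application."""
    name: str
    in_use: bool = True
    must_stay_on_premise: bool = False   # e.g. compliance or data residency
    saas_replacement_available: bool = False
    needs_fast_migration: bool = False
    has_cloud_engineering_team: bool = False


def suggest_strategy(app: AppProfile) -> str:
    """Return a first-pass strategy; rule order is an illustrative assumption."""
    if not app.in_use:
        return "retire"
    if app.must_stay_on_premise:
        return "retain"
    if app.saas_replacement_available:
        return "repurchase"
    if app.needs_fast_migration:
        return "rehost"
    if app.has_cloud_engineering_team:
        return "refactor"
    return "replatform"


portfolio = [
    AppProfile("legacy-reporting", in_use=False),
    AppProfile("crm", saas_replacement_available=True),
    AppProfile("billing", needs_fast_migration=True),
    AppProfile("storefront", has_cloud_engineering_team=True),
    AppProfile("intranet"),
]
for app in portfolio:
    print(f"{app.name}: {suggest_strategy(app)}")
```

Running a whole application portfolio through a helper like this gives a starting list to review with stakeholders, not a final answer.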

Data Migration Strategies

Data migration represents a critical phase in the transition from on-premise infrastructure to the cloud. The successful transfer of data, encompassing its integrity, security, and minimal disruption, is paramount to a smooth and efficient cloud adoption. The strategies employed during this phase directly influence the overall success of the migration, impacting business continuity, operational efficiency, and the realization of cloud-based benefits.

Data Transfer Methods

Several methods exist for transferring data from on-premise systems to the cloud, each with its own advantages and disadvantages depending on factors like data volume, network bandwidth, and downtime requirements.

- Online Migration: This approach involves transferring data over the network in real-time or near real-time. This method is suitable for smaller datasets and when minimal downtime is a priority. The primary advantage is its speed and ability to maintain data synchronization. However, it’s highly dependent on network bandwidth and stability. For example, a small e-commerce business with a limited customer database might opt for an online migration to ensure uninterrupted service.

- Offline Migration: Offline migration, also known as “bulk transfer,” involves physically moving data on removable storage devices (e.g., hard drives, tapes) or dedicated transfer appliances. This method is often preferred for large datasets or when network bandwidth is a constraint. For very large volumes it typically completes sooner than a network transfer, but it requires more planning and potentially extended downtime. For instance, a large financial institution with petabytes of archived data would likely utilize offline migration, leveraging services like AWS Snowball or Azure Data Box to transport the data physically.

- Hybrid Migration: This approach combines both online and offline methods. It often starts with an offline bulk transfer of the majority of the data, followed by an online synchronization of incremental changes. This strategy balances speed and downtime. It is often used when a business wants to move a large volume of data with minimal downtime. A large media company, for example, might use a hybrid approach to migrate its video archives, initially using a physical appliance for the bulk transfer and then synchronizing new content online.
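Choosing between online and offline transfer often comes down to a rough estimate of how long the network transfer would take. The sketch below shows that arithmetic. The 80% link efficiency is an assumed figure, terabytes are treated as decimal (10^12 bytes), and the example volumes and bandwidths are hypothetical.

```python
def transfer_days(data_tb: float, bandwidth_mbps: float,
                  efficiency: float = 0.8) -> float:
    """Estimate days needed to move data_tb terabytes over the network.

    efficiency is the assumed fraction of nominal bandwidth actually achieved.
    """
    bits = data_tb * 1e12 * 8                       # decimal TB to bits
    effective_bits_per_second = bandwidth_mbps * 1e6 * efficiency
    return bits / effective_bits_per_second / 86_400  # seconds per day


# Hypothetical scenarios: (description, terabytes, link speed in Mbps).
scenarios = [
    ("small customer database", 0.5, 100),
    ("departmental file shares", 10, 1_000),
    ("petabyte-scale archive", 1_000, 1_000),
]
for label, size_tb, mbps in scenarios:
    print(f"{label}: about {transfer_days(size_tb, mbps):.1f} days")
```

When the estimate runs to weeks or months, an offline or hybrid approach becomes the more practical option.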

Data Integrity and Security During Migration

Maintaining data integrity and security throughout the migration process is crucial. Several measures are essential to protect data from loss, corruption, and unauthorized access.

- Data Validation: Implementing rigorous data validation checks at both the source and destination systems is vital. This involves verifying data consistency, format, and completeness. Before the migration, perform checksums and hash validations on the source data. After the transfer, compare these values with the destination data to confirm data integrity. This process helps to identify and correct any data discrepancies. For instance, a database migration might involve comparing the number of records, data types, and specific field values before and after the migration.

- Encryption: Employing encryption at rest and in transit is paramount. Data should be encrypted both during the transfer process (using protocols like HTTPS or TLS) and after it resides in the cloud storage. Use robust encryption algorithms (e.g., AES-256) to protect the data from unauthorized access. Cloud providers offer built-in encryption capabilities that should be leveraged. Consider the use of hardware security modules (HSMs) for key management if enhanced security is needed.

- Access Controls and Authentication: Strictly control access to data during the migration process. Implement robust authentication and authorization mechanisms to limit access to authorized personnel only. Use multi-factor authentication (MFA) for all users involved in the migration. Review and regularly update access controls to prevent unauthorized access. Regularly audit access logs to detect any suspicious activity.

- Data Loss Prevention (DLP) Measures: Implement DLP measures to prevent sensitive data from leaving the secure environment. This might include monitoring network traffic, using data classification tools, and applying data masking or redaction techniques. Consider the use of cloud-native DLP solutions to identify and protect sensitive information.

- Regular Backups: Create regular backups of both the on-premise and cloud data during the migration. This safeguards against data loss due to unforeseen issues. Test the backups regularly to ensure their recoverability.
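The checksum comparison described under data validation can be sketched with the standard library's `hashlib`. The example below hashes files with SHA-256 and compares source and destination digests. The file names are hypothetical, and it assumes both copies are reachable as local paths, which in practice may require downloading or mounting the cloud copy first.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(source: Path, destination: Path) -> bool:
    """Return True when both files have identical SHA-256 digests."""
    return sha256_of(source) == sha256_of(destination)


# Demonstration with temporary files standing in for source and target.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "source.bin"
    good = Path(tmp) / "copy_ok.bin"
    bad = Path(tmp) / "copy_corrupt.bin"
    src.write_bytes(b"customer records")
    good.write_bytes(b"customer records")
    bad.write_bytes(b"customer recorts")  # one byte differs
    print("intact copy matches:", verify_copy(src, good))
    print("corrupt copy matches:", verify_copy(src, bad))
```

Recording the source digests before the transfer starts also leaves an audit trail that can be checked again later.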

Minimizing Downtime During Data Migration

Reducing downtime is a key objective, particularly for businesses that require continuous operation.

- Careful Planning and Preparation: Develop a detailed migration plan that includes a timeline, resource allocation, and a comprehensive testing strategy. Thoroughly test the migration process in a non-production environment before the actual migration. Conduct dry runs to identify potential issues and refine the process.

- Choosing the Right Migration Method: Select the migration method that best aligns with the organization’s downtime tolerance. For instance, online migration is suitable for applications that can tolerate little downtime, while offline migration might be necessary for larger datasets, even though it entails longer periods of unavailability.

- Staged Migration: Implement a staged migration approach, migrating data in phases. Migrate non-critical data first, followed by critical data. This allows for testing and validation at each stage and reduces the overall impact of any issues.

- Parallel Run: Run both the on-premise and cloud systems in parallel for a period. This allows for testing and validation of the cloud environment before switching over completely. It also provides a fallback option if any issues arise.

- Automation: Automate as much of the migration process as possible to reduce manual intervention and potential errors. Utilize scripting and automation tools to streamline data transfer, validation, and configuration.

- Monitoring and Optimization: Continuously monitor the migration process and optimize performance as needed. Use monitoring tools to track data transfer speeds, identify bottlenecks, and troubleshoot any issues. Regularly review and optimize network settings, storage configurations, and other factors to improve the efficiency of the migration.
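The automated validation mentioned above can be sketched as a checksum comparison between a source tree and its migrated copy. This is a minimal illustration, not a complete migration tool: it assumes both trees are reachable as local or mounted paths, and the names `sha256_of` and `compare_trees` are hypothetical. When the target is object storage, the digests would more likely come from the provider's own metadata.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_trees(source_root: Path, target_root: Path) -> dict[str, list[str]]:
    """Check every file under source_root against its counterpart in target_root."""
    report: dict[str, list[str]] = {"missing": [], "mismatched": [], "verified": []}
    for source_file in sorted(p for p in source_root.rglob("*") if p.is_file()):
        relative = source_file.relative_to(source_root)
        target_file = target_root / relative
        if not target_file.is_file():
            report["missing"].append(relative.as_posix())
        elif sha256_of(source_file) != sha256_of(target_file):
            report["mismatched"].append(relative.as_posix())
        else:
            report["verified"].append(relative.as_posix())
    return report
```

Running such a check after each stage of a staged migration gives a simple pass/fail signal before the next stage starts.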

Security Considerations

The transition from on-premise infrastructure to a cloud environment necessitates a comprehensive reassessment of security protocols. Cloud environments introduce new threat vectors and necessitate a shift in responsibility. Effective security management involves a proactive approach, incorporating robust access controls, data encryption, and continuous monitoring to mitigate risks and ensure data integrity and confidentiality. Implementing these measures is crucial for maintaining compliance with regulatory requirements and safeguarding sensitive information.

Security Best Practices for Cloud Environments

Adopting security best practices is essential for securing cloud environments. This includes a layered approach to security, incorporating various controls at different levels of the infrastructure.

- Identity and Access Management (IAM): Implement strong IAM policies to control access to cloud resources. This includes multi-factor authentication (MFA), role-based access control (RBAC), and the principle of least privilege, granting users only the necessary permissions. Regularly review and audit user access to ensure ongoing compliance.

- Data Encryption: Encrypt data at rest and in transit. Utilize encryption keys managed securely within the cloud provider’s key management service or a dedicated Hardware Security Module (HSM). Implement Transport Layer Security (TLS) for secure communication between applications and users.

- Network Security: Configure virtual networks with appropriate firewalls, intrusion detection/prevention systems (IDS/IPS), and network segmentation to isolate workloads and control traffic flow. Regularly update network security configurations to address emerging threats.

- Vulnerability Management: Implement a robust vulnerability management program, including regular scanning of systems and applications for vulnerabilities. Patch systems promptly to address identified security flaws. Utilize automated vulnerability scanning tools to streamline the process.

- Security Monitoring and Logging: Implement comprehensive security monitoring and logging to detect and respond to security incidents. Collect and analyze logs from various sources, including operating systems, applications, and network devices. Establish alerts and notifications for suspicious activities.

- Incident Response: Develop and maintain an incident response plan to effectively handle security incidents. This plan should outline procedures for detecting, containing, eradicating, and recovering from security breaches. Conduct regular incident response drills to test the plan’s effectiveness.

- Compliance and Governance: Adhere to relevant industry standards and regulatory requirements, such as GDPR, HIPAA, or PCI DSS. Implement security policies and procedures to ensure compliance. Conduct regular audits to assess compliance posture.

- Regular Security Assessments: Conduct periodic security assessments, including penetration testing and vulnerability assessments, to identify and address security weaknesses. Use these assessments to refine security policies and procedures.

Comparison of Security Models

Understanding the differences between various security models is crucial for establishing the appropriate security responsibilities in a cloud environment. The choice of model depends on the service model (IaaS, PaaS, SaaS) and the level of control required.

- Shared Responsibility Model: This is the most common security model in cloud computing. The cloud provider is responsible for the security *of* the cloud, while the customer is responsible for the security *in* the cloud. The division of responsibilities varies depending on the service model.

- Infrastructure as a Service (IaaS): The cloud provider is responsible for the physical infrastructure, including servers, storage, and networking. The customer is responsible for the operating systems, applications, data, and user access management.

- Platform as a Service (PaaS): The cloud provider manages the underlying infrastructure and operating systems. The customer is responsible for the applications and data.

- Software as a Service (SaaS): The cloud provider manages the entire stack, including the infrastructure, operating systems, and applications. The customer typically has limited control over security configurations but remains responsible for managing user access and for the data it places in the service.

- Customer-Managed Security: In this model, the customer controls nearly every security layer above the provider’s physical infrastructure. This is typically used in IaaS environments where the customer has full control over the operating systems and applications.

- Provider-Managed Security: In this model, the cloud provider is responsible for the majority of security tasks. This is common in SaaS environments where the provider manages the entire stack.

Guide to Implement Access Controls and Data Encryption

Robust access controls and data encryption are fundamental security practices. Together they protect data from unauthorized access and preserve its confidentiality.

- Access Controls Implementation:

- Identity and Access Management (IAM): Use IAM services to manage user identities, roles, and permissions. Implement MFA to enhance account security.

- Role-Based Access Control (RBAC): Define roles with specific permissions and assign users to these roles. This simplifies access management and minimizes the risk of privilege escalation.

- Principle of Least Privilege: Grant users only the minimum necessary permissions to perform their job functions. Regularly review user access to ensure compliance with the principle of least privilege.

- Regular Access Audits: Conduct regular audits of user access to identify and address any unauthorized access or permission issues.

- Data Encryption Implementation:

- Encryption at Rest: Encrypt data stored on disks, databases, and other storage systems. Use encryption keys managed by a key management service or a dedicated HSM.

- Encryption in Transit: Use TLS (the successor to the deprecated SSL protocols) to encrypt data transmitted over networks. Configure applications to use secure protocols for communication.

- Key Management: Securely store and manage encryption keys. Implement key rotation policies to minimize the impact of compromised keys. Consider using a Hardware Security Module (HSM) for enhanced key protection.

- Data Loss Prevention (DLP): Implement DLP solutions to monitor and prevent sensitive data from leaving the cloud environment. This includes data classification and content filtering.
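The access control points above (RBAC, least privilege, MFA, and regular audits) can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: the role names, permission strings, and the `User`, `is_allowed`, and `unused_permissions` names are all hypothetical, and a real deployment would rely on the cloud provider's IAM service rather than application code like this.

```python
from dataclasses import dataclass, field

# Hypothetical roles and permission strings, for illustration only.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "migration-operator": frozenset({"storage:read", "storage:write"}),
    "auditor": frozenset({"logs:read"}),
    "admin": frozenset({"storage:read", "storage:write", "logs:read", "iam:manage"}),
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)
    mfa_enabled: bool = False


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: require MFA and at least one role granting the permission."""
    if not user.mfa_enabled:
        return False
    return any(
        permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in user.roles
    )


def unused_permissions(user: User, used: set[str]) -> set[str]:
    """Return granted permissions that were never exercised (audit candidates)."""
    granted: set[str] = set()
    for role in user.roles:
        granted |= ROLE_PERMISSIONS.get(role, frozenset())
    return granted - used
```

Comparing granted permissions against those actually used, as `unused_permissions` does, is one simple way to find grants that an access audit could remove.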

Network Configuration and Management

Effective network configuration and management are critical for a successful cloud migration. The network serves as the backbone for all cloud-based operations, dictating performance, security, and overall user experience. Neglecting this aspect can lead to latency issues, security vulnerabilities, and service disruptions. Proper network design and management are therefore essential to leverage the full potential of cloud infrastructure.

Configuring Network Settings for Cloud Connectivity

Establishing connectivity between on-premise resources and the cloud environment necessitates careful configuration of network settings. This involves defining virtual networks, subnets, and routing tables to ensure seamless communication. The process varies slightly depending on the chosen cloud provider (AWS, Azure, GCP, etc.), but the underlying principles remain consistent.

To begin, a virtual private cloud (VPC) or equivalent construct must be created within the cloud provider’s infrastructure. This VPC acts as an isolated network environment, providing a logical boundary for cloud resources. Within the VPC, subnets are defined to segment the network and organize resources based on function, security requirements, or geographic location.

Establishing a virtual network involves several key steps:

- Defining IP Address Ranges: Each VPC and subnet requires a unique IP address range, typically using CIDR notation (e.g., 10.0.0.0/16 for the VPC, 10.0.1.0/24 for a subnet). Careful planning of these ranges is crucial to avoid conflicts and ensure future scalability.

- Configuring Subnets: Subnets are created within the VPC and assigned to different availability zones or regions to enhance fault tolerance and geographic distribution. Each subnet requires a defined IP address range.

- Setting Up Routing Tables: Routing tables direct network traffic between subnets and to external networks (e.g., the internet or on-premise data centers). These tables specify the destinations and the next hop for each traffic flow.

- Configuring Network Gateways: Network gateways, such as internet gateways and NAT gateways, enable communication between the VPC and the external internet or other networks. Internet gateways allow resources in public subnets to access the internet, while NAT gateways provide outbound internet access for resources in private subnets.

- Implementing DNS Resolution: Proper DNS configuration is essential for resolving domain names to IP addresses. Cloud providers offer managed DNS services (e.g., AWS Route 53, Azure DNS, Google Cloud DNS) that can be integrated with the VPC to provide reliable and scalable DNS resolution.

For example, consider a scenario where a company is migrating its web application to AWS. The company would create a VPC, define public and private subnets, configure an internet gateway for public access, and set up a NAT gateway for outbound internet access for resources in the private subnet. The company would also configure a routing table to direct traffic to the appropriate resources.
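The address planning step can be checked before any resources are created. The sketch below uses Python's standard `ipaddress` module to carve subnets out of a VPC range and to detect overlaps with an on-premise range. The function names and the on-premise CIDR used in the usage note are assumptions made for illustration.

```python
import ipaddress
from itertools import combinations, islice


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> list:
    """Return the first `count` subnets of size /new_prefix inside the VPC range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = list(islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError(f"{vpc_cidr} cannot hold {count} /{new_prefix} subnets")
    return subnets


def find_overlaps(cidrs: list[str]) -> list[tuple[str, str]]:
    """Return every pair of CIDR ranges that share at least one address."""
    networks = [ipaddress.ip_network(cidr) for cidr in cidrs]
    return [
        (str(a), str(b)) for a, b in combinations(networks, 2) if a.overlaps(b)
    ]
```

For instance, `plan_subnets("10.0.0.0/16", 24, 3)` yields three /24 subnets, and `find_overlaps(["10.0.0.0/16", "192.168.0.0/16"])` returns an empty list, meaning a hypothetical on-premise range of 192.168.0.0/16 could be routed to this VPC without address conflicts.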

Establishing Secure Connections (VPN, Direct Connect)

Securing the connection between on-premise resources and the cloud environment is paramount. Two primary methods for achieving this are VPN (Virtual Private Network) and Direct Connect. Both provide secure, private connectivity, but they differ in their implementation and performance characteristics.

A VPN connection establishes an encrypted tunnel over the public internet, allowing secure communication between on-premise and cloud resources. This is a cost-effective solution for initial connectivity or for workloads with moderate bandwidth requirements.

Setting up a VPN connection typically involves the following steps:

- Configuring a VPN Gateway: A VPN gateway must be configured within the cloud provider’s VPC. This gateway acts as the endpoint for the VPN tunnel.

- Configuring a Customer Gateway: A customer gateway is set up on the on-premise side, usually on a firewall or router. This gateway is responsible for encrypting and decrypting traffic.

- Establishing a VPN Tunnel: A VPN tunnel is established between the VPN gateway and the customer gateway. This tunnel uses encryption protocols (e.g., IPsec) to secure the data transmitted over the internet.

- Configuring Routing: Routing tables must be configured to direct traffic between the on-premise network and the cloud VPC through the VPN tunnel.
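The routing step can be reasoned about with a small model. Route tables generally pick the most specific matching route (longest-prefix match), so a route for the on-premise range sends traffic into the tunnel while everything else follows the default route. The route table contents and next-hop labels below are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical route table: destination CIDR -> next-hop label.
ROUTES: dict[str, str] = {
    "10.0.0.0/16": "local",
    "192.168.0.0/16": "vpn-gateway",
    "0.0.0.0/0": "internet-gateway",
}


def next_hop(destination: str, routes: dict[str, str]) -> Optional[str]:
    """Return the next hop of the most specific route that contains the address."""
    address = ipaddress.ip_address(destination)
    best_network = None
    best_target = None
    for cidr, target in routes.items():
        network = ipaddress.ip_network(cidr)
        if address not in network:
            continue
        if best_network is None or network.prefixlen > best_network.prefixlen:
            best_network, best_target = network, target
    return best_target
```

With this table, an address in the on-premise range such as 192.168.10.5 resolves to the VPN gateway, while an address that matches no route at all returns `None`, which corresponds to dropped traffic.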

Direct Connect (the AWS name for this kind of link; Azure ExpressRoute and Google Cloud Interconnect are the equivalents) provides a dedicated, private network connection between the on-premise data center and the cloud provider’s infrastructure. This eliminates the need for the public internet, resulting in lower latency, higher bandwidth, and more consistent performance. Direct Connect is typically used for workloads with high bandwidth requirements, strict latency requirements, or sensitive data.

Setting up Direct Connect typically involves these steps:

- Establishing a Physical Connection: A physical connection is established between the on-premise data center and the cloud provider’s network. This often involves cross-connecting with a cloud provider’s partner at a colocation facility.

- Configuring Virtual Interfaces: Virtual interfaces (VIFs) are configured within the cloud provider’s console to connect the Direct Connect connection to the VPC. These VIFs define the network configuration for the connection.

- Configuring Routing: Routing tables are configured to direct traffic between the on-premise network and the cloud VPC through the Direct Connect connection.

For example, a financial institution might use Direct Connect to ensure low-latency, secure access to its cloud-based applications and databases, especially if dealing with sensitive financial transactions.

Monitoring Network Performance and Troubleshooting Connectivity Issues

Continuous monitoring of network performance and proactive troubleshooting are critical for maintaining a stable and efficient cloud environment. Monitoring provides insights into network health, identifying potential bottlenecks and performance issues before they impact users.

Network performance monitoring involves collecting and analyzing various metrics, including:

- Latency: The time it takes for data packets to travel between two points. High latency can lead to slow application performance.

- Throughput: The amount of data transferred over a network connection in a given time. Low throughput can indicate network congestion.

- Packet Loss: The percentage of data packets that are lost during transmission. High packet loss can lead to unreliable communication.

- Error Rates: The frequency of errors in network traffic, such as corrupted packets or connection failures.

- Utilization: The percentage of network resources being used. High utilization can indicate network bottlenecks.
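To make these metrics concrete, the sketch below derives packet loss, median latency, and a nearest-rank 95th percentile from a list of probe results, plus throughput from a transfer measurement. It assumes latency samples in milliseconds with `None` marking a lost probe. The names `ProbeSummary`, `summarize_probes`, and `throughput_mbps` are hypothetical.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeSummary:
    sent: int
    received: int
    packet_loss_pct: float
    median_latency_ms: Optional[float]
    p95_latency_ms: Optional[float]


def summarize_probes(latencies_ms: list) -> ProbeSummary:
    """Summarize probe results; a value of None represents a lost probe."""
    sent = len(latencies_ms)
    replies = sorted(value for value in latencies_ms if value is not None)
    loss_pct = 100.0 * (sent - len(replies)) / sent if sent else 0.0
    if not replies:
        return ProbeSummary(sent, 0, loss_pct, None, None)
    # Nearest-rank percentile: the smallest sample with at least 95% at or below it.
    rank = max(1, math.ceil(0.95 * len(replies)))
    return ProbeSummary(
        sent, len(replies), loss_pct, statistics.median(replies), replies[rank - 1]
    )


def throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Convert a transfer measurement to megabits per second."""
    return bytes_transferred * 8 / seconds / 1_000_000
```

Tracking a high percentile alongside the median helps because a few slow outliers can hurt users even when typical latency looks healthy.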

Cloud providers offer various monitoring tools and services to track these metrics, such as:

- CloudWatch (AWS): Provides comprehensive monitoring and logging services for AWS resources.

- Azure Monitor (Azure): Offers a unified monitoring solution for Azure resources and applications.

- Cloud Monitoring (GCP): Provides monitoring, logging, and alerting capabilities for Google Cloud resources.

Troubleshooting connectivity issues requires a systematic approach:

- Verify Basic Connectivity: Use tools like ping and traceroute to check network reachability and identify potential network hops causing issues.

- Check Firewall Rules: Ensure that firewall rules on both the on-premise and cloud sides are correctly configured to allow necessary traffic.

- Review Routing Tables: Verify that routing tables are configured correctly to direct traffic to the appropriate destinations.

- Examine Network Logs: Analyze network logs for errors, warnings, and other relevant information. Cloud providers offer logging services to capture network traffic and events.

- Check VPN/Direct Connect Status: If using VPN or Direct Connect, verify the status of the connection, including its uptime, bandwidth usage, and error rates.

- Analyze Network Traffic: Use network analyzers (e.g., Wireshark) to capture and analyze network traffic to identify performance bottlenecks or other issues.
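Because ICMP is often blocked by firewalls, a TCP connection attempt to the actual service port can be a useful complement to ping when verifying basic connectivity. The following is a minimal sketch using only the standard library. The function names are hypothetical, and any hosts and ports passed in would be specific to the environment.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_endpoints(endpoints: list, timeout: float = 3.0) -> dict:
    """Map each 'host:port' label to whether it accepted a TCP connection."""
    return {
        f"{host}:{port}": tcp_reachable(host, port, timeout)
        for host, port in endpoints
    }
```

A failed check does not say where traffic is being dropped, so firewall rules and routing tables still need to be reviewed as described above.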

For example, if users report slow application performance, a network administrator might use CloudWatch (in AWS) to monitor network latency, throughput, and packet loss. If high latency is observed, the administrator could use traceroute to identify the network hops causing the delay and investigate the underlying cause.

Application Modernization

Application modernization is a critical component of a successful cloud migration strategy. It involves updating existing applications to leverage the benefits of cloud computing, such as scalability, cost optimization, and improved agility. This process often entails refactoring, rearchitecting, or re-platforming applications to align with cloud-native principles. Effective application modernization unlocks the full potential of the cloud, enabling organizations to innovate faster and respond more effectively to market demands.

Techniques for Modernizing Applications for the Cloud

Several techniques can be employed to modernize applications for the cloud, each with its own trade-offs and suitability depending on the application’s architecture, complexity, and business requirements. Understanding these techniques allows for informed decision-making during the migration process.

- Rehosting (Lift and Shift): This involves migrating an application to the cloud with minimal changes. The application’s code remains largely unchanged, and it’s simply moved to a cloud-based infrastructure. This is often the quickest and least expensive approach for initial migration, but it doesn’t fully leverage cloud-native features. For example, a company might move a virtual machine running a web application from an on-premises data center to an Infrastructure-as-a-Service (IaaS) offering like Amazon EC2 or Azure Virtual Machines.

- Replatforming: This technique involves making some modifications to the application to take advantage of cloud services. The core code remains largely the same, but the application might be adapted to use cloud-based databases or other managed services. For instance, an application might switch from an on-premises SQL Server database to Azure SQL Database or Amazon RDS.

- Refactoring: This involves restructuring and optimizing the existing code to improve its functionality and maintainability without changing its external behavior. This could involve breaking down a monolithic application into smaller, more manageable modules or components. Refactoring can improve code quality and enable easier adoption of cloud-native features.

- Re-architecting: This is a more significant undertaking that involves redesigning the application’s architecture to be cloud-native. This often involves breaking down a monolithic application into a set of microservices, leveraging cloud-native services like serverless functions, and adopting DevOps practices. An example would be converting a monolithic Java application into a set of microservices deployed on Kubernetes.

- Rebuilding: This involves rewriting the application from scratch using cloud-native technologies and architectures. This is the most radical approach, but it can provide the greatest benefits in terms of scalability, performance, and maintainability. This approach is usually reserved for applications that are significantly outdated or where the cost of modernization is prohibitive.

- Replacing: This technique involves replacing the existing application with a software-as-a-service (SaaS) solution. This can be a cost-effective way to move to the cloud, especially for applications that are not core to the business. An example would be switching from an on-premises CRM system to Salesforce or Microsoft Dynamics 365.

Serverless Computing vs. Containerization

Serverless computing and containerization are both cloud-native technologies that offer significant advantages in terms of application deployment and management. They address different aspects of application deployment, and their suitability depends on the specific application requirements.

| Feature | Serverless | Containerization | Comparison |
|---|---|---|---|
| Resource Management | Automatically managed by the cloud provider. Developers don’t need to provision or manage servers. | Developers manage the underlying infrastructure, including servers and operating systems. Containers run on a host machine or cluster. | Serverless abstracts away infrastructure management, simplifying deployment. Containerization provides more control over the environment. |
| Scalability | Highly scalable; automatically scales based on demand. | Scalable through orchestration tools like Kubernetes, but requires more manual configuration. | Serverless offers automatic scaling, while containerization requires more manual configuration and scaling strategies. |
| Cost | Pay-per-use model; only pay for the actual compute time. | Can be more cost-effective for long-running workloads; pay for the resources provisioned. | Serverless can be more cost-effective for infrequent or event-driven workloads. Containerization is often more cost-effective for sustained workloads. |
| Deployment | Easy and fast deployment; functions are deployed as individual units. | More complex deployment process, involving container image creation, orchestration, and management. | Serverless offers simpler deployment; containerization provides more flexibility in deployment strategies. |
| State Management | Stateless by default; state needs to be managed externally (e.g., databases, caches). | Can be stateful or stateless; state can be managed within the container or externally. | Serverless encourages stateless architectures. Containerization allows for more flexible state management. |
| Use Cases | Event-driven applications, APIs, webhooks, scheduled tasks, and microservices. | Microservices, web applications, batch processing, and applications requiring consistent environments. | Serverless is ideal for event-driven and API-centric applications. Containerization suits applications requiring consistent environments or more control over resources. |
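The cost row can be turned into a rough break-even estimate. The sketch below assumes a pay-per-use price made up of a per-GB-second compute charge plus a per-request fee, compared against a flat monthly cost for provisioned container capacity. All prices in the usage note are hypothetical placeholders, not quotes from any provider.

```python
def serverless_monthly_cost(
    requests: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate monthly pay-per-use cost from request volume and duration."""
    compute = requests * avg_duration_s * memory_gb * price_per_gb_second
    request_fee = requests / 1_000_000 * price_per_million_requests
    return compute + request_fee


def break_even_requests(
    container_monthly_cost: float,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Monthly request volume above which the flat container cost is cheaper."""
    per_request = (
        avg_duration_s * memory_gb * price_per_gb_second
        + price_per_million_requests / 1_000_000
    )
    return container_monthly_cost / per_request
```

With illustrative inputs of 0.5 s per request, 1 GB of memory, 0.00002 per GB-second, 0.20 per million requests, and a 102 per month container cost, the break-even lands at roughly ten million requests per month. Below that volume the pay-per-use model is cheaper in this simplified model.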

Strategy for Refactoring Legacy Applications for Optimal Cloud Performance

Refactoring legacy applications for the cloud requires a strategic approach to ensure optimal performance, scalability, and cost-effectiveness. A phased approach, incorporating iterative improvements and testing, is crucial for a successful transformation.

- Assessment and Planning: Begin by thoroughly assessing the legacy application. Identify the application’s architecture, dependencies, and performance bottlenecks. Determine the business goals for modernization and define key performance indicators (KPIs). Choose the appropriate cloud provider and select the modernization techniques.

- Prioritization: Prioritize the application components for refactoring based on their impact on performance, business value, and complexity. Start with the components that offer the greatest potential for improvement and are less complex.

- Modularization: Break down the monolithic application into smaller, independent modules or microservices. This improves maintainability, scalability, and allows for independent deployments.

- Code Optimization: Optimize the code for cloud-native environments. This includes identifying and resolving performance bottlenecks, improving code efficiency, and removing unnecessary dependencies.

- Database Optimization: Optimize the database for cloud environments. This may involve migrating to a cloud-native database service, optimizing database queries, and implementing caching strategies. Consider the use of managed database services like Amazon RDS, Azure SQL Database, or Google Cloud SQL.

- API Integration: Design and implement APIs to enable communication between different modules and services. APIs facilitate integration with other cloud services and enable greater flexibility.

- Testing and Validation: Implement thorough testing throughout the refactoring process. This includes unit testing, integration testing, and performance testing. Ensure the refactored application meets the defined KPIs and business goals. Employ automated testing frameworks to streamline the testing process.

- Deployment and Monitoring: Deploy the refactored application to the cloud environment. Implement robust monitoring and logging to track performance, identify issues, and optimize the application over time. Use cloud-native monitoring tools like Amazon CloudWatch, Azure Monitor, or Google Cloud Monitoring.

- Iterative Improvement: Continue to iterate and improve the application based on performance data and user feedback. Regularly review the application’s architecture and make adjustments as needed to optimize performance and scalability.

Cost Optimization

Effective cost optimization is a critical component of a successful cloud migration and ongoing cloud management strategy. It involves actively managing and controlling cloud spending to maximize the value derived from cloud resources while minimizing unnecessary expenses. A proactive approach to cost optimization ensures that cloud investments align with business objectives and budget constraints.

Strategies for Managing and Controlling Cloud Costs

Implementing strategies to effectively manage and control cloud costs is essential for maintaining financial efficiency. This involves a multifaceted approach that considers resource utilization, pricing models, and ongoing monitoring.

- Resource Right-Sizing: Regularly assess and adjust the size of cloud resources (e.g., virtual machines, storage) to match actual demand. Over-provisioning leads to unnecessary costs, while under-provisioning can impact performance. Tools and techniques for resource right-sizing include performance monitoring, load testing, and capacity planning. Consider using cloud provider-specific tools like AWS Compute Optimizer or Azure Advisor.

- Cost Allocation and Tagging: Implement a robust cost allocation strategy using tags to categorize and track cloud spending by department, project, or application. This provides detailed insights into where costs are incurred and facilitates chargeback or showback mechanisms. Proper tagging allows for accurate cost analysis and informed decision-making.

- Budgeting and Forecasting: Establish clear budgets and forecasts for cloud spending. Utilize cloud provider tools to set up budget alerts and monitor spending against pre-defined thresholds. Forecast future costs based on historical usage patterns and anticipated changes in demand.

- Automation and Scripting: Automate tasks such as resource provisioning, scaling, and de-provisioning to optimize resource utilization and reduce manual effort. Leverage Infrastructure as Code (IaC) tools to manage and deploy cloud resources consistently and efficiently. Automation minimizes human error and maximizes resource efficiency.

- Governance and Policy Enforcement: Establish and enforce cloud governance policies to control resource provisioning, usage, and cost. Define policies around acceptable resource types, instance sizes, and storage tiers. Use cloud provider services like AWS Organizations or Azure Policy to enforce these policies across the entire cloud environment.
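Tag-based cost allocation can be sketched as a simple aggregation over billing line items. The record layout below (a `cost` value and a `tags` mapping) is an assumption made for illustration, since real billing exports differ by provider. The function names are hypothetical as well.

```python
from collections import defaultdict


def allocate_costs(line_items: list, tag_key: str) -> dict:
    """Sum costs per value of tag_key; items lacking the tag go to 'untagged'."""
    totals: dict = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def untagged_share(line_items: list, tag_key: str) -> float:
    """Fraction of total spend that cannot be attributed through tag_key."""
    totals = allocate_costs(line_items, tag_key)
    grand_total = sum(totals.values())
    return totals.get("untagged", 0.0) / grand_total if grand_total else 0.0
```

A rising untagged share is a useful governance signal, because it shows how much spending falls outside showback or chargeback reports.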

Methods for Implementing Cost-Saving Measures

Several methods can be employed to reduce cloud spending. These strategies often leverage cloud provider pricing models and resource management techniques to optimize costs without compromising performance.

- Reserved Instances (RIs) and Committed Use Discounts (CUDs): Take advantage of reserved instances or committed use discounts offered by cloud providers. These discounts provide significant savings compared to on-demand pricing, but require a commitment to use resources for a specific period (typically one or three years). Consider the stability of workload requirements before committing to long-term reservations. For example, if an application runs on the same instance type around the clock, reserving that capacity will reduce costs significantly.

- Spot Instances and Preemptible VMs: Utilize spot instances (AWS) or preemptible VMs (Google Cloud) for fault-tolerant and non-critical workloads. These instances offer significant discounts compared to on-demand pricing, but the cloud provider can reclaim them at short notice when it needs the capacity back, so workloads must tolerate interruption. Suitable workloads include batch processing, data analysis, and development environments.

- Storage Tiering and Lifecycle Management: Implement storage tiering strategies to move data to less expensive storage tiers based on access frequency. For example, frequently accessed data can reside in a high-performance storage tier, while infrequently accessed data can be moved to a cheaper archive tier. Automate data lifecycle management using cloud provider tools to automatically transition data between storage tiers.

- Serverless Computing: Leverage serverless computing services (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) to pay only for the actual compute time used. Serverless architectures can significantly reduce costs for applications with variable or unpredictable workloads. This eliminates the need to manage and pay for underlying infrastructure.

- Containerization and Orchestration: Containerize applications and use container orchestration platforms (e.g., Kubernetes) to improve resource utilization. Containers allow for efficient resource packing, enabling more applications to run on the same infrastructure. Orchestration platforms automate container deployment, scaling, and management.
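Whether a reservation pays off depends on how many hours the resource actually runs. The sketch below compares on-demand and reserved costs and computes the break-even utilization. It assumes the reserved price is expressed as an effective hourly rate that is paid whether or not the instance runs. The rates in the usage note are hypothetical.

```python
HOURS_PER_YEAR = 8760


def on_demand_cost(hourly_rate: float, utilization: float, years: int = 1) -> float:
    """Cost when paying only for the fraction of hours actually used."""
    return hourly_rate * HOURS_PER_YEAR * utilization * years


def reserved_cost(effective_hourly_rate: float, years: int = 1) -> float:
    """Cost of a commitment, which is paid for every hour regardless of usage."""
    return effective_hourly_rate * HOURS_PER_YEAR * years


def break_even_utilization(on_demand_hourly: float, reserved_hourly: float) -> float:
    """Utilization above which the reservation is cheaper than on-demand."""
    return reserved_hourly / on_demand_hourly
```

With illustrative rates of 0.10 per hour on demand and 0.06 per hour reserved, the break-even utilization is 60 percent. A workload running around the clock clears that easily, while one that runs only during business hours would not.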

Guide to Monitor and Optimize Cloud Spending Over Time

Continuous monitoring and optimization are essential for maintaining cost efficiency in the cloud. This requires a proactive and iterative approach that involves ongoing analysis, adjustment, and refinement.

- Establish Baseline and Set Key Performance Indicators (KPIs): Define a baseline for cloud spending and establish KPIs to track cost performance. KPIs might include cost per application, cost per user, or cost per transaction. Regularly measure and compare actual spending against the baseline and KPIs.

- Utilize Cloud Provider Cost Management Tools: Leverage cloud provider-specific cost management tools (e.g., AWS Cost Explorer, Azure Cost Management + Billing, Google Cloud Cost Management) to monitor spending, analyze trends, and identify cost-saving opportunities. These tools provide detailed cost breakdowns, reporting capabilities, and recommendations for optimization.

- Regularly Review and Analyze Cost Reports: Conduct regular reviews of cost reports to identify areas where costs can be reduced. Analyze spending patterns, identify anomalies, and investigate potential cost drivers. Use tagging to drill down into specific projects or applications.

- Implement Cost Optimization Recommendations: Act on the recommendations provided by cloud provider tools and cost optimization experts. Implement changes such as right-sizing resources, leveraging reserved instances, or optimizing storage configurations. Measure the impact of these changes and iterate as needed.

- Automate Cost Monitoring and Alerts: Automate cost monitoring and set up alerts to be notified of unusual spending patterns or budget overruns. Configure alerts based on predefined thresholds or deviations from historical trends. This allows for proactive intervention and prevents unexpected cost increases.

- Conduct Regular Cost Optimization Audits: Perform regular cost optimization audits to ensure that cloud resources are being used efficiently and that cost-saving measures are effective. These audits can be conducted internally or by external consultants. Audit findings should be used to inform future cost optimization efforts.
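The alerting step above can be approximated with two checks: which budget thresholds have been crossed, and whether today's spend deviates sharply from recent history. This is a sketch under stated assumptions: the threshold values, the z-score cutoff of 3, and the function names are illustrative choices, and provider budget tools offer equivalent features natively.

```python
import statistics


def budget_alerts(
    month_to_date: float, budget: float, thresholds: tuple = (0.5, 0.8, 1.0)
) -> list:
    """Return the budget thresholds (as fractions) that spending has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = month_to_date / budget
    return [threshold for threshold in thresholds if used >= threshold]


def is_anomalous(history: list, today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it lies far from the mean of recent daily spend."""
    if len(history) < 2:
        return False  # Not enough data to estimate normal variation.
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean
    return abs(today - mean) / stdev > z_threshold
```

A z-score test is a deliberately simple choice. Spending with strong weekly or monthly seasonality would need a baseline that accounts for those cycles.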

Testing and Validation

Rigorous testing and validation are critical components of a successful on-premise to cloud migration. They ensure that the migrated applications and data function correctly, meet performance expectations, and maintain security posture throughout the transition. A comprehensive testing strategy minimizes risks, reduces downtime, and provides confidence in the new cloud environment.

Importance of Testing and Validation

Testing and validation are essential throughout the migration process for several reasons. They verify the integrity of data during and after migration. They confirm that application functionality is preserved or enhanced in the cloud environment. They assess the performance of applications under various load conditions, ensuring scalability and responsiveness. Furthermore, testing identifies and addresses security vulnerabilities introduced during the migration, safeguarding sensitive data and preventing unauthorized access.

By validating each stage, organizations can minimize the risk of disruptions, data loss, and performance degradation, leading to a smoother and more successful cloud transition.

Testing Plan for Various Stages of Migration

A well-defined testing plan should encompass pre-migration, during-migration, and post-migration phases. Each phase has specific testing objectives and activities designed to ensure a seamless transition.

- Pre-Migration Testing: This phase focuses on assessing the existing on-premise environment and preparing for the migration.

- Environment Assessment: Evaluate the existing infrastructure, including servers, databases, applications, and network configurations. This involves documenting the current state to establish a baseline for comparison.

- Compatibility Testing: Verify the compatibility of applications and systems with the target cloud environment. This includes checking operating system compatibility, software dependencies, and potential conflicts.

- Performance Baseline: Establish performance benchmarks for key applications and services in the on-premise environment. This baseline serves as a reference point for comparing performance after migration.

- Security Audits: Conduct security assessments to identify vulnerabilities and potential risks in the existing environment. This helps to prepare for securing the cloud environment.

- During-Migration Testing: Testing during the migration phase validates the migration process itself and ensures data integrity.

- Data Validation: Verify the accuracy and completeness of data migrated to the cloud. This includes checking for data loss, corruption, or inconsistencies. Techniques include data comparison, checksum verification, and sample data validation.

- Migration Process Testing: Evaluate the migration process, including the tools and scripts used, to ensure it functions as expected. This may involve testing different migration approaches, such as lift-and-shift, re-platforming, or refactoring.

- Cutover Testing: Simulate the cutover from the on-premise environment to the cloud environment to ensure a smooth transition. This involves testing the failover and rollback mechanisms.

- Post-Migration Testing: Post-migration testing validates the functionality, performance, and security of the migrated applications in the cloud environment.

- Functional Testing: Verify that all application features and functionalities work as expected in the cloud environment. This includes testing user interfaces, workflows, and integrations.

- Performance Testing: Assess the performance of applications under various load conditions to ensure scalability and responsiveness. This includes load testing, stress testing, and performance monitoring.

- Security Testing: Conduct security assessments to identify vulnerabilities and potential risks in the cloud environment. This includes penetration testing, vulnerability scanning, and security audits.

- User Acceptance Testing (UAT): Involve end-users in testing the migrated applications to ensure they meet their requirements and expectations. This includes providing training and support to users.

- Disaster Recovery Testing: Test the disaster recovery plan to ensure that the applications and data can be recovered in the event of an outage or disaster.
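The checksum verification mentioned under Data Validation can be illustrated with a short Python sketch. It assumes both the source data and the migrated copy are reachable as file system paths (for example, a mounted cloud file share); for object storage, the digests would instead be compared against checksums reported by the provider. The helper names `sha256_of` and `compare_trees` are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_trees(source_root, target_root):
    """Compare every file under source_root with its counterpart in target_root.

    Returns two lists of relative paths: files missing from the target, and
    files whose SHA-256 digests differ.
    """
    source_root, target_root = Path(source_root), Path(target_root)
    missing, mismatched = [], []
    for src in sorted(p for p in source_root.rglob("*") if p.is_file()):
        rel = src.relative_to(source_root)
        dst = target_root / rel
        if not dst.is_file():
            missing.append(rel.as_posix())
        elif sha256_of(src) != sha256_of(dst):
            mismatched.append(rel.as_posix())
    return missing, mismatched
```

An empty result from both lists indicates that every source file arrived intact. The sketch does not detect extra files that exist only on the target, which a fuller check would also report.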

Testing Tools and Techniques for Ensuring Application Functionality

A variety of testing tools and techniques are available to ensure application functionality during cloud migration. These tools and techniques address different aspects of testing, from functional and performance to security.

- Functional Testing Tools: These tools are used to verify that applications function as expected.

- Selenium: An open-source automation testing framework used for web application testing. Selenium allows testers to write automated tests that simulate user interactions with web applications.

- JUnit: A unit testing framework for Java applications. JUnit enables developers to write and run unit tests to verify the functionality of individual code units.

- TestRail: A test management tool used to organize, track, and manage testing activities. TestRail helps testers to create test cases, execute tests, and track defects.

- Performance Testing Tools: These tools are used to assess the performance of applications under various load conditions.

- LoadRunner: A performance testing tool used to simulate a large number of users accessing an application simultaneously. LoadRunner helps testers to identify performance bottlenecks and optimize application performance.

- JMeter: An open-source performance testing tool used to test the performance of web applications, databases, and other services. JMeter allows testers to simulate user load and measure response times.

- BlazeMeter: A cloud-based performance testing platform used to test the performance of web applications and APIs. BlazeMeter provides features for load testing, stress testing, and performance monitoring.

- Security Testing Tools: These tools are used to identify vulnerabilities and potential risks in applications.

- Nessus: A vulnerability scanner used to identify security vulnerabilities in networks, systems, and applications. Nessus helps testers to identify and address security risks.

- OWASP ZAP: An open-source web application security scanner. ZAP works as an intercepting proxy and supports both passive and active scanning, which makes it suitable for automated checks against migrated web applications.

- Burp Suite: A web application security testing tool used to identify vulnerabilities in web applications. Burp Suite provides features for intercepting and modifying HTTP traffic.

- Testing Techniques:

- Unit Testing: Testing individual components or modules of an application to verify their functionality.

- Integration Testing: Testing the interaction between different components or modules of an application.

- System Testing: Testing the entire application to verify its functionality and performance.

- User Acceptance Testing (UAT): Testing the application by end-users to ensure it meets their requirements.
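Whichever load tool produces the measurements, the post-migration numbers are only meaningful when compared with the on-premise baseline. The following Python sketch shows one way to make that comparison using a nearest-rank percentile. The function names, the choice of the 95th percentile, and the 10% tolerance are assumptions for illustration, not settings taken from any of the tools above.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def regression_report(baseline_ms, cloud_ms, pct=95, tolerance=0.10):
    """Compare a latency percentile before and after migration.

    `tolerance` is the allowed relative increase (0.10 means 10%).
    """
    base = percentile(baseline_ms, pct)
    cloud = percentile(cloud_ms, pct)
    if base <= 0:
        raise ValueError("baseline percentile must be positive")
    change = (cloud - base) / base
    return {
        "baseline": base,
        "cloud": cloud,
        "change": change,
        "regressed": change > tolerance,
    }


if __name__ == "__main__":
    # Hypothetical response times in milliseconds.
    before = [100] * 19 + [200]
    after = [100] * 18 + [150, 300]
    print(regression_report(before, after))
```

Comparing a high percentile rather than the average keeps slow outlier requests visible, since these are often what users notice first after a migration.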

Training and Skill Development

The successful migration to the cloud hinges not only on technological prowess but also on the preparedness of the workforce. Cloud adoption introduces new paradigms, tools, and responsibilities, necessitating a strategic approach to training and skill development. Failing to equip the team with the necessary skills can lead to project delays, increased costs, security vulnerabilities, and ultimately, a failed cloud migration.

Investing in training is therefore an investment in the long-term success and operational efficiency of the cloud environment.

Importance of Cloud Skills

Moving to cloud computing requires a fundamental change in how IT operations are managed. Traditional IT skills, while still valuable, are not sufficient on their own to navigate the complexities of cloud environments. Cloud-specific skills are crucial for effectively managing resources, optimizing costs, ensuring security, and enabling innovation. These skills span a wide range of disciplines, from architecture and development to operations and security.

Recommendations for Building a Cloud-Skilled Team

Building a cloud-skilled team requires a multi-faceted approach that combines training, mentorship, and hands-on experience. This includes identifying current skill levels, setting realistic goals, and implementing a training plan that aligns with the organization’s cloud strategy.

- Assess Current Skills and Needs: Conduct a thorough skills assessment to identify existing strengths and weaknesses within the team. This should include technical skills (e.g., scripting, networking, security) and soft skills (e.g., communication, problem-solving, collaboration). Define the roles and responsibilities necessary for cloud operations and determine the required skill sets for each role.

- Develop a Comprehensive Training Plan: Create a customized training plan that addresses the identified skills gaps. This plan should incorporate a mix of training methods, including:

- Formal Training Courses: Use vendor-specific training that prepares team members for industry-recognized certifications (e.g., AWS Certified Solutions Architect, Microsoft Azure certifications, Google Cloud certifications).

- Online Learning Platforms: Leverage online learning platforms like Coursera, Udemy, and A Cloud Guru to provide access to a wide range of cloud-related courses and tutorials.

- Hands-on Labs and Workshops: Provide opportunities for hands-on practice through labs and workshops, allowing team members to apply their knowledge in a practical setting.

- Mentorship Programs: Pair experienced cloud professionals with less experienced team members to provide guidance and support.

- Internal Knowledge Sharing: Encourage knowledge sharing through internal workshops, presentations, and documentation.

- Foster a Culture of Continuous Learning: Encourage a culture of continuous learning by providing resources and support for ongoing skill development. This includes:

- Allocating Time for Learning: Dedicate time for team members to pursue training and certifications.

- Providing Access to Learning Resources: Subscribe to relevant industry publications, blogs, and online communities.

- Supporting Professional Development: Encourage participation in industry conferences and events.

- Invest in Cloud Experts: Consider hiring experienced cloud professionals to provide guidance, mentorship, and expertise. These experts can help accelerate the cloud adoption process and ensure that best practices are followed.

- Establish a Cloud Center of Excellence (CCoE): Create a CCoE to provide a centralized hub for cloud knowledge, best practices, and support. The CCoE can be responsible for developing and delivering training, managing cloud resources, and ensuring compliance with security and governance policies.

Identifying and Addressing Skills Gaps

Identifying and addressing skills gaps is an ongoing process that requires continuous monitoring and evaluation. Regular assessments and feedback mechanisms are essential for ensuring that the team’s skills remain aligned with the evolving demands of the cloud environment.

- Conduct Regular Skills Assessments: Perform regular skills assessments to identify emerging skills gaps and track the progress of training initiatives. These assessments can be conducted through a variety of methods, including:

- Skills Inventories: Create a skills inventory to document the existing skills and expertise of each team member.

- Performance Reviews: Incorporate cloud-related skills into performance reviews to evaluate individual and team performance.

- 360-Degree Feedback: Collect input on cloud-related skills from peers, managers, and direct reports.

- Technical Assessments: Administer technical assessments to evaluate the team’s proficiency in specific cloud technologies and concepts.

- Prioritize Skills Gaps: Prioritize the identified skills gaps based on their impact on the organization’s cloud strategy. Focus on addressing the most critical skills gaps first.

- Implement Targeted Training Programs: Develop and implement targeted training programs to address the identified skills gaps. These programs should be tailored to the specific needs of the team and should incorporate a mix of training methods.

- Track Training Effectiveness: Track the effectiveness of training programs by measuring the impact on individual and team performance. Use metrics such as:

- Certification Rates: Track the number of team members who obtain relevant cloud certifications.

- Project Success Rates: Measure the success rates of cloud-related projects.

- Cost Optimization: Compare cloud spending before and after training to assess whether improved cloud skills translate into measurable savings.

- Security Incident Reduction: Track the number of security incidents related to cloud environments.

- Provide Ongoing Support and Resources: Provide ongoing support and resources to help team members apply their newly acquired skills. This includes access to documentation, online forums, and expert support.

Epilogue

In conclusion, a successful cloud migration is not a one-size-fits-all endeavor but a strategically planned and executed project. By carefully assessing existing infrastructure, selecting the appropriate cloud provider, employing the right migration strategies, and focusing on security and cost optimization, organizations can harness the full potential of the cloud. Continuous monitoring, adaptation, and skill development are crucial for long-term success in this dynamic environment.

Common Queries

What are the primary benefits of migrating to the cloud?

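Cloud migration can offer elastic scalability, pay-as-you-go cost efficiency, improved business agility, and access to the latest technologies. Security can also improve, provided the cloud environment is configured and managed correctly.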

What are the biggest challenges of cloud migration?

Challenges include data security, managing costs, ensuring application compatibility, and the complexity of the migration process itself.

How can I estimate the cost of a cloud migration?

Cost estimation involves analyzing current IT spending, assessing cloud provider pricing models, and considering migration costs, including tools and labor.
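As a rough illustration of that arithmetic, the Python sketch below compares cumulative on-premise and cloud spending and derives a break-even point. The function name and every figure in the example are placeholders, not benchmarks; real inputs would come from current IT spending records and the chosen provider's pricing.

```python
import math


def estimate_migration(onprem_monthly, cloud_monthly, one_time_migration, months=36):
    """Compare total cost over a planning horizon.

    one_time_migration covers tools and labor for the move itself.
    break_even_month is None when the cloud run rate is not lower.
    """
    monthly_saving = onprem_monthly - cloud_monthly
    break_even_month = None
    if monthly_saving > 0:
        break_even_month = math.ceil(one_time_migration / monthly_saving)
    onprem_total = onprem_monthly * months
    cloud_total = one_time_migration + cloud_monthly * months
    return {
        "onprem_total": onprem_total,
        "cloud_total": cloud_total,
        "net_saving": onprem_total - cloud_total,
        "break_even_month": break_even_month,
    }


if __name__ == "__main__":
    # Placeholder figures for illustration only.
    print(estimate_migration(50_000, 38_000, 120_000))
```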

What is the difference between Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS)?

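IaaS provides virtualized compute, storage, and networking that the customer configures and manages. PaaS provides a managed platform for developing and deploying applications without maintaining the underlying servers. SaaS offers ready-to-use software applications delivered over the internet.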

How do I ensure data security during the migration process?

Data security involves encrypting data in transit and at rest, implementing access controls, using secure transfer protocols, and adhering to applicable compliance standards.