Embarking on a journey to achieve uninterrupted service availability? Blue-green deployments offer a sophisticated yet manageable approach to updating applications without causing downtime. This method involves maintaining two identical environments: one live (blue) serving production traffic, and the other (green) acting as a staging ground for new releases. This guide covers the planning, setup, and execution needed to move traffic between the two environments seamlessly.

This document will cover everything from the initial concept and infrastructure setup to advanced topics like database migrations and automation. You’ll discover practical strategies for testing, monitoring, and rolling back deployments, ensuring that your applications remain robust and resilient. Whether you’re a seasoned DevOps engineer or just starting to explore deployment strategies, this guide provides the knowledge and tools to implement blue-green deployments effectively.

Introduction to Blue-Green Deployments

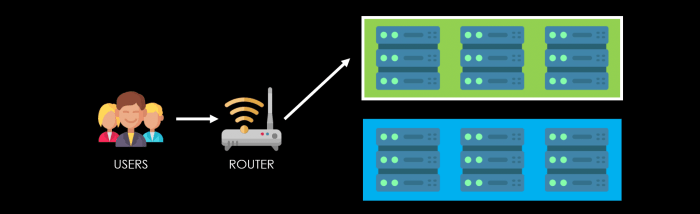

Blue-green deployments are a powerful strategy for deploying software updates with minimal disruption to users. They provide a robust method for achieving zero-downtime deployments, ensuring a seamless user experience during the transition. This approach involves maintaining two identical environments: the “blue” environment, which is the live, production environment serving current traffic, and the “green” environment, which is a staging environment ready for the new software version.

Core Concept of Blue-Green Deployments

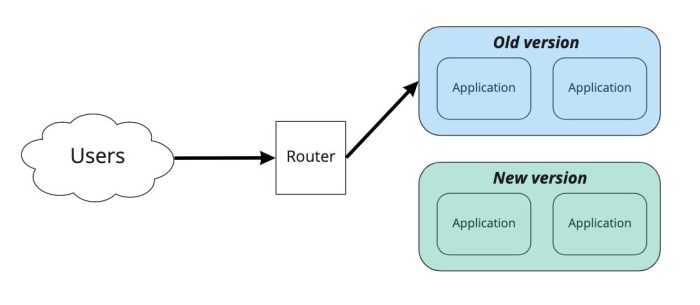

The fundamental principle of blue-green deployments is to have two identical environments, only one of which is live at any given time. When a new version of the application is ready, it’s deployed to the green environment. Once testing and validation are complete, traffic is switched from the blue environment to the green environment. This switch is typically managed by a load balancer or similar traffic management system.

If any issues arise with the new version in the green environment, traffic can be quickly switched back to the blue environment, minimizing the impact on users.
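Conceptually, the switch is a single pointer that the traffic layer consults on every request. The Python sketch below models that idea; the `Router` class, the environment names, and the addresses are hypothetical stand-ins for what a load balancer or service mesh manages in a real deployment.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from threading import Lock


@dataclass
class Router:
    """Toy model of a blue-green traffic switch (hypothetical; not a real load balancer API)."""
    environments: dict[str, str]   # environment name -> backend address
    live: str = "blue"             # environment currently serving production traffic
    previous: str | None = None    # last live environment, kept as the rollback target
    _lock: Lock = field(default_factory=Lock, repr=False, compare=False)

    def backend(self) -> str:
        # Every request goes to whichever environment is live at that moment.
        return self.environments[self.live]

    def switch_to(self, name: str) -> None:
        # Cut all traffic over in one step; the old environment keeps running.
        if name not in self.environments:
            raise KeyError(f"unknown environment: {name}")
        with self._lock:
            self.previous, self.live = self.live, name

    def rollback(self) -> None:
        # Rolling back is the same operation in reverse: point at the previous environment.
        with self._lock:
            if self.previous is None:
                raise RuntimeError("no previous environment to roll back to")
            self.live, self.previous = self.previous, self.live


router = Router({"blue": "10.0.1.10:8080", "green": "10.0.2.10:8080"})
router.switch_to("green")  # new release goes live
router.rollback()          # problem detected: traffic returns to blue
```

Because the blue environment is never modified during the switch, a rollback in this model needs no redeploy; it only moves the pointer back.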

Comparison: Blue-Green Deployments vs. Traditional Deployments

Traditional deployment methods often involve downtime while the application is updated. Blue-green deployments offer a significant advantage by eliminating this downtime. The following points highlight the key differences:

- Downtime: Traditional deployments typically involve downtime for updates, ranging from minutes to hours, depending on the complexity of the update. Blue-green deployments aim for zero downtime by switching traffic between environments.

- Rollback Strategy: Traditional methods may require rolling back to a previous version if an update fails, which can be a complex and time-consuming process. In blue-green deployments, rollback is a simple matter of switching traffic back to the previous, known-good environment (the blue environment).

- Risk Mitigation: Traditional deployments involve higher risk because the update is performed directly on the live environment. Blue-green deployments mitigate risk by testing the new version in a staging environment before exposing it to users.

- Testing: Traditional deployments may have limited testing capabilities due to the impact on the live environment. Blue-green deployments allow for comprehensive testing of the new version in the green environment before the switch.

- Complexity: Traditional deployments can be simpler to implement for small applications. However, as applications grow in complexity, the benefits of blue-green deployments in terms of reduced risk and downtime become more significant. Blue-green deployments are initially more complex to set up, but the benefits often outweigh the costs.

Benefits of Blue-Green Deployments for Zero Downtime

Blue-green deployments provide several key benefits that contribute to achieving zero downtime:

- Reduced Downtime: The primary benefit is the elimination of downtime during deployments. Users experience a seamless transition to the new version of the application.

- Faster Rollbacks: If an issue is detected in the green environment, the rollback process is quick and easy, minimizing the impact on users. Traffic is simply redirected back to the blue environment.

- Improved Risk Management: Deploying to a staging environment (green) allows for thorough testing and validation before exposing the new version to production traffic. This reduces the risk of introducing bugs or performance issues into the live environment.

- Increased Deployment Frequency: With the reduced risk and downtime, teams can deploy updates more frequently, leading to faster feature releases and improved responsiveness to user feedback.

- Enhanced User Experience: Zero downtime ensures a consistently available application, leading to a better user experience and increased user satisfaction.

Planning and Preparation

Blue-green deployments, while offering significant advantages in terms of zero-downtime releases, require meticulous planning and preparation. This phase is crucial for ensuring a smooth transition and minimizing the risk of issues during the deployment process. Thorough preparation encompasses various aspects, from setting up environments to comprehensive testing, all aimed at guaranteeing a successful rollout.

Pre-Deployment Checklist

Before initiating a blue-green deployment, a detailed checklist is essential. This checklist acts as a roadmap, ensuring all necessary steps are completed systematically and preventing potential oversights. Following this checklist helps to maintain consistency and reduce the chances of errors.

- Database Schema Updates: Plan and execute database schema changes. This often involves creating new tables, modifying existing ones, or adding indexes. Consider using database migration tools (e.g., Flyway, Liquibase) to manage schema changes in a controlled and versioned manner. Ensure compatibility between the old and new schemas during the transition period, allowing both green and blue environments to function correctly. This often involves techniques like “expand-contract” migrations where new columns are added first and then old ones are removed, or the use of feature flags to control access to new features.

- Environment Setup: Configure the green environment to mirror the blue environment. This includes setting up servers, configuring network settings, and deploying application code. Use infrastructure-as-code tools (e.g., Terraform, Ansible) to automate environment provisioning and ensure consistency. Verify that all dependencies, including libraries, frameworks, and configurations, are correctly installed and configured in the green environment.

- Code Deployment: Deploy the new version of the application code to the green environment. Ensure that the deployment process is automated and repeatable. Use a CI/CD pipeline to build, test, and deploy the code to the green environment. Verify that the code is correctly deployed and accessible in the green environment.

- Configuration Synchronization: Synchronize configuration settings between the blue and green environments. This includes database connection strings, API keys, and other environment-specific configurations. Use configuration management tools (e.g., Consul, etcd) to manage and synchronize configurations across environments. Ensure that sensitive information, such as passwords and API keys, is securely stored and managed.

- Data Migration (if applicable): If the application requires data migration, plan and execute the migration process. This may involve migrating data from the blue environment to the green environment or updating data in the green environment. Use data migration tools (e.g., ETL tools, custom scripts) to migrate data efficiently and accurately. Verify that the data migration process is successful and that the data in the green environment is consistent with the data in the blue environment.

- Health Checks and Monitoring Setup: Implement comprehensive health checks and monitoring for both the blue and green environments. Configure monitoring tools (e.g., Prometheus, Grafana) to monitor key metrics, such as CPU usage, memory usage, and response times. Set up alerts to notify you of any issues in either environment. This proactive monitoring allows for quick identification and resolution of any problems that may arise.

- Rollback Plan: Develop a detailed rollback plan in case the green deployment fails. This plan should outline the steps to revert to the blue environment quickly and safely. Test the rollback plan before the actual deployment to ensure it works as expected. Having a robust rollback plan minimizes downtime and the impact of deployment failures.
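One way to keep the checklist from being skipped under time pressure is to encode it as a gate in the deployment pipeline that blocks the traffic switch until every item passes. A minimal Python sketch, assuming each item can be expressed as a callable that returns `True` when satisfied; the item names and lambdas are placeholders for real checks such as a migration-status query or an HTTP health probe.

```python
from __future__ import annotations

from typing import Callable

Check = Callable[[], bool]


def run_checklist(checks: dict[str, Check]) -> list[str]:
    """Run every checklist item and return the names of the ones that did not pass."""
    failures = []
    for name, check in checks.items():
        try:
            ok = bool(check())
        except Exception:
            ok = False  # a check that crashes counts as failed, never as passed
        if not ok:
            failures.append(name)
    return failures


# Placeholder checks (hypothetical); replace the lambdas with real verifications.
checks = {
    "schema migration applied": lambda: True,
    "green health checks passing": lambda: True,
    "rollback plan rehearsed": lambda: False,
}
failures = run_checklist(checks)
if failures:
    print("Traffic switch blocked; failing items:", failures)
```

Running every item instead of stopping at the first failure gives the team the full list of blockers in one pass.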

Infrastructure Considerations

Choosing the right infrastructure is paramount for successful blue-green deployments. The selected infrastructure must support the requirements of the application and the specific deployment strategy. Several factors should be considered when making this selection.

- Cloud vs. On-Premise: Cloud environments (e.g., AWS, Azure, Google Cloud) often provide built-in features and tools that simplify blue-green deployments, such as load balancers, autoscaling, and container orchestration. On-premise environments may require more manual configuration and management. Consider the cost, scalability, and manageability of each option.

- Load Balancing: A robust load balancer is essential for routing traffic between the green and blue environments. The load balancer should support features like health checks and session affinity. Choose a load balancer that can handle the expected traffic volume and provides sufficient performance. Ensure the load balancer is configured correctly to direct traffic to the active environment.

- Containerization: Containerization technologies (e.g., Docker, Kubernetes) can simplify the deployment and management of applications in blue-green environments. Containers provide a consistent and portable environment for running applications. Container orchestration platforms, such as Kubernetes, can automate the deployment, scaling, and management of containerized applications.

- Database Considerations: The database infrastructure must support the deployment strategy. Consider using database replication or clustering to ensure high availability and data consistency. Plan for database schema changes and data migration. Ensure the database can handle the increased load during the transition period.

- Autoscaling: Implement autoscaling to automatically scale the application resources based on demand. Autoscaling ensures that the application can handle traffic spikes and maintain performance. Configure autoscaling rules to scale the application resources in both the green and blue environments.
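Autoscaling rules are often proportional. Kubernetes' Horizontal Pod Autoscaler, for instance, documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces that rule so it can be reasoned about for either environment; the replica bounds are assumptions, and the 10% tolerance mirrors the HPA's default, which your cluster may configure differently.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 2, max_replicas: int = 20,  # bounds are assumptions
                     tolerance: float = 0.1) -> int:
    """Proportional scaling: grow or shrink the replica count by metric/target."""
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current                      # close enough to target: avoid flapping
    else:
        desired = math.ceil(current * ratio)   # round up so capacity is never under-provisioned
    return max(min_replicas, min(max_replicas, desired))


# Green environment running 4 replicas at 90% average CPU against a 60% target.
print(desired_replicas(current=4, metric=90, target=60))  # 6
```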

Thorough Testing Importance

Comprehensive testing is non-negotiable in blue-green deployments. The goal is to ensure the new version of the application functions correctly, performs well, and meets user expectations before switching live traffic. This involves multiple levels of testing, each focusing on different aspects of the application.

- Functional Testing: Verify that all application features and functionalities work as expected in the green environment. Conduct end-to-end testing, integration testing, and unit testing. Automate functional tests to ensure consistent and repeatable testing. Functional testing identifies bugs and ensures that the application meets its functional requirements.

- Performance Testing: Assess the application’s performance under various load conditions. Conduct load testing, stress testing, and soak testing to evaluate the application’s scalability, responsiveness, and stability. Performance testing identifies performance bottlenecks and ensures that the application can handle the expected traffic volume.

- User Acceptance Testing (UAT): Involve real users in testing the new version of the application. Provide users with access to the green environment and gather their feedback. UAT identifies usability issues and ensures that the application meets user needs. Address any feedback received from UAT before switching live traffic.

- Security Testing: Conduct security testing to identify and address potential vulnerabilities. Perform penetration testing, vulnerability scanning, and security audits. Security testing ensures that the application is secure and protects user data.

- Testing in Production (TIP), or canary testing: A small subset of live traffic is directed to the green environment for testing. This allows for real-world testing with actual user data and traffic patterns. Monitor the green environment closely during TIP to identify any issues before rolling out to all users.

- Example: Consider a large e-commerce platform. Before deploying a new checkout process using blue-green, functional testing would involve verifying that users can add items to their cart, proceed to checkout, enter payment information, and successfully complete the purchase. Performance testing would simulate thousands of concurrent users to ensure the checkout process remains responsive during peak traffic. UAT would involve a group of real users testing the new checkout process and providing feedback on usability and any issues encountered. Security testing would include penetration testing to identify any vulnerabilities in the payment processing system. Finally, a canary test would involve routing a small percentage of live traffic to the new checkout process to monitor its performance and identify any unforeseen issues before the full rollout.
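Performance testing usually ends in a pass/fail decision on latency percentiles rather than averages. A minimal Python sketch of such a gate, assuming the load-testing tool exports per-request latencies in milliseconds; the nearest-rank percentile method and the 300 ms budget are illustrative choices, not figures from this guide.

```python
from __future__ import annotations

import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample that is >= pct percent of all samples."""
    if not samples:
        raise ValueError("no latency samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def passes_latency_budget(latencies_ms: list[float], budget_ms: float = 300.0,
                          pct: float = 95.0) -> bool:
    """Gate for the green environment: the pct-th percentile must stay within budget.

    The 300 ms default is an assumed budget for illustration only.
    """
    return percentile(latencies_ms, pct) <= budget_ms
```

With 20 samples, the 95th percentile is the 19th-smallest value, so a single slow outlier does not fail the gate but two do.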

Infrastructure Setup

This section focuses on establishing the blue and green environments, the core of blue-green deployments. Setting up these environments requires careful planning and execution to ensure they are identical, allowing for seamless switching between them. This involves configuring servers, deploying applications, and managing the inevitable configuration differences. We will explore these aspects in detail, including the use of containerization for efficient environment replication.

Setting Up Identical Blue and Green Environments

Creating identical environments is paramount for successful blue-green deployments. This means ensuring the blue and green environments mirror each other in terms of server configuration, software versions, and application deployments. Any differences can lead to unexpected behavior and deployment failures. The following steps are critical for setting up the environments:

- Server Configuration: The servers in both environments should have the same operating system, security patches, and essential software packages installed. This includes web servers (e.g., Apache, Nginx), application servers (e.g., Tomcat, Node.js), databases (e.g., PostgreSQL, MySQL), and any other dependencies required by your application. Consider using Infrastructure as Code (IaC) tools like Terraform or Ansible to automate server provisioning and configuration.

- Application Deployment: Start both environments from the same version of your application so they are identical before the first switch; from then on, each new release is deployed only to the idle environment. This typically involves deploying the application code, configuration files, and any necessary data migrations. Automated deployment pipelines, often using tools like Jenkins, GitLab CI, or CircleCI, are essential for consistent and repeatable deployments.

- Network Configuration: Both environments should reside within the same network, or at least have a clear network path to the load balancer. Because the load balancer switches traffic between the blue and green environments, it must be able to reach both of them. This can be achieved using virtual networks, private subnets, or other network isolation techniques.

- Database Considerations: Database schemas and data should be synchronized between the blue and green environments. This might involve using database replication, or, for simpler applications, periodically copying the database from the active environment to the inactive one. Data migrations should be carefully planned and executed to avoid downtime.

- Monitoring and Logging: Both environments should be configured with the same monitoring and logging tools (e.g., Prometheus, Grafana, ELK stack). This ensures that you can monitor the health and performance of your application in both environments and easily compare them.
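Because any difference between the environments can surface as a deployment failure, it helps to compare them mechanically before each switch. A minimal Python sketch, assuming each environment can report an inventory of component names and versions (for example, collected from its package manager or IaC state); the inventories and version numbers below are made up for illustration.

```python
from __future__ import annotations


def find_drift(blue: dict[str, str], green: dict[str, str]) -> dict[str, tuple[str | None, str | None]]:
    """Return {component: (blue_version, green_version)} for every mismatch.

    None means the component is missing from that environment entirely.
    """
    drift = {}
    for name in sorted(blue.keys() | green.keys()):
        if blue.get(name) != green.get(name):
            drift[name] = (blue.get(name), green.get(name))
    return drift


# Made-up inventories for illustration.
blue = {"nginx": "1.25.3", "openssl": "3.0.13", "python": "3.12.2"}
green = {"nginx": "1.25.3", "openssl": "3.0.11", "python": "3.12.2", "redis": "7.2.4"}
print(find_drift(blue, green))
# {'openssl': ('3.0.13', '3.0.11'), 'redis': (None, '7.2.4')}
```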

Managing Configuration Differences

While the blue and green environments should be as identical as possible, some configuration differences are inevitable. These differences typically relate to environment-specific settings, such as database connection strings, API keys, and service endpoints. Proper management of these differences is crucial for preventing deployment errors. Several strategies can be used to manage configuration differences:

- Environment Variables: Store environment-specific configurations as environment variables. This allows you to easily change the configuration without modifying the application code.

- Configuration Files: Use configuration files (e.g., YAML, JSON) to store environment-specific settings. These files can be deployed with the application code and loaded at runtime. Use tools like `envsubst` or templating engines to substitute variables in the configuration files.

- Configuration Management Tools: Utilize configuration management tools (e.g., Ansible, Chef, Puppet) to manage and deploy configuration files. These tools can ensure that the correct configuration is applied to each environment.

- Feature Flags: Use feature flags to enable or disable specific features based on the environment. This allows you to deploy new features to the green environment without affecting the blue environment.

- Secrets Management: Use a secrets management system (e.g., HashiCorp Vault, AWS Secrets Manager) to securely store and manage sensitive information such as API keys and database passwords.
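Environment variables are the simplest of these mechanisms to illustrate. The Python sketch below reads environment-specific settings at startup and fails fast when a required value is missing; the variable names `ENVIRONMENT`, `DATABASE_URL`, and `API_BASE_URL` and the default URL are assumptions, not a fixed convention.

```python
from __future__ import annotations

import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    environment: str    # "blue" or "green"
    database_url: str
    api_base_url: str


def load_settings(env: Mapping[str, str] | None = None) -> Settings:
    """Build settings from environment variables (variable names are hypothetical)."""
    env = os.environ if env is None else env
    environment = env.get("ENVIRONMENT", "blue")
    if environment not in ("blue", "green"):
        raise ValueError(f"ENVIRONMENT must be 'blue' or 'green', got {environment!r}")
    if "DATABASE_URL" not in env:
        # No default on purpose: a wrong connection string is worse than failing to start.
        raise RuntimeError("DATABASE_URL is not set")
    return Settings(
        environment=environment,
        database_url=env["DATABASE_URL"],
        api_base_url=env.get("API_BASE_URL", "http://localhost:8080"),
    )
```

The same build can then run in either environment; only the injected variables differ, and secrets such as the database password can be supplied to those variables from a secrets manager at deploy time.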

Containerization for Environment Replication

Containerization, particularly using Docker, simplifies the process of creating and replicating the blue and green environments. Docker allows you to package your application and its dependencies into a container, which can then be deployed consistently across different environments. This approach reduces the risk of configuration drift and ensures that the environments are identical.

Here is an example `docker-compose.yml` file for a simple web application:

```yaml
version: "3.8"
services:
  web:
    image: nginx:latest
    ports:
      - "8080:80"
    volumes:
      - ./html:/usr/share/nginx/html
    environment:
      - ENVIRONMENT=blue # Example environment variable
  db:
    image: postgres:14
    ports:
      - "5432:5432"
    environment:
      - POSTGRES_USER=example
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=exampledb
    volumes:
      - db_data:/var/lib/postgresql/data
volumes:
  db_data:
```

This `docker-compose.yml` file defines two services: `web` and `db`. The `web` service uses an Nginx image and maps port 8080 on the host to port 80 inside the container. It also mounts a volume to serve static HTML content. An environment variable `ENVIRONMENT` is set, showcasing how to inject environment-specific information. The `db` service uses a PostgreSQL image and maps port 5432 on the host to port 5432 inside the container. It also defines environment variables for the database user, password, and database name. Finally, a named volume `db_data` is used to persist the database data.

To deploy this application, you would:

- Build the Docker images (if not using pre-built images).

- Use `docker-compose up -d` to start the containers in each environment (blue and green).

Docker ensures that the application and its dependencies are packaged together, providing a consistent and reproducible environment. This significantly simplifies the setup and management of both the blue and green environments, leading to faster and more reliable deployments. For example, using this approach, teams can consistently deploy and test applications, minimizing the risk of environment-specific issues and ensuring a smoother transition during the blue-green deployment process.

Deployment Strategies and Traffic Routing

Deploying applications with zero downtime is a critical requirement for modern software development. Blue-green deployments achieve this by maintaining two identical environments: blue (current live version) and green (new version). The key to a successful blue-green deployment lies in effectively routing traffic between these environments. This section details various traffic routing strategies and provides guidance on implementing canary releases and selecting appropriate load balancing algorithms.

Traffic Routing Strategies

Several strategies exist for seamlessly switching traffic between the blue and green environments. The choice of strategy depends on factors like infrastructure, budget, and the complexity of the application.

- DNS-based Routing: This method utilizes DNS (Domain Name System) records to direct traffic. During a deployment, the DNS record pointing to the blue environment is updated to point to the green environment.

- Advantages: Relatively simple to implement, cost-effective, and can be used with various infrastructure setups.

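- Disadvantages: DNS caching slows the cutover. Resolvers and clients keep the old record until its TTL expires (and some cache longer than the TTL), so traffic shifts gradually rather than all at once, and a rollback is just as slow. If the old environment is shut down before those caches expire, the affected clients see errors.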

- Load Balancer-based Routing: Load balancers provide more granular control over traffic distribution. They can be configured to direct traffic to either the blue or green environment or to implement more sophisticated strategies like canary releases.

- Advantages: Offers high availability, supports advanced routing algorithms, and allows for more precise control over traffic flow. They also provide health checks to ensure traffic is only sent to healthy instances.

- Disadvantages: Requires a load balancer, which can add to infrastructure costs and complexity. Configuration can be more involved than DNS-based routing.

- Reverse Proxy-based Routing: Reverse proxies, such as Nginx or Apache, can also be used for traffic routing. They sit in front of the application servers and forward requests based on configured rules.

- Advantages: Provides features like caching, SSL termination, and traffic shaping, in addition to routing.

- Disadvantages: Requires configuring and maintaining a reverse proxy server. Performance can be impacted if the proxy becomes a bottleneck.

- Service Mesh-based Routing: Service meshes, like Istio or Linkerd, offer advanced traffic management capabilities, including traffic splitting, canary releases, and fault injection. They operate at the application layer, providing fine-grained control over traffic flow.

- Advantages: Provides sophisticated traffic management, observability, and security features. Enables advanced deployment strategies.

- Disadvantages: Adds significant complexity to the infrastructure. Requires a learning curve to understand and manage the service mesh.

Implementing a Canary Release

A canary release allows a small subset of users to access the new version (green environment) while the majority continue to use the stable version (blue environment). This approach enables testing in a production environment with minimal risk.

- Preparation: Ensure both blue and green environments are running. Deploy the new version (green) alongside the existing version (blue). Configure health checks on the load balancer to monitor the green environment’s health.

- Traffic Splitting: Configure the load balancer to direct a small percentage (e.g., 5-10%) of traffic to the green environment. This can be achieved using various load balancing algorithms (e.g., weighted routing).

- Monitoring: Closely monitor the green environment for errors, performance issues, and unexpected behavior. Implement robust logging and alerting to quickly identify any problems.

- Rollback (if necessary): If issues are detected, immediately roll back by redirecting all traffic to the blue environment.

- Gradual Rollout: If the canary release is successful, gradually increase the percentage of traffic directed to the green environment, monitoring performance and stability at each stage.

- Full Deployment: Once the green environment has proven stable and performs as expected, switch all traffic to the green environment. Decommission the blue environment after verification.

For example, consider a scenario where an e-commerce platform wants to release a new checkout feature. A canary release would involve directing a small portion of traffic (e.g., 5%) to the new checkout feature. If no errors are detected and the performance is acceptable, the traffic percentage would be gradually increased until all traffic is directed to the new feature.

This staged approach minimizes the risk of impacting the entire user base if any issues arise.
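Many load balancers and service meshes implement the percentage split for you. To show the idea behind a sticky split, here is a minimal Python sketch that assigns users to the canary by hashing their ID instead of choosing randomly per request, so each user sees a consistent version of the checkout flow. The function names and the salt value are hypothetical.

```python
import hashlib


def in_canary(user_id: str, percent: float, salt: str = "checkout-canary") -> bool:
    """Return True if this user belongs to the canary cohort at the given percentage.

    The bucket depends only on (salt, user_id), so a user never flips between
    environments, and raising `percent` only adds users to the cohort.
    The default salt is a hypothetical release identifier.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999, i.e. 0.01% steps
    return bucket < percent * 100


def route(user_id: str, percent: float) -> str:
    return "green" if in_canary(user_id, percent) else "blue"


# Gradual rollout: 5% -> 25% -> 100% of users routed to green.
for step in (5, 25, 100):
    share = sum(route(f"user-{i}", step) == "green" for i in range(10_000)) / 10_000
    print(f"{step}% target -> {share:.1%} of sampled users on green")
```

Changing the salt for each release reshuffles which users land in the canary, so the same customers are not always the first to see new code.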

Load Balancing Algorithms for Blue-Green Deployments

Choosing the right load balancing algorithm is crucial for efficient traffic routing. The following table compares various algorithms suitable for blue-green deployments.

| Algorithm | Description | Advantages | Disadvantages |
|---|---|---|---|
| Round Robin | Distributes incoming requests sequentially to each server in the pool. | Simple to implement, provides fair distribution of traffic. | Does not consider server capacity or performance; can lead to uneven load distribution if servers have different resources. |
| Least Connections | Directs traffic to the server with the fewest active connections. | Dynamically balances traffic based on server load, improving resource utilization. | Requires monitoring connection counts, which can add overhead. |
| Weighted Round Robin | Assigns weights to each server, allowing for a percentage-based traffic distribution. | Enables canary releases and gradual rollouts by controlling the proportion of traffic directed to each environment. | Requires careful configuration of weights to avoid overloading servers. |
| IP Hash | Routes requests from the same client IP address to the same server. | Useful for session persistence, ensuring users remain on the same server throughout their session. | Can lead to uneven load distribution if clients have a disproportionate number of requests. |
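Weighted round robin is the algorithm in the table that maps most directly onto canary releases and gradual rollouts. The Python sketch below implements the "smooth" variant that Nginx uses for weighted upstreams, which interleaves picks instead of sending long runs of requests to the heavier backend; the 90/10 blue/green weights are illustrative.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int
    current: int = 0  # running score; the highest score wins the next request


class SmoothWeightedRoundRobin:
    def __init__(self, weights: dict[str, int]):
        self.backends = [Backend(name, w) for name, w in weights.items() if w > 0]
        if not self.backends:
            raise ValueError("at least one backend needs a positive weight")
        self.total = sum(b.weight for b in self.backends)

    def next(self) -> str:
        # Every backend earns its weight; the leader is picked and pays back the total.
        for b in self.backends:
            b.current += b.weight
        chosen = max(self.backends, key=lambda b: b.current)
        chosen.current -= self.total
        return chosen.name


# Illustrative canary weights: 90% blue, 10% green.
balancer = SmoothWeightedRoundRobin({"blue": 90, "green": 10})
print(Counter(balancer.next() for _ in range(100)))  # Counter({'blue': 90, 'green': 10})
```

Shifting more traffic to green is then a matter of changing the weights, for example to 75/25 and later 0/100; a zero weight removes a backend from rotation in this sketch.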

Database Considerations and Migrations

Database management is a critical aspect of blue-green deployments. Ensuring data integrity and zero downtime requires careful planning and execution of database schema changes and connection management. The strategies outlined below address these crucial considerations, allowing for seamless transitions between environments.

Handling Database Schema Changes

Database schema changes pose a significant challenge during blue-green deployments. Directly modifying the database schema while live traffic is being served can lead to downtime or data corruption. Several strategies mitigate these risks.

- Backward Compatibility: Implement schema changes that are backward compatible with the existing application version. This allows the current (blue) version of the application to keep working against the updated schema while the new version is rolled out, and lets you roll back the application without reverting the schema.

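- Dual Writes: During the deployment, write to both the old and the new database schemas. The old application version continues to read from the old schema, while the new version reads from the new schema. Keeping both representations populated lets either version serve traffic, provided the two writes succeed or fail together (for example, by performing them in one transaction).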

- Rolling Migrations: Perform database migrations in a rolling fashion, similar to the application deployment. This involves applying schema changes in small batches, minimizing the impact on the system.

- Schema Versioning: Employ a schema versioning system to track database changes. This allows for easy rollback if a migration fails.
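Schema versioning can be as simple as a table recording which migrations have run. Tools such as Flyway and Liquibase do this and much more; the sketch below uses Python's built-in `sqlite3` module so it runs anywhere, and the `schema_version` table and migration statements are illustrative.

```python
import sqlite3

# Append-only list: migration N is MIGRATIONS[N - 1]. Statements are illustrative.
MIGRATIONS = [
    "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL, price NUMERIC NOT NULL)",
    # Expand step: a defaulted column that the old application version simply ignores.
    "ALTER TABLE products ADD COLUMN discount_percentage NUMERIC DEFAULT 0.00",
]


def current_version(conn: sqlite3.Connection) -> int:
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    (version,) = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return version or 0


def migrate(conn: sqlite3.Connection) -> int:
    """Apply pending migrations in order; each one and its version row commit together."""
    version = current_version(conn)
    for number, statement in enumerate(MIGRATIONS[version:], start=version + 1):
        conn.execute("BEGIN")
        try:
            conn.execute(statement)
            conn.execute("INSERT INTO schema_version (version) VALUES (?)", (number,))
        except Exception:
            conn.execute("ROLLBACK")  # a failed migration leaves schema and version untouched
            raise
        conn.execute("COMMIT")
    return current_version(conn)


conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit; transactions are explicit
print(migrate(conn))  # 2
print(migrate(conn))  # 2 (nothing pending)
```

SQLite supports transactional DDL, which is what lets a failed migration roll back cleanly here; not every database engine does.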

Managing Database Connections and Ensuring Data Consistency

Database connection management and data consistency are paramount in a blue-green deployment. Improper handling can lead to data loss or corruption.

- Connection Pooling: Use connection pooling to efficiently manage database connections. This reduces the overhead of establishing and tearing down connections.

- Read Replicas: Employ read replicas to offload read traffic from the primary database. This improves performance and reduces the load on the primary database during the deployment.

- Transactions: Use transactions to ensure data consistency during database updates. This guarantees that all changes within a transaction are applied atomically.

- Data Synchronization: Implement mechanisms to synchronize data between the blue and green environments, especially for data that is not easily replicated. This can involve techniques like CDC (Change Data Capture) or custom synchronization scripts.
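A minimal Python sketch of a dual write performed inside one transaction, using the standard library's `sqlite3` module so it runs anywhere. It assumes the old (`orders`) and new (`orders_v2`) representations live in the same database, both table names being hypothetical; when they do not, a single local transaction is unavailable and approaches such as CDC or synchronization scripts become necessary.

```python
import sqlite3

# Hypothetical old and new representations of the same data.
SCHEMA = """
CREATE TABLE orders    (id INTEGER PRIMARY KEY, customer TEXT NOT NULL, total_cents INTEGER NOT NULL);
CREATE TABLE orders_v2 (id INTEGER PRIMARY KEY, customer_name TEXT NOT NULL,
                        total_cents INTEGER NOT NULL, currency TEXT NOT NULL DEFAULT 'USD');
"""


def create_order(conn: sqlite3.Connection, order_id: int, customer: str, total_cents: int) -> None:
    """Dual write: the old and new tables are updated in one transaction."""
    with conn:  # commits if both inserts succeed, rolls back both if either fails
        conn.execute(
            "INSERT INTO orders (id, customer, total_cents) VALUES (?, ?, ?)",
            (order_id, customer, total_cents),
        )
        conn.execute(
            "INSERT INTO orders_v2 (id, customer_name, total_cents) VALUES (?, ?, ?)",
            (order_id, customer, total_cents),
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
create_order(conn, 1, "Ada", 1999)
```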

Rolling Database Migration Strategy Example

A rolling database migration strategy minimizes downtime by applying schema changes incrementally. This approach allows the application to function correctly during the migration process. The example below illustrates a simplified rolling migration using SQL scripts.

Scenario: An e-commerce application needs to add a new column `discount_percentage` to the `products` table.

Steps:

- Phase 1: Add the new column. This change is backward-compatible, as the existing application will ignore the new column.

- Phase 2: Update the application to use the new column. The new application version starts using the `discount_percentage` column, keeping the old discount logic as a fallback until the column has been populated in Phase 3.

- Phase 3: Populate the `discount_percentage` column. A background job populates the new column with data.

- Phase 4: Remove the old discount logic (if applicable). Once the new column is populated and tested, remove any redundant discount logic from the application.

SQL Script Examples:

Phase 1: Add the new column

```sql
-- SQL script to add the discount_percentage column
ALTER TABLE products
ADD COLUMN discount_percentage DECIMAL(5,2) DEFAULT 0.00;
```

Phase 3: Populate the `discount_percentage` column

```sql
-- SQL script to populate the discount_percentage column
-- Values are percentage points (10.00 = 10%), matching the DECIMAL(5,2) column type
UPDATE products
SET discount_percentage = (CASE
    WHEN price > 100 THEN 10.00 -- 10% discount for products over $100
    ELSE 0.00
END);
```

Explanation:

This rolling migration strategy is designed to avoid downtime. During the initial phase, the `discount_percentage` column is added. The existing application can continue to function without any changes. The new application version is deployed and starts using the new column. A background process updates the column with relevant data. Finally, after validation, the old discount logic can be removed.
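On a large table, a single `UPDATE` over every row can hold locks for a long time. A rolling alternative for Phase 3 is to backfill in small batches, each in its own short transaction. The sketch below does this in Python with `sqlite3`; the batch size is an assumption, and the default discount of 10.0 treats the column as percentage points, in line with its DECIMAL(5,2) type.

```python
import sqlite3


def backfill_discounts(conn: sqlite3.Connection, batch_size: int = 1000,
                       threshold: float = 100, discount: float = 10.0) -> int:
    """Populate discount_percentage batch by batch, walking the table by primary key.

    batch_size is an assumed value; tune it to keep each transaction short.
    Returns the number of rows updated.
    """
    updated, last_id = 0, 0
    while True:
        with conn:  # one short transaction per batch
            ids = [row[0] for row in conn.execute(
                "SELECT id FROM products WHERE id > ? ORDER BY id LIMIT ?",
                (last_id, batch_size),
            )]
            if not ids:
                return updated
            cur = conn.execute(
                "UPDATE products SET discount_percentage = "
                "CASE WHEN price > ? THEN ? ELSE 0 END "
                "WHERE id BETWEEN ? AND ?",
                (threshold, discount, ids[0], ids[-1]),
            )
            updated += cur.rowcount
            last_id = ids[-1]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price NUMERIC, "
             "discount_percentage NUMERIC DEFAULT 0.00)")
conn.executemany("INSERT INTO products (id, price) VALUES (?, ?)",
                 [(i, 150 if i % 2 == 0 else 50) for i in range(1, 2501)])
conn.commit()
print(backfill_discounts(conn))  # 2500
```

Walking by primary key keeps each batch cheap to locate, and because every batch recomputes its rows from `price`, the job can be stopped and rerun safely.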

Monitoring and Rollback Strategies

Effective monitoring and a robust rollback strategy are crucial for the success of blue-green deployments. They provide the necessary visibility into the application’s health and performance, enabling quick identification and resolution of issues. Furthermore, a well-defined rollback procedure minimizes downtime and ensures business continuity if problems arise in the green environment.

Comprehensive Monitoring Plan

A comprehensive monitoring plan should encompass various aspects of the application and infrastructure to ensure optimal performance and identify potential issues proactively. This plan needs to cover the application, infrastructure, and user experience.

- Application Performance Monitoring (APM): APM tools track application performance metrics, such as response times, error rates, and transaction throughput.

- Example: Using tools like New Relic or Datadog to monitor the average response time of critical API endpoints. A sudden increase in response time might indicate a performance bottleneck in the green environment.

- Infrastructure Monitoring: Monitoring infrastructure components, including servers, databases, and network devices, is essential.

- Example: Tracking CPU utilization, memory usage, and disk I/O on servers. High CPU utilization on the green servers after deployment could signal a resource issue.

- User Experience Monitoring: Monitoring the end-user experience provides insights into how users perceive the application’s performance.

- Example: Using Real User Monitoring (RUM) tools to track page load times and the errors users encounter. A significant increase in error rates after a deployment can indicate a problem with the new version.

- Log Aggregation and Analysis: Centralized log aggregation and analysis are crucial for identifying errors, debugging issues, and gaining insights into application behavior.

- Example: Using tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk to collect, analyze, and visualize logs from various sources. Examining logs can help pinpoint the root cause of an issue.

- Synthetic Monitoring: Synthetic monitoring simulates user interactions to proactively test application functionality and performance.

- Example: Using a tool like Pingdom or UptimeRobot to simulate user transactions and monitor the availability and performance of critical functionalities. This can detect issues before users are affected.
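A "sudden increase in response time" becomes actionable once it is defined. One option is to compare a latency percentile from green against blue’s baseline. The minimal sketch below assumes that response times have already been exported from the APM tool as lists of milliseconds; the export step is out of scope. It uses the 95th percentile and a hypothetical 20% tolerance, and both values should be tuned per service:

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100) of a non-empty sample."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Multiply before dividing to avoid float rounding in the rank.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_regressed(blue_ms: Sequence[float], green_ms: Sequence[float],
                      pct: float = 95.0, tolerance: float = 0.20) -> bool:
    """True if green's percentile latency exceeds blue's by more than `tolerance`."""
    baseline = percentile(blue_ms, pct)
    candidate = percentile(green_ms, pct)
    return candidate > baseline * (1 + tolerance)
```

Comparing against blue rather than against a fixed number keeps the check meaningful as normal traffic patterns drift.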

Rollback Procedure

A well-defined rollback procedure is vital for quickly reverting to the stable blue environment if issues are detected in the green environment. This procedure should be documented and tested regularly to ensure its effectiveness.

- Trigger Conditions: Define clear conditions that trigger a rollback. These can include exceeding error rate thresholds, significant performance degradation, or critical application failures.

- Example: A rollback is triggered if the error rate of a critical API endpoint exceeds 5% for more than 5 minutes.

- Rollback Steps: Outline the specific steps to revert to the blue environment. This typically involves redirecting traffic back to the blue environment and potentially reverting any database changes.

- Example: Using the traffic management tool (e.g., a load balancer or service mesh) to redirect all traffic back to the blue environment.

- Database Rollback Considerations: Address database changes made in the green environment during the deployment.

- Example: If the green deployment includes database schema changes, consider using database migration tools or techniques like feature toggles to ensure backward compatibility.

- Testing the Rollback Procedure: Regularly test the rollback procedure to ensure it functions correctly and minimizes downtime.

- Example: Simulating a deployment and intentionally introducing an issue to test the rollback process.
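The example trigger condition (error rate above 5% for more than 5 minutes) can be evaluated mechanically. The minimal sketch below assumes that the monitoring system delivers timestamped error-rate samples, for instance one per minute. The class name is illustrative and not part of any specific tool. A single healthy sample resets the clock, so a brief spike does not cause a rollback:

```python
from typing import Optional


class RollbackTrigger:
    """Fires when the error rate stays above `threshold` for longer than `window_s`."""

    def __init__(self, threshold: float = 0.05, window_s: float = 300.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self._breach_start: Optional[float] = None

    def observe(self, timestamp_s: float, error_rate: float) -> bool:
        """Record one sample; return True if a rollback should be triggered."""
        if error_rate <= self.threshold:
            self._breach_start = None  # a healthy sample resets the clock
            return False
        if self._breach_start is None:
            self._breach_start = timestamp_s  # the breach begins with this sample
        return timestamp_s - self._breach_start > self.window_s
```

With one sample per minute at a steady 8% error rate, the trigger first returns `True` at the sample taken six minutes after the breach began. That is the first point at which the breach has lasted *more* than five minutes. The caller would then redirect traffic back to blue.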

Automated Health Checks and Alerting

Automated health checks and alerting systems are essential for proactive issue detection and timely intervention. These systems continuously monitor key metrics and trigger alerts when predefined thresholds are breached.

- Health Check Endpoints: Implement health check endpoints in the application to provide real-time status information.

- Example: Creating a `/health` endpoint that returns a 200 OK status code if the application is healthy, along with details about the application’s status and dependencies.

- Automated Health Checks: Configure automated health checks to periodically query the health check endpoints.

- Example: Using a monitoring tool like Prometheus or Nagios to regularly check the `/health` endpoint and verify the application’s status.

- Alerting System: Set up an alerting system to notify the operations team when health checks fail or when critical metrics exceed predefined thresholds.

- Example: Routing alerts through PagerDuty, or posting them to a Slack channel, when the error rate exceeds a certain threshold or when a health check fails.

- Relevant Metrics: Define relevant metrics to monitor and set appropriate thresholds.

- Error Rate: The percentage of requests that result in errors.

A high error rate can indicate problems with the application code or infrastructure.

- Response Time: The time it takes for the application to respond to a request.

Slow response times can indicate performance bottlenecks.

- Throughput: The number of requests processed per unit of time.

A decrease in throughput can indicate performance issues or resource constraints.

- CPU Utilization: The percentage of CPU resources being used.

High CPU utilization can indicate a resource bottleneck.

- Memory Usage: The amount of memory being used.

High memory usage can lead to performance issues or application crashes.

- Database Connection Errors: The number of errors encountered when connecting to the database.

Database connection errors can indicate problems with the database server or network connectivity.

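Below is a minimal sketch of the `/health` endpoint described above, built only on Python’s standard-library `http.server`. The entries in `CHECKS` are hypothetical placeholders: callables that return `True` or `False`. A real service would probe its database, cache, and other dependencies there. The endpoint returns 503 rather than 200 when any dependency fails, so a load balancer or a Prometheus/Nagios probe can treat the instance as unhealthy:

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Dict, Tuple

# Hypothetical dependency checks; replace with real probes (DB ping, cache ping, ...).
CHECKS: Dict[str, Callable[[], bool]] = {
    "database": lambda: True,
    "cache": lambda: True,
}


def health_report(checks: Dict[str, Callable[[], bool]]) -> Tuple[int, dict]:
    """Run each check and return (HTTP status, JSON-serializable body)."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = "ok" if check() else "failing"
        except Exception as exc:  # a check that crashes counts as failing
            results[name] = f"error: {exc}"
    healthy = all(v == "ok" for v in results.values())
    body = {"status": "healthy" if healthy else "unhealthy", "dependencies": results}
    return (200 if healthy else 503), body


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/health":
            self.send_error(404)
            return
        status, body = health_report(CHECKS)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Start the health endpoint (blocks until interrupted)."""
    HTTPServer(("", port), HealthHandler).serve_forever()
```

Keeping `health_report` separate from the HTTP handler makes the check logic easy to unit-test. The same logic could be mounted in whichever web framework the application already uses.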

Automation and Tooling

Automating blue-green deployments is crucial for achieving zero-downtime releases and minimizing manual effort. Automation streamlines the entire process, from code compilation and testing to infrastructure provisioning and traffic switching. This section explores popular automation tools and demonstrates how to implement them effectively.

Popular Automation Tools

Several tools are designed to automate the complexities of continuous integration, continuous delivery, and specifically, blue-green deployments. Choosing the right tool depends on the project’s scale, existing infrastructure, and team expertise.

- Jenkins: An open-source automation server, Jenkins is highly versatile and widely used for building, testing, and deploying software. It supports a vast ecosystem of plugins, making it adaptable to various environments.

- GitLab CI/CD: Integrated directly within GitLab, this CI/CD tool simplifies the automation pipeline for projects hosted on GitLab. It offers features like containerization and dependency management.

- Spinnaker: Developed by Netflix and open-sourced, Spinnaker is a powerful, cloud-native continuous delivery platform specifically designed for complex deployments, including blue-green. It provides features like automated canary analysis and rollback strategies.

- CircleCI: A cloud-based CI/CD platform, CircleCI is known for its ease of use and quick setup. It supports various languages and platforms, making it suitable for diverse projects.

- AWS CodePipeline: A fully managed continuous delivery service offered by Amazon Web Services. It automates the release process by orchestrating different stages, such as build, test, and deploy.

Automating the Deployment Process with Jenkins

Jenkins is a robust choice for automating blue-green deployments. This example illustrates a simplified pipeline configuration using a declarative pipeline script. The script defines the stages for building, testing, and deploying the application to the blue and green environments.

Pipeline Script (Declarative):

```groovy
pipeline {
    agent any

    stages {
        stage('Build') {
            steps {
                echo 'Building the application...'
                // Add build steps here (e.g., Maven, Gradle)
            }
        }
        stage('Test') {
            steps {
                echo 'Running tests...'
                // Add testing steps here (e.g., JUnit, integration tests)
            }
        }
        stage('Deploy Blue') {
            steps {
                echo 'Deploying to Blue environment...'
                // Deploy to Blue environment (e.g., using Docker, Kubernetes)
                // Example: sh 'kubectl apply -f blue-deployment.yaml'
            }
        }
        stage('Test Blue') {
            steps {
                echo 'Testing Blue environment...'
                // Run post-deployment tests on Blue environment
            }
        }
        stage('Deploy Green') {
            steps {
                echo 'Deploying to Green environment...'
                // Deploy to Green environment
                // Example: sh 'kubectl apply -f green-deployment.yaml'
            }
        }
        stage('Test Green') {
            steps {
                echo 'Testing Green environment...'
                // Run post-deployment tests on Green environment
            }
        }
        stage('Traffic Switch') {
            steps {
                echo 'Switching traffic to Green environment...'
                // Update load balancer to direct traffic to Green environment
                // Example: sh 'aws route53 change-resource-record-sets ...'
            }
        }
        stage('Cleanup Blue') {
            steps {
                echo 'Cleaning up Blue environment...'
                // Optionally, delete the Blue environment after successful switch
            }
        }
    }

    post {
        success {
            echo 'Deployment successful!'
        }
        failure {
            echo 'Deployment failed!'
            // Optionally, rollback to the previous version
        }
    }
}
```

Explanation of the Script:

- agent any: Specifies that the pipeline can run on any available agent.

- stages: Defines the stages of the deployment process.

- Build: Builds the application.

- Test: Runs unit and integration tests.

- Deploy Blue/Green: Deploys the application to the blue and green environments. This stage often includes deploying container images, updating configuration files, or any steps specific to the chosen deployment strategy.

- Test Blue/Green: Runs post-deployment tests to validate the new deployment. This may include smoke tests, performance tests, or user acceptance testing.

- Traffic Switch: Switches traffic from the blue environment to the green environment. This is typically done by updating the load balancer configuration.

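- Cleanup Blue: After a successful deployment, the blue environment can be removed to free up resources. Keeping it running until the green environment has proven stable preserves the option of an immediate rollback.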

- post: Defines actions to be taken after the pipeline completes, such as sending notifications or rolling back in case of failure.
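Most of the risk in this pipeline sits in the Traffic Switch stage. It is therefore worth gating the switch on the target’s health and leaving the previous environment addressable. The minimal, tool-agnostic sketch below illustrates that gate. `Router` is a hypothetical stand-in for whatever actually moves traffic, such as a load balancer target group, a DNS record, or a service-mesh route. The health probe is passed in as a function:

```python
from typing import Callable


class Router:
    """Hypothetical stand-in for a load balancer: tracks which environment is live."""

    def __init__(self, live: str = "blue") -> None:
        self.live = live

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"


def switch_traffic(router: Router, is_healthy: Callable[[str], bool]) -> bool:
    """Point traffic at the idle environment only if it passes its health check.

    The previously live environment is left running, so calling
    switch_traffic again later acts as a rollback.
    """
    target = router.idle
    if not is_healthy(target):
        return False  # keep serving from the current live environment
    router.live = target
    return True
```

Because the switch is symmetric, rolling back is the same operation in the opposite direction. This is why removing blue too early gives up the cheapest rollback path.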

Configuring Jenkins:

- Install Jenkins: Install Jenkins on a server.

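- Install Plugins: Install the plugins your pipeline steps depend on (for example, the Docker Pipeline and Kubernetes plugins). Make sure command-line tools such as `kubectl` and the AWS CLI are installed on the build agents.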

- Create a Pipeline Job: Create a new pipeline job in Jenkins.

- Configure the Pipeline: Select “Pipeline script from SCM” and configure the SCM (e.g., Git) to point to your repository containing the Jenkinsfile (the script above).

- Run the Pipeline: Trigger the pipeline manually or configure it to run automatically on code changes.

Comparison of Deployment Automation Tools

The following table compares several popular deployment automation tools, highlighting their key features, ease of use, and pricing models. This comparison assists in selecting the best tool for a specific project’s needs.

| Tool | Key Features | Ease of Use | Pricing |
|---|---|---|---|
| Jenkins | Extensive plugin ecosystem, open-source, highly customizable, supports various deployment strategies. | Moderate – requires configuration and plugin management. | Free (open-source), but requires infrastructure for hosting. |
| GitLab CI/CD | Integrated with GitLab, easy setup, containerization support, built-in dependency management. | Easy – tightly integrated with GitLab’s interface. | Free for basic usage; paid plans for advanced features and support. |
| Spinnaker | Designed for complex deployments, supports blue-green, canary, and rolling updates, automated canary analysis, integrates with various cloud providers. | Complex – steep learning curve, requires in-depth understanding of deployment strategies. | Free (open-source), but requires infrastructure for hosting. |
| CircleCI | Cloud-based, easy setup, fast execution, supports various languages and platforms. | Easy – user-friendly interface and quick configuration. | Free for limited usage; paid plans based on usage. |
| AWS CodePipeline | Fully managed, integrates with other AWS services, supports automated builds, tests, and deployments. | Easy – integrates seamlessly with other AWS services. | Pay-as-you-go based on usage. |

Post-Deployment Verification and Cleanup

After successfully deploying to the green environment, thorough verification and cleanup are crucial for ensuring application stability, optimizing resource utilization, and maintaining a clean and manageable infrastructure. This phase involves rigorous testing, monitoring, and resource decommissioning to solidify the deployment’s success and prepare for future iterations.

Post-Deployment Verification Checklist

Post-deployment verification is a systematic process to confirm the application’s functionality, performance, and stability in the green environment. It involves a series of checks and tests to ensure the deployed application meets the expected criteria. This checklist provides a structured approach to validate the deployment.

- Functional Testing: Verify core application features and user flows.

- Test all critical functionalities, such as user registration, login, data input, and data retrieval.

- Conduct end-to-end testing to simulate real-world user scenarios.

- Ensure all integrations with external services function as expected.

- Performance Testing: Assess application responsiveness and resource utilization under load.

- Conduct load testing to simulate expected user traffic and identify potential bottlenecks.

- Monitor key performance indicators (KPIs) like response time, throughput, and error rates.

- Analyze resource consumption, including CPU, memory, and disk I/O.

- Monitoring and Alerting: Confirm that monitoring systems are correctly configured and generating alerts.

- Verify that monitoring dashboards display relevant metrics, such as application health, error rates, and resource utilization.

- Test alert notifications to ensure they are triggered under predefined conditions.

- Ensure logging is configured correctly to capture application events and errors.

- Security Testing: Validate security configurations and assess potential vulnerabilities.

- Conduct security scans to identify vulnerabilities and misconfigurations.

- Verify that access controls and authentication mechanisms are functioning correctly.

- Test for common security threats, such as cross-site scripting (XSS) and SQL injection.

- Database Verification: Confirm data integrity and consistency in the new environment.

- Verify data migration success, ensuring all data is correctly transferred.

- Validate database schema and configurations.

- Test database queries and transactions.
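Parts of the functional checklist can be automated as a smoke test that runs against green before the cutover. The minimal sketch below uses Python’s standard-library `urllib`. Any base URL and paths you pass in are examples of your own choosing, not endpoints defined by this guide. The fetch function is injectable, so the pass/fail logic can be exercised without a live server:

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request (0 if unreachable)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        return exc.code  # 4xx/5xx responses still carry a status code
    except OSError:
        return 0  # connection refused, DNS failure, timeout, ...


def smoke_test(base_url: str, paths: Iterable[str],
               fetch: Callable[[str], int] = http_status) -> Dict[str, bool]:
    """Check that each path returns a 2xx status; return {path: passed}."""
    return {path: 200 <= fetch(base_url.rstrip("/") + path) < 300 for path in paths}


# Example with a hypothetical green URL and paths:
# results = smoke_test("http://green.internal:8080", ["/health", "/login"])
# ok = all(results.values())
```

A smoke test only confirms that key routes respond. It complements the end-to-end, load, and security tests in the checklist and does not replace them.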

Decommissioning the Blue Environment

Once the green environment is fully validated and confirmed to be stable, the blue environment, which previously hosted the live application, can be decommissioned. This involves gracefully shutting down the blue environment, releasing associated resources, and updating DNS records to point all traffic to the green environment. The process needs to be executed carefully to prevent service disruption.

- Traffic Cutover: Redirect all incoming traffic to the green environment by updating DNS records or load balancer configurations. This process is often performed gradually to monitor the green environment’s performance and identify any potential issues before fully transitioning all traffic. For instance, with a DNS Time-To-Live (TTL) of 60 seconds, most clients should pick up the new record within a few minutes. Some resolvers and clients cache records longer than the TTL, however, so keep blue serving until its remaining traffic has drained.

- Shutdown Blue Environment: Once no new requests are routed to blue and in-flight requests have drained, shut down all application instances and associated services in the blue environment. This includes web servers, application servers, any databases dedicated to blue (not a database shared with green), and any other supporting infrastructure.

- Data Backup (Optional): Before releasing any storage, create a final backup of the blue environment’s data, if necessary, for archival or disaster recovery purposes. This step ensures that historical data is preserved. It is especially important if the blue environment contained any data not yet replicated to the green environment, or if the data needs to be retained for compliance reasons.

- Resource Deallocation: Deallocate and release all resources associated with the blue environment, such as virtual machines, storage volumes, and network resources. This optimizes resource utilization and reduces costs. In cloud environments, this typically means deleting virtual machines. In on-premises deployments, it can include releasing server licenses.

Cleanup Process

The cleanup process involves removing unused resources and updating DNS records to ensure a smooth transition and maintain a clean and efficient infrastructure. This step ensures that the system is optimized for performance and maintainability.

- Remove Unused Resources: Delete any resources associated with the blue environment, such as virtual machines, storage volumes, and network configurations. This frees up resources and reduces operational costs. Regularly review and remove obsolete or unused resources to prevent unnecessary expenses.

- Update DNS Records: Update DNS records to point to the green environment, ensuring that all incoming traffic is directed to the new active environment. This is a critical step to ensure that users are accessing the correct application version.

- Review and Update Monitoring and Alerting: Adjust monitoring and alerting configurations to reflect the new environment. This ensures that all critical metrics are tracked and alerts are triggered correctly.

- Document the Deployment: Document the entire blue-green deployment process, including all steps taken, any issues encountered, and the resolution strategies. This documentation will be valuable for future deployments and troubleshooting. The documentation should include specific details on the configurations, including the load balancer settings, database connection strings, and any other environment-specific parameters.

Advanced Topics and Considerations

Blue-green deployments, while powerful, present advanced challenges when dealing with complex applications and infrastructure. This section delves into these intricate aspects, offering strategies to ensure seamless transitions and minimize potential disruptions. It covers topics like database migrations, session persistence, and integration with modern cloud-native architectures.

Zero-Downtime Database Migrations for Complex Schemas

Managing database migrations during blue-green deployments is crucial, especially when dealing with complex database schemas. The goal is to perform schema changes without interrupting application availability. Several strategies can be employed to achieve this.

- Backward Compatibility: Ensure that the new version of the application is compatible with the old database schema. This allows both the blue and green environments to access the same database during the transition.

- Schema Evolution: Use techniques like adding new columns or tables instead of modifying existing ones directly. This ensures that the older application version can still function while the newer version utilizes the new schema elements.

- Phased Migrations: Break down large database migrations into smaller, incremental steps. This minimizes the risk associated with large-scale changes and allows for easier rollback if issues arise. For example, instead of altering a large table in place, you can create a new table with the desired structure, migrate the data, and then switch the application to use the new table.

- Dual Writes: During the migration process, write data to both the old and new schemas. This ensures that the new schema is populated with the latest data while minimizing downtime. This technique is often employed when changing the data types or structure of existing columns.

- Feature Flags: Utilize feature flags to control the visibility of new database features. This allows you to deploy code that uses the new features without exposing them to all users immediately. The flag can be toggled to gradually introduce the new functionality.

- Database Migration Tools: Employ dedicated database migration tools like Flyway or Liquibase. These tools automate the migration process, track schema changes, and provide version control for database scripts.

- Testing and Validation: Rigorously test database migrations in a staging environment that mirrors the production environment. This helps identify potential issues before they impact users. Validate data integrity after each migration step.
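The dual-write technique can be illustrated with a hypothetical schema change: splitting a `users.full_name` column into `first_name` and `last_name`. During the transition, the new code writes both representations in one transaction. Blue, which still reads `full_name`, and green, which reads the new columns, therefore see consistent data. The minimal sketch below uses `sqlite3`, and all table and column names are illustrative only:

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    # Expanded schema: the old column is kept alongside the new ones.
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, full_name TEXT, "
        "first_name TEXT, last_name TEXT)"
    )


def save_user(conn: sqlite3.Connection, first: str, last: str) -> int:
    """Dual write: populate both the old and the new columns atomically."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO users (full_name, first_name, last_name) VALUES (?, ?, ?)",
            (f"{first} {last}", first, last),
        )
    return cur.lastrowid


def read_name_old(conn: sqlite3.Connection, user_id: int) -> str:
    """How the blue (old) version reads the name."""
    row = conn.execute("SELECT full_name FROM users WHERE id = ?", (user_id,)).fetchone()
    return row[0]


def read_name_new(conn: sqlite3.Connection, user_id: int) -> tuple:
    """How the green (new) version reads the name."""
    return conn.execute(
        "SELECT first_name, last_name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
```

Rows written by blue during the overlap only fill `full_name`, so they still need a backfill. Only after every reader has moved to the new columns can `full_name` be dropped.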

Handling Session Persistence and State Management

Session persistence and state management are critical considerations during blue-green deployments, especially for applications that maintain user sessions. Failing to address these aspects can lead to session loss and a poor user experience.

- Sticky Sessions: Configure the load balancer to use sticky sessions, which ensures that a user’s requests are consistently routed to the same server. This can be a simple solution for basic session persistence, but it can become problematic if a server fails, leading to session loss. Sticky sessions also do not survive the blue-green switch itself, because the servers users are pinned to stop receiving traffic.

- Centralized Session Storage: Use a centralized session store, such as Redis, Memcached, or a dedicated session database. This allows both the blue and green environments to access the same session data, ensuring session persistence during the switchover. This approach is highly scalable and provides resilience against server failures.

- Session Replication: Replicate session data across multiple servers. This provides redundancy and ensures that sessions are not lost if a server goes down. This approach can be resource-intensive and requires careful configuration to avoid data inconsistencies.

- Stateless Applications: Design applications to be stateless, storing all session data on the client-side (e.g., using JWTs) or in the request itself. This simplifies session management and eliminates the need for sticky sessions or centralized session storage.

- Session Migration: Before switching traffic to the green environment, migrate existing sessions from the blue environment to the green environment. This can be achieved by copying session data to the new environment or by using a mechanism to redirect users to the new server with their session data.

- Session Timeouts: Implement short session timeouts to minimize the impact of session loss. This ensures that users are automatically logged out after a period of inactivity, reducing the risk of stale sessions.

- Health Checks: Implement robust health checks to ensure that servers are healthy and able to handle user sessions. The load balancer can use these health checks to automatically remove unhealthy servers from the pool, preventing session loss.
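Centralized session storage works because blue and green instances read and write the same store. A session created before the switch is therefore still found after it. The minimal sketch below illustrates the idea. `SessionStore` is a hypothetical in-memory stand-in for Redis or Memcached, with a TTL mirroring the session-timeout point above, and each `App` instance represents one environment:

```python
import secrets
import time
from typing import Callable, Dict, Optional, Tuple


class SessionStore:
    """In-memory stand-in for a shared store such as Redis (illustrative only)."""

    def __init__(self, ttl_s: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock
        self._data: Dict[str, Tuple[float, dict]] = {}

    def put(self, session_id: str, data: dict) -> None:
        self._data[session_id] = (self.clock() + self.ttl_s, data)

    def get(self, session_id: str) -> Optional[dict]:
        entry = self._data.get(session_id)
        if entry is None:
            return None
        expires_at, data = entry
        if self.clock() >= expires_at:
            del self._data[session_id]  # expired, similar to a key TTL in Redis
            return None
        return data


class App:
    """One deployment (blue or green); it keeps no session state of its own."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def login(self, user: str) -> str:
        session_id = secrets.token_hex(16)
        self.store.put(session_id, {"user": user})
        return session_id

    def current_user(self, session_id: str) -> Optional[str]:
        session = self.store.get(session_id)
        return session["user"] if session else None
```

A user who logs in through blue and is then routed to green after the switch is still recognized, because green looks the session up in the same store.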

Integrating Blue-Green Deployments with Autoscaling and Cloud-Native Architectures

Integrating blue-green deployments with autoscaling and cloud-native architectures enhances scalability, resilience, and efficiency. The following diagram illustrates a common pattern:

Diagram: Blue-Green Deployment with Autoscaling and Cloud-Native Architecture

A diagram showing a cloud-native architecture utilizing blue-green deployments and autoscaling. The architecture includes the following components:

- Load Balancer: Sits at the front, directing traffic. It has health checks enabled and routes traffic to either the blue or green environment based on configuration.

- Blue Environment: Consists of multiple instances of the application running. Autoscaling is configured to automatically adjust the number of instances based on demand.

- Green Environment: Similar to the blue environment, but initially scaled to zero or a small number of instances. Autoscaling is also configured.

- Database: A shared database accessible by both blue and green environments. Database migrations are carefully managed to ensure compatibility.

- Monitoring and Logging: Integrated monitoring and logging systems provide visibility into the performance and health of both environments.

- CI/CD Pipeline: An automated CI/CD pipeline handles code deployments and environment updates.

The workflow is as follows:

- A new version of the application is deployed to the green environment.

- The CI/CD pipeline automatically scales up the green environment, ensuring sufficient capacity.

- Health checks verify the green environment’s readiness.

- The load balancer switches traffic to the green environment.

- The blue environment is scaled down after the traffic switch.

- If issues are detected, the load balancer can quickly switch back to the blue environment.

This architecture offers the following benefits:

- Zero Downtime: The traffic switch ensures continuous availability.

- Scalability: Autoscaling dynamically adjusts resources based on demand.

- Resilience: The blue and green environments provide redundancy.

- Rollback Capability: Easy rollback to the previous version.

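- Efficiency: Because the idle environment is scaled down after the switch, the cost of running a second environment is largely confined to the deployment window.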

The key aspects of this integration include:

- Automated Deployment Pipelines: Use CI/CD pipelines to automate the deployment process, including building, testing, and deploying the new version to the green environment. This ensures consistent and repeatable deployments.

- Autoscaling: Configure autoscaling to automatically adjust the number of instances in both the blue and green environments based on demand. This ensures that the application can handle fluctuating traffic loads. For example, if the application experiences a sudden surge in traffic, the autoscaling mechanism can automatically provision more instances in the green environment to handle the increased load.

- Health Checks: Implement comprehensive health checks to monitor the health of the application instances in both environments. The load balancer uses these health checks to determine whether an instance is ready to receive traffic. If an instance fails a health check, the load balancer automatically removes it from the pool.

- Traffic Routing: Use a load balancer or service mesh to control traffic routing between the blue and green environments. The load balancer can be configured to gradually shift traffic from the blue to the green environment, allowing for a phased rollout.

- Monitoring and Observability: Implement robust monitoring and observability to track the performance and health of the application in both environments. This includes monitoring metrics such as response times, error rates, and resource utilization. Alerting systems can be configured to notify the operations team of any issues.

- Infrastructure as Code (IaC): Use IaC tools like Terraform or CloudFormation to manage the infrastructure, including the load balancer, autoscaling groups, and other resources. This ensures that the infrastructure is consistent, repeatable, and easily managed.

- Cloud-Native Services: Leverage cloud-native services such as container orchestration (e.g., Kubernetes), service meshes (e.g., Istio, Linkerd), and serverless functions (e.g., AWS Lambda, Google Cloud Functions) to simplify deployment and management.
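The phased rollout described under Traffic Routing can be expressed as a loop that raises green’s share of traffic in steps and sends everything back to blue if a health gate fails. In the minimal sketch below, `set_weight` and `is_healthy` are hypothetical callbacks that you would wire to your load balancer or service mesh, for example weighted target groups or mesh route weights. The step schedule is an arbitrary example:

```python
from typing import Callable, Sequence


def shift_traffic(
    set_weight: Callable[[int], None],
    is_healthy: Callable[[], bool],
    steps: Sequence[int] = (10, 25, 50, 100),
) -> bool:
    """Gradually move traffic to green; return True if every step passes.

    set_weight(p) routes p% of traffic to green and (100 - p)% to blue.
    is_healthy() is consulted after each step (e.g., error-rate and latency
    checks); a real rollout would also wait a soak period before checking.
    On failure, all traffic is sent back to blue.
    """
    for percent in steps:
        if not 0 <= percent <= 100:
            raise ValueError(f"invalid weight: {percent}")
        set_weight(percent)
        if not is_healthy():
            set_weight(0)  # roll back: all traffic to blue
            return False
    return True
```

Applying each weight before checking health means only a fraction of users is exposed when a problem first appears. An immediate switch, by contrast, exposes everyone at once.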

Final Wrap-Up

In conclusion, mastering blue-green deployments is a transformative step toward achieving continuous availability and minimizing the impact of updates. From environment setup and traffic routing to automated rollbacks and post-deployment verification, the strategies outlined here provide a comprehensive framework for successful implementation. By embracing these practices, you can significantly reduce downtime, improve user experience, and streamline your release cycles. Embrace the green and blue, and revolutionize your deployment process!

Top FAQs

What are the primary advantages of blue-green deployments over traditional methods?

Blue-green deployments minimize downtime by allowing you to switch traffic instantly to a new, fully tested environment. They also provide a simple rollback strategy, reduce the risk of deployment failures, and enable faster release cycles.

How do you handle database schema changes during a blue-green deployment?

Database schema changes require careful planning. Strategies include using database migration tools, performing incremental changes that are backward compatible, and ensuring that both the blue and green environments can operate with the old and new schemas during the transition period.

What’s the best way to test a green environment before switching traffic?

Thorough testing is crucial. Implement a combination of functional testing, performance testing, and user acceptance testing (UAT). Consider running a canary release to test a small percentage of production traffic on the green environment before a full switchover.

How do you manage session persistence during a blue-green deployment?

Sticky sessions in your load balancer keep a user on the same server within one environment, but they do not carry a session across the switch from blue to green. To preserve sessions through the switch, store session data in a shared cache (e.g., Redis, Memcached) that both blue and green environments can access, or make the application stateless. This ensures that users maintain their sessions even when traffic is switched.

What tools are commonly used to automate blue-green deployments?

Popular automation tools include Jenkins, GitLab CI/CD, Spinnaker, and AWS CodeDeploy. These tools help orchestrate the entire deployment process, from building and testing the application to deploying it to the green environment and switching traffic.