Rebuilding an application for cloud native architecture marks a significant shift in how software is developed. The change goes beyond infrastructure: it requires rethinking how the application is designed, deployed, and operated. This article examines that transformation and offers a structured approach to navigating the complexities of modern cloud environments.

It explores the principles of cloud native architecture, the critical steps in assessing existing applications, and the strategic planning required for a successful rebuild.

This guide examines essential aspects, from designing microservices and implementing containerization to establishing robust monitoring and security measures. It aims to provide a comprehensive understanding of the tools, techniques, and best practices needed to modernize applications for performance, scalability, and resilience in cloud environments. Each section builds on the previous one, so developers and architects can follow the transformation from initial assessment through to operation.

Understanding Cloud Native Architecture Fundamentals

Cloud native architecture represents a paradigm shift in how applications are designed, built, and deployed, leveraging the full potential of the cloud computing model. This approach focuses on building applications that are specifically designed to take advantage of the scalability, elasticity, and resilience offered by cloud environments. It emphasizes automation, continuous delivery, and the use of microservices to enable rapid innovation and faster time to market.

Core Principles of Cloud Native Architecture

Cloud native architecture is built upon a set of core principles that guide its design and implementation. These principles ensure applications are optimized for cloud environments.

- Microservices: Cloud native applications are composed of independent, deployable, and scalable microservices. Each microservice focuses on a specific business capability and communicates with other services via APIs. This modularity allows for independent development, deployment, and scaling of individual components.

- Containerization: Containerization technologies, such as Docker, package applications and their dependencies into isolated units called containers. Containers provide a consistent environment for applications to run across different infrastructure platforms.

- Automation: Automation is central to cloud native. This includes automating infrastructure provisioning, deployment, scaling, and monitoring. Automation reduces manual effort, improves efficiency, and minimizes the risk of human error.

- DevOps: Cloud native architectures embrace DevOps practices, which emphasize collaboration between development and operations teams. DevOps promotes continuous integration and continuous delivery (CI/CD), enabling faster and more frequent releases.

- Continuous Delivery: Cloud native applications are designed for continuous delivery. CI/CD pipelines automate the build, test, and deployment processes, allowing for frequent and reliable releases of new features and updates.

- Elasticity and Scalability: Cloud native applications are designed to automatically scale up or down based on demand. This elasticity ensures optimal resource utilization and performance.

- Resilience: Cloud native applications are designed to be resilient to failures. This includes implementing techniques such as redundancy, automated failover, and self-healing capabilities.
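The elasticity principle can be made concrete with the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below implements that formula in Python. It is a simplification under stated assumptions: the min/max bounds and the 10% tolerance band are illustrative parameters, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling decision (illustrative, not the real controller)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # If the metric is within the tolerance band around the target, do not scale.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target scale out to 6.
print(desired_replicas(4, 90, 60))  # 6
```

The same rule scales in when load drops, which is what lets a cloud native service release resources it no longer needs.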

Comparative Overview of Traditional vs. Cloud Native Application Architectures

Traditional and cloud native architectures differ significantly in their structure, deployment, and operational characteristics. These differences impact scalability, maintainability, and overall efficiency.

| Feature | Traditional Architecture | Cloud Native Architecture |
|---|---|---|
| Application Structure | Monolithic: A single, large application codebase. | Microservices: Independent, small, and focused services. |
| Deployment | Manual or semi-automated deployments. | Automated deployments using CI/CD pipelines. |
| Scalability | Scaling typically involves scaling the entire application, which can be complex and resource-intensive. | Scalability is achieved by scaling individual microservices independently. |
| Technology Stack | Often tied to specific hardware and operating systems. | Cloud-agnostic, utilizing containerization and platform-independent technologies. |
| Resource Utilization | Often inefficient, with resources underutilized during periods of low demand. | Optimized resource utilization through dynamic scaling. |
| Development and Deployment Speed | Slower development cycles and infrequent releases. | Faster development cycles and frequent releases. |
| Fault Isolation | A failure in one part of the application can affect the entire system. | Faults are isolated to individual microservices, minimizing the impact on the overall application. |

Benefits of Adopting Cloud Native Architecture for Application Rebuilding

Rebuilding applications using cloud native principles offers significant advantages over traditional approaches. These benefits contribute to increased agility, efficiency, and cost savings.

- Improved Scalability and Elasticity: Cloud native applications can automatically scale up or down based on demand, ensuring optimal performance and resource utilization. This is especially crucial for applications with fluctuating workloads.

- Faster Time to Market: Microservices and CI/CD pipelines enable faster development cycles and more frequent releases, allowing businesses to respond quickly to market changes and customer needs.

- Increased Agility and Flexibility: Cloud native architectures provide greater flexibility in adapting to changing business requirements. Microservices can be independently updated and deployed, allowing for rapid iteration and innovation.

- Enhanced Resilience and Reliability: Cloud native applications are designed to be resilient to failures. Redundancy, automated failover, and self-healing capabilities ensure high availability and minimize downtime.

- Reduced Costs: Cloud native architectures can help reduce costs through efficient resource utilization, automated operations, and the use of pay-as-you-go cloud services.

- Improved Developer Productivity: Microservices allow developers to focus on specific business capabilities, leading to increased productivity and faster development cycles.

How Microservices Contribute to Cloud Native Design

Microservices are a fundamental building block of cloud native architectures. They provide the modularity and flexibility needed to build scalable and resilient applications.

- Independent Development and Deployment: Each microservice can be developed, deployed, and scaled independently of other services. This allows development teams to work on different parts of the application concurrently and release updates more frequently.

- Technology Diversity: Microservices can be built using different technologies and programming languages, allowing development teams to choose the best tools for each service.

- Fault Isolation: If one microservice fails, it does not necessarily bring down the entire application. This improves the overall resilience of the system.

- Scalability: Individual microservices can be scaled up or down based on demand, allowing for efficient resource utilization.

- Easier Maintenance: Smaller codebases are easier to understand, maintain, and debug. This reduces the time and effort required for maintenance and updates.

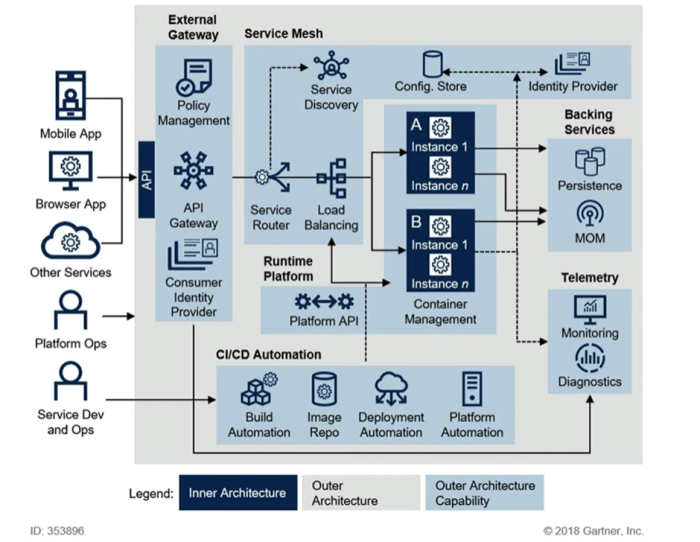

Essential Components of a Cloud Native Platform

A cloud native platform provides the infrastructure and services needed to build, deploy, and manage cloud native applications. This platform includes several essential components.

- Container Orchestration Platform: An orchestration platform such as Kubernetes deploys, scales, and manages containerized applications.

- Container Registry: A container registry, like Docker Hub or a private registry, stores and manages container images.

- CI/CD Pipeline: A CI/CD pipeline automates the build, test, and deployment processes. Tools like Jenkins, GitLab CI, or CircleCI are commonly used.

- Service Mesh: A service mesh, such as Istio or Linkerd, provides a dedicated infrastructure layer for managing service-to-service communication. It offers features like traffic management, security, and observability.

- Monitoring and Logging: Monitoring and logging tools, such as Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana), provide visibility into application performance and health.

- API Gateway: An API gateway manages API traffic, provides security, and handles routing and rate limiting.

- Configuration Management: Configuration management tools, such as Kubernetes ConfigMaps and Secrets, manage application configuration and secrets.

- Serverless Computing: Serverless computing platforms, like AWS Lambda or Google Cloud Functions, allow developers to run code without managing servers.
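Configuration management works best when the application reads its settings from the environment rather than from files baked into the image. Kubernetes ConfigMaps and Secrets can be exposed to a container as environment variables. The Python sketch below assumes that setup; the variable names (`APP_DATABASE_URL`, `APP_LOG_LEVEL`, `APP_MAX_CONNECTIONS`) and the `AppConfig` type are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    log_level: str
    max_connections: int


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build configuration from environment variables (names are hypothetical)."""
    try:
        # Required value, e.g. injected from a Kubernetes Secret.
        database_url = env["APP_DATABASE_URL"]
    except KeyError:
        raise RuntimeError("APP_DATABASE_URL is not set") from None
    return AppConfig(
        database_url=database_url,
        # Optional values fall back to defaults, e.g. when no ConfigMap entry exists.
        log_level=env.get("APP_LOG_LEVEL", "INFO"),
        max_connections=int(env.get("APP_MAX_CONNECTIONS", "10")),
    )
```

Accepting the environment as a parameter keeps the loader testable: a test can pass a plain dictionary instead of mutating the process environment, and the same image can run unchanged in every environment.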

Assessment of Existing Application for Rebuilding

The journey of rebuilding an application for cloud-native architecture necessitates a thorough evaluation of the existing application. This assessment serves as the cornerstone for informed decision-making, guiding the transformation strategy and mitigating potential risks. A meticulous analysis ensures that the chosen path aligns with business objectives and technical feasibility. This section details the critical steps involved in evaluating an application’s readiness for a cloud-native transformation.

Identifying the Current State of an Existing Application

A comprehensive understanding of the current application’s architecture, functionality, and operational characteristics is paramount. This involves a multi-faceted approach, combining technical analysis with business context.

- Architecture Review: Document the application’s architecture, including its components, their interdependencies, and communication protocols. This involves analyzing code, infrastructure diagrams, and existing documentation. Understanding the architectural patterns (e.g., monolithic, microservices) and technologies employed (e.g., programming languages, databases, frameworks) is crucial.

- Functional Analysis: Identify and document all functionalities provided by the application. This includes user interfaces, APIs, and background processes. Mapping user journeys and business processes helps to understand the application’s value proposition and identify critical functionalities that must be preserved during the rebuild.

- Performance Analysis: Evaluate the application’s performance characteristics, such as response times, throughput, and resource utilization. This involves analyzing performance metrics, logs, and monitoring data. Performance bottlenecks and areas for optimization should be identified. Load testing and stress testing can provide valuable insights.

- Security Assessment: Conduct a security audit to identify vulnerabilities and potential risks. This involves analyzing code for security flaws, assessing the application’s security posture, and identifying potential attack vectors. Compliance with relevant security standards and regulations should be verified.

- Operational Analysis: Assess the application’s operational characteristics, including deployment processes, monitoring, logging, and disaster recovery strategies. This involves analyzing existing infrastructure, automation tools, and operational procedures. Identifying areas for improvement in terms of automation, scalability, and resilience is essential.

- Cost Analysis: Determine the current costs associated with the application, including infrastructure, maintenance, and support. Understanding the cost structure helps to justify the investment in cloud-native transformation and identify potential cost savings.
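For the performance analysis, averages often hide the slow requests that users notice, so response times are usually summarized as percentiles. The sketch below computes p50, p95, and p99 using the nearest-rank definition. That is only one of several common percentile definitions, and the sample values in the usage line are made up.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, for p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


def latency_summary(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize response times (in milliseconds) as p50/p95/p99."""
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}


# Hypothetical response times: nine fast requests and one slow outlier.
print(latency_summary([12, 15, 11, 200, 14, 13, 16, 18, 17, 19]))
```

Here the median stays low while p95 and p99 expose the 200 ms outlier, which is the kind of bottleneck the analysis should surface.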

Methods to Evaluate an Application’s Suitability for Cloud Native Transformation

Evaluating an application’s suitability involves assessing its inherent characteristics and alignment with cloud-native principles. This assessment helps determine the optimal transformation strategy and the potential benefits.

- Monolith vs. Microservices Assessment: Evaluate the application’s architectural style. Monolithic applications are often challenging to migrate directly to cloud-native environments. Consider breaking down the monolith into microservices, where each service represents a specific business capability.

- Dependency Analysis: Analyze the application’s dependencies, including external libraries, frameworks, and third-party services. Identify dependencies that are incompatible with cloud-native environments or require significant modifications.

- State Management Assessment: Assess how the application manages state, including data persistence and session management. Cloud-native applications often favor statelessness to facilitate scalability and resilience. Evaluate the feasibility of refactoring stateful components to become stateless or leverage cloud-native state management services.

- Scalability and Resilience Evaluation: Determine the application’s ability to scale and handle failures. Cloud-native applications are designed for scalability and resilience. Assess the application’s current capabilities and identify areas for improvement.

- Automation and DevOps Readiness: Evaluate the application’s readiness for automation and DevOps practices. Cloud-native applications rely heavily on automation for deployment, monitoring, and management. Assess the application’s integration with existing DevOps tools and processes.

- Business Value Alignment: Assess the application’s alignment with business objectives and priorities. Identify functionalities that are critical to business operations and those that can be refactored or replaced.
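The state management assessment often comes down to one question: does a request handler rely on data held in the memory of the process that served the previous request? The Python sketch below shows the stateless alternative, in which session data lives in an external store shared by all replicas. `SessionStore`, `InMemorySessionStore`, and `add_to_cart` are hypothetical names. The in-memory store is only a test stand-in for a shared service such as Redis.

```python
from typing import Dict, List, Optional, Protocol


class SessionStore(Protocol):
    def get(self, session_id: str) -> Optional[dict]: ...
    def put(self, session_id: str, data: dict) -> None: ...


class InMemorySessionStore:
    """Test stand-in; in production this would wrap a shared external store."""

    def __init__(self) -> None:
        self._data: Dict[str, dict] = {}

    def get(self, session_id: str) -> Optional[dict]:
        return self._data.get(session_id)

    def put(self, session_id: str, data: dict) -> None:
        self._data[session_id] = dict(data)


def add_to_cart(store: SessionStore, session_id: str, item: str) -> List[str]:
    """Stateless handler: all session state is read from and written back to the store."""
    session = store.get(session_id) or {"cart": []}
    cart = session["cart"] + [item]
    store.put(session_id, {**session, "cart": cart})
    return cart
```

Because the handler keeps nothing between calls, any replica can serve the next request for the same session, which makes horizontal scaling and replica restarts safe.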

Key Challenges in Refactoring Legacy Applications

Refactoring legacy applications presents several challenges that require careful consideration and planning. These challenges can significantly impact the transformation process, potentially leading to delays and increased costs.

- Technical Debt: Legacy applications often accumulate significant technical debt, including outdated code, poor documentation, and complex dependencies. Addressing technical debt is a crucial but time-consuming process.

- Lack of Documentation: Legacy applications often lack comprehensive documentation, making it difficult to understand their architecture, functionality, and dependencies. Reverse engineering and code analysis may be required to fill the documentation gap.

- Complex Dependencies: Legacy applications often have complex and intertwined dependencies, making it difficult to isolate and refactor individual components. Dependency management becomes a critical challenge.

- Skill Gaps: Legacy applications may be built using outdated technologies and programming languages, requiring specialized skills that may be difficult to find or expensive to acquire. Training or outsourcing may be necessary.

- Testing Complexity: Legacy applications may have limited or outdated testing frameworks, making it difficult to ensure that refactoring efforts do not introduce regressions. Comprehensive testing is essential.

- Business Disruption: Refactoring legacy applications can disrupt business operations, particularly if the application is critical to business processes. Careful planning and execution are required to minimize downtime and impact.

- Cultural Resistance: Resistance to change can hinder the transformation process. Clear communication, stakeholder engagement, and change management are essential.

Checklist for Assessing Application Dependencies and Technical Debt

A comprehensive checklist helps to systematically assess application dependencies and technical debt, providing a structured approach to the evaluation process.

- Dependency Inventory: Create a detailed inventory of all application dependencies, including libraries, frameworks, and third-party services. Document the version, license, and compatibility of each dependency.

- Dependency Analysis: Analyze the dependencies to identify potential issues, such as security vulnerabilities, compatibility problems, and licensing conflicts.

- Code Quality Assessment: Evaluate the code quality using static analysis tools and code reviews. Identify code smells, violations of coding standards, and areas for improvement.

- Documentation Review: Review the existing documentation to assess its completeness and accuracy. Identify gaps in documentation and areas that need to be updated.

- Testing Coverage Analysis: Analyze the existing test coverage to identify areas that are not adequately tested. Implement new tests or update existing tests to improve coverage.

- Performance Testing: Conduct performance testing to identify performance bottlenecks and areas for optimization.

- Security Vulnerability Scan: Perform a security vulnerability scan to identify potential security flaws and risks.

- Technical Debt Tracking: Implement a system for tracking technical debt, including identifying the root causes, estimating the cost of remediation, and prioritizing the work.

- Build Process Analysis: Review the build process to identify areas for improvement, such as automation, speed, and reliability.

- Deployment Process Analysis: Review the deployment process to identify areas for improvement, such as automation, scalability, and resilience.
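The dependency inventory and analysis items on this checklist can be partly automated once the inventory is recorded as structured data. The sketch below is a hypothetical example of that idea. It flags unpinned versions, unknown licenses, and licenses outside an allowlist. The `Dependency` fields, the package names, and the allowlist are assumptions for illustration, and real vulnerability scanning would still require a dedicated tool.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Dependency:
    name: str
    version: Optional[str]  # exact pinned version, or None if unpinned
    license: Optional[str]  # SPDX-style identifier, or None if unknown


def audit(deps: Iterable[Dependency], allowed_licenses: Set[str]) -> List[str]:
    """Return human-readable findings for an inventory (policy is hypothetical)."""
    findings = []
    for dep in deps:
        if not dep.version:
            findings.append(f"{dep.name}: version not pinned")
        if dep.license is None:
            findings.append(f"{dep.name}: license unknown")
        elif dep.license not in allowed_licenses:
            findings.append(f"{dep.name}: license {dep.license} not on allowlist")
    return findings


# Hypothetical inventory entries.
inventory = [
    Dependency("http-client", "2.4.1", "Apache-2.0"),
    Dependency("legacy-xml", None, None),
    Dependency("pdf-report", "1.0.0", "GPL-3.0"),
]
for finding in audit(inventory, {"Apache-2.0", "MIT"}):
    print(finding)
```

The findings can then feed the technical debt tracking item, with each one recorded, estimated, and prioritized.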

Impact of Application Size and Complexity on the Rebuilding Process

The size and complexity of an application significantly impact the rebuilding process, influencing the effort, time, and resources required.

- Larger Applications: Larger applications typically require more time, resources, and expertise to rebuild. They often have more complex architectures, dependencies, and functionalities. The transformation may involve breaking down the application into smaller, more manageable components (microservices).

- Complex Applications: Complex applications, characterized by intricate business logic, numerous dependencies, and sophisticated functionalities, pose greater challenges. The transformation process may require a more detailed understanding of the application’s architecture and functionality.

- Monolithic Applications: Monolithic applications, regardless of size, often require significant refactoring to be adapted to cloud-native environments. Breaking down a monolith into microservices can be a complex and time-consuming process.

- Microservices Applications: Microservices applications, while potentially more complex to manage overall, can be rebuilt in a more iterative and modular fashion. Changes can be implemented and deployed to individual services without impacting the entire application.

- Project Management and Planning: The size and complexity of the application significantly impact project management and planning. Larger and more complex applications require more detailed planning, risk assessment, and change management strategies.

- Team Size and Expertise: The size and complexity of the application influence the required team size and expertise. Larger and more complex applications typically require a larger team with a broader range of skills.

Planning the Rebuilding Strategy

Planning the rebuilding strategy is a critical phase in migrating an application to a cloud-native architecture. This involves a meticulous approach that encompasses phased implementation, cloud provider selection, scope definition, risk assessment, and technology choices. A well-defined strategy minimizes disruption, ensures business continuity, and maximizes the benefits of the cloud-native approach.

A Phased Approach to Rebuilding the Application in a Cloud Native Manner

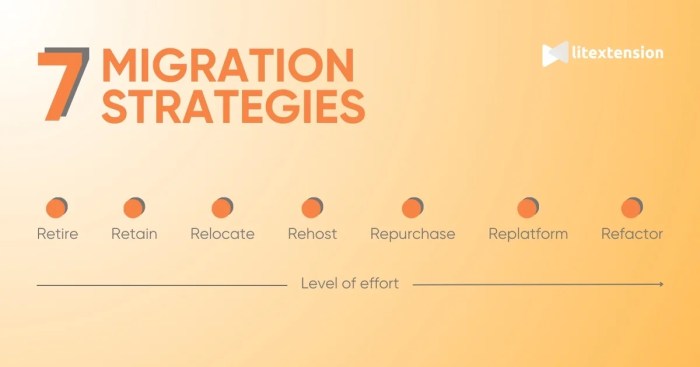

A phased approach, often utilizing an iterative methodology, allows for a controlled transition, mitigating risks and enabling learning throughout the process. This strategy typically involves breaking down the application into manageable components and rebuilding them incrementally.

- Phase 1: Assessment and Planning. This phase builds on the assessment described earlier by examining the application’s current state in more depth, mapping its dependencies, and prioritizing components for rebuilding. The output is a detailed roadmap, including timelines, resource allocation, and success metrics.

- Phase 2: Component-Based Rebuilding. Select specific components of the application to rebuild, focusing on those with the lowest dependencies and highest potential for cloud-native benefits. Implement these components using microservices architecture, containerization (e.g., Docker), and orchestration (e.g., Kubernetes). Consider starting with non-critical functionalities or features.

- Phase 3: Integration and Testing. Integrate the rebuilt components with existing functionalities. Conduct thorough testing, including unit tests, integration tests, and end-to-end tests, to ensure compatibility and performance. Implement continuous integration and continuous delivery (CI/CD) pipelines to automate the build, test, and deployment processes.

- Phase 4: Migration and Deployment. Deploy the rebuilt components to the cloud environment. Components that have not yet been rebuilt may initially be moved with a “lift and shift” approach and then optimized to use cloud services. Monitor performance and resource utilization to identify areas for improvement.

- Phase 5: Optimization and Iteration. Continuously monitor and optimize the cloud-native application. This includes scaling resources dynamically, refining the architecture, and incorporating new cloud services to improve performance, reduce costs, and enhance the user experience. Iterate through the phases as necessary, incorporating feedback and learnings.

Strategies for Choosing the Right Cloud Provider and Services

Choosing the right cloud provider and services is a crucial decision that impacts the application’s performance, scalability, and cost-effectiveness. The selection process should be based on a comprehensive evaluation of several factors.

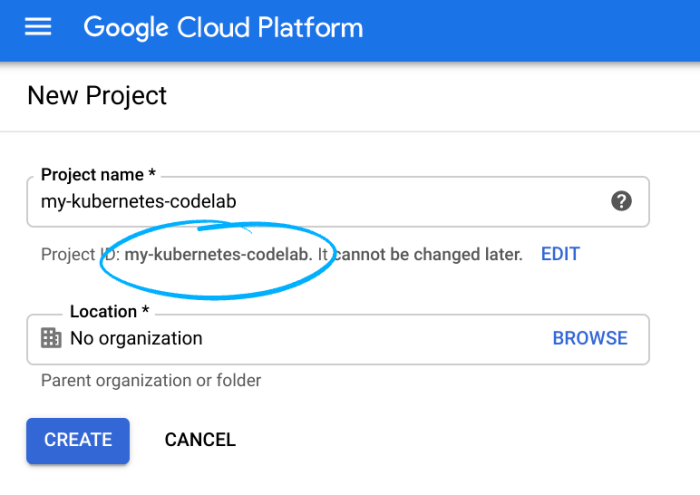

- Evaluate Provider Capabilities. Each cloud provider (e.g., AWS, Azure, Google Cloud) offers a range of services. Assess the specific services offered by each provider that align with the application’s requirements. Consider compute, storage, database, networking, and other specialized services (e.g., serverless functions, AI/ML).

- Assess Service Maturity and Reliability. Evaluate the maturity and reliability of the services offered by each provider. Consider factors such as uptime guarantees, service level agreements (SLAs), and the provider’s track record.

- Analyze Pricing Models. Cloud providers offer different pricing models (e.g., pay-as-you-go, reserved instances, spot instances). Analyze the pricing models and estimate the cost of running the application on each provider. Consider factors such as data transfer costs, storage costs, and compute costs.

- Consider Vendor Lock-in. Be mindful of vendor lock-in, which occurs when an application becomes tightly coupled with a specific provider’s services. Choose services that offer portability and avoid proprietary technologies that limit flexibility.

- Evaluate Security and Compliance. Assess the security and compliance certifications offered by each provider. Consider factors such as data encryption, access control, and compliance with industry regulations (e.g., HIPAA, GDPR).

- Consider Support and Documentation. Evaluate the support and documentation offered by each provider. Consider factors such as technical support, community support, and the availability of tutorials and documentation.
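The pricing analysis is easier to reason about as a small cost model in which usage and per-unit rates are kept separate. The Python sketch below assumes a deliberately simple model: compute hours, stored gigabytes, and outbound data transfer. The rates in the example are invented placeholders, not real provider prices. Real bills include many more line items, such as requests, IOPS, and tiered pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Per-unit prices. The example values below are placeholders, not real quotes."""
    compute_per_hour: float
    storage_per_gb_month: float
    egress_per_gb: float


@dataclass(frozen=True)
class Usage:
    instance_hours: float
    storage_gb: float
    egress_gb: float


def monthly_cost(usage: Usage, rates: Rates) -> float:
    """Estimated monthly cost under the simplified three-component model."""
    total = (usage.instance_hours * rates.compute_per_hour
             + usage.storage_gb * rates.storage_per_gb_month
             + usage.egress_gb * rates.egress_per_gb)
    return round(total, 2)


# Three always-on instances (about 730 hours each per month), 500 GB stored, 1 TB egress.
usage = Usage(instance_hours=3 * 730, storage_gb=500, egress_gb=1000)
provider_a = Rates(compute_per_hour=0.10, storage_per_gb_month=0.02, egress_per_gb=0.09)
provider_b = Rates(compute_per_hour=0.09, storage_per_gb_month=0.025, egress_per_gb=0.12)
print(monthly_cost(usage, provider_a), monthly_cost(usage, provider_b))
```

With these placeholder numbers, the provider with cheaper compute ends up more expensive overall because of its data transfer rate, which is why the pricing analysis should cover all cost components and not just compute.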

Defining the Scope and Goals of the Rebuilding Project

Defining the scope and goals of the rebuilding project is essential for aligning the project with business objectives and ensuring its success. This process requires clear communication and collaboration among stakeholders.

- Define Business Goals. Identify the key business goals for rebuilding the application. These could include improving scalability, reducing costs, increasing agility, enhancing security, and improving the user experience.

- Identify Key Features and Functionalities. Determine the essential features and functionalities of the application that must be included in the rebuilt version. Prioritize features based on their importance to the business and the complexity of rebuilding them.

- Define Scope Boundaries. Clearly define the boundaries of the rebuilding project. Determine which components of the application will be rebuilt and which will remain unchanged. This helps manage the project’s scope and avoid scope creep.

- Establish Success Metrics. Define measurable metrics to evaluate the success of the rebuilding project. These metrics could include performance indicators (e.g., response time, throughput), cost metrics (e.g., infrastructure costs), and business metrics (e.g., user engagement, revenue).

- Create a Project Plan. Develop a detailed project plan that outlines the tasks, timelines, resources, and dependencies required to complete the rebuilding project. This plan should be flexible enough to accommodate changes and unexpected challenges.

- Communicate and Collaborate. Communicate the project’s scope, goals, and progress to all stakeholders. Foster collaboration among development teams, operations teams, and business stakeholders to ensure alignment and transparency.
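Success metrics are only useful if each one has an explicit target and a direction, because for some metrics lower is better and for others higher is better. The sketch below checks measured values against such targets and reports unmet goals and missing measurements. The metric names, thresholds, and measured values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Target:
    threshold: float
    higher_is_better: bool


def unmet_goals(measured: Dict[str, float], targets: Dict[str, Target]) -> List[str]:
    """List every target that is missed or has no measurement yet."""
    failures = []
    for name, target in targets.items():
        value = measured.get(name)
        if value is None:
            failures.append(f"{name}: no measurement")
        elif target.higher_is_better and value < target.threshold:
            failures.append(f"{name}: {value} below target {target.threshold}")
        elif not target.higher_is_better and value > target.threshold:
            failures.append(f"{name}: {value} above target {target.threshold}")
    return failures


# Hypothetical targets agreed with stakeholders, and one round of measurements.
targets = {
    "p95_latency_ms": Target(300, higher_is_better=False),
    "throughput_rps": Target(500, higher_is_better=True),
    "monthly_infra_cost": Target(20000, higher_is_better=False),
}
print(unmet_goals({"p95_latency_ms": 280, "throughput_rps": 450}, targets))
```

Reporting missing measurements explicitly keeps a goal from counting as met simply because nobody is tracking it.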

A Risk Assessment Framework for the Rebuilding Process

A robust risk assessment framework is critical for identifying and mitigating potential risks throughout the rebuilding process. This framework should be integrated into the project plan and continuously updated.

- Identify Potential Risks. Identify potential risks associated with each phase of the rebuilding process. These risks could include technical risks (e.g., integration challenges, performance issues), business risks (e.g., delays, cost overruns), and organizational risks (e.g., lack of skills, communication issues).

- Assess Risk Likelihood and Impact. Evaluate the likelihood of each risk occurring and the potential impact if it does occur. Use a risk matrix to categorize risks based on their likelihood and impact.

- Develop Mitigation Strategies. Develop mitigation strategies for each identified risk. These strategies could include implementing contingency plans, diversifying technologies, and providing additional training.

- Monitor and Track Risks. Continuously monitor and track identified risks throughout the rebuilding process. Regularly review the risk assessment framework and update it as needed.

- Establish Communication Protocols. Establish clear communication protocols for reporting and escalating risks. Ensure that all stakeholders are aware of the risks and the mitigation strategies.
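A risk matrix is commonly implemented by rating each risk's likelihood and impact on a small scale, multiplying the two, and mapping the product to a band. The sketch below uses 1 to 5 scales. The band boundaries and example risks are assumptions for illustration, since teams normally calibrate their own.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Risk:
    description: str
    likelihood: int  # 1 (rare) .. 5 (almost certain)
    impact: int      # 1 (negligible) .. 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(score: int) -> str:
    # Band boundaries are an assumption; adjust them to the organization's risk appetite.
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


def prioritize(risks: List[Risk]) -> List[Tuple[str, int, str]]:
    """Validate ratings and return (description, score, rating), highest score first."""
    for r in risks:
        if not (1 <= r.likelihood <= 5 and 1 <= r.impact <= 5):
            raise ValueError(f"out-of-range rating for {r.description!r}")
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.description, r.score, rating(r.score)) for r in ranked]


# Hypothetical risks for a rebuild project.
register = [
    Risk("Cloud cost overrun", likelihood=2, impact=2),
    Risk("Integration with legacy billing fails", likelihood=4, impact=5),
    Risk("Team lacks Kubernetes experience", likelihood=3, impact=3),
]
for row in prioritize(register):
    print(row)
```

Rerunning the prioritization after each review keeps the register aligned with the monitoring and tracking step.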

The Importance of Selecting Appropriate Technologies and Tools

The selection of appropriate technologies and tools is crucial for the success of a cloud-native application rebuild. The right choices can streamline development, improve performance, and reduce operational overhead.

- Containerization Technologies. Use containerization technologies such as Docker to package and isolate application components. This enables consistent deployments across different environments and simplifies scaling.

- Orchestration Tools. Employ orchestration tools such as Kubernetes to manage and automate the deployment, scaling, and management of containerized applications.

- CI/CD Pipelines. Implement CI/CD pipelines using tools like Jenkins, GitLab CI, or CircleCI to automate the build, test, and deployment processes. This improves agility and reduces the risk of errors.

- Serverless Computing. Utilize serverless computing platforms (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) for event-driven and stateless functionalities. This can reduce infrastructure costs and improve scalability.

- Monitoring and Logging Tools. Implement comprehensive monitoring and logging tools (e.g., Prometheus, Grafana, ELK Stack) to track application performance, identify issues, and gain insights into user behavior.

- Infrastructure as Code (IaC). Adopt IaC practices using tools like Terraform or AWS CloudFormation to automate infrastructure provisioning and management. This promotes consistency and reduces manual errors.

- API Gateways. Use API gateways (e.g., AWS API Gateway, Azure API Management, Google Cloud API Gateway) to manage and secure APIs, and handle traffic routing.

Designing Microservices Architecture

Designing a microservices architecture is a critical step in rebuilding an application for a cloud-native environment. This involves breaking down a monolithic application into a collection of smaller, independent services that communicate with each other. This approach enhances scalability, resilience, and agility, enabling faster development cycles and easier deployments. The following sections detail the key aspects of designing a successful microservices architecture.

Decomposition of a Monolithic Application into Microservices

Decomposing a monolithic application requires careful analysis and strategic planning. The goal is to identify logical boundaries within the application that can be transformed into independent services. This process often involves a combination of business domain analysis and technical considerations.

- Domain-Driven Design (DDD): DDD is a software design approach that focuses on modeling software around a business domain. It’s a powerful tool for identifying service boundaries. By analyzing the business domain, you can identify distinct business capabilities and their associated data and processes. Each capability can then become a microservice. For example, in an e-commerce application, services might include “Product Catalog,” “Order Management,” “Payment Processing,” and “User Authentication.”

- Strangler Fig Pattern: This pattern involves gradually replacing parts of the monolith with microservices. New features are developed as microservices, and existing functionality is refactored into services over time. A routing layer in front of the system sends each request either to the monolith or to the service that now owns that functionality. This approach minimizes risk and allows for a controlled transition. The name comes from the strangler fig, which grows around a host tree until it replaces it: the monolith is the host tree, and the new services are the fig.

- Identifying Service Boundaries: Defining service boundaries is crucial. Services should be loosely coupled and highly cohesive. Loosely coupled means that changes in one service should not require changes in others. Highly cohesive means that a service should perform a specific, well-defined function. Consider the “Single Responsibility Principle” – each service should have one, and only one, reason to change.

- Data Ownership: Each microservice should own its own data. This principle is essential for autonomy and scalability. Data should not be shared directly between services. Instead, services should interact through APIs, and data consistency should be managed through appropriate mechanisms (discussed later).

- Technical Considerations: Consider factors like the existing technology stack, team expertise, and the desired level of agility. Some parts of the monolith might be easier to decompose than others. Starting with less complex areas and gradually tackling more challenging components is a good strategy.
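The routing layer behind the strangler fig pattern can be reduced to a prefix table: paths whose functionality has been migrated go to the new service, and everything else still goes to the monolith. The Python sketch below shows only the routing decision. A real deployment would typically put this logic in an API gateway or reverse proxy. The upstream URLs and path prefixes are hypothetical.

```python
from typing import Callable, Dict

MONOLITH = "http://monolith.internal"  # hypothetical upstream address


def make_router(migrated: Dict[str, str], fallback: str = MONOLITH) -> Callable[[str], str]:
    """Return a function mapping a request path to the upstream that should serve it."""
    # Check longer prefixes first so a more specific route such as
    # "/orders/returns" wins over a broader one such as "/orders".
    prefixes = sorted(migrated, key=len, reverse=True)

    def route(path: str) -> str:
        for prefix in prefixes:
            # Match whole path segments only, so "/catalog" does not match "/catalogue".
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                return migrated[prefix]
        return fallback  # not migrated yet: the monolith still handles it

    return route


route = make_router({
    "/catalog": "http://catalog-svc.internal",
    "/orders/returns": "http://returns-svc.internal",
})
print(route("/catalog/items/42"), route("/orders/7"))
```

Each migration step then becomes a one-line change to the prefix table, and rolling back means removing that entry.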

Effective Microservice Design Patterns

Microservice design patterns provide proven solutions for common challenges in microservice architecture. Implementing these patterns improves the design’s quality, maintainability, and performance.

- API Gateway: An API Gateway acts as a single entry point for all client requests. It handles routing, authentication, authorization, and other cross-cutting concerns. This pattern decouples the client from the underlying microservices and simplifies the client’s interaction with the system. The API Gateway can also perform request aggregation, where it combines data from multiple microservices before sending it to the client.

- Circuit Breaker: This pattern protects against cascading failures. A circuit breaker wraps calls to a remote service and counts failures. After a configured number of failures, the circuit “opens,” and further calls fail immediately instead of reaching the failing service, giving it time to recover. While the circuit is open, the caller can return a default response or redirect to a fallback service. After a timeout, the breaker lets a trial request through (the “half-open” state) and closes the circuit again if that request succeeds. This prevents the failure of one service from bringing down the entire system.

- Service Discovery: Microservices are dynamic; their locations (IP addresses and ports) can change frequently. Service discovery enables services to locate each other automatically. A service registers its location with a service registry (e.g., Consul, etcd, or Kubernetes). Other services can then query the registry to find the location of a specific service.

- Bulkhead: The Bulkhead pattern isolates different parts of an application so that a failure in one part does not impact the others. This can be achieved by limiting the number of concurrent requests to a service or by allocating a fixed number of resources (e.g., threads, memory) to a service. If a service becomes overloaded, the bulkhead prevents it from consuming resources from other services.

- CQRS (Command Query Responsibility Segregation): This pattern separates read and write operations. Commands (writes) are handled by one set of services, while queries (reads) are handled by another. This can improve performance and scalability, particularly in applications with a high read-to-write ratio. It also allows for optimizing the data model for reads and writes separately.
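To make the circuit breaker's state transitions concrete, here is a minimal, single-threaded sketch in Python. The class and parameter names (`CircuitBreaker`, `failure_threshold`, `reset_timeout`) are illustrative assumptions rather than any particular library's API; production systems would usually rely on a resilience library or a service mesh instead. An injectable clock keeps the demo deterministic.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Minimal closed / open / half-open circuit breaker (single-threaded sketch)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # timeout elapsed: allow a trial request
        return "open"

    def call(self, fn: Callable[[], T], fallback: Optional[Callable[[], T]] = None) -> T:
        if self.state == "open":
            # Fail fast without sending traffic to the struggling service.
            if fallback is not None:
                return fallback()
            raise CircuitOpenError("circuit is open")
        was_half_open = self.state == "half-open"
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures while closed, (re)opens the circuit.
            if was_half_open or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result


# Demo with a fake clock so the timeline is deterministic.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])

def payment_service() -> str:  # hypothetical failing dependency
    raise ConnectionError("payment service unavailable")

for _ in range(2):
    try:
        breaker.call(payment_service)
    except ConnectionError:
        pass

print(breaker.state)                                             # open
print(breaker.call(payment_service, fallback=lambda: "queued"))  # queued
now[0] = 10.0
print(breaker.state)                                             # half-open
```

A successful call in the half-open state closes the circuit again; a failed one reopens it and restarts the timeout.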

Communication Strategies Between Microservices

Choosing the right communication strategy is crucial for performance, scalability, and maintainability. Microservices can communicate using synchronous or asynchronous methods.

- REST (Representational State Transfer): REST is a widely used architectural style for building web services. Microservices communicate using HTTP methods (GET, POST, PUT, DELETE) to interact with resources. REST is simple, well-understood, and easy to implement. However, synchronous REST calls can introduce dependencies between services, potentially leading to cascading failures.

- gRPC (gRPC Remote Procedure Calls): gRPC is a high-performance, open-source RPC framework originally developed by Google. It uses Protocol Buffers for data serialization and HTTP/2 for transport. Thanks to its compact binary format and HTTP/2 multiplexing, gRPC is typically more efficient than JSON-based REST, especially for high-volume communication. It is well suited to internal service-to-service communication where performance is critical.

- Message Queues (e.g., Kafka, RabbitMQ): Message queues enable asynchronous communication. Services publish messages to a queue, and other services subscribe to the queue to consume the messages. This approach decouples services and improves resilience. If a service is temporarily unavailable, the messages remain in the queue until the service is ready to process them. Message queues are well-suited for handling events, background tasks, and high-volume data streams.

- Event-Driven Architecture: This is a design pattern where services communicate through events. When an event occurs (e.g., an order is placed), a service publishes an event to an event bus. Other services that are interested in the event subscribe to the event bus and react accordingly. This promotes loose coupling and scalability.
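The publish/subscribe idea behind message queues and event-driven architecture can be sketched with a toy in-memory event bus. This is only an illustration under simplifying assumptions: delivery here is synchronous and in-process, whereas brokers such as Kafka or RabbitMQ deliver asynchronously and durably. The class name and the `OrderCreated` consumers are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class InMemoryEventBus:
    """Toy publish/subscribe bus standing in for a real message broker."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Event) -> None:
        # The publisher does not know who (if anyone) consumes the event.
        for handler in self._subscribers[event_type]:
            handler(payload)


# Hypothetical inventory and payment services reacting to the same event.
reserved: List[str] = []
charged: List[str] = []

bus = InMemoryEventBus()
bus.subscribe("OrderCreated", lambda e: reserved.append(e["order_id"]))  # inventory
bus.subscribe("OrderCreated", lambda e: charged.append(e["order_id"]))   # payment

bus.publish("OrderCreated", {"order_id": "o-123", "amount": 42.0})
print(reserved, charged)  # ['o-123'] ['o-123']
```

Adding a new consumer only requires another `subscribe` call; the order-publishing code does not change, which is the loose coupling the pattern aims for.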

Managing Data Consistency in a Microservices Environment

Maintaining data consistency across multiple microservices is a significant challenge. Several strategies can be employed to address this issue, balancing consistency with availability and performance.

- Eventual Consistency: This approach prioritizes availability and performance over immediate consistency. When data is updated in one service, the changes are eventually propagated to other services. This is often achieved using message queues or event buses. For example, when an order is placed, the “Order Service” might publish an “OrderCreated” event. The “Inventory Service” and the “Payment Service” would subscribe to this event and update their respective data.

- Saga Pattern: The Saga pattern manages distributed transactions. A Saga is a sequence of local transactions, where each transaction updates data within a single service. If a transaction fails, the Saga executes compensating transactions to undo the changes. Sagas can be implemented using choreography (where services communicate directly with each other) or orchestration (where a central orchestrator manages the transactions).

- Two-Phase Commit (2PC): While technically possible, 2PC is generally discouraged in microservices environments due to its potential impact on availability and scalability. 2PC requires all participating services to agree on a transaction’s outcome, which can lead to blocking and reduced performance.

- Idempotency: Ensuring that operations are idempotent (can be executed multiple times without changing the result beyond the first execution) is crucial. This prevents data corruption in case of network failures or retries. Each service must be designed to handle duplicate messages or requests without causing unintended side effects.

- Data Replication: Replicating data across multiple services can improve availability and performance. For example, a read-only replica of a service’s data can be used by other services to avoid cross-service calls. This must be balanced with the overhead of maintaining data synchronization.
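An orchestrated Saga can be sketched as a list of local steps, each paired with a compensating action. This minimal Python version assumes each action and compensation is a plain callable (the step names are hypothetical); a real orchestrator would also persist its progress and retry compensations, which is why compensations should be idempotent.

```python
from typing import Callable, List, Tuple

# (step name, local transaction, compensating transaction)
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run local transactions in order; on failure, compensate completed ones in reverse."""
    log: List[str] = []
    completed: List[Tuple[str, Callable[[], None]]] = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception:
            log.append(f"failed:{name}")
            # Undo already-committed steps, most recent first.
            for done_name, undo in reversed(completed):
                undo()
                log.append(f"compensated:{done_name}")
            return False, log
        log.append(f"done:{name}")
        completed.append((name, compensate))
    return True, log


def charge_card() -> None:  # hypothetical payment step that fails
    raise RuntimeError("card declined")


ok, log = run_saga([
    ("create_order", lambda: None, lambda: None),
    ("reserve_stock", lambda: None, lambda: None),
    ("charge_payment", charge_card, lambda: None),
])
print(ok, log)
# False ['done:create_order', 'done:reserve_stock', 'failed:charge_payment',
#        'compensated:reserve_stock', 'compensated:create_order']
```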

Defining Service Boundaries and Responsibilities

Carefully defining service boundaries and responsibilities is essential for a well-designed microservices architecture. This process influences the architecture’s maintainability, scalability, and overall success.

- Business Capabilities: Services should be aligned with core business capabilities. Each service should encapsulate a specific business function or set of related functions. This ensures that the services are aligned with the business’s needs and are easy to understand and maintain.

- Bounded Contexts: Employing Domain-Driven Design (DDD) helps to define bounded contexts. A bounded context represents a specific area of the business and defines the terms and concepts used within that area. Each service should be aligned with a bounded context, ensuring that the service’s data and logic are relevant to that context.

- Service Size: Services should be “small” but not “too small.” The optimal size depends on the specific application, but services should generally be focused and perform a limited set of functions. Small services are easier to develop, deploy, and maintain. However, too many small services can increase the complexity of the architecture.

- Data Ownership: Each service should own its data and be responsible for managing it. Services should not directly access data owned by other services. This promotes loose coupling and data autonomy.

- Team Structure: The organization of the development teams should align with the service boundaries. Each service should ideally be owned and managed by a dedicated team. This allows teams to work independently and reduces the risk of dependencies and bottlenecks.

Containerization and Orchestration

Cloud native applications leverage containerization and orchestration to achieve portability, scalability, and resilience. This section explores the critical role of containers, the process of containerizing application components, the utilization of orchestration tools, and the implementation of CI/CD pipelines for automated deployments.

Role of Containers in Cloud Native Applications

Containers, such as those created using Docker, are isolated environments that package an application and all its dependencies, ensuring consistent execution across different environments. They offer a lightweight and efficient way to package, distribute, and run applications.

- Containers provide consistency. Applications behave the same regardless of the underlying infrastructure, as all dependencies are included within the container.

- Containers enhance portability. They can run on any infrastructure that supports the container runtime, allowing for seamless movement between development, testing, and production environments.

- Containers improve resource utilization. They share the host operating system’s kernel, making them more efficient than virtual machines, which require a full operating system for each instance.

- Containers facilitate scalability. Orchestration tools can easily scale containerized applications by creating and managing multiple container instances based on demand.

- Containers simplify deployments. Updates and rollbacks are simplified by deploying new container images or reverting to previous versions.

Containerizing Application Components

Containerizing application components involves creating Dockerfiles that define the instructions for building container images. These images are then used to create running container instances. The process typically includes these steps:

- Choosing a Base Image: Select a suitable base image from a public or private registry. This image should include the necessary operating system and base dependencies. For example, a Java application might use a base image like `openjdk:17-jdk-slim`.

- Defining Dependencies: Include instructions in the Dockerfile to install application dependencies. This might involve using package managers like `apt-get` (for Debian-based systems) or `yum` (for Red Hat-based systems).

- Copying Application Code: Copy the application source code into the container’s file system. This is typically done using the `COPY` instruction.

- Exposing Ports: Define the ports that the application will listen on using the `EXPOSE` instruction. This informs the container runtime about the application’s network requirements.

- Setting the Entrypoint and Command: Specify the command that will be executed when the container starts. The `ENTRYPOINT` and `CMD` instructions are used for this purpose. The `ENTRYPOINT` is generally used to define the executable, and `CMD` provides default arguments.

- Building the Image: Use the `docker build` command to create the container image from the Dockerfile. This process includes fetching the base image, executing the instructions, and creating a layered file system.

- Testing the Image: Run the container image locally to verify that the application functions correctly.

- Pushing the Image to a Registry: Store the image in a container registry (e.g., Docker Hub, Amazon ECR, Google Container Registry) to make it accessible for deployment.

For example, a simplified Dockerfile for a Python web application might look like this:

```dockerfile
FROM python:3.9-slim-buster
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 8000
CMD ["python", "app.py"]
```

This Dockerfile specifies the base image, sets the working directory, installs dependencies from `requirements.txt`, copies the application code, exposes port 8000, and runs the `app.py` script.
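The Dockerfile runs an `app.py` whose contents are not shown. As a hedged illustration of what such a file could contain, here is a minimal standard-library WSGI app; everything in it, including the `/healthz` path, is an assumption. Because it uses only the standard library, `requirements.txt` could be empty. A lightweight health endpoint like this is also convenient for the container health checks discussed later.

```python
import json
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """WSGI application: a health endpoint plus a trivial root response."""
    path = environ.get("PATH_INFO", "/")
    if path == "/healthz":
        status, body = "200 OK", {"status": "ok"}
    elif path == "/":
        status, body = "200 OK", {"message": "hello from the container"}
    else:
        status, body = "404 Not Found", {"error": "not found"}
    payload = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


if __name__ == "__main__":
    # Bind to 0.0.0.0 so the server is reachable from outside the container;
    # port 8000 matches the EXPOSE instruction in the Dockerfile.
    with make_server("0.0.0.0", 8000, app) as server:
        server.serve_forever()
```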

Use of Container Orchestration Tools

Container orchestration tools, such as Kubernetes, automate the deployment, scaling, and management of containerized applications. They provide a declarative approach to managing containerized workloads.

- Deployment and Management: Orchestration tools handle the deployment of containerized applications across a cluster of nodes.

- Scaling: They automatically scale applications up or down based on resource utilization or other metrics.

- Service Discovery: They provide mechanisms for containers to discover and communicate with each other.

- Load Balancing: They distribute traffic across multiple container instances to ensure high availability and performance.

- Health Checks: They monitor the health of containers and automatically restart unhealthy instances.

- Rolling Updates: They facilitate rolling updates, which allow applications to be updated without downtime.

- Resource Management: They allocate resources (CPU, memory) to containers.

Kubernetes, for example, uses a declarative configuration model. Developers define the desired state of their applications in YAML or JSON files. Kubernetes then works to achieve and maintain that state. Key Kubernetes components include:

- Pods: The smallest deployable units in Kubernetes, representing one or more containers that share resources.

- Deployments: Declarative definitions of how to deploy and update applications.

- Services: Abstractions that expose applications running in pods to the network.

- Nodes: Worker machines in the Kubernetes cluster.

- Namespaces: Virtual clusters within a Kubernetes cluster, used for isolating resources.
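The declarative model works because a controller repeatedly compares the desired state with the observed state and acts on the difference. The function below is a deliberately simplified, hypothetical illustration of that reconciliation idea for replica counts; it is not how the Kubernetes Deployment controller is actually implemented.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """Return the actions needed to move the observed state toward the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        return ["create-pod"] * diff
    if diff < 0:
        # Remove surplus pods (here simply the last ones in the list).
        return [f"delete-pod:{name}" for name in running_pods[desired_replicas:]]
    return []  # already converged: nothing to do


print(reconcile(3, ["my-app-a"]))                                      # ['create-pod', 'create-pod']
print(reconcile(3, ["my-app-a", "my-app-b", "my-app-c", "my-app-d"]))  # ['delete-pod:my-app-d']
print(reconcile(3, ["my-app-a", "my-app-b", "my-app-c"]))              # []
```

Because the controller keeps running this comparison, a crashed pod simply shows up as a difference on the next pass and gets replaced.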

Managing Container Deployments and Scaling

Container orchestration tools provide mechanisms for managing container deployments and scaling. This includes deploying new versions of applications, scaling applications based on demand, and ensuring high availability.

- Deployment Strategies: Kubernetes supports various deployment strategies, including rolling updates, blue/green deployments, and canary deployments. Rolling updates provide zero-downtime updates by gradually replacing old pods with new ones.

- Scaling Applications: Kubernetes can automatically scale applications based on resource utilization metrics, such as CPU or memory usage. This is achieved through Horizontal Pod Autoscalers (HPAs).

- Service Discovery and Load Balancing: Kubernetes Services provide a stable IP address and DNS name for accessing applications. Load balancing is handled internally, distributing traffic across multiple pod instances.

- Health Checks and Monitoring: Kubernetes performs health checks on pods and automatically restarts unhealthy containers. Monitoring tools, such as Prometheus and Grafana, provide insights into application performance and resource utilization.

- Configuration Management: Kubernetes supports storing and managing application configuration using ConfigMaps and Secrets. This allows applications to be configured without modifying container images.
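The Horizontal Pod Autoscaler's core calculation, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that formula plus min/max clamping; the real controller also handles missing metrics, pod readiness, and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule (no stabilization window or readiness handling)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Target: 60% average CPU utilization.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5 (scale out)
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2 (scale in)
print(desired_replicas(3, current_metric=63, target_metric=60))  # 3 (within tolerance)
```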

Example: A Kubernetes Deployment file (YAML):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app:latest
          ports:
            - containerPort: 8080
```

This deployment file defines a deployment named `my-app-deployment` that runs three replicas of a containerized application. The container image is specified as `my-app:latest`, and it exposes port 8080.

Setting Up CI/CD Pipelines

CI/CD pipelines automate the process of building, testing, and deploying applications. They enable frequent and reliable releases. The key steps in setting up a CI/CD pipeline are:

- Version Control: Use a version control system (e.g., Git) to manage the application source code.

- Continuous Integration (CI): Automate the process of building, testing, and integrating code changes. CI servers, such as Jenkins, GitLab CI, or GitHub Actions, are used to run automated builds and tests whenever code changes are pushed to the repository.

- Container Image Creation: Integrate the CI process with container image creation. The CI pipeline builds the container image based on the Dockerfile and pushes it to a container registry.

- Automated Testing: Implement automated tests, including unit tests, integration tests, and end-to-end tests. These tests are run as part of the CI process to ensure code quality.

- Continuous Delivery (CD): Automate the process of deploying the application to different environments (e.g., development, staging, production). CD pipelines use tools like Kubernetes Deployments or Helm to deploy containerized applications.

- Infrastructure as Code (IaC): Use IaC tools (e.g., Terraform, Ansible) to define and manage the infrastructure required for the application. This ensures consistency and repeatability.

- Monitoring and Alerting: Implement monitoring and alerting to track application performance and identify issues. This includes collecting metrics, setting up dashboards, and configuring alerts.

- Rollback Strategy: Define a rollback strategy to revert to a previous version of the application in case of deployment failures. This can involve using Kubernetes rollbacks or deploying previous container image versions.
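A rollback strategy boils down to remembering which image version was last known to be good. In Kubernetes itself, `kubectl rollout undo deployment/my-app-deployment` reverts a Deployment to its previous revision. The hypothetical `DeploymentHistory` helper below only illustrates the bookkeeping a pipeline might do around a post-deployment health check; the health-check results are made up for the demo.

```python
from typing import Callable, List


class DeploymentHistory:
    """Tracks deployed image tags so a failed release can be reverted (hypothetical helper)."""

    def __init__(self) -> None:
        self.revisions: List[str] = []

    @property
    def current(self) -> str:
        return self.revisions[-1]

    def deploy(self, image: str, health_check: Callable[[str], bool]) -> str:
        self.revisions.append(image)
        if not health_check(image) and len(self.revisions) > 1:
            # Roll back: discard the failed revision and return to the previous image.
            self.revisions.pop()
        return self.current


healthy = {"my-app:1.0": True, "my-app:1.1": False}  # hypothetical smoke-test results
history = DeploymentHistory()
history.deploy("my-app:1.0", healthy.get)
print(history.deploy("my-app:1.1", healthy.get))  # my-app:1.0 (1.1 failed and was rolled back)
```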

Example: A simplified CI/CD pipeline using GitHub Actions:

```yaml
name: CI/CD Pipeline

on:
  push:
    branches:
      - main

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      # Log in before pushing; credentials come from repository secrets.
      - name: Log in to Docker Hub
        uses: docker/login-action@v2
        with:
          registry: docker.io
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}

      # Docker Hub images need a namespace (account) prefix.
      - name: Build and Push Docker Image
        uses: docker/build-push-action@v3
        with:
          context: .
          push: true
          tags: |
            ${{ secrets.DOCKER_USERNAME }}/my-app:latest
            ${{ secrets.DOCKER_USERNAME }}/my-app:${{ github.sha }}

  deploy:
    runs-on: ubuntu-latest
    needs: build
    steps:
      - uses: actions/checkout@v3

      - name: Set up kubectl
        uses: azure/setup-kubectl@v3
        with:
          version: 'latest'

      # Assumes cluster credentials (e.g., a kubeconfig) are already configured for this job.
      - name: Deploy to Kubernetes
        run: |
          kubectl apply -f deployment.yaml
```

This example shows a GitHub Actions workflow that builds and pushes a Docker image upon code changes to the `main` branch and then deploys it to Kubernetes.

Implementing API Gateways and Service Mesh

In a cloud-native architecture, the communication between microservices and external clients, as well as the internal communication between microservices themselves, necessitates robust and efficient management. This is achieved through the strategic implementation of API gateways and service meshes. These components address crucial aspects such as routing, security, observability, and traffic management, ensuring the overall health and scalability of the application.

Function of API Gateways in Microservices Architecture

API gateways serve as the single entry point for all client requests, abstracting the complexities of the underlying microservices architecture. They act as a reverse proxy, directing incoming requests to the appropriate microservices based on defined routing rules. This abstraction provides several benefits.

- Request Routing: API gateways intelligently route client requests to the correct microservices based on the URL, headers, or other criteria. This enables a clean separation between the client and the internal service structure.

- Authentication and Authorization: API gateways handle authentication and authorization, verifying client identities and permissions before allowing access to the microservices. This centralizes security enforcement.

- Rate Limiting and Throttling: To prevent abuse and ensure fair usage, API gateways implement rate limiting and throttling mechanisms, controlling the number of requests allowed within a specific time frame.

- Monitoring and Logging: API gateways collect metrics and logs for all API traffic, providing valuable insights into performance, usage patterns, and potential issues.

- Request Transformation: API gateways can transform requests and responses, adapting them to meet the specific needs of the microservices or the client. This includes protocol translation and data format conversion.
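The routing and authentication duties above can be illustrated with a small Python function that an API gateway might conceptually run for each request. The routing table, service addresses, header name, and API key are all hypothetical; real gateways such as Kong or AWS API Gateway express this as configuration rather than code, and also treat header names case-insensitively, which this sketch does not.

```python
from typing import Dict, Optional, Tuple

# Hypothetical routing table: public path prefix -> internal service address.
ROUTES: Dict[str, str] = {
    "/api/products": "http://product-catalog:8080",
    "/api/orders": "http://order-management:8080",
    "/api/orders/payments": "http://payment-processing:8080",
}
# Hypothetical API keys issued to known clients.
API_KEYS: Dict[str, str] = {"demo-key-123": "mobile-app"}


def route(path: str, headers: Dict[str, str]) -> Tuple[int, Optional[str]]:
    """Return (HTTP status, upstream URL) for an incoming request."""
    client = API_KEYS.get(headers.get("X-API-Key", ""))
    if client is None:
        return 401, None  # authentication is enforced once, at the edge
    # Longest matching prefix wins, so more specific routes take precedence.
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    if not matches:
        return 404, None
    prefix = max(matches, key=len)
    return 200, ROUTES[prefix] + path[len(prefix):]


print(route("/api/orders/payments/42", {"X-API-Key": "demo-key-123"}))
# (200, 'http://payment-processing:8080/42')
print(route("/api/products", {}))  # (401, None)
```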

Selecting and Configuring an API Gateway

The selection of an API gateway depends on the specific requirements of the application, considering factors such as performance, scalability, feature set, and ease of use. Popular choices include open-source solutions like Kong and Tyk, as well as cloud-managed services like AWS API Gateway, Azure API Management, and Google Cloud API Gateway.

Configuring an API gateway typically involves the following steps:

- Defining API Endpoints: The first step is to define the API endpoints that the gateway will expose. This involves specifying the URL paths, HTTP methods (GET, POST, PUT, DELETE, etc.), and any required parameters.

- Routing Configuration: Next, configure the routing rules to direct incoming requests to the appropriate backend microservices. This involves mapping URL paths to the service endpoints.

- Authentication and Authorization Setup: Implement authentication and authorization mechanisms, such as API keys, OAuth 2.0, or JWT (JSON Web Tokens), to secure the APIs.

- Rate Limiting and Throttling Implementation: Configure rate limiting and throttling policies to protect the microservices from overload.

- Monitoring and Logging Integration: Integrate the API gateway with monitoring and logging tools to track API usage, performance, and errors.

For example, when using AWS API Gateway, the process involves creating an API, defining resources and methods, integrating with backend services (e.g., Lambda functions, EC2 instances, or other APIs), configuring authorization (e.g., using API keys or OAuth), and setting up monitoring and logging using CloudWatch.
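Rate limiting, one of the configuration steps listed above, is commonly implemented with a token bucket: each client has a bucket that refills at a steady rate and allows short bursts up to its capacity. The Python sketch below is a generic, single-process illustration with an injectable clock; managed gateways implement this for you, and a distributed gateway would keep the counters in shared storage.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically respond with HTTP 429 Too Many Requests


now = [0.0]
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: now[0])
print([bucket.allow() for _ in range(3)])  # [True, True, False]
now[0] = 1.0
print(bucket.allow())                      # True (one token refilled)
```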

Benefits of Using a Service Mesh

A service mesh provides a dedicated infrastructure layer for managing service-to-service communication. It offers several benefits, enhancing the reliability, security, and observability of microservices applications.

- Service Discovery: Automatically discovers and tracks the location of service instances, simplifying service communication.

- Traffic Management: Enables advanced traffic control features, such as traffic splitting, A/B testing, and canary deployments.

- Security: Provides built-in security features, including mutual TLS (mTLS) for secure service-to-service communication and centralized policy enforcement.

- Observability: Offers comprehensive monitoring and tracing capabilities, providing insights into service performance and dependencies.

- Resilience: Implements features like circuit breaking, retries, and timeouts to improve the resilience of the application.

Popular service mesh implementations include Istio and Linkerd. Istio is a feature-rich and widely adopted service mesh that offers a comprehensive set of capabilities. Linkerd, on the other hand, is known for its simplicity and ease of use.
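A service mesh typically applies retries and timeouts in its sidecar proxies, so application code does not have to. To show the underlying logic, here is a hedged Python sketch of retries with exponential backoff and full jitter. The function name and defaults are assumptions. Retries should only be applied to idempotent operations, and per-attempt timeouts are left to the client library and are not shown.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.1,
                      retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient failures with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Full jitter: wait a random time in [0, base_delay * 2**attempt].
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise RuntimeError("unreachable")


attempts_seen = []

def flaky_inventory_call() -> str:  # hypothetical dependency: fails twice, then recovers
    attempts_seen.append(1)
    if len(attempts_seen) < 3:
        raise ConnectionError("transient network error")
    return "in stock"

print(call_with_retries(flaky_inventory_call, attempts=3, sleep=lambda _: None))  # in stock
```

Errors outside `retry_on`, such as a validation error, propagate immediately because retrying them would not help.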

Implementing Service Discovery and Traffic Management

Service discovery and traffic management are fundamental aspects of a service mesh. They enable services to locate each other and control how traffic flows between them.

To implement service discovery:

- Service Registration: Each service instance registers itself with the service mesh, providing information about its location (IP address, port).

- Service Lookup: When a service needs to communicate with another service, it queries the service mesh to discover the location of the target service instance.

Traffic management involves controlling how traffic is routed between services. This includes features such as:

- Traffic Splitting: Distributing traffic across different versions of a service (e.g., for A/B testing or canary deployments).

- Request Routing: Routing requests based on various criteria, such as headers, user agents, or source IP addresses.

- Fault Injection: Introducing controlled failures to test the resilience of the application.

For instance, in Istio, service discovery is managed by its control plane, which automatically detects service instances running within the mesh. Traffic management is configured using Istio’s configuration language (e.g., using `VirtualService` and `DestinationRule` resources) to define routing rules, traffic splitting policies, and fault injection scenarios.
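Traffic splitting for a canary release amounts to sending a configured share of requests to each version. A mesh typically applies such weights per request; the Python sketch below instead hashes a key such as a user ID so that each user consistently sees the same version, which is one common variation. The function name, weights, and user IDs are illustrative assumptions.

```python
import hashlib
from typing import Dict


def pick_version(request_key: str, weights: Dict[str, int]) -> str:
    """Map a request key (e.g. a user ID) to a service version according to integer weights.

    Hashing the key keeps each user on the same version across requests.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    bucket = int(hashlib.sha256(request_key.encode("utf-8")).hexdigest(), 16) % total
    for version, weight in sorted(weights.items()):
        if bucket < weight:
            return version
        bucket -= weight
    raise RuntimeError("unreachable")


weights = {"v1": 90, "v2": 10}  # 90% stable, 10% canary
counts = {"v1": 0, "v2": 0}
for i in range(10_000):
    counts[pick_version(f"user-{i}", weights)] += 1
print(counts)  # roughly 9000 / 1000
```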

Best Practices for API Security and Authentication

API security and authentication are paramount for protecting microservices and sensitive data. Following best practices is essential.

- Authentication Methods: Choose appropriate authentication methods based on the application’s requirements. Options include:

  - API Keys: Simple and widely used for identifying and authenticating clients.

  - OAuth 2.0: Industry-standard protocol for delegated authorization, enabling clients to access protected resources on behalf of a user.

  - JWT (JSON Web Tokens): Compact, self-contained, signed tokens that carry claims about the user and can be used for authentication and authorization. A signed JWT protects integrity, not confidentiality: its payload is only Base64URL-encoded, so it should not carry secrets.

- Authorization Policies: Implement granular authorization policies to control which users or clients can access specific resources and perform specific actions.

- Input Validation: Validate all incoming data to prevent injection attacks and ensure data integrity.

- Rate Limiting and Throttling: Implement rate limiting and throttling to protect APIs from abuse and denial-of-service attacks.

- Encryption: Use HTTPS to encrypt all API traffic and protect data in transit.

- Regular Security Audits: Conduct regular security audits to identify and address vulnerabilities.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track API usage, detect suspicious activity, and troubleshoot security incidents.

For example, consider a microservices architecture for an e-commerce platform. To secure the APIs, you might use OAuth 2.0 for authenticating users accessing the storefront APIs, API keys for authenticating third-party integrations, and JWTs for securely passing user information between microservices. Rate limiting would be applied to prevent abuse, and comprehensive logging would be used to monitor API access and detect potential security threats.

Data Management in Cloud Native Applications

Data management is a critical aspect of cloud native application development, as it directly impacts application performance, scalability, and reliability. Cloud native architectures, characterized by their distributed nature, demand specific strategies to handle data effectively. The shift from monolithic applications to microservices necessitates a thoughtful approach to data storage, access, and consistency. This section delves into the key considerations and best practices for managing data in a cloud native environment.

Strategies for Managing Data in a Distributed Environment

Managing data in a distributed environment presents unique challenges, primarily related to data consistency, availability, and performance. Several strategies are employed to address these challenges.

- Data Partitioning (Sharding): Data is divided into smaller, more manageable chunks (shards) and distributed across multiple database instances. This improves scalability by distributing the load and allows for horizontal scaling. Sharding strategies include range-based, hash-based, and directory-based partitioning. For example, in a retail application, customer data might be sharded based on customer ID ranges.

- Data Replication: Copies of the data are maintained across multiple database instances to enhance availability and provide read scalability. Common configurations are single-primary replication (historically called master-slave), where one node accepts writes and replicas serve reads, and multi-primary replication (master-master), where several nodes accept writes and conflicting updates must be resolved. For instance, a read-heavy application can replicate data to multiple read replicas to handle increased traffic without impacting write performance.

- Caching: Frequently accessed data is stored in a cache (e.g., Redis, Memcached) to reduce the load on the primary database and improve response times. Caching strategies include write-through, write-back, and read-through caching. An e-commerce platform might cache product catalogs to quickly serve product information to users.

- Eventual Consistency: A data consistency model where updates are propagated across the system eventually, but not immediately. This allows for higher availability and scalability, but requires careful handling of data conflicts. This approach is often used in distributed systems where immediate consistency is not a strict requirement, such as social media platforms.

- Transactions (ACID Properties): Transactions are used to ensure data integrity by guaranteeing Atomicity, Consistency, Isolation, and Durability. In cloud native environments, distributed transactions often require careful design to minimize performance overhead.
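Hash-based and range-based partitioning can each be expressed as a small function that maps a key to a shard index. The Python sketch below is illustrative; the shard boundaries and key formats are assumptions. It uses SHA-256 rather than Python's built-in `hash()`, because the latter is randomized per process for strings and would send the same key to different shards across restarts.

```python
import bisect
import hashlib
from typing import List


def hash_shard(key: str, num_shards: int) -> int:
    """Hash-based partitioning: a stable hash of the key, modulo the shard count."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def range_shard(customer_id: int, upper_bounds: List[int]) -> int:
    """Range-based partitioning: shard i holds IDs in [upper_bounds[i-1], upper_bounds[i])."""
    index = bisect.bisect_right(upper_bounds, customer_id)
    if index == len(upper_bounds):
        raise ValueError(f"no shard configured for customer {customer_id}")
    return index


bounds = [1000, 2000, 3000]  # hypothetical: shard 0 < 1000 <= shard 1 < 2000 <= shard 2 < 3000
print(range_shard(999, bounds), range_shard(1000, bounds), range_shard(2999, bounds))  # 0 1 2
print(hash_shard("customer-42", 4) in range(4))                                       # True
```

One caveat of simple modulo hashing: changing the shard count remaps most keys, which is why systems that reshard frequently often use consistent hashing instead.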

Database Options Suitable for Cloud Native Applications

Choosing the right database is crucial for cloud native applications. The selection depends on the specific application requirements, including data model, read/write patterns, and scalability needs.

- Relational Databases (RDBMS): Offer strong consistency and support ACID transactions. Examples include PostgreSQL, MySQL, and cloud-managed services like Amazon RDS and Google Cloud SQL. Ideal for applications requiring data integrity and complex queries. For instance, a banking application would typically utilize an RDBMS to ensure data accuracy and consistency.

- NoSQL Databases: Designed for scalability and flexibility, often supporting eventual consistency. Various types exist, including:

  - Document Databases (e.g., MongoDB): Store data in JSON-like documents. Suitable for applications with evolving data schemas. A content management system might use a document database to store article data.

  - Key-Value Stores (e.g., Redis, Memcached): Simple data model with high read/write performance. Often used for caching.

  - Wide-Column Stores (e.g., Cassandra): Designed for handling large datasets and high write throughput. Used in applications requiring high scalability and fault tolerance; time-series data such as sensor readings or event logs is a common fit.

  - Graph Databases (e.g., Neo4j): Optimized for storing and querying relationships between data. Useful for social networks and recommendation engines.

- Cloud-Native Databases: Databases specifically designed for the cloud environment, often offering features like automatic scaling, high availability, and pay-as-you-go pricing. Examples include Amazon Aurora, Google Cloud Spanner, and Azure Cosmos DB. These databases often provide features tailored for microservices, such as support for various data models and global distribution.

Considerations for Data Consistency and Data Access Patterns

Data consistency and access patterns are critical factors in designing data management strategies for cloud native applications. The choice of consistency model and access patterns significantly impacts application performance and reliability.

- Consistency Models:

  - Strong Consistency: Guarantees that all reads return the most recent write. Provides the highest data integrity but can impact performance and availability. Suitable for applications where data accuracy is paramount.

  - Eventual Consistency: Guarantees that data will eventually become consistent, but reads may reflect stale data in the interim. Offers higher availability and scalability but requires careful handling of data conflicts. Commonly used in distributed systems.

- Data Access Patterns:

  - Read-Heavy Applications: Optimize for fast reads by using caching, read replicas, and denormalization. For example, a news website would prioritize read performance to quickly deliver articles to users.

  - Write-Heavy Applications: Optimize for high write throughput by using techniques like sharding, asynchronous processing, and eventual consistency. A social media platform would need to handle a high volume of writes from users.

  - Mixed Workloads: Employ a combination of strategies to balance read and write performance. This might involve using a combination of caching, replication, and sharding.

- Data Locality: Consider data locality to minimize latency. For example, storing data closer to the users can reduce read times.
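For read-heavy workloads, the read-through caching strategy mentioned earlier can be sketched in a few lines: reads go to the cache, and only a miss or an expired entry falls through to the primary database. In this hypothetical Python sketch a dictionary stands in for Redis or Memcached, and `load_product` stands in for a database query. The TTL bounds how stale a cached entry can get, and `invalidate` lets a writer evict an entry after an update.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class ReadThroughCache:
    """Read-through cache with a per-entry TTL; a dict stands in for Redis or Memcached."""

    def __init__(self, loader: Callable[[Hashable], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.loader = loader
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: Hashable) -> Any:
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self.clock():
            return entry[1]  # cache hit
        value = self.loader(key)  # miss or expired: read from the primary database
        self._entries[key] = (self.clock() + self.ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Call after a write so the next read fetches fresh data.
        self._entries.pop(key, None)


db_reads = []

def load_product(product_id):  # hypothetical primary-database lookup
    db_reads.append(product_id)
    return {"id": product_id, "name": f"Product {product_id}"}

now = [0.0]
catalog = ReadThroughCache(load_product, ttl_seconds=60, clock=lambda: now[0])
catalog.get(7)
catalog.get(7)          # served from the cache
print(len(db_reads))    # 1
now[0] = 61.0
catalog.get(7)          # entry expired, so it is reloaded
print(len(db_reads))    # 2
```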

Implementing Data Migration Strategies

Data migration is often necessary when transitioning to a cloud native architecture or when evolving the data model. Careful planning and execution are essential to minimize downtime and data loss.

- Big Bang Migration: Involves a complete cutover from the old system to the new system. This approach is suitable for small datasets and less complex applications.

- Parallel Run (Dual Write): Both the old and new systems are run concurrently, with data written to both. This allows for gradual validation of the new system and a safer cutover. This strategy is often used when migrating to a new database or a new application version.

- Strangler Fig Pattern: Gradually replace parts of the old system with new microservices. This approach allows for a phased migration and reduces the risk. For example, individual features can be migrated to microservices while the monolithic application remains in place.

- Data Replication and Synchronization: Utilize tools and techniques to replicate data from the old database to the new database. Ensure data synchronization during the migration process to minimize downtime.

- Data Validation: Implement thorough data validation to ensure data integrity after the migration. This includes comparing data between the old and new systems to identify discrepancies.
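The parallel-run and validation steps can be sketched together. In this illustration, plain dictionaries stand in for the old and new databases, and `DualWriteRepository` and `find_discrepancies` are hypothetical names. A production dual write would also need retries or a transactional outbox so that a failure on one side does not silently leave the stores diverged.

```python
from typing import Dict, List


class DualWriteRepository:
    """Parallel-run sketch: every write goes to both stores, while reads
    stay on the legacy store until the new one has been validated."""

    def __init__(self, legacy: Dict[str, dict], target: Dict[str, dict]) -> None:
        self.legacy = legacy
        self.target = target

    def save(self, key: str, record: dict) -> None:
        self.legacy[key] = dict(record)  # old system remains the source of truth
        self.target[key] = dict(record)  # shadow write to the new system

    def get(self, key: str) -> dict:
        return self.legacy[key]  # reads are not switched until cutover


def find_discrepancies(legacy: Dict[str, dict], target: Dict[str, dict]) -> List[str]:
    """Data validation: list keys that are missing from either store or differ."""
    issues = []
    for key in sorted(set(legacy) | set(target)):
        if key not in target:
            issues.append(f"{key}: missing in target")
        elif key not in legacy:
            issues.append(f"{key}: missing in legacy")
        elif legacy[key] != target[key]:
            issues.append(f"{key}: values differ")
    return issues


legacy = {"u1": {"name": "Ada"}}  # record written before dual writes began
target: Dict[str, dict] = {}
repo = DualWriteRepository(legacy, target)
repo.save("u2", {"name": "Lin"})
print(find_discrepancies(legacy, target))  # ['u1: missing in target']
```

The reported gap illustrates why dual writes are normally paired with a backfill or replication step for historical data before the validation check is expected to come back empty.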

Methods for Monitoring and Optimizing Database Performance

Monitoring and optimizing database performance are ongoing processes that ensure the application’s efficiency and responsiveness. Various tools and techniques are used to identify and resolve performance bottlenecks.

- Performance Monitoring Tools: Use monitoring tools (e.g., Prometheus, Grafana, Datadog) to track key performance indicators (KPIs) such as query response times, database load, CPU utilization, memory usage, and disk I/O. These tools provide insights into the database’s health and performance.

- Query Optimization: Analyze and optimize slow queries. This includes using query profiling tools, indexing strategies, and query rewriting techniques. For example, adding an index to a frequently queried column can significantly improve query performance.

- Database Scaling: Scale the database resources (e.g., CPU, memory, storage) based on the application’s needs. Horizontal scaling (adding more instances) and vertical scaling (increasing resources on existing instances) are both viable options.

- Caching Strategies: Implement effective caching strategies to reduce database load and improve response times. Consider caching frequently accessed data at various levels (application, database, and network).

- Connection Pooling: Use connection pooling to manage database connections efficiently. Connection pooling reduces the overhead of establishing new connections for each request.

- Regular Database Maintenance: Perform regular database maintenance tasks such as defragmentation, index optimization, and data backups.
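As a small, self-contained illustration of the indexing advice under Query Optimization, the sketch below uses SQLite (from the Python standard library) as a stand-in for a production database. It inspects the query plan before and after indexing a frequently filtered column. The `orders` table and index name are made up for the example, and other engines expose the same idea through their own `EXPLAIN` variants.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail strings joined into one line."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return "; ".join(row[3] for row in rows)  # column 3 holds the readable detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

slow_query = "SELECT id, total FROM orders WHERE customer_id = ?"
before = query_plan(conn, slow_query, (42,))

# Index the frequently filtered column, then re-check the plan.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, slow_query, (42,))

print("before:", before)  # a full SCAN of the orders table
print("after: ", after)   # a SEARCH using idx_orders_customer
```

Checking the plan rather than timing a single run avoids being misled by warm caches. Indexes also add write overhead, so they are worth adding mainly for columns that queries actually filter or join on.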

Monitoring, Logging, and Observability

Cloud native applications, characterized by their distributed nature and dynamic scaling, necessitate robust monitoring, logging, and observability practices. These practices are critical for understanding application behavior, identifying performance bottlenecks, diagnosing errors, and ensuring overall system health. Effective implementation allows for proactive issue resolution, optimized resource utilization, and ultimately, a better user experience.

Importance of Monitoring and Logging

Monitoring and logging form the bedrock of operational visibility in cloud native environments. They provide crucial insights into application performance, infrastructure health, and user behavior. Without these capabilities, it is nearly impossible to effectively manage and troubleshoot complex, distributed systems.

- Monitoring focuses on collecting and analyzing metrics to track system performance. This includes metrics like CPU usage, memory consumption, request latency, and error rates. It provides real-time insights into the application’s operational state.

- Logging involves recording events, messages, and activities within the application and infrastructure. Logs are essential for understanding the sequence of events leading up to an issue, diagnosing errors, and auditing system behavior. Logs typically include timestamps, severity levels, and contextual information.

- Observability, a more holistic approach, builds upon monitoring and logging by providing the ability to understand the internal state of a system from its external outputs. It draws on three key pillars (metrics, logs, and traces) to answer questions about system behavior. Observability allows for a deeper understanding of complex interactions and dependencies within a cloud native application.
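Logs are far easier to aggregate and query when each entry is structured rather than free text. The sketch below uses Python's standard `logging` module with a custom formatter that emits one JSON object per line, carrying a timestamp, severity level, and contextual fields. The field names `service` and `request_id` are assumptions chosen for illustration, not a required schema.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Emit each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Contextual fields passed via `extra=` become attributes on the record.
        for key in ("service", "request_id"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate output through the root logger

logger.info("payment authorized",
            extra={"service": "checkout-service", "request_id": "req-123"})
```

Writing to standard output like this suits containers, where a node-level log shipper can collect the stream without the application knowing where logs are stored.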

Tools for Monitoring Application Performance

A variety of tools are available for monitoring application performance in cloud native environments, each offering different capabilities and strengths. Choosing the right tools depends on the specific requirements of the application and the team’s expertise.

- Prometheus: A popular open-source monitoring system designed for collecting and querying time-series data. It excels at collecting metrics from applications and infrastructure components. Prometheus uses a pull-based model to scrape metrics from configured endpoints. It integrates seamlessly with Kubernetes and offers a powerful query language (PromQL) for data analysis.

- Grafana: A data visualization and dashboarding tool that can be used to visualize metrics collected by Prometheus and other data sources. Grafana allows users to create customizable dashboards to monitor application performance, infrastructure health, and other key metrics. It supports a wide range of data sources and offers alerting capabilities.

- Datadog: A commercial monitoring and analytics platform that provides comprehensive monitoring, logging, and tracing capabilities. Datadog offers integrations with a vast array of technologies and services, making it suitable for monitoring complex, multi-cloud environments. It provides pre-built dashboards, alerting rules, and anomaly detection features.

- New Relic: Another commercial platform that offers similar capabilities to Datadog, including application performance monitoring (APM), infrastructure monitoring, and log management. New Relic provides detailed insights into application performance, user experience, and infrastructure health.

- Elasticsearch, Logstash, and Kibana (ELK Stack): While primarily used for logging, the ELK stack (or its successor, the Elastic Stack) can also be used for monitoring. Elasticsearch stores and indexes log data, Logstash processes and transforms logs, and Kibana provides a user interface for searching, analyzing, and visualizing logs. This combination can be used to create dashboards that display key performance indicators (KPIs) derived from log data.
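Because Prometheus pulls metrics, each service exposes a plain-text endpoint (conventionally `/metrics`) that the server scrapes. In practice a client library such as the official `prometheus_client` package does this for you. The hand-rolled sketch below only illustrates what a labelled counter looks like in the text exposition format, and the metric name `http_requests_total` is an illustrative choice.

```python
from collections import defaultdict
from typing import Dict, Tuple

LabelSet = Tuple[Tuple[str, str], ...]


def _escape(value: str) -> str:
    # Label values must escape backslash, double quote, and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


class Counter:
    """A labelled, monotonically increasing counter."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self.values: Dict[LabelSet, float] = defaultdict(float)

    def inc(self, amount: float = 1.0, **labels: str) -> None:
        if amount < 0:
            raise ValueError("counters can only increase")
        self.values[tuple(sorted(labels.items()))] += amount

    def render(self) -> str:
        """Render the counter in the Prometheus text exposition format."""
        lines = [f"# HELP {self.name} {self.help_text}", f"# TYPE {self.name} counter"]
        for labelset, value in sorted(self.values.items()):
            if labelset:
                label_str = ",".join(f'{k}="{_escape(v)}"' for k, v in labelset)
                lines.append(f"{self.name}{{{label_str}}} {value:g}")
            else:
                lines.append(f"{self.name} {value:g}")
        return "\n".join(lines) + "\n"


requests = Counter("http_requests_total", "Total HTTP requests.")
requests.inc(method="GET", status="200")
requests.inc(method="GET", status="200")
requests.inc(method="POST", status="500")
print(requests.render())
# # HELP http_requests_total Total HTTP requests.
# # TYPE http_requests_total counter
# http_requests_total{method="GET",status="200"} 2
# http_requests_total{method="POST",status="500"} 1
```

A PromQL expression such as `rate(http_requests_total{status="500"}[5m])` can then turn these raw counts into an error rate for Grafana dashboards or alert rules.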

Strategies for Implementing Centralized Logging and Log Analysis

Centralized logging is crucial for aggregating and analyzing logs from various microservices and infrastructure components in a cloud native application. This centralized approach enables effective troubleshooting, performance analysis, and security auditing.

- Log Aggregation: The first step is to collect logs from all sources and forward them to a central location. This can be achieved using log shippers or agents deployed on each service or infrastructure node. Common log shippers include Fluentd, Fluent Bit, and Filebeat. These agents collect logs from various sources (files, standard output, etc.) and transmit them to a centralized log management system.

- Log Storage: Choose a suitable storage solution for storing the aggregated logs. Options include Elasticsearch, cloud-based log management services (e.g., AWS CloudWatch Logs, Google Cloud Logging, Azure Monitor), or object storage (e.g., Amazon S3). The choice depends on factors such as scalability, cost, and search requirements.

- Log Parsing and Enrichment: Before storing logs, it is often necessary to parse and enrich them. This involves extracting relevant information from the log messages, such as timestamps, severity levels, service names, and request IDs. Log parsing can be performed using regular expressions or dedicated parsing tools like Logstash. Enrichment involves adding additional context to the logs, such as user information or application metadata.

- Log Analysis and Visualization: Once logs are stored, analyze them to identify patterns, anomalies, and potential issues. Use tools like Kibana, Grafana, or the built-in analysis features of cloud-based log management services to search, filter, and visualize log data. Create dashboards to monitor key metrics and identify trends.

- Log Retention Policies: Implement appropriate log retention policies to manage the storage space and cost associated with logs. Define how long logs should be retained based on their importance and regulatory requirements. Consider archiving older logs to cheaper storage tiers.
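The parsing and enrichment step can be sketched with a regular expression. The log line layout assumed here (`<timestamp> <LEVEL> [<service>] request_id=<id> <message>`) is hypothetical, as are the metadata keys. A shipper such as Fluent Bit or Logstash would normally apply an equivalent parser in its own configuration.

```python
import re
from typing import Dict, Optional

# Assumed plain-text format: "<ISO timestamp> <LEVEL> [<service>] request_id=<id> <message>"
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+\[(?P<service>[\w-]+)\]\s+"
    r"request_id=(?P<request_id>\S+)\s+(?P<message>.*)$"
)


def parse_and_enrich(line: str, metadata: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Parse one raw log line into fields, then add deployment metadata.

    Returns None for lines that do not match, so callers can route them
    elsewhere (e.g. an "unparsed" index) instead of silently dropping them.
    """
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    event = match.groupdict()
    # Enrichment: merge metadata first so parsed fields win on key collisions.
    return {**metadata, **event}


raw = "2024-05-01T12:00:00Z ERROR [inventory-service] request_id=abc123 stock lookup timed out"
event = parse_and_enrich(raw, {"cluster": "prod-eu-1", "version": "1.4.2"})
print(event["service"], event["level"], event["request_id"])  # inventory-service ERROR abc123
```

Extracting `request_id` as its own field is what later lets a log search pull every line belonging to one request across services.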

Setting Up Alerting and Notification Systems

Alerting and notification systems are essential for proactively identifying and responding to issues in cloud native applications. They allow operations teams to be notified of critical events and take timely action.

- Metric-Based Alerting: Set up alerts based on key performance indicators (KPIs) and metrics. Define thresholds for metrics such as CPU usage, memory consumption, request latency, and error rates. When a metric exceeds a defined threshold, the alerting system triggers a notification.

- Log-Based Alerting: Create alerts based on patterns and keywords found in logs. Use log analysis tools to identify error messages, warnings, or specific events that indicate a problem. Configure the alerting system to trigger notifications when these patterns are detected.

- Alerting Tools: Utilize alerting tools like Prometheus Alertmanager, Grafana Alerting, Datadog, New Relic, or cloud-based alerting services. These tools allow you to define alert rules, configure notification channels, and manage alert workflows.

- Notification Channels: Configure notification channels to deliver alerts to the appropriate teams. Common notification channels include email, Slack, PagerDuty, and other messaging platforms. Ensure that alerts are routed to the right individuals or teams based on their severity and impact.

- Alerting Best Practices:

- Define clear alert thresholds based on historical data and application behavior.

- Prioritize alerts based on their severity and impact.

- Provide context in alert notifications, including the affected service, the metric that triggered the alert, and relevant log snippets.

- Document alert response procedures to ensure that teams know how to respond to alerts.

- Regularly review and refine alert rules to minimize false positives and ensure that alerts are effective.
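A common way to cut false positives in metric-based alerting is to require a threshold to be breached for several consecutive evaluations before firing. Prometheus alerting rules express this with their `for` clause. The sketch below reproduces that idea in plain Python. `ThresholdRule`, its fields, and the sample values are hypothetical, and the notification text includes the context recommended above (rule, value, threshold, and severity).

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class ThresholdRule:
    """Fire only after `for_evaluations` consecutive breaches of `threshold`."""
    name: str
    threshold: float
    for_evaluations: int
    severity: str


def evaluate(rule: ThresholdRule, samples: Iterable[float]) -> List[str]:
    """Return one notification each time the rule transitions into firing."""
    notifications = []
    breaches = 0
    firing = False
    for i, value in enumerate(samples):
        if value > rule.threshold:
            breaches += 1
            if breaches >= rule.for_evaluations and not firing:
                firing = True
                notifications.append(
                    f"[{rule.severity}] {rule.name}: {value} > {rule.threshold} "
                    f"for {breaches} evaluations (sample #{i})"
                )
        else:
            breaches = 0
            firing = False  # condition cleared; the alert resolves


    return notifications


rule = ThresholdRule("HighErrorRate", threshold=0.05, for_evaluations=3, severity="critical")
error_rates = [0.01, 0.09, 0.02, 0.07, 0.08, 0.11, 0.12]
alerts = evaluate(rule, error_rates)
print(alerts)  # the single spike at 0.09 is ignored; the sustained rise fires once
```

Raising `for_evaluations` trades detection speed for fewer noisy pages, which is the kind of tuning the review step above is meant to revisit.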

Tracing Requests Across Microservices

Tracing requests across microservices is crucial for understanding the flow of requests and identifying performance bottlenecks in distributed applications. It enables you to track the path of a request as it traverses multiple services and pinpoint the source of delays or errors.

- Distributed Tracing Tools: Utilize distributed tracing tools like Jaeger, Zipkin, or the tracing capabilities offered by platforms like Datadog or New Relic. These tools capture and visualize the path of a request as it flows through the system.

- Instrumentation: Instrument your microservices to propagate trace context across service boundaries. This typically involves adding headers to HTTP requests that carry trace IDs and span IDs. Libraries and frameworks like OpenTelemetry and Spring Cloud Sleuth simplify the instrumentation process.

- Span Creation: Create spans to represent individual operations within a service. A span captures information about a specific operation, such as the start and end times, the service name, the operation name, and any relevant tags or metadata.

- Trace Visualization: Use the tracing tool’s user interface to visualize traces. The visualization will show the sequence of spans and the time spent in each span, allowing you to identify performance bottlenecks and understand the flow of requests.

- Context Propagation: Implement context propagation to ensure that trace context is correctly passed between services. This involves propagating trace IDs and span IDs through HTTP headers or other mechanisms. Frameworks and libraries often provide built-in support for context propagation.

- Example: Consider a microservice application where a user request to view a product details page requires calls to the `product-service`, `inventory-service`, and `recommendation-service`. A tracing tool like Jaeger would display a trace that shows the sequence of calls between these services, along with the duration of each call. If the `inventory-service` call takes a long time, the trace would clearly identify this as a bottleneck.
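Context propagation is easiest to see at the header level. OpenTelemetry propagates context by default with the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`). The hand-rolled sketch below mimics that mechanism so a downstream span joins the caller's trace. It is not the OpenTelemetry API, and `Span`, `start_span`, and `outgoing_headers` are hypothetical helpers; a real service would let the SDK handle this.

```python
import re
import secrets
from dataclasses import dataclass
from typing import Dict, Optional

# W3C Trace Context: 2-hex version, 32-hex trace-id, 16-hex parent-id, 2-hex flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    name: str
    service: str


def start_span(name: str, service: str, headers: Dict[str, str]) -> Span:
    """Continue the caller's trace if a valid traceparent is present,
    otherwise start a new trace with this span as the root."""
    match = TRACEPARENT_RE.match(headers.get("traceparent", ""))
    # All-zero trace or parent IDs are invalid per the specification.
    if match and match.group(1) != "0" * 32 and match.group(2) != "0" * 16:
        trace_id, parent_id = match.group(1), match.group(2)
    else:
        trace_id, parent_id = secrets.token_hex(16), None
    return Span(trace_id, secrets.token_hex(8), parent_id, name, service)


def outgoing_headers(span: Span) -> Dict[str, str]:
    """Headers for a downstream call; this span becomes the callee's parent.
    Flags "01" mark the trace as sampled."""
    return {"traceparent": f"00-{span.trace_id}-{span.span_id}-01"}


# product-service handles the user request, then calls inventory-service.
product = start_span("GET /products/7", "product-service", {})
inventory = start_span("check stock", "inventory-service", outgoing_headers(product))
print(inventory.trace_id == product.trace_id)   # True: same trace
print(inventory.parent_id == product.span_id)   # True: parent/child link
```

A tracing backend such as Jaeger reconstructs the call tree from exactly these trace and parent IDs, which is what lets it show a slow `inventory-service` call nested under the `product-service` request.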

Security Considerations in Cloud Native Environments

Cloud native applications, by their very nature, introduce a new set of security challenges compared to traditional monolithic applications. Their distributed, dynamic, and often ephemeral nature necessitates a shift in security thinking, moving away from perimeter-based defenses towards a more granular, identity-aware approach. This section explores the specific security challenges, implementation methods, and best practices crucial for securing cloud native applications.

Security Challenges Specific to Cloud Native Applications

Cloud native environments present unique security challenges stemming from their architecture and operational model. These challenges require a comprehensive understanding and proactive mitigation strategy.

- Distributed Nature: Microservices-based applications are inherently distributed, increasing the attack surface. Service-to-service communication over the network creates multiple entry points for potential attackers, and each service represents a potential vulnerability.

- Dynamic and Ephemeral Infrastructure: Containerized applications are frequently created, destroyed, and scaled. This dynamism makes it difficult to maintain a consistent security posture. Security controls must be automated and adaptable.