A “big ball of mud” architecture, characterized by tangled code and complex dependencies, can hinder development and maintenance. This comprehensive guide provides a structured approach to refactoring such systems, transforming them into well-organized, maintainable codebases. We’ll explore strategies for identifying, assessing, and addressing the issues inherent in these challenging architectures, culminating in a robust and efficient solution.

This guide breaks down the process into manageable steps, covering everything from defining the problem to implementing testing and validation procedures. We’ll analyze various refactoring strategies, including modularization, encapsulation, and iterative refinement, to provide a practical and effective path forward.

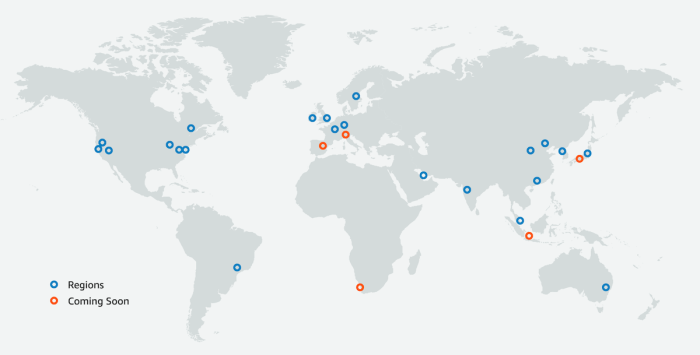

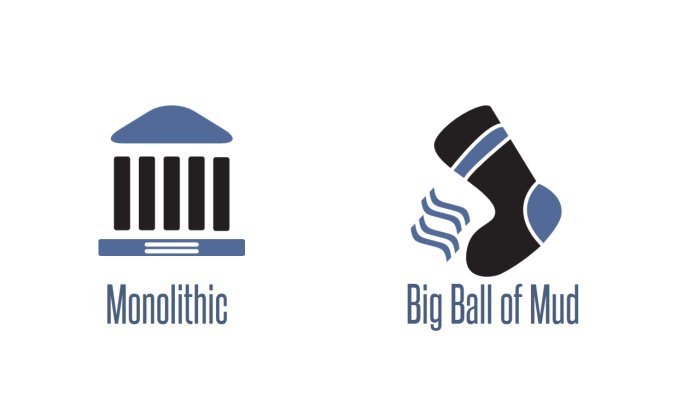

Defining the “Big Ball of Mud”

A “Big Ball of Mud” (BBOM) architecture is a software system characterized by a lack of clear structure and organization. It typically exhibits a complex, intertwined web of code, making it difficult to understand, maintain, and extend. This often results in a system that is brittle and prone to errors, making it challenging for developers to collaborate effectively. This architecture often emerges gradually over time, as code is added without careful consideration of the larger system’s structure.

The lack of well-defined interfaces and modular components contributes to its chaotic nature, making future development efforts cumbersome. Understanding the characteristics and causes of a BBOM is crucial to recognizing and mitigating its detrimental effects.

Description of a Typical BBOM Architecture

A BBOM system typically exhibits a lack of modularity. Code is often scattered across multiple files, with little to no separation of concerns. Functions and classes may perform multiple unrelated tasks, blurring the lines between different responsibilities. Coupling between components is high, meaning changes in one part of the system often necessitate modifications in many other areas.

Data structures are often inconsistent and poorly documented, making it difficult to understand how data flows throughout the system.

Characteristics of a BBOM

The defining characteristics of a BBOM include:

- Lack of Modularity: Functions and classes are often responsible for handling a wide range of tasks, rather than focusing on specific, well-defined responsibilities.

- High Coupling: Changes in one part of the system frequently necessitate modifications in other parts, leading to a ripple effect and potential for errors.

- Poorly Defined Interfaces: The communication between different parts of the system is often unclear and undocumented, hindering understanding and maintenance.

- Inconsistent Data Structures: Data structures may be inconsistent across different parts of the system, making it challenging to understand and use data correctly.

- Inadequate Documentation: A lack of comprehensive documentation further complicates understanding and maintenance.

- Scattered Code: Code is often spread across various files and folders without a clear organizational structure, making it difficult to locate and modify specific components.

Code Snippets Demonstrating BBOM Patterns

These examples illustrate the lack of modularity in a BBOM.

```java
// Example 1 (Inconsistent data structures)
class User {
    String name;
    int age;
    String address; // Sometimes present, sometimes not.

    // … other methods handling various tasks
}

// Example 2 (Multiple responsibilities)
class OrderProcessor {
    // … methods for processing orders
    // … methods for managing user accounts
    // … methods for generating reports
}
```

The `User` class demonstrates inconsistent data structures, as the `address` field might not always be present. The `OrderProcessor` class exhibits multiple responsibilities (order processing, user management, and reporting) that should be separated into distinct classes.

Common Causes of BBOM Creation

The creation of a BBOM is often a gradual process, driven by various factors:

- Rapid Development Cycles: Prioritizing speed over design can lead to a lack of planning and structure, resulting in a system that evolves haphazardly.

- Lack of Architectural Planning: Failing to establish clear architectural principles and guidelines can lead to a system that lacks cohesion and organization.

- Changing Requirements: Frequent changes in requirements can make it difficult to maintain a well-structured architecture, potentially leading to a BBOM.

- Inadequate Testing and Code Reviews: A lack of rigorous testing and code reviews can mask design flaws, allowing the system to degrade over time.

Negative Consequences of Maintaining a BBOM System

Maintaining a BBOM system presents significant challenges and has detrimental consequences:

- Increased Development Time and Cost: The complexity of the system makes it harder and slower to develop, test, and modify features.

- Higher Risk of Errors: The interwoven nature of the code makes it prone to errors, increasing the likelihood of bugs and regressions.

- Difficulty in Collaboration: Working on a BBOM can be challenging for teams, as it’s difficult to understand how different parts of the system interact.

- Reduced Maintainability: Making changes to the system can have unpredictable consequences, making it challenging to maintain and update.

- Low Reusability: The lack of modularity reduces the ability to reuse code components in other projects.

Assessing the Impact

A “big ball of mud” system, while often developed rapidly, can lead to significant challenges in maintenance, expansion, and optimization. Understanding the current state of such a system is crucial for planning a successful refactoring process. This assessment phase involves identifying critical dependencies, evaluating potential risks, and understanding the system’s performance implications. Thorough analysis of the “big ball of mud” system’s impact is critical to mitigate potential problems during and after the refactoring process.

This assessment allows for informed decisions regarding the best approach to decoupling and restructuring the codebase.

Evaluating the Current State

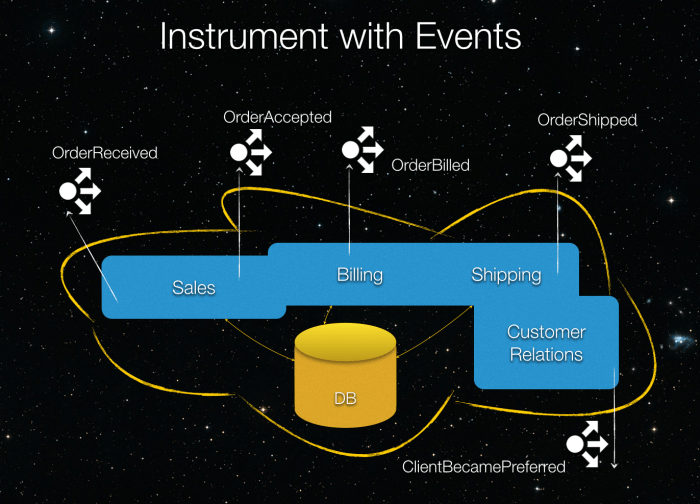

A structured approach to evaluating a “big ball of mud” system is vital. This involves:

- Identifying all modules and their interactions: A detailed diagram or map of the system’s components and their connections is essential. This visualization helps in quickly grasping the complex interdependencies.

- Assessing code complexity: Measuring code complexity metrics (e.g., cyclomatic complexity, lines of code) helps pinpoint the methods and classes that are hardest to understand, test, and change safely.

- Documenting data flows: Mapping how data is passed between modules shows how a change could affect the data flow and exposes potential data consistency issues.

- Understanding the system’s history: Knowledge of the system’s evolution is important for understanding the context of the current structure and the rationale behind certain decisions.
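As a rough illustration of the complexity metric mentioned above, the following sketch estimates cyclomatic complexity as one plus the number of decision points in a method's source text. The class name `ComplexityEstimator` is hypothetical, and the text-based counting is an assumption made for brevity: it does not parse the code, so keywords inside comments or string literals (and `?` in generic wildcards) are miscounted. A real assessment would normally rely on a static analysis tool.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Rough cyclomatic-complexity estimate: 1 + number of decision points in the text. */
final class ComplexityEstimator {
    // Branching keywords plus short-circuit and ternary operators count as decision points.
    private static final Pattern DECISION_POINT =
            Pattern.compile("\\b(if|for|while|case|catch)\\b|&&|\\|\\||\\?");

    private ComplexityEstimator() {}

    /** Counts decision points in the given method source and adds one for the entry path. */
    static int estimate(String methodSource) {
        Matcher matcher = DECISION_POINT.matcher(methodSource);
        int decisionPoints = 0;
        while (matcher.find()) {
            decisionPoints++;
        }
        return 1 + decisionPoints;
    }
}
```

For example, `ComplexityEstimator.estimate("if (x > 0 && x < 10) { return 1; } return 0;")` yields 3: one for the entry path, one for `if`, and one for `&&`. Ranking methods by such a score is one way to decide where to look first.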

Identifying Critical Dependencies

Determining dependencies is a critical step in evaluating a “big ball of mud” system.

- Dependency analysis: Use tools to analyze code and identify modules that rely on other modules. Understanding these dependencies helps in identifying potential conflicts during refactoring.

- Dependency mapping: Creating a visual representation of dependencies helps visualize how changes in one part of the system can affect others. This map allows for more informed decisions during the refactoring process.

- Impact analysis: For each dependency, analyze the potential impact of changes on dependent modules. This helps anticipate and mitigate potential problems during refactoring.
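The impact analysis described above can be sketched as a traversal over a dependency graph. This is a minimal sketch, not a replacement for a dependency analysis tool: the `DependencyGraph` class and the module names in the usage note are hypothetical, and the dependencies are assumed to have been collected already.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Stores module dependencies and answers "what could break if this module changes?". */
final class DependencyGraph {
    // Reverse edges: maps a module to the modules that depend on it.
    private final Map<String, Set<String>> dependents = new HashMap<>();

    /** Records that {@code module} depends on {@code dependency}. */
    void addDependency(String module, String dependency) {
        dependents.computeIfAbsent(dependency, key -> new LinkedHashSet<>()).add(module);
    }

    /** Returns all modules that depend, directly or transitively, on {@code changed}. */
    Set<String> impactedBy(String changed) {
        Set<String> impacted = new TreeSet<>();
        Deque<String> toVisit = new ArrayDeque<>();
        toVisit.push(changed);
        while (!toVisit.isEmpty()) {
            String current = toVisit.pop();
            for (String dependent : dependents.getOrDefault(current, Set.of())) {
                // add() returns false for modules already seen, which also stops cycles.
                if (impacted.add(dependent)) {
                    toVisit.push(dependent);
                }
            }
        }
        impacted.remove(changed); // a module in a cycle is not reported as impacting itself
        return impacted;
    }
}
```

If `orders` depends on `users`, and both `reports` and `billing` depend on `orders`, then `impactedBy("users")` returns `billing`, `orders`, and `reports`. A long result for a small change is a sign of the high coupling discussed earlier.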

Comparing Pros and Cons of a “Big Ball of Mud” Approach

| Pros | Cons |
|---|---|
| Rapid Development | Difficult Maintenance |
| Relatively Simple Initial Structure | High Risk of Errors |
| Potentially Faster Iteration Cycles | Scalability Issues |
| Less upfront design complexity | Difficult to understand and modify |
| Can be efficient for small-scale projects | Increased development time over the project lifecycle |

Risks Associated with Modification

Modifying a “big ball of mud” system carries significant risks.

- Unforeseen consequences: Modifications can have unintended consequences in other parts of the system, potentially leading to bugs or system instability. This is amplified by high interdependencies.

- Regression issues: Changes can introduce new bugs or regressions in existing functionalities. Careful testing is essential.

- Time and resource commitment: Refactoring requires time and resources, and the amount grows with the system’s complexity and the scope of the refactoring effort.

- Technical debt accumulation: Rushed or partial modifications can add further technical debt to the system, increasing future maintenance complexity.

Performance Implications

Performance issues are a frequent concern in “big ball of mud” systems.

- Bottlenecks: Complex interdependencies can lead to performance bottlenecks in certain parts of the system. Identifying these bottlenecks is critical to optimizing performance.

- Inefficient code: The lack of modularity often leads to inefficient code, impacting performance.

- Poor scalability: Without clear modularity, the system may not scale well as the application grows. This often results in decreased performance under load.

Identifying Refactoring Strategies

Refactoring a “big ball of mud” system requires a systematic approach to identify and address the complex interdependencies and tangled code. This process often involves a combination of strategies, each with its own strengths and weaknesses. A crucial aspect is understanding the technical debt embedded within the system, which demands careful prioritization and planning to minimize disruption. Effective refactoring strategies necessitate a clear understanding of the system’s current structure, its potential vulnerabilities, and the desired outcome.

This involves careful consideration of the system’s dependencies, potential risks, and the trade-offs between different refactoring approaches. Thorough documentation and communication are vital throughout the process.

Potential Refactoring Strategies

Refactoring strategies can be categorized into several approaches, each with its own characteristics. Top-down and bottom-up approaches are common, with each having advantages in specific situations. Careful consideration of the system’s complexity and current structure is essential in selecting the most appropriate strategy.

Incremental Refactoring Techniques

Incremental refactoring techniques are crucial for managing the complexity of large systems. These methods involve breaking down the refactoring process into smaller, manageable steps. This allows for continuous testing and validation, mitigating the risk of introducing new bugs. By working in small, focused increments, developers can progressively improve the system’s structure and maintainability. Examples include:

- Extract Method: This technique involves isolating a portion of code from a larger method into a new, dedicated method. This improves code organization and reduces code duplication. For example, if a large method in a class performs multiple distinct tasks, extracting each task into its own method makes the code more readable and maintainable. The original method is then simplified and its functionality is clearly separated.

- Introduce Parameter Object: This approach involves encapsulating multiple parameters within a new object, reducing the number of parameters passed to a method. This leads to more focused methods and improved code clarity. For instance, if a method requires multiple, related data values, creating a parameter object encapsulates these values, simplifying the method’s signature and enhancing readability. This makes the method more focused and easier to understand.

- Replace Conditional with Polymorphism: This strategy involves replacing conditional logic with a set of specialized classes, each handling a specific condition. This approach improves flexibility and maintainability, particularly in complex systems with many conditional branches. For instance, if a method uses several if-else statements to handle different scenarios, creating separate classes for each scenario allows the system to handle those scenarios in a more structured and manageable way, enhancing the code’s flexibility and maintainability.
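Two of the techniques above, Introduce Parameter Object and Replace Conditional with Polymorphism, can be sketched together. The shipping domain, the class names, and the price formulas are all hypothetical. The assumed starting point is a method `cost(String method, double weightKg, double distanceKm)` that chose a formula through an if/else chain on `method`.

```java
/** Parameter object: groups the related values that used to be passed separately. */
final class Parcel {
    final double weightKg;
    final double distanceKm;

    Parcel(double weightKg, double distanceKm) {
        this.weightKg = weightKg;
        this.distanceKm = distanceKm;
    }
}

/** Each former branch of the conditional becomes one implementation. */
interface ShippingMethod {
    double cost(Parcel parcel);
}

final class StandardShipping implements ShippingMethod {
    @Override
    public double cost(Parcel parcel) {
        return 5.0 + 0.5 * parcel.weightKg;
    }
}

final class ExpressShipping implements ShippingMethod {
    @Override
    public double cost(Parcel parcel) {
        return 10.0 + 0.5 * parcel.weightKg + 0.25 * parcel.distanceKm;
    }
}

final class ShippingCalculator {
    private ShippingCalculator() {}

    /** No branching left here: the chosen ShippingMethod carries the behaviour. */
    static double cost(ShippingMethod method, Parcel parcel) {
        return method.cost(parcel);
    }
}
```

Adding a new shipping method now means adding a class rather than editing an existing conditional, which keeps each increment small and easy to test.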

Refactoring Patterns

Refactoring patterns provide established solutions for common code structure problems. These patterns offer proven strategies for improving code clarity, reducing complexity, and enhancing maintainability.

- Extract Class: This pattern involves creating a new class to encapsulate specific functionality from an existing class. This enhances code modularity and reduces the complexity of the original class.

- Move Method: This pattern involves moving a method from one class to another, typically to the class whose data it uses most. This keeps related data and behavior together, thereby improving the code’s structure and reducing coupling between the two classes.

- Introduce Foreign Key: This is a database refactoring rather than a code-level one. It involves adding foreign key constraints to tables to enforce relationships between data entities, which enhances data integrity and reduces potential errors.
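The Extract Class pattern can be illustrated with a small sketch. The `Person` and `TelephoneNumber` names are hypothetical. The assumed starting point is a `Person` class that stored an area code and a number as separate fields and formatted them itself.

```java
/** Extracted class: owns the phone-number data and the behaviour that goes with it. */
final class TelephoneNumber {
    private final String areaCode;
    private final String number;

    TelephoneNumber(String areaCode, String number) {
        this.areaCode = areaCode;
        this.number = number;
    }

    String formatted() {
        return "(" + areaCode + ") " + number;
    }
}

/** After the extraction, Person delegates instead of formatting phone numbers itself. */
final class Person {
    private final String name;
    private final TelephoneNumber officePhone;

    Person(String name, TelephoneNumber officePhone) {
        this.name = name;
        this.officePhone = officePhone;
    }

    String name() {
        return name;
    }

    String officePhone() {
        return officePhone.formatted();
    }
}
```

Moving `formatted()` next to the data it reads is also a small instance of Move Method: the behaviour now lives in the class that owns the fields.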

Addressing Technical Debt

Technical debt is a crucial aspect of refactoring. It represents the accumulated cost of poor code choices in the past. Identifying and addressing this debt is a vital part of the refactoring process. Strategies include prioritizing refactoring efforts based on the severity of the technical debt and its potential impact on future development.

- Prioritization: Prioritizing refactoring efforts based on the impact on future development, criticality of the system functionality, and urgency. This approach allows focusing on the most impactful areas first, minimizing disruption.

- Documentation: Maintaining thorough documentation of the refactoring process, including the reasons for the changes and the impact on the system. This helps with future maintenance and understanding of the system’s evolution.
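One possible way to make the prioritization explicit is to score each debt item on the three factors named above and sort by the result. This is only a sketch: the 1 to 5 scales, the unweighted sum, and the `DebtItem` and `DebtBacklog` names are assumptions, and a team would likely tune the scoring to its own context.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** One piece of technical debt, rated on assumed 1 (low) to 5 (high) scales. */
final class DebtItem {
    final String name;
    final int impact;      // effect on future development
    final int criticality; // importance of the affected functionality
    final int urgency;     // how soon it needs attention

    DebtItem(String name, int impact, int criticality, int urgency) {
        this.name = name;
        this.impact = impact;
        this.criticality = criticality;
        this.urgency = urgency;
    }

    /** Unweighted sum of the three ratings (an assumption, not an established formula). */
    int score() {
        return impact + criticality + urgency;
    }
}

final class DebtBacklog {
    private DebtBacklog() {}

    /** Returns a new list ordered from highest to lowest score; ties keep their input order. */
    static List<DebtItem> prioritize(List<DebtItem> items) {
        List<DebtItem> sorted = new ArrayList<>(items);
        sorted.sort(Comparator.comparingInt(DebtItem::score).reversed());
        return sorted;
    }
}
```

The value of such a list is less in the numbers themselves than in forcing an explicit, documented discussion of why one area is refactored before another.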

Top-Down vs. Bottom-Up Approaches

Top-down and bottom-up approaches represent two contrasting strategies for refactoring. The top-down approach focuses on high-level design changes, potentially leading to significant impacts on the entire system. In contrast, the bottom-up approach focuses on individual modules, potentially leading to more incremental changes. The most effective strategy depends on the specific characteristics of the “big ball of mud” system and the goals of the refactoring.

Modularization and Decomposition

Refactoring a “big ball of mud” system necessitates a systematic approach to break down the monolithic codebase into smaller, more manageable modules. This modularization process is crucial for enhancing maintainability, testability, and future extensibility. By decomposing the system into logical components, developers can better understand and modify specific parts of the application without affecting other sections.

Steps to Modularize a “Big Ball of Mud” System

A systematic approach to modularization involves several key steps. First, identify the core functionalities within the system. Then, categorize these functionalities into logical groupings. These groupings often correspond to different aspects of the application’s domain or specific business processes. Third, create separate modules for each identified group.

Finally, carefully transfer the code related to each group into its designated module. This process ensures a clean separation of concerns, fostering easier comprehension and modification of the codebase.

Criteria for Creating New Modules

Defining clear criteria for creating new modules is essential for preventing the creation of modules that are too small or too large. The following table outlines these criteria:

| Criterion | Description |
|---|---|
| Functionality | Modules should encapsulate a specific, well-defined set of functionalities. They should not overlap in their responsibilities. |
| Data | Modules should manage and encapsulate the data pertinent to their specific functionality. Data access and manipulation should be controlled within the module. |
| Dependencies | Modules should have minimal dependencies on other modules. This promotes independence and reduces the risk of cascading changes. |
| Testability | Modules should be designed to be easily testable. This is crucial for verifying the correct operation of the system. |
| Maintainability | Modules should be designed for easy maintenance and modification. This includes clear code structure, well-defined interfaces, and comprehensive documentation. |

Decomposing Large Classes and Functions

Large classes and functions are common characteristics of a “big ball of mud” architecture. Their decomposition involves breaking them down into smaller, more manageable units. This decomposition process often involves identifying the distinct responsibilities within the class or function and extracting them into separate methods or classes. For example, a large class handling user registration, order processing, and payment could be decomposed into three separate classes, each focusing on a single aspect.

Similarly, a long function with multiple unrelated tasks could be divided into several smaller, more focused functions.
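The decomposition described above might look like the following sketch, in which a single class that handled registration, orders, and payment is split into three. All class names and the simplified in-memory behaviour are hypothetical. A real system would persist data and call a payment provider.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Handles only user registration. */
final class UserRegistry {
    private final Set<String> emails = new HashSet<>();

    /** Returns false when the email is already registered. */
    boolean register(String email) {
        return emails.add(email);
    }

    boolean isRegistered(String email) {
        return emails.contains(email);
    }
}

/** Handles only payments; this sketch merely records the charged amounts. */
final class PaymentService {
    private final List<Long> chargedCents = new ArrayList<>();

    void charge(long amountCents) {
        if (amountCents <= 0) {
            throw new IllegalArgumentException("amount must be positive");
        }
        chargedCents.add(amountCents);
    }

    long totalChargedCents() {
        long total = 0;
        for (long cents : chargedCents) {
            total += cents;
        }
        return total;
    }
}

/** Handles only order placement and coordinates the two collaborators. */
final class OrderService {
    private final UserRegistry users;
    private final PaymentService payments;

    OrderService(UserRegistry users, PaymentService payments) {
        this.users = users;
        this.payments = payments;
    }

    /** Charges a registered user; returns false and charges nothing for unknown users. */
    boolean placeOrder(String email, long amountCents) {
        if (!users.isRegistered(email)) {
            return false;
        }
        payments.charge(amountCents);
        return true;
    }
}
```

Each class can now be tested and changed on its own, and `OrderService` states its dependencies openly in its constructor instead of reaching into shared state.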

Use of Interfaces and Abstractions

Interfaces and abstractions play a vital role in improving modularity. Interfaces define the contract that a module must adhere to, without specifying the implementation details. This decoupling allows modules to interact with each other without knowing the internal workings of the other modules. Abstractions provide a higher-level view of the system, hiding complex implementation details and exposing only essential functionalities.

This enhances the modularity and maintainability of the system.
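A minimal sketch of this decoupling follows, assuming a hypothetical notification feature. `WelcomeService` depends only on the `NotificationSender` contract, so the in-memory implementation shown here could be swapped for an email or SMS implementation without touching the caller.

```java
import java.util.ArrayList;
import java.util.List;

/** Contract: what callers may rely on, with no implementation details. */
interface NotificationSender {
    void send(String recipient, String message);
}

/** One possible implementation; it keeps messages in memory, which is handy in tests. */
final class InMemorySender implements NotificationSender {
    private final List<String> sent = new ArrayList<>();

    @Override
    public void send(String recipient, String message) {
        sent.add(recipient + ": " + message);
    }

    /** Returns a copy so callers cannot modify the internal list. */
    List<String> sent() {
        return new ArrayList<>(sent);
    }
}

/** Knows the interface only, never the concrete sender class. */
final class WelcomeService {
    private final NotificationSender sender;

    WelcomeService(NotificationSender sender) {
        this.sender = sender;
    }

    void welcome(String user) {
        sender.send(user, "Welcome!");
    }
}
```

Passing the sender through the constructor is a design choice that keeps the dependency visible and makes it easy to substitute during refactoring.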

Improving Code Organization and Readability

Code organization and readability are crucial for a maintainable system. This includes using meaningful names for classes, methods, and variables. Consistent coding style and adherence to established naming conventions improve code readability and comprehension. Proper use of comments and documentation is essential to explain the purpose and functionality of the modules. This detailed documentation aids developers in understanding and modifying the code effectively.

For example, well-documented classes and methods significantly reduce the time required to comprehend and modify them.

Encapsulation and Abstraction

Refactoring a “Big Ball of Mud” architecture often necessitates a fundamental shift in how code is structured. Encapsulation and abstraction are crucial for achieving this restructuring, promoting modularity, and improving maintainability. They enable developers to isolate and manage complex components, making the system easier to understand, modify, and extend. Encapsulation and abstraction are complementary techniques. Encapsulation focuses on bundling data and methods that operate on that data within a class or module, thereby controlling access to the internal workings of the component.

Abstraction, on the other hand, simplifies the way other parts of the system interact with a component, presenting only essential details and hiding unnecessary complexity.

Importance of Encapsulation

Encapsulation is vital for creating robust and maintainable software. By restricting direct access to internal data and methods, it safeguards the integrity of the component. This helps prevent unintended side effects and makes the component more resilient to changes in other parts of the system. For example, if the internal representation of data within a class needs to be modified, only the encapsulated methods need to be updated, minimizing the risk of breaking other parts of the application.

Examples of Encapsulation

Consider a banking application with a `BankAccount` class. Encapsulation would involve storing the account balance privately using a `private` access modifier. Methods like `deposit` and `withdraw` would be provided to manipulate the balance, ensuring that only these methods can change the account balance. This prevents direct access and modification of the balance, which is essential for maintaining data integrity. Another example is a `Customer` class, where personal details like address and phone number are encapsulated.

The class might provide methods to update these details instead of exposing the fields directly.
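The `BankAccount` example could be sketched as follows. The class and method names come from the text above. Storing the balance in cents and the specific validation rules are assumptions added for illustration.

```java
/** The balance can only change through deposit() and withdraw(). */
final class BankAccount {
    private long balanceCents; // private: no code outside this class can modify it directly

    void deposit(long amountCents) {
        if (amountCents <= 0) {
            throw new IllegalArgumentException("Deposit must be positive");
        }
        balanceCents += amountCents;
    }

    void withdraw(long amountCents) {
        if (amountCents <= 0) {
            throw new IllegalArgumentException("Withdrawal must be positive");
        }
        if (amountCents > balanceCents) {
            throw new IllegalStateException("Insufficient funds");
        }
        balanceCents -= amountCents;
    }

    /** Read-only view of the balance. */
    long balanceCents() {
        return balanceCents;
    }
}
```

Because every change goes through two methods, rules such as rejecting an overdraft are enforced in one place, and the internal representation can change later without affecting callers.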

Benefits of Abstraction

Abstraction simplifies interactions with a component by presenting a simplified interface. This reduces the cognitive load on developers working with the component and promotes better understanding of the component’s functionality. A `Shape` class, for example, might have various subclasses like `Circle`, `Rectangle`, and `Triangle`. The `Shape` class provides an abstract interface (methods like `area()` and `perimeter()`) that can be used without needing to know the specific implementation details of each shape.
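A sketch of the `Shape` example follows, with `Circle` and `Rectangle` only (`Triangle` is omitted for brevity). The `Shapes.totalArea` helper is a hypothetical caller added to show that client code needs only the abstract interface.

```java
import java.util.List;

/** Abstract interface: callers use area() and perimeter() without knowing the concrete shape. */
abstract class Shape {
    abstract double area();

    abstract double perimeter();
}

final class Circle extends Shape {
    private final double radius;

    Circle(double radius) {
        this.radius = radius;
    }

    @Override
    double area() {
        return Math.PI * radius * radius;
    }

    @Override
    double perimeter() {
        return 2 * Math.PI * radius;
    }
}

final class Rectangle extends Shape {
    private final double width;
    private final double height;

    Rectangle(double width, double height) {
        this.width = width;
        this.height = height;
    }

    @Override
    double area() {
        return width * height;
    }

    @Override
    double perimeter() {
        return 2 * (width + height);
    }
}

final class Shapes {
    private Shapes() {}

    /** Works for any current or future Shape subclass. */
    static double totalArea(List<? extends Shape> shapes) {
        double total = 0;
        for (Shape shape : shapes) {
            total += shape.area();
        }
        return total;
    }
}
```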

Design Patterns for Encapsulation and Abstraction

Several design patterns can aid in achieving encapsulation and abstraction effectively. The Factory pattern, for instance, allows creation of objects without specifying the exact concrete class, promoting loose coupling. The Strategy pattern lets you encapsulate different algorithms and allows selection at runtime.
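The two patterns can be combined in a small sketch. The discount domain, the tier names, and the percentages are hypothetical. `DiscountStrategy` is the Strategy, and `DiscountFactory` is a simple static factory method, so callers never name a concrete class.

```java
import java.util.Locale;

/** Strategy: one interchangeable algorithm per implementation. */
interface DiscountStrategy {
    long apply(long priceCents);
}

final class NoDiscount implements DiscountStrategy {
    @Override
    public long apply(long priceCents) {
        return priceCents;
    }
}

final class PercentageDiscount implements DiscountStrategy {
    private final int percent;

    PercentageDiscount(int percent) {
        this.percent = percent;
    }

    @Override
    public long apply(long priceCents) {
        return priceCents - priceCents * percent / 100;
    }
}

/** Factory: the only place that knows which concrete strategy belongs to which tier. */
final class DiscountFactory {
    private DiscountFactory() {}

    static DiscountStrategy forCustomerTier(String tier) {
        switch (tier.toLowerCase(Locale.ROOT)) {
            case "gold":
                return new PercentageDiscount(20);
            case "silver":
                return new PercentageDiscount(10);
            default:
                return new NoDiscount();
        }
    }
}

final class Checkout {
    private Checkout() {}

    /** The strategy is chosen at runtime and passed in; Checkout does not branch on tiers. */
    static long total(long priceCents, DiscountStrategy discount) {
        return discount.apply(priceCents);
    }
}
```

The remaining `switch` is confined to the factory, so adding a tier touches one method instead of every place that calculates a price.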

Abstraction Techniques

| Abstraction Technique | Description | Example |
|---|---|---|
| Interface | Defines a contract that classes must implement, specifying methods without providing implementations. | `Drawable` interface defining `draw()` method for different shapes. |
| Abstract Class | A class that cannot be instantiated directly, providing a common structure and behavior for subclasses. | `Shape` abstract class with `area()` method. Subclasses implement the calculation. |
| Information Hiding | Hiding internal implementation details from external components. | `BankAccount` class with private balance and public methods like `deposit` and `withdraw`. |

Testing and Validation

Refactoring a “big ball of mud” system necessitates a rigorous approach to testing to ensure that the changes do not introduce unintended consequences. Thorough testing is critical for maintaining the system’s functionality and stability throughout the refactoring process. A robust testing strategy is crucial to mitigate risks and build confidence in the revised architecture. The testing strategy must adapt to the evolving nature of the refactoring process.

Each step of modularization, encapsulation, and decomposition must be validated to confirm that the system continues to function as expected. This approach ensures that the refactoring does not compromise existing functionalities or introduce new bugs.

Significance of Testing During Refactoring

Testing during refactoring is essential for verifying that modifications do not break existing functionality. It provides confidence that the refactoring process maintains the system’s integrity and stability. Testing is a vital process to prevent regressions and ensure the system remains reliable and functional after refactoring. Comprehensive testing activities should be planned and executed alongside each refactoring step to verify that the desired changes have been successfully implemented without causing unintended side effects.

Testing Strategies for a “Big Ball of Mud” System

Initial testing strategies for a “big ball of mud” system should prioritize comprehensive functional testing, including but not limited to smoke tests and regression tests. These tests should cover all critical functionalities to ensure that the core processes are unaffected by the changes. The goal is to identify any immediate failures and deviations from the expected system behavior. This approach is essential for validating that the modifications do not introduce inconsistencies or errors.

Designing Unit, Integration, and End-to-End Tests

Unit tests isolate individual components or modules, verifying their functionality in isolation. This strategy ensures that each module operates correctly without external dependencies. Integration tests validate interactions between different modules. These tests ensure that the modules work together harmoniously to achieve the intended outcomes. End-to-end tests, which simulate real-world scenarios, provide a comprehensive validation of the entire system’s flow, from initial input to final output.

Together, the three levels check the system’s behavior from individual modules up to complete user scenarios, although only for the cases the tests actually cover.

Managing Regression Risks During Refactoring

A structured approach to managing regression risks is essential during refactoring. This includes a comprehensive test suite covering various scenarios. Regular regression testing, ideally automated, should be executed after each refactoring step to identify any newly introduced issues. This proactive approach is key to preventing the introduction of regressions.
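One lightweight way to automate such a check is to run the legacy and refactored implementations side by side over a range of inputs and report any disagreement. The sketch below uses plain Java rather than a test framework so that it stays self-contained. The `RegressionCheck` helper and the shipping-price logic are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntUnaryOperator;

final class RegressionCheck {
    private RegressionCheck() {}

    /** Returns every input in [from, to] for which the two implementations disagree. */
    static List<Integer> mismatches(IntUnaryOperator legacy, IntUnaryOperator refactored,
                                    int from, int to) {
        List<Integer> differing = new ArrayList<>();
        for (int input = from; input <= to; input++) {
            if (legacy.applyAsInt(input) != refactored.applyAsInt(input)) {
                differing.add(input);
            }
        }
        return differing;
    }
}

final class Pricing {
    private Pricing() {}

    /** Hypothetical legacy logic with nested conditionals. */
    static int legacyShippingCents(int weightKg) {
        int cost;
        if (weightKg <= 1) {
            cost = 500;
        } else {
            if (weightKg <= 5) {
                cost = 500 + (weightKg - 1) * 100;
            } else {
                cost = 900 + (weightKg - 5) * 50;
            }
        }
        return cost;
    }

    /** Refactored version with guard clauses; it must behave identically. */
    static int refactoredShippingCents(int weightKg) {
        if (weightKg <= 1) {
            return 500;
        }
        if (weightKg <= 5) {
            return 500 + (weightKg - 1) * 100;
        }
        return 900 + (weightKg - 5) * 50;
    }
}
```

Calling `RegressionCheck.mismatches(Pricing::legacyShippingCents, Pricing::refactoredShippingCents, 0, 50)` returns an empty list when the refactoring preserved behaviour over that range. In practice the same comparison would usually live in a framework test that runs after every refactoring step.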

Testing Tools and Frameworks

Numerous testing tools and frameworks are available to verify changes. JUnit, pytest, and Mocha are popular frameworks for unit testing, providing a structured approach for defining and executing tests. Selenium and Cypress are examples of frameworks used for end-to-end testing. These tools offer various features for managing test cases, reporting results, and automating the testing process. Automated testing frameworks significantly reduce the time and effort needed to verify the changes, thereby enhancing efficiency and accuracy.

Version Control and Collaboration

Refactoring a “Big Ball of Mud” architecture requires meticulous planning and execution. Version control systems are crucial for tracking changes, managing different versions of the codebase, and facilitating collaboration among developers. Effective use of version control significantly reduces the risk of introducing errors during refactoring and enables easy rollback if necessary. Version control provides a historical record of every modification made to the code, enabling developers to revert to previous versions if needed.

This historical record is essential during the refactoring process, allowing for the identification of potential issues and enabling the restoration of prior functionality if necessary.

Role of Version Control During Refactoring

Version control systems, such as Git, play a critical role in managing the refactoring process. They provide a shared repository for the codebase (in Git’s case, one that every developer also holds as a full local copy), allowing multiple developers to work on different parts of the project simultaneously. This collaborative environment is essential for efficient and controlled refactoring. Furthermore, the ability to track changes meticulously and revert to previous states helps to mitigate potential errors and ensure the stability of the system throughout the refactoring effort.

Utilizing Version Control Systems Effectively

Effective use of version control involves adhering to best practices. This includes creating meaningful commit messages that clearly describe the changes made, committing frequently to capture progress, and utilizing branching strategies to isolate changes. The use of descriptive commit messages ensures that the history of the codebase is easily understandable and helps in tracing the evolution of the code.

Branching Strategies for Refactoring

Branching strategies are vital for isolating refactoring efforts. One common strategy is the feature branch approach. Developers create a new branch for each refactoring task. Once the refactoring is complete and tested, the changes are merged back into the main branch. This strategy prevents the main branch from being disrupted by ongoing refactoring activities, promoting stability and preventing conflicts. Another effective approach is the Gitflow branching model, which divides the project into specific branches for development, releases, and hotfixes.

This approach is particularly useful in larger projects or those with established release cycles. It provides structure and predictability to the refactoring process.

Clear Commit Messages

Clear and concise commit messages are essential for maintaining a readable and understandable commit history. They should describe the changes made, the rationale behind the changes, and any potential impact. Well-documented commit messages facilitate collaboration, provide context for future changes, and make it easier to understand the evolution of the codebase.

“A good commit message should be concise, informative, and descriptive, detailing the changes made and the rationale behind them.”

Examples of effective commit messages include:

- Refactored user registration form to improve UX and reduce redundancy.

- Implemented new database schema for improved performance and scalability.

- Fixed bug in authentication process caused by incorrect password validation.

Tools for Collaborative Refactoring

Several tools can aid in collaborative refactoring efforts. These tools often provide features for code review, conflict resolution, and project management. Utilizing these tools can streamline the process and improve communication among team members.

- GitKraken: A GUI client for Git that simplifies branching, merging, and other Git operations. Its visual representation of the code history helps in understanding the evolution of the codebase.

- GitHub: A platform for hosting Git repositories and facilitating collaboration. Its pull request system allows for code review and conflict resolution.

- Bitbucket: A similar platform to GitHub, offering similar features for hosting and managing Git repositories, code reviews, and collaborative development.

- SourceTree: A GUI client for Git that provides a user-friendly interface for managing Git repositories. Its visual representation of branches and commits makes it easy to navigate the codebase history.

Documentation and Communication

Refactoring a “Big Ball of Mud” system is a complex undertaking. Effective documentation and clear communication are critical to the success of the project. This process involves not only technical documentation but also transparent communication with stakeholders to ensure everyone understands the changes and their implications. This section focuses on strategies for documenting refactoring decisions, maintaining communication, and creating user-friendly documentation.

Importance of Documentation

Thorough documentation of the refactoring process is essential for several reasons. It provides a clear record of the changes made, the rationale behind those changes, and the impact on the system. This detailed record helps maintain the system’s integrity and allows future developers to understand and maintain the codebase. Furthermore, it helps in tracking progress, identifying potential issues early on, and facilitating knowledge transfer within the team.

Refactoring Decision Documentation Template

A well-structured template facilitates the documentation of refactoring decisions. This template should include:

- Refactoring ID: A unique identifier for the refactoring activity.

- Date: The date when the refactoring was performed.

- Developer(s): The names of the developers involved.

- Original Code Snippet: A clear representation of the original problematic code segment.

- Refactored Code Snippet: The improved code segment after the refactoring.

- Rationale: A concise explanation of the reasons for the refactoring. This should include a description of the problem addressed, the improvements achieved, and the specific design principles followed.

- Impact Assessment: A summary of how the refactoring affects other parts of the system. This might include changes to API calls, database interactions, or external dependencies.

- Testing Results: A report of the testing performed to verify the correctness of the refactoring. This should include the tests run, expected outcomes, and actual results.
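
If the team prefers to keep these records in a machine-readable form, the template can be sketched as a small data structure. The following is a minimal illustration only: the class name `RefactoringRecord`, the field names, the `RF-001` ID scheme, and all sample values are assumptions made for this example, not a prescribed format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class RefactoringRecord:
    """One entry in a refactoring log, mirroring the template fields above."""
    refactoring_id: str
    performed_on: date
    developers: list[str]
    original_snippet: str
    refactored_snippet: str
    rationale: str
    impact_assessment: str
    testing_results: str

    def summary(self) -> str:
        """Render a short plain-text summary for a changelog or wiki page."""
        lines = [
            f"{self.refactoring_id} ({self.performed_on.isoformat()})",
            f"Developers: {', '.join(self.developers)}",
            f"Rationale: {self.rationale}",
            f"Impact: {self.impact_assessment}",
            f"Testing: {self.testing_results}",
        ]
        return "\n".join(lines)


# Hypothetical sample entry; every value here is invented for illustration.
record = RefactoringRecord(
    refactoring_id="RF-001",
    performed_on=date(2024, 1, 15),
    developers=["A. Developer"],
    original_snippet="total = price * qty * 1.2",
    refactored_snippet="total = apply_tax(price * qty)",
    rationale="Extracted the tax calculation so it can be tested on its own.",
    impact_assessment="Only the invoice module calls the new function.",
    testing_results="Existing invoice tests pass unchanged.",
)
print(record.summary())
```

Keeping the record structured makes it straightforward to generate a changelog or to search past decisions by module or developer.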

Key Aspects to Document for Each Refactoring Step

The following table outlines the key aspects to document for each refactoring step. Detailed documentation helps in tracking progress and ensures that everyone understands the refactoring’s impact.

| Refactoring Step | Documentation Aspects |
|---|---|
| Identifying modules | Description of the identified modules, their responsibilities, and their relationships. |
| Encapsulating data | Specification of the data being encapsulated, the new access methods, and the reasons for encapsulation. |
| Implementing abstractions | Description of the abstractions created, their interfaces, and how they simplify interactions with the code. |
| Modifying dependencies | Description of the changes made to dependencies, how the new structure interacts with external systems, and the reasons for the modifications. |
| Adding tests | Detailed description of the tests added to verify the functionality of the refactored components. This should include test cases, expected results, and actual results. |

Communication Strategies

Effective communication is vital during refactoring. This ensures stakeholders are aware of the progress, potential issues, and the overall impact on the system. Regular progress updates, clear communication of potential risks, and open dialogue with stakeholders can mitigate potential issues and ensure everyone is on the same page.

User Manuals and API Documentation

User manuals and API documentation are essential for both internal and external users. User manuals should be clear and concise, providing step-by-step instructions for using the system. API documentation should clearly outline the available functions, their parameters, return values, and usage examples. This documentation is crucial for both current and future users, fostering understanding and minimizing errors.

Examples of good documentation include detailed code comments, API reference guides, and user manuals that clearly explain functionality.
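
As a small illustration of API documentation that covers parameters, return values, errors, and a usage example, consider the sketch below. The function `apply_discount` and its rules are hypothetical and exist only to show the documentation style.

```python
def apply_discount(total: float, percent: float) -> float:
    """Return the order total after applying a percentage discount.

    Parameters:
        total: Order total before the discount; must be non-negative.
        percent: Discount percentage between 0 and 100 inclusive.

    Returns:
        The discounted total, rounded to two decimal places.

    Raises:
        ValueError: If total is negative or percent is outside 0..100.

    Example:
        >>> apply_discount(200.0, 25)
        150.0
    """
    if total < 0:
        raise ValueError("total must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)
```

Because the usage example is written in doctest form, running `python -m doctest` on the file checks that the documented example still matches the code after each refactoring step.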

Choosing the Right Tools

Refactoring a “Big Ball of Mud” architecture requires careful selection of tools to support the process. Appropriate tools can streamline the identification of problematic code, facilitate safe modifications, and help preserve the integrity of the system throughout the process.

These tools range from simple IDE features to dedicated code analysis and refactoring platforms. Understanding the capabilities and limitations of each tool allows developers to make informed decisions and maximize their efficiency.

Static Analysis Tools

Static analysis tools examine the code without executing it. This allows for the identification of potential issues early in the development cycle, reducing the risk of introducing errors during the refactoring process. These tools excel at identifying potential bugs, security vulnerabilities, and style inconsistencies.

- FindBugs (now SpotBugs): This open-source tool identifies potential bugs in Java programs by analyzing compiled bytecode. It looks for common programming errors, such as possible null pointer dereferences, resource leaks, and incorrect usage of collections, and reports each issue with its location in the code. FindBugs itself is no longer actively maintained; SpotBugs is its successor and is the usual choice for new setups.

- PMD: PMD (often expanded as Programming Mistake Detector) is another open-source static analyzer. It focuses primarily on Java and also supports several other languages, such as JavaScript and Apex; its bundled copy-paste detector (CPD) covers additional languages, including C++. PMD detects code smells, which are code patterns that indicate potential problems or areas for improvement. Examples include complex conditional statements, excessive class coupling, and duplicated code. PMD helps developers identify these issues early, enabling them to refactor and improve code quality.

- SonarQube: SonarQube is a platform for continuous inspection of code quality. It provides a unified view of code quality issues across different projects, covering code complexity, duplication, and adherence to coding standards. The platform generates reports and metrics that can be used to track the progress of refactoring efforts over time.
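
To make the idea of examining code without executing it concrete, here is a deliberately tiny check written with Python's standard `ast` module. It flags functions whose bodies contain more statements than a chosen limit. This is a toy sketch and not how any of the tools above are implemented; the function name `find_long_functions`, the default limit, and the sample source are assumptions for illustration.

```python
import ast


def find_long_functions(source: str, max_statements: int = 10) -> list[tuple[str, int]]:
    """Return (function_name, statement_count) for functions over the limit."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Count all statements nested inside the function.
            # ast.walk also yields the function node itself, hence the -1.
            count = sum(isinstance(child, ast.stmt) for child in ast.walk(node)) - 1
            if count > max_statements:
                findings.append((node.name, count))
    return findings


# Hypothetical source text; it is parsed, never executed.
SAMPLE = """
def short(x):
    return x + 1

def long(x):
    a = x
    b = a
    c = b
    if c:
        c += 1
    return c
"""

print(find_long_functions(SAMPLE, max_statements=3))
```

Real analyzers apply hundreds of such rules and track data flow as well, but the principle is the same: the source is parsed and inspected, never run.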

Code Analysis Tools

These tools delve deeper into the code structure to identify potential issues and areas that need attention during the refactoring process. They can be invaluable in finding hidden complexities, performance bottlenecks, and areas of potential vulnerability.

- Code Climate: Code Climate provides insights into code quality, including code complexity, duplication, and potential bugs. It relies mainly on static analysis and can combine the results with test coverage data to give a broader picture of the codebase’s health. It offers suggestions and recommendations for improvements.

- DeepSource: DeepSource is a cloud-based tool for analyzing codebases and providing recommendations for improvement. It uses static analysis to provide feedback on code quality, security, and maintainability. DeepSource is useful for detecting potential bugs and vulnerabilities and for improving code style.

Refactoring Tools

Dedicated refactoring tools provide a structured approach to modifying code. They support the automated application of refactoring patterns, ensuring that changes are made safely and consistently. These tools help prevent accidental errors and maintain code consistency throughout the refactoring process.

- Eclipse Refactoring Support: Eclipse provides built-in refactoring capabilities through its Refactor menu, allowing developers to apply common refactoring patterns within the IDE. Most refactorings offer a preview of the resulting changes before they are applied, which makes it easier to review their effect across the codebase.

- IntelliJ IDEA Refactoring Tools: IntelliJ IDEA offers a comprehensive set of refactoring tools. These tools are integrated into the IDE, making them easily accessible and enabling seamless application of refactoring patterns. IntelliJ IDEA offers a variety of refactorings, such as renaming variables, extracting methods, and moving classes.

IDE Features

Integrated Development Environments (IDEs) offer a plethora of features that support refactoring efforts. These features streamline the process, enhancing developer productivity and reducing the risk of introducing errors. Using these features can lead to a smoother and more efficient refactoring process.

- Automated Code Completion: IDEs provide code completion features, which help developers modify code quickly and reduce typos and misspelled identifiers while applying changes.

- Search and Replace: IDEs offer search and replace functionality, which helps locate and modify code elements efficiently. Because it operates on text rather than on program structure, it is best reserved for changes that the IDE’s semantic refactorings (such as rename) cannot handle, and the results should be reviewed before committing.

- Debugging Tools: Debugging tools in IDEs allow developers to identify and fix errors during the refactoring process. This process is important in preventing unintended consequences of refactoring and ensures the integrity of the codebase.

Handling Dependencies

Refactoring a “big ball of mud” architecture often requires careful consideration of dependencies. Dependencies can be intricate and intertwined, impacting multiple parts of the system. Effective dependency management during refactoring is crucial to ensure stability and prevent unintended consequences. Strategies for identifying, resolving, and managing these dependencies are key to a successful transition.

Dependency Management Strategies

Careful planning and execution are vital when managing dependencies during refactoring. This involves identifying all affected areas and anticipating potential ripple effects. A structured approach minimizes risks and ensures a smoother transition.

Identifying and Resolving Dependencies

Dependencies can be direct or indirect, and manifest as calls to functions, classes, or modules. Thorough code analysis is crucial to uncover all dependencies. This involves using tools and techniques to trace the flow of data and control within the system. Analyzing the codebase’s structure, including class diagrams and function calls, can illuminate these connections. Once identified, resolving dependencies often involves redesigning interfaces, creating new modules, or altering existing ones to accommodate changes.

Careful planning and thorough testing are crucial to prevent introducing new bugs or instability.

Impact of Refactoring on Dependencies

The following table illustrates the potential impact of refactoring on different dependency types.

| Dependency Type | Potential Impact | Mitigation Strategy |
|---|---|---|
| Internal Dependencies (within the same module) | Relatively straightforward changes. Potentially minimal impact. | Careful testing and verification to ensure the refactored module still functions correctly with internal dependencies. |
| External Dependencies (on other modules or external systems) | Can be complex and require careful consideration. Changes to external dependencies might necessitate adjustments to the affected modules. | Detailed documentation of external dependencies. Careful testing and validation to ensure compatibility with the external systems. |
| Third-party Dependencies | Modifications might require adjustments to the project’s configuration or setup. New versions of the library could be necessary. | Careful review of the third-party library’s documentation. Using dependency management tools (like package managers) to streamline updates and avoid conflicts. |

Handling External Dependencies During Refactoring

External dependencies, such as database connections, API calls, or file systems, require special consideration during refactoring. A crucial step is to document all external dependencies meticulously. This includes understanding the expected input and output formats, error handling mechanisms, and any constraints imposed by the external systems. Creating mocks or stubs for external dependencies allows the refactored code to be tested in isolation, reducing the risk of introducing bugs during integration.

Careful attention to version compatibility and API changes is also important.
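
The stub approach can be sketched as follows. The code under refactoring depends on a narrow interface rather than on the real service, so a test can substitute a fake that records calls. All names here (`PaymentGateway`, `FakeGateway`, `checkout`) are hypothetical and chosen only for illustration.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """The narrow interface the refactored code depends on."""

    def charge(self, customer_id: str, amount_cents: int) -> bool: ...


class FakeGateway:
    """Test double that records calls instead of contacting a real service."""

    def __init__(self, succeed: bool = True) -> None:
        self.succeed = succeed
        self.calls: list[tuple[str, int]] = []

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        self.calls.append((customer_id, amount_cents))
        return self.succeed


def checkout(gateway: PaymentGateway, customer_id: str, amount_cents: int) -> str:
    """Refactored logic: receives its external dependency as a parameter."""
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    return "paid" if gateway.charge(customer_id, amount_cents) else "declined"


# Exercise the logic in isolation, with no network or database involved.
fake = FakeGateway()
status = checkout(fake, "c-1", 500)
print(status, fake.calls)
```

Passing the dependency in as a parameter is the design choice that makes this possible; code that constructs its own database connection or HTTP client internally has to be opened up in this way before it can be tested in isolation.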

Refactoring Dependent Modules

Refactoring dependent modules requires a phased approach. First, analyze the dependent modules to understand how they interact with the refactored module. Next, design changes to the dependent modules to accommodate the new interfaces or functionalities. Implement the changes and thoroughly test the dependent modules in isolation and in integration with the refactored module. This iterative process ensures stability and minimizes the risk of introducing errors.

Careful planning and clear communication are vital to ensure a smooth transition.
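
One possible way to support such a phased migration is to keep the old entry point alive as a thin wrapper around the new interface, so dependent modules can be moved over one at a time. The sketch below assumes a hypothetical legacy function `calc_total` being replaced by `calculate_order_total`; neither name comes from a real codebase.

```python
import warnings


def calculate_order_total(items: list[tuple[str, float, int]]) -> float:
    """New interface: items are (name, unit_price, quantity) tuples."""
    return sum(price * qty for _name, price, qty in items)


def calc_total(prices: list[float]) -> float:
    """Legacy interface, kept as a shim while dependent modules migrate."""
    warnings.warn(
        "calc_total is deprecated; use calculate_order_total",
        DeprecationWarning,
        stacklevel=2,
    )
    # Adapt the old argument shape to the new one: quantity 1, no item name.
    return calculate_order_total([("unknown", price, 1) for price in prices])


# Old call sites keep working, while new code uses the richer interface.
print(calculate_order_total([("widget", 2.5, 4)]))
```

The deprecation warning makes remaining callers visible in test runs and logs, and the shim can be deleted once no warnings are reported.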

Iterative Refinement

Refactoring a “big ball of mud” architecture is rarely a one-time event. Instead, it’s a process that requires careful consideration, methodical steps, and continuous evaluation. Iterative refinement allows for incremental improvements, minimizing disruption and maximizing the likelihood of success. This approach allows developers to focus on manageable portions of the codebase, ensuring the system’s functionality remains intact throughout the process.

Iterative refinement is crucial because it breaks down a potentially overwhelming task into smaller, more digestible steps. This strategy enables developers to address specific areas of concern, identify and resolve issues systematically, and gradually transform the codebase into a more maintainable and robust structure. Working iteratively also limits the scope of each change, so unforeseen consequences surface early and are easier to trace.

Framework for Iterative Refactoring Steps

A structured approach to refactoring is essential. This framework outlines the key steps for each iteration:

- Identify a specific area for improvement: Select a module, class, or function that exhibits problematic characteristics, such as high coupling, poor cohesion, or excessive complexity. Clearly define the desired outcome for this specific area. This focused approach avoids overwhelming the development team and ensures measurable progress.

- Plan the refactoring steps: Decompose the selected area into smaller, manageable tasks. Document the intended changes and their anticipated impact on the existing functionality. This proactive planning phase allows for thorough assessment and prevents unexpected issues during the implementation phase.

- Implement the changes: Carefully implement the planned refactoring steps, ensuring adherence to the planned changes. This involves applying modularization, encapsulation, and other techniques to improve code structure and readability. Thorough code reviews at this stage are vital.

- Test and validate: Rigorously test the modified code to ensure that the changes haven’t introduced bugs or broken existing functionality. Employ unit tests, integration tests, and potentially even user acceptance tests to verify the refactoring’s efficacy. This verification is essential to maintain the system’s functionality throughout the refactoring process.

- Evaluate the results: Analyze the impact of the refactoring iteration on the overall system. Assess if the planned improvements were achieved and whether any unexpected issues arose. This evaluation allows for adjustments and improvements in subsequent iterations.

Tracking Progress During Iterative Refactoring

Tracking progress is vital for managing expectations and ensuring that the refactoring process remains on track.

| Iteration Number | Area of Improvement | Planned Changes | Actual Changes | Test Results | Evaluation |
|---|---|---|---|---|---|
| 1 | Order Processing Module | Extract Order Processing Class | Extracted Order Processing Class; added order validation | All unit tests passed; no regressions | Successful; minor adjustments required for database integration |
| 2 | Customer Data Management | Implement data validation rules | Implemented data validation rules; enhanced error handling | All tests passed; improved error reporting | Successful; enhanced data integrity |

Assessing Refactoring Iteration Success

The success of a refactoring iteration is not determined solely by the absence of errors. It is judged by the following factors:

- Functionality Preservation: Verify that the refactored code maintains the system’s existing functionality. Any changes must not introduce bugs or break existing features.

- Code Quality Improvement: Evaluate the refactoring’s impact on code readability, maintainability, and testability. Measure metrics like code complexity and coupling to assess improvements.

- Performance Impact: Assess the refactoring’s impact on the system’s performance. Measure metrics like response time and resource utilization before and after the change to ensure that performance hasn’t been negatively affected.
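
As an illustration of measuring a code quality metric before and after an iteration, the sketch below computes a rough complexity score by counting branching constructs with Python's `ast` module. This approximation is an assumption made for the example and will not match the figures reported by tools such as SonarQube; the two versions of the `shipping` function are hypothetical.

```python
import ast

# Constructs counted as decision points in this rough approximation.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp, ast.IfExp)


def complexity(source: str) -> int:
    """Rough cyclomatic complexity: 1 plus the number of branching constructs."""
    return 1 + sum(isinstance(node, BRANCH_NODES) for node in ast.walk(ast.parse(source)))


BEFORE = """
def shipping(country, weight):
    if country == "US":
        if weight > 10:
            return 20
        return 5
    elif country == "CA":
        if weight > 10:
            return 30
        return 8
    return 50
"""

AFTER = """
RATES = {"US": (5, 20), "CA": (8, 30)}

def shipping(country, weight):
    light, heavy = RATES.get(country, (50, 50))
    return heavy if weight > 10 else light
"""

print(complexity(BEFORE), complexity(AFTER))
```

Recording such numbers per iteration gives the evaluation step something objective to compare, alongside the test results.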

Maintaining System Functionality During Refactoring

Maintaining existing functionality is paramount during the refactoring process. This can be achieved through these strategies:

- Comprehensive Testing: Employ a robust testing strategy that includes unit tests, integration tests, and potentially user acceptance tests. This strategy helps ensure that refactoring does not introduce defects.

- Incremental Implementation: Implement changes in small, manageable steps. This minimizes the risk of introducing errors and allows for easier debugging.

- Version Control: Utilize a version control system to track changes and allow for rollback to previous versions if necessary. This enables controlled and auditable modifications.
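
A common way to combine these strategies is a characterization test: before changing anything, record what the existing code returns for a range of inputs, then check that the refactored code returns exactly the same results. The sketch below assumes a hypothetical pricing function; `legacy_price`, `refactored_price`, and the input grid are invented for illustration.

```python
def legacy_price(quantity: int, unit_price: float, is_member: bool) -> float:
    """Stand-in for tangled legacy logic whose behavior must be preserved."""
    total = quantity * unit_price
    if is_member:
        total = total * 0.9
    if quantity >= 10:
        total = total - 5
    return round(total, 2)


def refactored_price(quantity: int, unit_price: float, is_member: bool) -> float:
    """Restructured version that names each pricing rule explicitly."""
    member_factor = 0.9 if is_member else 1.0
    bulk_rebate = 5 if quantity >= 10 else 0
    return round(quantity * unit_price * member_factor - bulk_rebate, 2)


def characterize(func, cases):
    """Record the function's output for every input case."""
    return {case: func(*case) for case in cases}


# Input grid chosen to include the boundary around the bulk threshold.
CASES = [
    (quantity, unit_price, is_member)
    for quantity in (1, 9, 10, 25)
    for unit_price in (0.99, 10.0)
    for is_member in (True, False)
]

baseline = characterize(legacy_price, CASES)
assert characterize(refactored_price, CASES) == baseline
```

The test does not judge whether the legacy behavior is correct; it only pins it down, which is what allows the structure to change safely in small steps.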

Final Review

Successfully refactoring a “big ball of mud” architecture requires a methodical approach, encompassing careful assessment, strategic planning, and rigorous testing. By following the steps outlined in this guide, developers can transform complex systems into clean, maintainable, and scalable applications, ultimately enhancing development productivity and system longevity.

This guide empowers developers to navigate the complexities of legacy codebases and build more resilient, future-proof software. The practical examples and detailed explanations ensure a deep understanding of the refactoring process, allowing you to apply these techniques confidently and effectively.

Key Questions Answered

What are some common causes of a “big ball of mud” architecture?

Rapid development cycles, insufficient planning, lack of clear design principles, and inadequate code reviews are among the common causes.

How can I estimate the time needed for refactoring a large “big ball of mud” system?

Estimating the time involves assessing the system’s complexity, identifying critical dependencies, and estimating the effort required for each refactoring step. A detailed task breakdown and a list of potential roadblocks make the estimate more reliable.

What are some tools to help identify code smells in a “big ball of mud” architecture?

Static analysis tools, such as SonarQube, PMD, and SpotBugs (the successor to FindBugs), can help detect code smells and potential issues within the system and point refactoring efforts at the most problematic areas.

How can I communicate refactoring plans and progress to stakeholders?

Clear communication with stakeholders is essential. Regular updates, presentations, and documentation of the refactoring process can maintain transparency and keep stakeholders informed.