Centralized logging offers a powerful solution for managing the voluminous data generated by distributed systems. This comprehensive guide details the crucial steps involved in establishing a robust centralized logging infrastructure, enabling efficient monitoring, troubleshooting, and performance optimization. From understanding fundamental concepts to implementing practical solutions, this document provides a practical roadmap for success.

This guide dives deep into the intricacies of centralized logging, examining various log data formats, collection strategies, storage options, and processing techniques. Crucially, security considerations and troubleshooting strategies are also addressed, ensuring a complete understanding of the implementation and maintenance process.

Introduction to Centralized Logging

Centralized logging in distributed systems is a crucial practice for monitoring and troubleshooting. It involves collecting log data from various components of a distributed application and storing it in a single, accessible location. This centralized repository simplifies the process of analyzing system performance, identifying issues, and ensuring compliance. A well-designed centralized logging system provides a comprehensive view of the entire application’s behavior, enabling quicker responses to problems and facilitating proactive maintenance.

Decentralized logging, on the other hand, stores logs on individual nodes or components. This approach can be challenging to manage and analyze, as it requires coordinating data across multiple sources. Centralized logging overcomes this by providing a unified view of the system’s activity, making it significantly easier to diagnose issues, track performance trends, and meet regulatory requirements.

Definition of Centralized Logging

Centralized logging for distributed systems is the aggregation of log data from various components into a single repository. This unified view allows for efficient analysis and troubleshooting, enhancing observability and improving operational efficiency.

Benefits of Centralized Logging

Centralized logging offers several advantages over decentralized approaches. These include improved troubleshooting, enhanced observability, and increased operational efficiency. Centralized logging simplifies issue resolution by providing a consolidated view of events across the entire system. This unified perspective facilitates quicker identification of root causes and enables faster resolution. Enhanced observability allows for proactive monitoring of system performance, enabling early detection of potential issues.

Furthermore, centralized logging streamlines operational efficiency by reducing the complexity of managing and analyzing logs across multiple components.

Key Components of a Centralized Logging Setup

A robust centralized logging system comprises several key components, each playing a critical role in the overall architecture. These components work together to capture, process, and store log data from various sources.

- Log Collectors: These components collect log data from the distributed nodes or services. They typically follow either an agent-based or an agentless approach, depending on the system’s architecture. With agents, a small process runs on each node and forwards that node’s logs to a central collector; with agentless approaches, applications or devices send logs directly over a standard channel such as syslog or HTTP, or the collector pulls them through an API. Each method has its own trade-offs for security and management.

- Log Transport Mechanism: This component carries the collected logs from the collectors to the central logging repository. Common choices include message brokers such as Apache Kafka or RabbitMQ, direct network protocols such as syslog or HTTP, or custom solutions, depending on the specific requirements and infrastructure.

- Centralized Logging Repository: This component stores the collected log data. Common options include specialized log management systems (like ELK Stack, Splunk, or Graylog), databases, or cloud-based storage solutions. The choice of repository should align with the volume of data, storage requirements, and the desired analysis capabilities.

- Log Processing/Analysis Tools: These tools are used to analyze and interpret the centralized log data. They can be integrated with visualization dashboards or reporting mechanisms for effective monitoring and analysis. Examples include Kibana, Grafana, or custom reporting tools.

Architecture Diagram

The following diagram illustrates a simple architecture for a centralized logging system in a distributed application.

```
+-----------------+     +----------------------+     +----------------+
| Node 1 (Agent)  | --> | Log Collector 1      | --> |                |
+-----------------+     +----------------------+     |  Central Log   |
| Node 2 (Agent)  | --> | Log Collector 2      | --> |  Repository    |
+-----------------+     +----------------------+     |                |
| ... Other Nodes | --> | ... Other Collectors | --> |                |
+-----------------+     +----------------------+     +----------------+
                                                             |
                                                             v
                                                     +----------------+
                                                     |    Analysis    |
                                                     +----------------+
                                                             |
                                                             v
                                               +---------------------------+
                                               | Reporting/Visualization   |
                                               | Tools (e.g., Kibana,      |
                                               | Grafana)                  |
                                               +---------------------------+
```

This diagram depicts the flow of log data from distributed nodes to a central logging repository.

Log collectors capture the logs and transmit them to the central repository, which stores the data for later analysis and troubleshooting. Visualization and reporting tools then turn this data into insights about the application’s behavior.
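The same flow can be sketched in a few lines of Python. This is a toy model under stated assumptions: an in-memory `queue.Queue` stands in for the transport (such as Kafka or RabbitMQ), a plain list stands in for the central repository, and the names `collect`, `drain`, and `errors` are hypothetical.

```python
import queue
from dataclasses import dataclass

@dataclass
class LogEvent:
    node: str
    level: str
    message: str

def collect(node: str, lines: list[str], transport: queue.Queue) -> None:
    """Collector: turn raw 'LEVEL message' lines into events and ship them."""
    for line in lines:
        level, _, message = line.partition(" ")
        transport.put(LogEvent(node, level, message))

def drain(transport: queue.Queue, repository: list) -> None:
    """Central side: move every event currently in transit into the repository."""
    while True:
        try:
            repository.append(transport.get_nowait())
        except queue.Empty:
            return

def errors(repository: list) -> list:
    """Analysis: one query answers a question about all nodes at once."""
    return [e for e in repository if e.level == "ERROR"]

transport: queue.Queue = queue.Queue()
repository: list = []
collect("node-1", ["INFO started", "ERROR disk full"], transport)
collect("node-2", ["INFO started", "WARNING slow response"], transport)
drain(transport, repository)
print([(e.node, e.message) for e in errors(repository)])  # [('node-1', 'disk full')]
```

In a real deployment each stage runs on separate machines, which is why the transport and the repository need to be durable services rather than in-process objects.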

Log Data Formats and Standards

Choosing the appropriate log data format is crucial for efficient analysis and effective troubleshooting in distributed systems. A well-structured log format facilitates quick identification of issues, trends, and patterns, enabling swift responses to system anomalies. The format selected should be easily parsed by log aggregation and analysis tools, allowing for automated data extraction and reporting. Different log data formats offer varying levels of flexibility and complexity, impacting the ease of analysis and the insights obtainable.

This section delves into common log formats, comparing their strengths and weaknesses, and outlining best practices for creating effective log messages.

Common Log Data Formats

Various formats are used for log data, each with its own advantages and disadvantages. Understanding these differences allows system administrators to select the format best suited for their needs. Common formats include plain text, JSON, and CSV.

- Plain Text: A simple, widely used format, typically consisting of timestamped, human-readable messages. It is easy to read and write, but because it lacks explicit structure, extracting specific fields usually requires format-specific parsing rules such as regular expressions. Examples include the access log formats used by web servers such as Apache and NGINX.

- JSON (JavaScript Object Notation): A structured format based on key-value pairs. JSON offers the benefit of rich data representation, enabling detailed information to be recorded in a machine-readable way. It is widely supported by analysis tools, and parsing tools can quickly extract relevant information. For example, JSON can be used to record user actions, system events, or metrics with clarity and detail.

- CSV (Comma-Separated Values): A simple format used for tabular data, consisting of rows and columns separated by commas. CSV is easy to generate and read but less flexible for complex data structures than JSON. It is well-suited for representing metrics or numerical data, which can be further processed and analyzed using spreadsheet applications or databases.
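The trade-offs are easiest to see on a single event. The following sketch renders one hypothetical event in all three formats using only Python’s standard library; the field names and values are illustrative assumptions.

```python
import csv
import io
import json

# One hypothetical event, rendered in each of the three formats above.
event = {
    "timestamp": "2024-05-01T12:00:00Z",
    "level": "ERROR",
    "service": "checkout",
    "message": "payment declined",
}

# Plain text: readable, but fields are only recoverable by convention or regex.
plain = f'{event["timestamp"]} {event["level"]} [{event["service"]}] {event["message"]}'

# JSON: every field is named, so tools can extract them without custom parsing.
as_json = json.dumps(event)

# CSV: compact and tabular; the column order must be agreed on separately (here via a header row).
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=list(event))
writer.writeheader()
writer.writerow(event)
as_csv = buf.getvalue()

print(plain)
print(as_json)
print(as_csv)
```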

Log Message Structure Best Practices

To maximize the value of log data, structured log messages are essential. A clear and consistent structure simplifies analysis and allows for efficient filtering and searching.

- Timestamp: Include a precise timestamp to facilitate correlation of events and track the sequence of actions. The timestamp should be in a consistent format, like ISO 8601.

- Log Level: Use log levels (e.g., DEBUG, INFO, WARNING, ERROR, CRITICAL) to categorize the importance of the log message. This allows for filtering and prioritizing alerts.

- Source Information: Clearly identify the source of the log message, including the application or component that generated it. This helps pinpoint the location of an issue.

- Contextual Data: Include relevant contextual information such as user IDs, transaction IDs, or request parameters. This enhances the understanding of the event and its impact.

- Structured Data: Use structured formats like JSON to record the details of an event as separate fields, making it easier to extract specific data points for analysis. Rather than embedding values in long free-text explanations, put them in named fields.
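As one way to apply these practices, the sketch below plugs a custom formatter into Python’s standard `logging` module so that each record becomes a single JSON line with an ISO 8601 UTC timestamp, a log level, the source (logger name), and contextual fields. The `JsonFormatter` class, the `context` convention for passing extra fields, and the field names are assumptions for illustration, not a standard schema.

```python
import json
import logging
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line (hypothetical field names)."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # ISO 8601 timestamp in UTC
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "source": record.name,  # logger name identifies the component
            "message": record.getMessage(),
        }
        # Contextual fields passed via extra={"context": {...}}
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)

handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("orders-service")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("order placed", extra={"context": {"user_id": "u-42", "transaction_id": "t-1001"}})
```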

Comparison of Log Formats

The choice of log format should align with the complexity of the data and the analysis requirements.

| Format | Advantages | Disadvantages |
|---|---|---|
| Plain Text | Simple, widely supported, easy to read | Lacks structure, difficult to extract specific data points, less suitable for complex data |
| JSON | Structured, machine-readable, easy to parse, supports complex data, well-suited for analysis | Can be verbose, requires parsing tools for analysis |
| CSV | Simple, easy to read and generate, well-suited for tabular data, easily imported into spreadsheets | Limited for complex data structures, not ideal for complex analyses |

Log Collection Strategies

Centralized logging in distributed systems necessitates efficient log collection mechanisms to aggregate logs from various sources. Effective log collection ensures that all relevant information is available for analysis and troubleshooting, facilitating the monitoring and management of the entire system. These strategies play a crucial role in ensuring data integrity, enabling timely identification of issues, and providing valuable insights into system performance and behavior.

Log Collection Methods

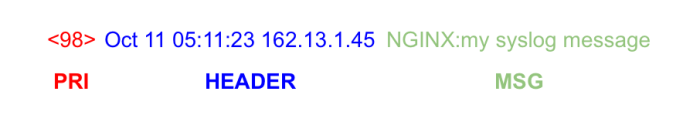

Various methods exist for collecting logs from distributed systems. Understanding the strengths and weaknesses of each method is essential for selecting the most suitable solution. Key methods include syslog, Fluentd, and Logstash.

- Syslog: A widely adopted standard protocol for exchanging log messages between systems. It offers simplicity and broad compatibility, making it a common choice for many applications. However, syslog’s structure can be rigid, limiting its adaptability to complex log formats. Its performance might not be optimal for very high-volume log ingestion.

- Fluentd: A powerful and flexible log collector designed for high-volume log ingestion. It supports various log formats and provides extensive filtering and transformation capabilities. Its architecture allows for real-time log processing and aggregation, making it a robust choice for modern distributed systems. Fluentd’s flexibility comes with a slightly steeper learning curve compared to simpler solutions.

- Logstash: A versatile log processing tool that excels at parsing and enriching log data. It supports complex transformations, data enrichment, and routing to storage backends such as Elasticsearch. Logstash’s strengths lie in its ability to manipulate and prepare log data for analysis and storage. However, it runs on the JVM and is comparatively resource-intensive, which can become a constraint in very high-volume environments.
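For a concrete view of the syslog option, the sketch below sends one message with the `SysLogHandler` from Python’s standard library. The local UDP socket is an assumption standing in for a real syslog daemon or a collector’s syslog input. The `<12>` prefix is the priority value, computed as facility × 8 + severity, where facility `user` is 1 and severity `warning` is 4, giving 1 × 8 + 4 = 12.

```python
import logging
import logging.handlers
import socket

# Stand-in "collector": a UDP socket on an ephemeral local port (assumption;
# a real deployment would point at rsyslog, syslog-ng, or a collector's syslog input).
collector = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
collector.bind(("127.0.0.1", 0))
collector.settimeout(2)
host, port = collector.getsockname()

# Application side: SysLogHandler sends the classic syslog wire format over UDP by default.
handler = logging.handlers.SysLogHandler(address=(host, port))
handler.setFormatter(logging.Formatter("myapp: %(message)s"))
logger = logging.getLogger("myapp")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.warning("disk almost full")

datagram, _ = collector.recvfrom(4096)
print(datagram)  # b'<12>myapp: disk almost full\x00'
handler.close()
collector.close()
```

The message carries little structure beyond the priority and free text, which illustrates why syslog is simple and broadly compatible but less suited to rich log formats.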

Performance Comparison

The performance characteristics of log collection tools vary significantly. Factors such as processing speed, scalability, and flexibility impact overall system efficiency.

| Tool | Processing Speed | Scalability | Flexibility |
|---|---|---|---|
| Syslog | Generally moderate | Relatively good for moderate volumes | Limited |
| Fluentd | High | Excellent, designed for high volume | High |
| Logstash | High | Good, but can be limited by complex pipelines | Very High |

Configuration Steps for Fluentd

Fluentd configuration involves defining input sources, filters, and output destinations. This structured approach ensures logs are collected, processed, and routed effectively.

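A minimal Fluentd configuration for receiving logs forwarded from multiple servers and shipping them to Elasticsearch might look like the following. It assumes the `fluent-plugin-elasticsearch` output plugin is installed and that applications send events tagged `my-application` (or `my-application.*`) carrying a JSON string in a `message` field:

```
# Accept events forwarded by agents (Fluentd or Fluent Bit) on other servers
<source>
  @type forward
  port 24224
  bind 0.0.0.0
</source>

# Parse the JSON string in the "message" field into top-level fields
<filter my-application.**>
  @type parser
  key_name message
  reserve_data true
  <parse>
    @type json
  </parse>
</filter>

# Write to Elasticsearch with a daily index: my-application-YYYY.MM.DD
<match my-application.**>
  @type elasticsearch
  host elasticsearch.example.com
  port 9200
  logstash_format true
  logstash_prefix my-application
</match>
```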

Ingesting Logs from Multiple Nodes

To ingest logs from multiple distributed nodes, the log collector needs to be configured to listen on the appropriate ports or utilize the correct protocols.

A strategy often employed involves using a central Fluentd instance that collects logs from multiple distributed application servers. This instance can then forward these logs to a central logging platform, such as Elasticsearch or Splunk, for storage and analysis.
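The fan-in pattern itself can be sketched with the standard library alone. This is not Fluentd’s forward protocol (which uses MessagePack); it assumes a hypothetical newline-delimited JSON format over TCP to show one central listener accepting events from several nodes concurrently and tagging each event with the address of its sender. The `ship` function plays the role of a node agent and is likewise hypothetical.

```python
import json
import socket
import socketserver
import threading
import time

received: list[dict] = []  # events collected from every node
lock = threading.Lock()

class NodeHandler(socketserver.StreamRequestHandler):
    """Reads newline-delimited JSON events from one connected node."""

    def handle(self) -> None:
        for raw in self.rfile:  # one event per line until the node disconnects
            event = json.loads(raw)
            event["peer"] = self.client_address[0]  # which host sent it
            with lock:
                received.append(event)

def ship(address, node: str, messages: list[str]) -> None:
    """Hypothetical node agent: forwards each message as one JSON line."""
    with socket.create_connection(address) as conn:
        for msg in messages:
            conn.sendall((json.dumps({"node": node, "message": msg}) + "\n").encode())

# Central collector listening on an ephemeral local port; one thread per connection.
server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), NodeHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

nodes = [
    threading.Thread(target=ship, args=(server.server_address, f"node-{i}", ["started", "ready"]))
    for i in range(3)
]
for t in nodes:
    t.start()
for t in nodes:
    t.join()

# Wait (bounded) until all 6 events have been read, then stop the collector.
deadline = time.monotonic() + 5
while time.monotonic() < deadline:
    with lock:
        if len(received) == 6:
            break
    time.sleep(0.01)
server.shutdown()
server.server_close()
print(sorted((e["node"], e["message"]) for e in received))
```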

Data Storage and Management

Centralized logging systems require robust storage solutions to handle the volume, velocity, and variety of log data generated by distributed systems. Effective storage choices significantly impact the performance and scalability of the entire logging infrastructure. Proper data retention policies are crucial for meeting compliance requirements, enabling analysis, and preventing data loss.

Storage Options for Log Data

Different storage options cater to varying needs in terms of cost, scalability, and performance. Databases, cloud storage, and specialized log management solutions are common choices. The optimal solution depends on factors such as the volume of log data, the desired query capabilities, and the budget constraints.

- Databases: Relational databases (like PostgreSQL or MySQL) can store log data structured in tables. This structure allows for efficient querying and analysis. However, they might not be as optimized for the sheer volume of log data generated by high-throughput systems.

- Cloud Storage: Cloud storage services (like Amazon S3, Google Cloud Storage, or Azure Blob Storage) offer scalable and cost-effective solutions for storing large volumes of log data. Their pay-as-you-go model allows flexibility in adjusting storage capacity based on demand. However, querying log data in cloud storage can sometimes be less efficient compared to dedicated database systems.

- Specialized Log Management Solutions: Platforms such as Splunk or the ELK stack (Elasticsearch, Logstash, and Kibana) are designed specifically for log management. They offer powerful indexing and querying capabilities optimized for high-volume log data, along with robust tools for real-time analysis and visualization.
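As a sketch of the relational option, the following uses Python’s built-in `sqlite3` module as a stand-in for a server database such as PostgreSQL or MySQL. The table layout, index, and sample rows are assumptions for illustration; the point is that structured columns make aggregate queries straightforward.

```python
import sqlite3

# In-memory SQLite stands in for PostgreSQL/MySQL; the schema is a hypothetical example.
db = sqlite3.connect(":memory:")
db.execute("""
    CREATE TABLE logs (
        ts      TEXT NOT NULL,  -- ISO 8601 timestamp
        level   TEXT NOT NULL,
        service TEXT NOT NULL,
        message TEXT NOT NULL
    )
""")
# An index on the columns most queries filter by keeps lookups fast as the table grows.
db.execute("CREATE INDEX idx_logs_level_ts ON logs (level, ts)")

rows = [
    ("2024-05-01T12:00:00Z", "INFO", "checkout", "order placed"),
    ("2024-05-01T12:00:05Z", "ERROR", "checkout", "payment declined"),
    ("2024-05-01T12:01:00Z", "ERROR", "search", "timeout"),
]
db.executemany("INSERT INTO logs VALUES (?, ?, ?, ?)", rows)

# Count errors per service since a given time (ISO 8601 strings sort chronologically).
errors_per_service = db.execute(
    "SELECT service, COUNT(*) FROM logs WHERE level = 'ERROR' AND ts >= ? "
    "GROUP BY service ORDER BY service",
    ("2024-05-01T12:00:00Z",),
).fetchall()
print(errors_per_service)  # [('checkout', 1), ('search', 1)]
```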

Performance and Scalability Comparison

The performance and scalability of different storage options vary significantly. Consider factors like query speed, data ingestion rate, and the ability to handle increasing volumes of data. For example, cloud storage can handle massive increases in data volume, but querying large datasets might take longer than using a database designed for structured data. Specialized log management systems often excel in both aspects, offering a balance between query efficiency and scalability.

Data Retention Policies

Data retention policies are essential for centralized logging systems. These policies dictate how long log data is retained and what happens to it after the retention period. Organizations must define retention periods based on regulatory compliance, business needs, and legal requirements. Robust policies ensure data is available for analysis when needed and is deleted in a controlled manner to meet data privacy and security standards.

Deleting data once it is no longer needed also reduces the amount of information that could be exposed in a breach and helps maintain compliance with regulations.
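A retention policy ultimately reduces to a rule that decides, per record, whether it is still inside its retention period. The sketch below assumes hypothetical per-category periods; real values come from regulatory, legal, and business requirements, and in practice the rule is usually enforced by the storage system (for example, through index lifecycle or bucket expiration settings) rather than by application code.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per log category.
RETENTION = {
    "audit": timedelta(days=365),
    "application": timedelta(days=30),
    "debug": timedelta(days=7),
}

def apply_retention(records, now):
    """Split records into (kept, expired) according to RETENTION.

    Each record is a dict with a 'category' and a timezone-aware 'ts'.
    Unknown categories fall back to the shortest period, favoring data
    minimization (an assumption, not a universal rule).
    """
    fallback = min(RETENTION.values())
    kept, expired = [], []
    for rec in records:
        limit = RETENTION.get(rec["category"], fallback)
        (kept if now - rec["ts"] <= limit else expired).append(rec)
    return kept, expired

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"category": "audit", "ts": now - timedelta(days=100)},
    {"category": "debug", "ts": now - timedelta(days=10)},
    {"category": "application", "ts": now - timedelta(days=5)},
]
kept, expired = apply_retention(records, now)
print([r["category"] for r in kept])     # ['audit', 'application']
print([r["category"] for r in expired])  # ['debug']
```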

Comparison Table of Storage Solutions

| Feature | Database (e.g., PostgreSQL) | Cloud Storage (e.g., S3) | Specialized Log Management (e.g., ELK) |
|---|---|---|---|
| Cost | No license fee for open-source databases such as PostgreSQL, but hardware, administration, and maintenance costs can be significant | Scalable, cost-effective pay-as-you-go model | Can be expensive depending on the features and scale |
| Scalability | Limited scalability compared to cloud storage or specialized solutions | Highly scalable, can handle massive data volumes | Highly scalable, optimized for high-volume log data |
| Performance (Querying) | Generally high performance for structured data | Lower querying performance for large datasets | Optimized for fast querying and analysis of log data |
| Ease of Use | Requires expertise in database management | Relatively easy to use, intuitive interfaces | Requires some technical knowledge, but offers advanced features |
| Data Analysis | Supports complex queries and data analysis | Limited query capabilities | Optimized for log analysis and visualization |

Log Processing and Analysis

Effective log analysis is crucial for diagnosing issues, optimizing performance, and understanding system behavior within distributed systems. A comprehensive approach involves processing and analyzing collected log data to extract meaningful insights. This section details the various tools and techniques used for this critical task.

Log Processing Tools and Techniques

Log processing tools and techniques facilitate the efficient handling and analysis of vast amounts of log data. This encompasses a range of methods, from simple log aggregation to complex filtering and transformation processes. The key aspect is to convert raw log data into actionable information.

- Log Aggregation: This involves collecting log data from multiple sources and consolidating it into a central repository. This centralized storage allows for a unified view of the entire system’s activities. Tools like Splunk and ELK stack (Elasticsearch, Logstash, Kibana) excel at this task, providing a consolidated view of events across various application components.

- Log Filtering: Filtering allows focusing on specific events or logs relevant to a particular issue or investigation. This is achieved using predefined criteria, enabling targeted analysis. For example, filtering by error messages or specific timestamps can significantly reduce the volume of data to be examined.

- Log Parsing: Log parsing transforms raw log data into a structured format, which makes analysis and querying more efficient. Tools like Logstash and custom scripts can be used to parse various log formats.

- Log Enrichment: Log enrichment adds context to log entries by incorporating data from other sources, such as system metrics or user information. This context enhances the understanding of events, enabling more accurate root cause analysis.
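Parsing, filtering, and enrichment can be chained into a single pass over the raw lines. The sketch below assumes a hypothetical plain-text line format and a hypothetical host inventory as the enrichment source; lines that do not match the format are skipped rather than guessed at.

```python
import re

# Hypothetical plain-text format: "<ISO timestamp> <LEVEL> <host> <message>"
LINE = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<host>\S+) (?P<message>.*)$")

# Enrichment source (assumed): an inventory mapping hosts to owning team and region.
INVENTORY = {
    "web-1": {"team": "storefront", "region": "eu-west"},
    "db-1": {"team": "data", "region": "eu-west"},
}

def process(lines, levels=("ERROR", "CRITICAL")):
    """Yield structured, enriched events whose level is in `levels`."""
    for line in lines:
        match = LINE.match(line)
        if match is None:  # parsing: skip lines that do not fit the format
            continue
        event = match.groupdict()
        if event["level"] not in levels:  # filtering
            continue
        event.update(INVENTORY.get(event["host"], {}))  # enrichment
        yield event

raw = [
    "2024-05-01T12:00:00Z INFO web-1 request served",
    "2024-05-01T12:00:02Z ERROR db-1 connection pool exhausted",
    "garbled line",
]
for event in process(raw):
    print(event)
```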

Querying and Analyzing Log Data

Effective querying and analysis of log data are essential for troubleshooting issues and understanding system behavior. This involves formulating queries to extract specific information from the log repository and subsequently analyzing the results to identify patterns and anomalies.

- Query Language Support: Log analysis tools often support specialized query languages (e.g., Splunk’s search language, Elasticsearch’s query DSL). These languages allow users to formulate complex queries to extract specific information, filter results, and perform aggregations.

- Pattern Recognition: Identifying patterns in log data is critical for detecting recurring issues or anomalies. Tools and techniques can automatically or manually identify patterns that might indicate problems, such as unusual spikes in error rates or specific sequences of events leading to failures.

- Trend Analysis: Trend analysis reveals long-term patterns in log data. This analysis can be used to anticipate future issues or optimize system performance over time. For example, tracking error rates over several weeks can reveal recurring problems or gradual degradation that warrant attention.
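A simple form of pattern recognition is to bucket events by minute, compute the error rate in each bucket, and flag buckets that stand out. The sketch below assumes ISO 8601 timestamps and uses a hypothetical rule (rate above three times the median rate, with a small absolute floor); production systems typically use more robust baselines.

```python
from collections import Counter
from statistics import median

def error_rate_by_minute(events):
    """Fraction of ERROR events per minute; 'ts' is an ISO 8601 string."""
    totals, errors = Counter(), Counter()
    for e in events:
        minute = e["ts"][:16]  # 'YYYY-MM-DDTHH:MM'
        totals[minute] += 1
        if e["level"] == "ERROR":
            errors[minute] += 1
    return {m: errors[m] / totals[m] for m in sorted(totals)}

def spikes(rates, factor=3.0, floor=0.05):
    """Minutes whose error rate exceeds factor x the median rate and an absolute floor."""
    threshold = max(floor, factor * median(rates.values()))
    return [m for m, r in rates.items() if r > threshold]

# Synthetic data: 10 events per minute, with a burst of errors at 12:03.
events = []
for minute, n_errors in [("00", 0), ("01", 1), ("02", 0), ("03", 6)]:
    for i in range(10):
        level = "ERROR" if i < n_errors else "INFO"
        events.append({"ts": f"2024-05-01T12:{minute}:{i:02d}Z", "level": level})

rates = error_rate_by_minute(events)
print(spikes(rates))  # ['2024-05-01T12:03']
```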

Log Data for Performance Monitoring and Optimization

Log data plays a vital role in performance monitoring and optimization. Analyzing logs allows for the identification of performance bottlenecks and the optimization of system components.

- Performance Metrics Extraction: Log files often contain performance metrics, such as response times, resource utilization, and request counts. Extracting these metrics from logs enables a comprehensive understanding of system performance.

- Identifying Bottlenecks: By correlating log data with performance metrics, bottlenecks in the system can be identified. Analyzing slow response times or high resource utilization reported in logs can help pinpoint the specific components causing performance issues.

- Optimization Strategies: Understanding the performance bottlenecks allows for the implementation of optimization strategies. This might involve code improvements, infrastructure adjustments, or database optimizations, ultimately leading to improved system performance.
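Extracting latency figures from logs and ranking endpoints by tail latency is a common first step toward finding bottlenecks. The sketch below assumes a hypothetical access-log line format and uses the nearest-rank percentile method; p95 is reported because averages tend to hide occasional very slow requests.

```python
import math
import re
from collections import defaultdict

# Hypothetical access-log line: "GET /checkout 200 843ms"
LINE = re.compile(r"^(?P<method>[A-Z]+) (?P<path>\S+) (?P<status>\d{3}) (?P<ms>\d+)ms$")

def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = math.ceil(p / 100 * len(sorted_values))
    return sorted_values[max(rank, 1) - 1]

def latency_report(lines):
    """Per-path request count, p50, and p95, slowest (by p95) first."""
    by_path = defaultdict(list)
    for line in lines:
        m = LINE.match(line)
        if m:
            by_path[m["path"]].append(int(m["ms"]))
    report = {}
    for path, values in by_path.items():
        values.sort()
        report[path] = {"count": len(values), "p50": percentile(values, 50), "p95": percentile(values, 95)}
    # The slowest endpoints are the first bottleneck candidates.
    return dict(sorted(report.items(), key=lambda kv: kv[1]["p95"], reverse=True))

logs = [f"GET /search 200 {ms}ms" for ms in (40, 45, 50, 55, 60)]
logs += [f"POST /checkout 200 {ms}ms" for ms in (120, 130, 140, 150, 900)]
for path, stats in latency_report(logs).items():
    print(path, stats)
```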

Comparison of Log Analysis Tools

The following table provides a comparative overview of common log analysis tools, highlighting their features and functionalities.

| Tool | Features | Functionalities |
|---|---|---|
| Splunk | Powerful search language, extensive visualizations, alerting capabilities, machine learning | Log aggregation, analysis, correlation, threat detection, security monitoring |
| ELK Stack | Open-source, flexible, scalable, supports various data formats | Log aggregation, indexing, searching, visualization, dashboards |
| Graylog | Open-source, distributed architecture, real-time log processing | Log aggregation, analysis, visualization, alerting |
| Fluentd | Open-source data collector, supports various data sources, high-performance | Log aggregation, forwarding, parsing, filtering, real-time data processing |

Security Considerations

Centralized logging, while offering significant advantages for distributed systems, introduces new security considerations. Protecting the integrity and confidentiality of log data is paramount to maintaining system security and compliance with regulations. Vulnerabilities associated with centralized logging can have far-reaching consequences if not addressed proactively.

Implementing robust security measures throughout the logging pipeline is crucial. This includes protecting log data at rest and in transit, and ensuring only authorized personnel can access and utilize the collected information.

Comprehensive security policies and procedures are essential to mitigating risks and maintaining the trustworthiness of the entire system.

Security Vulnerabilities of Centralized Logging

Centralized logging systems, by their nature, collect data from various sources, increasing the attack surface. Compromised components within the distributed system can potentially inject malicious log entries, masking true system activity or misleading security analysts. Poorly configured logging systems, or systems lacking appropriate access controls, can expose sensitive information to unauthorized individuals. Unencrypted log data transmission creates another avenue for interception and data breaches.
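One mitigation for forged or altered entries is to have each source attach a message authentication code that the collector verifies. The sketch below uses HMAC-SHA256 from Python’s standard library and assumes a per-node secret key distributed out of band (the key table is hypothetical). Note its limit: it detects entries forged or modified by anyone who lacks the key, but not entries written by a node whose key has itself been compromised.

```python
import hashlib
import hmac
import json

# Assumption: each node holds its own secret key, shared only with the collector.
NODE_KEYS = {"node-1": b"example-secret-for-node-1"}

def sign(node: str, event: dict) -> dict:
    """Node side: wrap the event with an HMAC over its canonical JSON form."""
    payload = json.dumps(event, sort_keys=True).encode()
    tag = hmac.new(NODE_KEYS[node], payload, hashlib.sha256).hexdigest()
    return {"node": node, "event": event, "hmac": tag}

def verify(envelope: dict) -> bool:
    """Collector side: recompute the HMAC and compare in constant time."""
    key = NODE_KEYS.get(envelope["node"])
    if key is None:
        return False  # unknown sender
    payload = json.dumps(envelope["event"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["hmac"])

msg = sign("node-1", {"level": "INFO", "message": "user login", "user_id": "u-42"})
print(verify(msg))  # True
msg["event"]["user_id"] = "admin"  # tampered with in transit or in storage
print(verify(msg))  # False
```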

Security Best Practices for Log Data

Protecting log data at rest and in transit is critical. Employing strong encryption protocols, such as Transport Layer Security (TLS), for log transmission is essential. Data at rest should be encrypted using industry-standard algorithms and stored in secure environments with limited access. Regular security audits and penetration testing are vital to identify and address potential vulnerabilities.

Access Control Mechanisms for Log Data

Implementing granular access control is essential for preventing unauthorized access to log data. Role-based access control (RBAC) allows administrators to define specific permissions for different users and roles. This limits access to only the necessary log data for each user’s job function, minimizing the risk of sensitive information disclosure. Implementing multi-factor authentication (MFA) further strengthens access controls.
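In practice RBAC is configured in the logging platform’s own security settings, but the underlying rule is simple enough to sketch. The roles, service names, and list of sensitive fields below are hypothetical; the sketch restricts each role to the services it needs, strips sensitive fields for roles that should not see them, and denies unknown roles by default.

```python
# Hypothetical role definitions: which services' logs each role may read,
# and whether it may see fields marked as sensitive.
ROLES = {
    "sre": {"services": {"*"}, "sensitive": False},
    "payments": {"services": {"payments"}, "sensitive": True},
    "support": {"services": {"storefront"}, "sensitive": False},
}
SENSITIVE_FIELDS = {"card_last4", "email"}

def visible_logs(role: str, logs: list[dict]) -> list[dict]:
    """Return only the log entries (and fields) that `role` is allowed to see."""
    policy = ROLES.get(role)
    if policy is None:
        return []  # deny by default
    allowed = policy["services"]
    result = []
    for entry in logs:
        if "*" not in allowed and entry["service"] not in allowed:
            continue
        if not policy["sensitive"]:
            entry = {k: v for k, v in entry.items() if k not in SENSITIVE_FIELDS}
        result.append(entry)
    return result

logs = [
    {"service": "payments", "message": "charge ok", "card_last4": "4242"},
    {"service": "storefront", "message": "page view", "email": "a@example.com"},
]
print(visible_logs("support", logs))   # [{'service': 'storefront', 'message': 'page view'}]
print(visible_logs("payments", logs))  # [{'service': 'payments', 'message': 'charge ok', 'card_last4': '4242'}]
print(visible_logs("intern", logs))    # []
```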

Security Measures to Prevent Unauthorized Access

Preventing unauthorized access to log data requires a multi-layered approach.

- Encryption: Encrypting log data both in transit and at rest is fundamental. Use strong encryption algorithms and key management practices to protect data confidentiality. This mitigates risks associated with data breaches during transmission and while stored.

- Network Segmentation: Isolating the logging system from other critical infrastructure reduces the impact of a potential breach. This isolates potential attack vectors and minimizes the scope of potential damage.

- Regular Security Audits: Conduct periodic security assessments to identify and address vulnerabilities in the logging system. This includes penetration testing to evaluate the system’s resistance to attacks. These audits are vital to proactively identifying and addressing security gaps.

- Access Control Lists (ACLs): Define granular access control lists for different users and roles, granting access only to authorized individuals. This limits the scope of potential damage and ensures compliance with security policies.

- Monitoring and Alerting: Implement robust monitoring and alerting systems to detect suspicious activities or unauthorized access attempts. Proactive monitoring is key to identifying security incidents promptly and mitigating their impact.

- Data Loss Prevention (DLP): Employ DLP solutions to prevent sensitive information from being logged or transmitted. This proactive measure helps prevent the exposure of confidential data.
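Dedicated DLP products operate at a much broader scale, but the core idea of keeping sensitive values out of logs at the source can be sketched with a filter for Python’s standard `logging` module. The regular expressions below are deliberately simplistic assumptions; production detection needs far more thorough patterns (and, for card numbers, checks such as the Luhn algorithm).

```python
import logging
import re

# Illustrative patterns only; real DLP detection is much more thorough.
PATTERNS = [
    (re.compile(r"\b\d{13,16}\b"), "[REDACTED-CARD]"),
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "[REDACTED-EMAIL]"),
]

class RedactingFilter(logging.Filter):
    """Masks card-like numbers and email addresses before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()
        for pattern, replacement in PATTERNS:
            message = pattern.sub(replacement, message)
        record.msg, record.args = message, None  # freeze the redacted text
        return True  # keep the record, just masked

logger = logging.getLogger("payments")
handler = logging.StreamHandler()
handler.addFilter(RedactingFilter())
logger.addHandler(handler)
logger.warning("card %s declined for %s", "4111111111111111", "jane@example.com")
# stderr: card [REDACTED-CARD] declined for [REDACTED-EMAIL]
```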

Real-World Use Cases

Centralized logging offers significant advantages for distributed systems, enabling comprehensive monitoring, efficient troubleshooting, and robust security posture. Practical application of these principles in diverse environments demonstrates the value of this approach. Real-world examples showcase the effectiveness of centralized logging in managing complex, distributed architectures.

E-commerce Platform

E-commerce platforms often experience significant traffic fluctuations and require real-time insights into system performance. Centralized logging facilitates the monitoring of critical metrics like request latency, error rates, and database response times. This allows for quick identification and resolution of bottlenecks, ensuring a smooth user experience. Logging helps in understanding and optimizing various components, including web servers, payment gateways, and database systems.

For instance, by analyzing log data, platform administrators can detect spikes in database query times, indicating potential scaling issues, and proactively implement solutions.

Cloud-Based Streaming Service

Streaming services handle massive amounts of data and concurrent users, demanding robust monitoring and efficient troubleshooting. Centralized logging allows for detailed tracking of user interactions, system performance, and resource utilization across different servers. This enables rapid identification of issues, such as network latency or server overload, and helps ensure a consistent streaming experience. Logging can track issues like failed video transcoding or network congestion, enabling quick diagnosis and resolution and, in turn, improving user satisfaction.

For example, a sudden increase in error logs related to video buffering could signal a network problem requiring immediate intervention.

Financial Transaction Processing System

Financial institutions require extremely high levels of reliability and security in their transaction processing systems. Centralized logging provides a crucial record of every transaction, aiding in auditing and compliance. This detailed logging allows for tracing transactions, identifying suspicious activities, and facilitating investigations. Robust log data facilitates compliance with regulatory requirements, such as PCI DSS, and enables the rapid detection of fraudulent transactions.

Implementing centralized logging, coupled with advanced log analysis tools, enables early identification of potentially fraudulent transactions, preventing significant financial losses. For instance, unusual transaction patterns, such as multiple large transactions from a single IP address within a short timeframe, can trigger alerts and prompt investigation.
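The example rule above (several large transactions from one IP address within a short timeframe) maps naturally onto a sliding-window check over transaction logs. The thresholds below are hypothetical, and the input is assumed to be sorted by timestamp in seconds; real fraud detection combines many such signals.

```python
from collections import defaultdict, deque

# Hypothetical rule parameters: alert when one IP makes at least 3 transactions
# of 10,000 or more within any 5-minute window.
LARGE_AMOUNT = 10_000
WINDOW_SECONDS = 300
MAX_LARGE = 3

def detect(transactions):
    """Yield (ip, ts) whenever an IP reaches the threshold; input sorted by ts (seconds)."""
    recent = defaultdict(deque)  # ip -> timestamps of recent large transactions
    for tx in transactions:
        if tx["amount"] < LARGE_AMOUNT:
            continue
        window = recent[tx["ip"]]
        window.append(tx["ts"])
        while window[0] <= tx["ts"] - WINDOW_SECONDS:  # drop entries outside the window
            window.popleft()
        if len(window) >= MAX_LARGE:
            yield tx["ip"], tx["ts"]

txs = [
    {"ip": "203.0.113.7", "ts": 0, "amount": 12_000},
    {"ip": "198.51.100.2", "ts": 30, "amount": 50},
    {"ip": "203.0.113.7", "ts": 60, "amount": 15_000},
    {"ip": "203.0.113.7", "ts": 200, "amount": 11_000},
    {"ip": "203.0.113.7", "ts": 900, "amount": 20_000},
]
print(list(detect(txs)))  # [('203.0.113.7', 200)]
```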

Implementation Details

- E-commerce Platform: A centralized logging server, accessible to all components, was implemented. Each component logged critical events using a standardized format. Logstash was employed for collecting and processing the logs, while Elasticsearch and Kibana provided storage and visualization capabilities. The system utilized dashboards to monitor key performance indicators (KPIs) in real-time, allowing administrators to respond promptly to performance issues.

- Cloud-Based Streaming Service: A distributed logging infrastructure, capable of handling the high volume of logs, was established. A dedicated logging agent was deployed on each server to collect and forward logs to the central repository. The log data was stored in a distributed database for scalability and efficiency. Tools like Grafana were used for visualizing system performance metrics derived from the log data.

- Financial Transaction Processing System: A robust logging system, designed for high availability and security, was implemented. Each transaction was logged with detailed information, including timestamps, user IDs, transaction amounts, and system responses. Security measures were implemented to protect the log data. Log aggregation and analysis tools were used to monitor system health and detect anomalies.

Monitoring and Troubleshooting

Centralized logging enables comprehensive monitoring and troubleshooting across distributed systems. Real-time dashboards provide insights into system performance, allowing administrators to proactively identify and address issues. Log analysis tools facilitate the identification of patterns and trends in the log data, enabling deeper insights into system behavior. The detailed records facilitate quick and efficient troubleshooting of errors, leading to improved system reliability and performance.

This structured approach to logging significantly shortens problem resolution times and minimizes downtime, ultimately improving the overall user experience.

Tools and Technologies

Centralized logging relies heavily on specialized tools and technologies to effectively collect, process, and analyze log data from various distributed systems. These tools automate the often-complex tasks of log aggregation, parsing, and storage, enabling efficient troubleshooting and monitoring. Choosing the right toolset depends on factors such as the volume and variety of log data, the desired level of analysis, and the budget constraints.

Popular Logging Tools

A wide range of tools cater to centralized logging needs, each with unique strengths and weaknesses. Some of the most popular choices include Elasticsearch, Logstash, and Kibana (ELK stack), Fluentd, Graylog, Splunk, and various cloud-based logging services. These tools offer diverse capabilities, ranging from simple log aggregation to advanced analytics and visualization.

- ELK Stack (Elasticsearch, Logstash, Kibana): This popular open-source stack provides a comprehensive logging solution. Elasticsearch acts as the data store, Logstash handles log ingestion and transformation, and Kibana provides visualization and querying. The stack is scalable and highly customizable, which makes it suitable for a wide range of distributed systems and supports complex queries and visualizations of log data. Elasticsearch also exposes a REST API, which makes it easy to integrate with other applications.

- Fluentd: A powerful data collector and forwarder, Fluentd is known for its efficiency and ability to collect data from various sources, including applications, servers, and devices. It offers a wide range of input plugins to support diverse log formats and protocols. Fluentd’s flexible configuration allows it to be tailored to specific logging needs. It can also handle high volumes of data and can be easily integrated into existing infrastructure.

- Graylog: Graylog is a centralized logging platform that provides a user-friendly interface for managing and analyzing logs. It features advanced search capabilities and visualization tools, enabling users to quickly identify critical events and trends. Graylog is particularly useful for security teams needing to monitor system activity for potential threats. It also supports various log formats and can ingest data from different sources.

- Splunk: A widely used enterprise-grade platform for log management and analysis. Splunk excels at indexing and searching vast quantities of log data, providing powerful tools for identifying patterns and anomalies. It offers sophisticated dashboards and reporting capabilities for visualizing trends and insights. Splunk’s strength lies in its ability to correlate events from various sources and provide comprehensive analyses.

- Cloud-based Logging Services (e.g., AWS CloudWatch Logs, Azure Monitor Logs, Google Cloud Logging): Cloud providers offer logging services that integrate closely with the rest of their platforms. These solutions simplify the setup and management of centralized logging and rely on the provider's infrastructure for scalability and reliability. They are often cost-effective for organizations that already run on that cloud platform, and they typically include detailed monitoring capabilities.
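To make the point about the ELK stack's complex queries and REST API more concrete, here is a minimal sketch that builds a search request body in Elasticsearch's query DSL. The index pattern `logs-*` and the field names `level`, `service`, and `@timestamp` are assumptions about how your logs are mapped, not something the stack defines for you.

```python
import json


def build_error_query(service: str, minutes: int = 15) -> dict:
    """Build a query DSL body for recent ERROR events from one service.

    Assumes (hypothetically) that documents carry `level` and `service`
    keyword fields and a `@timestamp` date field.
    """
    return {
        "query": {
            "bool": {
                # Filter context: exact matches, no relevance scoring needed.
                "filter": [
                    {"term": {"level": "ERROR"}},
                    {"term": {"service": service}},
                    # Date math evaluated relative to the cluster's current time.
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
        # Newest events first, capped at 50 hits.
        "sort": [{"@timestamp": {"order": "desc"}}],
        "size": 50,
    }


if __name__ == "__main__":
    # The body would be sent as: POST /logs-*/_search
    print(json.dumps(build_error_query("checkout"), indent=2))
```

The same JSON body can be pasted into Kibana's Dev Tools console or sent with any HTTP client.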

Integration with Distributed Systems

The integration process varies based on the chosen tool and the distributed system. Common approaches include using agent-based systems, which install agents on individual systems to collect logs, and using configuration files to specify log forwarding. The integration often involves setting up log shippers or collectors to collect data from different sources.

- Agent-based systems are often used for collecting logs from servers or applications. The agent monitors the target system for log files and transmits them to the central logging system.

- Configuration files define the log sources and destinations for the centralized logging system. These configurations specify how log data should be collected, processed, and stored.

- Log shippers or collectors are dedicated programs that receive logs from various sources and forward them to the centralized logging system. They typically support diverse protocols and formats.
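The core loop of an agent-based collector can be sketched as "remember how far you have read, then pick up only what was appended." The function below is a simplified illustration, not how any particular agent is implemented; real agents also persist the offset across restarts and handle rotation more carefully.

```python
import os


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines appended to `path` since byte `offset`, plus the new offset."""
    size = os.path.getsize(path)
    if size < offset:
        # File shrank (truncated or replaced by rotation): start again from the top.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only complete lines; a partial trailing line waits for the next poll.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

An agent would call `read_new_lines` on a timer, forward the returned lines to the collector, and store the new offset only after the forward succeeds, so that a crash leads to re-sending rather than losing lines.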

Comparison of Tools

The table below provides a comparative overview of some popular centralized logging tools.

| Tool | Ease of Use | Features | Pricing |
|---|---|---|---|
| ELK Stack | Medium | High scalability, flexibility, powerful search and visualization | Open-source (Elasticsearch, Logstash, Kibana); commercial support available |
| Fluentd | Medium | High performance, versatile, supports various data sources | Open-source |
| Graylog | High | User-friendly interface, advanced search, visualization | Free edition (Graylog Open) plus commercial editions |
| Splunk | Medium-High | Comprehensive analysis, powerful correlation, enterprise-grade features | Commercial |
| Cloud-based Logging Services | High | Seamless integration with cloud services, scalability, often managed | Usually pay-as-you-go or subscription-based |

Troubleshooting Common Issues

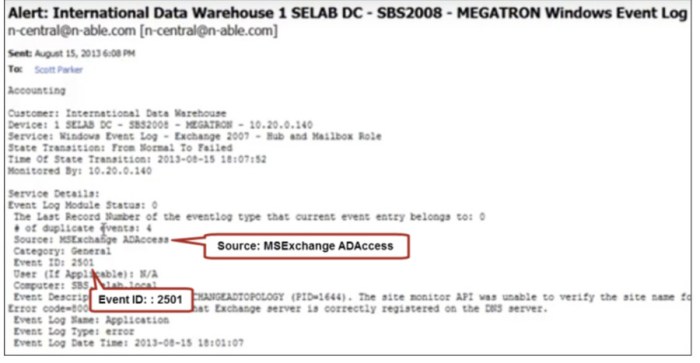

Troubleshooting centralized logging setups requires a systematic approach. Common issues often stem from configuration errors, network connectivity problems, or data volume overload. This section details common pitfalls and strategies to address them, with practical examples and solutions. Effective troubleshooting begins with a clear understanding of how the system is expected to behave.

This typically involves checking logs for errors, analyzing system metrics, and verifying configuration settings. Careful examination of error messages, combined with an understanding of the architecture, is crucial for pinpointing the root cause of an issue.

Configuration Errors

Configuration errors are a frequent source of problems in centralized logging. These errors can arise from incorrect log format specifications, misconfigured routing rules, or improper settings in the logging agents. Careful review of the configuration files is essential.

- Incorrect Log Format: If the log format is not compatible with the log processing tools, the data will not be parsed correctly. This can show up as errors in the log processing pipeline or as inaccurate analysis results. Example: An incorrectly specified timestamp format. Solution: Review the log format specifications and make sure they align with the log processing tools. Validate sample log lines against the expected structure, checking data types and required fields.

- Misconfigured Routing Rules: If the logging agents are not configured to forward logs to the central logging server, logs will not be collected. Causes include incorrect IP addresses, port numbers, or firewall rules. Example: An agent configured to send logs to the wrong server address. Solution: Double-check the routing rules in the configuration files, verify network connectivity between the agents and the central logging server, and use a network tool such as traceroute to trace the path the logs take.

- Agent Configuration Issues: Problems with the logging agents themselves, such as agent failures, incorrect installation, or missing dependencies, can also stop log collection. Example: A logging agent fails to connect to the central logging server. Solution: Check the agent's own logs for error messages, verify its installation and dependencies, confirm that it is running correctly, and restart it if needed.
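Format problems like the ones above are easiest to catch by checking sample lines before they reach the pipeline. The sketch below assumes logs are emitted as one JSON object per line with a hypothetical schema (`timestamp`, `level`, `service`, `message`) and ISO 8601 timestamps; adjust both to whatever your processing tools actually expect.

```python
import json
from datetime import datetime

# Hypothetical schema: required field -> expected type after JSON parsing.
REQUIRED_FIELDS = {"timestamp": str, "level": str, "service": str, "message": str}
ALLOWED_LEVELS = {"DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"}


def validate_line(line: str) -> list:
    """Return a list of problems found in one JSON log line; an empty list means valid."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"wrong type for {field}: expected {expected.__name__}")

    ts = record.get("timestamp")
    if isinstance(ts, str):
        try:
            # ISO 8601, e.g. 2024-05-01T12:00:00+00:00 (a trailing "Z" needs Python 3.11+).
            datetime.fromisoformat(ts)
        except ValueError:
            problems.append(f"timestamp not ISO 8601: {ts!r}")

    level = record.get("level")
    if isinstance(level, str) and level not in ALLOWED_LEVELS:
        problems.append(f"unknown level: {level!r}")
    return problems
```

Running this over a few hundred lines from each source usually surfaces mismatched timestamp formats and missing fields quickly.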

Network Connectivity Problems

Network connectivity issues can hinder the collection of logs. Problems with firewalls, network segmentation, or server outages can prevent logs from reaching the central logging server. Troubleshooting involves verifying network connectivity and addressing any firewall restrictions.

- Firewall Restrictions: Firewall rules can block communication between the logging agents and the central logging server, so firewall configurations must explicitly allow log traffic. Example: Firewall rules blocking UDP port 514. Solution: Review the firewall rules and allow traffic on the appropriate ports (e.g., UDP 514). Ensure the central logging server's IP address is permitted.

- Network Segmentation: If the agents and the server sit in different network segments, logs may not reach the central logging server. Example: Logging agents in a different VLAN than the central logging server. Solution: Verify network connectivity between the logging agents and the central logging server, and check for any segmentation or routing rules that block the flow of log data.

- Server Outages: If the central logging server is unavailable, logs will not be received. Example: Central logging server experiencing downtime. Solution: Monitor the central logging server’s health and availability. Implement failover mechanisms for the logging server to ensure continuous log collection.
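A quick reachability check run from an agent host helps separate firewall or segmentation problems from agent misconfiguration. This sketch only tests TCP: UDP syslog (such as UDP 514) is connectionless, so a UDP send that appears to succeed proves nothing, and UDP paths are better checked on the receiving side. The host name in the usage note is hypothetical.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, unreachable networks, and DNS failures.
        return False
```

For example, `can_reach("logs.example.internal", 514)` returning `False` from one VLAN but `True` from another points at segmentation or firewall rules rather than at the agent.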

Data Volume Overload

High volumes of log data can overwhelm the central logging system. This can lead to slow response times, dropped logs, and performance issues. Appropriate storage and processing solutions are crucial to address this.

- Storage Capacity Issues: If the storage capacity of the central logging server is insufficient, it can lead to log data loss. Example: Logs exceeding the storage capacity. Solution: Implement a log rotation policy to manage log data effectively. Utilize a distributed storage solution to handle large volumes of log data.

- Processing Bottlenecks: If the log processing pipeline is not optimized, it can result in delays in analyzing log data. Example: Processing pipeline not designed to handle high data volumes. Solution: Use optimized log processing tools. Implement a distributed processing architecture to handle high data throughput.
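On the nodes where logs are written, size-based rotation keeps local disk use bounded. The sketch below uses Python's standard `RotatingFileHandler`; the logger name, path, and limits are illustrative assumptions. Central stores need their own retention mechanism (for example, Elasticsearch's index lifecycle management), which this does not cover.

```python
import logging
from logging.handlers import RotatingFileHandler


def make_rotating_logger(name: str, path: str, max_bytes: int = 10_000_000,
                         backups: int = 5) -> logging.Logger:
    """Return a logger whose file rolls over at `max_bytes`, keeping `backups` old files.

    Disk use stays at roughly max_bytes * (backups + 1); the oldest file is deleted.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep records out of the root logger's handlers
    if not logger.handlers:  # avoid stacking duplicate handlers on repeated calls
        handler = RotatingFileHandler(path, maxBytes=max_bytes,
                                      backupCount=backups, encoding="utf-8")
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s"))
        logger.addHandler(handler)
    return logger
```

Rotated files (`app.log.1`, `app.log.2`, ...) should be shipped before they age out; most agents follow a rotated file by name pattern.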

Common Errors and Solutions

| Error | Solution |
|---|---|
| Agent not connecting to central server | Verify network connectivity, firewall rules, and agent configuration. |
| Log format not recognized | Correct the log format in the agent or log source to match the expected format. |
| Logs not being written to the central server | Check agent configuration, log source settings, and network connectivity. |
| High latency in log processing | Optimize log processing pipelines, upgrade processing tools, or distribute processing tasks. |

Closure

In conclusion, establishing centralized logging for distributed systems is a multifaceted process demanding careful consideration of data formats, collection methods, storage solutions, and security protocols. This guide provides a structured approach, equipping readers with the knowledge and tools to build and maintain a robust logging infrastructure. By adhering to best practices, organizations can effectively monitor, analyze, and troubleshoot their distributed systems, ultimately driving performance optimization and ensuring system reliability.

Top FAQs

What are the common pitfalls to avoid when choosing a log data format?

Choosing the wrong log data format can lead to difficulties in analysis and hinder troubleshooting. Consider factors like data volume, the complexity of queries needed, and the tools available for processing and visualizing the logs. Overly complex formats might be difficult to parse, while simple formats might lack the necessary detail for in-depth analysis.
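One common middle ground between overly complex and overly sparse formats is one JSON object per line with a small, fixed set of fields. The sketch below is a minimal `logging.Formatter` subclass that produces such lines; the field names are illustrative assumptions, not a standard.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            # ISO 8601 in UTC avoids ambiguous local-time timestamps.
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),  # applies %-style args to the message
        }
        if record.exc_info:
            # Keep tracebacks inside the JSON object instead of spilling onto new lines.
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)
```

Attach it with `handler.setFormatter(JsonFormatter())` on any existing handler.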

How do I ensure the security of my centralized log data during transit and at rest?

Implementing robust security measures is paramount. Encryption during transit is crucial. Strong access controls, including user roles and permissions, are essential for protecting log data at rest. Regular security audits and penetration testing should be part of the ongoing maintenance strategy.
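For encryption in transit, a shipper that opens its own connections should verify the server's certificate and refuse outdated protocol versions. This sketch uses Python's `ssl` module; the newline-delimited framing in `send_lines` is an assumption, since receivers differ (syslog over TLS, for instance, uses its own framing), and the host and port are whatever your collector exposes.

```python
import socket
import ssl
from typing import List, Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """TLS client settings: verify the server certificate and require TLS 1.2 or newer."""
    # create_default_context turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def send_lines(host: str, port: int, lines: List[str], ctx: ssl.SSLContext) -> None:
    """Send newline-delimited log lines over TLS (framing is an assumption; match your receiver)."""
    with socket.create_connection((host, port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            tls.sendall("".join(line + "\n" for line in lines).encode("utf-8"))
```

Pass `ca_file` when the collector uses a certificate from an internal CA that is not in the system trust store.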

What are some common challenges in integrating a log collector with various distributed systems?

Integrating log collectors with different distributed systems can present challenges due to varying log formats and data structures. Understanding the specific log formats and protocols used by each system is critical. Proper configuration and testing are essential to ensure smooth integration and data capture.
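Varying formats are often handled by normalizing every source onto one common schema at the collector. The sketch below accepts either JSON lines or logfmt-style `key=value` lines and maps them onto a hypothetical three-field schema; the alternate field names it tolerates (`severity`, `msg`) are examples, not an exhaustive mapping.

```python
import json
import shlex


def normalize(line: str) -> dict:
    """Map a JSON or logfmt-style log line onto a hypothetical common schema."""
    line = line.strip()
    if line.startswith("{"):
        raw = json.loads(line)
    else:
        # logfmt-style: key=value pairs; values containing spaces are double-quoted.
        # shlex.split strips the quotes and raises ValueError on unbalanced ones.
        raw = dict(token.split("=", 1) for token in shlex.split(line) if "=" in token)
    return {
        "level": str(raw.get("level", raw.get("severity", "INFO"))).upper(),
        "service": raw.get("service", "unknown"),
        "message": raw.get("message", raw.get("msg", "")),
    }
```

Testing each source against a function like this during integration shows early which systems need extra mapping rules.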

How do I effectively manage the volume of log data generated by a large distributed system?

High volumes of log data necessitate careful data management strategies. Implementing log rotation policies, using efficient storage solutions, and employing filtering and aggregation techniques are essential for managing data volume without impacting performance.
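Filtering and aggregation can be combined: keep high-severity events in full and replace low-severity ones with counts. The sketch below assumes records are dicts with `service` and `level` keys, and the choice of which levels to keep is a hypothetical policy.

```python
from collections import Counter

# Hypothetical policy: forward WARNING and above in full, only count the rest.
KEEP_LEVELS = {"WARNING", "ERROR", "CRITICAL"}


def reduce_volume(records):
    """Split records into (kept full records, Counter of dropped ones by (service, level))."""
    kept = []
    counts = Counter()
    for r in records:
        if r["level"] in KEEP_LEVELS:
            kept.append(r)
        else:
            # Aggregate instead of storing every low-severity event.
            counts[(r["service"], r["level"])] += 1
    return kept, counts
```

The counts can be emitted periodically as a single summary event per service, preserving trend information at a fraction of the storage cost.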