Understanding how to use CloudWatch Logs for Lambda functions is crucial for effective monitoring, troubleshooting, and optimization of serverless applications. CloudWatch Logs serves as a central repository for the output generated by Lambda functions, capturing valuable information about their execution, including errors, performance metrics, and custom application logs. This detailed analysis delves into the core functionalities of CloudWatch Logs, offering a step-by-step approach to effectively leverage its capabilities for building robust and scalable serverless architectures.

The utilization of CloudWatch Logs transcends mere log collection; it provides the tools for in-depth analysis, enabling developers to pinpoint issues, identify performance bottlenecks, and gain valuable insights into application behavior. From setting up logging configurations to implementing advanced log analysis techniques, this exploration equips readers with the knowledge and skills necessary to fully harness the power of CloudWatch Logs within the context of Lambda functions.

Introduction to CloudWatch Logs for Lambda Functions

CloudWatch Logs serves as a centralized logging service within the AWS ecosystem, providing a robust mechanism for collecting, storing, and monitoring log data generated by various AWS services, including Lambda functions. This is crucial for operational efficiency, allowing for proactive identification and resolution of issues.

Core Purpose of CloudWatch Logs in the Context of Lambda Functions

CloudWatch Logs’ primary function is to capture and store the output from Lambda functions, which includes application logs, error messages, and any custom logging statements implemented within the function code. This centralized repository enables comprehensive monitoring and analysis of function behavior. The logs are essential for debugging, performance analysis, and auditing of Lambda function executions. They provide a historical record of function invocations, allowing for the identification of trends and anomalies over time.

This capability is critical for maintaining the reliability and scalability of serverless applications.

How CloudWatch Logs Captures Logs Generated by Lambda Functions

Lambda functions automatically send their standard output (stdout) and standard error (stderr) streams to CloudWatch Logs. When a Lambda function is invoked, it writes its logs to a log stream within a log group. By default, the log group is named after the Lambda function. Each log stream corresponds to one execution environment (a running instance of the function), and its name combines the date, the function version, and a unique identifier. Log entries carry a timestamp and, for lines written by the runtime and its standard logging helpers, the request ID of the invocation, which makes it possible to correlate log events with specific function invocations.

This structured approach allows for efficient searching, filtering, and analysis of logs.
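As a minimal sketch of the capture path described above, the following Python handler writes to stdout with `print` and through the standard `logging` module. The handler name and the message text are illustrative assumptions; `context.aws_request_id` is the request ID attribute that Lambda exposes on the context object.

```python
import logging

# In Lambda the root logger already has a handler attached by the runtime;
# here we only raise the level so INFO messages are emitted.
logger = logging.getLogger()
logger.setLevel(logging.INFO)


def handler(event, context):
    # print() writes to stdout, which Lambda forwards to the log stream.
    print("received event keys:", sorted(event))
    # Messages sent through the logging module end up in the same stream.
    logger.info("processing request %s", context.aws_request_id)
    return {"ok": True}
```

Run locally, the `print` output goes to the terminal; deployed, the same lines show up in the function’s log group without any extra configuration beyond the IAM permissions.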

Benefits of Using CloudWatch Logs for Monitoring and Troubleshooting Lambda Functions

Using CloudWatch Logs offers several key advantages for monitoring and troubleshooting Lambda functions.

- Real-time Monitoring: CloudWatch Logs provides near real-time access to logs, allowing developers to monitor function behavior as it occurs. This enables immediate identification of errors or performance issues.

- Error Detection and Debugging: By examining logs, developers can quickly pinpoint the root cause of errors, whether they originate from the function code, external dependencies, or infrastructure components. The detailed log entries provide valuable context for debugging.

- Performance Analysis: Logs can be used to analyze function performance, identifying bottlenecks and areas for optimization. The logs contain information on execution times, memory usage, and other performance metrics.

- Auditing and Compliance: CloudWatch Logs provides a historical record of function invocations, which is essential for auditing and compliance purposes. This record includes each invocation’s request ID, execution duration, and memory usage; the function’s input and output appear only if the function code logs them explicitly.

- Log Filtering and Search: CloudWatch Logs offers powerful filtering and search capabilities, enabling developers to quickly find specific log entries based on keywords, patterns, or time ranges. This significantly accelerates troubleshooting efforts.

- Integration with Other AWS Services: CloudWatch Logs integrates seamlessly with other AWS services, such as CloudWatch Metrics and CloudWatch Alarms. This allows developers to create custom dashboards, set up alerts, and automate responses to specific log events. For example, an alarm can be triggered if a certain error message appears in the logs, and that alarm can trigger an automated response such as sending a notification or invoking another Lambda function to remediate the issue.
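To make the performance-analysis point concrete: Lambda writes a `REPORT` line at the end of each invocation with the duration and memory figures. The sketch below parses such a line with a regular expression. The sample values are made up for illustration, and the pattern assumes the field order shown; treat it as a starting point rather than a complete parser.

```python
import re

# Fields are separated by whitespace (tabs in practice), so \s+ is used.
REPORT_RE = re.compile(
    r"REPORT RequestId: (?P<request_id>\S+)\s+"
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>[\d.]+) ms\s+"
    r"Memory Size: (?P<memory_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_memory_mb>\d+) MB"
)


def parse_report(line):
    """Return the metrics from a REPORT line as a dict, or None if no match."""
    match = REPORT_RE.search(line)
    if match is None:
        return None
    return {
        "request_id": match["request_id"],
        "duration_ms": float(match["duration_ms"]),
        "billed_ms": float(match["billed_ms"]),
        "memory_mb": int(match["memory_mb"]),
        "max_memory_mb": int(match["max_memory_mb"]),
    }


# Illustrative line, not taken from a real function.
sample = (
    "REPORT RequestId: a1b2c3d4-e5f6-7890-1234-567890abcdef\t"
    "Duration: 102.25 ms\tBilled Duration: 103 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 58 MB"
)
print(parse_report(sample))
```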

Setting Up CloudWatch Logs for a Lambda Function

Configuring CloudWatch Logs for a Lambda function is a crucial step in monitoring, debugging, and auditing function execution. Proper setup allows for the collection, storage, and analysis of logs, providing valuable insights into function behavior and performance. This section details the initial setup steps, necessary IAM permissions, and configuration of logging settings.

Enabling Logging for a New Lambda Function: Initial Steps

Enabling logging for a new Lambda function involves several initial steps to ensure that function execution data is captured and accessible. These steps establish the foundation for effective log management.

- Function Creation: The first step is the creation of a new Lambda function through the AWS Management Console, AWS CLI, or an Infrastructure as Code (IaC) tool like AWS CloudFormation or Terraform. The function’s code, runtime, and other initial configurations are defined during this process.

- IAM Role Assignment: Each Lambda function must be associated with an IAM role that defines its permissions. This role determines what AWS resources the function can access, including CloudWatch Logs. During function creation, either an existing role can be selected, or a new role can be created. It is important to create a role with appropriate permissions.

- Code Implementation (Logging Statements): Within the Lambda function’s code, logging statements need to be implemented. These statements, typically using a logging library or the language’s built-in logging capabilities (e.g., `console.log` in Node.js or `logging.info` in Python), write messages to the standard output stream. The Lambda service automatically captures this output and forwards it to CloudWatch Logs.

- Deployment: After writing the function code and logging statements, the function needs to be deployed. This involves uploading the code package (e.g., a ZIP file or container image) to AWS and configuring the function’s settings, such as the handler, memory allocation, and timeout. Deployment activates the function, making it ready to execute.

- Testing: After deployment, the function should be tested by invoking it with sample input. This step verifies that the function executes correctly and generates logs. The logs can be checked in CloudWatch Logs to ensure that they are being captured and that the logging statements are functioning as intended.
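The testing step can be sketched in Python with boto3’s Lambda client. Passing `LogType="Tail"` to a synchronous `invoke` call returns the tail of the execution log, base64-encoded, in the `LogResult` field of the response. The function name `invoke_and_tail` is a hypothetical helper, and the client is passed in as an argument so the sketch makes no assumption about how credentials or regions are configured.

```python
import base64
import json


def invoke_and_tail(lambda_client, function_name, payload):
    """Invoke a function synchronously and return the tail of its log output."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        LogType="Tail",  # ask Lambda to include the last part of the log
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # LogResult is base64-encoded text.
    return base64.b64decode(response["LogResult"]).decode("utf-8")


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   print(invoke_and_tail(boto3.client("lambda"), "my-lambda-function", {"ping": 1}))
```

If the returned text contains the expected logging statements, the same lines should also be visible in the function’s log group shortly afterwards.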

IAM Permissions Required for Lambda Function Logging

The IAM role assigned to a Lambda function must have specific permissions to write logs to CloudWatch. These permissions allow the function to create log groups and streams, and to write log events.

The essential permissions are typically granted through an IAM policy that includes the following actions:

- `logs:CreateLogGroup`: This permission allows the Lambda function to create a new log group in CloudWatch Logs. Log groups organize log streams, providing a logical structure for log data.

- `logs:CreateLogStream`: This permission enables the function to create a log stream within a log group. Each log stream holds the log events from one execution environment of the function, which may serve several invocations.

- `logs:PutLogEvents`: This permission is crucial, as it allows the function to write log events (the actual log messages) to a log stream. This is the core permission for sending log data to CloudWatch Logs.

- `logs:DescribeLogGroups`: This permission is sometimes included to allow the function to describe existing log groups, for example to retrieve their names and retention policies. It is not required for writing logs.

A typical IAM policy granting these permissions might look like this (in JSON format):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "logs:DescribeLogGroups"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    }
  ]
}
```

In this example, the `Resource` is set to `arn:aws:logs:*:*:*`, which grants the permissions on every CloudWatch Logs resource, in any region, that the role can reach. For enhanced security, restrict the permissions to the specific log group the function writes to.
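One way to narrow the policy is to generate it per function. The sketch below builds a policy document limited to a single function’s default log group. The helper name `logging_policy` and the sample region, account ID, and function name are placeholders, and the sketch assumes the function uses the default `/aws/lambda/` log group naming.

```python
import json


def logging_policy(region, account_id, function_name):
    """Build a least-privilege logging policy for one function's log group."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:"
        f"log-group:/aws/lambda/{function_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Creating the group is scoped to this account and region.
                "Effect": "Allow",
                "Action": "logs:CreateLogGroup",
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
            {
                # Streams and events are scoped to the function's log group.
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{log_group_arn}:*",
            },
        ],
    }


# Placeholder values for illustration only.
print(json.dumps(logging_policy("us-east-1", "123456789012", "my-lambda-function"), indent=2))
```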

Configuring Lambda Function Logging Settings

Configuring the Lambda function’s logging settings involves specifying the log group and stream names, which dictate how logs are organized and stored in CloudWatch. While much of this configuration is handled implicitly by the Lambda service, understanding these settings is important for log management.

The key logging settings include:

- Log Group Name: The log group is a logical container for log streams. By default, Lambda automatically creates a log group for each function, named after the function itself: `/aws/lambda/<function-name>`. This default naming convention is convenient for simple deployments. However, users can specify a custom log group in the function’s logging configuration, either during function creation or through a later update. This allows for better organization, such as grouping logs by environment (e.g., `dev`, `prod`) or application component.

- Log Stream Name: A log stream holds the logs from one execution environment, that is, one running instance of the function, which may serve many invocations in sequence. Lambda creates log streams automatically. The log stream name includes the date, the function version, and a unique identifier, which makes it easy to see which version produced a given set of logs. For instance, a log stream name might be `2023/10/27/[$LATEST]xxxxxxxxxxxxxxxxxxxx`. The `[$LATEST]` part signifies the unpublished latest version of the function, and the trailing string of characters is a unique identifier for the execution environment, not a timestamp.

- Retention Policy: While not a direct configuration setting within the Lambda function itself, the retention policy of the log group is crucial. The retention policy determines how long logs are stored in CloudWatch Logs before being automatically deleted. By default, a log group keeps its logs indefinitely. It’s essential to configure the retention policy to meet compliance requirements and storage needs.

Options range from 1 day to indefinitely. This setting is managed within the CloudWatch Logs console.

- Log Format: Lambda functions typically output logs as plain text, which is automatically captured by CloudWatch Logs. However, users can control the format of the log messages within their function code. For instance, structured logging using JSON can enhance readability and facilitate analysis. Using a structured logging approach is highly recommended.

To modify the default logging settings, such as the log group name or retention policy, you typically need to use the AWS Management Console, the AWS CLI, or an Infrastructure as Code (IaC) tool. The Lambda function code itself does not directly control these settings.
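The default log stream naming can be taken apart programmatically, which is handy when correlating streams with deployments. The sketch below assumes the default `YYYY/MM/DD/[version]identifier` layout used in the function’s default log group; `parse_stream_name` is a hypothetical helper, and streams in custom log groups may not match this pattern.

```python
import re

# date / [version] / unique execution-environment identifier
STREAM_RE = re.compile(r"^(\d{4})/(\d{2})/(\d{2})/\[([^\]]+)\](\w+)$")


def parse_stream_name(name):
    """Split a default Lambda log stream name into its parts, or return None."""
    match = STREAM_RE.match(name)
    if match is None:
        return None
    year, month, day, version, environment_id = match.groups()
    return {
        "date": f"{year}-{month}-{day}",
        "version": version,
        "environment_id": environment_id,
    }


# The identifier below is an illustrative placeholder.
print(parse_stream_name("2023/10/27/[$LATEST]0123456789abcdef0123456789abcdef"))
```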

Log Format and Structure

Understanding the structure of logs generated by Lambda functions and how to customize them is crucial for effective monitoring, debugging, and analysis. CloudWatch Logs provides a default format that is readily available, but tailoring this format allows for the inclusion of vital context, improving the usability of the logs for specific operational needs. Customization facilitates easier parsing and more meaningful insights from the data.

Default Lambda Log Format

The default log format generated by Lambda functions and processed by CloudWatch provides a baseline for understanding function behavior. This format includes key elements that are essential for basic troubleshooting. The default format consists of:

- Timestamp: The time the log event occurred, in UTC.

- Log Level: Indicates the severity of the log event (e.g., INFO, WARN, ERROR).

- Request ID: A unique identifier for the invocation of the Lambda function.

- Function Name: The name of the Lambda function, which is conveyed by the log group name rather than repeated in every log line.

- Log Message: The actual message logged by the function.

The standard structure, when viewing logs in CloudWatch, typically presents each log entry on a new line, prefixed with the timestamp and log level. The message content follows. This structure is designed for human readability and provides basic information for identifying and diagnosing issues. The information displayed is crucial for identifying the origin of errors, the time of their occurrence, and the specific function instance involved.

Customizing Log Format

Customizing the log format involves modifying the output from the Lambda function to include specific information. This is achieved by formatting the log messages within the function’s code to include relevant data. This allows for the inclusion of custom fields, which significantly improves the ability to filter, search, and analyze the logs. Customization can be achieved by:

- Using structured logging: Instead of simple string messages, format log messages as JSON objects. This allows for easy parsing and querying.

- Adding custom fields: Include fields like request IDs, user IDs, or any other relevant data within the JSON object.

- Using log levels: Use appropriate log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize log events.

Using JSON format is particularly advantageous because CloudWatch Logs Insights automatically discovers the fields in JSON log events, enabling structured search and filtering without custom parsing. The ability to search and filter on custom fields greatly enhances the efficiency of debugging and monitoring.

Example of a Well-Structured JSON Log Entry

A well-structured JSON log entry is essential for effective analysis. The following example demonstrates a JSON log entry that includes a timestamp, log level, request ID, function name, and custom data. This example is designed to be easily parsed and analyzed by tools like CloudWatch Logs Insights.

Here’s an example:

```json
{
  "timestamp": "2024-01-20T10:00:00.123Z",
  "level": "INFO",
  "requestId": "a1b2c3d4-e5f6-7890-1234-567890abcdef",
  "functionName": "my-lambda-function",
  "message": "User authentication successful",
  "userId": "user123",
  "ipAddress": "192.168.1.1"
}
```

In this example:

- The `timestamp` field records the time of the event in ISO 8601 format.

- The `level` field indicates the severity of the log event.

- The `requestId` field is the unique identifier for the Lambda function invocation.

- The `functionName` identifies the Lambda function.

- The `message` field contains a descriptive log message.

- The `userId` and `ipAddress` are custom fields providing context.

This structured format allows for precise filtering and analysis within CloudWatch. For example, you could easily search for all log entries with `userId` equal to `user123` or filter for all `ERROR` level logs originating from a specific `ipAddress`. This level of detail drastically improves the speed and efficiency of identifying and resolving issues. By including relevant data, such as user IDs and IP addresses, logs become significantly more valuable for troubleshooting and security investigations.
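Entries like the one above can be produced with a small formatter for Python’s standard `logging` module. This is a sketch under a few assumptions: the class name `JsonFormatter` and the `context` key used to carry custom fields are choices made for this example, and the timestamp is emitted with a `+00:00` offset rather than a trailing `Z`.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        entry = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(timespec="milliseconds"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Custom fields arrive via logger.info(..., extra={"context": {...}}).
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


def configure(logger):
    """Replace the logger's handlers with one that emits JSON to stderr."""
    stream_handler = logging.StreamHandler()
    stream_handler.setFormatter(JsonFormatter())
    logger.handlers = [stream_handler]
    logger.setLevel(logging.INFO)
```

A call such as `logger.info("User authentication successful", extra={"context": {"userId": "user123"}})` then yields one JSON object per line, with `userId` available as a top-level field.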

Filtering and Searching Logs

Effectively searching and filtering logs is crucial for extracting actionable insights from Lambda function executions. CloudWatch Logs provides a suite of tools designed to help users pinpoint specific events, identify anomalies, and troubleshoot issues efficiently. These tools range from simple filtering capabilities to sophisticated query languages, allowing for varying levels of analysis depending on the user’s needs.

Methods for Filtering Logs in CloudWatch Logs

CloudWatch Logs offers several methods for filtering log data, catering to different levels of complexity and analytical requirements. These methods enable users to narrow down the scope of their analysis, focusing on relevant information while discarding irrelevant data.

- Log Group Filtering: This involves filtering at the log group level. Users can view only the log streams within a specific log group. This is a fundamental filtering technique that provides a broad scope.

- Log Stream Filtering: Within a log group, users can filter by specific log streams. Each Lambda function instance creates its own log stream, so filtering by stream can be useful for examining the logs of a particular function execution or a subset of executions.

- Real-time Metrics and Filters: CloudWatch Logs can be configured to create metrics and alarms based on specific log patterns. This real-time filtering allows for immediate detection of critical events, such as errors or performance degradation. This feature allows for proactive monitoring and alerting.

- Metric Filters: Users can define metric filters that extract numerical values from log events. These filters can then be used to create CloudWatch metrics, which can be visualized in dashboards and used for creating alarms. This enables quantitative analysis of log data.

- Subscription Filters: Subscription filters allow for real-time processing of log data by sending it to other AWS services, such as Kinesis Data Streams, Kinesis Data Firehose, or Lambda functions. This enables custom processing and analysis of log data, such as data transformation or integration with other systems.
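When a subscription filter delivers log data to a Lambda function, the events arrive base64-encoded and gzip-compressed under `event["awslogs"]["data"]`. The sketch below unpacks that payload; the function name `decode_subscription_event` is an assumption of this example, and it returns only the message strings, leaving any further processing to the caller.

```python
import base64
import gzip
import json


def decode_subscription_event(event):
    """Return the log messages carried by a CloudWatch Logs subscription event."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    payload = json.loads(gzip.decompress(compressed))
    # The payload also names the source: payload["logGroup"], payload["logStream"].
    return [log_event["message"] for log_event in payload["logEvents"]]


def handler(event, context):
    for message in decode_subscription_event(event):
        print(message)
```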

Using CloudWatch Logs Insights for Advanced Log Searching and Analysis

CloudWatch Logs Insights provides a powerful query language for searching, analyzing, and visualizing log data. It enables users to extract specific information from log events, perform aggregations, and identify patterns that might not be apparent through basic filtering. This tool is invaluable for in-depth troubleshooting and performance analysis.

- Query Syntax: CloudWatch Logs Insights uses a query language that allows users to specify the fields they want to extract, the conditions they want to filter by, and the aggregations they want to perform. A query chains commands such as `fields`, `filter`, `stats`, `sort`, and `limit` with the pipe character (`|`); the filtering and aggregation concepts will be familiar to users with SQL experience.

- Field Extraction: Insights can automatically extract fields from structured logs (e.g., JSON logs) or users can define custom fields using regular expressions. This allows for the analysis of specific data points within the log events.

- Aggregation Functions: Insights supports a wide range of aggregation functions, such as `count`, `sum`, `avg`, `min`, and `max`. These functions allow users to calculate statistics on their log data.

- Visualization: Insights can visualize the results of queries as tables or charts, making it easier to identify trends and patterns.

- Saved Queries: Users can save their queries for later use, which is particularly helpful for recurring analysis tasks.

Examples of Common Log Search Queries

Practical examples illustrate the power of CloudWatch Logs Insights for identifying errors, performance bottlenecks, and other issues. These queries demonstrate how to leverage the query language to extract valuable information from log data.

- Identifying Errors: To find all log events that contain the word “ERROR,” use the following query:

`filter @message like "ERROR"`

This query searches for the string “ERROR” within the `@message` field, which contains the log message.

- Identifying Specific Error Codes: To search for a specific error code, such as a 500 HTTP status code, the query might look like this, assuming the logs contain the HTTP status code:

`filter @message like "500"`

This identifies all log entries containing the string “500”. This helps to pinpoint potential issues with specific error types.

- Calculating Average Execution Time: Assuming the logs contain a field for execution time (e.g., `executionTime`), the following query calculates the average execution time:

`stats avg(executionTime) as avgExecutionTime`

This query aggregates the `executionTime` field, calculating the average and labeling it `avgExecutionTime`. This helps to assess the function’s performance.

- Identifying Slowest Lambda Function Invocation: This query, assuming the logs contain an execution time field (`executionTime`), and a timestamp field (`@timestamp`), would help pinpoint the slowest invocations:

`sort executionTime desc | limit 1`

This sorts the logs by execution time in descending order and returns the top one, representing the slowest invocation.

- Counting Successful and Failed Invocations: If the logs contain a status field (e.g., `status`), the following query counts the log events for each status value:

`stats count(*) as invocations by status`

This provides an overview of the function’s operational health by quantifying successful and failed invocations side by side.
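Queries like these can also be run from code. The sketch below uses the boto3 CloudWatch Logs client methods `start_query` and `get_query_results`, polling until the query leaves the `Scheduled` or `Running` state. The helper name `run_insights_query` is hypothetical, the client is passed in by the caller, `start` and `end` are epoch seconds, and there is no overall timeout, which a production version would want.

```python
import time


def run_insights_query(logs, log_group, query, start, end, poll_seconds=1.0):
    """Run a Logs Insights query and return the rows as plain dicts."""
    query_id = logs.start_query(
        logGroupName=log_group,
        startTime=start,  # epoch seconds
        endTime=end,      # epoch seconds
        queryString=query,
    )["queryId"]
    while True:
        result = logs.get_query_results(queryId=query_id)
        if result["status"] not in ("Scheduled", "Running"):
            break
        time.sleep(poll_seconds)
    # Each row is a list of {"field": ..., "value": ...} cells.
    return [
        {cell["field"]: cell["value"] for cell in row}
        for row in result.get("results", [])
    ]


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3, time
#   now = int(time.time())
#   rows = run_insights_query(boto3.client("logs"), "/aws/lambda/my-lambda-function",
#                             'filter @message like "ERROR"', now - 3600, now)
```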

Metrics and Alarms based on Logs

CloudWatch Logs provides the capability to transform raw log data into actionable insights through metrics and alarms. This functionality allows for proactive monitoring of Lambda function performance and health, enabling rapid identification and resolution of issues. By creating metrics from log patterns and setting up alarms, users can automate responses to specific events, such as errors or performance degradations, improving the overall reliability and efficiency of their serverless applications.

Creating CloudWatch Metrics from Log Data

Creating CloudWatch metrics from log data involves extracting relevant information from the logs and transforming it into numerical data that can be tracked over time. This process is essential for visualizing trends, identifying anomalies, and setting up alarms. To create CloudWatch metrics, the following steps are undertaken:

- Define a Metric Filter: This is the core component. A metric filter is a pattern that CloudWatch uses to search for specific strings or patterns within the log data. The filter defines the criteria for extracting data. For instance, a filter could look for the string “ERROR” to count the number of errors logged by a Lambda function.

- Assign a Metric Name and Namespace: Once the filter is defined, a metric name and namespace are assigned. The metric name is a user-defined label for the metric, such as “LambdaErrors”. The namespace is used to categorize metrics, often based on the application or service. The namespace helps organize the metrics. For example, a Lambda function deployed as part of a user authentication service might be in the “UserAuthenticationService” namespace.

- Configure Metric Value: Specify how to calculate the metric’s value. This typically involves counting occurrences of the matched pattern. The CloudWatch service automatically aggregates the values over the specified time periods (e.g., 1 minute, 5 minutes, 1 hour).

- Apply the Filter to a Log Group: Associate the metric filter with the relevant log group containing the Lambda function’s logs. This ensures that the filter is applied to the correct log data.

- Visualize Metrics: Use CloudWatch dashboards to visualize the generated metrics. These dashboards provide graphs and charts that show the metric values over time, allowing for trend analysis and anomaly detection.

For example, consider a Lambda function that processes image uploads. The function logs errors when an image fails to upload. A metric filter could be created to search for the string “UploadError” within the logs. The metric name could be “ImageUploadErrors,” and the namespace could be “ImageProcessingService.” The metric value would be the count of “UploadError” occurrences. This metric would then be displayed on a CloudWatch dashboard, allowing monitoring of upload error rates.
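The image upload example can be sketched with the boto3 CloudWatch Logs client’s `put_metric_filter` call. The metric name and namespace come from the example above; the filter name `image-upload-errors` and the helper name are assumptions of this sketch. Each matching log event adds 1 to the metric, and `defaultValue` reports 0 for periods with log data but no matches.

```python
def create_upload_error_filter(logs, log_group):
    """Count log events containing "UploadError" as a custom metric."""
    logs.put_metric_filter(
        logGroupName=log_group,
        filterName="image-upload-errors",  # hypothetical filter name
        filterPattern='"UploadError"',     # quoted term: match this exact text
        metricTransformations=[
            {
                "metricName": "ImageUploadErrors",
                "metricNamespace": "ImageProcessingService",
                "metricValue": "1",  # add 1 per matching event
                "defaultValue": 0,
            }
        ],
    )


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   create_upload_error_filter(boto3.client("logs"), "/aws/lambda/my-lambda-function")
```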

Setting Up CloudWatch Alarms to Trigger Based on Specific Log Patterns

CloudWatch alarms provide automated monitoring and alerting capabilities. They are triggered when a specified metric rises above or falls below a defined threshold for a specified number of evaluation periods. Alarms enable automated responses to issues identified in the log data. Setting up CloudWatch alarms involves the following steps:

- Choose a Metric: Select the CloudWatch metric to monitor. This is the metric generated from the log data, such as the “LambdaErrors” metric.

- Define the Threshold: Specify the threshold value that triggers the alarm. For example, an alarm might be triggered if the “LambdaErrors” metric exceeds 10 errors within a 5-minute period.

- Set the Evaluation Period: Configure the evaluation period, which defines the number of data points that must meet the threshold condition to trigger the alarm. This helps to prevent false alarms due to transient fluctuations.

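- Understand the Alarm States: An alarm is always in one of three states: “OK,” “ALARM,” or “INSUFFICIENT_DATA.” The state changes based on the metric’s behavior relative to the threshold, and you can choose how missing data points are treated when the metric is only reported occasionally.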

- Configure Actions: Define the actions to be taken when the alarm enters the “ALARM” state. These actions can include sending notifications (e.g., email, SMS), triggering automated remediation actions (e.g., scaling resources), or integrating with other services.

Consider the image upload Lambda function example. An alarm could be created for the “ImageUploadErrors” metric. The alarm would be triggered if the error count exceeded 10 errors within a 5-minute period. When the alarm enters the “ALARM” state, it would trigger a notification to the operations team, alerting them to the potential issue.
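The alarm from this example might be created with the boto3 CloudWatch client’s `put_metric_alarm` call, as sketched below. The alarm name is a placeholder, and treating missing data as not breaching is a design choice of this sketch (an error-count metric may have no data points when nothing goes wrong), not something the example above prescribes.

```python
def create_upload_error_alarm(cloudwatch, topic_arn):
    """Alarm when more than 10 upload errors occur within 5 minutes."""
    cloudwatch.put_metric_alarm(
        AlarmName="image-upload-errors-high",  # hypothetical alarm name
        Namespace="ImageProcessingService",
        MetricName="ImageUploadErrors",
        Statistic="Sum",
        Period=300,            # seconds, i.e. one 5-minute data point
        EvaluationPeriods=1,   # a single breaching period triggers the alarm
        Threshold=10,
        ComparisonOperator="GreaterThanThreshold",
        TreatMissingData="notBreaching",
        AlarmActions=[topic_arn],  # e.g. an SNS topic for notifications
    )


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   create_upload_error_alarm(boto3.client("cloudwatch"), "<sns-topic-arn>")
```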

Configuring Notifications (e.g., Email, SMS) When Alarms Are Triggered

Notifications are a critical component of CloudWatch alarms, enabling timely responses to detected issues. Notifications are typically delivered via email, SMS, or other supported channels. Configuring notifications involves these steps:

- Create an SNS Topic: Create an SNS (Simple Notification Service) topic. This topic acts as a central point for sending notifications.

- Subscribe to the SNS Topic: Subscribe to the SNS topic with the desired endpoints (e.g., email addresses, phone numbers). Subscribers receive notifications sent to the topic.

- Configure the Alarm to Send Notifications: When creating or editing the CloudWatch alarm, configure it to send notifications to the SNS topic.

- Test the Notification: After configuration, test the notification by simulating the alarm state or by manually triggering the alarm to ensure notifications are delivered as expected.

For the image upload example, an SNS topic would be created. The operations team members would subscribe to this topic with their email addresses. The “ImageUploadErrors” alarm would be configured to send notifications to this SNS topic. When the alarm is triggered, the operations team would receive an email notification, alerting them to the high error rate. This enables them to quickly investigate and resolve the issue, such as a misconfiguration in the image processing pipeline.

The email could contain details about the alarm, the affected Lambda function, and the timestamp of the event. This allows for faster troubleshooting and a more proactive approach to incident management.
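The topic and subscription steps can be sketched with the boto3 SNS client’s `create_topic` and `subscribe` calls. The helper name `create_alert_topic` and the sample topic name and address in the usage comment are placeholders. Note that each email recipient has to confirm the subscription before notifications are delivered.

```python
def create_alert_topic(sns, topic_name, email_addresses):
    """Create an SNS topic and subscribe each email address to it."""
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    for address in email_addresses:
        # The recipient receives a confirmation email for the subscription.
        sns.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=address)
    return topic_arn


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   arn = create_alert_topic(boto3.client("sns"), "image-upload-alerts",
#                            ["ops@example.com"])
```

The returned ARN is what the alarm’s notification action points at.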

Log Retention and Storage

Effective log management necessitates careful consideration of data retention policies and storage solutions. The lifecycle of log data, from initial generation to eventual archival or deletion, is a critical aspect of compliance, security, and operational efficiency. CloudWatch Logs provides built-in mechanisms for managing log retention, along with options for exporting logs to other storage services for long-term archiving and analysis.

Default Log Retention Settings in CloudWatch Logs

CloudWatch Logs provides default retention settings for log groups. These defaults are crucial for understanding the initial lifecycle of log data. The default log retention period is set to “Never expire.” This means that, by default, logs are retained indefinitely. This setting allows for comprehensive historical analysis and is suitable for situations where long-term data availability is paramount. However, this can lead to increased storage costs if not managed appropriately. CloudWatch Logs also provides a range of configurable retention periods, offering flexibility to align with various requirements.

These configurable options enable users to strike a balance between data availability and cost-effectiveness.

Configuring Custom Log Retention Periods

Customizing log retention periods allows for aligning log management with compliance mandates, data governance policies, and cost optimization strategies. This process involves modifying the retention settings of individual log groups. The configuration process is initiated within the AWS Management Console, the AWS CLI, or through infrastructure-as-code tools like AWS CloudFormation or Terraform. Within the CloudWatch Logs console, you can select a log group and modify its retention settings.

The available retention periods range from one day to ten years, in addition to never expiring.

- Setting the Retention Period: You can choose from predefined retention periods, such as 1 day, 3 days, 5 days, 7 days, 14 days, 30 days, 60 days, 90 days, 180 days, 365 days, 731 days, 1827 days, 2557 days, and 3653 days, or “Never expire.”

- Impact of Setting Changes: A new retention period applies to the whole log group, including events that are already stored. CloudWatch Logs automatically deletes log events older than the retention period; deletion happens in the background and may lag somewhat behind the expiry time.

- Considerations for Compliance: Many compliance frameworks, such as PCI DSS or HIPAA, specify minimum log retention requirements. Carefully evaluate the requirements of your compliance framework to determine the appropriate retention period.

Example: For a financial institution subject to PCI DSS, a minimum retention period of one year (365 days) might be required for security logs. Configuring this setting in CloudWatch Logs ensures compliance with this requirement.
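Setting the retention period from code can be sketched with the boto3 CloudWatch Logs client’s `put_retention_policy` call. The set of accepted values below reflects the API documentation as understood at the time of writing and should be checked against the current reference; `set_retention` is a hypothetical helper that validates the value before calling the API.

```python
# Retention values (in days) accepted by put_retention_policy; verify
# against the current API reference before relying on this list.
ALLOWED_RETENTION_DAYS = {
    1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400, 545,
    731, 1096, 1827, 2192, 2557, 2922, 3288, 3653,
}


def set_retention(logs, log_group, days):
    """Apply a retention period to a log group after validating the value."""
    if days not in ALLOWED_RETENTION_DAYS:
        raise ValueError(f"{days} is not an accepted retention period")
    logs.put_retention_policy(logGroupName=log_group, retentionInDays=days)


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   set_retention(boto3.client("logs"), "/aws/lambda/my-lambda-function", 365)
```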

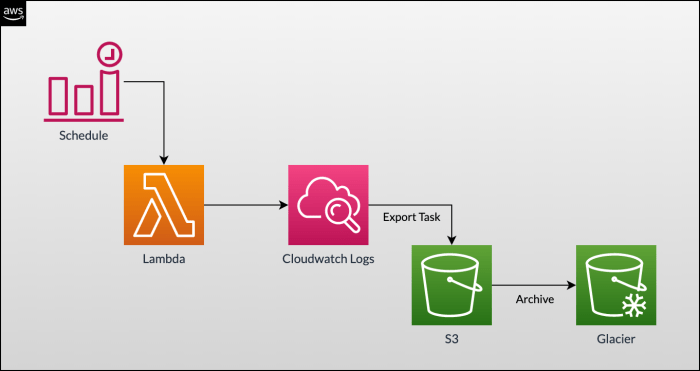

Exporting Logs to Other Storage Solutions

For long-term archiving, disaster recovery, and advanced analytics, exporting logs to other storage solutions is essential. CloudWatch Logs facilitates this process by enabling the export of log data to Amazon S3. This allows for storing logs in a more cost-effective manner and utilizing the comprehensive analytical capabilities of S3 and other services that integrate with S3, such as Amazon Athena, Amazon EMR, and AWS Lake Formation. The export process involves creating a log export task.

This task specifies the source log group, the time range for the logs to be exported, and the destination S3 bucket.

- Creating an Export Task: This task can be initiated through the AWS Management Console, the AWS CLI, or the CloudWatch Logs API. You must specify the source log group, the start and end times for the export, and the destination S3 bucket and prefix.

- Data Format and Structure: Exported log data is written to the bucket as compressed objects, organized under the chosen prefix by log stream. Each exported line carries the event’s timestamp followed by the original message, so messages that were logged as JSON remain JSON inside the exported files and stay suitable for analysis and processing.

- Cost Considerations: Exporting logs to S3 incurs costs associated with S3 storage and potential data transfer costs. Analyze your storage requirements and data access patterns to optimize costs. Consider S3 storage classes like S3 Glacier for cost-effective long-term archiving.

- Security Considerations: Ensure that appropriate security measures are in place for the S3 bucket, including encryption and access controls. Use IAM roles with least-privilege access to the S3 bucket to minimize security risks.

Example: A retail company wants to archive its application logs for regulatory compliance and potential security investigations. They configure a log export task to export logs from their Lambda functions to an S3 bucket. They use S3 Glacier for the archived logs to minimize storage costs while maintaining the ability to retrieve logs when needed. They also implement S3 bucket policies to control access to the archived logs, ensuring that only authorized personnel can access the data.
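The export task described above could be prepared along these lines. This is a minimal sketch: the helper names and the task-name format are assumptions, and the resulting dictionary is meant to be passed to boto3's `create_export_task`, which expects the time range in milliseconds since the Unix epoch.

```python
from datetime import datetime, timezone


def to_epoch_millis(moment: datetime) -> int:
    """Convert a timezone-aware datetime to milliseconds since the epoch."""
    if moment.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid ambiguity")
    return int(moment.timestamp() * 1000)


def build_export_task_params(log_group_name, bucket, prefix, start, end):
    """Build keyword arguments for logs.create_export_task."""
    if end <= start:
        raise ValueError("end must be later than start")
    return {
        # Hypothetical naming scheme; any descriptive task name works.
        "taskName": f"export-{start:%Y%m%d}-{end:%Y%m%d}",
        "logGroupName": log_group_name,
        "fromTime": to_epoch_millis(start),
        "to": to_epoch_millis(end),
        "destination": bucket,          # S3 bucket name
        "destinationPrefix": prefix,    # key prefix inside the bucket
    }
```

With boto3 installed and credentials configured, the call would look like `boto3.client("logs").create_export_task(**params)`. The bucket policy must also allow CloudWatch Logs to write to the bucket.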

Troubleshooting Common Logging Issues

Effective troubleshooting is critical for maintaining the reliability and observability of Lambda functions. Identifying and resolving issues that prevent logs from appearing in CloudWatch is paramount for debugging, performance monitoring, and security auditing. This section outlines common problems, diagnostic steps, and solutions to ensure proper log delivery and analysis.

Identifying Issues Preventing Log Appearance

Several factors can hinder the appearance of logs in CloudWatch. Understanding these potential roadblocks is the first step in effective troubleshooting.

- Incorrect IAM Permissions: The Lambda function’s execution role must have the necessary permissions to write logs to CloudWatch. Insufficient permissions are a frequent cause of missing logs. The role requires permissions such as `logs:CreateLogGroup`, `logs:CreateLogStream`, and `logs:PutLogEvents`.

- Function Configuration Errors: Misconfigured Lambda function settings can prevent log generation. This includes incorrect log group names or retention policies.

- Code-Level Errors: Errors within the function code, such as exceptions that are not properly handled or logging statements that are not executed, can lead to incomplete or missing logs.

- Concurrency Limits: Lambda functions are subject to concurrency limits. A throttled invocation never runs, so it produces no log output for that request; the evidence of throttling appears in the function’s throttle metric and in errors returned to the caller rather than in the logs.

- Network Connectivity Issues: If the Lambda function requires network access (e.g., to reach external services or databases), connectivity problems can cause it to hang or time out before it reaches its logging statements, so the expected entries never appear.

- Log Group/Stream Not Created: CloudWatch requires the presence of a log group and log stream to receive logs. If these resources are not created, or are created with incorrect settings, logs will not be stored.

- Logging Volume and Throttling: Excessive logging volume can lead to throttling by CloudWatch. This can result in dropped log events. Lambda functions and CloudWatch Logs have their own throttling mechanisms.
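For the permissions item above, a least-privilege policy for a single function might look like the following sketch. The builder function is illustrative, and the region, account ID, and function name are placeholders to be replaced with real values.

```python
import json


def build_logging_policy(region: str, account_id: str, function_name: str) -> dict:
    """Build an IAM policy document that lets one function write its logs."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allows the log group to be created on first invocation.
                "Effect": "Allow",
                "Action": "logs:CreateLogGroup",
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
            {
                # Allows streams and events only inside this function's group.
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{log_group_arn}:*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(json.dumps(build_logging_policy("us-east-1", "123456789012", "my-fn"), indent=2))
```

Scoping the second statement to the function’s own log group keeps one function from writing into another function’s logs.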

Diagnosing and Resolving Logging Problems

A systematic approach to diagnosing logging issues is essential. This involves checking various components and configurations.

- Verify IAM Permissions: Review the Lambda function’s execution role and ensure it has the necessary CloudWatch Logs permissions. Use the AWS Management Console or the AWS CLI to examine the role’s attached policies.

- Check Function Configuration: Examine the Lambda function’s configuration in the AWS Management Console. Verify the log group name, log stream settings, and the configured retention policy.

- Examine Code for Errors: Review the function’s code for exceptions, errors, and any logging statements. Use debuggers or print statements (temporarily) to verify that the logging statements are being executed and that the function’s execution path is as expected.

- Test Function Execution: Trigger the Lambda function with different inputs and test cases to ensure that logs are generated under various conditions. Check the execution logs for errors and warnings.

- Monitor CloudWatch Metrics: Utilize CloudWatch metrics to monitor the function’s invocation count, errors, and throttles. This data can help pinpoint potential performance issues.

- Analyze CloudWatch Logs Insights: Use CloudWatch Logs Insights to query and analyze the logs. This tool can help identify patterns, errors, and performance bottlenecks.

- Review Network Connectivity: If the function requires network access, verify that it can reach the required resources. Check for any network connectivity issues using tools like `ping` or `traceroute` within the Lambda function (if possible) or by examining network configuration settings.
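The permissions check in the first step can be partly automated. The sketch below inspects a policy document (for example, one retrieved from IAM and parsed into a dictionary) and reports which of the three logging actions no `Allow` statement covers. It is a simplification under stated assumptions: it ignores `Deny` statements, conditions, and resource scoping, and the function names are illustrative.

```python
from fnmatch import fnmatchcase

REQUIRED_ACTIONS = (
    "logs:CreateLogGroup",
    "logs:CreateLogStream",
    "logs:PutLogEvents",
)


def _as_list(value):
    """IAM allows a single string or a list for Action; normalize to a list."""
    return [value] if isinstance(value, str) else list(value)


def missing_log_actions(policy_document: dict) -> list:
    """Return the required logging actions not covered by any Allow statement."""
    statements = policy_document.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]

    allowed_patterns = []
    for statement in statements:
        if statement.get("Effect") == "Allow":
            allowed_patterns.extend(_as_list(statement.get("Action", [])))

    # IAM action names are case-insensitive and may contain wildcards.
    return [
        action
        for action in REQUIRED_ACTIONS
        if not any(
            fnmatchcase(action.lower(), pattern.lower())
            for pattern in allowed_patterns
        )
    ]
```

An empty result suggests the role’s policy is not the cause of missing logs, which narrows the search to the remaining steps.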

Troubleshooting Guide for Missing or Incomplete Logs

A step-by-step guide to troubleshooting common logging issues can streamline the resolution process.

- Check IAM Permissions: Confirm that the Lambda function’s execution role has the required CloudWatch Logs permissions (`logs:CreateLogGroup`, `logs:CreateLogStream`, `logs:PutLogEvents`).

- Verify Function Configuration: Review the function’s configuration in the AWS Management Console, paying close attention to the log group name and retention policy.

- Inspect the Code:

  - Examine the function code for errors, exceptions, and unhandled conditions.

  - Verify that logging statements are correctly placed and executed.

  - Use temporary print statements or a debugger to trace the function’s execution flow.

- Test Function Execution:

  - Trigger the function with various inputs to simulate different scenarios.

  - Check for errors in the execution logs.

- Monitor CloudWatch Metrics:

  - Use CloudWatch metrics to monitor function invocations, errors, and throttles.

  - Analyze the metrics to identify performance bottlenecks or throttling issues.

- Analyze CloudWatch Logs Insights:

  - Use CloudWatch Logs Insights to query and analyze the logs.

  - Search for specific error messages or patterns.

- Check for Network Issues: If the function requires network access, verify connectivity.

- Review CloudWatch Service Limits: Ensure the account isn’t exceeding CloudWatch Logs service limits.

- Check Log Group and Stream Creation: Ensure that the correct log group and stream exist and that the Lambda function is configured to write to them.

- Consider Log Volume and Throttling: If logs are being dropped, review the volume of logs being generated and the throttling limits. Consider using a different logging level (e.g., INFO instead of DEBUG) to reduce the log volume.
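One way to make the last step easy to apply is to read the log level from configuration rather than hard-coding it. The sketch below assumes a hypothetical `LOG_LEVEL` environment variable set on the function; the variable name and the fallback to INFO are illustrative choices.

```python
import logging
import os


def configure_logger(name: str = "app") -> logging.Logger:
    """Return a logger whose level comes from the LOG_LEVEL environment variable."""
    level_name = os.environ.get("LOG_LEVEL", "INFO").upper()
    level = getattr(logging, level_name, None)
    if not isinstance(level, int):
        # Unknown or misspelled level names fall back to INFO.
        level = logging.INFO
    logger = logging.getLogger(name)
    logger.setLevel(level)
    return logger


def handler(event, context):
    logger = configure_logger("orders")
    logger.debug("full event: %s", event)   # dropped unless LOG_LEVEL=DEBUG
    logger.info("handling request")
    return {"ok": True}
```

Switching between DEBUG during an investigation and INFO or WARNING in normal operation then only requires changing the function’s configuration, not redeploying code.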

Advanced Log Analysis Techniques

Analyzing logs effectively is crucial for understanding the behavior of Lambda functions, identifying performance bottlenecks, and proactively addressing potential issues. Advanced techniques extend beyond basic searching and filtering, enabling deeper insights through data extraction, visualization, and integration with other monitoring tools. This section delves into these sophisticated methods, providing a comprehensive understanding of how to leverage CloudWatch Logs for advanced log analysis.

Log Patterns for Data Extraction

Log patterns facilitate the extraction of specific data points from unstructured log entries. This process transforms raw log data into structured information, enabling more effective analysis and monitoring. Defining and implementing these patterns allows for targeted data retrieval and analysis, leading to actionable insights.

To define a log pattern, consider the following steps:

- Identify Key Data Points: Determine the specific pieces of information that need to be extracted from the logs. These could include request IDs, timestamps, error codes, user IDs, or any other relevant data.

- Analyze Log Structure: Examine the format of the log entries to understand how the data points are represented. Logs often contain fixed fields or patterns, such as a timestamp followed by a log level, a message, and then other relevant data.

- Create the Pattern: Develop a regular expression that matches the format of the log entries and captures the desired data points. CloudWatch Logs Insights accepts regular expressions in its `parse` and `filter` commands. The syntax uses quantifiers such as `?` (matches zero or one occurrence) and `*` (matches zero or more occurrences), along with other standard regex constructs, to define the extraction rules.

- Test the Pattern: Use CloudWatch Logs Insights to test the pattern against a sample of log entries. Verify that the pattern correctly extracts the desired data points.

- Apply the Pattern: Once the pattern is validated, apply it to the log group or stream. CloudWatch Logs Insights can then use the pattern to extract data from the logs.

For example, consider a log entry like:

2024-10-27T10:00:00Z [INFO] Request ID: abcdef12-3456-7890-abcd-ef1234567890 - User ID: user123 - Processing completed successfully.

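A suitable log pattern, written for the `parse` command in CloudWatch Logs Insights, could be:

parse @message /Request ID: (?<request_id>[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}) - User ID: (?<user_id>\w+)/

This pattern extracts the `request_id` and `user_id` from the log entry. The `(?<name>...)` syntax defines a named capture group, and each group becomes a field that later commands in the query can reference.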
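Before applying a pattern in CloudWatch, it can help to try the same extraction locally. The sketch below does this with Python’s `re` module against the sample entry; note that Python spells named groups `(?P<name>...)`, and the function name is illustrative.

```python
import re

# Same structure as the sample log entry: a UUID-style request ID followed
# by a user ID made of word characters.
LOG_PATTERN = re.compile(
    r"Request ID: (?P<request_id>[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12})"
    r" - User ID: (?P<user_id>\w+)"
)


def extract_fields(line: str):
    """Return the captured fields as a dict, or None if the line does not match."""
    match = LOG_PATTERN.search(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    sample = (
        "2024-10-27T10:00:00Z [INFO] Request ID: "
        "abcdef12-3456-7890-abcd-ef1234567890 - User ID: user123 - "
        "Processing completed successfully."
    )
    print(extract_fields(sample))
```

Lines that do not follow the expected layout return `None`, which makes it easy to count how many entries in a sample the pattern fails to cover.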

CloudWatch Logs Insights for Data Visualization

CloudWatch Logs Insights provides powerful data visualization capabilities, enabling the creation of charts and dashboards to monitor Lambda function performance and identify trends. Visualization tools offer an intuitive way to understand complex data patterns and anomalies, which helps in the proactive identification of issues.

CloudWatch Logs Insights supports several visualization types:

- Line Charts: Ideal for visualizing trends over time, such as the number of invocations, error rates, or latency metrics.

- Bar Charts: Useful for comparing data across different categories, such as the number of errors per function version or the distribution of latency values.

- Pie Charts: Suitable for displaying the proportion of different values, such as the percentage of successful and failed invocations.

- Stacked Area Charts: Can represent the accumulation of data points over time, showing the contribution of each component to the overall total.

To create visualizations, follow these steps:

- Query the Logs: Use CloudWatch Logs Insights to write queries that extract the data needed for the visualization. This might involve using log patterns to extract specific data points or using aggregation functions to calculate metrics.

- Select Visualization Type: Choose the appropriate visualization type based on the data being displayed. Consider the type of data, the insights needed, and the ease of interpretation.

- Configure the Chart: Customize the chart’s appearance, including the title, axes labels, and color schemes. This enhances readability and clarity.

- Save the Visualization: Save the visualization to the CloudWatch dashboard for ongoing monitoring.

For instance, to visualize the average latency of a Lambda function, a query might be written:

filter @type = "REPORT" | stats avg(@duration) as avg_duration_ms by bin(5m)

This query filters for `REPORT` log entries, reads the `@duration` field that Lambda records for each invocation (in milliseconds), and averages it over five-minute bins. Because the results are grouped by time, they can be displayed as a line chart, revealing latency trends. To track billed time instead, substitute `@billedDuration`. The visualization helps in identifying performance degradation or improvements over time.
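A latency query of this kind can also be run programmatically. The sketch below builds the arguments for boto3’s `start_query` using a variant of the query that averages `@duration` in five-minute bins; the helper name and the lookback parameter are assumptions, and times are expressed in seconds since the Unix epoch.

```python
import time

# Average invocation duration per five-minute bin, taken from REPORT lines.
LATENCY_QUERY = (
    'filter @type = "REPORT" '
    "| stats avg(@duration) as avg_duration_ms by bin(5m)"
)


def build_start_query_params(log_group_name: str, lookback_seconds: int, now=None) -> dict:
    """Build keyword arguments for logs.start_query over a recent time window."""
    if lookback_seconds <= 0:
        raise ValueError("lookback_seconds must be positive")
    end_time = int(time.time() if now is None else now)
    return {
        "logGroupName": log_group_name,
        "startTime": end_time - lookback_seconds,
        "endTime": end_time,
        "queryString": LATENCY_QUERY,
    }
```

With boto3 available, `start_query(**params)` returns a query ID, and `get_query_results` is then polled with that ID until the query status is no longer running.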

Integrating CloudWatch Logs with Other Monitoring Tools

Integrating CloudWatch Logs with other monitoring tools enhances the overall observability of Lambda functions. This integration allows for centralized monitoring, correlation of data across different systems, and the creation of more comprehensive dashboards.

Several integration options are available:

- CloudWatch Dashboards: CloudWatch Dashboards are the native tool for visualizing CloudWatch Logs data alongside other AWS service metrics. Custom widgets can be created to display charts, graphs, and tables derived from CloudWatch Logs Insights queries.

- Third-Party Monitoring Tools: Many third-party monitoring tools, such as Datadog, New Relic, and Splunk, offer integrations with CloudWatch Logs. These integrations typically involve forwarding logs to the third-party tool, where they can be analyzed and visualized. This can be done through subscription filters or by using an AWS Lambda function to send the logs.

- Custom Integrations: For more specialized needs, custom integrations can be built using AWS Lambda functions and other AWS services. These integrations can process logs in real-time, transform them, and send them to other systems for analysis. For instance, a Lambda function can be triggered by a CloudWatch Logs subscription filter to forward logs to a custom data lake.

To integrate with a third-party tool, the following steps are commonly involved:

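- Configure the Subscription Filter: Create a subscription filter on the log group that routes logs toward the third-party tool. The filter pattern selects which log events are forwarded, and the destination (a Kinesis data stream, an Amazon Data Firehose stream, or a Lambda function) handles delivery and any reformatting.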

- Set Up the Destination: Configure the destination for the logs within the third-party tool. This might involve creating an API endpoint or configuring a data ingestion pipeline.

- Verify the Integration: Verify that logs are being successfully forwarded to the third-party tool and that they are being processed correctly.

For example, to integrate CloudWatch Logs with Datadog, a subscription filter can deliver logs to an Amazon Data Firehose stream whose HTTP endpoint destination is Datadog’s log intake, or to a forwarder Lambda function that posts the logs to Datadog. A subscription filter cannot target an HTTP endpoint directly, so one of these intermediaries is required. The result is the ability to view Lambda function logs alongside other application and infrastructure metrics within the Datadog platform.
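When a Lambda function is the subscription destination, CloudWatch Logs delivers each batch as base64-encoded, gzip-compressed JSON under `event["awslogs"]["data"]`. The sketch below shows how such a payload could be unpacked before forwarding; the function names and the shape of the returned records are illustrative, and the actual delivery to a third-party tool is left out.

```python
import base64
import gzip
import json


def decode_subscription_event(event: dict) -> dict:
    """Decode the payload CloudWatch Logs sends to a subscribed Lambda function."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def extract_messages(event: dict) -> list:
    """Return one flat record per log event, ready to be forwarded elsewhere."""
    payload = decode_subscription_event(event)
    if payload.get("messageType") != "DATA_MESSAGE":
        # Control messages are connectivity checks and carry no log data.
        return []
    return [
        {
            "logGroup": payload["logGroup"],
            "logStream": payload["logStream"],
            "timestamp": log_event["timestamp"],
            "message": log_event["message"],
        }
        for log_event in payload["logEvents"]
    ]
```

A forwarding handler would call `extract_messages(event)` and send the resulting records to the chosen destination in batches.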

Best Practices for Lambda Function Logging

Effective logging is crucial for the operational success and maintainability of Lambda functions. Well-implemented logging provides insights into function behavior, facilitates debugging, and enables performance monitoring. Adhering to best practices ensures that logs are informative, efficient, and easily analyzed.

Recommendations for Writing Effective Log Messages

Creating informative log messages is a core component of effective Lambda function logging. This involves crafting messages that are clear, concise, and provide sufficient context for understanding the function’s execution path and any encountered issues.

- Use Descriptive and Contextual Messages: Log messages should clearly describe the event that occurred and provide sufficient context to understand its significance. Instead of a generic message like “Error,” include details such as “Error processing record X from SQS queue Y due to invalid data format.”

- Include Relevant Data: Incorporate relevant data, such as input parameters, function execution timestamps, and the results of operations. This allows for tracing the flow of data and identifying the root cause of problems. For example, log the `event` object received by the Lambda function, or the ID of the item being processed.

- Use Different Log Levels: Utilize different log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize messages based on their severity. This enables filtering and prioritizing log messages during analysis. DEBUG messages are typically used for detailed tracing during development, INFO messages for general operation, WARN messages for potential issues, and ERROR messages for critical failures.

- Avoid Sensitive Information: Never log sensitive information, such as API keys, passwords, or personally identifiable information (PII). This is critical for security and compliance. Consider using placeholders or redaction techniques when logging potentially sensitive data.

- Be Consistent with Formatting: Maintain consistent formatting across all log messages. This makes it easier to parse and analyze logs programmatically. Employ a standardized format, such as JSON, to structure your logs.
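The advice on sensitive information can be enforced with a small redaction step applied before anything is logged. The following is a minimal sketch: the set of key names is an assumption and would need to reflect the fields your own events actually carry.

```python
# Hypothetical list of field names to mask; extend it for your own payloads.
SENSITIVE_KEYS = {"password", "api_key", "authorization", "token", "secret"}


def redact(value):
    """Return a copy of value with sensitive dictionary entries masked."""
    if isinstance(value, dict):
        return {
            key: "[REDACTED]" if str(key).lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value


if __name__ == "__main__":
    event = {"user": "u1", "password": "hunter2", "headers": {"Authorization": "Bearer abc"}}
    print(redact(event))
```

Because the function returns a copy, the original event stays intact for the function’s own processing while only the masked version reaches the logs. Matching on key names does not catch secrets embedded in free-text values, so it complements rather than replaces careful message design.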

Strategies for Minimizing the Impact of Logging on Lambda Function Performance

Logging, while essential, can impact the performance of Lambda functions if not implemented carefully. Excessive or inefficient logging can increase function execution time, consume more resources, and increase costs. Implementing these strategies minimizes the performance overhead.

- Log Selectively: Only log the information that is truly necessary for debugging and monitoring. Avoid logging excessive amounts of data, especially at INFO or DEBUG levels, unless explicitly needed for troubleshooting a specific issue.

- Use Asynchronous Logging: Whenever possible, use asynchronous logging to avoid blocking the function’s execution. Lambda functions are designed for short execution times; synchronous logging can add significant latency. Libraries that support asynchronous logging can help decouple the logging process from the function’s primary logic.

- Batch Log Messages: If your logging framework supports it, batch log messages to reduce the number of individual log entries. This can improve efficiency, especially when logging many small events.

- Optimize Log Message Construction: Construct log messages efficiently. Avoid expensive string concatenation operations, especially within loops. Consider using string formatting techniques that are optimized for performance.

- Monitor Logging Performance: Regularly monitor the performance impact of logging on your Lambda functions. Use CloudWatch metrics to track the function’s execution time, memory usage, and the volume of logs generated. Adjust your logging strategy as needed based on these metrics.
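Two of the strategies above, selective logging and efficient message construction, can be illustrated with Python’s standard `logging` module. In this sketch, `expensive_summary` is a stand-in for any costly formatting step, and the function names are illustrative.

```python
import logging


def expensive_summary(records) -> str:
    """Stand-in for a costly serialization step."""
    return ", ".join(sorted(str(record) for record in records))


def process(records, logger: logging.Logger) -> int:
    # Passing arguments separately defers string formatting until a handler
    # actually emits the record.
    logger.info("processing %d records", len(records))

    # Guard work that is costly before formatting even starts.
    if logger.isEnabledFor(logging.DEBUG):
        logger.debug("records: %s", expensive_summary(records))

    return len(records)
```

At INFO level the summary is never built, so detailed tracing adds no cost until the level is lowered to DEBUG for an investigation.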

Importance of Using Structured Logging for Easier Analysis

Structured logging, often using formats like JSON, significantly enhances the usability of logs. It enables easier parsing, filtering, and analysis, making it simpler to extract meaningful insights from the data.

- Facilitates Automated Analysis: Structured logs are easily parsed by automated tools and services, such as CloudWatch Logs Insights, log aggregation systems, and monitoring dashboards. This enables you to automate log analysis tasks and gain insights more efficiently.

- Enables Precise Filtering and Searching: With structured logs, you can filter and search for specific events based on their attributes. For example, you can search for all errors related to a specific user ID or all events that occurred within a certain time frame.

- Improves Data Visualization: Structured logs allow you to visualize log data in various ways, such as creating charts and graphs that show trends and patterns. This helps in identifying performance bottlenecks, understanding user behavior, and detecting anomalies.

- Supports Complex Queries: Structured logging allows for more complex queries, such as joining data from different log entries or performing calculations on log data. This provides a deeper understanding of your application’s behavior.

- Enhances Debugging and Troubleshooting: By providing a consistent and structured format, structured logs make it easier to debug and troubleshoot issues. You can quickly identify the root cause of problems by examining the relevant log attributes. For example, a JSON log might include the `statusCode`, `errorMessage`, and `requestId` of an API call, making it easier to pinpoint the failure.
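A structured log line of the kind described above can be produced with a custom formatter. This is a minimal sketch using only the standard library: the `context` attribute passed through `extra` is a convention assumed here, not something the `logging` module defines.

```python
import json
import logging
import time


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    converter = time.gmtime  # report timestamps in UTC

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Fields supplied via extra={"context": {...}} become top-level keys.
        entry.update(getattr(record, "context", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry, default=str)


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("api")
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.error(
        "upstream call failed",
        extra={"context": {"statusCode": 502, "requestId": "example-id"}},
    )
```

Each attribute then becomes a field that can be filtered or aggregated directly, instead of being recovered from free text with a pattern.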

Outcome Summary

In conclusion, mastering how to use CloudWatch Logs for Lambda functions is indispensable for serverless application development. This comprehensive guide has outlined the essential aspects of log management, from initial setup and configuration to advanced analysis and integration with other monitoring tools. By embracing the best practices and techniques discussed, developers can significantly enhance the reliability, performance, and observability of their Lambda-based applications, paving the way for a more efficient and effective development lifecycle.

Essential Questionnaire

What are the primary benefits of using CloudWatch Logs for Lambda functions?

CloudWatch Logs provides centralized log storage, facilitates real-time monitoring, enables proactive troubleshooting, supports performance analysis, and offers cost-effective log retention and archival options.

How do I ensure my Lambda function has the necessary permissions to write logs to CloudWatch?

You must configure the Lambda function’s execution role to include the `logs:CreateLogGroup`, `logs:CreateLogStream`, and `logs:PutLogEvents` permissions. This grants the function the authorization to create its log group and log streams and to write data to them within CloudWatch Logs.

What is the default log format generated by Lambda functions?

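By default, Lambda writes logs as plain text: the platform adds `START`, `END`, and `REPORT` lines for each invocation, and the function’s own output is recorded as written, with the managed runtimes’ loggers typically prefixing a timestamp and request ID. A structured JSON format can be enabled in the function’s logging configuration, which facilitates easier parsing and analysis.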

How can I search for specific errors or events within my Lambda function logs?

CloudWatch Logs Insights allows you to perform advanced log searches using a purpose-built query language in which commands are chained with pipes. You can filter by specific keywords, timestamps, request IDs, or custom log fields to pinpoint relevant information.

How can I set up alarms based on log patterns?

You can create CloudWatch metrics from log data by defining metric filters, which match log events against a filter pattern and publish a metric value for each match. Then, configure CloudWatch alarms that trigger when these metrics exceed predefined thresholds, enabling automated notifications and actions.
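As a rough sketch of the first half of that setup, the helper below builds the arguments for boto3’s `put_metric_filter` so that every log event containing the term ERROR increments a counter. The filter name, metric name, and namespace are placeholders chosen for illustration.

```python
def build_error_metric_filter(log_group_name: str, namespace: str = "MyApp/Lambda") -> dict:
    """Build keyword arguments for logs.put_metric_filter counting ERROR events."""
    return {
        "logGroupName": log_group_name,
        "filterName": "error-count",       # placeholder name
        "filterPattern": "ERROR",          # matches events containing this term
        "metricTransformations": [
            {
                "metricName": "ErrorCount",    # placeholder metric name
                "metricNamespace": namespace,  # placeholder namespace
                "metricValue": "1",            # add 1 per matching event
                "defaultValue": 0,             # report 0 when nothing matches
            }
        ],
    }
```

With boto3 available, the dictionary is passed as `boto3.client("logs").put_metric_filter(**params)`. An alarm on the resulting metric is then created separately in CloudWatch, for example with `put_metric_alarm`.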