Serverless computing represents a paradigm shift in application development, offering developers the ability to build and deploy applications without managing the underlying infrastructure. This introductory exploration of serverless design patterns and best practices delves into the core concepts that underpin this architecture, emphasizing the optimization of serverless applications. We will dissect the key patterns and best practices, analyzing their impact on efficiency, scalability, and cost-effectiveness within the serverless framework.

The core of this discussion revolves around understanding how to leverage serverless architectures effectively. We will dissect a range of serverless design patterns, including Event-Driven Architecture, API Gateway Pattern, Fan-Out Pattern, and CQRS, highlighting their individual strengths and appropriate application contexts. Furthermore, we will delve into critical best practices for serverless application development, covering aspects such as code organization, monitoring and logging, security protocols, and cost optimization strategies.

The aim is to provide a comprehensive and analytical guide for navigating the serverless landscape.

Introduction to Serverless Design Patterns

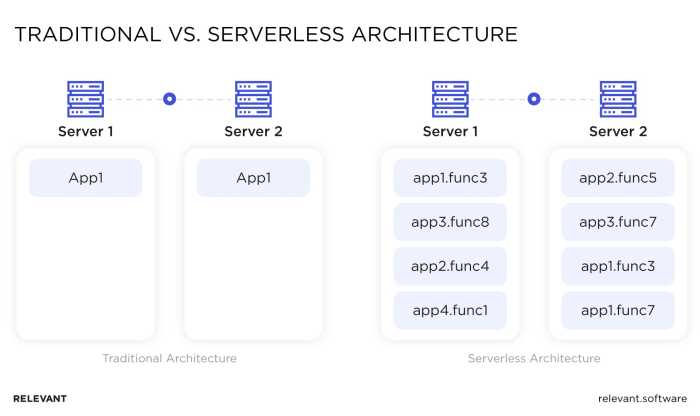

Serverless computing represents a paradigm shift in cloud architecture, abstracting away the underlying infrastructure management and allowing developers to focus on writing and deploying code. This approach enables the creation of highly scalable and cost-effective applications, fostering agility and innovation. Serverless design patterns are crucial for harnessing the full potential of this technology.

Core Concept of Serverless Computing

Serverless computing is a cloud computing execution model in which the cloud provider dynamically manages the allocation of machine resources. Developers upload code, and the provider handles infrastructure management, including server provisioning, scaling, and maintenance. Because functions run in response to events, applications scale automatically with demand and are billed only for the resources they actually consume. Key characteristics include:

- No Server Management: Developers are not responsible for managing or provisioning servers.

- Automatic Scaling: Applications automatically scale up or down based on demand.

- Event-Driven Architecture: Applications are triggered by events, such as HTTP requests, database updates, or scheduled tasks.

- Pay-per-Use Pricing: Users are charged only for the compute time and resources consumed.

- High Availability: Cloud providers typically ensure high availability through built-in redundancy.

Definition of Serverless Design Patterns

Serverless design patterns are reusable, proven solutions to common challenges encountered when building serverless applications. They provide architectural blueprints, best practices, and code snippets that optimize application performance, scalability, cost-efficiency, and maintainability. These patterns offer a structured approach to building complex serverless systems, reducing development time and minimizing potential pitfalls. The patterns help to navigate complexities arising from distributed, event-driven architectures, and the ephemeral nature of serverless functions.

Benefits of Using Serverless Design Patterns

Employing serverless design patterns offers several advantages that contribute to more robust and efficient application development. These benefits include:

- Improved Scalability: Patterns like the “Fan-Out” pattern enable parallel processing of events, significantly improving scalability. For instance, consider a social media platform that needs to process a large number of posts simultaneously. The Fan-Out pattern would distribute the processing of each post to multiple serverless functions, allowing the platform to handle a high volume of user activity without performance degradation.

- Enhanced Cost Efficiency: Patterns such as the “Throttling” pattern help to manage resource consumption and prevent unexpected costs. This is especially critical in serverless environments where you pay only for what you use. For example, a website that experiences a sudden surge in traffic can use the Throttling pattern to limit the number of requests processed concurrently, preventing the system from being overwhelmed and controlling costs.

- Increased Maintainability: Design patterns promote modularity and separation of concerns, making applications easier to understand, modify, and debug. This is critical in serverless architectures where individual functions are often independent and can be updated without affecting the entire application.

- Faster Development: Utilizing pre-built patterns accelerates the development process by providing ready-made solutions for common serverless challenges. This reduces the need to reinvent the wheel and allows developers to focus on business logic.

- Improved Reliability: Patterns such as “Retry” and “Circuit Breaker” enhance the resilience of serverless applications by handling transient failures and preventing cascading failures. The Retry pattern, for example, automatically retries failed function invocations, increasing the chances of successful execution. The Circuit Breaker pattern prevents the repeated invocation of a failing function.
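To make the two reliability patterns more concrete, here is a minimal sketch of a retry helper and a circuit breaker. The names `withRetry` and `CircuitBreaker`, the default thresholds, and the delay values are all hypothetical choices for illustration; managed platforms usually expose their own retry settings, which would normally be preferred over hand-rolled logic.

```javascript
// Hypothetical helper: retry an async operation with exponential backoff.
async function withRetry(operation, { attempts = 3, baseDelayMs = 10 } = {}) {
  let lastError;
  for (let attempt = 0; attempt < attempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      if (attempt < attempts - 1) {
        // Wait baseDelayMs, then 2x, then 4x, ... before the next attempt.
        await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** attempt));
      }
    }
  }
  throw lastError;
}

// Hypothetical circuit breaker: after `threshold` consecutive failures,
// calls are rejected immediately until `cooldownMs` has elapsed.
class CircuitBreaker {
  constructor({ threshold = 3, cooldownMs = 1000, now = Date.now } = {}) {
    this.threshold = threshold;
    this.cooldownMs = cooldownMs;
    this.now = now; // injectable clock, which keeps the class easy to test
    this.failures = 0;
    this.openedAt = null;
  }

  async call(operation) {
    if (this.openedAt !== null) {
      if (this.now() - this.openedAt < this.cooldownMs) {
        throw new Error("Circuit open: call skipped");
      }
      this.openedAt = null; // cooldown over: allow one trial call
    }
    try {
      const result = await operation();
      this.failures = 0;
      return result;
    } catch (error) {
      this.failures += 1;
      if (this.failures >= this.threshold) this.openedAt = this.now();
      throw error;
    }
  }
}
```

The retry helper suits transient errors, while the breaker stops a persistently failing dependency from being called over and over. The two can be combined by passing `() => breaker.call(operation)` to `withRetry`.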

Common Serverless Design Patterns: Event-Driven Architecture

Event-driven architecture is a fundamental pattern in serverless computing, enabling loosely coupled, scalable, and resilient systems. This approach centers around events, which are significant occurrences within the system, triggering actions in other components. This section delves into the implementation, advantages, disadvantages, and practical applications of event-driven architecture in serverless environments.

Event-Driven Architecture Implementation in Serverless Environments

Serverless event-driven architecture leverages cloud-provider services to facilitate event production, routing, and consumption. These services typically include event sources, event buses or brokers, and event consumers (functions).

- Event Sources: These are the origins of events. Examples include:

  - Cloud Storage: Object creation, deletion, or modification events from services like Amazon S3, Google Cloud Storage, or Azure Blob Storage.

  - Database Changes: Updates, inserts, or deletes in databases such as Amazon DynamoDB, Google Cloud Firestore, or Azure Cosmos DB.

  - API Gateways: Events triggered by API requests received by services like Amazon API Gateway, Google Cloud Endpoints, or Azure API Management.

  - Message Queues: Messages published to services like Amazon SQS, Google Cloud Pub/Sub, or Azure Service Bus.

- Event Buses/Brokers: These services act as intermediaries, routing events from producers to consumers. Examples include:

  - EventBridge: A fully managed serverless event bus offered by AWS, enabling routing based on event patterns.

  - Pub/Sub: Google Cloud’s global, scalable, and real-time messaging service.

  - Azure Event Grid: A fully managed event routing service provided by Microsoft Azure.

- Event Consumers (Functions): Serverless functions, such as AWS Lambda, Google Cloud Functions, or Azure Functions, are triggered by events. They perform the necessary actions in response to the events.

The workflow typically involves an event source generating an event, which is then published to an event bus or broker. The event bus filters and routes the event to the appropriate event consumers (serverless functions) based on defined rules or event patterns. The functions then execute the business logic required to process the event. This design allows for asynchronous communication and decoupling of components, promoting scalability and resilience.
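The workflow above can be sketched with a small in-memory event bus. This is only an illustration: the `EventBus` class, the event fields (`source`, `type`, `key`, `id`), and the exact-match pattern rule are assumptions made for the example, and a managed bus would deliver events asynchronously rather than calling consumers inline.

```javascript
// Hypothetical in-memory event bus; a managed service would replace this.
class EventBus {
  constructor() {
    this.rules = [];
  }

  // Register a consumer for events whose fields match every key in `pattern`.
  subscribe(pattern, consumer) {
    this.rules.push({ pattern, consumer });
  }

  // Route an event to every consumer whose pattern matches it.
  publish(event) {
    const matches = this.rules.filter(({ pattern }) =>
      Object.entries(pattern).every(([key, value]) => event[key] === value)
    );
    return matches.map(({ consumer }) => consumer(event));
  }
}

const bus = new EventBus();
const log = [];

// Consumers stand in for serverless functions.
bus.subscribe({ source: "storage", type: "ObjectCreated" }, (e) => log.push(`thumbnail:${e.key}`));
bus.subscribe({ source: "storage" }, (e) => log.push(`audit:${e.type}`));
bus.subscribe({ source: "orders" }, (e) => log.push(`order:${e.id}`));

// The storage event reaches the first two consumers but not the orders consumer.
bus.publish({ source: "storage", type: "ObjectCreated", key: "cat.png" });
console.log(log);
```

The producer only knows the bus, not the consumers, so new consumers can be added by registering another rule without touching the code that publishes events.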

Advantages and Disadvantages of Event-Driven Architecture

Event-driven architecture offers several benefits, but also presents some challenges.

- Advantages:

  - Scalability: Serverless functions can scale automatically to handle the load generated by events, allowing the system to accommodate fluctuating traffic.

  - Loose Coupling: Components are decoupled, meaning they don’t directly depend on each other. This increases flexibility and simplifies maintenance.

  - Resilience: Failures in one component do not necessarily impact others. Events can be retried or replayed if necessary.

  - Real-time Processing: Events can be processed in real-time, enabling rapid responses to changes and updates.

  - Cost-Effectiveness: Serverless functions are only invoked when an event occurs, reducing costs compared to constantly running infrastructure.

- Disadvantages:

  - Complexity: Implementing and managing event-driven systems can be more complex than traditional architectures, requiring careful design and monitoring.

  - Debugging: Debugging can be challenging because events are asynchronous and distributed. Tracing and logging are essential.

  - Eventual Consistency: Data consistency may not be immediate due to the asynchronous nature of event processing.

  - Testing: Testing event-driven systems requires simulating event generation and consumption, which can be more complex than testing synchronous systems.

  - Monitoring: Monitoring event flows and function execution is crucial for identifying and resolving issues.

Real-World Examples of Event-Driven Serverless Applications

Event-driven architecture is employed in various real-world serverless applications across different industries.

- E-commerce Order Processing:

  - Scenario: An order is placed on an e-commerce platform.

  - Events: Order placed, payment received, inventory updated, shipping initiated.

  - Functions: A function updates the inventory, a function triggers the shipping process, and another sends order confirmation emails.

- Real-time Data Streaming and Analytics:

  - Scenario: Analyzing sensor data from IoT devices.

  - Events: Sensor data readings from various devices.

  - Functions: Functions process data, trigger alerts if thresholds are exceeded, and store the data in a data warehouse.

- Image and Video Processing:

  - Scenario: Users upload images or videos to a cloud storage service.

  - Events: Object creation in cloud storage.

  - Functions: Functions resize images, generate thumbnails, extract metadata, and perform video transcoding.

- Chat Applications:

  - Scenario: Users send messages in a chat application.

  - Events: Message sent.

  - Functions: Functions process messages, send notifications, and update chat histories.

- Financial Transaction Processing:

  - Scenario: A financial transaction occurs.

  - Events: Transaction initiated, transaction completed, fraud detected.

  - Functions: Functions process transactions, update account balances, trigger fraud detection, and send notifications.

Common Serverless Design Patterns: API Gateway

Serverless architectures often require careful consideration of how APIs are exposed and managed. The API Gateway pattern is a critical component in this context, providing a centralized point of entry for client applications to interact with serverless functions and other backend services. This pattern abstracts the complexities of the underlying infrastructure, enabling developers to focus on business logic.

API Gateway Pattern

The API Gateway pattern acts as a reverse proxy, sitting in front of one or more serverless functions or other services. This central point of contact provides several crucial functionalities that enhance security, performance, and manageability of serverless applications.

The primary role of an API Gateway is to route incoming requests to the appropriate backend service based on the request’s path, method, and other criteria. This routing capability allows for a decoupled architecture where backend services can evolve independently without impacting the client-facing API.

An API Gateway offers several key functionalities:

- Routing: Directs incoming requests to the appropriate backend services based on the request’s URL, method (GET, POST, PUT, DELETE, etc.), and other headers. This allows for flexible and scalable API design. For example, a request to `/users` might be routed to a serverless function responsible for user data retrieval, while a request to `/products` might be routed to a function handling product information.

- Authentication: Verifies the identity of the client making the request. This can involve checking API keys, validating JSON Web Tokens (JWTs), or integrating with identity providers through protocols such as OAuth 2.0. Secure authentication mechanisms are crucial for protecting sensitive data and controlling access to API resources.

- Authorization: Determines whether the authenticated client has permission to access the requested resource or perform the requested action. Authorization policies define which users or roles can access specific API endpoints or perform certain operations.

- Rate Limiting: Controls the number of requests a client can make within a given time period. This helps to prevent abuse, protect backend services from overload, and ensure fair usage of API resources. Rate limiting can be implemented based on API keys, user identities, or other criteria.

- Request Transformation: Modifies the incoming request before it reaches the backend service. This can involve adding headers, transforming the request body, or validating the request parameters. Request transformation allows the API Gateway to adapt the client’s request to the specific requirements of the backend service.

- Response Transformation: Modifies the response from the backend service before it is returned to the client. This can involve formatting the response, adding headers, or masking sensitive information. Response transformation enables the API Gateway to customize the response to meet the client’s needs.

- Monitoring and Logging: Captures metrics about API usage, performance, and errors. This data is essential for monitoring the health of the API, identifying performance bottlenecks, and troubleshooting issues. API Gateways typically integrate with logging and monitoring tools to provide insights into API traffic.

The following table illustrates a basic API Gateway configuration for several API endpoints. It shows how different paths and methods are routed to different serverless functions, along with basic authentication and rate limiting settings.

| API Endpoint | HTTP Method | Backend Function | Security/Rate Limiting |
|---|---|---|---|
| /users | GET | GetUserFunction | API Key Authentication, 100 requests/minute |
| /users | POST | CreateUserFunction | API Key Authentication, 10 requests/minute |
| /products/{productId} | GET | GetProductFunction | JWT Authentication, 50 requests/minute |
| /orders | POST | CreateOrderFunction | JWT Authentication, 5 requests/minute |

This table represents a simplified configuration. In a real-world scenario, the API Gateway might also include features like request and response transformations, caching, and more sophisticated security policies.
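As a rough sketch of how such a configuration behaves, the code below models the routing, authentication check, and per-minute rate limit in plain JavaScript. Everything here is an assumption made for illustration: the `routes` shape, the `handleRequest` function, the fixed-window limiter, and the boolean `authenticated` flag, which stands in for real API key or JWT verification. `productId` is treated as a path parameter. A managed API gateway would be configured declaratively rather than coded this way.

```javascript
// Hypothetical route table mirroring the configuration above.
const routes = [
  { method: "GET", path: "/users", handler: "GetUserFunction", auth: "apiKey", limitPerMinute: 100 },
  { method: "POST", path: "/users", handler: "CreateUserFunction", auth: "apiKey", limitPerMinute: 10 },
  { method: "GET", path: "/products/{productId}", handler: "GetProductFunction", auth: "jwt", limitPerMinute: 50 },
  { method: "POST", path: "/orders", handler: "CreateOrderFunction", auth: "jwt", limitPerMinute: 5 },
];

// Find the route for a method and path; segments in braces are parameters.
function matchRoute(method, path) {
  const segments = path.split("/").filter(Boolean);
  for (const route of routes) {
    const pattern = route.path.split("/").filter(Boolean);
    if (route.method !== method || pattern.length !== segments.length) continue;
    const params = {};
    const ok = pattern.every((part, i) => {
      if (part.startsWith("{") && part.endsWith("}")) {
        params[part.slice(1, -1)] = segments[i];
        return true;
      }
      return part === segments[i];
    });
    if (ok) return { route, params };
  }
  return null;
}

// Fixed-window rate limiter keyed by client and route.
const windows = new Map();
function allowRequest(clientId, route, nowMs) {
  const key = `${clientId}:${route.method}:${route.path}`;
  const windowStart = Math.floor(nowMs / 60000);
  const entry = windows.get(key);
  if (!entry || entry.windowStart !== windowStart) {
    windows.set(key, { windowStart, count: 1 });
    return true;
  }
  entry.count += 1;
  return entry.count <= route.limitPerMinute;
}

// `authenticated` is a placeholder for real credential verification.
function handleRequest({ method, path, clientId, authenticated }, nowMs = Date.now()) {
  const match = matchRoute(method, path);
  if (!match) return { status: 404 };
  if (!authenticated) return { status: 401 };
  if (!allowRequest(clientId, match.route, nowMs)) return { status: 429 };
  return { status: 200, handler: match.route.handler, params: match.params };
}

console.log(handleRequest({ method: "GET", path: "/products/42", clientId: "demo", authenticated: true }));
```

The order of checks (route, then authentication, then rate limit) is one reasonable choice; some gateways apply throttling before authentication to shed load earlier.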

Common Serverless Design Patterns: Fan-Out

Serverless design patterns provide reusable solutions to common challenges encountered when building applications on serverless platforms. These patterns optimize performance, improve scalability, and reduce operational overhead. Understanding and applying these patterns is crucial for building efficient and cost-effective serverless applications.

Fan-Out Pattern

The Fan-Out pattern distributes a single event or request to multiple parallel processing units, enabling concurrent execution and improved throughput. This pattern is particularly beneficial for tasks that can be broken down into independent sub-tasks. It’s a fundamental pattern for building scalable serverless applications that need to handle a large volume of incoming data or requests.

Procedure to Implement the Fan-Out Pattern

Implementing the Fan-Out pattern typically involves these steps:

- Event Trigger: An event, such as a file upload, a message in a queue, or an HTTP request, triggers the initial serverless function.

- Fan-Out Function: This function receives the event and is responsible for dividing the work. It determines the number of parallel tasks required and creates an individual event or message for each sub-task. These messages are typically sent to a queue, or the function invokes the other serverless functions directly.

- Parallel Processing Units: Separate serverless functions (or other compute resources) consume the events or messages created by the Fan-Out function. These functions perform the individual sub-tasks independently.

- Aggregation (Optional): If the results from the sub-tasks need to be combined, a final function (or process) aggregates the results. This can be triggered by a completion event from the sub-tasks or by polling for results.

For instance, consider processing a large image. The initial function receives the image upload event. The fan-out function, upon receiving this event, might create several sub-tasks: resizing the image to different dimensions, generating thumbnails, and extracting metadata. Each sub-task could be handled by a separate serverless function.
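The image example can be sketched as follows. The `workers` object and `fanOut` function are hypothetical names, and the workers are local async functions standing in for separate serverless functions that would normally be reached through a queue or direct invocation. `Promise.allSettled` is used so that one failed sub-task does not discard the results of the others.

```javascript
// Hypothetical workers; each would be its own serverless function.
const workers = {
  resize: async (image) => `${image.name}@800x600`,
  thumbnail: async (image) => `${image.name}@128x128`,
  metadata: async (image) => ({ name: image.name, bytes: image.bytes }),
};

// Fan-out function: create one sub-task per worker and run them in parallel.
async function fanOut(image) {
  const names = Object.keys(workers);
  const settled = await Promise.allSettled(names.map((name) => workers[name](image)));

  // Aggregation step: collect results and failures per sub-task.
  const summary = { results: {}, failures: {} };
  settled.forEach((outcome, i) => {
    if (outcome.status === "fulfilled") summary.results[names[i]] = outcome.value;
    else summary.failures[names[i]] = outcome.reason.message;
  });
  return summary;
}

fanOut({ name: "cat.png", bytes: 2048 }).then((summary) => console.log(summary));
```

In a deployed system the aggregation step is usually a separate function, because the fan-out function should not stay running (and billed) while it waits for long sub-tasks.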

Benefits of Using the Fan-Out Pattern in a Specific Scenario

Consider a scenario where a large e-commerce platform needs to process thousands of product reviews daily. Each review requires sentiment analysis, spam detection, and potential moderation. Using the Fan-Out pattern provides several advantages:

- Increased Throughput: By distributing the processing of each review across multiple parallel functions, the overall processing time is significantly reduced. Instead of processing reviews sequentially, the platform can analyze them concurrently. This is crucial during peak traffic periods, such as during sales or promotions.

- Improved Scalability: The serverless platform automatically scales the number of processing functions based on the volume of incoming reviews. As the number of reviews increases, the platform can spin up more instances of the processing functions to handle the load, ensuring the system remains responsive.

- Enhanced Fault Tolerance: If one of the processing functions fails, only the corresponding review will be affected. The other reviews will continue to be processed by the remaining functions. This inherent fault tolerance minimizes the impact of individual function failures on the overall system.

- Cost Optimization: Serverless platforms typically charge for the compute time actually consumed. Parallel processing shortens the elapsed time needed to work through a batch rather than the total compute time billed, so the savings come mainly from not paying for idle capacity between bursts of reviews. The savings become more pronounced when review volume varies widely over time.

- Simplified Development and Maintenance: Each processing function can be developed and deployed independently. This modularity simplifies development, testing, and maintenance. Changes to one function do not require changes to the others, facilitating easier updates and bug fixes.

Common Serverless Design Patterns: CQRS

Serverless architectures, while offering significant advantages in scalability and cost-effectiveness, present unique challenges when designing complex applications. Choosing the right design pattern is crucial for optimizing performance, maintainability, and data consistency. This section focuses on the implementation of the Command Query Responsibility Segregation (CQRS) pattern within a serverless context.

CQRS Implementation in Serverless Architectures

CQRS separates read and write operations, allowing for independent scaling and optimization of each side. This decoupling is particularly beneficial in serverless environments where resources are provisioned dynamically. Implementing CQRS in a serverless context typically involves leveraging various serverless services to handle commands and queries.

- Command Processing: Commands, which represent write operations, are typically submitted through an API Gateway. The API Gateway triggers a serverless function (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) that processes the command. This function validates the command, performs the necessary business logic, and persists the data to a write-optimized data store. Examples of write-optimized data stores include Amazon DynamoDB, Azure Cosmos DB, or Google Cloud Datastore, depending on the cloud provider.

- Event Publication: After a command is successfully processed, an event is often published to an event stream (e.g., Amazon EventBridge, Azure Event Hubs, Google Cloud Pub/Sub). This event notifies the read side of the data changes, which is why the read side is eventually, rather than immediately, consistent with the write side.

- Query Processing: Queries, which represent read operations, are handled by separate serverless functions. These functions retrieve data from a read-optimized data store. This data store can be a denormalized view of the data, optimized for specific query patterns. Common read-optimized data stores include Amazon DynamoDB, Azure Cosmos DB, or Google Cloud SQL.

- Data Synchronization: Read models are populated by subscribing to the event stream generated by the write side. Serverless functions listen for these events and update the read-optimized data stores accordingly. This ensures eventual consistency between the write and read models.
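The four parts above can be sketched in a single process as follows. This is a simplified illustration under stated assumptions: the `Map` objects stand in for the write-optimized and read-optimized data stores, the `subscribers` array stands in for a managed event stream, and the `OrderCreated` event shape and function names are hypothetical. Because everything runs synchronously here, the read model is updated immediately; in a real deployment there would be a delay.

```javascript
// Write side: hypothetical write store plus an in-memory event stream.
const writeStore = new Map();
const subscribers = [];
const publish = (event) => subscribers.forEach((handler) => handler(event));

// Command handler: validate, persist, then publish an event.
function createOrder(command) {
  if (!command.orderId || !(command.total > 0)) throw new Error("Invalid command");
  writeStore.set(command.orderId, { ...command });
  publish({
    type: "OrderCreated",
    orderId: command.orderId,
    customerId: command.customerId,
    total: command.total,
  });
}

// Read side: a denormalized view keyed by customer, updated from events.
const ordersByCustomer = new Map();
subscribers.push((event) => {
  if (event.type !== "OrderCreated") return;
  const view = ordersByCustomer.get(event.customerId) || { orderCount: 0, totalSpent: 0 };
  view.orderCount += 1;
  view.totalSpent += event.total;
  ordersByCustomer.set(event.customerId, view);
});

// Query handler: reads only from the read model, never from the write store.
function getCustomerSummary(customerId) {
  return ordersByCustomer.get(customerId) || { orderCount: 0, totalSpent: 0 };
}

createOrder({ orderId: "o-1", customerId: "c-1", total: 30 });
createOrder({ orderId: "o-2", customerId: "c-1", total: 12 });
console.log(getCustomerSummary("c-1"));
```

The query never touches `writeStore`, so the read model can be reshaped or rebuilt for new query patterns without changing the command handler.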

Comparison of CQRS with Other Design Patterns

CQRS offers distinct advantages over other design patterns, particularly in scenarios with a high read-to-write ratio or complex data models. Understanding these differences helps in selecting the most appropriate pattern for a given application.

- CQRS vs. CRUD (Create, Read, Update, Delete): CRUD operations typically involve a single data store for both reads and writes. This can lead to performance bottlenecks, especially under heavy load. CQRS separates reads and writes, allowing for independent scaling and optimization. For example, a social media platform may benefit from CQRS because it has far more reads than writes. Users read posts and profiles more often than they update their own content.

- CQRS vs. Event Sourcing: Event Sourcing is often used in conjunction with CQRS. Event Sourcing stores the history of state changes as a sequence of events. CQRS uses these events to build the read models. While CQRS focuses on separating reads and writes, Event Sourcing provides a complete audit trail and the ability to reconstruct the state of the system at any point in time. Event Sourcing can be implemented with the same event streams that trigger the read model updates in a CQRS architecture.

- CQRS vs. Single-Source-of-Truth: Single-Source-of-Truth designs maintain a single, canonical data store for all operations. While simpler to implement, this approach can become a performance bottleneck under heavy load. CQRS allows for the creation of read models specifically optimized for query patterns, leading to faster read performance.

Challenges and Considerations of CQRS in Serverless

Implementing CQRS in a serverless environment presents specific challenges that must be carefully considered to ensure success.

- Eventual Consistency Management: Maintaining eventual consistency between the write and read models is crucial. This requires careful design of the event stream, event handling logic, and error handling mechanisms. Strategies include implementing idempotency in event handlers to prevent duplicate processing and using retry mechanisms for failed updates.

- Data Synchronization Latency: The time it takes for data to propagate from the write model to the read model introduces latency. This can impact the user experience, especially in scenarios where near real-time data is required. Consider techniques like optimizing event handlers for speed, choosing appropriate data stores, and potentially implementing techniques like request aggregation to minimize the impact.

- Complexity: CQRS adds complexity to the application architecture. Developers need to understand the separation of concerns, event handling, and data synchronization. This complexity can increase development time and the potential for errors. Careful design and thorough testing are essential.

- Data Consistency and Transactions: While serverless architectures often lack native support for distributed transactions, mechanisms like optimistic locking or compensating transactions can be employed to ensure data consistency. Optimistic locking involves checking a version number before updating a record. Compensating transactions are used to undo a set of actions if an error occurs.

- Monitoring and Debugging: Debugging a CQRS system can be more challenging due to the asynchronous nature of event processing. Robust logging, monitoring, and tracing are essential for identifying and resolving issues. Tools that can trace events across different serverless functions and services are invaluable.
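Two of the strategies mentioned above, idempotent event handlers and optimistic locking, can be sketched together. The `accounts` map, the `updateBalance` and `handleDeposit` functions, and the event shape are hypothetical; in a real system the version check would be a conditional write in the database and the set of processed event IDs would be persisted, not held in memory.

```javascript
// Hypothetical record store where each record carries a version number.
const accounts = new Map([["acct-1", { balance: 100, version: 1 }]]);

// Optimistic locking: the write succeeds only if the version is unchanged.
function updateBalance(id, expectedVersion, newBalance) {
  const record = accounts.get(id);
  if (!record || record.version !== expectedVersion) return false;
  accounts.set(id, { balance: newBalance, version: expectedVersion + 1 });
  return true;
}

// Idempotent event handler: remember processed event IDs and skip repeats.
const processedEventIds = new Set();
function handleDeposit(event) {
  if (processedEventIds.has(event.id)) return "duplicate";
  const current = accounts.get(event.accountId);
  const applied = updateBalance(event.accountId, current.version, current.balance + event.amount);
  if (!applied) return "conflict"; // the caller may re-read and retry
  processedEventIds.add(event.id);
  return "applied";
}

console.log(handleDeposit({ id: "evt-1", accountId: "acct-1", amount: 50 }));
console.log(handleDeposit({ id: "evt-1", accountId: "acct-1", amount: 50 })); // redelivered event
console.log(accounts.get("acct-1"));
```

Redelivering `evt-1` leaves the balance unchanged, which is the property that makes at-least-once event delivery safe for the read model.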

Best Practices for Serverless Application Development: Code Organization

Organizing serverless code effectively is crucial for maintainability, scalability, and team collaboration. A well-structured codebase minimizes errors, simplifies debugging, and facilitates future enhancements. The inherent characteristics of serverless architectures, such as distributed functions and event-driven interactions, demand a disciplined approach to code organization to prevent complexity from spiraling out of control.

Code Organization: Modularity and Reusability

Modular and reusable code components are foundational to successful serverless application development. By breaking down complex logic into smaller, independent, and reusable functions, developers can significantly improve code quality and reduce development time. This approach also enhances testability, as individual modules can be tested in isolation. The goal is to create a system where changes in one area of the application have minimal impact on other parts.

Effective code organization involves the following best practices:

- Single Responsibility Principle (SRP): Each function should have a single, well-defined purpose. This principle promotes focused code, making it easier to understand, test, and maintain. For instance, a function responsible for processing an order should not also handle payment processing; these should be separate functions.

- Directory Structure: A clear and consistent directory structure is essential. A common approach is to organize code by function, feature, or domain. For example:

  - `src/`

    - `src/orders/`

      - `src/orders/createOrder.js` (Function to create an order)

      - `src/orders/getOrder.js` (Function to retrieve an order)

    - `src/payments/`

      - `src/payments/processPayment.js` (Function to process a payment)

    - `src/users/`

      - `src/users/getUser.js` (Function to retrieve user information)

  This structure clearly separates concerns and facilitates navigation.

- Code Libraries and Shared Modules: Extract common functionality into reusable libraries or modules. This reduces code duplication and promotes consistency. For example, a library could handle database interactions, logging, or authentication.

- Dependency Injection: Inject dependencies into functions rather than hardcoding them. This improves testability and allows for easier swapping of implementations. For example, instead of directly instantiating a database connection within a function, pass the database connection as an argument.

- Configuration Management: Store configuration settings (e.g., API keys, database connection strings) separately from the code. Use environment variables or a dedicated configuration management service. This allows for easy deployment to different environments without modifying the code.

- Error Handling and Logging: Implement robust error handling and logging mechanisms. Log errors and relevant information to facilitate debugging and monitoring. Consider using a centralized logging service to aggregate logs from all functions. For example:

```javascript
try {
  // … code that might throw an error …
} catch (error) {
  console.error('An error occurred:', error);
  // Log the error to a logging service
  // Return an appropriate error response
}
```

- Versioning: Use version control (e.g., Git) and version your functions. This allows you to track changes, revert to previous versions, and collaborate effectively. Consider semantic versioning for your functions and libraries.

- Infrastructure as Code (IaC): Define your serverless infrastructure (e.g., API Gateway, Lambda functions, databases) as code using tools like AWS CloudFormation, Terraform, or Serverless Framework. This allows you to manage your infrastructure in a consistent, repeatable, and automated manner.
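The dependency injection and configuration management practices above can be illustrated with a small handler factory. The names `makeGetOrderHandler`, `fakeDb`, and the `ORDERS_TABLE` environment variable are hypothetical, and the `db.get(table, id)` interface is an assumption made for the sketch rather than the API of any particular database client.

```javascript
// Configuration comes from environment variables, with a fallback for local runs.
// ORDERS_TABLE is a hypothetical variable name.
const config = { ordersTable: process.env.ORDERS_TABLE || "orders-dev" };

// Factory that receives its dependencies instead of creating them.
function makeGetOrderHandler({ db, logger, tableName }) {
  return async function getOrder(event) {
    const order = await db.get(tableName, event.orderId);
    if (!order) {
      logger.warn(`Order ${event.orderId} not found`);
      return { statusCode: 404, body: JSON.stringify({ message: "Not found" }) };
    }
    return { statusCode: 200, body: JSON.stringify(order) };
  };
}

// In a test, an in-memory fake replaces the real database client.
const fakeDb = {
  rows: { "orders-dev": { "o-1": { orderId: "o-1", total: 42 } } },
  async get(table, id) {
    return (this.rows[table] || {})[id] || null;
  },
};

const handler = makeGetOrderHandler({ db: fakeDb, logger: console, tableName: config.ordersTable });
handler({ orderId: "o-1" }).then((response) => console.log(response.statusCode));
```

Because the handler never constructs its own database client, the same function body runs unchanged against the fake in tests and against a real client in production.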

Best Practices for Serverless Application Development: Monitoring and Logging

Monitoring and logging are crucial for the operational success of serverless applications. The ephemeral nature of serverless functions and the distributed architecture they embrace make traditional monitoring approaches inadequate. Effective monitoring and logging strategies provide insights into application behavior, performance bottlenecks, and potential errors, enabling proactive issue resolution and performance optimization. Without these practices, troubleshooting becomes significantly more difficult, and the overall reliability of the application suffers.

Monitoring and Logging Importance

Monitoring and logging are essential for several reasons in a serverless environment. These practices allow for a comprehensive understanding of the application’s state and performance.

- Rapid Troubleshooting: Serverless architectures can be complex, with numerous functions and services interacting. Detailed logs and real-time monitoring data pinpoint the source of issues quickly, reducing mean time to resolution (MTTR).

- Performance Optimization: Monitoring tools provide metrics on function execution time, memory usage, and invocation frequency. This data allows developers to identify and optimize slow-performing functions, leading to improved overall application performance and reduced costs.

- Cost Management: Serverless platforms charge based on resource consumption. Monitoring resource usage, such as function execution time and memory allocation, helps to identify potential cost inefficiencies and optimize function configurations for optimal cost-effectiveness.

- Security and Compliance: Logging provides an audit trail of application activity, which is essential for security investigations and compliance with regulatory requirements. Monitoring security-related events, such as unauthorized access attempts, helps to identify and mitigate security threats.

- Proactive Issue Detection: By establishing baselines for performance metrics and setting up alerts, developers can detect anomalies and potential issues before they impact users. This proactive approach minimizes downtime and improves the user experience.

Effective Monitoring and Logging Tools and Strategies

Implementing effective monitoring and logging requires choosing the right tools and employing appropriate strategies. A combination of these approaches ensures a comprehensive view of the application’s behavior.

- Centralized Logging: Employ a centralized logging service, such as Amazon CloudWatch Logs, Google Cloud Logging, or Azure Monitor Logs, to collect logs from all functions and services in a single location. This simplifies log analysis and correlation across different components.

- Structured Logging: Use structured logging formats, such as JSON, to facilitate easier parsing and analysis of log data. Include relevant context information, such as function name, request ID, and timestamp, in each log entry.

- Metric Collection: Collect key performance indicators (KPIs) using monitoring tools like Amazon CloudWatch Metrics, Google Cloud Monitoring, or Azure Monitor. Track metrics such as function invocation count, execution time, error rates, and resource utilization.

- Distributed Tracing: Implement distributed tracing to track requests as they flow through the serverless application. Tools like AWS X-Ray, Google Cloud Trace, and Azure Application Insights provide insights into request latency and dependencies between services.

- Alerting and Notifications: Set up alerts based on predefined thresholds for critical metrics. Configure notifications to alert relevant teams when issues arise, enabling prompt action and minimizing impact.

- Log Retention and Analysis: Define a log retention policy that balances the need for historical data with cost considerations. Regularly analyze logs to identify patterns, trends, and potential areas for improvement. Utilize log analysis tools to identify anomalies and insights from large datasets.
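The structured logging strategy above can be sketched with Python's standard `logging` module. This is a minimal illustration rather than a prescribed setup: the logger name `orders`, the function name `process_order`, and the request ID value are hypothetical, and a real function would normally take the request ID from the platform's invocation context.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "message": record.getMessage(),
            # Context fields arrive through the `extra` argument; None if absent.
            "function_name": getattr(record, "function_name", None),
            "request_id": getattr(record, "request_id", None),
        }
        return json.dumps(entry)


def build_logger(name: str = "orders") -> logging.Logger:
    """Create a logger that writes JSON lines to stdout."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers when the process is reused
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


logger = build_logger()
logger.info(
    "order received",
    extra={"function_name": "process_order", "request_id": "req-123"},
)
```

Because every entry is a single JSON object with consistent field names, a centralized logging service can filter on `request_id` or `function_name` without fragile text parsing.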

Mock Monitoring Dashboard Design

A well-designed monitoring dashboard provides a visual representation of key metrics and allows for easy analysis of application health and performance. The dashboard should display real-time data and historical trends.

The following is a descriptive breakdown of a mock monitoring dashboard:

| Metric | Description | Data Source | Visualization | Alerting Threshold |
|---|---|---|---|---|
| Function Invocation Count | The total number of times a function has been executed within a specific time period. This is a measure of function activity and application usage. | CloudWatch Metrics (or equivalent from other providers) | Line graph showing invocation count over time. | Alert if invocations drop significantly (e.g., a 50% decrease from the average over the past hour) to indicate potential issues or reduced user activity. |
| Function Execution Time (P95) | The 95th percentile of execution times for a specific function. This metric identifies the slowest executions, providing insight into potential performance bottlenecks. | CloudWatch Metrics (or equivalent) | Line graph showing the P95 execution time over time, with a horizontal line indicating the average execution time. | Alert if the P95 execution time exceeds a predefined threshold (e.g., 500ms) to indicate performance degradation. |
| Error Rate | The percentage of function invocations that result in an error (e.g., 5xx HTTP status codes). This metric indicates the overall health of the application. | CloudWatch Metrics (or equivalent) and application logs. | Line graph showing the error rate over time. | Alert if the error rate exceeds a certain percentage (e.g., 1% or 5%) to indicate potential issues. |
| Concurrent Executions | The number of function instances running simultaneously. This metric indicates the scaling behavior of the application. | CloudWatch Metrics (or equivalent) | Line graph showing concurrent executions over time. | Alert if the concurrent executions reach the account’s or function’s configured concurrency limits, indicating the need for further scaling or optimization. |
| Throttling Errors | The number of times a function invocation was throttled due to resource limits. This metric indicates if the function is hitting resource limits. | CloudWatch Metrics (or equivalent) | Line graph showing throttling errors over time. | Alert immediately if any throttling errors occur, indicating the need for resource adjustments. |
| Cold Start Count | The number of times a function instance had to be initialized (cold start). This metric is crucial for understanding the impact of function initialization on performance. | CloudWatch Metrics (or equivalent) and function logs. | Line graph showing cold start count over time. | Alert if the cold start count is consistently high, as it can negatively impact the user experience. |

The dashboard would also include:

- Service Map: A visual representation of the application’s architecture, showing the relationships between different functions and services, and their current health status (e.g., green for healthy, red for error).

- Log Search and Analysis: Direct access to log data, allowing for quick filtering and searching based on function name, request ID, or other relevant criteria.

- Detailed Function View: A drill-down view for each function, providing detailed metrics, logs, and traces specific to that function.
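To make the alerting thresholds in the mock dashboard concrete, the sketch below evaluates two of them (P95 execution time and error rate) over a batch of invocation samples. It is an illustration only: the `Invocation` type, the nearest-rank percentile method, and the default limits (500 ms and 1%, taken from the examples in the table) are assumptions. A managed monitoring service would normally compute these values itself.

```python
import math
from dataclasses import dataclass


@dataclass
class Invocation:
    duration_ms: float
    failed: bool


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_alerts(
    invocations: list[Invocation],
    p95_limit_ms: float = 500.0,
    error_rate_limit: float = 0.01,
) -> list[str]:
    """Return a human-readable message for every threshold that is exceeded."""
    p95 = percentile([i.duration_ms for i in invocations], 95)
    error_rate = sum(i.failed for i in invocations) / len(invocations)

    alerts = []
    if p95 > p95_limit_ms:
        alerts.append(f"P95 execution time {p95:.0f} ms exceeds {p95_limit_ms:.0f} ms")
    if error_rate > error_rate_limit:
        alerts.append(f"Error rate {error_rate:.1%} exceeds {error_rate_limit:.1%}")
    return alerts
```

Using a percentile rather than the mean keeps a handful of very slow invocations visible instead of averaging them away.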

Best Practices for Serverless Application Development: Security

Serverless architectures, while offering numerous benefits, introduce unique security challenges. The distributed nature of serverless applications, with their reliance on third-party services and event-driven interactions, expands the attack surface. Therefore, a proactive and comprehensive security strategy is paramount to protect serverless applications from vulnerabilities and ensure data integrity. Implementing robust security measures is crucial for maintaining trust and complying with regulatory requirements.

Key Security Considerations for Serverless Applications

Several critical areas require careful attention when securing serverless applications. These considerations encompass various aspects of the application lifecycle, from development to deployment and ongoing operation. Ignoring these can lead to severe security breaches.

- Identity and Access Management (IAM): Properly managing identities and controlling access to resources is fundamental. Incorrectly configured IAM roles can lead to unauthorized access and data breaches.

- Function Security: Securing individual serverless functions involves protecting their code, dependencies, and execution environment. This includes minimizing dependencies and regularly patching vulnerabilities.

- Data Security: Data encryption, both in transit and at rest, is essential to protect sensitive information. This involves utilizing encryption keys and implementing secure storage solutions.

- Event Source Security: Securing the event sources that trigger serverless functions is crucial. Malicious events can trigger unintended function executions and potentially lead to denial-of-service or data manipulation attacks.

- Monitoring and Logging: Comprehensive monitoring and logging are vital for detecting and responding to security incidents. This involves collecting logs, setting up alerts, and analyzing security events.

- Dependency Management: Serverless functions often rely on external libraries and dependencies. Regularly scanning these dependencies for vulnerabilities and updating them promptly is crucial to mitigate risks.

- Compliance: Serverless applications must comply with relevant security standards and regulations. This includes adhering to industry best practices and implementing necessary controls to meet compliance requirements.

Examples of Security Best Practices: Access Control and Data Encryption

Implementing access control and data encryption are two cornerstones of securing serverless applications. These practices, when properly applied, can significantly reduce the risk of unauthorized access and data compromise.

- Access Control Best Practices:

- IAM Roles and Permissions: Assign IAM roles with the minimum necessary permissions to each function. Avoid granting broad, unrestricted access. For example, a function that processes images stored in an S3 bucket should only have read access to that specific bucket.

- Function-Specific Permissions: Grant permissions to functions based on their specific tasks. Do not allow functions to access resources they do not need.

- API Gateway Security: Utilize API Gateway features like authentication and authorization to control access to APIs. Implement token-based authentication and integrate with identity providers.

- Context-Aware Access: Consider using context-aware access control, which takes into account factors like user location, device, and time of day.

- Regular Audits: Regularly audit IAM roles and permissions to ensure they remain appropriate and compliant with security policies.

- Data Encryption Best Practices:

- Encryption in Transit: Use HTTPS for all communications between clients and serverless functions. This encrypts data as it travels over the network.

- Encryption at Rest: Encrypt data stored in databases, object storage, and other data stores. Utilize managed encryption services provided by cloud providers, such as AWS KMS or Azure Key Vault.

- Key Management: Securely manage encryption keys. Avoid hardcoding keys in code. Utilize key management services to generate, store, and rotate keys.

- Envelope Encryption: Use envelope encryption to encrypt data with a data encryption key (DEK) and then encrypt the DEK with a key encryption key (KEK). This allows for more secure key management.

- Regular Key Rotation: Rotate encryption keys regularly to minimize the impact of a potential key compromise.

Effective access control ensures that only authorized users and services can access specific resources and functions. Implementing the principle of least privilege is a crucial element.

Data encryption protects sensitive information from unauthorized access, even if the underlying storage or network is compromised. Implementing encryption both in transit and at rest is crucial.
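The least-privilege example from the access control list (an image-processing function that may only read from one bucket) could be expressed as an IAM policy document along the following lines. This is a sketch under stated assumptions: the helper names and the bucket name passed in are hypothetical, and `has_wildcard_grant` is a deliberately simple audit check, not a full policy analyzer.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build an IAM policy document that allows reading objects from one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


def _as_list(value) -> list:
    """IAM allows a single string or a list for Action and Resource."""
    return value if isinstance(value, list) else [value]


def has_wildcard_grant(policy: dict) -> bool:
    """Flag Allow statements that grant every action or every resource."""
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        if "*" in _as_list(statement.get("Action", [])):
            return True
        if "*" in _as_list(statement.get("Resource", [])):
            return True
    return False


policy = read_only_bucket_policy("example-image-bucket")  # hypothetical bucket name
print(json.dumps(policy, indent=2))
```

A check like `has_wildcard_grant` can run in a deployment pipeline to support the regular audits recommended above.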

Guide to Securing Serverless Functions

Securing serverless functions requires a multi-layered approach that encompasses code, configuration, and operational practices. The following guide provides a step-by-step approach to securing your serverless functions.

- Secure Code Development:

Implement secure coding practices to prevent vulnerabilities in your function code.

- Input Validation: Validate all input data to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Dependency Management: Use a package manager to manage dependencies and regularly update them to patch security vulnerabilities.

- Code Reviews: Conduct code reviews to identify and address security flaws.

- Static Analysis: Utilize static analysis tools to automatically scan your code for vulnerabilities.

- Secrets Management: Never hardcode secrets (e.g., API keys, database credentials) in your code. Use a secrets management service (e.g., AWS Secrets Manager, Azure Key Vault) to store and retrieve secrets securely.
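As an illustration of the input validation practice above, the following sketch checks an incoming payload against an explicit allow-list of fields before any business logic runs. The `SignupRequest` shape, the field names, and the length limit are hypothetical, and the email pattern is intentionally loose. Validation of this kind complements parameterized queries and output encoding rather than replacing them.

```python
import re
from dataclasses import dataclass

EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
MAX_NAME_LENGTH = 100  # assumed limit for this example
ALLOWED_FIELDS = {"email", "display_name"}


class ValidationError(ValueError):
    """Raised when a payload does not match the expected shape."""


@dataclass(frozen=True)
class SignupRequest:
    email: str
    display_name: str


def parse_signup(payload: dict) -> SignupRequest:
    """Validate an untrusted payload and return a normalized request object."""
    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        raise ValidationError(f"unexpected fields: {sorted(unexpected)}")

    email = payload.get("email")
    if not isinstance(email, str) or not EMAIL_PATTERN.fullmatch(email):
        raise ValidationError("email is missing or malformed")

    name = payload.get("display_name")
    if not isinstance(name, str) or not 1 <= len(name.strip()) <= MAX_NAME_LENGTH:
        raise ValidationError("display_name is missing or has an invalid length")

    return SignupRequest(email=email.lower(), display_name=name.strip())
```

Rejecting unknown fields and wrong types at the function boundary means the rest of the handler only ever sees data in a known shape.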

- Secure Function Configuration:

Configure your functions securely to protect their execution environment.

- IAM Role Configuration: Assign an IAM role with the principle of least privilege to each function.

- Network Configuration: Control network access to your functions. Use VPCs and security groups to restrict access to specific IP addresses or subnets.

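- Resource Limits: Set resource limits (e.g., memory, execution time) to limit the impact of denial-of-service attacks and runaway invocations, which in a pay-per-use model also translate directly into cost.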

- Logging and Monitoring: Enable detailed logging and monitoring to track function activity and detect security incidents.

- Secure Deployment and Operations:

Implement secure practices throughout the deployment and operational phases.

- Automated Deployments: Use automated deployment pipelines to ensure consistent and repeatable deployments.

- Infrastructure as Code (IaC): Use IaC tools (e.g., Terraform, AWS CloudFormation) to define and manage your infrastructure securely.

- Vulnerability Scanning: Regularly scan your function code and dependencies for vulnerabilities.

- Incident Response: Develop and test an incident response plan to handle security incidents effectively.

- Regular Audits: Conduct regular security audits to assess your security posture and identify areas for improvement.

Best Practices for Serverless Application Development: Cost Optimization

Serverless computing offers significant advantages in terms of scalability and agility, but its cost structure requires careful management. Unlike traditional infrastructure where costs are often fixed, serverless costs are directly tied to resource consumption. Therefore, optimizing cost is a critical aspect of serverless application development. This involves understanding how serverless platforms charge for resources and implementing strategies to minimize unnecessary expenditure.

Cost Optimization

Cost optimization in serverless applications focuses on minimizing resource consumption and leveraging the pay-per-use model efficiently. This necessitates a proactive approach to monitoring, analyzing, and refining the application’s architecture and implementation. The goal is to balance performance requirements with cost constraints, ensuring the application delivers value without excessive operational expenses.

- Right-Sizing Functions: Function memory allocation directly impacts cost. Allocating more memory than required increases costs without necessarily improving performance. Conversely, insufficient memory can lead to performance degradation and potentially increased invocation duration, which also translates to higher costs. The optimal memory allocation depends on the function’s workload and the performance characteristics of the underlying platform.

For example, a function processing image thumbnails might initially be allocated 1 GB of memory. By monitoring its performance and resource utilization (CPU, memory, and network I/O), it can be determined if this is excessive. If the function consistently utilizes only a fraction of the allocated memory and CPU, the memory allocation can be reduced. A/B testing different memory allocations can further refine the optimal configuration. In AWS Lambda, this can be done by adjusting the “Memory (MB)” setting during function configuration.
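The trade-off between memory allocation and duration can be worked through numerically. The sketch below assumes a simplified billing model in which compute cost is proportional to allocated memory multiplied by execution time (GB-seconds). The price constant and the measured durations are placeholders for illustration, not quoted rates or real benchmarks, and per-request charges are ignored.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # placeholder rate; check your provider's current pricing


def compute_cost(
    memory_mb: int,
    avg_duration_ms: float,
    invocations: int,
    price_per_gb_second: float = PRICE_PER_GB_SECOND,
) -> float:
    """Estimate compute cost as GB-seconds consumed times the unit price."""
    gb_seconds = (memory_mb / 1024) * (avg_duration_ms / 1000) * invocations
    return gb_seconds * price_per_gb_second


def cheapest_memory_setting(measured_ms_by_memory: dict[int, float], invocations: int) -> int:
    """Pick the memory setting with the lowest estimated cost for the measured durations."""
    return min(
        measured_ms_by_memory,
        key=lambda mb: compute_cost(mb, measured_ms_by_memory[mb], invocations),
    )


# Hypothetical measurements: average duration (ms) observed at each memory setting (MB).
measurements = {1024: 400.0, 512: 450.0, 256: 1100.0}
best = cheapest_memory_setting(measurements, invocations=1_000_000)
print(best, round(compute_cost(best, measurements[best], 1_000_000), 2))
```

With these made-up numbers the middle setting is cheapest: halving memory from 1 GB barely slows the function, while halving it again more than doubles the duration. This is why measuring at several settings matters more than simply choosing the smallest allocation.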

- Optimizing Resource Usage: Minimizing the duration and frequency of function invocations directly translates to cost savings. Several techniques can be employed to achieve this.

- Efficient Code: Writing efficient code that minimizes execution time is crucial. This includes optimizing algorithms, reducing the number of external API calls, and minimizing the size of dependencies. For instance, avoiding unnecessary data serialization/deserialization can reduce execution time.

- Connection Pooling: For functions that interact with databases, establishing a connection once and reusing it across invocations (for example, by creating it outside the handler or through a connection pool) significantly reduces connection overhead. This shortens execution duration on warm invocations, since only a cold start pays the cost of opening the connection.

- Caching: Implementing caching mechanisms, such as using in-memory caches or external caching services like Amazon ElastiCache or Redis, can reduce the load on backend services and minimize the need for repeated data retrieval.

- Idempotency: Ensuring that functions are idempotent (can be safely executed multiple times without unintended side effects) allows for the safe implementation of retry mechanisms, which can help mitigate transient errors and reduce the overall number of failed invocations.
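The idempotency technique above can be sketched as a thin wrapper that records the result of each operation under an idempotency key and returns the stored result on a retry. This is a simplified illustration: the `idempotency_key` field and the class name are hypothetical, and the in-memory dictionary stands in for a durable store. A real deployment would need a shared store with an atomic conditional write, because function instances do not share memory.

```python
from typing import Callable


class IdempotentProcessor:
    """Run a side-effecting operation at most once per idempotency key."""

    def __init__(self, operation: Callable[[dict], dict]):
        self._operation = operation
        # Stand-in for a durable store (assumption for this sketch).
        self._results: dict[str, dict] = {}

    def handle(self, event: dict) -> dict:
        key = event["idempotency_key"]
        if key in self._results:
            # Retry or duplicate delivery: return the stored result, skip the side effect.
            return self._results[key]
        result = self._operation(event)
        self._results[key] = result
        return result


charges = []


def charge_customer(event: dict) -> dict:
    """Hypothetical side effect: record a charge."""
    charges.append(event["amount"])
    return {"status": "charged", "amount": event["amount"]}


processor = IdempotentProcessor(charge_customer)
first = processor.handle({"idempotency_key": "order-42", "amount": 30})
retry = processor.handle({"idempotency_key": "order-42", "amount": 30})
print(first == retry, len(charges))
```

Because the retry returns the stored result, automatic retries after a timeout do not charge the customer twice.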

- Leveraging Event-Driven Architectures: Employing event-driven architectures can optimize costs by triggering functions only when necessary. This avoids unnecessary function invocations.

For instance, instead of polling for changes in a database, a serverless application can use database triggers to invoke functions only when data is inserted, updated, or deleted. This reduces the compute time and resources consumed. AWS DynamoDB Streams and Google Cloud Pub/Sub are examples of services that support event-driven architectures.

- Monitoring and Alerting: Implementing comprehensive monitoring and alerting systems is essential for identifying cost anomalies and performance bottlenecks. Metrics such as function invocation duration, memory utilization, error rates, and cold start times should be tracked.

Setting up alerts that trigger when specific thresholds are exceeded allows for proactive intervention. For example, an alert can be configured to notify the development team if the average function invocation duration exceeds a defined threshold. This can prompt an investigation into potential performance issues or cost inefficiencies. CloudWatch in AWS and Cloud Monitoring in Google Cloud are examples of monitoring services that can be used for serverless applications.
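Returning to the database-trigger approach described earlier in this list, the sketch below shows what a function invoked by a change stream might look like. It assumes the DynamoDB Streams record layout (a `Records` list whose entries carry an `eventName` of `INSERT`, `MODIFY`, or `REMOVE`). The sample event and the counting logic are illustrative placeholders for real processing.

```python
from collections import Counter


def handler(event: dict, context=None) -> dict:
    """Count change types in one batch of stream records."""
    counts = Counter()
    for record in event.get("Records", []):
        event_name = record.get("eventName")
        if event_name in ("INSERT", "MODIFY", "REMOVE"):
            counts[event_name] += 1
            # A real handler would act on record["dynamodb"] here,
            # for example by updating a search index or a cache.
    return dict(counts)


# Hypothetical batch, shaped like a stream event with two changes.
sample_event = {
    "Records": [
        {"eventName": "INSERT", "dynamodb": {"Keys": {"id": {"S": "1"}}}},
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"id": {"S": "2"}}}},
    ]
}
print(handler(sample_event))
```

The function runs only when the table changes, so no compute time is spent on polling cycles that find nothing new.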

- Choosing the Right Platform and Region: Serverless platforms have different pricing models and costs that vary depending on the region. Selecting the most cost-effective platform and region for the application’s requirements is important.

For example, AWS Lambda pricing varies based on the region, with some regions offering lower prices than others. Evaluating pricing across regions alongside proximity to the application’s users helps balance latency against cost, since the nearest region is not always the cheapest. Similarly, comparing the pricing models of different serverless platforms, such as AWS Lambda, Google Cloud Functions, and Azure Functions, is essential to determine the most cost-effective solution for a specific use case.

- Optimizing Data Transfer Costs: Data transfer costs can significantly contribute to the overall serverless application costs. Reducing data transfer involves several practices.

- Data Compression: Compressing data before transfer reduces the amount of data that needs to be transferred, thus lowering the cost. Gzip or Brotli can be used for this purpose.

- Data Localization: Storing data closer to the function’s execution location minimizes data transfer costs. Utilizing Content Delivery Networks (CDNs) for static content also helps.

- Batching Operations: Batching operations, like database writes, reduces the number of individual operations, potentially lowering costs associated with those operations.
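Two of the practices above, compression and batching, can be sketched with the Python standard library. The helper names and the sample payload are hypothetical, and the batch size shown in the usage line is arbitrary; the right value is whatever limit the target service imposes per request.

```python
import gzip
import json
from typing import Iterator


def compress_json(payload) -> bytes:
    """Serialize to compact JSON and gzip the result before transfer."""
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return gzip.compress(raw)


def decompress_json(blob: bytes):
    """Reverse compress_json on the receiving side."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


def batches(items: list, size: int) -> Iterator[list]:
    """Yield consecutive chunks of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Hypothetical, highly repetitive payload: the kind that compresses well.
readings = [{"sensor": "s1", "reading": 20.5} for _ in range(500)]
blob = compress_json(readings)
print(len(json.dumps(readings)), len(blob))

for chunk in batches(readings, 25):
    pass  # one write request per chunk instead of one per item
```

Compression pays off most on repetitive text formats such as JSON. Data that is already compressed (images, video) gains little and still costs CPU time to process.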

- Using Serverless Compute Services Appropriately: Not all workloads are well-suited for serverless. Certain workloads, such as long-running batch jobs or computationally intensive tasks, might be more cost-effective when running on traditional virtual machines or container-based services.

Evaluating the application’s characteristics and selecting the appropriate compute service for each component is important. For instance, an application might use serverless functions for API endpoints and a container-based service for processing large datasets.

End of Discussion

In conclusion, mastering serverless design patterns and adhering to best practices are crucial for building robust, scalable, and cost-efficient applications. This analysis has underscored the importance of strategic pattern selection, efficient code organization, vigilant monitoring, robust security, and proactive cost management. The serverless landscape is dynamic; continuous learning and adaptation are vital to fully exploit its potential. By embracing these principles, developers can harness the power of serverless computing to create innovative and impactful solutions.

FAQ Section

What is the primary advantage of using serverless computing?

The primary advantage is the reduced operational overhead; developers can focus on writing code without managing servers, leading to faster development cycles and reduced infrastructure costs.

How does serverless impact scalability?

Serverless applications are inherently scalable. The cloud provider automatically scales resources based on demand, allowing applications to handle fluctuating workloads without manual intervention.

What are the common security concerns in serverless applications?

Common concerns include access control, data encryption, and securing function code. Proper authentication, authorization, and input validation are crucial for mitigating risks.

How can I optimize the cost of a serverless application?

Cost optimization strategies include right-sizing functions, optimizing resource usage, and implementing cost monitoring tools to identify and address inefficiencies.

What is the role of an API Gateway in a serverless architecture?

An API Gateway acts as a central entry point for API requests, handling routing, authentication, rate limiting, and other functionalities to manage and secure API access.