The shift towards serverless architecture represents a fundamental evolution in cloud computing, promising significant advantages over traditional infrastructure models. This analysis will explore the core benefits of adopting serverless, examining its impact on cost, scalability, developer productivity, and overall business agility. By examining the underlying principles and practical applications, we aim to provide a clear and concise understanding of the value proposition serverless offers.

Serverless computing, at its core, allows developers to build and run applications without managing servers. This paradigm shift fundamentally alters the operational landscape, automating infrastructure management tasks and enabling a focus on code and innovation. The following sections will delve into the specifics of each benefit, providing a comprehensive overview of how serverless architecture can transform application development and deployment.

Cost Optimization

Serverless architecture presents significant advantages in cost management compared to traditional infrastructure models. The core principle driving these savings is the “pay-per-use” model, which eliminates the need for upfront resource provisioning and minimizes idle capacity costs. This section will delve into the mechanisms behind these cost reductions, providing a comparative analysis and highlighting specific scenarios where serverless demonstrates superior cost efficiency.

Pay-per-Use Model

The serverless approach fundamentally alters the cost structure of application deployment. Instead of paying for provisioned resources regardless of utilization, serverless providers charge only for the actual compute time, memory consumption, and other resources consumed by a function execution. This contrasts sharply with traditional models where developers must provision and pay for servers, databases, and other infrastructure components, even when these resources are idle.
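To make the pay-per-use idea concrete, the sketch below estimates a monthly bill from invocation count, average duration, and memory size. The two rates are placeholder values chosen for illustration, not any provider's published prices, and the function name `estimate_monthly_cost` is hypothetical. Free tiers and data transfer charges are ignored.

```python
# Illustrative placeholder rates (assumptions, not real provider pricing).
PRICE_PER_GB_SECOND = 0.0000166667   # compute charge per GB-second
PRICE_PER_MILLION_REQUESTS = 0.20    # request charge per million invocations


def estimate_monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate a monthly serverless bill under a simple pay-per-use model."""
    # Compute is billed on memory (GB) multiplied by execution time (seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    request_cost = (invocations / 1_000_000) * PRICE_PER_MILLION_REQUESTS
    return compute_cost + request_cost


# An idle month costs nothing; a busy month scales with usage.
print(estimate_monthly_cost(0, 200, 512))
print(round(estimate_monthly_cost(3_000_000, 200, 512), 2))
```

With zero invocations the estimate is zero, which is the property that separates this model from paying for provisioned capacity.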

Cost Comparison: Serverless vs. Traditional Architectures

The following table illustrates the cost differences between serverless and traditional architectures when handling fluctuating workloads. The data is a simplified representation, and actual costs vary with the cloud provider, region, and application requirements. The general trends tend to hold, although under sustained high load the advantage depends on how much over-provisioning the traditional setup would need.

| Workload Scenario | Traditional Architecture (e.g., VMs) | Serverless Architecture (e.g., Functions as a Service) | Cost Implications |
|---|---|---|---|
| Low Traffic / Idle Time | Fixed costs for provisioned resources (e.g., server instances): significant waste. | Costs only incurred when functions are invoked: minimal cost. | Serverless significantly cheaper due to zero cost during idle periods. |
| Moderate Traffic | Scaling costs (e.g., adding more server instances): requires manual intervention or automated scaling. | Automatic scaling based on demand: pay only for actual resource consumption. | Serverless generally cheaper due to optimized resource allocation and auto-scaling capabilities. |
| High Traffic / Spikes | Scaling challenges: potential for performance bottlenecks and over-provisioning to handle peak loads. | Automatic scaling with minimal latency: handles sudden spikes efficiently. | Serverless offers better performance and cost efficiency due to elastic scaling and reduced over-provisioning needs. |
| Peak Traffic / Sustained High Load | Requires careful capacity planning and potential for over-provisioning to ensure performance. | Costs increase proportionally to resource consumption: pay-as-you-go model. | Serverless can still be cost-effective, especially if the traditional architecture requires substantial over-provisioning. The difference is that costs are directly linked to usage. |
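One way to reason about the table is to find the request volume at which an always-on server and a pay-per-use function cost the same. The sketch below does that arithmetic; both prices are hypothetical figures chosen only to make the comparison visible.

```python
def serverless_cost(requests: int, cost_per_request: float) -> float:
    """Pay-per-use: cost grows linearly with the number of requests."""
    return requests * cost_per_request


def break_even_requests(fixed_monthly_cost: float, cost_per_request: float) -> float:
    """Requests per month at which both models cost the same."""
    return fixed_monthly_cost / cost_per_request


FIXED_VM_COST = 70.0          # hypothetical monthly cost of an always-on VM
COST_PER_REQUEST = 0.000002   # hypothetical all-in cost of one invocation

threshold = break_even_requests(FIXED_VM_COST, COST_PER_REQUEST)
for monthly_requests in (100_000, 10_000_000, 100_000_000):
    cheaper = "serverless" if monthly_requests < threshold else "fixed VM"
    cost = round(serverless_cost(monthly_requests, COST_PER_REQUEST), 2)
    print(monthly_requests, cost, cheaper)
```

Under these assumed prices, sustained load above the threshold favors the fixed server, which is why the last row of the table depends on how much over-provisioning the traditional setup needs.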

Cost Efficiency in Specific Scenarios

Serverless architecture excels in cost efficiency, particularly in several application categories. This advantage stems from the pay-per-use model and the ability to scale resources automatically.

- Event-Driven Applications: Event-driven applications, which respond to triggers like file uploads, database updates, or message queue entries, are ideally suited for serverless. Because functions are only invoked when an event occurs, the cost is directly tied to the number of events processed. For example, an image resizing service triggered by file uploads to a cloud storage bucket would only incur costs when images are uploaded, making it highly cost-effective.

- Infrequent Tasks: Tasks that are executed infrequently, such as batch processing jobs, scheduled reports, or background maintenance operations, benefit significantly from serverless. The absence of constant resource allocation means that costs are minimized. For example, a daily data aggregation job might only run for a few minutes each day, incurring minimal serverless costs compared to the continuous cost of a traditional server.

- Web Applications with Variable Traffic: Web applications that experience fluctuating traffic patterns can leverage serverless scaling capabilities to reduce costs. During periods of low traffic, the application consumes minimal resources, resulting in lower costs. When traffic increases, the serverless platform automatically scales the resources, ensuring optimal performance without requiring manual intervention or incurring high fixed costs.

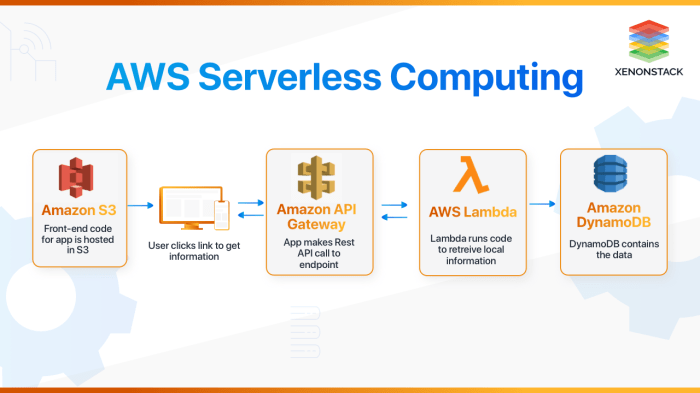

- API Gateways: Serverless API gateways, such as Amazon API Gateway or Google Cloud Endpoints, can efficiently handle API requests and responses. These gateways often integrate with serverless functions to process API logic. The pay-per-use model ensures that costs scale with the number of API calls, avoiding unnecessary infrastructure costs during periods of low API usage.
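The event-driven case can be sketched as a handler that reads the uploaded object's location from the incoming event. The example assumes the S3 notification event shape delivered to AWS Lambda; `resize_image` is a hypothetical placeholder for the real resizing work.

```python
from urllib.parse import unquote_plus


def resize_image(bucket: str, key: str) -> str:
    """Hypothetical placeholder: a real service would download, resize, and re-upload."""
    return f"resized/{key}"


def lambda_handler(event, context):
    """Process each uploaded object named in an S3-style notification event."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(resize_image(bucket, key))
    return {"processed": results}


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "uploads"}, "object": {"key": "photos/my+cat.png"}}}
    ]
}
print(lambda_handler(sample_event, None))
```

Because the handler runs only when an upload event arrives, its cost follows the number of uploads rather than the passage of time.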

Scalability and Availability

Serverless architectures are inherently designed to address the critical aspects of scalability and availability, offering significant advantages over traditional infrastructure models. By abstracting away server management, these platforms provide automated mechanisms to handle fluctuating workloads and ensure continuous service operation. This leads to improved user experience and operational efficiency.

Automatic Resource Scaling

Serverless platforms dynamically adjust resource allocation based on incoming traffic, providing on-demand scaling. This automatic scaling is a core feature, ensuring optimal performance under varying load conditions. The process unfolds as follows:

- Event-Driven Triggers: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or scheduled timers. These events serve as the initial signals for scaling.

- Monitoring and Detection: The platform constantly monitors the function’s performance, including metrics like request latency, execution time, and concurrent executions. When the system detects increased load (e.g., more requests than the current function instances can handle), it initiates scaling.

- Instance Provisioning: The platform automatically provisions additional instances of the function to handle the increased load. This provisioning is usually fast, although a newly started instance can add a brief cold-start delay, so the system can keep up with most increases in incoming requests.

- Resource Allocation: Each function instance is allocated the necessary compute resources (CPU, memory) to process requests efficiently. The platform manages this allocation based on the defined function configuration and the demands of the incoming traffic.

- Load Balancing: The platform automatically distributes incoming requests across the available function instances. This load balancing ensures that no single instance is overwhelmed and that all instances contribute to processing the workload.
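The scaling steps above can be approximated with a small calculation. Assuming each function instance handles one request at a time (a simplification that holds on some platforms but not all), the number of instances needed is roughly the arrival rate multiplied by the average request duration. The function name and the numbers are illustrative.

```python
import math


def instances_needed(requests_per_second: float, avg_duration_s: float,
                     concurrency_per_instance: int = 1) -> int:
    """Estimate concurrent instances via Little's law: L = arrival rate x time in system."""
    in_flight = requests_per_second * avg_duration_s
    return math.ceil(in_flight / concurrency_per_instance)


# Load grows tenfold; the required instance count grows with it.
for rps in (100, 1000):
    print(rps, instances_needed(rps, avg_duration_s=0.25))
```

The estimate ignores cold starts and provider concurrency limits, both of which can cap how quickly the real instance count rises.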

High Availability and Fault Tolerance Mechanisms

Serverless architectures incorporate several built-in mechanisms to ensure high availability and fault tolerance, protecting against service disruptions. These mechanisms contribute to a resilient and reliable system. The key components are:

- Redundancy: Serverless platforms inherently run functions across multiple availability zones (AZs) or data centers within a region. This geographic distribution provides redundancy; if one AZ experiences an outage, the platform automatically redirects traffic to healthy AZs.

- Automated Health Checks: The platform continuously monitors the health of function instances. If an instance fails or becomes unhealthy, the platform automatically detects it and removes it from the load balancer, preventing it from receiving new requests.

- Automatic Failover: In the event of a hardware or software failure, the platform automatically spins up new function instances to replace the failed ones. This failover process is transparent to the end-user, ensuring continuous service availability.

- Data Replication: Data storage services used by serverless functions (e.g., databases, object storage) typically employ data replication across multiple locations. This replication ensures that data remains accessible even if one location fails.

Real-World Example: Handling Traffic Spikes

Consider an e-commerce website that experiences a sudden surge in traffic during a flash sale. Traditional infrastructure might struggle to handle this sudden increase, leading to slow loading times, errors, and potential downtime.

With a serverless architecture:

- Increased Demand: As the number of user requests increases, the serverless platform automatically detects the spike in traffic.

- Rapid Scaling: The platform quickly provisions additional function instances to handle the increased load. For example, if the function normally handles 100 requests per second, and the traffic increases to 1000 requests per second, the platform automatically scales up the number of instances to meet the demand.

- Seamless User Experience: The user experience remains consistent, with minimal impact on loading times or performance, even during the peak traffic.

- Preventing Downtime: The built-in redundancy and fault tolerance mechanisms of the serverless platform ensure that the service remains available, even if individual instances or components fail.

In this scenario, the e-commerce site avoids the negative consequences of downtime and provides a smooth shopping experience for its customers, maximizing sales during the promotional event. This is in contrast to a scenario where a traditional architecture would likely have required manual intervention and could have resulted in significant revenue loss.

Increased Developer Productivity

Serverless architectures significantly enhance developer productivity by abstracting away the complexities of server management and infrastructure provisioning. This allows developers to focus on writing code and delivering value to the business, rather than spending time on operational tasks. The inherent benefits of serverless, such as automated scaling and reduced operational overhead, contribute to faster development cycles and quicker time-to-market for new features and applications.

Simplified Development through Serverless Abstraction

Serverless computing simplifies development by eliminating the need for developers to manage servers, operating systems, and other infrastructure components. This abstraction allows developers to focus solely on writing and deploying code. The serverless platform automatically handles the provisioning, scaling, and management of the underlying infrastructure. This shift in responsibility significantly reduces the operational burden on developers.

Step-by-Step Guide to Deploying a Simple Serverless Function

Deploying a serverless function is a straightforward process. Consider the example of deploying a simple “Hello, World!” function using AWS Lambda.

1. Code Creation

Write the function code in a supported language (e.g., Python, Node.js, Java). For example, in Python:

```python
def lambda_handler(event, context):
    # Return a minimal HTTP-style response.
    return {'statusCode': 200, 'body': 'Hello, World!'}
```

2. Packaging

Package the code, often with any necessary dependencies, into a deployment package (e.g., a .zip file).

3. Console or CLI Deployment

Utilize the cloud provider’s console or command-line interface (CLI) to deploy the function. For example, using the AWS CLI:

```bash
aws lambda create-function \
  --function-name my-hello-world-function \
  --runtime python3.9 \
  --handler lambda_function.lambda_handler \
  --zip-file fileb://deployment-package.zip \
  --role arn:aws:iam::123456789012:role/lambda-execution-role
```

This command creates a new Lambda function, specifying its name, runtime, handler, deployment package, and execution role.

4. Configuration

Configure the function, including memory allocation, timeout settings, and environment variables, through the cloud provider’s console.

5. Invocation

Invoke the function directly through the console or CLI, or by configuring an event trigger (e.g., an API Gateway endpoint, an S3 bucket event).

This process highlights the ease with which serverless functions can be deployed, eliminating the complexities of server provisioning and configuration.
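Before deploying, the handler can also be exercised locally as an ordinary Python function. The sketch below repeats the handler from step 1 and calls it with an empty event; passing `None` as the context is an assumption that works only because this handler never reads it.

```python
def lambda_handler(event, context):
    """Return a minimal HTTP-style response."""
    return {"statusCode": 200, "body": "Hello, World!"}


# Local smoke test: no cloud resources are involved.
response = lambda_handler({}, None)
print(response["statusCode"], response["body"])
```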

Tools and Services that Enhance Developer Productivity

Several tools and services in a serverless environment contribute to increased developer productivity. These resources streamline development, testing, and deployment workflows.

- Cloud Provider’s Serverless Services: Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions provide the core infrastructure for serverless execution, handling scaling, availability, and infrastructure management automatically.

- Integrated Development Environments (IDEs) and Code Editors: Modern IDEs and code editors often include plugins and integrations that support serverless development, such as code completion, debugging tools, and deployment utilities. Examples include VS Code with the AWS Toolkit and IntelliJ IDEA with plugins for serverless frameworks.

- Serverless Frameworks: Frameworks like the Serverless Framework and AWS SAM (Serverless Application Model) simplify the definition, deployment, and management of serverless applications through declarative configurations (e.g., YAML or JSON files). This enables Infrastructure as Code (IaC) practices, promoting repeatability and automation.

- CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) pipelines automate the build, test, and deployment processes. Tools like AWS CodePipeline, Azure DevOps, and Google Cloud Build integrate seamlessly with serverless platforms, enabling rapid and reliable deployments.

- Monitoring and Logging Tools: Cloud providers offer comprehensive monitoring and logging services, such as Amazon CloudWatch, Azure Monitor, and Google Cloud Operations. These tools provide real-time insights into application performance, health, and errors, allowing developers to quickly identify and resolve issues.

- API Gateway Services: Services like Amazon API Gateway, Azure API Management, and Google Cloud API Gateway provide a managed service for creating, publishing, maintaining, and securing APIs. These services simplify API management and integration with serverless functions.

- Testing Frameworks and Tools: Various testing frameworks and tools are available to support serverless application testing. These tools enable developers to write unit tests, integration tests, and end-to-end tests to ensure the quality and reliability of their serverless functions. For instance, tools like Jest and Mocha can be used for unit testing JavaScript functions, while frameworks like the Serverless Framework help with invoking functions during development.
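As a minimal illustration of unit testing a function, the sketch below uses Python's built-in `unittest` module rather than the JavaScript tools named above. The `greet_handler` function and its event shape are hypothetical.

```python
import json
import unittest


def greet_handler(event, context):
    """Hypothetical handler: greets the name given in a JSON request body."""
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "World")
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


class GreetHandlerTest(unittest.TestCase):
    def test_default_greeting(self):
        response = greet_handler({}, None)
        self.assertEqual(json.loads(response["body"])["message"], "Hello, World!")

    def test_named_greeting(self):
        event = {"body": json.dumps({"name": "Ada"})}
        response = greet_handler(event, None)
        self.assertEqual(json.loads(response["body"])["message"], "Hello, Ada!")
```

Saved as a module, these tests run with `python -m unittest`. Because the handler is a plain function, no cloud account or emulator is needed for this level of testing.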

Reduced Operational Overhead

Serverless architectures significantly diminish the operational burden associated with managing infrastructure. This shift allows development teams to focus on writing code and delivering features, rather than spending time on server administration and maintenance tasks. This transformation is achieved through the delegation of operational responsibilities to the cloud provider.

Automated Server Management

Serverless computing abstracts away the complexities of server management, including provisioning, scaling, and maintenance. The cloud provider handles these tasks automatically, reducing the need for manual intervention.

- Automated Scaling: Serverless platforms automatically scale resources up or down based on demand. This dynamic scaling ensures optimal performance and cost efficiency. For example, a function triggered by a sudden increase in user requests will automatically scale to handle the load without requiring manual intervention.

- Automatic Patching and Updates: Security patches and software updates are applied automatically by the cloud provider. This eliminates the need for developers to manually update servers, reducing the risk of vulnerabilities and ensuring that the underlying infrastructure is always up-to-date.

- Infrastructure Management: The underlying infrastructure, including servers, operating systems, and networking, is completely managed by the provider. Developers do not need to configure or maintain these components. This simplifies operations and frees up resources for other tasks.

- Monitoring and Logging: Serverless platforms provide built-in monitoring and logging capabilities. These tools automatically collect and analyze data, allowing developers to monitor application performance and troubleshoot issues without having to set up and manage their own monitoring infrastructure.
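Built-in log collection is most useful when functions emit structured records. The sketch below writes one JSON object per log line using only the standard library; the field names, the `log_event` helper, and the `handler` function are illustrative choices, not a platform requirement.

```python
import json
import logging
import time

logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)


def log_event(message: str, **fields) -> str:
    """Emit one JSON log line and return it for inspection."""
    record = {"timestamp": time.time(), "message": message, **fields}
    line = json.dumps(record)
    logger.info(line)
    return line


def handler(event, context):
    """Hypothetical handler that records how long its work took."""
    start = time.perf_counter()
    order_id = event.get("order_id", "unknown")
    duration_ms = round((time.perf_counter() - start) * 1000, 3)
    log_event("order processed", order_id=order_id, duration_ms=duration_ms)
    return {"order_id": order_id}
```

On platforms that capture standard logging output, lines in this shape can be filtered by field (for example by `order_id`) without any extra monitoring infrastructure.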

Comparison of Operational Effort

The operational effort required for traditional and serverless setups differs significantly. The following comparison illustrates the contrasting responsibilities.

Traditional Setup:

- Server Provisioning: Manual server setup, configuration, and ongoing maintenance.

- Operating System Management: Patching, updates, and security hardening.

- Scaling: Manual scaling based on anticipated demand, often leading to over-provisioning or performance bottlenecks.

- Monitoring: Requires setup and management of monitoring tools, including alerting and logging.

- Deployment: Manual deployments and configuration of application servers.

Serverless Setup:

- Server Provisioning: None required; functions are triggered by events, eliminating the need for server management.

- Operating System and Infrastructure Management: Managed by the cloud provider.

- Scaling: Automatic scaling based on demand.

- Monitoring: Built-in monitoring and logging provided by the platform.

- Deployment: Automated deployment and version control.

Faster Time to Market

Serverless architecture significantly accelerates the development and deployment cycles of applications, allowing businesses to release new features and products more quickly. This accelerated pace is a direct result of the streamlined development processes, reduced operational overhead, and increased developer productivity that serverless offers. This agility is a critical advantage in today’s competitive market, enabling companies to respond rapidly to changing customer needs and market trends.

Accelerated Development and Deployment Lifecycle

Serverless architectures intrinsically speed up the development and deployment processes. This acceleration is achieved through several key mechanisms:

- Simplified Infrastructure Management: Serverless platforms abstract away the complexities of infrastructure management. Developers no longer need to provision, configure, or manage servers, databases, or other underlying resources. This reduction in operational tasks allows developers to focus their efforts on writing and deploying code, significantly shortening the development cycle.

- Automated Deployment Pipelines: Serverless platforms often integrate with automated deployment pipelines. These pipelines automate the processes of building, testing, and deploying code changes. Automation reduces manual errors, speeds up deployments, and ensures consistency across environments.

- Rapid Iteration and Rollback: Serverless environments facilitate rapid iteration and rollback capabilities. Developers can quickly deploy small code changes and test them in production environments. In the event of an issue, the platform enables swift rollbacks to previous versions, minimizing downtime and impact on users.

- Reduced Dependency on Operations Teams: The self-managing nature of serverless functions minimizes the need for extensive involvement from operations teams. Developers can deploy and manage their code with minimal assistance, leading to faster deployments and reduced bottlenecks.
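The rollback idea can be modeled as an alias that points at one of several immutable, numbered versions. The class below is a toy in-memory model for illustration only; it is not a provider API, and all names in it are hypothetical.

```python
from typing import Callable, Dict, List


class FunctionAlias:
    """Toy model: an alias that routes calls to one published version."""

    def __init__(self) -> None:
        self._versions: Dict[int, Callable[[dict], dict]] = {}
        self._history: List[int] = []  # versions the alias has pointed at, oldest first

    def publish(self, handler: Callable[[dict], dict]) -> int:
        """Store a new immutable version and point the alias at it."""
        version = len(self._versions) + 1
        self._versions[version] = handler
        self._history.append(version)
        return version

    def rollback(self) -> int:
        """Point the alias back at the previously active version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    def invoke(self, event: dict) -> dict:
        """Call whichever version the alias currently targets."""
        return self._versions[self._history[-1]](event)


alias = FunctionAlias()
alias.publish(lambda event: {"price": event["base"]})
alias.publish(lambda event: {"price": event["base"] * 0})  # faulty release
alias.rollback()
print(alias.invoke({"base": 10}))
```

Because earlier versions are never modified, rolling back is a pointer change rather than a redeployment, which is what keeps the operation fast in this model.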

Rapid Prototyping and Iterative Development

Serverless architecture is exceptionally well-suited for rapid prototyping and iterative development, which is a crucial factor for startups and established businesses looking to innovate. This approach allows for experimentation and validation of ideas with minimal upfront investment and faster feedback loops.

- Low Barrier to Entry: Serverless platforms provide a low barrier to entry for developers. The ease of deploying individual functions allows developers to quickly prototype new features and functionalities without the overhead of setting up a full-fledged infrastructure.

- Cost-Effective Experimentation: Serverless pricing models, typically based on pay-per-use, make experimentation more cost-effective. Developers can deploy and test new features without incurring significant infrastructure costs, even if the experiments are not successful.

- Fast Feedback Loops: Serverless platforms enable fast feedback loops. Developers can quickly deploy code changes, test them in production environments, and gather user feedback. This iterative process allows for continuous improvement and refinement of applications.

- Modular Design: Serverless encourages a modular design approach. Applications are built as a collection of independent functions that can be developed, deployed, and updated independently. This modularity makes it easier to iterate on individual features without impacting the entire application.

Case Study: Netflix

Netflix, a global streaming giant, leverages serverless technologies to power various aspects of its platform. Netflix’s adoption of serverless, particularly through AWS Lambda, exemplifies the benefits of faster time to market. Although its speed of delivery cannot be attributed to serverless alone, the technology plays a significant role.

- Use Case: Netflix uses serverless functions for a variety of tasks, including video encoding, user authentication, and API endpoints. The ability to quickly deploy and scale these functions is critical to their operations.

- Impact on Time to Market: By adopting serverless, Netflix has been able to accelerate the development and deployment of new features. This agility allows Netflix to quickly respond to changing market demands and provide users with a continuously improving streaming experience. For instance, the implementation of new video encoding formats and personalized recommendations, critical for user engagement, could be rolled out more rapidly.

- Data-Driven Results: While precise data on time-to-market improvements is not publicly available, the continuous release of new features and updates, along with the ability to handle massive scaling during peak viewing times, indicates a substantial acceleration in their development and deployment cycles. The company’s ability to swiftly adapt to new encoding standards and provide region-specific content, which would be complex without the scalability and agility of serverless, further supports this assertion.

- Key Takeaway: The case of Netflix illustrates how serverless enables large-scale, high-traffic applications to be developed and deployed rapidly. The ability to focus on code rather than infrastructure allows for quicker innovation and responsiveness to user needs.

Enhanced Agility and Innovation

Serverless architecture fosters a dynamic environment where organizations can readily experiment with new features, embrace emerging technologies, and rapidly adapt to evolving business needs. This capability stems from the inherent characteristics of serverless, including its granular deployment model, automated scaling, and pay-per-use pricing. These features collectively empower development teams to iterate quickly, reduce the risk associated with experimentation, and accelerate the time-to-market for innovative solutions.

Enabling Rapid Feature Development and Technology Adoption

Serverless facilitates a paradigm shift in how software is developed and deployed. By abstracting away the underlying infrastructure management, developers can focus on writing code and delivering value to users. This allows teams to quickly prototype and test new features without the burden of provisioning, configuring, and maintaining servers. The ability to deploy and update individual functions independently minimizes the impact of changes, reducing the risk of disrupting existing functionality.

Furthermore, the pay-per-use pricing model encourages experimentation, as developers are only charged for the resources consumed during testing and deployment. This reduces the financial barrier to innovation and allows for more exploration of new technologies and approaches.

Adapting to Changing Business Requirements

The flexibility inherent in serverless architecture enables organizations to respond swiftly to shifting market demands and evolving customer expectations. When business requirements change, serverless applications can be readily modified and scaled to accommodate new workloads. For instance, a retail company might leverage serverless functions to dynamically adjust pricing based on real-time market data or promotional campaigns. This adaptability allows businesses to remain competitive and capitalize on emerging opportunities.

The ability to quickly deploy new features, such as personalized product recommendations or targeted marketing campaigns, enhances customer engagement and drives revenue growth.

Innovative Applications and Use Cases

Serverless architecture has unlocked a wide array of innovative applications and use cases across various industries. The following list showcases some prominent examples:

- Real-time Data Processing and Analytics: Serverless functions can be triggered by events, such as data ingestion from IoT devices or social media feeds, to process and analyze data in real-time. This enables organizations to gain valuable insights from their data streams, such as identifying trends, detecting anomalies, and personalizing user experiences. For example, a logistics company can use serverless to monitor the location and condition of shipments in real-time, enabling proactive intervention and improved delivery efficiency.

- Chatbots and Conversational Interfaces: Serverless platforms provide a robust foundation for building intelligent chatbots and conversational interfaces. These applications can be deployed and scaled rapidly to handle a large volume of user interactions. The serverless architecture allows for seamless integration with various messaging platforms and natural language processing (NLP) services. For example, a customer service department can deploy a serverless chatbot to automate responses to frequently asked questions, improving customer satisfaction and reducing operational costs.

- API Gateways and Microservices: Serverless facilitates the development and deployment of API gateways and microservices, enabling organizations to build scalable and resilient applications. API gateways act as a central point of entry for client requests, routing them to the appropriate backend services. Microservices are small, independent services that perform specific functions, allowing for independent scaling and deployment. For example, a financial institution can use serverless to build a secure API gateway that manages access to various banking services, improving security and scalability.

- Event-Driven Architectures: Serverless is particularly well-suited for event-driven architectures, where applications respond to events triggered by other services or external systems. This allows for building loosely coupled, highly scalable, and resilient systems. For example, a media company can use serverless to automatically generate thumbnails and perform video transcoding when a new video is uploaded, improving content delivery and reducing manual intervention.

- IoT Backends: Serverless platforms provide a cost-effective and scalable solution for building IoT backends. They can handle the high volume of data generated by IoT devices, process it in real-time, and integrate with other services. For example, a smart agriculture company can use serverless to collect and analyze data from sensors deployed in fields, optimizing irrigation and fertilization.

Improved Security

Serverless architectures offer significant advantages in terms of security, stemming from their inherent design and the managed services they leverage. This approach shifts a considerable portion of security responsibility to the cloud provider, allowing developers to focus on application logic while benefiting from robust security measures. This shift can lead to a more secure and resilient system compared to traditional infrastructure-based deployments.

Automated Patching and Reduced Attack Surface

Serverless platforms automatically handle patching of the underlying infrastructure, including operating systems and runtime environments. This automation eliminates a major security burden for developers and reduces the window of opportunity for attackers to exploit known vulnerabilities. The reduced attack surface is a key benefit.

Serverless functions are typically short-lived and stateless, which limits the potential impact of a successful attack. Furthermore, the cloud provider manages the infrastructure, reducing the attack surface compared to traditional infrastructure where the entire stack, including servers, networking, and security configurations, needs to be managed.

Security Best Practices for Serverless Applications

Implementing robust security practices is crucial for securing serverless applications. These practices include authentication, authorization, data encryption, and other critical measures.

Authentication ensures that only authorized users or services can access the application.

- Authentication Methods: Serverless applications commonly utilize various authentication methods, including API keys, OAuth 2.0, OpenID Connect (OIDC), and custom authentication mechanisms. For example, using Amazon Cognito or Google Cloud Identity Platform simplifies user authentication and authorization, reducing the need for developers to build and maintain these systems from scratch.

- Multi-Factor Authentication (MFA): Implementing MFA adds an extra layer of security by requiring users to provide multiple forms of verification. This can significantly reduce the risk of unauthorized access, even if credentials are compromised.
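For the API-key method, one common precaution is to compare keys in constant time so that response timing does not leak information about the expected value. The sketch below uses the standard library's `hmac.compare_digest`; the header name, the hard-coded key, and the handler are assumptions for illustration, and a real deployment would load the key from a secrets store.

```python
import hmac

# Hypothetical key for the example only; never hard-code real credentials.
EXPECTED_API_KEY = "example-key-123"


def is_authorized(headers: dict) -> bool:
    """Check the caller's API key using a constant-time comparison."""
    supplied = headers.get("x-api-key", "")
    return hmac.compare_digest(supplied.encode(), EXPECTED_API_KEY.encode())


def handler(event, context):
    """Reject requests that do not carry the expected key."""
    if not is_authorized(event.get("headers") or {}):
        return {"statusCode": 401, "body": "Unauthorized"}
    return {"statusCode": 200, "body": "OK"}
```

Managed identity services remove the need for this kind of hand-written check, so the sketch is best read as an explanation of the mechanism rather than a recommendation to build it.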

Authorization controls which authenticated users or services can access specific resources and perform certain actions.

- Least Privilege Principle: Adhering to the principle of least privilege, where each function or service only has the minimum necessary permissions, minimizes the potential damage from a security breach. For example, using AWS IAM roles or Google Cloud IAM policies to grant specific permissions to serverless functions is essential.

- Role-Based Access Control (RBAC): RBAC helps define and manage access rights based on user roles, ensuring that users only have access to the resources they need. This simplifies access management and improves security.
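A least-privilege policy can be expressed as a small document that allows one action on one resource. The sketch below builds such a document in the AWS IAM JSON policy format; the bucket name and the helper function are hypothetical.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build a policy that permits reading objects from a single bucket and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


policy = read_only_bucket_policy("example-uploads-bucket")
print(json.dumps(policy, indent=2))
```

A function attached to a role with only this policy could read uploaded objects but could not delete them or touch other buckets, which limits the damage if the function is compromised.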

Data encryption protects sensitive data at rest and in transit.

- Encryption at Rest: Encrypting data stored in databases, object storage, and other data stores protects against unauthorized access. For instance, using AWS KMS (Key Management Service) or Google Cloud KMS to encrypt data stored in S3 buckets or Cloud Storage provides an additional layer of security.

- Encryption in Transit: Using HTTPS/TLS for all communication between clients and serverless functions, and between serverless functions and other services, encrypts data as it travels over the network. This prevents eavesdropping and man-in-the-middle attacks.
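As an illustration of encryption in transit from inside a function, the sketch below builds a TLS client configuration with Python's standard `ssl` module. The helper name `strict_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions made for this example, not requirements stated by any particular provider.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies certificates and hostnames."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (an assumed policy for this sketch).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = strict_tls_context()
```

Such a context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`, when a function calls another service over HTTPS.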

Other important security considerations include:

- Input Validation: Validating all user inputs to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Monitoring and Logging: Implementing comprehensive monitoring and logging to detect and respond to security incidents. Services like Amazon CloudWatch or Google Cloud Logging provide tools for collecting, analyzing, and alerting on security-related events.

- Regular Security Audits: Conducting regular security audits and penetration testing to identify and address vulnerabilities.

- Secrets Management: Securely storing and managing secrets, such as API keys and database credentials, using services like AWS Secrets Manager or Google Cloud Secret Manager.
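Input validation in particular is easy to show in code. The following is a minimal sketch of an allow-list validator for an order payload. The field names (`sku`, `quantity`), the SKU pattern, and the quantity limit are hypothetical choices for this example.

```python
import re

# Assumed format for this sketch: 1-32 characters of A-Z, 0-9, or '-'.
SKU_PATTERN = re.compile(r"[A-Z0-9-]{1,32}")
MAX_QUANTITY = 100


def validate_order(payload: object) -> dict:
    """Return a cleaned order dict, or raise ValueError if the input is invalid."""
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")

    # Reject fields we do not expect instead of silently passing them on.
    unexpected = set(payload) - {"sku", "quantity"}
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")

    sku = payload.get("sku")
    if not isinstance(sku, str) or not SKU_PATTERN.fullmatch(sku):
        raise ValueError("sku must be 1-32 characters of A-Z, 0-9 or '-'")

    quantity = payload.get("quantity")
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        raise ValueError("quantity must be an integer")
    if not 1 <= quantity <= MAX_QUANTITY:
        raise ValueError(f"quantity must be between 1 and {MAX_QUANTITY}")

    return {"sku": sku, "quantity": quantity}
```

Validating against an allow-list (what is permitted) rather than a deny-list (what is forbidden) means a string such as `x'; DROP TABLE orders;--` is rejected simply because it does not match the expected format. Validation complements, but does not replace, parameterized queries and output encoding.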

Secure Serverless Deployment Architecture Illustration

A secure serverless deployment architecture typically incorporates several key components to ensure the confidentiality, integrity, and availability of the application. This architecture is designed to minimize the attack surface, enforce least privilege, and provide robust monitoring and logging capabilities.

The architecture described here is for a hypothetical e-commerce application.

Component Descriptions:

- User Interface (Web/Mobile): The front-end application that users interact with.

- API Gateway (e.g., Amazon API Gateway, Google Cloud API Gateway): Manages API requests and handles authentication, authorization, and rate limiting.

- Authentication Service (e.g., Amazon Cognito, Google Cloud Identity Platform): Handles user authentication and identity management.

- Serverless Functions (e.g., AWS Lambda, Google Cloud Functions): Execute business logic, such as processing orders, updating inventory, and sending notifications. Each function performs a specific task and has limited permissions.

- Data Storage (e.g., Amazon DynamoDB, Google Cloud Datastore): Stores data, such as product information, customer details, and order data. Data is encrypted at rest.

- Message Queue (e.g., Amazon SQS, Google Cloud Pub/Sub): Enables asynchronous communication between functions. For example, when an order is placed, a message is added to the queue, and a function processes the order.

- Monitoring and Logging (e.g., Amazon CloudWatch, Google Cloud Logging): Collects logs and metrics from all components. Alerts are triggered based on security events or anomalies.

- Security Information and Event Management (SIEM) (Optional): A centralized system that collects and analyzes security logs and events from various sources, such as the API gateway, functions, and data storage. SIEM tools, such as Splunk or Sumo Logic, help security teams detect and respond to security threats.

Security Measures in the Architecture:

- API Gateway: Implements authentication, authorization, and rate limiting to protect the API endpoints.

- Authentication Service: Provides secure user authentication and identity management, including multi-factor authentication (MFA).

- Functions: Functions are designed with the least privilege principle, only having the necessary permissions to access resources. Input validation is performed to prevent injection attacks.

- Data Storage: Data is encrypted at rest using KMS or similar services.

- Encryption in Transit: All communication between components uses HTTPS/TLS.

- Monitoring and Logging: Comprehensive monitoring and logging are implemented to detect and respond to security incidents.

- SIEM (Optional): SIEM tools collect and analyze security logs and events to provide a comprehensive view of the security posture.
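Rate limiting at the API gateway is normally a managed feature that is configured rather than coded. To show the idea behind it, here is a minimal sketch of a token bucket, one common rate-limiting technique. The class name and parameters are hypothetical, and this is not a description of how any specific gateway implements throttling.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock          # injectable so the behavior can be tested
        self._tokens = capacity      # start with a full bucket
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and consume tokens if the request is within the limit."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


bucket = TokenBucket(rate=5.0, capacity=10.0)
print(bucket.allow())
```

Note that this keeps state in memory, so it only limits requests seen by a single process. Function instances do not share memory, which is one reason rate limiting is usually enforced at the gateway or backed by a shared store.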

Simplified Deployment and Management

Migrating to a serverless architecture significantly simplifies deployment and management processes compared to traditional infrastructure. This simplification stems from the inherent design of serverless platforms, which abstract away much of the underlying infrastructure management, allowing developers to focus primarily on code and functionality. This shift results in faster release cycles, reduced operational overhead, and increased agility in responding to changing business needs.

Streamlining the Deployment Process with Serverless Platforms

Serverless platforms streamline the deployment process by automating many tasks traditionally performed manually. These platforms provide tools and services that handle infrastructure provisioning, scaling, and patching automatically. This automation reduces the time and effort required to deploy and manage applications, leading to faster time-to-market and increased developer productivity. The platform manages the underlying infrastructure, allowing developers to upload their code and configure the triggers and events that will execute it.

Comparing Deployment Steps: Traditional vs. Serverless

The following bullet points compare the deployment steps in traditional and serverless environments. The contrast highlights the operational efficiencies gained through a serverless approach.

Traditional Deployment:

- Infrastructure Provisioning: Requires manual setup and configuration of servers, networks, and other resources. This can involve physical hardware, virtual machines, or cloud-based infrastructure-as-a-service (IaaS) offerings.

- Operating System and Runtime Configuration: Involves installing, configuring, and maintaining the operating system, runtime environments (e.g., Java, Node.js), and dependencies.

- Application Packaging: Requires packaging the application code, dependencies, and configuration files into a deployable artifact (e.g., a WAR file, a Docker image).

- Deployment: Involves transferring the artifact to the target server and deploying it. This often requires specific deployment scripts and processes.

- Scaling and Monitoring: Requires setting up and managing load balancers, auto-scaling groups, and monitoring tools to ensure application availability and performance.

- Ongoing Maintenance: Includes patching, security updates, and capacity planning to maintain the infrastructure.

Serverless Deployment:

- Code Upload: Developers upload their code (typically as a function) to the serverless platform.

- Configuration: Developers configure triggers (e.g., HTTP requests, database events) and specify resource requirements (e.g., memory, execution time).

- Automatic Provisioning: The serverless platform automatically provisions the necessary infrastructure to run the function.

- Automatic Scaling: The platform automatically scales the function based on demand, without any manual intervention.

- Monitoring and Logging: The platform provides built-in monitoring and logging tools to track function execution and performance.

- No Infrastructure Management: Developers do not need to manage servers, operating systems, or runtime environments.
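To give a sense of how little code the "Code Upload" step can involve, here is a minimal sketch of an HTTP-triggered function. It assumes AWS Lambda's Python handler signature and the API Gateway proxy integration event shape (`queryStringParameters` in the request, `statusCode` and `body` in the response). The greeting logic itself is hypothetical.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per HTTP request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Everything outside this function, including the HTTP endpoint, process lifecycle, and scaling, is handled by the platform. Memory size and timeout are set in the function's configuration rather than in code.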

User-Friendly Interface for Managing Serverless Functions and Resources

Serverless platforms typically offer a user-friendly interface, often a web-based console alongside a command-line interface (CLI), that simplifies the management of functions and resources. This interface provides a centralized location for developers to deploy, configure, monitor, and manage their serverless applications. Some consoles also show which triggers and resources are connected to each function, which helps developers understand the architecture and troubleshoot issues.

- Function Deployment and Configuration: The interface allows developers to upload code, configure triggers, set environment variables, and define resource limits (e.g., memory, execution time) through a graphical user interface (GUI) or a command-line interface (CLI).

- Monitoring and Logging: Provides real-time monitoring of function execution, including metrics such as invocation count, execution time, and error rates. Logging capabilities allow developers to track application behavior and troubleshoot issues. These logs often include details about the invocation, the input data, and any errors that occurred.

- Resource Management: The interface provides tools to manage other serverless resources, such as databases, storage buckets, and APIs. This includes creating, configuring, and monitoring these resources.

- Version Control and Rollback: Many platforms integrate with version control systems and provide features for deploying new versions of functions and rolling back to previous versions if necessary.

- Security Management: Enables the configuration of security policies, access controls, and identity and access management (IAM) roles to secure serverless applications.

Event-Driven Architecture Advantages

Serverless architecture offers a natural synergy with event-driven architectures, creating a powerful combination for building responsive, scalable, and resilient applications. This approach allows systems to react to events in real-time, improving efficiency and user experience. Event-driven architectures, when combined with serverless, lead to more flexible and easily maintainable systems.

Facilitating Event-Driven Architectures

Serverless platforms are inherently well-suited for event-driven architectures due to their event-trigger capabilities. Serverless functions can be triggered by a wide range of events, such as changes in data stores, scheduled tasks, API requests, or messages from message queues. This event-driven nature simplifies the development of applications that react to changes in real-time.

- Event Sources: Serverless platforms provide direct integration with various event sources, including databases (e.g., DynamoDB, MongoDB), message queues (e.g., Amazon SQS, Azure Service Bus), object storage (e.g., Amazon S3, Azure Blob Storage), and API gateways. This integration enables functions to be triggered by events generated from these sources.

- Event Triggers: Functions can be configured to be triggered by specific events. For instance, a function can be invoked when a new object is uploaded to an S3 bucket, or when a new record is added to a database table. This granular control over event handling allows developers to build highly responsive systems.

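- Asynchronous Processing: Serverless functions can be invoked asynchronously, which is a core characteristic of event-driven architectures: the event producer does not wait for the function to finish. Event processing therefore does not block other operations, and concurrent events can be handled efficiently. Functions behind an API gateway, by contrast, are usually invoked synchronously because the caller is waiting for a response.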

- Scalability and Resilience: Serverless platforms automatically scale function instances based on the number of incoming events. This ensures that the system can handle bursts of events without performance degradation. Moreover, the distributed nature of serverless platforms enhances the resilience of the system.
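The queue-triggered case can be sketched as follows. The example assumes an AWS Lambda function with an Amazon SQS event source, where each invocation receives a batch under `Records`, and it assumes partial batch responses (`ReportBatchItemFailures`) are enabled so that only failed messages are retried. The names `process_order` and `queue_handler` and the `order_id` field are hypothetical.

```python
import json


def process_order(order: dict) -> None:
    """Hypothetical business logic; raises if the order is malformed."""
    if "order_id" not in order:
        raise ValueError("missing order_id")


def queue_handler(event, context):
    """Process a batch of queue messages and report which ones failed."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            # Report only this message as failed so the rest of the batch
            # is not reprocessed.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Because failed messages are redelivered, a handler like this should be idempotent: processing the same message twice must not, for example, charge a customer twice.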

Example: Serverless Event-Driven Application

Consider a real-world example of an image processing service built on a serverless platform, such as AWS. This application processes images uploaded to an Amazon S3 bucket, generating thumbnails and applying watermarks.

- Event Source: Amazon S3, which acts as the event source, triggering events whenever a new image is uploaded to a designated bucket.

- Event Trigger: An AWS Lambda function is configured to be triggered by the “ObjectCreated” event in S3. This function acts as the event handler.

- Event Handler (Lambda Function): When a new image is uploaded, the Lambda function is invoked. It retrieves the image from S3, performs image processing tasks (e.g., resizing, watermarking) using libraries like ImageMagick, and stores the processed image in another S3 bucket. Writing to a separate bucket keeps the output from re-triggering the function.

- Downstream Services: The processed image can trigger further actions, such as updating a database record to store image metadata or sending a notification to the user.

This architecture is scalable and cost-effective: the Lambda function runs only when a new image is uploaded, the platform scales it with the upload rate, and no continuously running server is needed. Under the pay-per-use model, cost tracks actual usage.
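The parts of the handler that do not touch the network can be sketched in a few lines. The example below assumes the standard S3 notification event layout (`Records[].s3.bucket.name` and `Records[].s3.object.key`). The helper names, the `thumbnails/` prefix, and the 256-pixel limit are hypothetical, and the actual download, resize, and upload calls are deliberately left out.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 notification event."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in the event (a space becomes '+').
        pairs.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return pairs


def thumbnail_size(width: int, height: int, max_side: int = 256) -> tuple[int, int]:
    """Scale dimensions to fit within max_side, preserving the aspect ratio."""
    # Never upscale: images already small enough keep their size.
    scale = min(1.0, max_side / max(width, height))
    return max(1, round(width * scale)), max(1, round(height * scale))


def thumbnail_key(key: str) -> str:
    """Destination key in the output bucket (the prefix is an assumption)."""
    return f"thumbnails/{key}"
```

In a full handler, each `(bucket, key)` pair would be downloaded, resized to `thumbnail_size(...)` with an imaging library, and uploaded to the output bucket under `thumbnail_key(key)`.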

Diagram: Event Flow in a Serverless System

The following diagram illustrates the flow of events and data in the image processing example.

Diagram Description: The diagram depicts an event-driven serverless architecture for image processing.

1. Image Upload

A user uploads an image to an Amazon S3 bucket (designated as the event source).

2. Event Trigger

S3 emits an “ObjectCreated” event, triggering an AWS Lambda function. The event contains information about the uploaded image (e.g., object key, bucket name).

3. Lambda Function

The Lambda function is invoked. It retrieves the image from S3.

4. Image Processing

The Lambda function processes the image (e.g., resizing, watermarking).

5. Output Storage

The processed image is saved to another S3 bucket.

6. Metadata Update (Optional)

The Lambda function can optionally update a database (e.g., DynamoDB) with metadata about the processed image.

7. User Notification (Optional)

The Lambda function can trigger an event to send a notification to the user (e.g., via email or push notification) indicating that the image has been processed.

This diagram visualizes the flow of events and data, highlighting the key components and their interactions within the event-driven system. Arrows represent the flow of events and data. The combination of S3, Lambda, and DynamoDB is a common serverless setup.

Closing Summary

In conclusion, migrating to a serverless architecture presents a compelling strategy for organizations seeking to optimize costs, enhance scalability, and accelerate their time to market. The inherent advantages in developer productivity, operational efficiency, and security, coupled with the enablement of event-driven architectures, make serverless a transformative force. By embracing this technology, businesses can unlock new levels of agility and innovation, positioning themselves for success in the dynamic digital landscape.

FAQ Compilation

What is the primary cost-saving mechanism in serverless architecture?

Serverless architectures primarily save costs through a pay-per-use model. Users are charged only for the actual compute time and resources consumed, eliminating the need to pay for idle capacity, unlike traditional server setups.

How does serverless improve developer productivity?

Serverless simplifies development by abstracting away server management tasks. Developers can focus on writing code and deploying functions without dealing with infrastructure provisioning, patching, or scaling, thus reducing operational overhead and improving productivity.

What are the main security advantages of serverless?

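Serverless architectures enhance security through provider-managed patching of the underlying infrastructure, a reduced attack surface (the team has no servers to manage or expose), and built-in security features offered by cloud providers, such as logging and threat detection services. Application-level security, including function permissions, input validation, and secrets management, remains the developer's responsibility.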

Is serverless suitable for all types of applications?

While serverless is highly beneficial for many applications, it may not be ideal for all. Applications with very long-running processes or those requiring persistent connections might be better suited for traditional infrastructure or other cloud-native approaches. Serverless excels in event-driven applications, APIs, and web applications with fluctuating traffic.