Embarking on a DevOps journey promises enhanced efficiency, faster deployments, and improved collaboration. However, the path to adopting DevOps isn’t always smooth. This exploration delves into the critical challenges organizations face when transitioning to a DevOps model, providing a comprehensive overview of the obstacles that can hinder progress and the strategies to overcome them.

From cultural shifts and skill gaps to technological complexities and security concerns, we’ll dissect the multifaceted nature of DevOps adoption. This analysis aims to equip you with the knowledge to anticipate potential roadblocks and develop effective solutions, ensuring a successful and sustainable DevOps implementation.

Cultural and Organizational Hurdles

Adopting DevOps necessitates a significant shift in organizational culture, often proving more challenging than the technological aspects. Overcoming ingrained resistance to change, fostering collaboration, and breaking down traditional silos are crucial for successful implementation. This section explores the cultural and organizational obstacles frequently encountered during DevOps adoption and provides strategies for navigating these hurdles.

Resistance to Change

Resistance to change is a pervasive obstacle in DevOps adoption, stemming from various sources. It can manifest as a reluctance to adopt new tools and processes, a fear of job displacement, or a general skepticism towards the benefits of DevOps. This resistance can significantly impede the progress of DevOps initiatives, leading to delays, increased costs, and ultimately, failure to realize the intended benefits. The root causes of resistance often include:

- Fear of the Unknown: Employees may be apprehensive about new technologies, processes, and responsibilities, especially if they lack adequate training or understanding.

- Lack of Trust: A lack of trust between teams, or between management and employees, can foster resistance. This can stem from past negative experiences or a perceived lack of transparency.

- Entrenched Processes: Existing, well-established processes, even if inefficient, can be difficult to change due to familiarity and perceived stability.

- Organizational Structure: Traditional hierarchical structures can hinder collaboration and communication, creating silos that resist the cross-functional nature of DevOps.

To mitigate resistance, organizations should:

- Communicate Clearly: Provide transparent and consistent communication about the goals, benefits, and implementation plan of DevOps.

- Provide Training and Support: Offer comprehensive training programs to equip employees with the necessary skills and knowledge to embrace the changes.

- Foster Early Wins: Identify and prioritize quick wins to demonstrate the value of DevOps and build momentum.

- Involve Employees: Engage employees in the planning and implementation process to increase their sense of ownership and reduce resistance.

- Lead by Example: Leadership must champion DevOps principles and actively participate in the transformation.

Common Cultural Clashes

During DevOps implementation, several cultural clashes frequently arise between development and operations teams, stemming from their traditionally distinct goals, priorities, and work styles. These clashes can undermine collaboration and hinder the overall success of DevOps initiatives. Common examples of cultural clashes include:

- Different Priorities: Developers often prioritize speed and feature delivery, while operations teams prioritize stability and reliability. This can lead to conflicts when new features are deployed, potentially destabilizing the production environment.

- Blame Culture: In traditional environments, when problems arise, the focus is often on assigning blame rather than collaboratively resolving the issue. This hinders learning and continuous improvement.

- Communication Breakdown: Poor communication between development and operations teams can lead to misunderstandings, delays, and inefficiencies. Siloed communication channels exacerbate this issue.

- Resistance to Shared Responsibility: Developers may be reluctant to take on operational responsibilities, while operations teams may resist changes to established processes.

- Tooling Differences: Different teams might favor different tools and technologies, leading to integration challenges and communication barriers.

Overcoming these clashes requires a conscious effort to build a shared understanding and a collaborative environment. This involves:

- Establishing Shared Goals: Aligning the goals of development and operations teams around common objectives, such as faster time to market and improved application stability.

- Promoting Blameless Postmortems: Implementing a culture of blameless postmortems to analyze incidents and identify areas for improvement without assigning blame.

- Improving Communication: Establishing clear communication channels, using shared dashboards, and implementing regular cross-functional meetings.

- Encouraging Cross-Functional Training: Providing training opportunities for developers to learn about operations and vice versa, fostering a shared understanding of each other’s roles and responsibilities.

- Adopting Shared Tooling: Standardizing on shared tools and technologies to improve collaboration and streamline workflows.

Fostering a Collaborative Culture

Building a collaborative culture is the cornerstone of successful DevOps adoption. It requires a shift from traditional, siloed approaches to a model where development and operations teams work together seamlessly. This involves promoting shared responsibility, fostering open communication, and encouraging continuous learning and improvement. Strategies for fostering a collaborative culture include:

- Cross-Functional Teams: Creating cross-functional teams that include both developers and operations personnel, working together on specific projects or products.

- Shared Ownership: Encouraging shared ownership of the entire software development lifecycle, from development to deployment and monitoring.

- Open Communication: Implementing open communication channels, such as shared chat platforms, wikis, and regular stand-up meetings.

- Knowledge Sharing: Encouraging knowledge sharing through documentation, training sessions, and mentorship programs.

- Automation: Automating repetitive tasks and processes to reduce manual effort and improve efficiency, freeing up teams to focus on collaboration and innovation.

- Continuous Feedback: Establishing a culture of continuous feedback, where teams regularly review and improve their processes and workflows.

- Empowerment and Autonomy: Empowering teams with the autonomy to make decisions and take ownership of their work.

A frequently cited example is Amazon. According to a 2018 report, Amazon implemented a “two-pizza team” structure, in which each team is small enough to be fed by two pizzas. These small, autonomous, cross-functional teams, which included developers, operations engineers, and product managers, were each responsible for specific services. This structure facilitated rapid iteration, increased ownership, and fostered a collaborative environment, contributing to Amazon’s ability to release new features and services frequently and at scale.

Skill Gaps and Training Needs

Successful DevOps implementation requires a significant shift in the skills and knowledge base of IT professionals. Traditional IT roles often have specialized skill sets, whereas DevOps demands a more holistic and collaborative approach. Addressing skill gaps through targeted training is crucial for accelerating DevOps adoption and realizing its benefits.

Specific Technical Skills Required for Successful DevOps Implementation

DevOps professionals need a broad range of technical skills to effectively manage the entire software development lifecycle. These skills encompass areas such as automation, infrastructure management, and coding.

- Coding and Scripting: Proficiency in scripting languages like Python, Ruby, or Bash is essential for automating tasks, configuring infrastructure, and creating deployment pipelines. Developers use these languages to automate builds, tests, and deployments. For instance, a DevOps engineer might use Python scripts to automate the deployment of application updates across multiple servers, reducing manual effort and potential errors.

- Configuration Management: Experience with configuration management tools such as Ansible, Chef, or Puppet is crucial for automating infrastructure provisioning and configuration. These tools allow teams to define infrastructure as code, ensuring consistency and repeatability. Using Ansible, a team can define the desired state of a server, and Ansible will automatically configure it to match that state.

- Containerization and Orchestration: Understanding containerization technologies like Docker and container orchestration platforms such as Kubernetes is critical. These tools enable efficient application packaging, deployment, and scaling. A DevOps team might use Docker to package an application and its dependencies into a container, then use Kubernetes to manage the deployment and scaling of that container across a cluster of servers.

- Continuous Integration/Continuous Deployment (CI/CD): Expertise in CI/CD pipelines is essential for automating the software release process. This involves using tools like Jenkins, GitLab CI, or CircleCI to automate build, test, and deployment stages. A CI/CD pipeline can automatically build, test, and deploy code changes whenever a developer commits them to a code repository.

- Monitoring and Logging: Skills in monitoring tools like Prometheus, Grafana, and ELK stack (Elasticsearch, Logstash, Kibana) are vital for tracking application performance and identifying issues. Monitoring tools provide real-time insights into system health and performance. For example, a DevOps engineer can use Grafana to create dashboards that visualize key performance indicators (KPIs) such as server CPU usage, response times, and error rates.

- Cloud Computing: Familiarity with cloud platforms like AWS, Azure, or Google Cloud Platform (GCP) is increasingly important. This includes knowledge of cloud services such as virtual machines, storage, databases, and networking. Cloud platforms provide scalable and flexible infrastructure that DevOps teams can leverage. A DevOps team might use AWS services like EC2 for virtual machines, S3 for object storage, and RDS for databases.

- Security: Understanding security best practices and tools is critical for protecting applications and infrastructure. This includes knowledge of security principles, vulnerability scanning, and security automation. Security is an integral part of the DevOps lifecycle. A DevOps team can use tools like SonarQube for static code analysis to identify potential security vulnerabilities early in the development process.
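To make the scripting skill concrete, here is a minimal Python sketch of the kind of multi-server deployment script described above. It assumes a rolling strategy (one host at a time, stop at the first failure); `rolling_deploy` and the injected `deploy_to_host` callable are hypothetical names, and the callable stands in for whatever actually ships the release (SSH, an agent, or a cloud API).

```python
"""Sketch of a rolling deployment across several servers.

Hypothetical: `deploy_to_host` stands in for the real transport (SSH, an
agent, a cloud API). It is injected as a callable so the control flow can
be exercised without real servers.
"""
from typing import Callable, Dict, List


def rolling_deploy(
    hosts: List[str],
    version: str,
    deploy_to_host: Callable[[str, str], bool],
) -> Dict[str, str]:
    """Deploy `version` to each host in turn, halting at the first failure.

    Returns a mapping of host -> status ("deployed", "failed", or "skipped").
    Stopping early limits the blast radius of a bad release.
    """
    status: Dict[str, str] = {host: "skipped" for host in hosts}
    for host in hosts:
        if deploy_to_host(host, version):
            status[host] = "deployed"
        else:
            status[host] = "failed"
            break  # leave the remaining hosts on the old version
    return status


if __name__ == "__main__":
    # Fake deployer for demonstration: pretend web-3 is unreachable.
    def fake_deploy(host: str, version: str) -> bool:
        print(f"deploying {version} to {host}")
        return host != "web-3"

    print(rolling_deploy(["web-1", "web-2", "web-3", "web-4"], "v1.4.2", fake_deploy))
```

Halting on the first failure is a deliberate design choice: a broken release reaches only one extra host, and the untouched hosts keep serving traffic while the team investigates.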

Comparison of Traditional IT Roles with the New Roles Created by DevOps

DevOps introduces new roles and responsibilities that often blur the lines between traditional IT roles. The focus shifts from specialized silos to collaborative teams.

| Traditional IT Role | DevOps Role | Key Responsibilities |
|---|---|---|
| System Administrator | DevOps Engineer | Managing and maintaining servers and infrastructure. |
| Developer | Software Engineer/Developer | Writing and testing code. |
| Operations Engineer | Site Reliability Engineer (SRE) | Ensuring system uptime, performance, and reliability. |
| Quality Assurance (QA) Engineer | Quality Assurance Engineer/SDET | Testing software and ensuring quality. |
| Database Administrator (DBA) | Database DevOps Engineer | Managing databases, performance, and security. |

Note: While the titles and responsibilities may vary, the core principle is that DevOps roles are more collaborative and cross-functional. For example, a DevOps engineer might be responsible for both infrastructure and code deployment, whereas a traditional system administrator would focus primarily on infrastructure.

Common Skill Gaps that Hinder DevOps Adoption Within Teams

Several common skill gaps can impede the successful adoption of DevOps within teams. Addressing these gaps through training and knowledge sharing is essential for enabling a smooth transition.

- Lack of Automation Skills: Many teams lack the skills to automate tasks, leading to manual processes that slow down deployments and increase the risk of errors. For example, a team might deploy code by logging into each server and running commands by hand, which is time-consuming and prone to human error.

- Insufficient CI/CD Expertise: Teams often struggle to implement and maintain effective CI/CD pipelines, which is critical for automating the software release process. This can result in slower release cycles and reduced agility.

- Poor Understanding of Infrastructure as Code (IaC): Teams may lack the knowledge to manage infrastructure using code, which limits their ability to provision and configure infrastructure efficiently. This can lead to inconsistent environments and increased operational overhead.

- Limited Cloud Computing Skills: As organizations move to the cloud, a lack of cloud computing skills can hinder their ability to leverage cloud services effectively. This can result in underutilized resources and increased costs.

- Inadequate Security Knowledge: A lack of security expertise can expose applications and infrastructure to vulnerabilities. DevOps teams must integrate security practices throughout the software development lifecycle.

- Ineffective Monitoring and Logging Practices: Without proper monitoring and logging, teams struggle to identify and resolve issues quickly, which can impact application performance and user experience. This lack of visibility makes it difficult to diagnose and fix problems promptly.

- Communication and Collaboration Challenges: DevOps relies heavily on effective communication and collaboration between teams. Lack of experience in cross-functional communication can create silos and impede the flow of information.

Technology and Tooling Complexity

Integrating the right technology and tools is crucial for a successful DevOps implementation. However, this aspect presents significant challenges. The landscape of DevOps tools is vast and constantly evolving, making it difficult to choose the right ones and integrate them effectively. This complexity can lead to increased operational overhead, security vulnerabilities, and ultimately, hinder the benefits of DevOps.

Integrating Diverse Tools and Technologies

The core principle of DevOps involves automating the software development lifecycle. This automation relies heavily on a collection of tools, each designed to address a specific phase, from code creation and testing to deployment and monitoring. The challenge arises from the need to seamlessly integrate these diverse tools, which often come from different vendors and have varying functionalities and integration methods. This integration challenge manifests in several ways:

- Compatibility Issues: Different tools may not be designed to work together directly. This can lead to conflicts, data inconsistencies, and the need for custom scripting or workarounds. For example, a continuous integration (CI) tool might not natively integrate with a particular monitoring platform, requiring custom API integrations.

- Data Silos: Without proper integration, data generated by one tool may not be accessible to others, creating information silos. This hinders visibility across the entire software delivery pipeline and can lead to delays and errors. Consider the scenario where testing results from a specific tool are not automatically available to the deployment tool, thus slowing down the release process.

- Complex Toolchains: As organizations adopt more tools to address specific needs, the overall toolchain becomes increasingly complex. Managing and maintaining this complex toolchain requires specialized expertise and can increase the risk of failures. For example, chaining CI/CD, configuration management, and infrastructure-as-code tools produces many interdependent components, each with its own configuration, upgrade cycle, and failure modes.

- Security Risks: Integrating multiple tools can increase the attack surface. Each tool introduces potential vulnerabilities, and the connections between them can create new security risks if not properly secured. For example, a vulnerability in a CI tool could be exploited to compromise the entire deployment pipeline.
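The data-silo problem is often solved with small pieces of glue code. As a hedged illustration, the Python sketch below reads a JUnit-style XML test report (a format many CI tools can produce) and turns it into a go/no-go decision a deployment step could consume. The function names and the gate policy (deploy only if tests ran and none failed or errored) are assumptions for illustration, not any tool's built-in behavior.

```python
"""Sketch: bridge a CI tool's test results into a deployment gate."""
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Count tests, failures, errors and skips in a JUnit-style XML report.

    Works for a bare <testsuite> root or a <testsuites> wrapper, because it
    iterates over every <testcase> element in the tree.
    """
    root = ET.fromstring(xml_text)
    summary = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    for case in root.iter("testcase"):
        summary["tests"] += 1
        if case.find("failure") is not None:
            summary["failures"] += 1
        elif case.find("error") is not None:
            summary["errors"] += 1
        elif case.find("skipped") is not None:
            summary["skipped"] += 1
    return summary


def deployment_allowed(summary: dict) -> bool:
    """Assumed gate policy: tests must have run, with no failures or errors."""
    return summary["tests"] > 0 and summary["failures"] == 0 and summary["errors"] == 0


if __name__ == "__main__":
    report = (
        "<testsuites><testsuite name='api'>"
        "<testcase name='test_login'/>"
        "<testcase name='test_logout'><failure message='boom'/></testcase>"
        "</testsuite></testsuites>"
    )
    s = summarize_junit(report)
    print(s, "deploy" if deployment_allowed(s) else "block")
```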

Selecting the Right Tools for DevOps Practices

Choosing the right tools is critical to a successful DevOps implementation. The selection process should be guided by the specific needs of the organization, the existing infrastructure, and the desired DevOps practices. A poorly chosen tool can undermine the benefits of DevOps and lead to increased complexity and inefficiency. The following steps are essential in the selection process:

- Define Requirements: Identify the specific DevOps practices the organization wants to implement (e.g., continuous integration, continuous delivery, infrastructure as code). Define the functionalities required from the tools based on these practices. For instance, if automating infrastructure provisioning is a key requirement, the organization needs to select a tool that supports infrastructure as code (IaC).

- Evaluate Available Tools: Research and evaluate available tools that meet the defined requirements. Consider factors such as features, scalability, ease of use, community support, cost, and integration capabilities. Analyze vendor documentation, read user reviews, and conduct proof-of-concept (POC) tests.

- Consider Integration Capabilities: Prioritize tools that integrate well with existing infrastructure and other tools in the toolchain. Look for tools that offer APIs, plugins, or integrations with popular platforms. Seamless integration is crucial for automating workflows and avoiding manual interventions.

- Assess Security and Compliance: Ensure the chosen tools meet the organization’s security and compliance requirements. Evaluate the security features of each tool and assess its compliance with relevant industry standards. For example, the tool should support secure access controls and data encryption.

- Conduct Proof-of-Concept (POC) Tests: Before committing to a tool, conduct POC tests to evaluate its performance, usability, and integration capabilities. This helps validate the tool’s suitability for the organization’s specific needs and identify potential challenges before full deployment.
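One common way to combine the evaluation criteria and POC findings is a weighted scoring matrix. The Python sketch below assumes a 1 to 5 score per criterion and a set of weights that sum to 1.0; the criteria, weights, and tool names are illustrative placeholders, not real assessments of any product.

```python
"""Sketch of a weighted scoring matrix for comparing candidate tools.

Criteria, weights, and scores are illustrative placeholders only.
"""
from typing import Dict, List, Tuple

# Relative importance of each criterion (weights sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "integration": 0.30,
    "security": 0.25,
    "ease_of_use": 0.20,
    "scalability": 0.15,
    "cost": 0.10,
}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores (1-5) into a single weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank_tools(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (tool, score) pairs sorted from best to worst."""
    ranked = [(name, round(weighted_score(s), 2)) for name, s in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical POC results for two unnamed tools.
    poc_results = {
        "tool_a": {"integration": 5, "security": 4, "ease_of_use": 3, "scalability": 4, "cost": 2},
        "tool_b": {"integration": 3, "security": 4, "ease_of_use": 5, "scalability": 3, "cost": 4},
    }
    for name, score in rank_tools(poc_results):
        print(f"{name}: {score}")
```

Refusing to score a tool with missing criteria keeps the comparison honest: a gap in the evaluation surfaces as an error instead of silently counting as zero.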

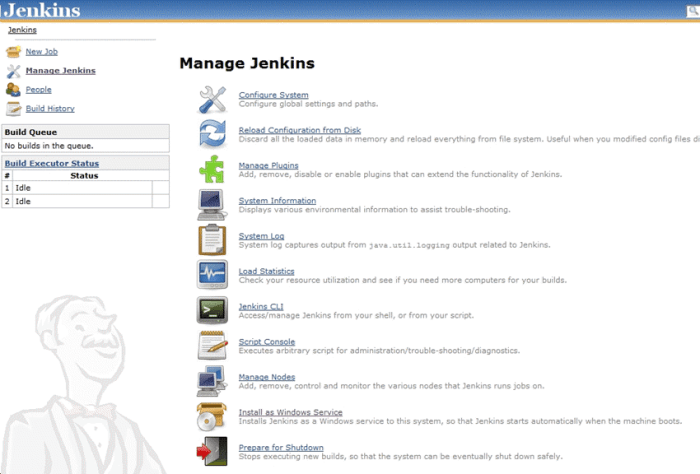

Pros and Cons of Popular CI/CD Tools

The selection of CI/CD tools is pivotal for automating the software delivery pipeline. Several tools offer various features and functionalities. Choosing the right one requires a careful evaluation of its pros and cons, based on the organization’s specific requirements and context. The following table provides a comparative analysis of some popular CI/CD tools.

| CI/CD Tool | Pros | Cons | Use Cases and Examples |
|---|---|---|---|
| Jenkins | | | |
| GitLab CI/CD | | | |
| CircleCI | | | |
| Azure DevOps | | | |
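Whichever of these tools is chosen, each organizes work as a pipeline of ordered stages (typically build, test, and deploy) in which a failing stage stops the stages after it. The Python sketch below models only that shared fail-fast behavior; `run_pipeline` and its stage format are hypothetical and do not mirror any tool's configuration syntax.

```python
"""Sketch of the stage model shared by CI/CD tools: ordered stages, fail fast."""
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order and stop at the first failing stage.

    Returns (stage_name, outcome) pairs where outcome is "passed",
    "failed", or "not run".
    """
    results: List[Tuple[str, str]] = []
    failed = False
    for name, step in stages:
        if failed:
            results.append((name, "not run"))
            continue
        try:
            ok = bool(step())
        except Exception:
            ok = False  # an unhandled exception counts as a stage failure
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


if __name__ == "__main__":
    pipeline = [
        ("build", lambda: True),
        ("test", lambda: False),  # simulate a failing test suite
        ("deploy", lambda: True),
    ]
    for stage, outcome in run_pipeline(pipeline):
        print(f"{stage}: {outcome}")
```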

Automation Challenges

Automation is the cornerstone of DevOps, enabling rapid and reliable software delivery. However, implementing automation effectively is a complex undertaking, fraught with potential pitfalls. Failing to automate correctly can negate the benefits of DevOps, leading to increased deployment times, higher error rates, and frustrated teams.

Common Automation Pitfalls

Several common mistakes can derail automation efforts. Understanding these pitfalls is crucial for successful DevOps adoption.

- Over-Automation: Attempting to automate everything at once, often before processes are well-defined or understood. This can lead to brittle, complex automation that is difficult to maintain and debug.

- Lack of Standardization: Inconsistent configurations, scripts, and tooling across environments create maintenance headaches and increase the risk of errors during deployments.

- Poor Version Control: Failing to version control automation scripts and configurations makes it impossible to track changes, revert to previous states, and collaborate effectively.

- Ignoring Testing: Insufficient testing of automated processes can result in deployments that fail, leading to downtime and requiring manual intervention.

- Insufficient Monitoring: Without proper monitoring and alerting, it’s difficult to identify and resolve issues with automated processes quickly.

- Ignoring Security: Neglecting security considerations in automation can expose systems to vulnerabilities and attacks. This includes securely storing and managing credentials.

- Ignoring Feedback Loops: Automation should be a continuous cycle of improvement. Failing to gather and incorporate feedback from users and operations teams leads to inefficient processes.

- Lack of Documentation: Poorly documented automation scripts and processes make it difficult for others to understand, modify, and troubleshoot them.
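The "Ignoring Security" pitfall frequently shows up as credentials hard-coded in scripts. Many CI systems can inject secrets as environment variables instead, and the Python sketch below shows one way a script might read them and fail fast when one is missing. `get_secret`, `MissingSecretError`, and any variable name such as `DEPLOY_TOKEN` are hypothetical.

```python
"""Sketch: load credentials from the environment instead of hard-coding them."""
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def get_secret(name: str) -> str:
    """Return the secret stored in environment variable `name`.

    Fails fast instead of falling back to a default, so a misconfigured
    pipeline stops before doing anything half-way. The error message names
    the variable but never echoes a value.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


if __name__ == "__main__":
    try:
        token = get_secret("DEPLOY_TOKEN")  # hypothetical variable name
        print("token loaded (value not printed)")
    except MissingSecretError as exc:
        print(f"configuration error: {exc}")
```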

Gradual and Iterative Approach to Automation

A phased, iterative approach is essential for successful automation. This strategy allows teams to learn, adapt, and build a robust automation framework over time.

- Identify and Prioritize: Begin by identifying the most time-consuming and error-prone manual tasks. Prioritize automation efforts based on their potential impact and feasibility. Start with tasks that are well-defined and have a clear scope.

- Automate Small Tasks: Focus on automating small, discrete tasks initially. This allows teams to gain experience, refine their processes, and build confidence before tackling more complex automation projects.

- Test Thoroughly: Rigorous testing is crucial at every stage. Develop automated tests to verify that automation scripts and processes function as expected. This includes unit tests, integration tests, and end-to-end tests.

- Iterate and Refine: Continuously monitor and evaluate the performance of automated processes. Gather feedback from users and operations teams to identify areas for improvement. Make incremental changes and iterate on the automation as needed.

- Document Everything: Maintain clear and comprehensive documentation for all automated processes, including scripts, configurations, and procedures. This makes it easier for others to understand, maintain, and troubleshoot the automation.

- Build a Culture of Automation: Encourage a culture of automation within the team. Provide training and support to help team members develop the necessary skills and knowledge. Foster collaboration and knowledge sharing.
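"Test Thoroughly" applies to automation code itself. As a minimal sketch, the Python example below pairs a small, hypothetical config-rendering helper with unit tests written with the standard `unittest` module; a test runner such as `python -m unittest` would discover the test class.

```python
"""Sketch: an automation helper plus the unit tests that guard it."""
import unittest


def render_config(template: str, values: dict) -> str:
    """Fill `{placeholders}` in a config template; fail on missing keys.

    str.format_map raises KeyError when a placeholder has no value, so a
    half-rendered config can never ship. Literal braces in the template
    must be doubled ({{ and }}).
    """
    return template.format_map(values)


class RenderConfigTest(unittest.TestCase):
    def test_fills_placeholders(self):
        out = render_config("port={port}\nhost={host}", {"port": 8080, "host": "db"})
        self.assertEqual(out, "port=8080\nhost=db")

    def test_missing_value_raises(self):
        with self.assertRaises(KeyError):
            render_config("port={port}", {})
```

Keeping the helper small and pure (no file or network access) is what makes it this easy to test; side effects such as writing the file can live in a thin wrapper around it.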

Impact of Poor Automation on Deployment Frequency and Lead Time

Ineffective automation significantly reduces deployment frequency and increases lead time, two of the key metrics commonly used to measure DevOps performance. Poor automation often results in:

- Reduced Deployment Frequency: Manual processes and errors during deployments lead to longer release cycles and fewer deployments. For example, a team relying on manual deployments might release code once a month, while a team with robust automation might deploy multiple times a day.

- Increased Lead Time: The time it takes to go from code commit to production increases significantly. This includes the time spent on manual testing, approvals, and deployments.

- Higher Error Rates: Manual processes are prone to human error. Poorly automated deployments are more likely to fail, requiring rollback and manual intervention.

- Increased Risk: Manual deployments are riskier. The chance of making a mistake increases, leading to outages and data loss.

- Lower Team Morale: Frustration with manual tasks and frequent failures can negatively impact team morale and productivity.

Consider an illustrative example: a large e-commerce company that struggles with manual deployments experiences frequent outages and can deploy new features only once a quarter. After adopting automated testing and deployment pipelines, it increases deployment frequency to weekly, cuts lead time from months to days, and sharply reduces error rates. The result is higher customer satisfaction and faster time-to-market for new features.
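Both metrics can be computed from data most pipelines already record. The Python sketch below assumes each change is available as a (commit time, production deploy time) pair, and uses the common definitions of lead time (commit to running in production) and deployment frequency (deployments per unit of time). The function names and the sample timestamps are hypothetical.

```python
"""Sketch: compute deployment frequency and median lead time from records."""
from datetime import datetime, timedelta
from statistics import median
from typing import List, Tuple

# Each record is (commit_time, deploy_time) for one change.
Change = Tuple[datetime, datetime]


def deployments_per_week(deploy_times: List[datetime], window_days: int) -> float:
    """Average number of production deployments per week over the window."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    return len(deploy_times) / (window_days / 7)


def median_lead_time(changes: List[Change]) -> timedelta:
    """Median time from commit to running in production."""
    if not changes:
        raise ValueError("no changes recorded")
    return median([deploy - commit for commit, deploy in changes])


if __name__ == "__main__":
    changes = [
        (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15)),   # 6 hours
        (datetime(2024, 1, 2, 10), datetime(2024, 1, 3, 10)),  # 24 hours
        (datetime(2024, 1, 3, 8), datetime(2024, 1, 3, 10)),   # 2 hours
    ]
    print("median lead time:", median_lead_time(changes))
    print("deploys/week:", deployments_per_week([d for _, d in changes], window_days=14))
```

The median is used rather than the mean so that one unusually slow change does not dominate the figure.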

Security Concerns and Compliance

Integrating security into a DevOps lifecycle presents significant challenges, as it requires a shift from traditional, siloed security practices to a more collaborative and automated approach. This transition necessitates addressing concerns about potential vulnerabilities introduced by rapid deployment cycles, ensuring adherence to industry regulations, and fostering a security-conscious culture across the development and operations teams. Effectively managing these challenges is crucial for maintaining data integrity, protecting against threats, and ensuring business continuity.

Integrating Security Practices into the DevOps Lifecycle

Integrating security into the DevOps lifecycle demands a fundamental change in how security is perceived and implemented. This involves embedding security practices throughout the entire development pipeline, from the initial planning stages to deployment and monitoring. This shift, known as “DevSecOps,” aims to automate security checks and integrate them seamlessly with other DevOps processes. Implementing DevSecOps involves several key strategies:

- Shift Left Approach: This emphasizes the early integration of security practices, such as security testing and vulnerability scanning, during the development phase. This proactive approach helps identify and address security flaws early on, reducing the cost and effort of remediation later in the lifecycle. For example, static code analysis tools can be integrated into the build process to automatically identify potential vulnerabilities in the code.

- Automated Security Testing: Automated security testing is crucial for ensuring that security checks are performed consistently and frequently. This includes incorporating security tests into continuous integration and continuous delivery (CI/CD) pipelines. Automated testing can encompass various techniques, such as vulnerability scanning, penetration testing, and fuzzing.

- Security as Code: This involves defining security configurations and policies in code, enabling automation and version control. This approach allows security to be treated as an integral part of the infrastructure and application code, making it easier to manage, replicate, and update security controls.

- Continuous Monitoring and Incident Response: Continuous monitoring is essential for detecting and responding to security incidents in real-time. This involves implementing tools and processes for monitoring logs, network traffic, and application behavior. When a security incident is detected, a well-defined incident response plan should be in place to mitigate the damage and prevent future occurrences.

- Collaboration and Communication: DevSecOps requires close collaboration between development, operations, and security teams. This involves establishing clear communication channels, sharing knowledge, and fostering a culture of shared responsibility for security. Regular training and awareness programs can help ensure that all team members understand their roles in maintaining security.
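A small, concrete shift-left control is a secret scan that runs on every commit or build. The Python sketch below checks text for two well-known patterns: the AWS access key ID format (`AKIA` followed by 16 uppercase letters or digits) and PEM private key headers. The rule set is deliberately tiny and illustrative; dedicated scanners ship far larger ones. A pipeline step would fail the build whenever `scan_text` returns findings.

```python
"""Sketch of a shift-left check: flag text that contains obvious secrets."""
import re
from typing import List, Tuple

# Illustrative patterns only; real scanners use much larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line matching a pattern."""
    findings: List[Tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
    sample = 'region = "us-east-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    for lineno, rule in scan_text(sample):
        print(f"line {lineno}: possible {rule}")
```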

Implementing “Security as Code”

“Security as Code” is a critical concept in DevSecOps, enabling the automation, version control, and repeatability of security configurations and policies. It involves treating security as an integral part of the infrastructure and application code, allowing for consistent and efficient security management. The implementation of Security as Code involves several key practices:

- Infrastructure as Code (IaC) for Security: Use IaC tools to define and manage security configurations for infrastructure components, such as firewalls, virtual machines, and network configurations. Tools like Terraform, Ansible, and CloudFormation can be used to define security policies and deploy infrastructure securely.

- Configuration Management: Employ configuration management tools to ensure that systems are configured according to security best practices. This involves defining and enforcing security policies for operating systems, applications, and other system components. Tools like Chef, Puppet, and SaltStack can automate the configuration management process.

- Policy as Code: Define security policies and compliance rules in code, enabling automated enforcement and monitoring. Tools like Open Policy Agent (OPA) and AWS Config rules can be used to define and enforce policies for various aspects of the infrastructure and applications.

- Automated Security Testing in CI/CD: Integrate security testing into the CI/CD pipeline to automatically check for vulnerabilities and compliance violations. This includes using tools for static code analysis, dynamic application security testing (DAST), and software composition analysis (SCA).

- Version Control for Security Configurations: Store security configurations and policies in a version control system, such as Git, to track changes, collaborate effectively, and revert to previous configurations if needed. This provides a complete audit trail and enables efficient management of security configurations.

For example, consider a scenario where a company needs to configure its cloud infrastructure to meet the security requirements of the Payment Card Industry Data Security Standard (PCI DSS). Instead of manually configuring each server, the company can use IaC to define the required security settings, such as firewall rules, encryption configurations, and access controls, as code. This code can then be version-controlled and automatically deployed across the infrastructure, ensuring consistency and compliance.
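The idea can be sketched in a few lines of Python: security settings are declared as data (the kind of file that would live in Git), and policy rules check that data before anything is deployed. Tools like OPA express such rules in their own policy language; this sketch only illustrates the concept. The `INFRA` structure, the two rules, and all resource names are hypothetical and are not a mapping of actual PCI DSS requirements.

```python
"""Sketch: security settings declared as data, checked by policy-as-code rules."""
from typing import Dict, List

# Hypothetical desired-state definition, as it might be kept in Git.
INFRA: Dict = {
    "firewall_rules": [
        {"name": "web-https", "port": 443, "source": "0.0.0.0/0"},
        {"name": "admin-ssh", "port": 22, "source": "0.0.0.0/0"},
    ],
    "storage": [
        {"name": "card-data", "encrypted": True},
        {"name": "tmp-exports", "encrypted": False},
    ],
}

ADMIN_PORTS = {22, 3389}  # SSH and RDP


def check_policies(infra: Dict) -> List[str]:
    """Return human-readable violations; an empty list means compliant."""
    violations: List[str] = []
    for rule in infra.get("firewall_rules", []):
        # Rule 1: administrative ports must not be reachable from anywhere.
        if rule["port"] in ADMIN_PORTS and rule["source"] == "0.0.0.0/0":
            violations.append(f"{rule['name']}: admin port {rule['port']} open to the internet")
    for store in infra.get("storage", []):
        # Rule 2: every storage resource must be encrypted at rest.
        if not store.get("encrypted", False):
            violations.append(f"{store['name']}: storage is not encrypted at rest")
    return violations


if __name__ == "__main__":
    for v in check_policies(INFRA):
        print("VIOLATION:", v)
```

Because the definition and the rules are both plain files, a change that opens SSH to the internet shows up in code review and fails the check in CI, leaving an audit trail in version control.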

Ensuring Compliance with Industry Regulations in a DevOps Environment

Ensuring compliance with industry regulations in a DevOps environment requires a proactive and automated approach. This involves integrating compliance checks into the CI/CD pipeline, using tools to monitor and enforce security policies, and maintaining a comprehensive audit trail. This ensures that the organization meets the necessary regulatory requirements while maintaining the speed and agility of DevOps. Methods for ensuring compliance include:

- Automated Compliance Checks: Integrate automated compliance checks into the CI/CD pipeline to ensure that code and infrastructure configurations meet the requirements of relevant regulations. Tools like SonarQube, OWASP ZAP, and commercial compliance scanning tools can be used to automate these checks. For instance, a static code analysis tool can automatically check for coding practices that violate PCI DSS guidelines, such as storing sensitive data in plain text.

- Policy Enforcement and Governance: Define and enforce security policies and governance rules as code, enabling automated enforcement and monitoring. Tools like OPA can be used to define and enforce policies for various aspects of the infrastructure and applications. This ensures that all deployments adhere to the organization’s security policies and comply with regulatory requirements.

- Continuous Monitoring and Auditing: Implement continuous monitoring and auditing to track compliance status and identify any violations. This involves collecting and analyzing logs, monitoring system behavior, and generating reports. Tools like Splunk, ELK Stack, and commercial security information and event management (SIEM) solutions can be used for this purpose.

- Version Control and Change Management: Use version control to track changes to code and infrastructure configurations, providing a complete audit trail. This enables organizations to demonstrate that they have followed proper change management procedures and can easily revert to previous configurations if needed.

- Regular Training and Awareness: Provide regular training and awareness programs to educate employees about compliance requirements and security best practices. This ensures that all team members understand their roles in maintaining compliance and are aware of the latest threats and vulnerabilities.
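To make the first two practices more concrete, here is a minimal policy-as-code sketch in Python. It is only a stand-in for a dedicated engine such as OPA (whose policies are written in its own Rego language), and the rule functions, config fields, and the `check_config` and `gate` helpers are all hypothetical. A CI job could run a check like this and fail the stage on any violation.

```python
import re
from typing import Callable, Optional

# A deployment config as it might look after parsing a (hypothetical) YAML/JSON file.
Config = dict
# Each rule returns a violation message, or None when the config complies.
Rule = Callable[[Config], Optional[str]]

# Crude pattern for values that look like raw card numbers (13 to 16 digits).
CARD_NUMBER = re.compile(r"\b\d{13,16}\b")


def require_encryption_at_rest(cfg: Config) -> Optional[str]:
    if not cfg.get("storage", {}).get("encrypted", False):
        return "storage must be encrypted at rest"
    return None


def forbid_plaintext_card_numbers(cfg: Config) -> Optional[str]:
    # Mirrors the PCI DSS example above: no sensitive data stored in plain text.
    for key, value in cfg.get("env", {}).items():
        if isinstance(value, str) and CARD_NUMBER.search(value):
            return f"env var {key!r} appears to hold a plaintext card number"
    return None


def forbid_public_ingress(cfg: Config) -> Optional[str]:
    if "0.0.0.0/0" in cfg.get("ingress_cidrs", []):
        return "ingress must not be open to the whole internet"
    return None


RULES: list[Rule] = [
    require_encryption_at_rest,
    forbid_plaintext_card_numbers,
    forbid_public_ingress,
]


def check_config(cfg: Config) -> list[str]:
    """Run every rule; an empty list means the config passes the gate."""
    return [msg for rule in RULES if (msg := rule(cfg)) is not None]


def gate(cfg: Config) -> int:
    """Print violations and return a process exit code for the CI job (0 = pass)."""
    violations = check_config(cfg)
    for v in violations:
        print("POLICY VIOLATION:", v)
    return 1 if violations else 0


if __name__ == "__main__":
    example = {
        "storage": {"encrypted": False},
        "env": {"PAYMENT_TEST_VALUE": "4111111111111111"},
        "ingress_cidrs": ["10.0.0.0/8"],
    }
    print("exit code:", gate(example))
```

In a pipeline, the script would typically end with `sys.exit(gate(cfg))` so that any violation fails the stage; because the rules live in version control, changes to them are reviewed and audited like any other code.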

Monitoring and Observability

In a DevOps environment, continuous monitoring and robust observability are essential for maintaining system health, identifying issues proactively, and ensuring optimal performance. They provide the necessary feedback loop for continuous improvement, enabling teams to understand the impact of changes and quickly respond to incidents. Without effective monitoring and observability, the benefits of DevOps, such as faster release cycles and improved collaboration, can be severely compromised.

Importance of Comprehensive Monitoring

Comprehensive monitoring is the backbone of a successful DevOps implementation. It allows teams to track the performance of applications and infrastructure, identify bottlenecks, and troubleshoot issues in real time. This proactive approach minimizes downtime, enhances user experience, and supports informed decision-making.

- Real-time Visibility: Provides immediate insights into system behavior, enabling quick identification of performance degradations or failures. This real-time view allows for immediate action to mitigate any impact.

- Performance Optimization: Helps identify areas for improvement, such as inefficient code or resource allocation, leading to optimized application performance and resource utilization.

- Proactive Issue Detection: Allows teams to detect potential problems before they impact users, reducing the risk of major outages and improving overall system stability.

- Improved Troubleshooting: Provides data and context for faster and more effective troubleshooting when issues arise, reducing mean time to resolution (MTTR).

- Compliance and Auditing: Facilitates compliance with regulatory requirements by providing detailed logs and performance data for audits.

Comparison of Monitoring Strategies

Different monitoring strategies cater to various aspects of a system. Understanding the distinctions between them is crucial for creating a holistic monitoring approach.

- Application Performance Monitoring (APM): APM focuses on the performance of applications and their components. It provides insights into code-level performance, transaction tracing, and user experience. Examples include tools like Datadog APM, New Relic APM, and Dynatrace. APM helps identify slow database queries, inefficient code execution, and other application-specific bottlenecks.

- Infrastructure Monitoring: Infrastructure monitoring focuses on the underlying hardware and resources that support applications. It tracks metrics such as CPU utilization, memory usage, disk I/O, and network performance. Tools like Prometheus, Grafana, and Nagios are commonly used. Infrastructure monitoring ensures that the underlying resources are healthy and can support application workloads.

- Log Management: Log management involves collecting, storing, and analyzing log data from various sources, including applications, servers, and network devices. Tools like the ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, and Graylog are used for log aggregation and analysis. Log management provides insights into application behavior, security events, and error occurrences.

- User Experience Monitoring (UEM): UEM focuses on measuring the end-user experience. It tracks metrics like page load times, user interactions, and error rates. Tools such as Catchpoint, Pingdom, and Google Analytics can provide UEM insights. UEM helps identify and address issues that affect user satisfaction.
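Log aggregation tools such as the ELK Stack or Splunk are far easier to query when applications emit structured logs instead of free-form text. Below is a minimal sketch using only Python's standard `logging` module, assuming one JSON object per line is an acceptable input format for the log shipper; the `JsonFormatter` class, the `checkout` logger name, and the `request_id` field are illustrative choices, not part of any of the tools named above.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record.
        request_id = getattr(record, "request_id", None)
        if request_id is not None:
            payload["request_id"] = request_id
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def make_logger(stream) -> logging.Logger:
    logger = logging.getLogger("checkout")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace handlers so repeated calls do not duplicate output
    logger.propagate = False
    return logger


if __name__ == "__main__":
    buf = io.StringIO()
    log = make_logger(buf)
    log.info("order placed", extra={"request_id": "abc-123"})
    print(buf.getvalue().strip())
```

Carrying a shared identifier such as `request_id` through every service is also what later makes it possible to correlate log lines from different components.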

Challenges of Data Collection and Analysis

Collecting and analyzing data from various monitoring tools presents several challenges in a DevOps environment. These challenges can impact the effectiveness of monitoring efforts if not addressed proactively.

- Data Volume: The sheer volume of data generated by modern applications and infrastructure can be overwhelming. Managing and processing this data efficiently requires scalable storage and processing capabilities.

- Data Silos: Data often resides in silos across different tools and platforms, making it difficult to correlate information and gain a holistic view of system performance.

- Tool Complexity: The variety of monitoring tools available, each with its own configuration and data format, can create complexity and require specialized expertise.

- Alert Fatigue: Too many alerts, especially false positives, can lead to alert fatigue, where teams become desensitized to important issues.

- Data Correlation: Correlating data from different sources to identify root causes can be complex and time-consuming, requiring advanced analytics and visualization capabilities.

- Security and Privacy: Ensuring the security and privacy of monitoring data is critical, especially when dealing with sensitive information.
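One common way to reduce alert fatigue is to alert only when a condition persists rather than on every single breach; Prometheus alerting rules express this with a `for` clause. The following minimal Python sketch shows the same idea, assuming metric samples arrive at a fixed interval. The `DebouncedAlert` class is hypothetical, and the threshold and `consecutive` count are tuning values you would choose per metric.

```python
from dataclasses import dataclass, field


@dataclass
class DebouncedAlert:
    """Fire only after `consecutive` breaching samples in a row, and only once per incident."""

    threshold: float
    consecutive: int
    _streak: int = field(default=0, init=False)
    _firing: bool = field(default=False, init=False)

    def observe(self, value: float) -> bool:
        """Feed one sample; return True only on the sample that starts a new alert."""
        if value > self.threshold:
            self._streak += 1
        else:
            # Condition cleared: reset so the next incident can alert again.
            self._streak = 0
            self._firing = False
            return False
        if self._streak >= self.consecutive and not self._firing:
            self._firing = True
            return True
        return False


if __name__ == "__main__":
    cpu = DebouncedAlert(threshold=90.0, consecutive=3)
    samples = [95, 40, 92, 93, 97, 98, 99, 50, 91]
    print([i for i, s in enumerate(samples) if cpu.observe(s)])
```

With these samples, the isolated spike at index 0 and the single breach at index 8 stay silent, while the sustained breach produces exactly one alert at index 4 instead of four separate notifications.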

Legacy Systems Integration

Integrating legacy systems with modern DevOps practices presents a significant challenge for organizations. These older systems, often built with different technologies and architectures, can be difficult to integrate into a continuous delivery pipeline. The differences in technology, processes, and organizational structures require careful planning and execution to avoid disrupting existing operations while still realizing the benefits of DevOps.

Difficulties of Integrating Legacy Systems

The integration of legacy systems into a DevOps environment is often fraught with difficulties. These systems, which may include mainframe applications, older databases, and custom-built software, frequently present unique obstacles.

- Technology Mismatch: Legacy systems are frequently built using outdated technologies, programming languages, and frameworks that may not be compatible with modern DevOps tools and practices. For instance, integrating a COBOL application with a modern containerization platform can be complex.

- Lack of Documentation: Many legacy systems suffer from inadequate documentation, making it difficult to understand their architecture, dependencies, and functionalities. This lack of information hinders the ability to modify, test, and integrate these systems.

- Complex Dependencies: Legacy systems often have intricate dependencies on other systems and components, making it challenging to isolate and modify parts of the system without impacting the entire application.

- Limited Automation Capabilities: Legacy systems may lack the built-in automation capabilities that are crucial for DevOps. Automating testing, deployment, and monitoring of these systems can be difficult or even impossible without significant effort.

- Skill Gaps: The skills required to maintain and modernize legacy systems may be different from the skills needed for modern DevOps practices. Finding individuals with the right combination of legacy system knowledge and DevOps expertise can be challenging.

- Security Vulnerabilities: Older systems may have inherent security vulnerabilities that are difficult to address without significant refactoring. Integrating these systems into a DevOps environment without proper security measures can expose the entire system to risk.

Strategies for Modernizing Legacy Applications

Modernizing legacy applications is a crucial step in successfully integrating them into a DevOps environment. Several strategies can be employed to address the challenges associated with legacy systems.

- Rehosting: This involves moving the legacy application to a new infrastructure, such as a cloud platform, without changing the code. This approach can provide immediate benefits in terms of scalability, cost, and availability.

- Replatforming: This involves migrating the application to a new platform, such as a different operating system or database, while making minimal code changes. This can improve performance and reduce maintenance costs.

- Refactoring: This involves restructuring and rewriting portions of the legacy code to improve its design, readability, and maintainability. Refactoring can help to reduce technical debt and make the application easier to integrate with modern DevOps practices.

- Re-architecting: This involves completely redesigning and rebuilding the application using modern technologies and architectures, such as microservices. This approach provides the greatest potential for modernization but also carries the highest risk and cost.

- Replacing: This involves replacing the legacy application with a new, modern application. This approach is typically used when the legacy application is outdated, difficult to maintain, or no longer meets business needs.

- Wrapping: This involves creating an interface or “wrapper” around the legacy application to allow it to interact with modern systems. This can be a quick and cost-effective way to integrate the legacy application into a DevOps environment.
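The wrapping strategy is essentially the adapter pattern. The sketch below assumes a hypothetical legacy routine, `legacy_lookup_customer`, that returns a fixed-width text record (a format common in older mainframe-era interfaces). The wrapper turns that record into a typed object that modern services and automated tests can consume without the legacy code being modified. The record layout and all names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


def legacy_lookup_customer(customer_id: str) -> str:
    """Hypothetical stand-in for a legacy call that returns a fixed-width record.

    Invented layout: id[0:6] name[6:26] balance-in-cents[26:36] status[36:37]
    """
    return f"{customer_id:<6}{'ADA LOVELACE':<20}0000012345A"


# Column positions of each field in the legacy record.
LAYOUT = {"id": (0, 6), "name": (6, 26), "balance_cents": (26, 36), "status": (36, 37)}


@dataclass(frozen=True)
class Customer:
    id: str
    name: str
    balance_cents: int
    active: bool


class CustomerService:
    """Wrapper that gives the legacy lookup a modern, typed interface."""

    def __init__(self, lookup: Callable[[str], str] = legacy_lookup_customer):
        # Injecting the lookup lets tests replace the legacy call with a fake.
        self._lookup = lookup

    def get(self, customer_id: str) -> Customer:
        raw = self._lookup(customer_id)
        fields = {name: raw[start:end].strip() for name, (start, end) in LAYOUT.items()}
        return Customer(
            id=fields["id"],
            name=fields["name"],
            balance_cents=int(fields["balance_cents"]),
            active=fields["status"] == "A",
        )


if __name__ == "__main__":
    print(CustomerService().get("C00042"))
```

Because callers depend only on `CustomerService`, the legacy implementation behind it can later be rehosted, refactored, or replaced without changing them.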

Ignoring legacy system integration in DevOps adoption can lead to:

- Increased Technical Debt: Continued reliance on outdated technologies and practices.

- Slowed Innovation: Inability to rapidly deploy new features and updates.

- Reduced Agility: Difficulty in responding to changing business needs.

- Higher Costs: Increased maintenance and operational expenses.

- Increased Risk: Exposure to security vulnerabilities and system failures.

Budget and Resource Constraints

Adopting DevOps often requires significant investment in tools, training, and infrastructure. However, budget and resource limitations can significantly hinder the pace and success of this transformation. Effectively managing these constraints is crucial for achieving a successful DevOps implementation.

Impact of Limited Budgets on DevOps Adoption

Limited budgets can significantly impact the adoption of DevOps in several ways, slowing down the overall transformation and potentially leading to incomplete implementations.

- Delayed Tool Acquisition: A restricted budget can delay the purchase of essential DevOps tools for automation, monitoring, and collaboration. This delay can hamper the ability to automate processes, reduce manual errors, and improve the speed of software delivery.

- Restricted Training Opportunities: Insufficient funds may limit the training available for employees on DevOps practices and technologies. This can lead to skill gaps within the team, hindering the effective implementation of DevOps principles and potentially increasing the risk of project failures.

- Inadequate Infrastructure: Budget constraints might prevent the organization from investing in the necessary infrastructure, such as cloud services or powerful servers, to support DevOps practices. This can lead to performance bottlenecks and limit the scalability of applications.

- Slower Pace of Implementation: With limited resources, organizations often need to adopt a phased approach, implementing DevOps practices gradually. This slower pace can extend the overall transformation timeline, delaying the realization of benefits like faster time-to-market and improved software quality.

- Reduced Innovation: When resources are tight, teams may focus on maintaining existing systems rather than experimenting with new technologies and approaches. This can stifle innovation and limit the organization’s ability to adapt to changing market demands.

Prioritizing Investments in DevOps Tools and Training

Given budget limitations, strategic prioritization is essential to maximize the return on investment in DevOps tools and training. This involves carefully evaluating needs and allocating resources effectively.

- Identify Critical Needs: Start by assessing the most pressing needs within the organization. This involves identifying areas where automation, improved collaboration, and faster feedback loops can deliver the greatest impact. For example, if the build and deployment process is a major bottleneck, investing in Continuous Integration/Continuous Delivery (CI/CD) tools should be a priority.

- Evaluate Tools Based on ROI: When selecting tools, consider their potential return on investment (ROI). Evaluate the cost of each tool against the benefits it offers, such as reduced manual effort, faster deployment times, and improved software quality. Prioritize tools that offer the highest ROI.

- Prioritize Training Programs: Invest in training programs that address the most critical skill gaps within the team. Focus on training that covers the tools and technologies that have been prioritized. Consider online courses, workshops, and mentorship programs to build the necessary expertise.

- Phased Implementation: Adopt a phased approach to tool and training implementation. Start with a small set of tools and a pilot project to demonstrate the value of DevOps. Once the benefits are realized, gradually expand the implementation to other areas of the organization.

- Leverage Open-Source Tools: Explore the use of open-source tools, which can provide cost-effective alternatives to commercial software. Open-source tools often offer robust features and a large community of users and contributors.

- Negotiate with Vendors: Negotiate pricing and licensing terms with tool vendors to obtain the best possible deals. Consider volume discounts and other incentives.
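The ROI comparison described under "Evaluate Tools Based on ROI" can start from the standard formula ROI = (benefit - cost) / cost over a fixed horizon. The sketch below ranks candidate investments by that ratio. The tool labels, the hourly rate, and every figure are hypothetical placeholders; in practice, the benefit estimate (here, engineer-hours saved multiplied by a loaded hourly rate) is the hard part.

```python
from dataclasses import dataclass


@dataclass
class Investment:
    name: str
    annual_cost: float           # licences, hosting, maintenance, training
    hours_saved_per_year: float  # estimated manual effort removed
    hourly_rate: float = 75.0    # hypothetical loaded cost of one engineer-hour

    @property
    def annual_benefit(self) -> float:
        return self.hours_saved_per_year * self.hourly_rate

    @property
    def roi(self) -> float:
        """Classic ROI: net gain divided by cost."""
        return (self.annual_benefit - self.annual_cost) / self.annual_cost


def rank(candidates: list[Investment]) -> list[Investment]:
    """Order candidates from highest to lowest ROI."""
    return sorted(candidates, key=lambda inv: inv.roi, reverse=True)


if __name__ == "__main__":
    options = [
        Investment("hypothetical CI/CD service", annual_cost=12_000, hours_saved_per_year=600),
        Investment("hypothetical APM suite", annual_cost=30_000, hours_saved_per_year=300),
        Investment("self-hosted open-source monitoring", annual_cost=5_000, hours_saved_per_year=150),
    ]
    for inv in rank(options):
        print(f"{inv.name:40} ROI = {inv.roi:.0%}")
```

Including hosting and maintenance effort in `annual_cost` keeps open-source options from looking artificially free, and it keeps the comparison honest when it is later presented in a business case.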

Securing Stakeholder Buy-in for DevOps Initiatives

Gaining buy-in from stakeholders is crucial for securing the necessary resources and support for DevOps initiatives. This involves effectively communicating the benefits of DevOps and demonstrating its value to the organization.

- Clearly Define Objectives and Benefits: Articulate the goals of the DevOps initiative and clearly explain the benefits to stakeholders. Highlight how DevOps can improve software delivery speed, reduce costs, enhance quality, and improve customer satisfaction.

- Demonstrate the Value Proposition: Use data and metrics to demonstrate the value of DevOps. Present case studies and success stories from other organizations that have successfully implemented DevOps.

- Develop a Compelling Business Case: Create a detailed business case that outlines the costs, benefits, and risks associated with the DevOps initiative. Include a clear ROI analysis to demonstrate the financial value of the transformation.

- Involve Stakeholders Early: Engage stakeholders early in the planning process. Solicit their input and feedback to ensure that the initiative aligns with their priorities and concerns.

- Communicate Progress Regularly: Provide regular updates to stakeholders on the progress of the DevOps initiative. Share key milestones, metrics, and achievements to demonstrate the value of the transformation.

- Address Concerns and Mitigate Risks: Proactively address any concerns or risks that stakeholders may have. Develop mitigation plans to address potential challenges and ensure that the initiative stays on track.

- Focus on Quick Wins: Identify opportunities to achieve quick wins that demonstrate the value of DevOps. These early successes can build momentum and gain support for the initiative. For instance, automating the deployment of a critical application and visibly shortening its deployment time can be a persuasive early result.

Testing and Quality Assurance

In a DevOps environment, testing and quality assurance (QA) are not a separate phase but an integral part of the entire software development lifecycle. The shift towards continuous integration and continuous delivery (CI/CD) necessitates a robust and efficient testing strategy. This ensures that code changes are validated rapidly and reliably, minimizing the risk of defects and accelerating the release cycle.

Implementing effective testing practices is crucial for maintaining software quality, user satisfaction, and overall business success in a DevOps context.

Challenges in Implementing Continuous Testing

Implementing continuous testing within a DevOps environment presents several challenges that organizations must address to realize its full potential. Successfully integrating testing throughout the CI/CD pipeline requires careful planning and execution.

- Test Automation Complexity: Automating tests can be complex, particularly for intricate applications or those with legacy components. Designing, developing, and maintaining automated test suites require significant effort and expertise. This includes choosing the right tools, frameworks, and scripting languages.

- Test Data Management: Providing realistic and relevant test data is crucial for accurate testing. Managing test data can be challenging, especially when dealing with large datasets or sensitive information. Organizations must establish effective strategies for creating, masking, and refreshing test data.

- Test Environment Management: Ensuring that test environments accurately reflect production environments is critical. Managing test environments can be difficult, especially when dealing with multiple environments, complex infrastructure, and dependencies. Automating environment provisioning and configuration is essential for efficiency.

- Test Coverage and Scope: Determining the appropriate level of test coverage and scope can be challenging. Organizations must balance the need for comprehensive testing with the constraints of time and resources. Prioritizing tests based on risk and impact is essential for maximizing efficiency.

- Test Flakiness: Flaky tests, which intermittently fail and pass without any code changes, can undermine the reliability of continuous testing. Identifying and resolving flaky tests requires careful analysis and debugging.

- Integration with CI/CD Pipelines: Seamlessly integrating tests into the CI/CD pipeline is crucial for achieving continuous testing. This requires automating test execution, reporting, and analysis. Organizations must choose appropriate tools and technologies to support this integration.

- Skill Gaps: Implementing and maintaining continuous testing often requires specialized skills in test automation, scripting, and testing frameworks. Addressing skill gaps through training and recruitment is essential for success.

- Organizational Culture: Fostering a culture of quality and collaboration is essential for continuous testing. Developers, testers, and operations teams must work together to ensure that testing is integrated throughout the development lifecycle.
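A common first step in dealing with flaky tests is to rerun a failing test several times against unchanged code: if it both passes and fails, it is flaky rather than genuinely broken. The following minimal sketch shows that classification. The `classify` helper, the example cases, and the rerun count are hypothetical, and most test runners and CI systems offer their own rerun and quarantine features.

```python
import itertools
from collections import Counter
from typing import Callable


def classify(test: Callable[[], None], runs: int = 10) -> str:
    """Run `test` repeatedly on unchanged code and label it 'pass', 'fail', or 'flaky'."""
    outcomes: Counter = Counter()
    for _ in range(runs):
        try:
            test()
            outcomes["pass"] += 1
        except AssertionError:
            outcomes["fail"] += 1
    if outcomes["pass"] and outcomes["fail"]:
        return "flaky"
    return "pass" if outcomes["pass"] else "fail"


# Hypothetical example cases (named *_case so test runners do not collect them).
def stable_case() -> None:
    assert 1 + 1 == 2


def broken_case() -> None:
    assert "a".upper() == "a"  # always fails: a genuine defect


_calls = itertools.count()


def flaky_case() -> None:
    # Fails on every third call, mimicking a timing- or order-dependent test.
    assert next(_calls) % 3 != 0


if __name__ == "__main__":
    for case in (stable_case, broken_case, flaky_case):
        print(case.__name__, classify(case))
```

Tests labelled `flaky` are candidates for quarantine and root-cause analysis, while tests labelled `fail` point to real defects and should block the pipeline.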

Comparison of Testing Methodologies

Different testing methodologies serve distinct purposes in the software development lifecycle. Each methodology has its strengths and weaknesses, and the choice of which to use depends on the specific needs of the project.

- Unit Testing: Unit testing involves testing individual components or modules of the software in isolation. The primary goal is to verify that each unit functions as expected.

- Advantages: Early detection of defects, easier debugging, and improved code quality.

- Disadvantages: Limited scope, does not test interactions between units, and can be time-consuming.

- Example: Testing a function that calculates the sum of two numbers to ensure it returns the correct result for different input values.

- Integration Testing: Integration testing focuses on testing the interactions between different modules or components of the software. The primary goal is to verify that these components work together correctly.

- Advantages: Detects integration issues, verifies data flow, and improves system reliability.

- Disadvantages: Can be complex to design and execute, and may require specific test environments.

- Example: Testing the interaction between a user interface and a database to ensure data is correctly saved and retrieved.

- End-to-End Testing: End-to-end (E2E) testing simulates the user’s interaction with the entire application from start to finish. The primary goal is to verify that the application functions as expected across all its components and layers.

- Advantages: Verifies the complete user experience, detects system-level issues, and validates business processes.

- Disadvantages: Can be time-consuming and complex to execute, and may be prone to false positives.

- Example: Testing a complete e-commerce transaction, including user login, product selection, payment processing, and order confirmation.

- Performance Testing: Performance testing evaluates the software’s performance characteristics, such as speed, stability, and scalability. This includes load testing, stress testing, and endurance testing.

- Advantages: Identifies performance bottlenecks, ensures the application can handle expected load, and improves user experience.

- Disadvantages: Requires specialized tools and expertise, and can be expensive.

- Example: Using a load testing tool to simulate a large number of concurrent users accessing a web application to measure its response time and resource utilization.
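The unit-testing example in the list above, a function that adds two numbers, looks like this with Python's built-in `unittest` module. The `add` function is a trivial placeholder. What matters is the shape of the test: each case checks one behaviour in isolation, with no database, network, or other component involved.

```python
import unittest


def add(a: float, b: float) -> float:
    """The unit under test (a deliberately trivial placeholder)."""
    return a + b


class AddTests(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)

    def test_mixed_signs(self):
        self.assertEqual(add(-2, 3), 1)

    def test_floats(self):
        # Floating-point sums need a tolerance rather than exact equality.
        self.assertAlmostEqual(add(0.1, 0.2), 0.3)
```

Saved as a module, this suite can be run with `python -m unittest <module_name>`, which is also how a CI stage would typically invoke it.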

Common Challenges in Automating Test Suites

Automating test suites is crucial for achieving continuous testing, but it also presents several common challenges. Overcoming these challenges is essential for maximizing the benefits of test automation.

- Test Script Maintenance: Test scripts can become brittle and difficult to maintain as the software evolves. Changes to the application’s user interface, business logic, or underlying infrastructure can require frequent updates to test scripts.

- Test Data Management: Providing realistic and relevant test data is critical for accurate testing. Managing test data can be challenging, especially when dealing with large datasets or sensitive information.

- Test Environment Management: Ensuring that test environments accurately reflect production environments is crucial. Managing test environments can be difficult, especially when dealing with multiple environments, complex infrastructure, and dependencies.

- Flaky Tests: Flaky tests, which intermittently fail and pass without any code changes, can undermine the reliability of automated test suites. Identifying and resolving flaky tests requires careful analysis and debugging.

- Test Coverage: Determining the appropriate level of test coverage can be challenging. Organizations must balance the need for comprehensive testing with the constraints of time and resources.

- Tooling and Framework Selection: Choosing the right tools and frameworks for test automation is crucial. The selection should consider the application’s technology stack, testing requirements, and team’s expertise.

- Skill Gaps: Implementing and maintaining automated test suites often requires specialized skills in test automation, scripting, and testing frameworks. Addressing skill gaps through training and recruitment is essential.

- Reporting and Analysis: Generating meaningful test reports and analyzing test results is essential for identifying defects and improving software quality. Automating the reporting process and integrating it with CI/CD pipelines is critical.
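For the test-data problem listed above, one widely used approach is to mask production-like data before it reaches test environments. The following minimal sketch assumes records are plain dictionaries and that deterministic pseudonyms are wanted: the same input always maps to the same token, so joins between masked tables still line up. A keyed hash (HMAC) is used because plain hashes of values such as email addresses can be reversed by guessing. The field names and key are hypothetical; a real setup would keep the key secret and follow the organization's data-protection rules.

```python
import hashlib
import hmac

# Hypothetical key; in practice load it from a secrets manager, never from source control.
MASKING_KEY = b"replace-me"

SENSITIVE_FIELDS = {"email", "name", "card_number"}


def pseudonymize(value: str, field: str) -> str:
    """Deterministically replace a value with a keyed-hash token."""
    digest = hmac.new(MASKING_KEY, f"{field}:{value}".encode(), hashlib.sha256).hexdigest()
    return f"{field}_{digest[:12]}"


def mask_record(record: dict) -> dict:
    masked = {}
    for key, value in record.items():
        if key == "card_number":
            # Keep the last four digits so UI tests that display them still work.
            masked[key] = "*" * (len(value) - 4) + value[-4:]
        elif key in SENSITIVE_FIELDS:
            masked[key] = pseudonymize(str(value), key)
        else:
            masked[key] = value
    return masked


if __name__ == "__main__":
    row = {"id": 17, "name": "Ada Lovelace", "email": "ada@example.com",
           "card_number": "4111111111111111", "country": "GB"}
    print(mask_record(row))
```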

Governance and Standardization

Adopting DevOps necessitates a robust governance framework to ensure consistency, control, and alignment with organizational goals. Without effective governance, DevOps practices can become fragmented, leading to inefficiencies, security vulnerabilities, and difficulties in scaling. This section delves into the critical aspects of governance and standardization within a DevOps environment.

Importance of Governance in DevOps

Governance in DevOps provides the necessary structure to manage risk, ensure compliance, and maintain operational efficiency. It establishes the guidelines, policies, and processes that govern how teams operate, collaborate, and deploy applications. Governance is essential for:

- Risk Management: It helps identify and mitigate potential risks associated with the development and deployment lifecycle. This includes security vulnerabilities, compliance breaches, and operational failures.

- Compliance: It ensures adherence to industry regulations and internal policies, protecting the organization from legal and financial penalties.

- Consistency: It promotes standardized processes and practices across teams, leading to greater predictability and reduced errors.

- Efficiency: It streamlines workflows, automates tasks, and improves collaboration, ultimately accelerating the delivery of value to the business.

- Scalability: It provides a framework for managing growth and adapting to changing business needs. A well-governed DevOps environment can more easily scale its operations to meet increased demand.

Standardizing Processes and Practices

Standardization is a cornerstone of successful DevOps implementation. It involves establishing common practices, tools, and workflows across all teams to promote consistency and reduce variability. This approach simplifies operations, improves collaboration, and accelerates the delivery pipeline. Examples of standardization include:

- Code Repositories and Version Control: Standardizing on one version control system (e.g., Git), a shared hosting platform for repositories, and a common branching strategy (e.g., Gitflow) ensures that all code is managed consistently. This facilitates collaboration, tracks changes, and simplifies rollback procedures.

- Infrastructure as Code (IaC): Using IaC tools (e.g., Terraform, Ansible) to define and manage infrastructure resources consistently. This enables automated provisioning, reduces configuration drift, and promotes repeatability. A standardized IaC approach ensures that infrastructure deployments are predictable and easily reproducible across environments.

- Continuous Integration/Continuous Delivery (CI/CD) Pipelines: Implementing standardized CI/CD pipelines using tools like Jenkins, GitLab CI, or CircleCI. This ensures that code changes are automatically built, tested, and deployed in a consistent manner. Standardized pipelines also improve the reliability and speed of the release process.

- Monitoring and Logging: Establishing a centralized monitoring and logging system (e.g., Prometheus, Grafana, ELK stack) to collect and analyze data across all environments. Standardized monitoring practices provide a consistent view of application performance, system health, and security events, enabling proactive issue detection and resolution.

- Security Practices: Implementing standardized security practices, such as static code analysis, vulnerability scanning, and penetration testing, to identify and mitigate security risks. This helps to ensure that applications and infrastructure are secure by design and that security vulnerabilities are addressed proactively.
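Configuration drift, which IaC is meant to prevent, is simply a difference between the state declared in version control and what is actually running; tools such as Terraform surface it by comparing the two when planning a change. The Python sketch below shows only the underlying comparison on plain dictionaries. The `diff_state` helper and the resource names and attributes are hypothetical, and fetching the real state from a cloud API is out of scope.

```python
from typing import Any


def diff_state(desired: dict[str, dict[str, Any]],
               actual: dict[str, dict[str, Any]]) -> dict[str, list[str]]:
    """Compare declared vs. observed resources and describe every difference."""
    report: dict[str, list[str]] = {}
    for name in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(name), actual.get(name)
        if have is None:
            report[name] = ["missing: declared but not found"]
        elif want is None:
            report[name] = ["unmanaged: exists but not declared in code"]
        else:
            changes = [
                f"{attr}: expected {want.get(attr)!r}, found {have.get(attr)!r}"
                for attr in sorted(want.keys() | have.keys())
                if want.get(attr) != have.get(attr)
            ]
            if changes:
                report[name] = changes
    return report


if __name__ == "__main__":
    desired = {
        "web-1": {"size": "m5.large", "port": 443},
        "db-1": {"size": "r5.xlarge", "encrypted": True},
    }
    actual = {
        "web-1": {"size": "m5.large", "port": 80},        # changed by hand
        "db-1": {"size": "r5.xlarge", "encrypted": True},
        "debug-box": {"size": "t3.micro"},               # created outside the pipeline
    }
    for resource, problems in diff_state(desired, actual).items():
        for p in problems:
            print(f"DRIFT {resource}: {p}")
```

Running a check like this on a schedule, and treating any non-empty report as a failure, is one way to make the standardized IaC approach enforceable rather than aspirational.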

Establishing Clear Roles and Responsibilities

Defining clear roles and responsibilities is crucial for effective collaboration and accountability within a DevOps environment. This clarifies who is responsible for specific tasks and decisions, minimizing confusion and ensuring that all aspects of the DevOps lifecycle are adequately addressed. Methods for establishing clear roles and responsibilities include:

- Defining RACI Matrices: Utilizing RACI (Responsible, Accountable, Consulted, Informed) matrices to clearly define the roles and responsibilities for each task or activity within the DevOps process. This provides a visual representation of who is involved and their level of participation.

- Creating DevOps Teams: Organizing teams with specific roles, such as developers, operations engineers, security specialists, and release managers. Each role has defined responsibilities and collaborates with other teams. This fosters specialization and collaboration.

- Establishing a Center of Excellence (CoE): Forming a CoE to provide guidance, training, and support for DevOps practices across the organization. The CoE can also be responsible for defining standards, best practices, and governance policies.

- Implementing a Change Management Process: Establishing a formal change management process to manage changes to the environment, including infrastructure, applications, and configurations. This process ensures that changes are reviewed, approved, and implemented in a controlled and documented manner.

- Using Agile Methodologies: Employing agile methodologies, such as Scrum or Kanban, to facilitate collaboration and communication. These methodologies promote iterative development, continuous feedback, and a shared understanding of roles and responsibilities.
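A RACI matrix is easy to keep in version control as data, which also makes it checkable. A widely used rule of thumb is that every activity has exactly one Accountable party and at least one Responsible party. The sketch below encodes that check; the activities, team names, and the `validate` helper are hypothetical.

```python
# Hypothetical RACI matrix: activity -> {team: role letter}.
RACI = {
    "merge to main":        {"dev": "R", "tech-lead": "A", "qa": "C"},
    "production deploy":    {"platform": "R", "release-mgr": "A", "dev": "I"},
    "incident response":    {"sre": "R", "dev": "C"},            # no Accountable
    "firewall rule change": {"security": "A", "platform": "A"},  # two Accountables, no Responsible
}

VALID_ROLES = set("RACI")


def validate(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means every activity is well defined."""
    problems = []
    for activity, assignments in matrix.items():
        roles = list(assignments.values())
        unknown = set(roles) - VALID_ROLES
        if unknown:
            problems.append(f"{activity}: unknown role(s) {sorted(unknown)}")
        if roles.count("A") != 1:
            problems.append(f"{activity}: needs exactly one Accountable, found {roles.count('A')}")
        if "R" not in roles:
            problems.append(f"{activity}: needs at least one Responsible")
    return problems


if __name__ == "__main__":
    for p in validate(RACI):
        print(p)
```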

Measuring DevOps Success

Assessing the effectiveness of DevOps initiatives is crucial for continuous improvement and demonstrating the value of the transformation. Measuring success goes beyond simply implementing new tools or practices; it involves quantifying the impact on key business and technical outcomes. A robust measurement strategy provides insights into areas for optimization, helps justify investments, and fosters a data-driven culture within the organization.

Key Metrics for Assessing DevOps Success

Measuring DevOps success requires a multi-faceted approach, focusing on both technical and business outcomes. These metrics provide a comprehensive view of the DevOps transformation’s impact.

- Deployment Frequency: This metric measures how often code changes are deployed to production. Increased deployment frequency is a key indicator of improved agility and faster time-to-market. For example, companies adopting DevOps often see deployment frequencies increase from quarterly or monthly releases to daily or even multiple times a day. This rapid iteration allows for quicker feedback and faster response to customer needs.

- Lead Time for Changes: This measures the time it takes from code commit to code successfully running in production. A shorter lead time signifies faster delivery cycles and less time needed to ship fixes. It also means that new features, and the business value they carry, reach users sooner.

- Change Failure Rate: This is the percentage of deployments that result in a failure or require a rollback. A lower change failure rate indicates more stable and reliable deployments. This metric is crucial for maintaining service quality and preventing disruptions.

- Mean Time to Recovery (MTTR): This metric quantifies the average time it takes to restore service after an incident or outage. Reduced MTTR demonstrates improved incident response capabilities and enhanced system resilience. Efficient incident resolution minimizes downtime and protects the user experience.

- Team Performance and Productivity: This includes metrics such as code commit frequency, story points completed per sprint (in Agile environments), and defect density. Increased productivity and collaboration are essential for effective DevOps. Improved team performance is reflected in faster development cycles and higher-quality software.

- Customer Satisfaction: This is often measured through surveys, Net Promoter Scores (NPS), and customer feedback. Higher customer satisfaction indicates that DevOps practices are delivering value to the end-users. Satisfied customers are more likely to remain loyal and recommend the product or service.

- Business Outcomes: This includes metrics such as revenue growth, market share, and cost reduction. DevOps initiatives should contribute to tangible business results. For example, improved deployment frequency and lead time can lead to faster feature releases and increased customer acquisition.
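The first four metrics above (often called the DORA metrics) can be computed directly from deployment and incident records. The following minimal sketch assumes each deployment record carries its commit time, deploy time, and whether it failed, and each incident record has start and resolution times; these record shapes and the `dora_metrics` helper are hypothetical. The median lead time is used because a few very slow changes would skew a mean.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool  # caused an incident or needed a rollback/hotfix


@dataclass
class Incident:
    started_at: datetime
    resolved_at: datetime


def dora_metrics(deploys: list[Deployment], incidents: list[Incident],
                 period_days: int) -> dict[str, Optional[object]]:
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    restore_times = [i.resolved_at - i.started_at for i in incidents]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": sum(d.failed for d in deploys) / len(deploys) if deploys else None,
        # Mean time to recovery: total restore time divided by the number of incidents.
        "mttr": sum(restore_times, timedelta()) / len(restore_times) if restore_times else None,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    deploys = [
        Deployment(t0, t0 + timedelta(hours=2), failed=False),
        Deployment(t0, t0 + timedelta(hours=4), failed=True),
        Deployment(t0, t0 + timedelta(hours=6), failed=False),
        Deployment(t0, t0 + timedelta(hours=30), failed=False),
    ]
    incidents = [Incident(t0, t0 + timedelta(minutes=45))]
    print(dora_metrics(deploys, incidents, period_days=7))
```

Computing these values automatically from the CI/CD and incident tools, rather than by hand, also addresses the "Lack of Automation" and "Lack of Baseline Measurement" pitfalls: the same script run before and after a change gives a comparable baseline.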

Common Pitfalls in Measuring DevOps Performance

Accurate and meaningful measurement is essential, but several pitfalls can undermine the effectiveness of the measurement process. Avoiding these common mistakes is crucial for a successful DevOps transformation.

- Vanity Metrics: Focusing on metrics that look good on the surface but don’t reflect actual progress or business value. Examples include a high number of deployments without a corresponding increase in customer satisfaction or revenue.

- Lack of Baseline Measurement: Failing to establish a baseline before implementing DevOps practices makes it difficult to measure improvement. Without a baseline, it is impossible to assess the impact of the changes.

- Ignoring Context: Applying generic metrics without considering the organization’s specific situation and goals. Metrics should be aligned with the organization’s unique needs and priorities.

- Data Silos: Data scattered across different tools and teams can make it difficult to get a complete picture of DevOps performance. Integration and data sharing are essential for comprehensive analysis.

- Lack of Automation: Manual data collection and analysis are time-consuming and prone to errors. Automating the measurement process is crucial for efficiency and accuracy.

- Focusing Solely on Technical Metrics: Tracking delivery and infrastructure metrics while ignoring the impact on business outcomes. DevOps success should be measured by its contribution to overall business goals, such as revenue growth and customer satisfaction.
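Two of these pitfalls can be checked mechanically: the missing baseline and vanity metrics. First, record each metric before the transformation. Then report both the percentage change and whether that change counts as an improvement. This is a minimal sketch. The metric names, their direction of improvement, and the sample values are all hypothetical.

```python
# Hypothetical metric names mapped to whether a higher value is an improvement.
HIGHER_IS_BETTER = {
    "deployments_per_week": True,
    "lead_time_hours": False,
    "change_failure_rate_pct": False,
    "mttr_minutes": False,
}


def compare_to_baseline(
    baseline: dict[str, float], current: dict[str, float]
) -> dict[str, tuple[float, bool]]:
    """Return {metric: (percent_change, improved)} for each known metric."""
    report = {}
    for name, higher_is_better in HIGHER_IS_BETTER.items():
        base = baseline.get(name)
        now = current.get(name)
        # Skip metrics missing from either snapshot, or with a zero baseline
        # (percent change is undefined in that case).
        if base is None or now is None or base == 0:
            continue
        change = round(100.0 * (now - base) / base, 1)
        improved = change > 0 if higher_is_better else change < 0
        report[name] = (change, improved)
    return report


if __name__ == "__main__":
    # Illustrative values only: recorded before and after adopting DevOps practices.
    before = {"deployments_per_week": 2, "lead_time_hours": 72,
              "change_failure_rate_pct": 20, "mttr_minutes": 240}
    after = {"deployments_per_week": 5, "lead_time_hours": 36,
             "change_failure_rate_pct": 25, "mttr_minutes": 120}
    for name, (change, improved) in compare_to_baseline(before, after).items():
        print(f"{name}: {change:+.1f}% ({'better' if improved else 'worse'})")
```

In this sample, deployment frequency rises by 150%, yet the change failure rate also gets worse by 25%. A deployment count viewed on its own would hide that regression.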

Using Data to Improve DevOps Practices

Data is a powerful tool for continuous improvement in DevOps. Analyzing metrics and using the insights to make informed decisions can lead to significant improvements in efficiency, quality, and business outcomes.

- Identify Bottlenecks: Analyze data to identify bottlenecks in the software delivery pipeline. This could include slow build times, lengthy testing processes, or inefficient deployment procedures. Addressing these bottlenecks can significantly improve lead time and deployment frequency.

- Optimize Processes: Use data to identify areas for process optimization. For example, if the change failure rate is high, investigate the root causes of failures and implement measures to prevent them.

- Improve Collaboration: Use metrics to assess the effectiveness of collaboration between development, operations, and other teams. For example, track the number of incidents caused by miscommunication or lack of coordination.

- Prioritize Investments: Use data to justify investments in new tools, technologies, or training. For example, if a new testing tool is expected to reduce the change failure rate, track the impact of the tool on this metric.

- Foster a Data-Driven Culture: Encourage teams to regularly review and analyze metrics. Make data accessible and visible to all team members. This will help promote a culture of continuous improvement and data-informed decision-making.

- Iterate and Refine: Continuously monitor and analyze metrics, and use the insights to refine DevOps practices. The DevOps journey is a continuous process of learning and improvement.
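Bottleneck analysis can start with something as simple as ranking pipeline stages by their typical duration. This sketch assumes stage timings have already been exported as `(stage, minutes)` pairs. The stage names and numbers are made up for illustration. The sketch uses the median rather than the mean, so a single unusually slow run (the 12-minute deploy here) does not distort the ranking.

```python
from statistics import median

# Hypothetical export of recent pipeline runs as (stage, duration in minutes).
SAMPLE_RUNS = [
    ("build", 6), ("test", 25), ("deploy", 4),
    ("build", 7), ("test", 31), ("deploy", 5),
    ("build", 5), ("test", 28), ("deploy", 12),
]


def stage_medians(samples: list[tuple[str, float]]) -> dict[str, float]:
    """Group durations by stage and return the median duration of each stage."""
    by_stage: dict[str, list[float]] = {}
    for stage, minutes in samples:
        by_stage.setdefault(stage, []).append(minutes)
    return {stage: median(values) for stage, values in by_stage.items()}


def rank_bottlenecks(samples: list[tuple[str, float]]) -> list[tuple[str, float, float]]:
    """Return (stage, median minutes, % of summed medians), slowest stage first."""
    medians = stage_medians(samples)
    total = sum(medians.values())
    ranked = sorted(medians.items(), key=lambda item: item[1], reverse=True)
    return [(stage, m, 100.0 * m / total) for stage, m in ranked]


if __name__ == "__main__":
    for stage, minutes, share in rank_bottlenecks(SAMPLE_RUNS):
        print(f"{stage}: median {minutes} min ({share:.0f}% of pipeline time)")
```

With the sample data, `test` ranks first by a wide margin. That makes the testing stage the natural place to look first for improvements to lead time.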

Final Conclusion

Embracing DevOps requires a strategic and holistic approach. By understanding and proactively addressing its inherent challenges, from cultural resistance to technical complexity, organizations can unlock the full potential of DevOps. The journey demands commitment, continuous learning, and a willingness to adapt. Successfully navigating these challenges paves the way for a more agile, efficient, and secure software development lifecycle, ultimately driving business value and innovation.

Popular Questions

What is the biggest hurdle in DevOps adoption?

The biggest hurdle is often cultural resistance. Overcoming ingrained habits, siloed teams, and a lack of understanding of DevOps principles can significantly slow down the transition.

How long does it typically take to fully adopt DevOps?

There is no one-size-fits-all answer, but it usually takes several months to a few years to fully realize the benefits of DevOps, depending on the organization’s size, complexity, and existing infrastructure.

What are the key performance indicators (KPIs) for measuring DevOps success?

Key KPIs include deployment frequency, lead time for changes, mean time to recovery (MTTR), change failure rate, and customer satisfaction.

Is DevOps only for large organizations?

No, DevOps can benefit organizations of all sizes. While larger companies might have more resources to dedicate to the transition, the core principles of DevOps can be adapted and applied to smaller teams and projects.

What are the most important skills for a DevOps engineer?

Essential skills include automation (scripting), cloud computing, infrastructure as code, CI/CD pipelines, monitoring and observability, and a strong understanding of development and operations principles.