Serverless computing, while promising unparalleled scalability and agility, presents a unique set of cost management challenges. Understanding these challenges and mastering the art of cost optimization is crucial for realizing the full potential of serverless architectures. This exploration delves into the multifaceted strategies for minimizing cloud spending in serverless environments, moving beyond superficial understanding to a comprehensive analysis of techniques that drive efficiency and value.

The journey begins with establishing a foundational understanding of serverless principles and contrasting them with the common misconceptions surrounding cost efficiency. We will then navigate through essential areas such as monitoring, resource right-sizing, code optimization, event-driven architectures, service selection, and vendor-specific pricing models. Further, we will explore critical aspects like cold start mitigation, data storage optimization, automation through Infrastructure as Code (IaC), and, finally, real-world case studies that demonstrate successful cost optimization strategies.

Introduction to Serverless Cost Optimization

Serverless computing, a cloud computing execution model, fundamentally alters how applications are built and deployed. It allows developers to build and run applications and services without managing servers, shifting the operational burden to the cloud provider. This shift also changes the cost landscape, introducing both opportunities for and challenges to cost optimization. Serverless computing’s cost implications are primarily based on a pay-per-use model.

This contrasts with traditional cloud infrastructure where resources are often provisioned and paid for continuously, regardless of actual utilization. This on-demand pricing can lead to significant cost savings when workloads are intermittent or spiky, but also requires careful monitoring and optimization to prevent unexpected expenses.

The Genesis of Serverless Computing and its Cloud Spending Impact

Serverless computing emerged in the mid-2010s, initially with Function-as-a-Service (FaaS) offerings. AWS Lambda, launched in 2014, was a pioneering example, enabling developers to execute code without provisioning or managing servers. This innovation quickly gained traction, leading to the development of various serverless services for databases, storage, and APIs. The initial impact was often seen in reduced operational overhead and potentially lower costs for workloads with fluctuating demand.

However, the true potential for cost savings often remained unrealized due to a lack of mature tooling and understanding of the new pricing models. The shift from a model of paying for provisioned capacity to paying only for consumed resources has significantly changed the dynamics of cloud spending. This change requires a new set of strategies for optimizing cloud costs.

Common Misconceptions About Serverless Cost Efficiency

Several misconceptions often cloud the understanding of serverless cost efficiency. These misunderstandings can lead to suboptimal design choices and inflated cloud bills.

- Myth: Serverless is always cheaper. This is a widespread misconception. While serverless can be more cost-effective for certain workloads, it’s not universally cheaper. Factors such as the consistency of traffic, the efficiency of code, and the choice of services heavily influence costs. For instance, a consistently high-volume workload might be more cost-effective on provisioned infrastructure due to the fixed cost model.

- Myth: Serverless automatically scales to zero, eliminating costs during inactivity. While many serverless services scale automatically, scaling is not always instantaneous, and some services incur costs even when idle. Cold starts, in which a function’s execution environment must be initialized before it can handle a request, add latency and can add cost. Additionally, the configuration of resources such as memory allocation affects the cost of every invocation.

- Myth: Serverless eliminates the need for cost management. Serverless, while simplifying infrastructure management, increases the need for careful monitoring and optimization. The pay-per-use model requires vigilance to prevent unexpected costs. Developers must understand how their code interacts with serverless services to control expenses. The focus shifts from infrastructure provisioning to code optimization and resource usage.

- Myth: Serverless is only suitable for small projects. Serverless architectures can be applied to large-scale, complex applications. However, such projects require careful planning, architectural design, and cost optimization strategies. Serverless is not limited to simple use cases.
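The first myth can be made concrete with a rough break-even calculation. The following is a minimal sketch, assuming a simplified pricing model (a per-request fee plus a per-GB-second compute fee) and a flat monthly price for the provisioned alternative. All prices are placeholder parameters, not quotes from any provider, and the function names are illustrative.

```python
def serverless_monthly_cost(invocations, avg_duration_s, memory_gb,
                            price_per_gb_second, price_per_million_requests):
    """Estimate a monthly pay-per-use bill under a simplified pricing model."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def break_even_invocations(fixed_monthly_cost, avg_duration_s, memory_gb,
                           price_per_gb_second, price_per_million_requests):
    """Monthly invocation count at which pay-per-use equals the fixed cost."""
    cost_per_invocation = (avg_duration_s * memory_gb * price_per_gb_second
                           + price_per_million_requests / 1_000_000)
    return fixed_monthly_cost / cost_per_invocation


if __name__ == "__main__":
    # Hypothetical inputs: 200 ms at 0.5 GB versus a 100 USD/month server.
    n = break_even_invocations(100.0, 0.2, 0.5, 0.00002, 0.20)
    print(f"Break-even at roughly {n:,.0f} invocations per month")
```

Below the break-even volume the pay-per-use model is cheaper under these assumptions; above it, the fixed-cost option wins. Real comparisons should also account for free tiers, data transfer, and operational effort.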

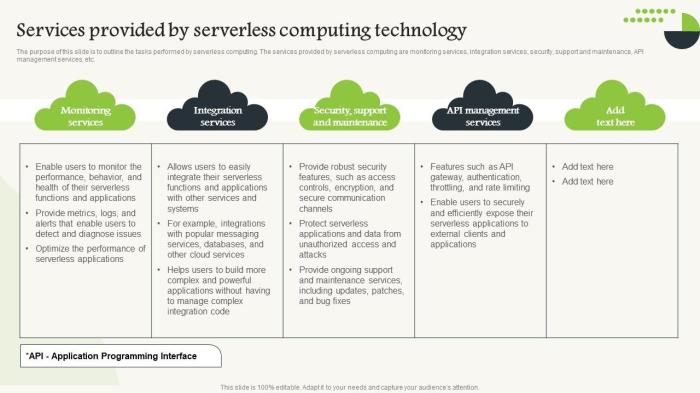

Monitoring and Observability for Cost Control

Effective monitoring and observability are crucial for managing costs in serverless environments. They provide the necessary insights to understand resource consumption, identify inefficiencies, and proactively address potential cost overruns. Without robust monitoring, it’s difficult to determine the drivers of costs and to implement effective optimization strategies.

Importance of Monitoring in Serverless Environments

Serverless architectures inherently involve complex, distributed systems. Because of this complexity, monitoring becomes even more important. It allows for real-time tracking of application performance and resource utilization, helping to identify and resolve issues that could impact costs.

- Visibility into Resource Consumption: Monitoring tools provide granular insights into the resources consumed by each serverless function and service. This includes metrics such as invocation count, execution time, memory usage, and network traffic. This visibility is fundamental to understanding how costs are incurred.

- Proactive Issue Detection: By establishing baselines and setting up alerts, monitoring helps identify anomalies and potential problems before they significantly impact costs. For instance, an unexpected increase in invocation duration or memory usage can trigger alerts, enabling quick investigation and remediation.

- Performance Optimization: Monitoring enables performance optimization by pinpointing bottlenecks and inefficiencies. This could involve optimizing code, adjusting memory allocation, or reconfiguring services to improve execution speed and reduce resource consumption, ultimately leading to cost savings.

- Cost Allocation and Analysis: Monitoring facilitates cost allocation by allowing you to attribute costs to specific functions, services, or even business units. This helps in understanding where the money is being spent and identifying areas for cost reduction.

- Compliance and Governance: Monitoring provides the data necessary for compliance and governance requirements. It ensures that resources are being used according to defined policies and that security best practices are being followed, indirectly impacting costs through efficient resource management.

Key Metrics to Track for Cost Optimization

Tracking the right metrics is essential for effective cost optimization. These metrics provide a comprehensive view of resource consumption and performance, enabling informed decision-making.

- Invocation Count: This metric tracks the number of times a function is executed. Higher invocation counts directly correlate with increased costs, particularly in pay-per-use models. Analyzing invocation trends helps identify potential optimization opportunities, such as reducing unnecessary function calls.

- Invocation Duration: The time it takes for a function to execute is a critical cost driver. Longer execution times result in higher costs. Monitoring invocation duration helps identify performance bottlenecks and inefficiencies in code. For example, a function consistently taking longer than expected may indicate inefficient code or resource contention.

- Memory Usage: Serverless functions are typically charged based on the amount of memory allocated. Tracking memory usage helps optimize resource allocation. Over-provisioning memory increases costs without providing benefits. Conversely, under-provisioning can lead to performance issues.

- Error Rate: A high error rate usually points to defective code or failing dependencies. Failed invocations are typically still billed, and automatic retries multiply the number of invocations, so errors raise costs directly. Monitoring error rates is crucial for identifying and resolving code defects.

- Cold Starts: Cold starts, where a function’s execution environment needs to be initialized, can impact performance and cost. Frequent cold starts increase invocation duration. Monitoring cold start frequency helps identify the need for optimization, such as using provisioned concurrency.

- Network Traffic: Network traffic, including data transfer in and out, can incur costs. Monitoring network traffic is important, particularly for functions that process large datasets or interact with external services.

- Concurrency: Monitoring concurrency levels helps ensure that resources are efficiently utilized. High concurrency can indicate that functions are scaling effectively. However, if concurrency is consistently high, it might be necessary to optimize code or increase resource allocation.

- Cost per Invocation: Calculating the cost per invocation provides a direct measure of the cost efficiency of each function. This metric helps compare the cost of different functions and identify areas for optimization.
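The metrics above can be combined into a cost-per-invocation figure. This is a minimal sketch, assuming billing by GB-seconds plus a flat per-request fee; the `FunctionMetrics` record, the function names, and the prices are hypothetical and would be replaced by values exported from your monitoring tool and your provider's price list.

```python
from dataclasses import dataclass


@dataclass
class FunctionMetrics:
    name: str
    invocations: int
    avg_duration_ms: float
    memory_mb: int


def cost_per_invocation(m, price_per_gb_second, price_per_request):
    """Cost of one average invocation: GB-seconds consumed plus request fee."""
    gb_seconds = (m.memory_mb / 1024) * (m.avg_duration_ms / 1000)
    return gb_seconds * price_per_gb_second + price_per_request


def rank_by_total_cost(metrics, price_per_gb_second, price_per_request):
    """Return (name, cost per invocation, total cost), most expensive first."""
    rows = []
    for m in metrics:
        unit = cost_per_invocation(m, price_per_gb_second, price_per_request)
        rows.append((m.name, unit, unit * m.invocations))
    return sorted(rows, key=lambda row: row[2], reverse=True)


if __name__ == "__main__":
    sample = [
        FunctionMetrics("api-handler", 1_000_000, 100, 128),
        FunctionMetrics("thumbnailer", 10_000, 3000, 1024),
    ]
    for name, unit, total in rank_by_total_cost(sample, 0.00002, 0.0000002):
        print(f"{name}: {unit:.8f} USD per invocation, {total:.4f} USD total")
```

Ranking by total cost rather than by invocation count highlights functions that run rarely but are expensive each time they run.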

Setting Up Alerts for Cost Anomalies and Unexpected Spikes

Automated alerts are essential for proactively managing costs in serverless environments. They provide timely notifications of potential issues, allowing for immediate action to prevent cost overruns.

- Threshold-Based Alerts: Set up alerts that trigger when a specific metric exceeds a predefined threshold. For example, an alert can be configured to trigger if the invocation duration of a function exceeds a certain time, indicating a performance problem.

- Anomaly Detection: Use anomaly detection algorithms to automatically identify unusual patterns in the data. These algorithms can detect unexpected spikes in invocation counts, memory usage, or other key metrics.

- Cost Budget Alerts: Set up alerts based on predefined cost budgets. These alerts notify you when spending approaches or exceeds the budget, allowing you to take corrective action.

- Trend-Based Alerts: Analyze historical data to establish trends and set alerts that trigger when the trend deviates significantly. This is particularly useful for identifying gradual increases in costs that might not be immediately apparent.

- Alerting Tools: Leverage cloud provider-specific monitoring and alerting tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) to configure and manage alerts. These tools provide features for defining thresholds, setting up notifications (e.g., email, SMS), and integrating with incident management systems.

- Notification Channels: Configure alerts to send notifications through appropriate channels, such as email, Slack, or PagerDuty, to ensure that the right people are notified promptly.

- Example: Consider a scenario where a serverless function experiences a sudden spike in invocation count. An alert set up to trigger when the invocation count exceeds a certain threshold would immediately notify the operations team, allowing them to investigate the cause (e.g., a denial-of-service attack or a code bug) and take corrective action. This immediate response can prevent significant cost overruns.
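The threshold-based and anomaly-detection alerts described above can be sketched in a few lines. This example assumes a simple z-score test against recent history, which is only one of many possible anomaly detection methods; managed monitoring services use their own algorithms. The function names and the default threshold are illustrative.

```python
from statistics import mean, stdev


def threshold_alert(value, threshold):
    """Fire when a metric exceeds a fixed, predefined threshold."""
    return value > threshold


def is_anomaly(history, current, z_threshold=3.0):
    """Flag `current` if it deviates strongly from the recent history.

    Uses a z-score: the distance from the historical mean, measured in
    standard deviations. Needs at least two historical data points.
    """
    if len(history) < 2:
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > z_threshold


if __name__ == "__main__":
    invocations_per_minute = [100, 110, 95, 105, 98, 102]
    print(is_anomaly(invocations_per_minute, 500))  # sudden spike
    print(threshold_alert(1200, 1000))              # duration in ms over limit
```

In practice the boolean result would be wired to a notification channel, and the history window would be chosen to match the workload's normal daily or weekly rhythm.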

Right-Sizing Resources

Right-sizing resources is a critical aspect of serverless cost optimization, involving the precise allocation of compute resources to serverless functions to match actual workload demands. This process minimizes waste by avoiding over-provisioning, which leads to unnecessary costs, and prevents under-provisioning, which can cause performance degradation and impact user experience. The objective is to find the sweet spot where resource allocation aligns perfectly with the function’s needs, ensuring both cost efficiency and optimal performance.

Determining Optimal Resource Allocation for Serverless Functions

Determining the optimal resource allocation for serverless functions requires a data-driven approach, considering factors such as memory, CPU, and execution time. Accurate measurement of these parameters allows for informed decisions on resource allocation.

- Memory Allocation: Memory allocation significantly impacts both cost and performance. Insufficient memory can lead to function failures or increased execution times, incurring higher costs. Excess memory, conversely, results in unnecessary expenses.

  - Profiling: Employ profiling tools to monitor the memory usage of the function during different workload scenarios. These tools can identify memory leaks and inefficient code that consumes excessive memory.

  - Benchmarking: Conduct benchmark tests using various memory configurations to determine the optimal setting. Test the function’s performance with different memory values, measuring execution time and cost for each configuration.

  - Observability: Utilize monitoring tools to track the function’s memory consumption in production. Analyze metrics such as `MemoryUtilization`, `MemoryAllocated`, and `OutOfMemoryErrors` (exact metric names vary by platform and tool) to understand how the function behaves under real-world conditions.

- CPU Allocation: The CPU allocation is implicitly linked to memory allocation in many serverless platforms. However, understanding its impact is essential for optimizing performance.

  - Performance Testing: Execute performance tests with varying memory and CPU configurations to determine the optimal balance between cost and latency. Identify the configuration that meets performance requirements at the lowest cost.

  - Concurrency: Consider the function’s concurrency requirements. A function that processes multiple requests concurrently may require more CPU resources to avoid throttling.

  - Execution Time Analysis: Analyze the execution time of the function to identify bottlenecks. Long execution times may indicate a need for increased CPU allocation.

- Execution Time: Execution time directly affects cost, as serverless platforms typically charge based on the duration of function execution.

  - Code Optimization: Optimize the function’s code to reduce execution time. This includes improving algorithms, minimizing external dependencies, and using efficient programming practices.

  - Caching: Implement caching mechanisms to store frequently accessed data, reducing the need to execute computationally expensive operations repeatedly.

  - Asynchronous Operations: Use asynchronous operations to avoid blocking the function’s execution. This can significantly reduce the execution time by allowing the function to continue processing other tasks while waiting for external resources.
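The benchmarking step can be turned into a simple selection rule: among the configurations that meet the latency target, pick the one with the lowest compute cost. The sketch below assumes you have already measured average duration per memory setting; the `BenchmarkResult` type, the sample measurements, and the price are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkResult:
    memory_mb: int
    avg_duration_ms: float


def cost_per_million(result, price_per_gb_second):
    """Compute cost of one million invocations at this configuration."""
    gb_seconds = (result.memory_mb / 1024) * (result.avg_duration_ms / 1000)
    return gb_seconds * price_per_gb_second * 1_000_000


def pick_cheapest(results, max_duration_ms, price_per_gb_second):
    """Cheapest configuration that still meets the latency requirement."""
    eligible = [r for r in results if r.avg_duration_ms <= max_duration_ms]
    if not eligible:
        return None  # no configuration is fast enough
    return min(eligible, key=lambda r: cost_per_million(r, price_per_gb_second))


if __name__ == "__main__":
    measured = [
        BenchmarkResult(128, 2000),
        BenchmarkResult(512, 400),
        BenchmarkResult(1024, 250),
    ]
    best = pick_cheapest(measured, max_duration_ms=500, price_per_gb_second=0.00002)
    print(best)
```

Because more memory often shortens execution time, the cheapest setting is not necessarily the smallest one, which is why measuring each configuration matters.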

Designing a Method for Automatically Adjusting Resource Allocation Based on Workload

Automated resource adjustment, or autoscaling, is a proactive approach to serverless cost optimization. This approach dynamically adjusts resource allocation based on real-time workload demands, ensuring optimal performance and cost efficiency.

- Metrics Collection: Implement a system to collect real-time metrics, such as function invocation count, execution duration, and memory utilization. These metrics provide insights into the function’s performance and resource needs.

- Thresholds and Rules: Define thresholds and rules based on the collected metrics to trigger resource adjustments. For example, if the average execution time exceeds a predefined threshold, increase the memory allocation.

- Autoscaling Policies: Configure policies that adjust the function’s resource allocation according to the defined rules. On most platforms, concurrency is scaled by the platform itself, whereas memory (and the CPU tied to it) is typically a per-function setting, so changing it means updating the function configuration rather than scaling at runtime.

  - Horizontal Scaling: Implement horizontal scaling to increase concurrency. If the invocation count exceeds a threshold, scale up the number of concurrent function instances.

  - Vertical Scaling: Implement vertical scaling to adjust memory and CPU. If memory utilization exceeds a threshold, increase the memory allocation.

- Testing and Validation: Rigorously test the autoscaling policies to ensure they function correctly and do not introduce unintended consequences. Monitor the function’s performance and cost after implementing autoscaling to validate its effectiveness.

- Integration with Cloud Provider Tools: Integrate the autoscaling mechanism with the serverless platform’s native autoscaling features. Most cloud providers offer built-in autoscaling capabilities for serverless functions, simplifying the implementation and management of autoscaling policies.
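The thresholds-and-rules step can be sketched as a small function that turns observed memory utilization into a recommended setting. This is an illustrative sketch only: the list of allowed memory sizes and the 85% and 40% thresholds are assumptions, and the function only produces a recommendation. Applying it would go through the platform's own configuration tooling.

```python
# Hypothetical set of memory sizes the platform allows, in MB.
MEMORY_STEPS_MB = [128, 256, 512, 1024, 2048]


def recommend_memory(current_mb, peak_used_mb, high=0.85, low=0.40,
                     steps=MEMORY_STEPS_MB):
    """Suggest the next memory setting based on peak utilization.

    Steps up one size when peak utilization exceeds `high`, steps down one
    size when it falls below `low`, and otherwise keeps the current value.
    `current_mb` must be one of the values in `steps`.
    """
    utilization = peak_used_mb / current_mb
    index = steps.index(current_mb)
    if utilization > high and index < len(steps) - 1:
        return steps[index + 1]
    if utilization < low and index > 0:
        return steps[index - 1]
    return current_mb


if __name__ == "__main__":
    print(recommend_memory(512, 480))  # close to the limit
    print(recommend_memory(512, 100))  # heavily over-provisioned
```

Moving one step at a time and leaving a gap between the two thresholds helps avoid oscillating between settings on noisy data.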

Comparison of Costs Associated with Different Resource Configurations

Comparing costs across different resource configurations requires a structured approach, including benchmarking, detailed cost analysis, and the use of tools to estimate and track expenses. The following table illustrates a hypothetical comparison, demonstrating the cost implications of different memory allocations for a serverless function. This table is illustrative and costs will vary depending on the cloud provider, region, and other factors.

| Configuration | Memory (MB) | CPU | Cost per Million Invocations (USD) |
|---|---|---|---|
| Low Memory | 128 | 0.2 vCPU | $0.20 |
| Medium Memory | 512 | 1 vCPU | $0.50 |
| High Memory | 1024 | 2 vCPU | $1.00 |
| Optimal Configuration | 512 | 1 vCPU | $0.50 |

Notes on the table: The “Cost per Million Invocations” is a simplified representation. Actual costs depend on factors like execution time, number of invocations, and the specific pricing model of the serverless provider.

Code Optimization for Serverless Costs

Optimizing the code within serverless functions is paramount for minimizing execution time and, consequently, reducing associated costs. Efficient code execution directly translates to lower resource consumption, fewer invocations, and ultimately, a more cost-effective serverless architecture. This section details key strategies for achieving this efficiency, including profiling techniques and practical code optimizations.

Guidelines for Writing Efficient Serverless Code

Adhering to specific coding practices can significantly improve the performance and cost-effectiveness of serverless functions. These guidelines focus on minimizing execution time, reducing resource usage, and optimizing data processing.

- Minimize Dependencies: Reduce the number and size of external libraries and dependencies. Larger dependencies increase deployment package size, leading to longer cold start times and increased storage costs. Use only essential libraries and consider alternative, lightweight implementations where possible. For instance, when manipulating JSON data, utilize built-in language features or minimal libraries instead of heavy-duty JSON processing libraries.

- Optimize Data Serialization and Deserialization: Choose efficient serialization formats such as Protocol Buffers or MessagePack over JSON when possible, especially for high-volume data transfer. These formats are typically more compact and faster to serialize and deserialize, reducing data transfer size and execution time.

- Implement Efficient Data Processing: Avoid unnecessary data processing. Filter and transform data as early as possible in the function’s execution flow. Leverage built-in language features and optimized data structures to minimize computational complexity. For example, use array methods like `map`, `filter`, and `reduce` judiciously to avoid creating unnecessary intermediate data structures.

- Reuse Connections: Establish and reuse database connections, API client connections, and other external resource connections. Creating a new connection for each function invocation incurs overhead. Implement connection pooling or connection caching to reduce this overhead.

- Handle Errors Gracefully: Implement robust error handling to prevent unexpected function terminations, which can lead to incomplete operations and potentially wasted resources. Log errors thoroughly to facilitate debugging and performance analysis. Use try-catch blocks and proper exception handling mechanisms.

- Use Asynchronous Operations: Whenever possible, offload long-running tasks to asynchronous processes, such as message queues or event buses. This allows the function to return quickly, reducing its execution time and preventing it from blocking the processing of other requests.

- Optimize Loops and Conditional Statements: Minimize the number of iterations in loops and the complexity of conditional statements. Optimize the logic within these structures to reduce execution time. Consider using efficient algorithms and data structures to improve performance. For instance, replacing a long chain of `if-else` comparisons with a `switch` statement or a lookup table can help in hot paths, although the gain depends on the language and runtime, so measure before and after.

- Reduce Cold Start Times: Minimize the size of the deployment package and optimize function initialization code to reduce cold start times. Cold starts are a significant contributor to latency and can increase costs. Pre-warm functions if possible.
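The connection-reuse guideline is commonly implemented by creating the client once at module scope and reusing it across warm invocations. The sketch below uses an in-memory SQLite connection as a stand-in for a real database client, and the `handler(event, context)` signature follows a common FaaS convention. Both are assumptions for illustration.

```python
import sqlite3

# Module-level state persists between invocations while the execution
# environment stays warm, so the connection is created at most once.
_connection = None


def get_connection():
    """Create the connection lazily and reuse it on later calls."""
    global _connection
    if _connection is None:
        _connection = sqlite3.connect(":memory:")  # stand-in for a real DB
    return _connection


def handler(event, context=None):
    """Example handler that reuses the shared connection."""
    conn = get_connection()
    (value,) = conn.execute("SELECT ? + 1", (event["n"],)).fetchone()
    return {"result": value}


if __name__ == "__main__":
    print(handler({"n": 1}))
    print(handler({"n": 41}))  # second call reuses the same connection
```

With a real database, the shared connection should also be checked for staleness, since an idle environment may hold a connection the server has already closed.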

Profiling Serverless Functions to Identify Performance Bottlenecks

Profiling serverless functions is crucial for identifying performance bottlenecks and areas for optimization. Profiling tools provide insights into function execution time, resource consumption, and code-level performance issues.

- Utilize Cloud Provider Profiling Tools: Most cloud providers offer built-in profiling tools for serverless functions. These tools provide detailed performance metrics, including execution time, memory usage, and CPU utilization. For example, AWS X-Ray, Google Cloud Profiler, and Azure Application Insights offer comprehensive profiling capabilities.

- Instrument Code with Logging and Metrics: Implement detailed logging and custom metrics to track function performance. Log the start and end times of critical operations, along with the resource usage. Use custom metrics to track the frequency and duration of specific operations. This approach provides valuable insights into the function’s behavior.

- Use Tracing Tools: Employ distributed tracing tools to monitor the execution flow across multiple services and functions. Tracing tools, such as Jaeger or Zipkin, help identify performance bottlenecks in distributed systems. This enables identifying issues that are difficult to spot within a single function’s execution.

- Analyze Function Logs: Regularly analyze function logs to identify errors, slow operations, and unexpected behavior. Logs provide valuable information about the function’s execution, including the time spent in various code sections.

- Employ Performance Testing: Conduct performance tests to simulate real-world workloads and identify performance issues under load. This involves testing the function with different input data and concurrency levels to assess its performance characteristics. Tools like JMeter or Gatling can be used for performance testing.
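Instrumenting code with timing logs, as suggested above, can be done with a small context manager. This is a minimal sketch using only the standard library; the `timed` helper and the optional `sink` dictionary are illustrative names, and a real setup would forward the measurements to the provider's metrics service instead.

```python
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("timing")


@contextmanager
def timed(operation, sink=None):
    """Log how long the wrapped block takes, in milliseconds.

    If `sink` (a dict) is given, durations are also collected per operation
    so they can be aggregated or published as custom metrics later.
    """
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("operation=%s duration_ms=%.2f", operation, elapsed_ms)
        if sink is not None:
            sink.setdefault(operation, []).append(elapsed_ms)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    durations = {}
    with timed("parse_input", durations):
        sum(range(100_000))
    print(durations)
```

Wrapping only the critical operations keeps log volume, and therefore logging cost, under control.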

Code Optimizations That Minimize Data Processed

Reducing the amount of data processed by a serverless function directly translates to reduced execution time and costs. Several code optimization techniques focus on minimizing data processing.

- Implement Data Filtering at the Source: Filter data at the source, such as a database or API, to reduce the amount of data retrieved and processed by the function. Use query parameters and filtering mechanisms to retrieve only the necessary data. For instance, if querying a database, use `WHERE` clauses to filter data before it is returned.

- Use Pagination: Implement pagination to retrieve data in smaller chunks, rather than retrieving the entire dataset at once. This reduces the amount of data transferred and processed in each function invocation.

- Optimize Data Transformation: Perform data transformations efficiently to minimize the amount of processing required. Use optimized data structures and algorithms to perform data transformations.

- Lazy Loading: Load data only when it is needed. Avoid loading unnecessary data upfront. Implement lazy loading techniques to improve performance.

- Caching: Implement caching mechanisms to store frequently accessed data, reducing the need to retrieve data from external sources repeatedly. Use caching libraries or services, such as Redis or Memcached, to store cached data.

- Data Compression: Compress data before transferring it to reduce data transfer size and execution time. Use compression algorithms, such as Gzip or Brotli, to compress data.

- Reduce Data Redundancy: Avoid storing or processing redundant data. Identify and eliminate unnecessary data duplication to reduce the amount of data processed.

- Batch Processing: Process data in batches to reduce the overhead of individual function invocations. Combine multiple data operations into a single function invocation.
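Batch processing, the last technique in the list, can be sketched with a small generator that groups records into fixed-size chunks so that each chunk is handled in one call. The helper name `batched` and the batch size are illustrative choices.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield successive lists of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


if __name__ == "__main__":
    records = range(7)
    for batch in batched(records, 3):
        # One downstream call (for example, one bulk write) per batch.
        print(batch)
```

Because the generator consumes its input lazily, it also works with paginated sources without loading the whole dataset into memory.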

Event-Driven Architecture and Cost Implications

Event-driven architectures (EDAs) are a cornerstone of modern serverless applications, enabling highly scalable and responsive systems. However, the inherent complexity of EDAs can introduce significant cost implications if not carefully managed. The asynchronous nature of event processing, coupled with the pay-per-use pricing models of serverless platforms, demands a strategic approach to cost optimization. Understanding the nuances of event handling and its impact on resource consumption is crucial for controlling costs in serverless environments.

Event-Driven Architecture Impact on Serverless Costs

EDAs impact serverless costs through several key mechanisms. Each event trigger, processing step, and integration point can incur costs based on the number of invocations, execution time, and resources consumed. This necessitates a thorough understanding of the event flow and its associated costs. The primary cost drivers within an EDA include:

- Event Ingestion and Storage: The cost of storing and managing events before they are processed. This can include the cost of message queues (e.g., AWS SQS, Azure Queue Storage), event buses (e.g., AWS EventBridge, Azure Event Grid), or other storage mechanisms. The volume of events and the duration for which they are stored directly impact costs.

- Function Invocations: The cost of executing serverless functions triggered by events. This includes the cost of compute time, memory allocation, and any associated dependencies. The frequency of event triggers and the complexity of function logic directly influence these costs.

- Data Transfer: The cost of transferring data between services and components within the EDA. This can include data transfer costs between storage services, message queues, and serverless functions. The volume of data transferred and the geographical locations involved can significantly impact costs.

- Monitoring and Logging: The cost of collecting, storing, and analyzing logs and metrics related to event processing. This includes the cost of using logging services (e.g., AWS CloudWatch Logs, Azure Monitor Logs) and monitoring tools. The level of detail captured and the duration for which logs are retained contribute to these costs.

- Integration Costs: The cost of integrating with external services or APIs. Serverless functions often interact with other services, and the cost of these interactions, including API calls and data transfer, needs to be considered.

Strategies for Managing Event Processing Costs in Serverless Systems

Managing the cost of event processing requires a multi-faceted approach that focuses on optimizing event flow, resource utilization, and monitoring. Key strategies include:

- Optimize Event Filtering and Routing: Implement efficient event filtering and routing mechanisms to minimize the number of unnecessary function invocations. Utilize event filtering capabilities provided by event sources and event buses to ensure that only relevant events trigger functions. For example, AWS EventBridge filtering rules can route events to specific targets based on event content.

- Right-Size Function Resources: Carefully allocate the appropriate amount of memory and compute resources to serverless functions. Over-provisioning resources leads to higher costs, while under-provisioning can result in performance bottlenecks and increased execution time. Performance testing and monitoring are essential for determining the optimal resource allocation.

- Implement Batch Processing: When feasible, batch multiple events together for processing by a single function invocation. This reduces the number of function invocations and can lead to significant cost savings, especially for high-volume event streams. For instance, AWS Lambda’s batch processing capabilities with SQS allow multiple messages to be processed in a single invocation.

- Optimize Function Code: Write efficient function code that minimizes execution time and resource consumption. Avoid unnecessary dependencies, optimize database queries, and use appropriate data structures. Code profiling and performance testing are crucial for identifying areas for optimization.

- Leverage Caching: Implement caching mechanisms to reduce the number of external service calls and database queries. Caching frequently accessed data can significantly improve performance and reduce costs. For example, a service like Amazon ElastiCache or Azure Cache for Redis can cache the results of database queries.

- Monitor and Analyze Costs: Continuously monitor and analyze event processing costs using monitoring tools and dashboards. Identify cost drivers, track performance metrics, and set up alerts for anomalies. Tools such as AWS Cost Explorer or Azure Cost Management + Billing can track costs and identify areas for optimization.

- Implement Throttling and Rate Limiting: Implement throttling and rate-limiting mechanisms to control the rate of event processing and prevent resource exhaustion. This helps to manage costs during peak periods and prevent unexpected spikes in usage. AWS Lambda’s concurrency limits and API Gateway’s rate limiting features are examples of such controls.
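To illustrate the first strategy, the sketch below filters events by content before any handler is invoked. The pattern format (a dictionary mapping field names to lists of accepted values, with nested dictionaries for nested fields) is a simplified, hypothetical one invented for this example. It is not the rule syntax of any particular event bus.

```python
def matches(event, pattern):
    """Return True if every field in `pattern` has an accepted value in `event`."""
    for key, allowed in pattern.items():
        if isinstance(allowed, dict):
            # Nested pattern: the event must contain a matching nested object.
            nested = event.get(key)
            if not isinstance(nested, dict) or not matches(nested, allowed):
                return False
        elif event.get(key) not in allowed:
            return False
    return True


def route(events, pattern, handler):
    """Invoke `handler` only for matching events; return the invocation count."""
    invoked = 0
    for event in events:
        if matches(event, pattern):
            handler(event)
            invoked += 1
    return invoked


if __name__ == "__main__":
    pattern = {"type": ["OrderShipped"], "detail": {"region": ["eu", "us"]}}
    events = [
        {"type": "OrderShipped", "detail": {"region": "eu"}},
        {"type": "OrderCancelled", "detail": {"region": "eu"}},
        {"type": "OrderShipped", "detail": {"region": "ap"}},
    ]
    print(route(events, pattern, print))
```

When the event bus performs this filtering itself, non-matching events never reach the function, so they generate no invocation charges at all.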

Best Practices for Designing Event-Driven Systems to Minimize Unnecessary Invocations

Designing event-driven systems with cost efficiency in mind requires careful consideration of the event flow, function design, and infrastructure configuration. Minimizing unnecessary function invocations is a critical goal. Best practices include:

- Design Granular Events: Define events that are specific and focused on a single action or state change. Avoid creating overly broad events that trigger unnecessary processing steps. For example, instead of a generic “OrderUpdated” event, create more specific events like “OrderShipped,” “OrderCancelled,” or “OrderPaymentReceived.”

- Implement Idempotency: Design functions to be idempotent, meaning that they can be executed multiple times with the same input without unintended side effects. This mitigates the impact of duplicate event deliveries and retries, because the effect of an event is applied only once even if the event is delivered more than once.

- Use Dead-Letter Queues (DLQs): Configure DLQs to handle events that fail to be processed. This allows for the isolation and analysis of failed events, preventing them from continuously triggering function invocations and incurring unnecessary costs.

- Implement Circuit Breakers: Implement circuit breakers to prevent functions from repeatedly calling failing external services. If a service is unavailable or experiencing errors, the circuit breaker can temporarily stop the function from making calls, preventing unnecessary retries and cost accumulation.

- Utilize Event Aggregation: When appropriate, aggregate multiple events into a single event before processing. This can reduce the number of function invocations and simplify the processing logic. For example, aggregating multiple “OrderLineItemUpdated” events into a single “OrderUpdated” event.

- Employ Eventual Consistency: Design systems that can tolerate eventual consistency. Avoid triggering functions immediately after every event; instead, schedule processing based on time intervals or other criteria to reduce the frequency of invocations.

- Leverage Serverless Event Bus Features: Utilize features offered by serverless event bus services like AWS EventBridge and Azure Event Grid, such as filtering, transformation, and retry policies, to optimize event processing and reduce costs.
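As one illustration of the idempotency practice above, the sketch below skips events whose ID has already been handled. The names `handle_event`, `processed_ids`, and the `id` field are assumptions made for this example; in a real system the in-memory set would be replaced by a durable store with an atomic check-and-write.

```python
# Stand-in for a durable store (for example a database table keyed by event ID).
processed_ids = set()


def handle_event(event, apply_change):
    """Apply `apply_change` at most once per event ID."""
    event_id = event["id"]
    if event_id in processed_ids:
        return "skipped"              # duplicate delivery: no repeated work
    apply_change(event)
    processed_ids.add(event_id)       # record only after the change succeeded
    return "processed"
```

Recording the ID after the change succeeds means a failed attempt can be retried, at the cost of requiring `apply_change` itself to tolerate a retry.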

Choosing the Right Serverless Services

Selecting the appropriate serverless services is crucial for optimizing costs. The choice impacts not only the direct expenses associated with compute resources but also influences development time, operational overhead, and the overall efficiency of the application. A strategic selection process involves understanding the nuances of various serverless offerings, evaluating their strengths and weaknesses in the context of specific use cases, and carefully considering the trade-offs between cost, performance, and complexity.

Cost Differences Between Serverless Compute Options

The cost structures of serverless compute options vary significantly across different cloud providers. These differences arise from factors such as pricing models (pay-per-use vs. reserved instances), memory allocation granularity, and the inclusion of free tiers. Analyzing these distinctions is essential for making informed decisions.

- AWS Lambda: AWS Lambda’s pricing is based on the number of requests, the duration of the function execution (measured in milliseconds), and the amount of memory allocated. The free tier offers a certain number of free requests and compute time per month. Lambda’s cost is highly dependent on the efficiency of the code, as optimized functions consume fewer resources and thus incur lower charges.

Consider the example of an image processing function: if optimized to process images faster and with less memory, the Lambda function will cost less compared to an unoptimized version.

- Azure Functions: Azure Functions pricing also follows a pay-per-use model, with charges based on the number of executions and the resource consumption (memory and execution time). Azure Functions offers a consumption plan (pay-per-use) and a premium plan, providing more control over the environment and scaling. Azure Functions also provides a free tier, but the specifics and limitations can vary. For instance, a simple HTTP trigger function that processes small amounts of data will likely be cheaper on the consumption plan, while a function with high traffic and stringent performance requirements might benefit from the premium plan.

- Google Cloud Functions: Google Cloud Functions follows a pay-per-use model, with pricing based on the number of invocations, the execution time, and the memory allocated. Google Cloud Functions provides a free tier that includes a certain number of invocations, compute time, and network egress per month. Google also offers a “Cloud Functions (2nd gen)” with improved performance and pricing models. A web application backend using Cloud Functions for API endpoints will incur costs based on the number of API calls, execution time, and memory usage.

The following table illustrates a simplified comparison of the cost factors, assuming a generic function execution. Note that the actual pricing is subject to change and varies based on region, function configuration, and usage patterns.

| Feature | AWS Lambda | Azure Functions | Google Cloud Functions |
|---|---|---|---|
| Pricing Model | Pay-per-use (requests, duration, memory) | Pay-per-use (executions, memory, execution time) | Pay-per-use (invocations, execution time, memory) |
| Free Tier | Yes (requests, compute time) | Yes (executions, compute time) | Yes (invocations, compute time, network egress) |
| Memory Allocation Granularity | 1 MB increments (128 MB to 10,240 MB) | Varies depending on the plan | Predefined memory tiers (1st gen) |

Factors for Selecting Serverless Services for Different Use Cases

The selection of serverless services should be driven by the specific requirements of the use case. Several factors must be considered to make the most cost-effective and performant choice.

- Function Complexity and Requirements: The complexity of the task and the resource requirements significantly influence service selection. Simple tasks with low resource needs might be best suited for basic serverless functions, while complex operations could necessitate services with greater capabilities and control. For example, a simple form submission process might be efficiently handled by a basic function, whereas a computationally intensive data analysis job may require a service with more memory and longer execution times.

- Traffic Patterns and Scalability Needs: The anticipated traffic volume and the need for scalability are crucial. Serverless platforms automatically scale based on demand, but the cost implications of scaling vary. Services with more granular scaling options can be more cost-effective for variable workloads. A website with unpredictable traffic spikes will benefit from a service that can quickly scale up and down to match demand, avoiding over-provisioning and associated costs.

- Integration Requirements: The ease of integration with other services and systems within the cloud environment is important. Some services offer seamless integration with other components, simplifying development and reducing operational overhead. Choosing a service that integrates well with existing databases, message queues, and other cloud services will streamline the application architecture.

- Development and Operational Considerations: The development experience, available tools, and operational aspects such as monitoring and logging capabilities should also be considered. Services with robust tooling and comprehensive monitoring features can improve developer productivity and simplify troubleshooting. The choice of service will also depend on the skills and expertise of the development team.

Trade-offs Between Cost, Performance, and Complexity

Choosing serverless services involves trade-offs between cost, performance, and complexity. A careful evaluation of these factors is essential to achieve optimal results.

- Cost vs. Performance: Optimizing for performance often leads to increased costs, especially when using serverless services. Increasing the memory allocation or using a more powerful instance can improve execution speed, but at a higher price. A trade-off must be made to balance the desired performance with the acceptable cost. For example, a real-time video processing service might benefit from higher memory allocation for faster frame processing, even if it increases the cost per invocation.

- Cost vs. Complexity: Some serverless services provide more features and flexibility, which can increase complexity. Simpler services might be cheaper but may not offer the same level of control or the ability to handle complex scenarios. Selecting a service that strikes the right balance between cost and complexity is important for the specific application needs. For instance, using a managed service like a database can simplify development and reduce operational overhead compared to managing a database instance, even if the managed service is slightly more expensive.

- Performance vs. Complexity: Services with more features and control often offer greater performance potential, but at the cost of increased complexity. The development team must evaluate the trade-offs between ease of development and the ability to optimize performance. A service with built-in caching mechanisms may improve performance but could also introduce additional complexity in the configuration and management of the cache.

The selection of serverless services should always be driven by a comprehensive understanding of the application’s requirements, the available options, and the trade-offs involved. This will ensure that the chosen services meet the performance, cost, and complexity goals of the project.

Leveraging Serverless Vendor Pricing Models

Understanding and effectively utilizing serverless vendor pricing models is crucial for optimizing costs. Cloud providers offer a variety of pricing structures, discounts, and tools that, when leveraged strategically, can significantly reduce operational expenses. This section explores these models, provides strategies for maximizing savings, and demonstrates the use of pricing calculators.

Serverless Pricing Models

Serverless pricing models are designed to align costs with actual resource consumption. These models typically involve a pay-per-use structure, but can also include options for reserved capacity or discounted pricing based on usage volume.

- Pay-Per-Use: This is the most common model, where users are charged only for the compute time, memory, and other resources consumed by their serverless functions. Pricing is typically based on factors like:

- Function Invocations: The number of times a function is executed.

- Compute Time: The duration of function execution, often measured in milliseconds.

- Memory Allocation: The amount of memory allocated to the function.

- Requests: The number of requests processed by services like API Gateway.

- Data Transfer: The amount of data transferred in and out of the service.

This model provides flexibility and cost-efficiency for workloads with fluctuating demands, as you only pay for what you use.

- Pay-Per-Request: Some services, such as API Gateway, charge based on the number of requests processed. This is a straightforward model suitable for API-driven applications.

- Reserved Instances (for some services): While not universally available for all serverless services, some vendors offer reserved capacity options. This model allows users to pre-purchase a certain amount of compute capacity for a specific period (e.g., one or three years) at a discounted rate. This is beneficial for workloads with predictable and consistent resource requirements. The discount offered can be substantial, but it requires accurate forecasting of resource needs.

- Provisioned Concurrency (for specific services): Certain services, like AWS Lambda, allow users to provision a specific number of concurrent function executions. This model helps to minimize cold start latency and ensure that a certain level of performance is always available. It’s typically charged based on the amount of provisioned concurrency and the duration it is provisioned.

Strategies for Vendor-Specific Discounts and Cost-Saving Programs

Cloud providers frequently offer various programs and discounts to help customers optimize their serverless spending. Proactive engagement with these programs can lead to significant cost reductions.

- Free Tiers: Many cloud providers offer free tiers for their serverless services, allowing users to experiment and run small-scale applications without incurring charges. These tiers often include a certain number of function invocations, compute time, or data transfers per month.

- Committed Use Discounts: Cloud providers may offer discounts for users who commit to a certain level of usage or spend over a defined period. These discounts can be significant, but they require a good understanding of your application’s resource needs and growth projections.

- Volume-Based Discounts: As your usage increases, cloud providers may offer volume-based discounts. This is particularly beneficial for applications with high traffic or data processing demands.

- Savings Plans: AWS Savings Plans, for example, offer a flexible pricing model that allows you to commit to a consistent amount of usage (measured in dollars per hour) for compute services in exchange for discounted rates. Of the available plan types, Compute Savings Plans cover AWS Lambda usage, whereas EC2 Instance Savings Plans apply only to EC2.

- Partner Programs: Cloud providers often collaborate with partners to offer discounts or special pricing for their services. Exploring these partnerships can uncover additional cost-saving opportunities.

- Negotiation: For large enterprises with substantial cloud spending, negotiating pricing directly with the cloud provider is a viable option. This can result in custom pricing agreements tailored to your specific needs.

- Cost Optimization Tools: Utilize the cloud provider’s cost management tools (e.g., AWS Cost Explorer, Azure Cost Management) to identify areas for optimization, such as idle resources or inefficient function configurations.

Using Pricing Calculators to Estimate Serverless Costs

Cloud providers offer pricing calculators that allow users to estimate the cost of their serverless applications before deployment. These tools are invaluable for planning, budgeting, and comparing different service configurations.

- Input Parameters: Pricing calculators typically require users to input parameters such as:

- Function Invocations per Month: The expected number of times your functions will be executed.

- Average Execution Time: The average duration of function executions.

- Memory Allocation: The amount of memory allocated to your functions.

- Number of Requests (for API Gateway): The expected number of API requests.

- Data Transfer: The expected amount of data transferred.

- Region: The geographic region where your application will be deployed (pricing varies by region).

- Output: The calculator generates an estimated monthly cost based on the provided inputs. It often breaks down the cost by service and component, providing a detailed view of your expected spending.

- Scenario Analysis: Use the calculator to experiment with different configurations and scenarios. For example, you can compare the cost of different memory allocations for your functions or evaluate the impact of optimizing your code to reduce execution time.

- Regular Updates: Cloud providers frequently update their pricing models and calculators. Ensure you are using the latest version of the calculator to obtain the most accurate estimates.

- Example: Consider an application using AWS Lambda, API Gateway, and DynamoDB. Using the AWS Pricing Calculator, you input the estimated number of function invocations, average execution time, memory allocation, number of API requests, and data transfer. The calculator then provides an estimated monthly cost for each service, allowing you to analyze the overall cost and make informed decisions. If the estimated cost is too high, you can adjust the parameters (e.g., reduce execution time through code optimization or optimize API calls to reduce the number of requests) and recalculate the cost to find a more cost-effective configuration.
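The scenario analysis described above can also be approximated in a few lines of code. This is a rough sketch: the function name is hypothetical, the default rates are illustrative assumptions rather than quoted prices, and the free tier, API Gateway, and data transfer are ignored. Always take current rates from the provider's pricing page.

```python
def estimate_monthly_cost(invocations, avg_duration_ms, memory_mb,
                          price_per_million_requests=0.20,     # assumed rate
                          price_per_gb_second=0.0000166667):   # assumed rate
    """Estimate monthly function cost as request charges plus compute charges."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Compare a baseline with a version whose code was optimized to run faster.
baseline = estimate_monthly_cost(5_000_000, avg_duration_ms=200, memory_mb=512)
optimized = estimate_monthly_cost(5_000_000, avg_duration_ms=120, memory_mb=512)
savings = baseline - optimized
```

Sweeping `memory_mb` and `avg_duration_ms` together is useful because more memory often shortens execution time, so the cheapest configuration is not always the smallest one.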

Reducing Cold Starts

Cold starts are a significant concern in serverless environments, impacting both performance and cost. They occur when a function is invoked and no instances are readily available to handle the request. This necessitates the creation of a new container or execution environment, leading to latency and increased resource consumption. Understanding and mitigating cold starts is crucial for optimizing serverless applications.

Impact of Cold Starts on Costs and Performance

Cold starts introduce a delay in function execution, directly affecting user experience and potentially leading to timeouts. This latency can also translate to increased costs.

Cold starts increase the time a function consumes resources, as the initialization process requires CPU and memory allocation. Serverless providers typically charge based on execution time and resource usage (e.g., GB-seconds). Where initialization time is billed, which depends on the provider and the function’s configuration, longer execution times due to cold starts inflate costs.

For example, a function that typically executes in 100 milliseconds but experiences a 1-second cold start will be billed for 1.1 seconds when initialization time is billable, increasing the cost of that invocation by 1000% (11 times the warm cost).

Performance is also negatively impacted. Latency-sensitive applications, such as web APIs or real-time data processing, suffer from slow response times during cold starts. This can lead to a degraded user experience and potentially impact business metrics.
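The billing arithmetic in the 100-millisecond example can be generalized to a workload where only a fraction of invocations are cold. The sketch below assumes that cold-start time is billed like execution time, which is not true for every provider or configuration; the function name and parameters are illustrative.

```python
def billed_increase_pct(warm_ms, cold_start_ms, cold_fraction=1.0):
    """Percentage increase in average billed duration caused by cold starts.

    cold_fraction is the share of invocations that hit a cold start (0.0 to 1.0).
    """
    average_ms = warm_ms + cold_fraction * cold_start_ms
    return (average_ms - warm_ms) / warm_ms * 100


single_cold_invocation = billed_increase_pct(100, 1000)         # every call cold
one_percent_cold = billed_increase_pct(100, 1000, cold_fraction=0.01)
```

Under these assumptions a single cold invocation costs far more than a warm one, but the average impact across a workload depends on how often cold starts actually occur.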

Methods for Minimizing Cold Start Times

Several techniques can be employed to reduce the frequency and duration of cold starts. These methods focus on keeping function instances warm and optimizing the function’s code and configuration.

Techniques to Reduce Cold Starts

- Provisioned Concurrency: Provisioned concurrency allows you to pre-initialize a specific number of function instances. These instances are always ready to serve requests, eliminating cold starts for the provisioned capacity. This is particularly useful for applications with predictable traffic patterns. However, it comes with a cost, as you pay for the provisioned capacity regardless of whether it is used. For example, an e-commerce site expecting peak traffic during a flash sale could provision a certain number of concurrent instances to ensure quick responses during the sale.

- Keep-Warm Strategies: Implement strategies to periodically invoke functions, keeping them “warm.” This can be achieved using scheduled events (e.g., an Amazon EventBridge scheduled rule, formerly CloudWatch Events) that trigger the function at regular intervals. The interval should be shorter than the period after which the platform reclaims idle instances; this period is managed by the provider and is not guaranteed, so keep-warm pings reduce cold starts rather than eliminate them. The function execution itself can be lightweight, such as a simple “ping” or “heartbeat” function.

- Increase Memory Allocation: Allocating more memory to a function can sometimes improve cold start performance. Serverless platforms allocate CPU proportionally to memory. More memory can lead to faster initialization times, particularly for functions with large dependencies or complex initialization logic. However, allocating excessive memory can also increase costs. The optimal memory allocation requires careful testing and profiling.

- Optimize Code and Dependencies: Minimize the size of your function’s deployment package. Larger packages take longer to download and initialize. Optimize your code to reduce initialization time.

- Reduce Dependencies: Only include necessary dependencies in your function’s deployment package. Analyze your dependencies and remove any unused libraries or packages.

- Lazy Loading: Initialize resources only when they are needed. Delaying the loading of large libraries or resources until the first function invocation can reduce initialization time.

- Code Profiling: Use profiling tools to identify performance bottlenecks in your code. Optimize slow code sections to improve overall function execution time.

- Use Efficient Runtime Environments: Choose the appropriate runtime environment for your function. Runtimes with heavier startup, such as Java or .NET, tend to have slower cold start times than lighter ones such as Node.js, Python, Go, or Rust. Experiment with different runtimes to determine the best option for your use case.

- Reduce Function Size: Break down large, monolithic functions into smaller, more focused functions. Smaller functions typically have faster cold start times. This approach also aligns with the principles of microservices architecture, improving maintainability and scalability.

- Configure Timeout Settings: Adjust the function’s timeout setting appropriately. A longer timeout allows more time for initialization and execution, which can mitigate issues caused by cold starts. However, setting the timeout too long can lead to unnecessary resource consumption if the function fails.

Optimizing Data Storage and Transfer Costs

Data storage and transfer costs represent a significant portion of the operational expenditure in serverless architectures. Efficiently managing these costs is crucial for maximizing the benefits of serverless computing, which includes scalability and pay-per-use pricing. This section delves into strategies for optimizing data storage and transfer costs within serverless applications, focusing on cost-effective solutions and architectural best practices.

Optimizing Data Storage Costs in Serverless Applications

Optimizing data storage costs requires careful consideration of data access patterns, storage tiers, and data lifecycle management. Selecting the appropriate storage solution and implementing effective data management strategies are essential for cost efficiency.

- Choosing the Right Storage Tier: Serverless applications often interact with object storage services like Amazon S3, Azure Blob Storage, or Google Cloud Storage. These services offer different storage tiers, each with varying costs based on access frequency and data redundancy requirements.

For example, Amazon S3 offers Standard, Standard-IA (Infrequent Access), Glacier, and Glacier Deep Archive tiers.

- Standard: Suitable for frequently accessed data. It provides high availability and performance but is the most expensive tier.

- Standard-IA: Ideal for infrequently accessed data that needs to be accessed rapidly when required. Offers lower storage costs compared to Standard, with a retrieval cost.

- Glacier: Designed for archival data with infrequent access. Offers much lower storage costs than Standard-IA but has longer retrieval times (hours).

- Glacier Deep Archive: The lowest-cost storage tier, suitable for long-term data archiving, with the longest retrieval times.

The choice of tier should align with the data access patterns. For instance, log files that are rarely accessed can be stored in Glacier or Glacier Deep Archive, while frequently accessed application data should reside in Standard or Standard-IA.

- Data Compression: Compressing data before storing it can significantly reduce storage costs. This is especially effective for text-based data, logs, and large files. Compression algorithms, such as gzip or Brotli, can be applied. For example, if a log file is 100MB before compression, it might be reduced to 20MB after compression. This results in a substantial reduction in storage costs.

The trade-off is the computational cost of compression and decompression, which should be considered.

- Data Lifecycle Management: Implementing a data lifecycle management policy is critical for automating the movement of data between different storage tiers. This ensures that data is stored in the most cost-effective tier as it ages and is accessed less often. For example, a policy could be configured to automatically move objects from Standard to Standard-IA 30 days after creation and then to Glacier after a year.

This automation reduces the need for manual intervention and optimizes storage costs.

- Data Deduplication: Deduplication involves identifying and eliminating redundant data. This is particularly useful for applications that store multiple copies of the same data. Data deduplication techniques, either built into the storage service or implemented at the application level, can significantly reduce storage requirements and costs.
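As a small illustration of the compression point above, the following sketch uses Python's standard `gzip` module on a synthetic log. The sample text and helper names are made up for the example, and real compression ratios depend heavily on the data.

```python
import gzip


def compress_log(text):
    """Compress log text before writing it to object storage."""
    return gzip.compress(text.encode("utf-8"))


def decompress_log(blob):
    """Restore the original text after reading it back."""
    return gzip.decompress(blob).decode("utf-8")


# Synthetic, highly repetitive log data; real logs usually compress less well.
sample = "2024-01-01T00:00:00Z INFO request handled status=200\n" * 2000
blob = compress_log(sample)
ratio = len(blob) / len(sample.encode("utf-8"))   # compressed size / original size
```

Measuring `ratio` on a representative sample is a quick way to judge whether the storage savings justify the extra compute time spent compressing and decompressing.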

Strategies for Reducing Data Transfer Costs in Serverless Environments

Data transfer costs arise when data moves in and out of a serverless application, including data transferred between different cloud regions, across the internet, and, with some providers, between availability zones in the same region. Optimizing data transfer involves minimizing data volume, utilizing cost-effective network paths, and leveraging caching mechanisms.

- Data Minimization: Reducing the amount of data transferred is a primary strategy for cost optimization. This can be achieved through several techniques:

- Efficient Data Serialization: Use efficient data serialization formats, such as Protocol Buffers or Avro, instead of less efficient formats like JSON, particularly for transferring large datasets.

- Data Filtering: Filter data at the source to only transfer the necessary information. For example, in a database query, only select the required columns instead of retrieving the entire row.

- Pagination: Implement pagination when retrieving large datasets to avoid transferring the entire dataset at once.

These methods directly reduce the volume of data transferred, thus lowering costs.

- Using Content Delivery Networks (CDNs): CDNs cache content closer to users, reducing the distance data needs to travel and minimizing data transfer costs, especially for static content such as images, videos, and JavaScript files. For example, if users worldwide access images stored in a central S3 bucket, a CDN like Amazon CloudFront can cache these images at edge locations globally. This reduces the load on the origin server and decreases the data transfer costs from the origin to the users.

- Optimizing Network Paths: Choose network paths that minimize data transfer costs.

- Same Region Transfers: Transfer data within the same region whenever possible, as inter-region data transfer is typically more expensive.

- Private Endpoints: Utilize private endpoints or VPC endpoints to keep data transfer within the cloud provider’s network, avoiding the public internet.

- Direct Connect: For high-volume data transfers, consider using dedicated network connections like AWS Direct Connect or Azure ExpressRoute to reduce costs and improve performance.

- Data Compression for Transfer: Similar to storage optimization, compressing data before transfer can reduce the amount of data transmitted, leading to cost savings. This is particularly effective for transferring text-based data and large files. Use compression algorithms like gzip or Brotli before transmitting data.
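The data filtering and pagination techniques listed under data minimization can be sketched generically. The `project` and `paginate` helpers and the order records are hypothetical; in practice the same effect is usually achieved with the data store's own projection and pagination features.

```python
def project(record, fields):
    """Keep only the fields the caller actually needs."""
    return {key: record[key] for key in fields if key in record}


def paginate(items, page_size):
    """Yield the items in fixed-size pages instead of one large response."""
    for start in range(0, len(items), page_size):
        yield items[start:start + page_size]


orders = [
    {"id": i, "status": "shipped", "notes": "x" * 500, "total": 10 * i}
    for i in range(5)
]
slim_orders = [project(order, ["id", "total"]) for order in orders]
pages = list(paginate(slim_orders, page_size=2))
```

Dropping the large `notes` field before transfer reduces the payload far more than pagination alone, which mainly limits how much is sent per request.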

Selecting Cost-Effective Data Storage Solutions for Serverless Applications

Choosing the right data storage solution is crucial for balancing cost, performance, and scalability. The selection process should consider factors such as data access patterns, data volume, and the need for data durability and availability.

- Object Storage: Object storage services like Amazon S3, Azure Blob Storage, and Google Cloud Storage are often the most cost-effective solutions for storing large amounts of unstructured data, such as images, videos, and documents. Their pay-per-use pricing models and tiered storage options make them suitable for various data access patterns.

For example, an application that stores user-generated content can use S3 to store images and videos. The tiered storage options (Standard, Standard-IA, Glacier) allow for cost optimization based on access frequency.

- Database Services: For structured data, database services like Amazon DynamoDB, Azure Cosmos DB, and Google Cloud Datastore offer scalable and cost-effective solutions. These services are designed to handle high volumes of data and provide features like automatic scaling and data replication.

For example, an e-commerce application can use DynamoDB to store product information, customer data, and order details. DynamoDB’s pay-per-request pricing model allows the application to scale resources based on demand, optimizing costs.

- Caching Services: Implementing caching services like Amazon ElastiCache, Azure Cache for Redis, or Google Cloud Memorystore can reduce data transfer costs by caching frequently accessed data. This reduces the load on the primary data store and speeds up application performance.

For example, an application that frequently accesses product catalogs can use ElastiCache to cache product data. This reduces the number of requests to the database and decreases data transfer costs.

- Analyzing Pricing Models: Evaluate the pricing models of different storage services. Pay close attention to storage costs, data transfer costs, request costs, and any associated fees. Use cost calculators provided by cloud providers to estimate costs based on projected usage patterns. Compare these estimations to determine the most cost-effective solution for your application’s specific requirements.
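To show how caching reduces calls to the primary data store, here is a minimal in-process time-to-live cache. It is a sketch under simplifying assumptions: `TTLCache` and `loader` are hypothetical names, and a managed cache shared across function instances would be used in place of this per-instance dictionary.

```python
import time


class TTLCache:
    """Cache values for `ttl_seconds`, reloading them from the source after expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock            # injectable so expiry can be tested
        self._entries = {}            # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]           # cache hit: no request to the data store
        value = loader(key)           # cache miss: one request to the data store
        self._entries[key] = (now + self.ttl, value)
        return value
```

A longer TTL saves more requests but serves staler data, so the value should reflect how often the underlying records change.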

Automation and Infrastructure as Code (IaC) for Cost Management

Automating cost optimization tasks and leveraging Infrastructure as Code (IaC) are crucial for efficient serverless cost management. These practices enable consistent deployments, facilitate resource management, and reduce operational overhead, leading to significant cost savings. This approach promotes a proactive and controlled environment, ensuring that serverless applications are deployed and managed with cost efficiency as a primary consideration.

Guidelines for Automating Cost Optimization Tasks

Automating cost optimization tasks ensures continuous monitoring and adjustment of serverless resources. Implementing these guidelines streamlines cost management, enabling proactive identification and mitigation of cost inefficiencies.

- Establish Automated Monitoring and Alerting: Implement automated monitoring systems to track resource utilization, function invocations, and other relevant metrics. Configure alerts to notify administrators when costs exceed predefined thresholds or when resource utilization deviates from expected patterns. For example, using AWS CloudWatch or Azure Monitor, define custom metrics based on function execution times, memory usage, or the number of invocations. Set up alerts to trigger notifications when any of these metrics surpass pre-determined limits.

This automated system allows for prompt identification of cost anomalies.

- Automate Resource Right-Sizing: Automate the process of right-sizing serverless resources based on performance data. Tools like AWS Lambda Power Tuning can analyze function performance and recommend optimal memory allocation. Automate the adjustment of memory settings based on these recommendations, using IaC templates. This ensures resources are appropriately sized to meet the workload demands.

- Automate Deployment and Configuration of Cost-Aware Policies: Integrate cost optimization best practices into the deployment pipeline. This includes configuring functions with appropriate memory settings, timeout durations, and concurrency limits. Automate the enforcement of these policies using IaC to ensure consistent application of cost-saving configurations across all deployments.

- Implement Automated Cost Reporting and Analysis: Automate the generation of cost reports and analyses to provide insights into spending patterns. Integrate cost data with business metrics to understand the cost implications of different application components. Utilize tools like AWS Cost Explorer or Azure Cost Management to generate regular reports. Automate the distribution of these reports to relevant stakeholders to facilitate data-driven decision-making.

- Automate Resource Cleanup: Implement automated processes to identify and remove unused or underutilized resources. This includes removing idle functions, unused storage, and other resources that are contributing to unnecessary costs. Utilize scripts or IaC templates to regularly scan the environment and automatically delete these resources based on pre-defined criteria.
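The alerting guideline above can be illustrated with a simple anomaly check over daily cost figures. The function name, window, and threshold are assumptions chosen for the example, and the cost series would in practice come from the provider's billing export or cost API.

```python
from statistics import mean


def find_cost_anomalies(daily_costs, window=7, threshold=1.5):
    """Return indexes of days whose cost exceeds `threshold` times the
    average of the preceding `window` days."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        baseline = mean(daily_costs[i - window:i])
        if baseline > 0 and daily_costs[i] > threshold * baseline:
            anomalies.append(i)
    return anomalies


costs = [10, 10, 10, 10, 10, 10, 10, 11, 30, 10]
spikes = find_cost_anomalies(costs)
```

A relative threshold adapts to gradual growth in spending, whereas a fixed budget alert catches absolute overruns; the two are often used together.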

Using Infrastructure as Code (IaC) to Manage Serverless Resources Cost-Effectively

IaC is a fundamental practice for managing serverless resources cost-effectively. IaC enables the definition, provisioning, and management of infrastructure through code, providing repeatability, consistency, and automation. This approach minimizes manual configuration errors and promotes best practices in resource allocation and cost control.

- Define Infrastructure as Code: Use IaC tools such as AWS CloudFormation, Terraform, or Azure Resource Manager templates to define serverless infrastructure. This allows for the creation of reproducible and version-controlled infrastructure configurations. Define all resources, including functions, APIs, databases, and storage, within these templates.

- Implement Cost-Aware Configurations: Within the IaC templates, configure serverless resources with cost-optimization in mind. Specify optimal memory settings, timeout durations, and concurrency limits for functions. Configure auto-scaling policies for resources like databases and queues to dynamically adjust capacity based on demand.

- Version Control and Collaboration: Store IaC templates in a version control system (e.g., Git) to track changes, facilitate collaboration, and enable rollbacks. This allows teams to work together on infrastructure changes and ensures that configurations can be reverted to previous states if necessary.

- Automated Deployments: Integrate IaC templates into CI/CD pipelines to automate the deployment of serverless applications. This ensures that infrastructure is provisioned consistently and efficiently with each deployment. Automate the deployment of infrastructure as part of the application release process, ensuring all resources are configured correctly from the start.

- Infrastructure Auditing and Compliance: Use IaC to enforce compliance with cost-related policies and best practices. Define rules and policies within the IaC templates to ensure that resources are configured in a cost-effective manner. Implement automated audits to verify that the deployed infrastructure complies with these policies.
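An automated audit of this kind can be sketched as a check that runs in a CI/CD pipeline before deployment. The example below assumes function settings have already been extracted from the templates into plain dictionaries. The key names (`memory_mb`, `timeout_s`, `reserved_concurrency`) and the policy limits are hypothetical and would need to match your own tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostPolicy:
    """Hypothetical organization-wide limits; tune the values to your workloads."""
    max_memory_mb: int = 1024
    max_timeout_s: int = 60
    require_concurrency_limit: bool = True


def audit_function(name, config, policy):
    """Return a list of policy violations for one function's settings."""
    violations = []
    memory = config.get("memory_mb")
    timeout = config.get("timeout_s")
    if memory is None:
        violations.append(f"{name}: memory_mb is not set")
    elif memory > policy.max_memory_mb:
        violations.append(f"{name}: memory_mb {memory} exceeds {policy.max_memory_mb}")
    if timeout is None:
        violations.append(f"{name}: timeout_s is not set")
    elif timeout > policy.max_timeout_s:
        violations.append(f"{name}: timeout_s {timeout} exceeds {policy.max_timeout_s}")
    if policy.require_concurrency_limit and config.get("reserved_concurrency") is None:
        violations.append(f"{name}: no concurrency limit configured")
    return violations


def audit_all(functions, policy):
    """Map each non-compliant function name to its list of violations."""
    report = {}
    for name, config in functions.items():
        violations = audit_function(name, config, policy)
        if violations:
            report[name] = violations
    return report


functions = {
    "resize-image": {"memory_mb": 512, "timeout_s": 30, "reserved_concurrency": 20},
    "batch-export": {"memory_mb": 3008, "timeout_s": 900},
}
report = audit_all(functions, CostPolicy())
for name, violations in report.items():
    print("\n".join(violations))
```

A pipeline step could fail the build whenever the report is non-empty, so that costly settings are caught before they are deployed.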

Examples of IaC Templates for Deploying Serverless Functions with Cost-Aware Configurations

IaC templates offer a practical approach to deploy serverless functions with cost-aware configurations. These examples illustrate how to specify resource settings, monitoring, and scaling policies, enabling cost-effective serverless deployments.

- AWS CloudFormation Example (Lambda Function with Memory Optimization):

This example demonstrates how to use AWS CloudFormation to define a Lambda function with a specific memory allocation and basic monitoring. The template includes the function’s memory size and timeout, an execution role that permits logging, and a CloudWatch alarm that fires when the average execution duration exceeds a threshold.

    Resources:
      MyLambdaFunction:
        Type: AWS::Lambda::Function
        Properties:
          FunctionName: MyCostOptimizedFunction
          Runtime: nodejs18.x
          Handler: index.handler
          Code:
            ZipFile: |
              exports.handler = async (event) => {
                console.log('Hello from Lambda!');
                return { statusCode: 200, body: 'Hello, World!' };
              };
          MemorySize: 128 # Adjust memory as needed
          Timeout: 30 # Set appropriate timeout
          Role: !GetAtt LambdaExecutionRole.Arn
      LambdaExecutionRole:
        Type: AWS::IAM::Role
        Properties:
          AssumeRolePolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Principal:
                  Service: lambda.amazonaws.com
                Action: sts:AssumeRole
          Policies:
            - PolicyName: LambdaBasicExecution
              PolicyDocument:
                Version: '2012-10-17'
                Statement:
                  - Effect: Allow
                    Action:
                      - 'logs:CreateLogGroup'
                      - 'logs:CreateLogStream'
                      - 'logs:PutLogEvents'
                    Resource: 'arn:aws:logs:*:*:*'
      MyLambdaFunctionMetric:
        Type: AWS::CloudWatch::Alarm
        Properties:
          AlarmName: "MyLambdaFunction-DurationAlarm"
          MetricName: "Duration"
          Namespace: "AWS/Lambda"
          Statistic: Average
          Period: 60
          EvaluationPeriods: 1
          Threshold: 5000 # milliseconds
          ComparisonOperator: GreaterThanThreshold
          Dimensions:
            - Name: FunctionName
              Value: !Ref MyLambdaFunction

- Terraform Example (Azure Function App with Consumption Plan):

This example demonstrates how to deploy an Azure Function App using Terraform, configured with a Consumption plan for pay-per-use pricing. The template includes the resource group, the app service plan (Consumption tier), the function app itself, and the storage account the function app requires.

    # Note: azurerm_app_service_plan and azurerm_function_app are deprecated in
    # newer azurerm provider releases in favor of azurerm_service_plan and the
    # OS-specific function app resources.

    resource "azurerm_resource_group" "example" {
      name     = "example-resources"
      location = "West Europe"
    }

    resource "azurerm_app_service_plan" "example" {
      name                = "example-plan"
      location            = azurerm_resource_group.example.location
      resource_group_name = azurerm_resource_group.example.name
      kind                = "FunctionApp"
      reserved            = false

      # The Dynamic tier with size Y1 selects the Consumption (pay-per-use) plan
      sku {
        tier = "Dynamic"
        size = "Y1"
      }
    }

    resource "azurerm_function_app" "example" {
      name                       = "example-function-app"
      location                   = azurerm_resource_group.example.location
      resource_group_name        = azurerm_resource_group.example.name
      app_service_plan_id        = azurerm_app_service_plan.example.id
      storage_account_name       = azurerm_storage_account.example.name
      storage_account_access_key = azurerm_storage_account.example.primary_access_key
    }

    resource "azurerm_storage_account" "example" {
      name                     = "examplestorageaccount" # Must be globally unique
      resource_group_name      = azurerm_resource_group.example.name
      location                 = azurerm_resource_group.example.location
      account_tier             = "Standard"
      account_replication_type = "LRS"
    }

- Google Cloud Deployment Manager Example (Cloud Function with Concurrency Settings):

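This example uses Google Cloud Deployment Manager to deploy a Cloud Function with a cap on scaling. The template defines the function’s name, region, runtime, and maxInstances, which sets the maximum number of function instances. For functions deployed through the v1 API, each instance handles one request at a time, so maxInstances also bounds the number of concurrent executions.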

    resources:
      - name: my-function
        type: gcp-types/cloudfunctions-v1:projects.locations.functions
        properties:
          location: us-central1
          function: my_function
          entryPoint: helloWorld
          runtime: nodejs18
          sourceArchiveUrl: gs://your-bucket/source.zip
          environmentVariables:
            SOME_VARIABLE: some_value
          ingressSettings: "ALLOW_INTERNAL_ONLY"
          maxInstances: 10 # Adjust concurrency settings as needed
          serviceAccountEmail: "[email protected]" # Replace with the function's service account

Case Studies of Serverless Cost Optimization

Understanding the practical application of serverless cost optimization is crucial for realizing its benefits. This section presents real-world case studies, demonstrating successful strategies and the challenges encountered during implementation. The examples provide insights into specific techniques, their impact, and the measurable outcomes achieved.

Case Study: Reducing Costs in a Media Streaming Platform

A media streaming platform migrated its video processing pipeline to a serverless architecture to improve scalability and reduce operational overhead. The initial implementation, while functional, faced significant cost overruns due to inefficient resource allocation and a lack of optimization.

The following optimizations were implemented:

- Right-Sizing Lambda Functions: The initial Lambda functions were over-provisioned, leading to higher compute costs. The platform used monitoring tools to analyze function performance and identified areas where the memory allocation and execution time could be reduced. This involved reconfiguring the memory settings of the functions and optimizing the code to reduce processing time. For instance, functions were initially allocated 1GB of memory, but analysis revealed that 256MB was sufficient for the majority of the tasks.

- Optimizing Data Storage and Transfer: The platform utilized Amazon S3 for storing video assets. Cost optimization was achieved by implementing lifecycle policies to automatically move older, less frequently accessed videos to a cheaper storage tier (e.g., S3 Glacier). Furthermore, the platform optimized the data transfer by implementing content delivery networks (CDNs) to reduce egress costs.

- Implementing Event-Driven Architecture: The platform refactored its monolithic architecture into an event-driven design, leveraging services like Amazon EventBridge and AWS Step Functions. This allowed the platform to process events asynchronously, optimizing resource utilization and reducing the time functions were running. This reduced the number of long-running tasks, minimizing the overall compute time.

- Code Optimization: The developers optimized the code to reduce the execution time and memory consumption of the Lambda functions. This included optimizing image processing libraries, minimizing the size of dependencies, and implementing efficient data serialization and deserialization techniques.
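The effect of right-sizing can be estimated with simple arithmetic, since compute charges scale with memory multiplied by duration. The sketch below is illustrative only. The invocation count and durations are made-up figures, not data from this case study, and the price per GB-second is an assumed rate that should be checked against your provider’s current pricing. Per-request charges are left out. The example also assumes duration rises somewhat at the lower memory setting, because Lambda allocates CPU in proportion to memory.

```python
def monthly_compute_cost(memory_mb, avg_duration_ms, invocations, price_per_gb_second):
    """Estimate duration-based compute cost; per-request charges are excluded."""
    gb_seconds = (memory_mb / 1024) * (avg_duration_ms / 1000) * invocations
    return gb_seconds * price_per_gb_second


PRICE = 0.0000166667      # assumed USD per GB-second; verify against current pricing
INVOCATIONS = 5_000_000   # illustrative monthly volume, not from the case study

# 1 GB at 800 ms versus 256 MB at an assumed slower 1000 ms per invocation
before = monthly_compute_cost(1024, 800, INVOCATIONS, PRICE)
after = monthly_compute_cost(256, 1000, INVOCATIONS, PRICE)
savings = 1 - after / before

print(f"before: ${before:.2f}  after: ${after:.2f}  savings: {savings:.1%}")
```

Because a lower memory setting can lengthen execution time, it is worth measuring duration at each candidate setting rather than assuming the smallest allocation is the cheapest.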

The platform experienced a significant reduction in costs after implementing these optimization strategies. The overall monthly costs decreased by approximately 40%. Furthermore, the improved efficiency led to faster video processing times and better user experience. The monitoring and observability capabilities allowed for continuous monitoring and adjustments, ensuring that the platform maintained cost-effectiveness over time.

Case Study: Optimizing Costs for an E-commerce Application

An e-commerce company migrated its product catalog and order processing system to a serverless architecture to handle peak loads during sales events. Initially, the system experienced cost spikes during periods of high traffic, mainly due to inefficiencies in the Lambda functions and database interactions.

Here’s a look at the optimization strategies employed:

- Lambda Function Concurrency Limits: The platform set concurrency limits on its Lambda functions to cap how many instances could run at once during peak traffic. A cap of this kind bounds the maximum compute spend and guards against unexpected costs from runaway scaling. The limits were particularly important for the order processing functions.

- Database Connection Pooling: The application used a serverless database. Connection pooling reduced the number of database connections opened and closed, which improved performance and lowered the costs associated with database usage.

- Optimizing API Gateway Usage: The company optimized the API Gateway configuration to reduce latency and costs. This involved caching frequently accessed data and implementing request throttling to prevent overload.

- Leveraging Serverless Database Features: The company used features like auto-scaling for the serverless database and pay-per-request pricing, which helped to minimize costs during periods of low activity.
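One common way to cut connection churn in function code is to create the connection once per execution environment and reuse it across warm invocations. The sketch below shows that reuse pattern, which is simpler than a full connection pool. The case study does not describe how pooling was actually implemented, so this is an assumption about one possible approach. Here `sqlite3` stands in for a real database driver, and the `orders` table and `handler` signature are hypothetical.

```python
import sqlite3

_connection = None  # module-level cache that survives across warm invocations


def get_connection():
    """Create the connection on first use, then return the cached one."""
    global _connection
    if _connection is None:
        _connection = sqlite3.connect(":memory:")  # stand-in for a real database
        _connection.execute(
            "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, total REAL)"
        )
    return _connection


def handler(event, context=None):
    """Record one order using the shared connection instead of opening a new one."""
    conn = get_connection()
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT INTO orders (total) VALUES (?)", (event["total"],))
    count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    return {"statusCode": 200, "orders": count}


first = handler({"total": 19.99})
second = handler({"total": 5.00})
print(first, second)
```

Each concurrent function instance still holds its own connection, so at high concurrency a managed proxy or pooler in front of the database may be needed in addition to this pattern.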

The e-commerce platform saw a substantial improvement in cost efficiency after implementing the optimization strategies. The monthly costs were reduced by around 30% during peak sales periods. This was achieved while maintaining the performance and scalability required to handle the increased traffic. The company was also able to identify areas for future cost savings through the ongoing monitoring of its serverless infrastructure.

These case studies point to the same lessons: monitor continuously, right-size resources, and optimize both code and architecture. Event-driven designs, serverless database features such as auto-scaling and pay-per-request pricing, and careful API Gateway configuration each contributed to the savings. Together, the examples show that proactive cost management can yield significant savings in serverless environments.

Wrap-Up

In conclusion, optimizing costs in serverless environments is not merely about implementing a single technique; it’s a holistic approach that requires a blend of careful planning, continuous monitoring, and proactive adjustments. By embracing these strategies, organizations can unlock significant cost savings, improve performance, and fully leverage the benefits of serverless computing. The ongoing evolution of serverless technologies necessitates a commitment to staying informed and adapting to the latest advancements in cost optimization best practices, ensuring long-term financial sustainability and operational excellence.

FAQ Resource

What are the primary cost drivers in serverless environments?

The main cost drivers include function invocation duration, memory allocation, data transfer, and the number of function invocations. Optimizing these factors is key to cost efficiency.

How does monitoring contribute to serverless cost optimization?

Monitoring provides visibility into function performance, resource usage, and cost trends. It allows for early detection of anomalies, performance bottlenecks, and inefficient code, enabling proactive cost management.

What are the benefits of right-sizing resources for serverless functions?

Right-sizing ensures that functions have the optimal amount of resources (memory, CPU) needed to execute efficiently. This prevents over-provisioning, which leads to unnecessary costs, and under-provisioning, which can impact performance.

How can code optimization reduce serverless costs?

Efficient code minimizes execution time and the amount of data processed. Techniques like optimizing database queries, reducing dependencies, and using efficient data structures can significantly lower costs.

What role does Infrastructure as Code (IaC) play in serverless cost management?

IaC allows for the automated and consistent deployment of serverless resources with cost-aware configurations. This ensures that resources are provisioned efficiently and that cost optimization best practices are consistently applied.