Serverless stream processing architecture represents a paradigm shift in how real-time data is handled, offering a scalable and cost-effective solution for processing continuous data streams. It leverages the principles of serverless computing, where the underlying infrastructure management is abstracted away, allowing developers to focus solely on the code that processes the data. This architecture is designed to handle the ever-increasing volume, velocity, and variety of data generated by modern applications and devices, making it ideal for scenarios requiring immediate insights and actions.

This architecture typically involves a combination of event sources, data ingestion services, processing functions, and storage solutions. The core of this system is built upon cloud provider services like AWS Lambda, Azure Functions, or Google Cloud Functions. These components work together to ingest, transform, analyze, and store data in real-time, providing valuable insights for various applications, from fraud detection and real-time personalization to IoT data processing and more.

Introduction to Serverless Stream Processing

Serverless stream processing represents a modern architectural paradigm that combines the event-driven nature of stream processing with the operational benefits of serverless computing. This approach allows for real-time data processing without the need for managing underlying infrastructure, offering scalability, cost efficiency, and simplified operations. This architecture is particularly well-suited for applications requiring immediate analysis and action based on continuous data streams. Serverless stream processing architectures have become increasingly popular due to their ability to handle large volumes of data with minimal operational overhead.

The core principle involves the automated scaling of resources based on the incoming data volume, ensuring efficient resource utilization and cost optimization.

Fundamental Concepts of Serverless Computing and Stream Processing

Understanding the underlying concepts of serverless computing and stream processing is crucial for grasping the serverless stream processing architecture. Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Stream processing, on the other hand, is a data processing paradigm that focuses on the continuous analysis of data streams in real time. Serverless computing fundamentally removes the need for server management.

Developers deploy code, and the cloud provider handles the infrastructure, including scaling, provisioning, and patching. This allows developers to focus on application logic rather than server administration. Key characteristics of serverless computing include:

- Event-driven execution: Serverless functions are triggered by events, such as HTTP requests, database updates, or messages in a queue.

- Automatic scaling: The cloud provider automatically scales the resources allocated to the functions based on the incoming workload.

- Pay-per-use pricing: Users are charged only for the actual compute time consumed by their functions, leading to cost savings.

Stream processing involves the continuous processing of data streams as they arrive. This is in contrast to batch processing, which processes data in discrete chunks. Stream processing architectures are designed to handle high-velocity, high-volume data in real-time or near real-time. Key characteristics of stream processing include:

- Real-time processing: Data is processed as it arrives, allowing for immediate analysis and action.

- High throughput: Stream processing systems are designed to handle large volumes of data with low latency.

- Fault tolerance: Stream processing systems must be able to handle failures and ensure data consistency.
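To make the contrast with batch processing concrete, the sketch below keeps a running count and mean that are updated as each reading arrives, so a current result is available after every event, whereas the batch version only answers once the whole dataset has been collected. The `StreamingMean` class and the sample readings are hypothetical illustrations, not part of any platform API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class StreamingMean:
    """Incrementally maintained mean: constant work and memory per event."""
    count: int = 0
    mean: float = 0.0

    def update(self, value: float) -> float:
        # Incremental (Welford-style) update; past values are never stored.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


def batch_mean(values: Iterable[float]) -> float:
    """Batch equivalent: needs the complete dataset before answering."""
    data = list(values)
    return sum(data) / len(data)


if __name__ == "__main__":
    readings = [20.0, 22.0, 21.0, 25.0]
    stream = StreamingMean()
    for r in readings:
        # A fresh result is available immediately after each event.
        print(f"after {r}: mean={stream.update(r):.2f}")
    print(f"batch mean: {batch_mean(readings):.2f}")
```

Both approaches reach the same final value; the difference is that the streaming version never has to wait for, or hold, the full dataset.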

Definition of a Serverless Stream Processing Architecture

A serverless stream processing architecture is a system designed to process continuous streams of data in real time, leveraging the serverless computing model. It allows developers to build applications that react to events and analyze data as it arrives, without managing any underlying infrastructure. This architecture typically uses serverless functions, triggered by events such as the arrival of new records, to perform ingestion, transformation, and analytics tasks. In essence, this architecture is a combination of event-driven programming, real-time data processing, and the operational benefits of serverless computing.

It’s designed for applications where immediate data analysis and action are crucial, such as fraud detection, real-time monitoring, and personalized recommendations.

Core Components of a Serverless Stream Processing Architecture

A typical serverless stream processing architecture comprises several core components that work together to ingest, process, and analyze data streams. These components are designed to be highly scalable, fault-tolerant, and cost-effective. The key components include:

- Data Ingestion Services: These services are responsible for collecting and ingesting data streams from various sources. Examples include message queues (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub), data streaming services (e.g., Amazon Kinesis Data Streams, Azure Event Hubs, managed Apache Kafka), and APIs.

- Serverless Functions: These are the core processing units of the architecture. They are triggered by events from the data ingestion services and perform the actual data processing tasks, such as data transformation, filtering, and aggregation. Popular platforms include AWS Lambda, Azure Functions, and Google Cloud Functions.

- Data Storage: Processed data is often stored in a data store for further analysis, reporting, or archival purposes. Examples include databases (e.g., Amazon DynamoDB, Azure Cosmos DB, Google Cloud Datastore), data warehouses (e.g., Amazon Redshift, Azure Synapse Analytics, Google BigQuery), and object storage (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage).

- Event Triggers: These components connect the data ingestion services to the serverless functions. They monitor the data streams and trigger the functions when new data arrives. Event triggers can be integrated within the data ingestion services or provided as separate services.

- Monitoring and Logging: Monitoring and logging services are crucial for ensuring the health and performance of the architecture. These services collect metrics and logs from the serverless functions and other components, allowing for real-time monitoring, troubleshooting, and performance optimization. Examples include Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring.

Key Benefits of Serverless Stream Processing

Serverless stream processing offers significant advantages over traditional methods, primarily centered around scalability, cost optimization, and operational simplification. These benefits stem from the inherent characteristics of serverless architectures, allowing for a more efficient and agile approach to real-time data processing. The core of these advantages lies in the abstraction of infrastructure management, enabling developers to focus on application logic rather than server provisioning and maintenance.

Scalability and Elasticity

The scalability and elasticity of serverless stream processing are fundamental to its appeal. The architecture automatically adjusts resources based on the incoming data volume and processing demands. The benefits of auto-scaling are:

- Dynamic Resource Allocation: Serverless platforms dynamically allocate compute resources (e.g., CPU, memory) in response to fluctuating workloads. This contrasts sharply with traditional methods, where resource allocation is often static or requires manual intervention, potentially leading to underutilization or bottlenecks. For example, during peak hours, a serverless function can automatically scale out to handle a surge in incoming events, helping to keep latency low, although cold starts can add latency while new instances initialize.

- Reduced Capacity Planning: Developers no longer need to predict future server requirements and provision infrastructure accordingly, although stream capacity (such as shard or partition counts) and platform concurrency quotas may still need sizing. This significantly reduces the risk of over-provisioning (wasting resources) or under-provisioning (leading to performance degradation).

- Horizontal Scaling: Serverless architectures typically scale horizontally, adding more instances of the processing function as needed. This contrasts with vertical scaling (e.g., upgrading server hardware), which has physical limitations and can be more disruptive. Horizontal scaling lets the system handle very high throughput, within the limits set by platform concurrency quotas and the parallelism of the upstream stream (for example, its number of shards or partitions).

- Reduced Operational Overhead: The platform manages the scaling process automatically, reducing the operational burden on development teams. This frees up developers to focus on application logic and business value, rather than infrastructure management tasks.
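In practice, how far a function scales out also depends on the stream feeding it. As a rough worked example, assume an AWS Lambda function consuming a Kinesis data stream, where Lambda processes each shard with at most `ParallelizationFactor` concurrent batches (configurable from 1 to 10). The ceiling on concurrent invocations for that source is then shards × parallelization factor. The helper name and the shard counts below are illustrative.

```python
def max_stream_concurrency(shards: int, parallelization_factor: int = 1) -> int:
    """Upper bound on concurrent Lambda invocations for one Kinesis event source.

    Assumes the Kinesis event source mapping model: each shard is processed by
    at most `parallelization_factor` concurrent batches (valid range 1-10).
    Account-level concurrency quotas may lower the effective limit further.
    """
    if shards < 1:
        raise ValueError("a stream needs at least one shard")
    if not 1 <= parallelization_factor <= 10:
        raise ValueError("parallelization_factor must be between 1 and 10")
    return shards * parallelization_factor


if __name__ == "__main__":
    # Hypothetical stream with 4 shards.
    print(max_stream_concurrency(4))      # 4
    print(max_stream_concurrency(4, 10))  # 40
```

Raising the parallelization factor, or adding shards, is therefore the lever for more horizontal scale on this kind of source.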

Cost-Effectiveness

The pay-per-use model inherent in serverless stream processing significantly impacts cost-effectiveness. This model charges users only for the actual compute time and resources consumed. The advantages of the pay-per-use model are:

- Elimination of Idle Costs: In traditional stream processing, resources are often provisioned and running continuously, even when the data volume is low. Serverless compute platforms charge only for the time a function is actively processing data, which eliminates compute costs associated with idle resources. The ingestion layer may still carry time-based charges, such as hourly fees for provisioned stream shards.

- Granular Pricing: Serverless platforms offer granular pricing, often measured in milliseconds of compute time. This fine-grained billing allows for precise cost control and optimization. For instance, if a function processes a small amount of data in a few milliseconds, the cost will be minimal.

- Optimized Resource Utilization: Serverless architectures automatically optimize resource utilization. The platform allocates only the necessary resources to process incoming data, preventing waste and reducing costs.

- Reduced Capital Expenditure (CapEx): The pay-per-use model eliminates the need for upfront investment in hardware and infrastructure. This shifts the financial burden from CapEx to OpEx (operational expenditure), making it easier to budget and control costs.
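The pay-per-use arithmetic can be sketched as follows. Under a Lambda-style billing model, compute cost is roughly (invocations × price per request) + (GB-seconds × price per GB-second), where GB-seconds = invocations × average duration in seconds × allocated memory in GB. The prices used below are placeholder parameters, not current list prices, and free tiers, data transfer, and ingestion-service charges are deliberately left out.

```python
def estimate_compute_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Rough pay-per-use compute cost for a function-based stream consumer.

    Models only request and duration charges; free tiers, ingestion-service
    charges, and data transfer are ignored.
    """
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return request_cost + gb_seconds * price_per_gb_second


if __name__ == "__main__":
    # Placeholder prices chosen for round numbers, NOT real list prices.
    cost = estimate_compute_cost(
        invocations=10_000_000,
        avg_duration_ms=100,
        memory_mb=512,
        price_per_million_requests=0.20,
        price_per_gb_second=0.00001,
    )
    print(f"estimated cost: ${cost:.2f}")  # $7.00 with these placeholders
```

Because every term scales with the number of invocations, a period with no incoming events contributes no compute cost under this model, which is the idle-cost point made above.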

Operational Efficiency

Serverless stream processing streamlines operations by abstracting away infrastructure management tasks. This allows development teams to focus on building and deploying applications. Key aspects of operational efficiency are:

- Automated Infrastructure Management: The serverless platform handles all aspects of infrastructure management, including server provisioning, patching, scaling, and monitoring. This reduces the operational burden on development teams.

- Simplified Deployment and Updates: Deploying and updating serverless functions is typically a straightforward process, often involving uploading code and configuring triggers. This accelerates the development lifecycle and reduces the risk of errors.

- Improved Monitoring and Observability: Serverless platforms often provide built-in monitoring and logging capabilities. This makes it easier to track application performance, identify issues, and troubleshoot problems.

- Faster Time-to-Market: The combination of automated infrastructure management, simplified deployment, and improved monitoring allows development teams to build and deploy applications faster. This accelerates time-to-market and enables businesses to respond quickly to changing market demands.

Comparison with Traditional Stream Processing

A comparison between serverless and traditional stream processing methods reveals key differences in resource management and operational characteristics. Traditional stream processing, often implemented on self-managed clusters running technologies like Apache Kafka or Apache Storm, requires manual provisioning, scaling, and management of infrastructure. Serverless stream processing, in contrast, abstracts away these complexities. The differences are:

| Feature | Serverless Stream Processing | Traditional Stream Processing |
|---|---|---|
| Resource Management | Automated, managed by the platform | Manual, requiring provisioning and scaling |
| Scaling | Automatic, elastic scaling | Manual or semi-automatic scaling |
| Cost Model | Pay-per-use | Provisioned resources, potentially underutilized |
| Operational Overhead | Low, reduced infrastructure management | High, requiring infrastructure management expertise |
| Deployment | Simplified, often through code uploads and configuration | Complex, requiring server configuration and application deployment |

Core Components and Technologies

Serverless stream processing architectures leverage a suite of cloud-native technologies to ingest, process, and store data in real-time. These architectures are characterized by their scalability, cost-effectiveness, and operational simplicity. The core components, often provided as managed services by cloud providers, work together to form a robust and flexible data pipeline.

Cloud Provider Services

Cloud providers offer a variety of services that are central to building serverless stream processing pipelines. The selection of services depends on factors such as the volume of data, processing complexity, and desired latency.

- AWS Lambda: AWS Lambda is a serverless compute service that executes code in response to events. It’s a fundamental component for processing stream data. Lambda functions can be triggered by services like Kinesis or SQS to process incoming data in near real-time.

- Azure Functions: Azure Functions, similar to AWS Lambda, provides a serverless compute environment. It enables developers to run event-triggered code without managing servers. Azure Functions integrates with services like Azure Event Hubs for stream processing.

- Google Cloud Functions: Google Cloud Functions offers a serverless execution environment for building event-driven applications. It supports various triggers, including Google Cloud Pub/Sub and Cloud Storage, making it suitable for stream processing tasks.

- Amazon Kinesis: Amazon Kinesis is a family of fully managed services for real-time data streaming, covering data ingestion, processing, and storage. Kinesis Data Streams is commonly used for capturing and delivering data to Lambda functions or other processing services. Kinesis Data Analytics, since renamed Amazon Managed Service for Apache Flink, supports real-time stream processing with Apache Flink; its older SQL-based application type is being retired.

- Azure Event Hubs: Azure Event Hubs is a big data streaming service capable of ingesting millions of events per second. It is designed to handle high-volume data streams and integrates with Azure Functions and other Azure services. Event Hubs is a key component for building real-time data pipelines in the Azure ecosystem.

- Google Cloud Pub/Sub: Google Cloud Pub/Sub is a fully managed, real-time messaging service that allows for asynchronous communication between applications. It is used for ingesting and distributing data streams to subscribers. Pub/Sub is a core component for building event-driven architectures and is often used with Cloud Functions.

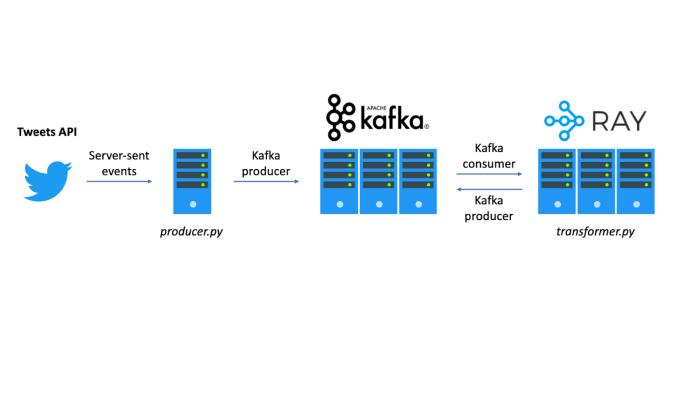

Simple Architecture Diagram

A simple architecture diagram illustrates the flow of data from source to processing to storage. This diagram demonstrates a common pattern in serverless stream processing.

Data Source (e.g., IoT Devices) -> Kinesis Data Streams (AWS) / Event Hubs (Azure) / Pub/Sub (Google Cloud) -> AWS Lambda / Azure Functions / Google Cloud Functions -> Data Storage (e.g., S3, Azure Blob Storage, Google Cloud Storage)

In this architecture:

- IoT devices, as an example data source, continuously send data streams.

- The data is ingested by a streaming service like Kinesis Data Streams (AWS), Event Hubs (Azure), or Pub/Sub (Google Cloud). These services provide the initial point of ingestion and buffering.

- The streaming service triggers a serverless compute function (Lambda, Azure Functions, or Cloud Functions).

- The function processes the data (e.g., data transformation, filtering, aggregation).

- Processed data is then stored in a data storage service like S3 (AWS), Azure Blob Storage, or Google Cloud Storage for further analysis or archiving.

This architecture provides a scalable, cost-effective, and easily manageable solution for processing data streams.
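As a sketch of the processing step in this flow, the handler below assumes AWS Lambda with a Kinesis Data Streams trigger, where each record's payload arrives base64-encoded under `record["kinesis"]["data"]`. The JSON field names (`device_id`, `temperature_celsius`) and the `store` callable, which stands in for a write to S3 or another storage service, are hypothetical.

```python
import base64
import json
from typing import Any, Callable, Dict, List

# Hypothetical storage hook; a real deployment might write the rows to S3.
Store = Callable[[List[Dict[str, Any]]], None]


def process_kinesis_event(event: Dict[str, Any], store: Store) -> int:
    """Decode, filter, and transform a batch of Kinesis records.

    Returns the number of records passed to storage.
    """
    transformed = []
    for record in event.get("Records", []):
        # Kinesis delivers the producer's payload base64-encoded.
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        celsius = payload.get("temperature_celsius")
        if celsius is None:
            continue  # filter: skip readings without a temperature
        transformed.append({
            "device_id": payload.get("device_id"),
            "temperature_celsius": celsius,
            "temperature_fahrenheit": celsius * 9 / 5 + 32,
        })
    if transformed:
        store(transformed)
    return len(transformed)


def handler(event, context):
    # Placeholder store that only logs; swap in a real storage client.
    return process_kinesis_event(event, store=lambda rows: print(json.dumps(rows)))
```

Keeping the storage call behind a parameter makes the decoding and transformation logic easy to test locally with a hand-built event, independent of any cloud service.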

Data Sources and Integration

Serverless stream processing architectures can integrate with a wide range of data sources. The integration method depends on the source’s data format, volume, and the cloud provider’s services being utilized.

- IoT Devices: IoT devices generate a continuous stream of data, often including sensor readings, device status updates, and telemetry data. Integration can involve transmitting data directly to a streaming service such as Kinesis Data Streams, Azure Event Hubs, or Google Cloud Pub/Sub, often via an MQTT broker or IoT gateway that forwards device messages. The processing function can then parse the data, perform calculations, and store the results.

For example, temperature sensors in a warehouse could stream data that is processed to identify and flag potential temperature deviations, triggering alerts if thresholds are exceeded.

- Social Media Feeds: Social media feeds provide a rich source of real-time data, including posts, comments, and user interactions. Integration involves utilizing APIs to access the data streams. For example, a serverless function can be triggered by data from a social media API to analyze sentiment or identify trending topics. This data can be used to provide real-time insights or trigger actions based on the detected trends.

- Application Logs: Application logs contain valuable information about application behavior, errors, and performance. Integration involves collecting and forwarding the logs to a streaming service. For example, an application could send logs to a service like CloudWatch Logs (AWS), Azure Monitor, or Cloud Logging (Google Cloud). A serverless function can then be triggered to parse the logs, detect anomalies, and trigger alerts or initiate remediation actions.

This can be crucial for detecting and addressing performance issues or security threats in real-time.
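The warehouse temperature example can be sketched as a small rule that flags readings outside an allowed band. The band limits, field names, and the `send_alert` callback are hypothetical; a production system would more likely publish alerts to a notification service than collect them in a list.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List


@dataclass(frozen=True)
class TemperatureBand:
    low_c: float
    high_c: float

    def classify(self, celsius: float) -> str:
        if celsius < self.low_c:
            return "too_cold"
        if celsius > self.high_c:
            return "too_hot"
        return "ok"


def flag_deviations(
    readings: Iterable[Dict[str, Any]],
    band: TemperatureBand,
    send_alert: Callable[[Dict[str, Any]], None],
) -> int:
    """Send one alert per out-of-band reading; return the number of alerts."""
    alerts = 0
    for reading in readings:
        status = band.classify(reading["temperature_celsius"])
        if status != "ok":
            send_alert({
                "sensor_id": reading["sensor_id"],
                "status": status,
                "temperature_celsius": reading["temperature_celsius"],
            })
            alerts += 1
    return alerts


if __name__ == "__main__":
    band = TemperatureBand(low_c=2.0, high_c=8.0)  # e.g. a cold-storage zone
    sent: List[Dict[str, Any]] = []
    readings = [
        {"sensor_id": "zone-a", "temperature_celsius": 5.0},
        {"sensor_id": "zone-b", "temperature_celsius": 9.5},
    ]
    print(flag_deviations(readings, band, sent.append))  # 1
    print(sent)
```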

Data Ingestion and Transformation

Serverless stream processing architectures necessitate robust mechanisms for acquiring and manipulating incoming data. This section details the methods employed for ingesting streaming data and the techniques used for data transformation within such systems. Efficient data ingestion and transformation are crucial for deriving valuable insights from real-time data streams.

Data Ingestion Methods

Ingesting streaming data into a serverless environment involves various strategies depending on the data source and the specific requirements of the application.

- Message Queues: Message queues, such as Amazon SQS, Google Cloud Pub/Sub, or Azure Service Bus, are commonly used to buffer and decouple data producers from consumers. Data producers publish messages to the queue, and serverless functions are triggered as messages arrive. This approach offers scalability and fault tolerance: the queue absorbs bursts of data and retains messages when a function fails, so they can be retried rather than lost (within the queue's retention period).

- Event Streams: Event streams, such as Amazon Kinesis Data Streams, Apache Kafka (often managed as a service), or Azure Event Hubs, provide a continuous stream of data. Serverless functions subscribe to these streams and process data as it arrives. Event streams are particularly suitable for high-volume, real-time data ingestion. Data is typically partitioned within the stream to enable parallel processing by multiple serverless function instances.

- HTTP Endpoints: Serverless functions can also expose HTTP endpoints to receive data directly from external sources. This approach is useful for ingesting data from web applications, IoT devices, or other systems that can send HTTP requests. API Gateway services, such as Amazon API Gateway, Google Cloud API Gateway, or Azure API Management, can be used to manage these endpoints, providing features like authentication, authorization, and rate limiting.

- File Storage: Data can be ingested by storing files in object storage services such as Amazon S3, Google Cloud Storage, or Azure Blob Storage. When new files arrive, serverless functions can be triggered by events indicating the creation of new files. This approach is suitable for batch processing or periodic data ingestion.
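To illustrate how partitioning in an event stream enables parallel processing, the sketch below models the approach Kinesis Data Streams describes: an MD5 hash of the partition key, read as a 128-bit integer, selects the shard whose hash-key range contains it. It assumes the shards split the hash-key space into equal contiguous ranges, which holds for a freshly created stream but not necessarily after resharding; the function names and keys are hypothetical.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit digest


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Map a partition key to a shard index, assuming equal hash-key ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, byteorder="big")
    range_size = HASH_SPACE // shard_count
    # min() keeps the top of the hash space in the last shard when the
    # space does not divide evenly.
    return min(hash_value // range_size, shard_count - 1)


def group_by_shard(keys: List[str], shard_count: int) -> Dict[int, List[str]]:
    """Group keys by shard; each group could be consumed by its own function instance."""
    groups: Dict[int, List[str]] = defaultdict(list)
    for key in keys:
        groups[shard_for_key(key, shard_count)].append(key)
    return dict(groups)


if __name__ == "__main__":
    print(group_by_shard(["device-1", "device-2", "device-3", "device-1"], 2))
```

Because the mapping depends only on the key, all events for one device land on the same shard and keep their relative order, while different devices spread across shards and can be processed in parallel.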

Data Transformation Techniques

Data transformation is a critical step in stream processing, enabling data cleaning, enrichment, and aggregation. Serverless architectures facilitate various transformation techniques.

- Filtering: Filtering involves selectively retaining or discarding data based on predefined criteria. This reduces the volume of data processed and focuses on relevant information.

- Mapping: Mapping transforms data from one format to another, potentially changing the structure or schema of the data.

- Aggregation: Aggregation combines multiple data points into a single output, such as calculating sums, averages, or counts over a time window. This is crucial for deriving summary statistics and trends.

- Enrichment: Enrichment adds additional context to the data by incorporating information from external sources, such as databases or APIs.

- Joining: Joining combines data from multiple streams or sources based on common keys or attributes.
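Several of these techniques can be combined in a single pass over a batch of events. The sketch below enriches each event from a lookup table (a simple join against static reference data), filters out incomplete or unknown readings, maps Celsius to Fahrenheit, and aggregates average readings per site over fixed, non-overlapping (tumbling) 60-second windows. The event fields, the `SITE_BY_DEVICE` table, and the window length are hypothetical; joining two live streams would additionally require buffering state across invocations, which is omitted here.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Tuple

# Hypothetical reference data used for enrichment.
SITE_BY_DEVICE = {"dev-1": "warehouse-a", "dev-2": "warehouse-b"}
WINDOW_SECONDS = 60


def transform(events: Iterable[Dict[str, Any]]) -> Dict[Tuple[str, int], float]:
    """Return the average Fahrenheit reading per (site, window_start)."""
    sums: Dict[Tuple[str, int], float] = defaultdict(float)
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    for event in events:
        celsius = event.get("temperature_celsius")
        # Enrichment: look up the site for this device.
        site = SITE_BY_DEVICE.get(event.get("device_id"))
        # Filtering: drop readings with no value or from unknown devices.
        if celsius is None or site is None:
            continue
        # Mapping: convert the reading to Fahrenheit.
        fahrenheit = celsius * 9 / 5 + 32
        # Aggregation: assign the event to a tumbling window by its timestamp.
        window_start = int(event["timestamp"]) // WINDOW_SECONDS * WINDOW_SECONDS
        key = (site, window_start)
        sums[key] += fahrenheit
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}
```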

Code Example: Simple Data Transformation Function

The following Python code demonstrates a simple serverless function that transforms incoming data. It receives a JSON payload, converts a temperature reading from Celsius to Fahrenheit, and returns both values. The handler signature follows AWS Lambda conventions and assumes the payload arrives as a JSON string in `event['body']`, as with an HTTP trigger; other platforms need small adjustments to the signature and event format.

```python
import json


def transform_temperature(event, context):
    """Transform a temperature reading from Celsius to Fahrenheit.

    Args:
        event: A dictionary containing the input data. The JSON payload is
            expected in event['body'] (as with an HTTP trigger), in the form
            {"temperature_celsius": float}.
        context: Information about the invocation, function, and execution
            environment (unused here).

    Returns:
        An HTTP-style response dictionary containing the transformed data.
    """
    try:
        # Extract the temperature in Celsius from the request body.
        data = json.loads(event['body'])
        celsius = data.get('temperature_celsius')

        # Reject missing or non-numeric temperatures (bool is excluded explicitly).
        if isinstance(celsius, bool) or not isinstance(celsius, (int, float)):
            return {
                'statusCode': 400,
                'body': json.dumps({'error': 'Missing or invalid temperature_celsius parameter'}),
            }

        # Convert Celsius to Fahrenheit.
        fahrenheit = celsius * 9 / 5 + 32

        # Prepare and return the transformed data.
        output = {'temperature_celsius': celsius, 'temperature_fahrenheit': fahrenheit}
        return {'statusCode': 200, 'body': json.dumps(output)}
    except Exception as e:
        print(f"Error processing event: {e}")
        return {'statusCode': 500, 'body': json.dumps({'error': str(e)})}
```

This function illustrates a straightforward data transformation process.

It first retrieves the temperature value, then applies the conversion formula:

Fahrenheit = (Celsius × 9/5) + 32

Finally, it packages the original and transformed data into a JSON response. With adjustments to the handler signature and event format, the same logic can run on AWS Lambda, Google Cloud Functions, or Azure Functions. The `event` parameter carries the incoming data, and the function's logic transforms it according to the defined requirements. Error handling returns a 400 response for missing input and a 500 response for unexpected processing errors, so the function fails gracefully instead of crashing.

Event-Driven Architectures and Stream Processing

Event-driven architectures (EDAs) and serverless stream processing are highly complementary paradigms. EDAs enable systems to react in real-time to events, while serverless stream processing provides the scalable infrastructure to handle the resulting data streams. This combination facilitates the creation of responsive, agile, and cost-effective applications capable of processing large volumes of data as they occur.

Event Triggers in Stream Processing

Event triggers are the catalysts that initiate stream processing tasks within an event-driven architecture. These triggers can originate from various sources, signaling the need for immediate data processing.

- Data Ingestion: The arrival of new data in a data lake or data warehouse can trigger a series of processing steps. For example, the upload of a new customer order to an object storage service (like Amazon S3 or Azure Blob Storage) can trigger a serverless function to parse the order details, validate the information, and enrich the data with information from other services.

- Database Updates: Changes in database records can serve as event triggers. A modification to a customer’s address in a relational database, for instance, can initiate a stream processing job to update the customer’s location data in a real-time dashboard or to trigger a notification to the customer.

- Application Events: Events within an application’s internal logic can trigger processing tasks. A user’s click on a button, a successful login attempt, or a failed transaction can initiate data enrichment, analysis, or alert generation processes. For instance, a successful payment confirmation within an e-commerce platform can trigger a stream processing task to update inventory levels, generate an order confirmation email, and update the customer’s loyalty points.

- IoT Device Signals: Data streams from Internet of Things (IoT) devices, such as sensors, can be the source of event triggers. The detection of a specific temperature threshold from a sensor can trigger a stream processing job to log the event, generate an alert, or adjust climate control settings.

- API Calls: The invocation of an API endpoint can be a trigger. An API call to upload a file might trigger a stream processing job to perform media transcoding, extract metadata, or analyze the content.
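The database-update trigger can be sketched with change data capture. The code below assumes AWS Lambda consuming a DynamoDB stream configured with the `NEW_AND_OLD_IMAGES` view type, where each record carries an `eventName` (`INSERT`, `MODIFY`, or `REMOVE`) and attribute values in DynamoDB's typed JSON form (for example `{"S": "..."}` for strings). The `customer_id` and `address` attributes and the `notify` callback are hypothetical.

```python
from typing import Any, Callable, Dict, List


def address_changes(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Extract customer address changes from a DynamoDB Streams batch."""
    changes = []
    for record in event.get("Records", []):
        if record.get("eventName") != "MODIFY":
            continue  # only updates to existing items are relevant here
        images = record["dynamodb"]
        old = images.get("OldImage", {}).get("address", {}).get("S")
        new = images.get("NewImage", {}).get("address", {}).get("S")
        if old != new:
            changes.append({
                "customer_id": images["NewImage"]["customer_id"]["S"],
                "old_address": old,
                "new_address": new,
            })
    return changes


def handler(event, context, notify: Callable[[Dict[str, Any]], None] = print):
    # Each detected change could update a dashboard or notify the customer.
    for change in address_changes(event):
        notify(change)
```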

Message Queues and Event Buses in Event Flows

Message queues and event buses are critical components for managing event flows within event-driven architectures. They decouple event producers from event consumers, enabling asynchronous communication and improving system resilience.

- Message Queues: Message queues provide a buffer for events, allowing producers to publish events without waiting for consumers to process them immediately. This decoupling is especially important for stream processing, as it allows the system to handle bursts of events without overwhelming the processing infrastructure. Examples of message queue services include Amazon SQS, Azure Service Bus, and Google Cloud Pub/Sub.

The use of message queues provides several advantages:

- Scalability: Queues enable scaling of processing capacity independently of the event production rate.

- Reliability: Queues provide a mechanism for retrying failed processing attempts.

- Decoupling: Queues allow producers and consumers to operate independently, improving system maintainability.

- Event Buses: Event buses act as a central hub for routing events to multiple consumers. They allow different services and applications to subscribe to specific event types, enabling a publish-subscribe (pub/sub) model. Examples of event bus services include Amazon EventBridge, Azure Event Grid, and Google Cloud Pub/Sub (which can also function as an event bus). The benefits of using event buses include:

- Event Filtering: Event buses allow consumers to subscribe to only the events they are interested in, filtering out irrelevant data.

- Simplified Integration: Event buses streamline the integration of multiple services and applications.

- Increased Flexibility: Event buses facilitate the addition of new event consumers without modifying the event producers.
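The retry mechanism mentioned under message-queue reliability can be sketched for AWS Lambda with an Amazon SQS trigger. When the event source mapping has `ReportBatchItemFailures` enabled, the function can return the `messageId` values of failed messages in a `batchItemFailures` list; only those messages become visible again for retry, instead of the whole batch. The `process_message` function and its validation rule are hypothetical.

```python
import json
from typing import Any, Dict, List


def process_message(body: Dict[str, Any]) -> None:
    """Hypothetical business logic; raises to signal a processing failure."""
    if "order_id" not in body:
        raise ValueError("message has no order_id")


def handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    failures = []
    for record in event.get("Records", []):
        try:
            process_message(json.loads(record["body"]))
        except Exception:
            # Report only this message; messages not listed are treated as processed.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Messages that keep failing are typically moved to a dead-letter queue after a configured number of receives, so one malformed message cannot block the queue indefinitely.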

In essence, the combination of message queues and event buses forms a robust infrastructure for handling event flows in serverless stream processing. This architecture allows for high scalability, reliability, and flexibility, making it suitable for a wide range of real-time data processing applications.
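The publish-subscribe routing and event filtering that event buses provide can be modeled in a few lines. The in-memory `EventBus` class below is a hypothetical illustration of the pattern, not the API of Amazon EventBridge, Azure Event Grid, or Google Cloud Pub/Sub: consumers register interest in an event's `type` field, and the bus delivers each published event only to matching subscribers, so new consumers can be added without touching the producer.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Minimal in-memory publish-subscribe bus with type-based filtering."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Filtering: the handler only receives events of this type.
        self._subscribers[event_type].append(handler)

    def publish(self, event: Dict[str, Any]) -> int:
        """Deliver the event to every matching subscriber; return delivery count."""
        handlers = self._subscribers.get(event.get("type"), [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    bus.subscribe("payment.succeeded", lambda e: print("update inventory", e["order_id"]))
    bus.subscribe("payment.succeeded", lambda e: print("send confirmation", e["order_id"]))
    bus.publish({"type": "payment.succeeded", "order_id": "o-1"})  # 2 deliveries
    bus.publish({"type": "login.failed", "user": "u-9"})  # no subscribers
```

A managed event bus adds what this sketch omits, such as durable delivery, retries, and richer content-based filter patterns.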

Real-time Analytics and Monitoring

Serverless stream processing significantly enhances real-time analytics and monitoring capabilities by enabling immediate insights into streaming data. This real-time analysis allows for rapid detection of anomalies, proactive response to events, and data-driven decision-making, ultimately leading to improved operational efficiency and business agility. The ability to process and analyze data as it arrives is a key advantage of serverless stream processing, differentiating it from batch processing approaches.

Enabling Real-time Analytics

Serverless stream processing enables real-time analytics by providing a platform for continuous data ingestion, processing, and analysis. Data streams, such as clickstream data from websites, sensor readings from IoT devices, or financial transaction records, are ingested and processed in real-time. Serverless functions are triggered by the arrival of new data, enabling the execution of analytical logic. This can include tasks such as calculating moving averages, detecting patterns, or identifying anomalies.

The results of these analyses are then made available almost instantly, providing actionable insights for various applications, including fraud detection, predictive maintenance, and personalized recommendations.
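As an illustration of the analytical logic a function might run on each new data point, the sketch below maintains a sliding window of recent values, reports their moving average, and flags a value as anomalous when it lies more than a chosen number of standard deviations from that average (a simple z-score rule). The window size, threshold, and warm-up length are hypothetical defaults. Because function instances can be recycled at any time, a real deployment would keep this window in an external store or use a stateful stream processor; here it lives in memory for clarity.

```python
import math
from collections import deque
from typing import Deque, Optional, Tuple


class SlidingWindowDetector:
    """Moving average over the last `size` values plus a z-score anomaly check."""

    def __init__(self, size: int = 20, threshold: float = 3.0, warmup: int = 5) -> None:
        self.window: Deque[float] = deque(maxlen=size)
        self.threshold = threshold
        self.warmup = warmup

    def observe(self, value: float) -> Tuple[Optional[float], bool]:
        """Return (moving average before this value, is_anomaly)."""
        if len(self.window) < self.warmup:
            self.window.append(value)
            return None, False  # not enough history to judge yet
        mean = sum(self.window) / len(self.window)
        variance = sum((x - mean) ** 2 for x in self.window) / len(self.window)
        std = math.sqrt(variance)
        if std == 0:
            # Flat history: any change at all counts as a deviation.
            is_anomaly = value != mean
        else:
            is_anomaly = abs(value - mean) / std > self.threshold
        self.window.append(value)
        return mean, is_anomaly
```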

Common Metrics for Monitoring

Monitoring is crucial for ensuring the health and performance of a stream processing pipeline. Several metrics are commonly monitored to assess the pipeline’s effectiveness and identify potential issues. These metrics provide valuable insights into the data flow, processing efficiency, and system resource utilization.

| Metric | Description | Importance | Monitoring Tools |
|---|---|---|---|
| Data Volume | The amount of data processed per unit of time (e.g., events per second, bytes per second). | Indicates the load on the pipeline and helps to identify potential bottlenecks. High data volume might require scaling the processing resources. | CloudWatch, Prometheus, Grafana |
| Processing Latency | The time taken to process an event from ingestion to output. | Measures the responsiveness of the pipeline. High latency can indicate performance issues or resource constraints. | CloudWatch, Datadog, New Relic |
| Error Rate | The percentage of events that fail to be processed successfully. | Identifies potential issues with data quality, processing logic, or external dependencies. High error rates require investigation and remediation. | CloudWatch, Sentry, Rollbar |
| Resource Utilization | The usage of system resources, such as CPU, memory, and network bandwidth. | Provides insights into the pipeline's resource consumption and helps to optimize resource allocation. High resource utilization can indicate bottlenecks. | CloudWatch, Prometheus, Kubernetes dashboard |
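Several of these metrics can be computed by the processing function itself. The sketch below summarizes one processed batch (throughput, 95th-percentile latency, error rate) and renders it as a CloudWatch Embedded Metric Format (EMF) JSON line; when a Lambda function prints such a line, CloudWatch Logs can extract the values as metrics without separate API calls. The namespace, dimension, and metric names are hypothetical, and other monitoring tools would need their own output format.

```python
import json
import math
import time
from typing import Dict, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile; values must be non-empty."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def batch_metrics(latencies_ms: List[float], failures: int, window_seconds: float) -> Dict[str, float]:
    """latencies_ms holds per-event latency for successfully processed events."""
    total = len(latencies_ms) + failures
    return {
        "EventsPerSecond": total / window_seconds,
        "LatencyP95": percentile(latencies_ms, 95) if latencies_ms else 0.0,
        "ErrorRate": 100.0 * failures / total if total else 0.0,
    }


def to_emf(metrics: Dict[str, float], pipeline: str) -> str:
    """Render metrics as a CloudWatch Embedded Metric Format JSON line."""
    units = {"EventsPerSecond": "Count/Second", "LatencyP95": "Milliseconds", "ErrorRate": "Percent"}
    document = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),
            "CloudWatchMetrics": [{
                "Namespace": "StreamPipeline",  # hypothetical namespace
                "Dimensions": [["Pipeline"]],
                "Metrics": [{"Name": name, "Unit": units[name]} for name in metrics],
            }],
        },
        "Pipeline": pipeline,
        **metrics,
    }
    return json.dumps(document)
```

Inside a Lambda handler, `print(to_emf(batch_metrics(latencies, failures, 60.0), "orders"))` would be enough to publish the values, because standard output is sent to CloudWatch Logs.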

Visualizing Real-time Data

Effective visualization is critical for extracting meaningful insights from real-time data. Visualization tools allow users to monitor key metrics, identify trends, and detect anomalies quickly. Various techniques and tools are used to present data in a clear and concise manner. One common approach involves creating real-time dashboards that display key performance indicators (KPIs) and other relevant metrics. These dashboards often include:

- Line charts: Displaying trends over time, such as the number of events processed per minute or the average processing latency.

- Bar charts: Comparing different categories of data, such as the number of events originating from different sources.

- Gauge charts: Displaying the current value of a metric against a predefined threshold, such as CPU utilization or error rate.

- Heatmaps: Visualizing data density, such as the distribution of events across different time periods.

The choice of visualization tool depends on the specific requirements of the application and the available resources. Popular options include:

- Cloud-based dashboards: Cloud providers like AWS (CloudWatch dashboards), Azure (Azure Monitor dashboards), and Google Cloud (Cloud Monitoring) offer built-in dashboarding capabilities.

- Open-source tools: Tools like Grafana and Kibana provide powerful visualization capabilities and integrate well with various data sources.

- Custom-built dashboards: For specific needs, developers can build custom dashboards using JavaScript libraries like D3.js or Chart.js.

For example, consider a real-time fraud detection system built on serverless stream processing. A real-time dashboard could display the number of suspicious transactions detected per minute, the average transaction value, and the geographical distribution of fraudulent activity. This visualization enables security analysts to identify and respond to fraudulent activity in real-time, mitigating financial losses.
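
The panels in this fraud example are usually fed by per-minute aggregates of the scored stream. Below is a minimal sketch, assuming each transaction has already been scored upstream and carries hypothetical `timestamp_s`, `amount`, `country`, and `suspicious` fields. It groups transactions into one-minute tumbling windows and produces the three values the example mentions: suspicious count, average transaction value, and a per-country breakdown of suspicious activity.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ScoredTransaction:
    timestamp_s: int   # epoch seconds
    amount: float
    country: str
    suspicious: bool   # hypothetical flag set by an upstream fraud-scoring step


def per_minute_panels(transactions):
    """Aggregate transactions into one-minute tumbling windows for dashboard panels."""
    windows = defaultdict(lambda: {"count": 0, "total": 0.0, "suspicious": 0,
                                   "by_country": defaultdict(int)})
    for tx in transactions:
        window_start = tx.timestamp_s - tx.timestamp_s % 60  # start of the minute
        w = windows[window_start]
        w["count"] += 1
        w["total"] += tx.amount
        if tx.suspicious:
            w["suspicious"] += 1
            w["by_country"][tx.country] += 1
    # One row per minute: suspicious count, average value, geographic breakdown
    return {
        start: {
            "suspicious_count": w["suspicious"],
            "avg_amount": w["total"] / w["count"],
            "suspicious_by_country": dict(w["by_country"]),
        }
        for start, w in sorted(windows.items())
    }


panels = per_minute_panels([
    ScoredTransaction(0, 100.0, "US", False),
    ScoredTransaction(30, 300.0, "US", True),
    ScoredTransaction(65, 50.0, "DE", True),
])
```

In practice, a charting tool such as Grafana or a CloudWatch dashboard would plot these rows. The windowing logic is the part that determines what each point on the line chart means.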

Security Considerations

Serverless stream processing, while offering numerous advantages, introduces unique security challenges. The distributed nature of these systems, coupled with the reliance on third-party services, necessitates a robust security posture. This section delves into the critical security aspects, best practices, and auditing strategies required to protect data and ensure the integrity of serverless stream processing pipelines.

Data Encryption

Data encryption is paramount in safeguarding sensitive information throughout the serverless stream processing lifecycle. Encryption is applied both in transit and at rest.

- Encryption in Transit: Data transmitted between components of the pipeline, such as data producers, stream processing services, and data consumers, should be encrypted with TLS (the successor to SSL). This prevents eavesdropping and man-in-the-middle attacks. For example, when a data producer sends data to a service like Amazon Kinesis, the connection should be secured with TLS.

- Encryption at Rest: Data stored within the stream processing system, including records retained by stream services and message brokers and data written to data lakes, must be encrypted. This protects against unauthorized access if the underlying storage is compromised.

Cloud providers offer key management services, such as AWS KMS (Key Management Service), to manage encryption keys. Data at rest is normally encrypted with a symmetric algorithm such as AES-256, while asymmetric algorithms are more often used for key exchange and digital signatures. The right choice depends on the specific requirements of the application. For example, data ingested by Apache Kafka, which might be used in a serverless stream processing setup, is typically encrypted at rest at the disk or volume level, for instance with AES-256.

Access Control

Implementing robust access control mechanisms is crucial to prevent unauthorized access to resources and data within the serverless stream processing pipeline. This involves defining roles, permissions, and policies that govern user and service interactions.

- Identity and Access Management (IAM): Use the IAM services provided by cloud providers to manage identities and permissions. Apply the principle of least privilege: grant users and services only the permissions they need to perform their tasks. For example, an AWS Lambda function that processes data from an Amazon Kinesis stream should only have permissions to read from that stream and write to its output destinations.

- Role-Based Access Control (RBAC): Implement RBAC to define roles and assign permissions to those roles. This simplifies access management and ensures consistent security policies. Define roles such as “data producer,” “data processor,” and “data consumer,” and assign appropriate permissions to each role.

- Network Segmentation: Employ network segmentation to isolate different components of the stream processing pipeline. This limits the impact of a security breach by restricting the lateral movement of attackers. Use virtual private clouds (VPCs) and security groups to control network traffic between services.
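
To make the least-privilege guidance concrete, the sketch below builds an AWS IAM policy document for the Lambda-reads-Kinesis example. It is a sketch under stated assumptions. All stream, bucket, function, region, and account names are hypothetical placeholders. The Kinesis actions listed are the read-side actions a Lambda event source mapping typically needs, but they should be checked against the AWS-managed `AWSLambdaKinesisExecutionRole` policy for your setup (enhanced fan-out consumers need additional permissions). The log group is assumed to already exist.

```python
import json


def lambda_stream_policy(region, account_id, stream_name, bucket_name, function_name):
    """Least-privilege policy for a function that reads one Kinesis stream and
    writes result objects under one S3 prefix. All names are placeholders."""
    stream_arn = f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"
    log_group_arn = f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}:*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read-only access to the single source stream
                "Sid": "ReadSourceStream",
                "Effect": "Allow",
                "Action": [
                    "kinesis:DescribeStream",
                    "kinesis:DescribeStreamSummary",
                    "kinesis:GetRecords",
                    "kinesis:GetShardIterator",
                    "kinesis:ListShards",
                ],
                "Resource": stream_arn,
            },
            {   # Write-only access to the output prefix; no read or delete
                "Sid": "WriteResults",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/results/*",
            },
            {   # Emit logs to the function's own (pre-created) log group
                "Sid": "WriteLogs",
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": log_group_arn,
            },
        ],
    }


if __name__ == "__main__":
    policy = lambda_stream_policy("us-east-1", "123456789012", "transactions",
                                  "fraud-results", "score-transactions")
    print(json.dumps(policy, indent=2))
```

Every statement names a specific resource rather than `*`, and no statement grants write access to the source stream. That is the practical meaning of "only the necessary permissions" here.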

Identity Management

Proper identity management is essential to authenticate and authorize the users and services that access the serverless stream processing pipeline. This involves securely managing identities, authentication mechanisms, and authorization processes.

- Service Accounts: Use service accounts (workload identities) for automated tasks and service-to-service communication, and give them only the privileges they need. On AWS, for example, a Lambda function runs under an IAM execution role with the appropriate permissions.

- Authentication: Implement strong authentication mechanisms, such as multi-factor authentication (MFA), to verify user identities. Use cloud provider-managed authentication services such as Amazon Cognito or Google Cloud Identity Platform.

- Authorization: Enforce authorization policies that control access to resources based on user identities and roles, so that only authorized users can perform specific actions.
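
An authorization check of this kind can be reduced to a deny-by-default lookup from roles to permitted actions. The sketch below assumes a caller's roles have already been established by an authentication service. The role names follow the “data producer,” “data processor,” and “data consumer” roles from the access control discussion, while the `Action` values and the mapping itself are hypothetical. In a real deployment, this logic normally lives in the provider's IAM policies rather than in application code.

```python
from enum import Enum


class Action(Enum):
    WRITE_STREAM = "write_stream"
    READ_STREAM = "read_stream"
    WRITE_OUTPUT = "write_output"
    READ_OUTPUT = "read_output"


# Hypothetical role-to-permission mapping for the three pipeline roles
ROLE_PERMISSIONS = {
    "data-producer": frozenset({Action.WRITE_STREAM}),
    "data-processor": frozenset({Action.READ_STREAM, Action.WRITE_OUTPUT}),
    "data-consumer": frozenset({Action.READ_OUTPUT}),
}


def is_authorized(caller_roles, action):
    """Deny by default: allow only if at least one of the caller's roles grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, frozenset()) for role in caller_roles)
```

Unknown roles and callers with no roles fall through to "deny", so a misconfigured identity fails closed rather than open.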

Best Practices for Securing a Serverless Stream Processing Pipeline

Adopting a set of best practices is vital for building a secure serverless stream processing pipeline. These practices span design, implementation, monitoring, and incident response.

- Principle of Least Privilege: Grant users and services only the minimum necessary permissions. This reduces the attack surface and limits the impact of a security breach.

- Data Encryption: Encrypt all sensitive data in transit and at rest. Use strong encryption algorithms and manage encryption keys securely.

- Regular Security Audits: Conduct regular security audits, through automated tools or manual reviews, to identify vulnerabilities and ensure compliance with security policies.

- Vulnerability Scanning: Regularly scan serverless functions and their dependencies for known vulnerabilities so that risks can be addressed before they are exploited.

- Secure Configuration: Configure all serverless services securely, following the security best practices recommended by the cloud provider. This includes network settings, access control policies, and logging.

- Input Validation and Sanitization: Validate and sanitize all inputs, including event payloads from producers, to prevent injection attacks such as SQL injection or cross-site scripting (XSS). This protects both the data and the processing pipeline.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to detect suspicious activity and potential security breaches. Monitor key metrics such as error rates, access attempts, and resource usage.

- Incident Response Plan: Develop and maintain an incident response plan that defines roles, responsibilities, and procedures for responding to security breaches.

- Automated Security Testing: Integrate security testing into the CI/CD pipeline to identify vulnerabilities early in the development process.
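
Input validation in a stream processor usually means rejecting malformed events before any business logic runs. The following sketch assumes a hypothetical transaction event with `card_token`, `amount`, and `currency` fields. The token format, currency list, and amount limit are illustrative, not a standard. The function parses the raw payload, checks each field's type and range, and returns only whitelisted fields so that unexpected keys never reach downstream queries or templates.

```python
import json
import re

CARD_TOKEN = re.compile(r"tok_[A-Za-z0-9]{8,32}")   # hypothetical token format
ALLOWED_CURRENCIES = frozenset({"USD", "EUR", "GBP"})
MAX_AMOUNT = 1_000_000


class ValidationError(ValueError):
    """Raised when an incoming event fails validation."""


def parse_transaction(raw):
    """Parse and validate a raw event payload, returning only whitelisted fields."""
    try:
        payload = json.loads(raw)
    except (json.JSONDecodeError, UnicodeDecodeError) as exc:
        raise ValidationError(f"malformed payload: {exc}") from exc
    if not isinstance(payload, dict):
        raise ValidationError("payload must be a JSON object")

    token = payload.get("card_token")
    if not isinstance(token, str) or not CARD_TOKEN.fullmatch(token):
        raise ValidationError("invalid card_token")

    amount = payload.get("amount")
    # bool is a subclass of int, so reject it explicitly; NaN and infinity fail the range check
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or not 0 < amount <= MAX_AMOUNT:
        raise ValidationError("amount missing or out of range")

    currency = payload.get("currency")
    if not isinstance(currency, str) or currency not in ALLOWED_CURRENCIES:
        raise ValidationError("unsupported currency")

    # Unknown keys are dropped so they never reach downstream queries or templates
    return {"card_token": token, "amount": float(amount), "currency": currency}
```

Events that raise `ValidationError` would typically be routed to a dead-letter queue for inspection rather than silently dropped.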

Security Auditing and Logging

Security auditing and logging are critical components of a secure serverless stream processing pipeline. They provide visibility into system activity, enabling the detection of malicious behavior and helping to ensure data integrity.

- Comprehensive Logging: Enable logging for all components of the stream processing pipeline. Log all relevant events, including user activity, service access, and data modifications. Use centralized logging solutions such as Amazon CloudWatch Logs, Google Cloud Logging, or Azure Monitor.

- Log Analysis: Analyze logs regularly to identify security threats, performance issues, and compliance violations. Use log analysis tools to search for patterns, anomalies, and suspicious activity.

- Security Information and Event Management (SIEM): Integrate logs with a SIEM system to correlate events from different sources and detect advanced threats. SIEM systems can provide real-time threat detection, incident response, and compliance reporting.

- Auditing Access and Configuration Changes: Audit all access to the system and every configuration change, recording who made each change, when, and what was changed. This information is essential for investigating security incidents and ensuring compliance.

- Data Integrity Monitoring: Implement mechanisms to monitor data integrity and detect unauthorized modifications, such as checksums, message authentication codes, or digital signatures.
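
One way to detect unauthorized modification of records is to attach a keyed hash (HMAC-SHA256) when a record is produced and verify it downstream. The sketch below uses only the Python standard library. It assumes producer and consumer share a secret key, which in practice would come from a secrets manager or KMS rather than source code. Records are serialized canonically (sorted keys, fixed separators) so that the same logical record always yields the same signature.

```python
import hashlib
import hmac
import json


def _canonical(record):
    """Deterministic byte encoding so equal records always hash the same way."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_record(record, key):
    """Producer side: compute an HMAC-SHA256 tag for the record."""
    return hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()


def verify_record(record, signature, key):
    """Consumer side: recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign_record(record, key), signature)
```

An HMAC proves integrity only to parties holding the key. When third parties must verify records without being able to forge them, asymmetric digital signatures are the appropriate tool instead.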

Use Cases and Applications

Serverless stream processing architectures find application across a diverse range of industries, enabling real-time data analysis and decision-making. Their scalability, cost-effectiveness, and ease of deployment make them well suited to the ever-increasing volume and velocity of data generated by modern applications, helping businesses gain insights, automate processes, and improve operational efficiency. They excel in scenarios where real-time insights and immediate actions are crucial.

Fraud Detection

Fraud detection is a critical application area for serverless stream processing. The ability to analyze data in real-time allows for the immediate identification of suspicious activities, mitigating financial losses and protecting users. This is achieved by continuously monitoring incoming transactions and applying pre-defined rules or machine learning models.

- Real-time Transaction Monitoring: Serverless functions can be triggered by transaction events (one at a time or, with stream sources such as Kinesis, in small batches) and instantly analyze transaction details such as amount, location, and time. This analysis involves checking against blocklists, unusual spending patterns, and other risk indicators.

- Anomaly Detection: Machine learning models, deployed as serverless functions, can identify anomalies in transaction data that deviate from established patterns. These models can detect unusual spending habits, such as large purchases in unfamiliar locations or rapid sequences of transactions.

- Alerting and Response: Upon detection of potentially fraudulent activity, serverless functions can trigger alerts to fraud analysts or automatically initiate actions such as blocking transactions or contacting the customer.

- Example: A credit card company could use a serverless stream processing architecture to analyze transactions in real time. When a transaction is flagged as potentially fraudulent, the system immediately alerts the customer via SMS and temporarily blocks the card, preventing further unauthorized use and limiting fraud losses.
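
The rule-based side of real-time transaction monitoring can be sketched as a Lambda-style handler for a Kinesis trigger. In that integration, each record's payload arrives base64-encoded under `record["kinesis"]["data"]`. The transaction fields (`tx_id`, `card_token`, `merchant_id`, `amount`), the thresholds, and the blocklist are all hypothetical. The velocity rule here only counts transactions within the current batch, because a production velocity check needs state kept across invocations, for example in a key-value store.

```python
import base64
import json
from collections import defaultdict

HIGH_VALUE_THRESHOLD = 5_000.00      # hypothetical rule parameters
MAX_TX_PER_CARD_PER_BATCH = 3
BLOCKLISTED_MERCHANTS = {"m-0001"}


def score_transaction(tx, seen_counts):
    """Apply simple fraud rules and return the list of rules the transaction triggered."""
    reasons = []
    if tx["amount"] >= HIGH_VALUE_THRESHOLD:
        reasons.append("high_value")
    if tx["merchant_id"] in BLOCKLISTED_MERCHANTS:
        reasons.append("blocklisted_merchant")
    # Velocity: too many transactions for one card within this batch
    seen_counts[tx["card_token"]] += 1
    if seen_counts[tx["card_token"]] > MAX_TX_PER_CARD_PER_BATCH:
        reasons.append("velocity")
    return reasons


def handler(event, context=None):
    """Entry point for a Kinesis-triggered function; returns flagged transactions."""
    seen_counts = defaultdict(int)
    flagged = []
    for record in event["Records"]:
        tx = json.loads(base64.b64decode(record["kinesis"]["data"]))
        reasons = score_transaction(tx, seen_counts)
        if reasons:
            flagged.append({"tx_id": tx["tx_id"], "reasons": reasons})
    # A real pipeline would publish flagged items to an alerting topic or queue here
    return {"flagged": flagged}
```

Returning the triggered rule names alongside each flagged transaction gives analysts a reason for every alert. A machine learning model could later replace or supplement `score_transaction` without changing the handler's shape.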

Real-time Personalization

Real-time personalization leverages stream processing to tailor user experiences based on their real-time behavior and preferences. This involves analyzing user interactions with a website or application to deliver relevant content, recommendations, and offers.

- Behavioral Tracking: Serverless functions track user actions, such as clicks, page views, and product searches, capturing this data in real-time. This data is then fed into stream processing pipelines.

- Profile Enrichment: User profiles are enriched with real-time behavioral data, providing a dynamic view of user preferences and interests. This allows for a more granular understanding of individual users.

- Content Recommendation: Based on user behavior and profile data, serverless functions generate personalized content recommendations. These recommendations can be displayed on the website or within the application in real-time.

- A/B Testing and Optimization: Serverless stream processing enables real-time A/B testing, allowing businesses to optimize content and offers based on user engagement. This allows for iterative improvements based on user interactions.

- Example: An e-commerce website uses a serverless stream processing architecture to provide personalized product recommendations. As a user browses products, the system analyzes their browsing history, recently viewed items, and items in their cart to suggest relevant products in real time. This can increase click-through and conversion rates.

IoT Data Processing

The Internet of Things (IoT) generates vast amounts of data from connected devices. Serverless stream processing provides the infrastructure to ingest, process, and analyze this data in real-time, enabling a wide range of IoT applications.

- Data Ingestion: Serverless functions are used to ingest data from IoT devices, handling the high volume and velocity of incoming data streams.

- Data Transformation: Incoming data is transformed and formatted to ensure compatibility with downstream analytics and storage systems. This can include data cleaning, aggregation, and filtering.

- Real-time Analytics: Serverless functions perform real-time analytics on the data, identifying trends, anomalies, and patterns. This can include calculating device health metrics, detecting equipment failures, and monitoring environmental conditions.

- Alerting and Control: Based on real-time analysis, serverless functions trigger alerts or control actions. For example, they can send notifications when a sensor detects a critical event or automatically adjust equipment settings.

- Example: A smart factory uses a serverless stream processing architecture to monitor the performance of industrial machinery. Sensors on the machines stream data about temperature, pressure, and vibration. The system analyzes this data in real-time, identifying potential equipment failures and alerting maintenance personnel before a breakdown occurs. This minimizes downtime and reduces maintenance costs.
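
The cleaning, real-time analytics, and alerting steps for the smart factory example can be combined in one small per-device stateful operator. The sketch below assumes hypothetical sensor messages with `device_id` and `temperature_c` fields. It drops missing or implausible readings, keeps a rolling mean over the last N readings per device, and emits an alert when that mean exceeds a threshold. The window size, plausibility range, and threshold are illustrative values, not recommendations.

```python
from collections import defaultdict, deque


class DeviceMonitor:
    """Per-device rolling mean of temperature readings with a threshold alert."""

    def __init__(self, window=5, max_temp_c=80.0):
        self.window = window
        self.max_temp_c = max_temp_c
        self.readings = defaultdict(lambda: deque(maxlen=window))

    def process(self, reading):
        """Consume one sensor message; return an alert dict or None."""
        temp = reading.get("temperature_c")
        # Cleaning/filtering: drop missing, non-numeric, or physically implausible values
        if isinstance(temp, bool) or not isinstance(temp, (int, float)) or not -50.0 <= temp <= 200.0:
            return None
        buf = self.readings[reading["device_id"]]
        buf.append(float(temp))
        if len(buf) < self.window:
            return None  # not enough history for a stable average yet
        mean = sum(buf) / len(buf)
        if mean > self.max_temp_c:
            return {"device_id": reading["device_id"], "alert": "overheating", "rolling_mean_c": mean}
        return None


m = DeviceMonitor(window=3, max_temp_c=80.0)
results = [m.process({"device_id": "press-1", "temperature_c": t}) for t in (70, 85, None, 90, 60)]
```

Alerting on a rolling mean rather than on single readings trades a little detection delay for far fewer false alarms from sensor noise.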

Implementation Steps and Best Practices

Building a serverless stream processing system involves a series of well-defined steps, from initial design to ongoing monitoring and maintenance. Successful implementation hinges on adhering to best practices throughout the development lifecycle. This section outlines the crucial steps and provides a checklist to guide the process.

General Steps for Building a Serverless Stream Processing System

The creation of a serverless stream processing system is a multi-stage process. Each step builds upon the previous one, culminating in a robust and scalable solution.

- Define Requirements and Scope: This initial phase involves clearly outlining the business needs and the specific data streams to be processed. Define the desired outcomes, performance metrics, and scalability requirements. Consider data sources, processing logic, and target destinations. Understanding the data volume, velocity, and variety (the “3 Vs” of big data) is crucial. For instance, if processing financial transactions, the system needs to handle high velocity and volume with stringent accuracy requirements.

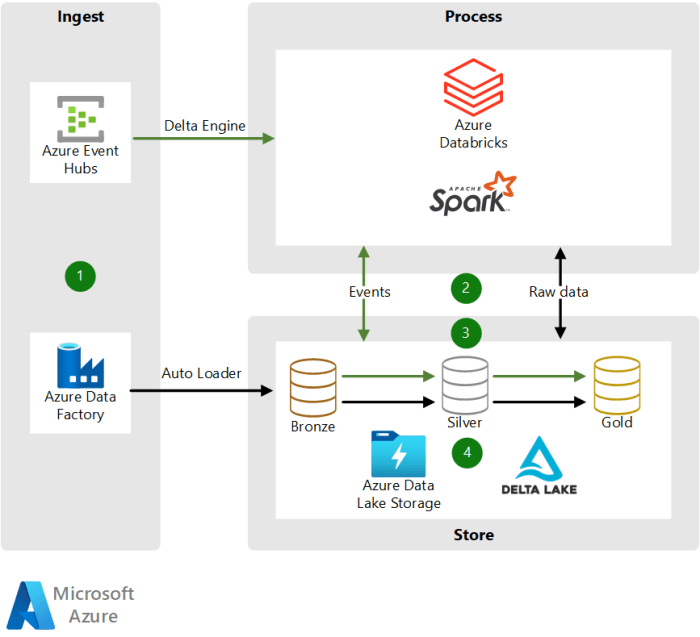

- Choose Technologies: Select appropriate serverless services for each component of the architecture. This includes services for data ingestion (e.g., Amazon Kinesis Data Streams, Azure Event Hubs, Google Cloud Pub/Sub), stream processing (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), data storage (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage), and real-time analytics (e.g., Amazon Managed Service for Apache Flink, formerly Amazon Kinesis Data Analytics; Azure Stream Analytics; Google Cloud Dataflow). Consider factors such as cost, performance, and integration capabilities.

- Design the Architecture: Design the system’s overall architecture, mapping out data flows and component interactions. This includes defining event triggers, data transformations, and error handling strategies. A well-designed architecture promotes modularity, maintainability, and scalability. Where the pipeline also includes containerized microservices, consider a service mesh for improved communication and control between them.

- Develop and Test: Implement the chosen technologies and develop the necessary code for data ingestion, processing, and output. Employ rigorous testing throughout the development cycle, including unit tests, integration tests, and performance tests. Testing should simulate real-world data volumes and processing scenarios.

- Deploy and Configure: Deploy the serverless components to the chosen cloud provider’s environment. Configure the services according to the defined requirements, including setting up security configurations, access controls, and resource limits. Implement infrastructure-as-code (IaC) practices for repeatable and automated deployments.

- Monitor and Alert: Implement comprehensive monitoring and alerting mechanisms to track the system’s performance and identify potential issues. Utilize cloud provider-specific monitoring tools (e.g., Amazon CloudWatch, Azure Monitor, Google Cloud Operations) to collect metrics and logs. Establish alerts for critical events, such as errors, latency spikes, and resource exhaustion.

- Optimize and Iterate: Continuously monitor the system’s performance and identify areas for optimization. Analyze performance metrics, such as processing latency, throughput, and cost. Iterate on the design and implementation based on the observed behavior and changing requirements. Regularly review and update the system to incorporate new features and address evolving business needs.
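
Turning the volume and velocity requirements from the first step into a concrete capacity number is a short calculation. This sketch assumes Amazon Kinesis Data Streams in provisioned mode, using the commonly documented per-shard quotas of 1 MB/s or 1,000 records/s for writes and 2 MB/s for reads shared by standard consumers. Verify these against current AWS documentation, and note that on-demand mode manages shards automatically. The 25% headroom factor is an arbitrary illustrative choice.

```python
import math

# Per-shard quotas for provisioned Kinesis Data Streams (verify against current docs)
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1_000
READ_MB_PER_SHARD = 2.0


def shards_needed(records_per_sec, avg_record_kb, consumers=1, headroom=1.25):
    """Estimate shard count from peak ingest rate, record size, and consumer count."""
    ingress_mb = records_per_sec * avg_record_kb / 1024  # KB/s -> MB/s
    by_write_bytes = ingress_mb / WRITE_MB_PER_SHARD
    by_write_records = records_per_sec / WRITE_RECORDS_PER_SHARD
    # Standard (shared-throughput) consumers split each shard's read capacity
    by_read = ingress_mb * consumers / READ_MB_PER_SHARD
    # The binding constraint decides the count; headroom absorbs bursts and skew
    return math.ceil(max(by_write_bytes, by_write_records, by_read) * headroom)
```

For example, 5,000 records per second of 2 KB each is about 9.8 MB/s of ingress, so byte throughput (not record count) is the binding constraint. With one consumer and 25% headroom, that yields 13 shards. Adding more standard consumers can make read capacity the limit instead.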

Best Practices Checklist for Serverless Stream Processing

Adhering to best practices is essential for building a resilient, scalable, and cost-effective serverless stream processing system. The following checklist provides guidance across various aspects of the system.

- Security:
  - Implement robust access control and authentication mechanisms.
  - Encrypt data at rest and in transit.
  - Regularly review and update security configurations.
  - Use the principle of least privilege for all components.

- Scalability and Performance:
  - Design the architecture to be horizontally scalable.
  - Optimize data processing logic for efficiency.
  - Use appropriate partitioning and sharding strategies.
  - Monitor performance metrics and proactively scale resources.

- Cost Optimization:
  - Choose cost-effective serverless services.
  - Optimize resource utilization to minimize costs.
  - Monitor and analyze spending patterns.
  - Leverage auto-scaling features to adjust resources based on demand.

- Reliability and Resilience:
  - Implement robust error handling and retry mechanisms.
  - Design for fault tolerance and high availability.
  - Regularly back up data and ensure data durability.
  - Implement circuit breakers to prevent cascading failures.

- Monitoring and Observability:
  - Implement comprehensive monitoring and alerting.
  - Collect detailed metrics and logs.
  - Establish dashboards for visualizing system performance.
  - Proactively monitor key performance indicators (KPIs).

- Deployment and Management:
  - Use infrastructure-as-code (IaC) for automated deployments.
  - Implement CI/CD pipelines for continuous integration and deployment.
  - Manage configurations centrally and securely.
  - Document the system architecture and configurations thoroughly.

- Data Handling:
  - Validate and sanitize data before processing.
  - Implement data transformation and enrichment logic.
  - Choose appropriate data storage formats.
  - Handle data errors and exceptions gracefully.
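
The circuit-breaker item in the reliability checklist can be illustrated with a small wrapper around calls to a downstream dependency. This is a minimal, single-threaded sketch of the general pattern, not a specific library's API. After a configurable number of consecutive failures, the circuit opens and calls fail fast. Once a reset timeout has elapsed, one trial call is allowed through (the half-open state). Success closes the circuit, and failure re-opens it. The clock is injectable so the behavior can be tested. In a serverless function, this state lives only within one execution environment.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a dependency while the circuit is open."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures, fails fast while open,
    and allows a trial call once `reset_timeout_s` has elapsed (half-open)."""

    def __init__(self, failure_threshold=3, reset_timeout_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout_s:
            raise CircuitOpenError("dependency marked unhealthy; failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open on reaching the threshold, or re-open if the half-open trial failed
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while a dependency is down keeps functions from tying up concurrency on calls that are likely to time out. That is how the pattern limits cascading failures.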

Importance of Monitoring and Alerting in Production Environments

Comprehensive monitoring and alerting are critical for the operational health and success of a serverless stream processing system. Proactive monitoring allows for the early detection of issues, minimizing downtime and ensuring data integrity.

Effective monitoring encompasses several key aspects:

- Real-time Monitoring: Implement dashboards that provide real-time visibility into system performance, including metrics such as data ingestion rates, processing latency, error rates, and resource utilization. These dashboards should offer a clear and concise overview of the system’s health. For example, a dashboard might display the number of events processed per second, the average processing time for each event, and the percentage of events that resulted in errors.

- Alerting: Set up alerts for critical events and performance anomalies. Alerts should be triggered based on predefined thresholds and rules. Implement different alert severities to prioritize responses. For instance, an alert could be triggered if the processing latency exceeds a certain threshold or if the error rate increases above an acceptable level.

- Logging: Implement comprehensive logging to capture detailed information about the system’s operations. Logs should include timestamps, event details, and error messages. Centralized logging allows for easy analysis and troubleshooting. Utilize log aggregation tools to streamline the process of searching and analyzing logs.

- Performance Metrics: Track key performance indicators (KPIs) to measure the system’s performance and identify areas for optimization. Common KPIs include throughput, latency, error rates, and resource utilization. Regularly review these metrics to identify trends and proactively address potential issues. For example, if the throughput decreases over time, it may indicate a need to scale resources or optimize the processing logic.

- Proactive Issue Resolution: Monitoring and alerting enable proactive issue resolution. When an alert is triggered, the operations team can investigate the issue and take corrective action before it impacts the system’s functionality. This might involve scaling resources, fixing code errors, or adjusting configurations.

- Examples and Real-World Cases: Consider a fraud detection system built on serverless stream processing. If the system experiences a sudden spike in transaction processing time or a significant increase in error rates, alerts immediately notify the operations team. The team can then investigate the root cause, which might be a denial-of-service attack or a bug in the processing logic. This proactive response keeps the fraud detection pipeline healthy and limits the window in which fraudulent transactions could go undetected.
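
Threshold-based alerting with severities can be expressed as a small rule evaluator. In this sketch, the rule fields, metric names, and thresholds are hypothetical. A rule fires only when its most recent N datapoints all breach the threshold, which is similar in spirit to the "datapoints to alarm" setting in managed alarm services. Fired alerts are ordered so that critical ones are routed before warnings.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str
    threshold: float
    severity: str             # "critical" or "warning" in this sketch
    datapoints_to_alarm: int  # consecutive breaching datapoints required (>= 1)


SEVERITY_ORDER = {"critical": 0, "warning": 1}


def evaluate(rules, series):
    """Return (severity, metric) for each rule whose latest N datapoints all exceed its threshold."""
    fired = []
    for rule in rules:
        recent = series.get(rule.metric, [])[-rule.datapoints_to_alarm:]
        # Requiring several consecutive breaches filters out one-off spikes
        if len(recent) == rule.datapoints_to_alarm and all(v > rule.threshold for v in recent):
            fired.append((rule.severity, rule.metric))
    # Most severe first, so critical alerts are routed before warnings
    return sorted(fired, key=lambda f: SEVERITY_ORDER.get(f[0], len(SEVERITY_ORDER)))


rules = [
    AlertRule("p99_latency_ms", 500, "warning", 3),
    AlertRule("error_rate", 0.05, "critical", 2),
]
```

In production, the same logic would normally be configured as provider-managed alarms (for example, in CloudWatch or Azure Monitor) rather than hand-written.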

Final Thoughts

In conclusion, a serverless stream processing architecture offers a powerful and flexible approach to real-time data processing. Its ability to scale automatically, its cost-effectiveness, and its operational efficiency make it an attractive option for businesses seeking to leverage the power of streaming data. By understanding the core components, benefits, and best practices, organizations can successfully implement these architectures to unlock valuable insights, drive innovation, and gain a competitive edge in today’s data-driven world.

As technology evolves, serverless stream processing will continue to play a critical role in enabling businesses to respond to events in real-time and make data-driven decisions.

FAQ Summary

What are the primary advantages of serverless stream processing over traditional methods?

Serverless stream processing offers automatic scaling, cost-effectiveness through pay-per-use pricing, and lower operational overhead than traditional, self-managed stream processing clusters. It eliminates the need for infrastructure management, allowing developers to focus on business logic.

How does auto-scaling work in a serverless stream processing architecture?

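Auto-scaling is a core feature: the cloud provider allocates and releases compute resources based on incoming data volume and processing demand, without manual intervention. For stream sources such as Amazon Kinesis, however, function concurrency is bounded by the number of shards (multiplied by the configured parallelization factor), so stream capacity still needs to be planned.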
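
For AWS Lambda consuming an Amazon Kinesis stream, concurrency is documented as one invocation per shard by default. The event source mapping's `ParallelizationFactor` can raise this to at most 10 per shard, while records that share a partition key are still processed in order. The sketch below turns that into two rough planning numbers. The throughput estimate is an idealized upper bound that assumes every concurrent invocation continuously processes full batches, and it ignores polling, retries, and cold starts.

```python
def max_concurrent_invocations(shard_count, parallelization_factor=1):
    """Upper bound on concurrent invocations for one Kinesis event source mapping."""
    if not 1 <= parallelization_factor <= 10:
        raise ValueError("parallelization factor must be between 1 and 10")
    return shard_count * parallelization_factor


def approx_max_records_per_sec(concurrency, batch_size, avg_batch_duration_s):
    """Idealized throughput if every concurrent invocation stays busy with full batches."""
    return concurrency * batch_size / avg_batch_duration_s
```

For example, 4 shards with a parallelization factor of 10 allow up to 40 concurrent invocations. Four invocations that each take 0.5 s per 100-record batch top out near 800 records per second.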

What are the common data sources that can be used with serverless stream processing?

Common data sources include IoT devices, social media feeds, application logs, clickstream data, financial transactions, and sensor data, all of which generate continuous streams of information.

How is data transformation handled in a serverless stream processing system?

Data transformation is performed using functions (e.g., AWS Lambda, Azure Functions) triggered by incoming data events. These functions can perform various operations, such as filtering, aggregation, enrichment, and format conversion, to prepare the data for analysis and storage.
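
The transformation operations named above (filtering, enrichment, aggregation, and format conversion) often fit in a single small function per batch. This sketch assumes hypothetical sale events with `type`, `store_id`, and `amount` fields, plus a hypothetical store-to-region lookup table. It keeps only sale events, enriches each with its region, totals amounts per region in integer cents, and emits CSV for storage.

```python
import csv
import io
from collections import defaultdict

REGION_BY_STORE = {"s1": "north", "s2": "south"}   # hypothetical enrichment lookup


def transform(events):
    """Filter, enrich, aggregate, and convert a batch of events to CSV."""
    totals = defaultdict(int)
    for e in events:
        if e.get("type") != "sale":                               # filtering
            continue
        region = REGION_BY_STORE.get(e.get("store_id"), "unknown")  # enrichment
        totals[region] += round(e["amount"] * 100)                # aggregation in cents
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")                 # format conversion
    writer.writerow(["region", "total_cents"])
    for region in sorted(totals):
        writer.writerow([region, totals[region]])
    return out.getvalue()


events = [
    {"type": "sale", "store_id": "s1", "amount": 10.5},
    {"type": "refund", "store_id": "s1", "amount": 3.0},
    {"type": "sale", "store_id": "s2", "amount": 2.25},
    {"type": "sale", "store_id": "s9", "amount": 1.0},
    {"type": "sale", "store_id": "s1", "amount": 0.5},
]
```

Summing in integer cents avoids accumulating floating-point rounding error across many events. Unknown stores are kept under an explicit `unknown` region rather than dropped, so missing reference data stays visible.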