AWS Lambda Power Tuning is a tool for optimizing serverless function performance on Amazon Web Services (AWS). It addresses the problem of allocating resources, specifically memory, to AWS Lambda functions so that operational cost is minimized and execution speed is maximized. By measuring function behavior under different power (memory) configurations, the tool lets developers tune their serverless applications for both performance and cost.

This approach follows from a core property of serverless computing: resource allocation directly affects both performance and cost. Understanding the tool, from its underlying mechanics to its practical applications, helps anyone who wants to get the most out of AWS Lambda.

Introduction to AWS Lambda Power Tuning

AWS Lambda Power Tuning is a tool designed to optimize the performance and cost-efficiency of serverless functions. It leverages a systematic approach to determine the optimal memory allocation for a Lambda function, balancing execution time and cost. This optimization is crucial in serverless computing, where resource allocation directly impacts both performance and expenditure.

Core Concept and Primary Objective

The fundamental principle behind AWS Lambda Power Tuning revolves around finding the “sweet spot” for a Lambda function’s memory configuration. The primary objective is to identify the memory setting that minimizes the overall cost of execution while maintaining acceptable performance levels. This involves testing the function across a range of memory settings and analyzing the resulting execution times and costs.

Problem Addressed in Serverless Computing

Serverless computing, while offering scalability and reduced operational overhead, introduces a new challenge: resource allocation optimization. Developers must define the resources (primarily memory) for their Lambda functions. Over-provisioning leads to increased costs, as users pay for unused capacity. Under-provisioning, on the other hand, results in longer execution times and potential timeouts, degrading performance and user experience. AWS Lambda Power Tuning addresses this problem by providing a data-driven method for determining the optimal memory configuration, thereby minimizing both cost and execution time.

This is particularly relevant given that Lambda function pricing is directly proportional to memory allocation and execution duration.

Benefits of Using the Tool

Using AWS Lambda Power Tuning offers significant advantages in cost optimization and performance enhancement.

- Cost Optimization: By identifying the memory setting that balances performance and cost, the tool helps users avoid over-provisioning, leading to significant cost savings. The tool’s analysis provides data to support informed decisions, ensuring users are only paying for the resources they actually need.

- Performance Enhancement: While cost optimization is a primary goal, the tool also aids in improving performance. Finding the optimal memory allocation can reduce execution times, leading to faster response times and improved user experience. A function configured with insufficient memory might experience longer execution times due to CPU throttling, whereas the tool aims to identify a setting that mitigates such throttling.

- Data-Driven Decision Making: The tool provides a structured, data-driven approach to optimization, eliminating guesswork and reliance on intuition. It analyzes the function’s behavior across different memory settings and provides clear, quantifiable results, making it easier for developers to make informed decisions.

- Automated Optimization: Power Tuning automates the process of testing and analyzing different memory configurations, saving developers time and effort. The tool can quickly test various settings and present the results in an easy-to-understand format.

For example, consider a Lambda function processing image thumbnails.

Cost = (Memory Allocated × Price per GB-second) × Execution Time

Here memory is expressed in GB and execution time in seconds.

If the tool determines that a 512MB memory allocation results in a faster execution time and a lower overall cost compared to 1024MB, developers can save money without sacrificing performance. The tool also provides insights into potential performance bottlenecks, allowing for further optimization of the function code itself.
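The formula above can be turned into a small helper. This is a minimal sketch, not part of the tool: the default price is an assumption (the commonly published x86 rate of $0.0000166667 per GB-second; check your region and architecture), the per-request charge and free tier are ignored, and the two durations are made-up numbers rather than measurements.

```python
# Assumed price per GB-second; the real rate varies by region and architecture.
PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb: int, duration_ms: float,
                    price_per_gb_second: float = PRICE_PER_GB_SECOND) -> float:
    """Cost of one invocation, excluding the per-request charge."""
    memory_gb = memory_mb / 1024       # Lambda treats 1024 MB as 1 GB
    duration_s = duration_ms / 1000
    return memory_gb * price_per_gb_second * duration_s


# Hypothetical case: extra memory does not make the function any faster.
cost_512 = invocation_cost(512, 400)
cost_1024 = invocation_cost(1024, 400)

print(f"512 MB:  ${cost_512:.10f}")
print(f"1024 MB: ${cost_1024:.10f}")
```

With identical durations the 1024MB setting costs twice as much, which is the over-provisioning case the tool is meant to expose.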

Function Optimization Challenges

Optimizing AWS Lambda function performance is a critical aspect of cloud application development, directly impacting cost, latency, and overall system efficiency. Several challenges arise during this optimization process, demanding careful consideration and strategic approaches. These challenges are multifaceted, encompassing resource allocation, code efficiency, and the complexities of the serverless environment itself.

Common Lambda Function Optimization Challenges

Lambda function optimization presents several hurdles. These challenges stem from the nature of serverless computing, where developers relinquish control over underlying infrastructure, requiring a different approach to performance tuning compared to traditional application deployments.

- Resource Allocation Complexity: Determining the optimal memory allocation for a Lambda function is a significant challenge. Over-provisioning leads to increased costs, while under-provisioning results in performance bottlenecks and increased execution times. The relationship between memory allocation and CPU power further complicates this.

- Cold Start Latency: Cold starts, where a Lambda function needs to initialize a new execution environment, can introduce significant latency. This is particularly problematic for functions handling time-sensitive requests. Factors like function size, dependencies, and VPC configuration influence cold start times.

- Code Optimization Difficulties: Efficient code is crucial, but identifying performance bottlenecks within the function’s code can be difficult. Profiling and tracing tools are essential, but analyzing the results and implementing effective optimizations requires expertise.

- Dependency Management: Managing dependencies and ensuring they are optimized for serverless environments is another hurdle. Large or poorly optimized dependencies can increase function size, cold start times, and execution duration.

- Monitoring and Debugging Limitations: Monitoring Lambda function performance and debugging issues can be more complex than in traditional environments. Limited access to the underlying infrastructure and the ephemeral nature of function instances require specialized tools and techniques.

Difficulties in Selecting Optimal Memory Allocation

Selecting the appropriate memory allocation is one of the most intricate aspects of Lambda function optimization. The optimal memory setting is not static; it varies based on the function’s code, the nature of the workload, and the desired performance characteristics.

- Memory and CPU Relationship: Lambda functions allocate CPU power proportionally to the memory configured. Increasing memory allocation also increases CPU resources, which can improve performance, but at a higher cost. The optimal balance between memory and CPU utilization is critical.

- Workload Variability: Different workloads require different resource allocations. CPU-intensive tasks benefit from more memory, while I/O-bound tasks might benefit less. Understanding the specific demands of the workload is crucial.

- Cost Considerations: Lambda function execution costs are directly related to memory allocation and execution time. Over-provisioning memory can lead to unnecessary costs, while under-provisioning can result in slower performance and potentially higher overall costs due to increased execution duration.

- Performance Testing Complexity: Determining the optimal memory setting requires rigorous performance testing. Testing across a range of memory configurations is essential to identify the point where performance plateaus or declines. This can be time-consuming and require sophisticated tooling.

- Dependency on External Services: The performance of external services that the Lambda function interacts with can influence the optimal memory allocation. If external services are slow, increasing the function’s memory might not significantly improve overall performance.
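To make the workload-variability point concrete, here is a toy model. It is not a description of how Lambda actually schedules CPU: it simply assumes that duration is a fixed I/O wait plus CPU work that shrinks in proportion to configured memory. The function names, the price, and all numbers are hypothetical.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # assumed rate; varies by region


def modelled_duration_ms(memory_mb, cpu_ms_at_128, io_ms):
    """Toy model: CPU time scales inversely with memory, I/O wait does not."""
    return io_ms + cpu_ms_at_128 * (128 / memory_mb)


def modelled_cost(memory_mb, cpu_ms_at_128, io_ms):
    """Cost of one invocation under the toy duration model."""
    duration_s = modelled_duration_ms(memory_mb, cpu_ms_at_128, io_ms) / 1000
    return (memory_mb / 1024) * duration_s * PRICE_PER_GB_SECOND


workloads = [("CPU-bound", 4000, 50), ("I/O-bound", 100, 800)]
for label, cpu_ms, io_ms in workloads:
    for memory_mb in (128, 512, 2048):
        duration = modelled_duration_ms(memory_mb, cpu_ms, io_ms)
        cost = modelled_cost(memory_mb, cpu_ms, io_ms)
        print(f"{label:9} {memory_mb:5} MB  {duration:7.1f} ms  ${cost:.10f}")
```

Under these assumptions the CPU-bound workload gets much faster at nearly the same cost, while the I/O-bound workload gets far more expensive with almost no speedup. Real functions sit somewhere in between, which is why measuring beats guessing.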

How the Tool Overcomes Function Optimization Challenges

The AWS Lambda Power Tuning tool addresses the aforementioned challenges by providing an automated and data-driven approach to function optimization. It simplifies the process of identifying the optimal memory configuration and offers insights into other performance-related aspects.

- Automated Memory Configuration Testing: The tool automatically tests the Lambda function across a range of memory configurations, from the minimum to the maximum allowed. This eliminates the need for manual testing across multiple memory settings.

- Performance Profiling and Analysis: The tool collects detailed performance metrics for each memory configuration, including execution time, cost, and cold start duration. It then analyzes these metrics to identify the optimal memory setting based on user-defined criteria (e.g., lowest cost, fastest execution time).

- Visualization of Performance Data: The tool provides visualizations of the performance data, making it easier to understand the relationship between memory allocation and performance metrics. This helps developers quickly identify performance bottlenecks and make informed decisions.

- Cost Optimization Insights: The tool calculates the cost of each memory configuration, allowing developers to optimize for cost efficiency. It highlights the memory settings that provide the best performance for the lowest cost.

- Cold Start Time Analysis: The tool also analyzes cold start times for different memory configurations. This is crucial for optimizing functions that are sensitive to latency.

- Support for Different Workloads: The tool can be used with a variety of workloads, including CPU-intensive, I/O-bound, and mixed workloads. It helps identify the optimal memory configuration regardless of the function’s specific characteristics.

Tool Functionality and Features

The AWS Lambda Power Tuning tool provides a systematic approach to optimizing Lambda function performance by identifying the most cost-effective memory configuration. This optimization process involves automatically testing a range of power settings and analyzing the resulting performance metrics to recommend the optimal configuration. This section details the key features and functionalities of the tool.

Automated Power Configuration Discovery

The primary function of the AWS Lambda Power Tuning tool is to automate the process of discovering the ideal power configuration for a Lambda function. This automation eliminates the manual trial-and-error process typically involved in Lambda function optimization, saving developers time and resources. The tool systematically evaluates the function’s performance across various memory settings, from the minimum to the maximum allowed by AWS Lambda.

This systematic approach ensures that every memory value in the configured range is measured under the same conditions, leading to a more accurate and reliable optimization result than ad hoc testing. The tool employs a process that includes:

- Power Range Definition: The tool begins by defining the range of memory settings to be tested. This range typically spans from the minimum memory allocation (currently 128 MB) to the maximum (currently 10240 MB), in increments determined by the tool’s configuration.

- Invocation and Measurement: For each memory setting within the defined range, the tool invokes the Lambda function multiple times. During these invocations, it collects performance metrics, including execution time, cost, and memory utilization. The number of invocations per setting can be configured to increase the statistical significance of the results.

- Data Analysis: The tool analyzes the collected performance metrics to identify trends and patterns. This analysis focuses on the trade-offs between execution time and cost for each memory setting. The tool’s algorithms identify the memory setting that provides the best balance between performance and cost efficiency.

- Recommendation: Based on the data analysis, the tool recommends the optimal power configuration for the Lambda function. This recommendation includes the specific memory setting and provides supporting data, such as the estimated cost savings and performance improvements.
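The four steps above can be sketched as a simple loop. This is an illustration of the idea, not the tool's actual implementation: `tune` and `fake_invoke` are hypothetical names, the price is an assumed rate, and the durations come from a lookup table standing in for real invocations.

```python
from statistics import mean
from typing import Callable, Dict, List

PRICE_PER_GB_SECOND = 0.0000166667  # assumed rate; varies by region


def tune(power_values: List[int], num: int,
         invoke: Callable[[int], float]) -> Dict[str, float]:
    """Measure each memory setting `num` times and return the cheapest one."""
    results = []
    for memory_mb in power_values:                  # power range definition
        durations = [invoke(memory_mb) for _ in range(num)]  # invocation
        avg_ms = mean(durations)                    # measurement
        cost = (memory_mb / 1024) * (avg_ms / 1000) * PRICE_PER_GB_SECOND
        results.append({"power": memory_mb, "duration": avg_ms, "cost": cost})
    # Data analysis and recommendation: here, simply the lowest cost.
    return min(results, key=lambda r: r["cost"])


# Stand-in for a real invocation: hypothetical durations in milliseconds.
FAKE_DURATIONS_MS = {128: 2400, 256: 1000, 512: 520, 1024: 500}


def fake_invoke(memory_mb: int) -> float:
    return FAKE_DURATIONS_MS[memory_mb]


best = tune([128, 256, 512, 1024], num=5, invoke=fake_invoke)
print(best)
```

In this made-up data set the cheapest setting is 256MB rather than the smallest one, because the 128MB run is slow enough to cost more overall.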

Output Formats and Data Presentation

The AWS Lambda Power Tuning tool provides its results in multiple output formats, enabling users to interpret the findings in various ways. The tool generates detailed reports and visualizations that facilitate understanding of the function’s performance across different power settings. This flexibility in output formats ensures that the tool’s results are accessible and easily understandable for a wide range of users.

The output formats include:

- JSON Output: The tool generates JSON (JavaScript Object Notation) output, which is a structured data format that is easily parsed and processed by other tools and systems. This format includes detailed performance metrics for each memory setting tested, such as the average execution time, the cost per invocation, and the total cost for the specified number of invocations. This format is suitable for programmatic analysis and integration with other applications.

- CSV Output: The tool also provides results in CSV (Comma-Separated Values) format. This format is a simple, tabular data format that is widely compatible with spreadsheet software and data analysis tools. The CSV output includes the same performance metrics as the JSON output but in a format that is easier to view and manipulate in spreadsheets. This allows for easy visualization of the data using tools like Microsoft Excel or Google Sheets.

- HTML Output: The tool generates HTML (HyperText Markup Language) output, which presents the results in a user-friendly, interactive format. The HTML output includes tables, charts, and graphs that visualize the performance data across different memory settings. The HTML output provides a clear and concise summary of the optimization results, making it easy to understand the trade-offs between execution time and cost.

The visualization often includes a scatter plot, where the X-axis represents memory allocation, and the Y-axis represents either cost or execution time. Each data point on the plot represents a specific memory setting and its associated performance metrics.

- Visualization within AWS Console (Optional): Integration with the AWS console allows users to view the results directly within the Lambda function configuration. This can include displaying the recommended power configuration and key performance metrics in the Lambda function’s dashboard. This streamlines the process of implementing the recommended configuration.
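If only JSON results are at hand, a spreadsheet-friendly CSV is easy to derive from them. The sketch below assumes a list of per-setting records. The field names `power`, `duration_ms`, and `cost` and the sample values are hypothetical, so adapt them to whatever your version of the tool actually emits.

```python
import csv
import io
import json

# Hypothetical per-setting results; real output may use different field names.
raw = """[
  {"power": 128, "duration_ms": 1500.0, "cost": 0.000003125},
  {"power": 512, "duration_ms": 800.0, "cost": 0.000006667},
  {"power": 1024, "duration_ms": 600.0, "cost": 0.00001}
]"""


def results_to_csv(raw_json: str) -> str:
    """Convert a JSON array of result records into CSV text."""
    rows = json.loads(raw_json)
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["power", "duration_ms", "cost"],
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(results_to_csv(raw))
```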

Installation and Setup

The effective utilization of the AWS Lambda Power Tuning tool necessitates a well-defined installation and setup procedure. This process ensures the tool functions correctly, allowing for the accurate profiling and optimization of Lambda functions. The following sections detail the steps required, the necessary prerequisites, and the dependencies involved in establishing a functional environment for the tool.

Prerequisites and Dependencies

Before initiating the installation, several prerequisites must be satisfied to ensure a smooth setup process. These prerequisites involve the availability of specific software components and account configurations.

- AWS Account and Credentials: Access to an active AWS account is fundamental. This account should have sufficient permissions to create and manage Lambda functions, IAM roles, and other related AWS resources. Securely configure your AWS credentials, typically through the AWS CLI, to allow the tool to interact with your account.

- AWS CLI Installation and Configuration: The AWS Command Line Interface (CLI) is essential for interacting with AWS services from the command line. Install the AWS CLI and configure it with your AWS account credentials. This allows the tool to deploy resources and manage your Lambda functions. Verify the installation by running `aws --version` in your terminal.

- Node.js and npm: Node.js and npm (Node Package Manager) are required for running the tool. Install the latest LTS (Long-Term Support) version of Node.js and npm. This provides the runtime environment and package management capabilities needed for the tool’s execution. Verify the installation by running `node -v` and `npm -v` in your terminal.

- Serverless Framework (Optional but Recommended): While not strictly mandatory, the Serverless Framework simplifies the deployment and management of serverless applications, including the Lambda Power Tuning tool. Installing the Serverless Framework streamlines the deployment process, allowing for easier configuration and management of resources.

Step-by-Step Installation Guide

The installation process involves several sequential steps, each crucial for the successful deployment and configuration of the AWS Lambda Power Tuning tool.

Step 1: Clone the Repository

Begin by cloning the AWS Lambda Power Tuning tool’s repository from GitHub. Use the following command in your terminal:

`git clone https://github.com/alexcasalboni/aws-lambda-power-tuning.git`

This command downloads the tool’s source code to your local machine.

Step 2: Navigate to the Project Directory

Change your current directory to the project directory using the `cd` command:

`cd aws-lambda-power-tuning`

This step ensures you are in the correct directory to execute subsequent commands.

Step 3: Install Dependencies

Install the project’s dependencies using npm. Run the following command:

`npm install`

This command installs all the required packages listed in the `package.json` file, ensuring the tool has everything it needs to run correctly.

Step 4: Deploy the Tool

Deploy the tool to your AWS account. This step typically involves using the Serverless Framework (if installed) or manually deploying the necessary resources. If using the Serverless Framework, you might use a command like:

`serverless deploy`

This command creates the required Lambda functions, IAM roles, and other resources in your AWS account, making the tool accessible for use. The exact deployment command might vary based on your setup and configuration.

Step 5: Configure the Tool (Optional)

Configure the tool to match your specific requirements. This might involve modifying environment variables, adjusting the memory settings for the tool’s Lambda functions, or setting up permissions. Refer to the tool’s documentation for detailed configuration instructions.

Step 6: Verify the Installation

After deployment, verify that the tool is functioning correctly. This can be done by starting a test tuning run against a sample Lambda function or by checking the tool’s logs for any errors. Ensure that the tool can access your AWS resources and complete tuning runs successfully.

Running the Tuning Process

Initiating the AWS Lambda Power Tuning process involves specifying the Lambda function to be analyzed and configuring the parameters that govern the tuning run. This process leverages the tool’s ability to test the function across various power settings, identifying the optimal configuration for cost and performance. The selection of parameters significantly influences the outcome, directly affecting the accuracy and efficiency of the tuning process.

Initiating the Tuning Run

To start the tuning process, the user must first identify the target Lambda function. This is typically done by providing the function’s name or ARN (Amazon Resource Name) to the AWS Lambda Power Tuning tool. The tool then orchestrates a series of invocations of the Lambda function, each time with a different power setting, ranging from the minimum to the maximum memory allocation.

The process generally involves these steps:

- Function Specification: The user provides the Lambda function’s name or ARN.

- Configuration Parameterization: The user configures parameters such as the power range, number of iterations, and payload.

- Execution: The tool invokes the Lambda function multiple times, varying the memory allocation.

- Data Collection: The tool collects metrics such as duration, cost, and memory usage for each invocation.

- Analysis and Recommendation: The tool analyzes the collected data to identify the optimal power setting.

Configurable Parameters

Several parameters can be configured during the tuning run to tailor the process to specific needs. These parameters control aspects such as the power range to test, the number of iterations for each power setting, and the payload used for function invocations. Configuring these parameters correctly is crucial for obtaining accurate and reliable results.

Here is a table detailing the key parameters and their descriptions:

| Parameter | Description | Default Value | Impact |
|---|---|---|---|
| Function Name/ARN | The name or ARN of the Lambda function to be tuned. This specifies the target function. | None (Required) | Determines which function is analyzed. Incorrect input will cause the process to fail. |
| Power Range | The range of memory allocations (in MB) to test for the Lambda function. This defines the power settings to be evaluated. | 128 MB to the maximum allowed | Influences the granularity of the tuning and the time required. A wider range increases the search space and can lead to more accurate results but takes longer. |
| Number of Iterations | The number of times the Lambda function is invoked for each power setting. This parameter improves the statistical significance of the results. | 10 | Affects the accuracy of the measurements. More iterations reduce the impact of random fluctuations, leading to more reliable results. |
| Payload | The input data passed to the Lambda function during each invocation. This affects the function’s execution time and resource consumption. | Empty JSON object (`{}`) | The nature of the payload influences the execution time and memory usage. The complexity of the payload directly affects the resource consumption. |
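The parameters in the table map naturally onto a JSON input document. The sketch below builds and validates one. The key names `lambdaARN`, `powerValues`, `num`, and `payload` follow the project's README as far as I know, but treat them as assumptions and verify them against your deployed version. `build_tuning_input` is a hypothetical helper, and the ARN is a placeholder.

```python
import json
from typing import Any, Dict, List, Optional

LAMBDA_MIN_MB = 128     # current Lambda minimum memory
LAMBDA_MAX_MB = 10240   # current Lambda maximum memory


def build_tuning_input(lambda_arn: str, power_values: List[int],
                       num: int = 10,
                       payload: Optional[Dict[str, Any]] = None) -> str:
    """Validate the tuning parameters and serialize them as JSON."""
    if not lambda_arn:
        raise ValueError("lambda_arn is required")
    if not power_values:
        raise ValueError("power_values must not be empty")
    out_of_range = [p for p in power_values
                    if not LAMBDA_MIN_MB <= p <= LAMBDA_MAX_MB]
    if out_of_range:
        raise ValueError(f"memory values out of range: {out_of_range}")
    if num < 1:
        raise ValueError("num must be at least 1")
    return json.dumps({
        "lambdaARN": lambda_arn,
        "powerValues": power_values,
        "num": num,
        "payload": payload or {},   # empty JSON object by default
    }, indent=2)


print(build_tuning_input(
    "arn:aws:lambda:us-east-1:123456789012:function:my-function",  # placeholder
    [128, 256, 512, 1024],
))
```

Validating the range locally catches typos before any invocations are paid for.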

Analyzing Tuning Results

Interpreting the results from the AWS Lambda Power Tuning tool is crucial for optimizing function performance and cost. The tool generates a comprehensive report that details the performance characteristics of a Lambda function across different memory configurations. This report allows developers to pinpoint the optimal memory allocation that balances performance and cost efficiency.

Interpreting the Tuning Report

The tuning report presents data in a structured format, typically a table or a graph, that allows for easy analysis. Understanding the metrics and their relationships is key to making informed decisions.

- Memory Configuration: This column lists the different memory allocations tested, usually ranging from the minimum (128MB) to the maximum (currently 10240MB) in increments. Each memory setting represents a different power setting.

- Duration: This metric represents the time taken for the Lambda function to execute, measured in milliseconds (ms). Shorter durations indicate faster execution times, which are generally desirable.

- Cost: The estimated cost of executing the Lambda function for a given memory configuration. This is a crucial metric, calculated based on the duration and the memory allocated, reflecting the monetary impact of each configuration.

- Invocations: The number of times the Lambda function was invoked during the testing phase for each memory configuration. This ensures statistical significance in the results.

- Cold Start Duration: The time taken for the Lambda function to start when it doesn’t have an active execution environment. Cold starts can significantly impact latency, and this metric helps identify configurations that minimize this impact.

- Concurrency: The number of concurrent executions of the Lambda function during the testing phase. This metric provides insights into the function’s ability to handle concurrent requests.

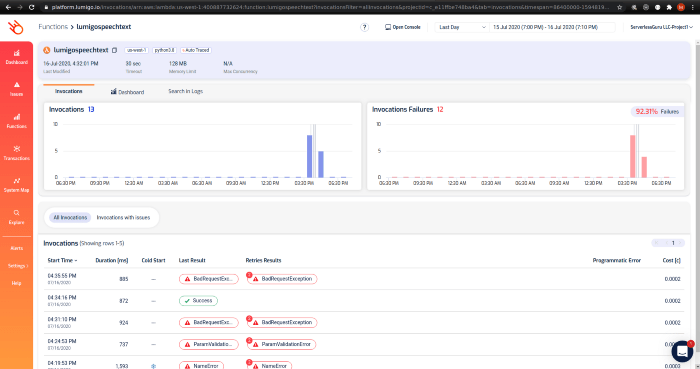

- Errors: The number of errors encountered during the execution of the function. Higher error rates suggest that the function may not be stable under certain memory configurations.

Key Metrics and Data Points

Several key metrics are critical in evaluating a function’s performance. These metrics, along with their interdependencies, allow for a comprehensive assessment of the function’s behavior.

- Execution Time vs. Memory: The relationship between execution time (duration) and memory allocation is fundamental. Generally, increasing memory can lead to decreased execution time, up to a certain point. Beyond that point, increasing memory may not significantly improve performance and may even increase cost. The point at which the execution time plateaus or increases is crucial for identifying the optimal memory setting.

- Cost vs. Memory: This metric directly relates to the financial implications of the function’s configuration. The tool calculates the estimated cost based on duration and memory. The goal is to find the lowest cost configuration that meets performance requirements. It’s important to consider that faster execution times can sometimes justify a slightly higher cost if it significantly improves the user experience or reduces the overall processing time for a larger workload.

- Cost per Execution: This is derived by dividing the total cost by the number of invocations. This metric provides a more granular view of the cost efficiency of each memory configuration.

- Throughput: This refers to the rate at which the function processes requests. A higher throughput is desirable as it indicates that the function can handle more requests in a given period. Throughput is often indirectly observed by analyzing execution time and concurrency.

- Error Rate: A low error rate is essential for a reliable function. A high error rate indicates that the function may be unstable under certain memory configurations, which can lead to data loss or service disruption.

Identifying the Optimal Memory Allocation

The tuning results guide the identification of the optimal memory allocation, balancing performance and cost. The process involves analyzing the trade-offs between various metrics.

- The “Speed/Cost” Curve: The tool typically presents a “speed/cost” curve that visually represents the relationship between execution time and cost across different memory settings. The optimal memory setting is often found at the “elbow” of this curve, where performance gains diminish relative to the increase in cost.

- Cost Optimization: Identify the memory configuration that provides the lowest cost without significantly impacting performance. If execution time is within acceptable limits, prioritize cost savings.

- Performance Requirements: If low latency is critical, prioritize the memory configuration that provides the fastest execution time, even if it results in a slightly higher cost. Consider the impact of cold starts on overall performance.

- Practical Example: Suppose a Lambda function processes image thumbnails. The tuning results show that increasing memory from 512MB to 1024MB reduces execution time by 20%. Because cost scales with memory multiplied by duration, doubling the memory while cutting duration by 20% raises the cost per invocation by about 60% (2 × 0.8 = 1.6). If the function is invoked frequently and response time matters, the 20% performance improvement might justify the higher cost. However, if the function is invoked infrequently or is not latency-sensitive, the cost savings of the 512MB configuration may be preferable, even with the slower execution time.

- Real-World Scenarios: Consider real-world scenarios where performance is critical. For example, in a financial application where real-time data processing is essential, the fastest execution time may be preferred, even at a higher cost. In contrast, for batch processing tasks, cost optimization might be the primary concern.
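The cost-first and performance-first choices described above can be expressed as two small selection rules. This is a sketch over hypothetical results: the record fields and numbers are illustrative (costs assume $0.0000166667 per GB-second), and `cheapest_within_budget` and `fastest` are names invented here, not functions of the tool.

```python
from typing import Dict, List, Optional

# Hypothetical tuning results: memory (MB), duration (ms), cost per invocation ($).
results = [
    {"power": 256, "duration": 1000, "cost": 0.0000041667},
    {"power": 512, "duration": 520, "cost": 0.0000043333},
    {"power": 1024, "duration": 500, "cost": 0.0000083333},
]


def cheapest_within_budget(rows: List[Dict[str, float]],
                           max_duration_ms: float) -> Optional[Dict[str, float]]:
    """Lowest-cost setting whose duration stays within the latency budget."""
    eligible = [r for r in rows if r["duration"] <= max_duration_ms]
    if not eligible:
        return None  # no setting is fast enough
    return min(eligible, key=lambda r: r["cost"])


def fastest(rows: List[Dict[str, float]]) -> Dict[str, float]:
    """Setting with the shortest duration, regardless of cost."""
    return min(rows, key=lambda r: r["duration"])


print(cheapest_within_budget(results, max_duration_ms=600))
print(fastest(results))
```

With a 600 ms budget the rule picks 512MB: it is only slightly more expensive than 256MB and nearly as fast as 1024MB, which is the "elbow" described above.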

Cost and Performance Trade-offs

Optimizing AWS Lambda function performance necessitates careful consideration of the inherent trade-offs between cost and execution time. The Lambda Power Tuning tool directly aids in navigating these trade-offs by providing data-driven insights into how different memory configurations impact both resource consumption and function latency. This allows developers to make informed decisions that balance performance requirements with budgetary constraints.

Comparing Cost and Performance Implications of Memory Configurations

The memory allocated to a Lambda function directly influences its computational power, which, in turn, affects execution time and cost. Higher memory allocation generally shortens execution time but raises the price per unit of duration. Lower memory allocation lowers that unit price but may lengthen execution enough that the total cost per invocation goes up.

- Memory Allocation and Execution Time: The relationship between memory and execution time is not always linear. For some functions, increasing memory beyond a certain point may yield diminishing returns in terms of speed improvements. This is because the function might be limited by other factors, such as network latency or database access times, rather than CPU power.

- Cost Calculation: The cost of a Lambda function is determined by two primary factors: the amount of time the function runs (duration) and the amount of memory allocated. AWS charges based on the GB-seconds consumed. For example, a function running for 1 second with 128 MB of memory consumes 0.125 GB-seconds (128 MB ÷ 1024). The price per GB-second varies depending on the AWS region.

- Cold Starts: The memory allocation also impacts cold start times, which are the time it takes for a Lambda function to initialize when it hasn’t been invoked recently. Functions with higher memory allocations often have faster cold start times because more resources are available to initialize the execution environment.

Balancing Cost and Performance for Optimal Results

The optimal memory configuration for a Lambda function depends on the specific workload and performance requirements. The Lambda Power Tuning tool helps identify the memory configuration that provides the best balance between cost and performance. This typically involves finding the point where increasing memory no longer significantly reduces execution time, while also considering the acceptable cost threshold.

To illustrate the relationship between memory allocation, execution time, and cost, consider the following table. The durations are illustrative, and the costs are computed as memory (GB) × duration (s) at an assumed price of $0.0000166667 per GB-second, excluding the per-request charge:

| Memory (MB) | Execution Time (ms) | Cost per Invocation ($) | Notes |
|---|---|---|---|
| 128 | 1500 | 0.0000031 | Lowest cost, longest execution time. |
| 512 | 800 | 0.0000067 | Improved performance, moderate cost. |
| 1024 | 600 | 0.0000100 | Significant performance improvement, higher cost. |
| 2048 | 550 | 0.0000183 | Marginal performance improvement, highest cost. |

In this example, increasing the memory from 128 MB to 512 MB significantly improves execution time. However, increasing the memory further to 2048 MB yields only a marginal improvement in execution time while substantially increasing the cost. The optimal memory configuration would likely be either 512 MB or 1024 MB, depending on the performance requirements and budget constraints. The Lambda Power Tuning tool allows developers to evaluate different memory configurations and identify the most cost-effective option for their specific function.
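The diminishing returns in this example can be quantified by recomputing the per-invocation cost from the GB-second formula for the memory and duration pairs in the table. This is a minimal sketch: the price is an assumed rate, so absolute costs depend on it, while the relative changes between settings do not.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # assumed rate; varies by region

# (memory in MB, execution time in ms) pairs from the illustrative table.
measurements = [(128, 1500), (512, 800), (1024, 600), (2048, 550)]


def cost_per_invocation(memory_mb: int, duration_ms: float) -> float:
    """GB-seconds consumed multiplied by the assumed price."""
    return (memory_mb / 1024) * (duration_ms / 1000) * PRICE_PER_GB_SECOND


rows = [(m, d, cost_per_invocation(m, d)) for m, d in measurements]

# Compare each setting with the next larger one.
steps = []
for (m0, d0, c0), (m1, d1, c1) in zip(rows, rows[1:]):
    speedup_pct = (d0 - d1) / d0 * 100
    cost_increase_pct = (c1 - c0) / c0 * 100
    steps.append((m0, m1, speedup_pct, cost_increase_pct))
    print(f"{m0} -> {m1} MB: {speedup_pct:.1f}% faster, "
          f"{cost_increase_pct:.1f}% more expensive")
```

The last step, from 1024 MB to 2048 MB, buys the smallest speedup of the three, which is why the extra memory is hard to justify there.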

Practical Use Cases

The AWS Lambda Power Tuning tool finds its utility across a diverse range of serverless applications, helping developers optimize function performance and cost. By systematically exploring different power configurations, the tool identifies the optimal balance between execution time and resource consumption. This targeted optimization is particularly valuable in real-world scenarios where performance bottlenecks can significantly impact application responsiveness and operational expenses.

Image Processing and Transformation

Image processing tasks, common in web applications and content delivery networks, often involve computationally intensive operations like resizing, format conversion, and applying filters. These functions are prime candidates for power tuning, as the processing time directly affects user experience and the cost of cloud resources.

To optimize image processing functions:

- Scenario: A web application that automatically resizes user-uploaded images.

- Tool Application: The Lambda Power Tuning tool is used to test different memory configurations for the resizing function.

- Example: The tool identifies that the function executes the resizing operation 30% faster with 512 MB of memory than with the default 128 MB configuration, while incurring only a slightly higher cost. Users see their resized images sooner.

- Outcome: Reduced latency for image delivery and potential cost savings compared to less optimized configurations.

Data Processing and ETL Pipelines

Extract, Transform, Load (ETL) pipelines are crucial for moving and processing large datasets. Lambda functions can be used to perform various steps in these pipelines, such as data cleansing, aggregation, and loading data into data warehouses. Optimizing these functions is essential for timely data delivery and efficient resource utilization.

To optimize ETL pipelines:

- Scenario: A daily data processing pipeline that aggregates sales data from multiple sources.

- Tool Application: The Lambda Power Tuning tool is used to evaluate the performance of the aggregation function.

- Example: The tool reveals that increasing the memory allocation from 256 MB to 1 GB significantly reduces the execution time of the aggregation function, leading to faster data processing and reporting.

- Outcome: Improved data processing speed, allowing for quicker insights and reduced operational delays.

API Backends and Microservices

API backends and microservices often handle a high volume of requests, making performance optimization critical. Lambda functions can be used to serve API endpoints, handle user requests, and interact with other services. Power tuning helps ensure the responsiveness and scalability of these functions.

To optimize API backends:

- Scenario: An e-commerce platform’s product search API.

- Tool Application: The Lambda Power Tuning tool is used to optimize the search function, which retrieves product information from a database.

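- Example: The tool discovers that increasing the memory allocation shortens the time the function spends on each database query, leading to a faster response time for product searches. Lambda allocates CPU in proportion to the configured memory, so CPU cannot be set separately; raising memory is what raises CPU.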

- Outcome: Enhanced API performance, leading to a better user experience and the ability to handle a higher volume of requests.

Real-time Data Stream Processing

Lambda functions can be used to process real-time data streams from sources like IoT devices, social media feeds, or financial markets. These functions often involve complex computations and require efficient resource allocation to handle the continuous flow of data.

To optimize real-time data stream processing:

- Scenario: An IoT application that processes sensor data from a fleet of vehicles.

- Tool Application: The Lambda Power Tuning tool is applied to the function that analyzes sensor data and triggers alerts.

- Example: The tool identifies that increasing the memory allocation allows the function to process more data per second, reducing the latency in triggering alerts and improving the responsiveness of the system.

- Outcome: Faster response times for critical events, enabling more timely actions and improved operational efficiency.

Machine Learning Inference

Lambda functions can be used to perform machine learning inference, making predictions based on trained models. Optimizing these functions is critical for delivering accurate and timely predictions.

To optimize machine learning inference:

- Scenario: An application that performs image recognition using a pre-trained model.

- Tool Application: The Lambda Power Tuning tool is used to optimize the function that performs image classification.

- Example: The tool determines that a specific memory configuration improves the inference time, leading to faster image classification.

- Outcome: Improved inference speed, allowing for quicker results and a better user experience.

Limitations and Considerations

The AWS Lambda Power Tuning tool is valuable for optimizing Lambda function performance, but it has constraints. Understanding these limitations, and the factors to consider when running the tool in production environments, is crucial for using it effectively and avoiding problems. This section covers both, along with strategies for addressing them and tailoring the tool to specific workloads.

Tool Limitations

The AWS Lambda Power Tuning tool operates within certain boundaries, which can impact its applicability and the accuracy of its recommendations. Recognizing these limitations is essential for setting realistic expectations and interpreting the results effectively.

- Sampling-Based Analysis: The tool relies on sampling the function’s execution with different power configurations. The accuracy of the results depends on the representativeness of the sample. If the sample does not adequately reflect the function’s typical workload, the tuning recommendations may be skewed. This is particularly relevant for functions with highly variable or unpredictable input patterns.

- Cold Start Influence: The tool’s measurements can be affected by cold starts, especially in lower power configurations. Cold starts introduce latency that is not directly related to the function’s processing time, potentially leading to inaccurate power setting recommendations.

- Limited Metric Scope: The tool primarily focuses on execution time and cost. It does not directly consider other factors such as memory usage patterns or network I/O, which can influence overall performance and cost-effectiveness.

- Dependencies on Function Code: The tuning results reflect the function’s code as deployed at the time of the run. Complex or inefficient code can dominate the measurements, so the recommended power setting may compensate for a code problem rather than reflect the workload’s real needs.

- Environment Variability: The tool’s recommendations are based on the environment in which it is run. Changes in the underlying infrastructure (e.g., updates to the Lambda runtime environment) can render previous tuning results obsolete.
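One way to reduce the influence of cold starts and other outliers on a sample is to discard the slowest and fastest measurements before averaging. The following is a minimal sketch of that idea, not the tool’s actual implementation; `trimmed_mean`, the 20% trim fraction, and the sample durations are assumptions chosen for illustration.

```python
def trimmed_mean(durations_ms, trim_fraction=0.2):
    """Average durations after dropping the top and bottom trim_fraction."""
    if not durations_ms:
        raise ValueError("need at least one sample")
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    ordered = sorted(durations_ms)
    k = int(len(ordered) * trim_fraction)  # samples dropped from each end
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


if __name__ == "__main__":
    # Hypothetical sample: nine warm invocations and one cold start (1400 ms).
    samples = [210, 205, 198, 1400, 202, 207, 199, 204, 201, 206]
    print(f"plain mean:   {sum(samples) / len(samples):.1f} ms")
    print(f"trimmed mean: {trimmed_mean(samples):.1f} ms")
```

In this hypothetical sample, a single cold start pulls the plain mean far above the typical warm duration, while the trimmed mean stays close to it. Trimming only helps when outliers are rare; it does not make an unrepresentative sample representative.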

Production Environment Considerations

Deploying the AWS Lambda Power Tuning tool in a production environment requires careful planning and execution. Several factors must be considered to ensure the tool’s effective and safe integration.

- Impact on Existing Traffic: Running the tool in a production environment can potentially impact existing traffic, especially if the tool’s experiments involve significant variations in power settings. Implementing measures to minimize this impact is crucial.

- Throttling and Rate Limiting: Lambda functions and the AWS services they interact with are subject to throttling and rate limits. The tuning process itself, involving multiple function invocations, may trigger these limits if not carefully managed.

- Cost Implications: The tuning process generates costs associated with function invocations and other AWS services. These costs must be carefully monitored and managed to avoid unexpected expenses.

- Monitoring and Alerting: Implementing robust monitoring and alerting mechanisms is essential to track the function’s performance and identify any issues arising from the tuning process or the recommended power settings.

- Security Considerations: The tuning process involves invoking Lambda functions. Ensure that the functions and the tool itself adhere to security best practices, including proper authentication and authorization.
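Before running the tool against a production function, it can help to estimate how many invocations a tuning run will generate and roughly what they will cost. The sketch below assumes each memory setting is invoked the same number of times, uses an assumed price per GB-second, and relies on a rough guess of the duration at each setting. `estimate_tuning_run` is a hypothetical helper, not part of the tool.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # assumed; check current AWS pricing


def estimate_tuning_run(expected_ms_by_memory, invocations_per_setting):
    """Return (total invocations, estimated compute cost in USD).

    expected_ms_by_memory maps a memory size in MB to a guessed
    average duration in milliseconds at that size.
    """
    total_invocations = len(expected_ms_by_memory) * invocations_per_setting
    gb_seconds = sum(
        (memory_mb / 1024) * (ms / 1000) * invocations_per_setting
        for memory_mb, ms in expected_ms_by_memory.items()
    )
    return total_invocations, gb_seconds * PRICE_PER_GB_SECOND


if __name__ == "__main__":
    guesses = {128: 1500, 512: 800, 1024: 600, 2048: 550}
    count, cost = estimate_tuning_run(guesses, invocations_per_setting=50)
    print(f"{count} invocations, about ${cost:.4f} in Lambda compute")
```

The invocation count is also a useful input when checking concurrency and downstream rate limits. The estimate covers Lambda compute only; orchestration charges and the cost of any services the function calls are not included.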

Addressing Challenges and Optimizing for Specific Workloads

To overcome the limitations of the AWS Lambda Power Tuning tool and optimize its use for specific workloads, several strategies can be employed.

- Workload Characterization: Before running the tool, thoroughly analyze the function’s workload. Identify the typical input patterns, data sizes, and processing requirements. This information can inform the tuning process and help interpret the results.

- Representative Test Data: Use representative test data that accurately reflects the function’s typical inputs during the tuning process. This ensures that the tuning recommendations are relevant to the actual workload.

- Cold Start Mitigation: Consider using provisioned concurrency to mitigate the impact of cold starts, especially when using lower power configurations. This helps to isolate the effects of power settings on processing time.

- Iterative Tuning: The tuning process is often iterative. Start with a baseline configuration and then progressively refine the power settings based on the results. This allows for incremental improvements and reduces the risk of significant disruptions.

- Integration with CI/CD: Integrate the tool into the continuous integration/continuous deployment (CI/CD) pipeline. This allows for automated tuning and validation of Lambda functions as part of the software development lifecycle.

- Monitoring and Validation: After applying the recommended power settings, continuously monitor the function’s performance in the production environment. Validate the tuning results by comparing the actual performance metrics (e.g., execution time, cost) with the predictions made by the tool.

- Code Optimization: Address any code inefficiencies or bottlenecks before tuning the function’s power settings. This can significantly improve the overall performance and cost-effectiveness of the function.
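Several of these strategies, notably representative test data and CI/CD integration, come down to starting a tuning run programmatically with a realistic payload. The tool is deployed as an AWS Step Functions state machine, so a run can be started through the SDK. The sketch below is a hedged example: the field names (`lambdaARN`, `powerValues`, `num`, `payload`, `parallelInvocation`, `strategy`) follow the tool’s published input format as of this writing and should be verified against the project README. The helper names, the default values, and the ARNs are hypothetical.

```python
import json

VALID_STRATEGIES = {"cost", "speed", "balanced"}


def build_tuning_input(lambda_arn, payload, power_values=(128, 512, 1024, 2048),
                       num=50, parallel=False, strategy="cost"):
    """Build the execution input for a tuning run (field names assumed)."""
    if strategy not in VALID_STRATEGIES:
        raise ValueError(f"strategy must be one of {sorted(VALID_STRATEGIES)}")
    return {
        "lambdaARN": lambda_arn,
        "powerValues": list(power_values),
        "num": num,                      # invocations per memory setting
        "payload": payload,              # should mirror real production input
        "parallelInvocation": parallel,  # False is gentler on rate limits
        "strategy": strategy,
    }


def start_tuning(state_machine_arn, tuning_input):
    """Start the state machine; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder works without the SDK

    client = boto3.client("stepfunctions")
    response = client.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(tuning_input),
    )
    return response["executionArn"]


if __name__ == "__main__":
    # Hypothetical function ARN and payload, for illustration only.
    cfg = build_tuning_input(
        "arn:aws:lambda:us-east-1:123456789012:function:resize-image",
        payload={"bucket": "example-bucket", "key": "sample.jpg"},
    )
    print(json.dumps(cfg, indent=2))
```

Keeping the input builder separate from the SDK call makes it easy to review or version the tuning configuration in a pipeline before anything is invoked.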

Concluding Remarks

In conclusion, the AWS Lambda Power Tuning tool serves as an indispensable asset for serverless developers seeking to refine their AWS Lambda functions. Through its ability to automate the power configuration process and provide data-driven insights, the tool enables informed decision-making regarding resource allocation, cost management, and performance enhancement. By embracing the capabilities of this tool, developers can unlock the full potential of serverless computing, leading to more efficient, cost-effective, and high-performing applications.

Q&A

What is the primary goal of the AWS Lambda Power Tuning tool?

The primary goal is to identify the optimal memory configuration for a Lambda function, balancing execution time and cost to achieve the best possible performance-to-cost ratio.

How does the tool determine the optimal memory allocation?

The tool works by running the Lambda function with different memory settings and measuring its performance (execution time) and cost. It then analyzes the results to identify the memory setting that provides the best trade-off between these two factors.

Does the tool support all programming languages used in Lambda functions?

Yes, the AWS Lambda Power Tuning tool is designed to work with all programming languages supported by AWS Lambda, including Python, Node.js, Java, Go, and others.

Is the tool free to use?

The tool itself is open-source and free to use. However, you pay for the Lambda invocations made during the tuning process, which are billed by configured memory and execution duration, and for the Step Functions state machine the tool uses to orchestrate them.