Understanding the role of a DevOps Engineer is like peering into the engine room of modern software development. This multifaceted role is crucial in bridging the gap between development and operations, streamlining processes, and ensuring that software is not only created efficiently but also deployed and maintained seamlessly. It’s a dynamic field, constantly evolving with the latest technologies and methodologies.

A DevOps Engineer wears many hats, from automating infrastructure to implementing CI/CD pipelines, and from monitoring application performance to ensuring robust security. Their core mission is to foster collaboration, enhance efficiency, and accelerate the delivery of high-quality software. This encompasses a broad spectrum of responsibilities, demanding a unique blend of technical skills, problem-solving abilities, and a collaborative mindset.

Overview of a DevOps Engineer’s Role

The DevOps Engineer role is pivotal in modern software development, bridging the gap between development and operations teams. This role facilitates faster, more reliable software releases by automating and streamlining the software delivery lifecycle. DevOps Engineers are crucial in fostering a culture of collaboration, automation, and continuous improvement.

Core Responsibilities of a DevOps Engineer

DevOps Engineers shoulder a variety of responsibilities, all aimed at improving the software development and deployment process. These responsibilities encompass a broad range of activities, from infrastructure management to monitoring and security.

- Infrastructure as Code (IaC): Managing and automating infrastructure provisioning using tools like Terraform, Ansible, or CloudFormation. This involves writing code to define and manage servers, networks, and other infrastructure components. For example, a DevOps Engineer might write a Terraform configuration to provision a new Kubernetes cluster.

- Continuous Integration and Continuous Deployment (CI/CD): Designing, implementing, and maintaining CI/CD pipelines. This involves automating the build, test, and deployment processes. Popular tools used include Jenkins, GitLab CI, and CircleCI. A CI/CD pipeline might automatically build and test code changes, and then deploy them to a staging environment.

- Monitoring and Logging: Implementing and managing monitoring and logging systems to track application performance and identify issues. Tools like Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana) are frequently used. This allows DevOps Engineers to proactively identify and resolve issues before they impact users.

- Automation: Automating repetitive tasks to reduce manual effort and improve efficiency. This can involve scripting, using configuration management tools, or developing custom tools. For instance, a DevOps Engineer might automate scaling a web application up or down based on traffic load.

- Security: Implementing security best practices throughout the software development lifecycle. This includes tasks like vulnerability scanning, access control management, and compliance monitoring. Tools like SonarQube and various cloud provider security services are often utilized.

- Collaboration and Communication: Working closely with development, operations, and other teams to facilitate communication and collaboration. This involves sharing knowledge, providing support, and promoting a DevOps culture.
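The traffic-based scaling mentioned above is often driven by a proportional rule like the one documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The sketch below is a minimal illustration under that assumption; the function name, the min/max bounds, and the 10% tolerance are hypothetical parameters, not a real autoscaler's API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Hypothetical helper modeled on the HPA proportional scaling rule."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close to the target,
    # so small fluctuations do not cause replica churn.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target -> scale out to 6
print(desired_replicas(4, current_metric=90.0, target_metric=60.0))  # 6
```

Clamping to a maximum keeps a sudden traffic burst (or a misreported metric) from provisioning unbounded capacity.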

Day-to-Day Tasks of a DevOps Engineer

The daily activities of a DevOps Engineer are varied and dynamic, focusing on maintaining and improving the software delivery pipeline. These tasks can range from hands-on coding to strategic planning and problem-solving.

- Managing CI/CD pipelines: Monitoring pipeline performance, troubleshooting build failures, and optimizing pipelines for speed and efficiency. This may involve modifying pipeline configurations or integrating new tools.

- Provisioning and managing infrastructure: Creating and maintaining infrastructure environments, which includes tasks like setting up servers, configuring networks, and managing cloud resources.

- Monitoring system performance: Analyzing system metrics, identifying performance bottlenecks, and implementing solutions to improve application performance.

- Responding to incidents: Investigating and resolving production issues, including debugging code, analyzing logs, and implementing fixes.

- Automating tasks: Developing scripts and using automation tools to streamline repetitive tasks, such as deploying applications or scaling infrastructure.

- Collaborating with other teams: Working with developers, operations staff, and other stakeholders to improve the software delivery process.

Key Skills and Knowledge Areas for DevOps Engineers

Success in the DevOps Engineer role requires a diverse skillset encompassing technical proficiency, problem-solving abilities, and soft skills. Mastering these areas is essential for effective performance.

- Programming and Scripting: Proficiency in scripting languages like Python, Bash, or PowerShell is crucial for automating tasks and managing infrastructure.

- Cloud Computing: A strong understanding of cloud platforms like AWS, Azure, or Google Cloud is essential. This includes knowledge of services like compute, storage, networking, and databases. For example, a DevOps Engineer working on AWS should know how to configure and manage EC2 instances.

- Containerization: Experience with containerization technologies like Docker and container orchestration platforms like Kubernetes is vital for modern application deployments.

- Configuration Management: Familiarity with configuration management tools like Ansible, Chef, or Puppet is necessary for automating infrastructure configuration.

- CI/CD Tools: Expertise in CI/CD tools like Jenkins, GitLab CI, CircleCI, or Azure DevOps is required for building and deploying software automatically.

- Monitoring and Logging Tools: Knowledge of monitoring and logging tools like Prometheus, Grafana, ELK stack, or Datadog is important for tracking application performance and identifying issues.

- Operating Systems: A solid understanding of operating systems, especially Linux, is beneficial for managing servers and troubleshooting issues.

- Networking: Basic networking knowledge, including understanding of TCP/IP, DNS, and firewalls, is crucial for managing network configurations.

- Security: Knowledge of security best practices, including vulnerability scanning, access control, and compliance monitoring, is needed to keep systems and data protected throughout the delivery lifecycle.

- Communication and Collaboration: Strong communication and collaboration skills are necessary for working effectively with other teams and stakeholders.

Collaboration and Communication

DevOps Engineers are pivotal in bridging the gap between development and operations teams, fostering a collaborative environment that accelerates software delivery and improves product quality. They act as conduits, ensuring that both teams understand each other’s needs, priorities, and constraints. This collaborative approach is essential for streamlining the software development lifecycle and achieving business objectives.

Facilitating Communication Between Development and Operations Teams

DevOps Engineers actively work to break down the silos that often exist between development and operations. They create channels for open communication, ensuring that both teams are aligned throughout the software development lifecycle. This involves establishing shared goals, promoting transparency, and encouraging a culture of shared responsibility.

- Bridging the Gap: DevOps engineers facilitate communication by acting as translators between development and operations teams. They understand the technical language and concerns of both sides, enabling them to effectively communicate requirements, challenges, and solutions. This helps to avoid misunderstandings and ensures that everyone is on the same page.

- Establishing Shared Goals: A key aspect of effective communication is the establishment of shared goals. DevOps Engineers work with both teams to define common objectives, such as faster release cycles, improved system stability, and reduced downtime. When teams are working towards the same goals, they are more likely to collaborate effectively.

- Promoting Transparency: Transparency is essential for building trust and fostering collaboration. DevOps Engineers encourage open communication by making information readily available to both teams. This includes sharing project plans, release schedules, system metrics, and incident reports. This transparency allows everyone to understand the status of the project and identify potential problems early on.

- Encouraging Shared Responsibility: DevOps promotes a culture of shared responsibility. DevOps Engineers encourage both development and operations teams to take ownership of the entire software development lifecycle, from coding to deployment and maintenance. This shared responsibility fosters a sense of accountability and encourages teams to work together to solve problems.

Tools and Strategies for Effective Collaboration

DevOps Engineers utilize a variety of tools and strategies to promote effective collaboration. These tools and strategies are designed to facilitate communication, streamline workflows, and improve overall efficiency.

- Version Control Systems: Version control systems like Git are fundamental for collaborative development. They allow developers to track changes to code, collaborate on projects, and revert to previous versions if necessary. Combined with practices such as small, frequent merges and code review, this promotes code sharing, keeps merge conflicts manageable, and improves overall code quality.

- Continuous Integration/Continuous Delivery (CI/CD) Pipelines: CI/CD pipelines automate the software release process, enabling faster and more reliable deployments. They integrate development and operations by automating testing, building, and deployment processes. This reduces the risk of human error and allows teams to release software more frequently.

- Communication Platforms: Platforms like Slack or Microsoft Teams provide real-time communication channels for development and operations teams. These platforms facilitate quick communication, instant messaging, and file sharing, enabling teams to collaborate more effectively.

- Incident Management Systems: Tools such as PagerDuty or ServiceNow are used for incident management, which provides a structured process for handling and resolving system outages or performance issues. They allow for efficient communication, tracking, and resolution of incidents, improving system reliability and minimizing downtime.

- Monitoring and Alerting Tools: Tools like Prometheus, Grafana, and Datadog provide real-time monitoring of system performance and generate alerts when issues arise. This allows operations teams to quickly identify and address problems, minimizing their impact on users. Development teams can use these tools to understand how their code impacts system performance.

- Collaboration Platforms: Platforms like Jira or Azure DevOps Services serve as centralized hubs for project management, task tracking, and collaboration. They provide a single source of truth for project information, allowing both development and operations teams to stay organized and aligned.

- Infrastructure as Code (IaC): IaC tools like Terraform or Ansible enable the management of infrastructure through code. This promotes collaboration by allowing both development and operations teams to define, provision, and manage infrastructure in a consistent and repeatable manner.

Scenario: Resolving a Conflict Between Development and Operations

Consider a scenario where a new feature developed by the development team is causing performance issues in the production environment. The operations team is concerned about the impact on system stability and user experience, while the development team is eager to release the feature. A DevOps Engineer steps in to mediate the conflict.

- Understanding the Problem: The DevOps Engineer first gathers information from both teams. They meet with the development team to understand the feature’s functionality and the reasons behind its implementation. Simultaneously, they work with the operations team to identify the specific performance bottlenecks and their impact.

- Data-Driven Analysis: The DevOps Engineer uses monitoring tools to gather data on the feature’s performance. They analyze metrics such as CPU usage, memory consumption, and response times to identify the root cause of the performance issues.

- Facilitating a Discussion: The DevOps Engineer organizes a meeting with both teams to discuss the findings. They present the data and facilitate a constructive dialogue, ensuring that all perspectives are heard and understood.

- Finding a Solution: Based on the analysis and discussion, the DevOps Engineer helps the teams identify potential solutions. These might include optimizing the code, scaling the infrastructure, or implementing a phased rollout of the feature.

- Implementing and Monitoring the Solution: The DevOps Engineer helps implement the chosen solution, working closely with both teams. They then monitor the system’s performance to ensure that the issue is resolved and that the solution does not introduce new problems.

In this scenario, the DevOps Engineer acts as a neutral facilitator, using data and communication to resolve the conflict and ensure a successful outcome. The DevOps Engineer’s ability to bridge the communication gap between development and operations teams, combined with their technical expertise, enables them to resolve conflicts efficiently and effectively, leading to faster releases, improved system stability, and better collaboration.
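The phased rollout mentioned in the scenario is commonly implemented as a percentage gate: each user is hashed into a stable bucket from 0 to 99, and the feature is enabled only for buckets below the current rollout percentage. This is a minimal sketch under that assumption; the feature name and user IDs are hypothetical, and real feature-flag services expose their own APIs.

```python
import hashlib


def rollout_bucket(feature: str, user_id: str) -> int:
    """Map a (feature, user) pair to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(feature: str, user_id: str, rollout_percent: int) -> bool:
    """Enable the feature for roughly rollout_percent% of users."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return rollout_bucket(feature, user_id) < rollout_percent


# Start at 10%, watch the monitoring dashboards, then widen the rollout.
users = [f"user-{i}" for i in range(1000)]
enabled = sum(is_enabled("new-checkout", u, 10) for u in users)
print(f"{enabled} of {len(users)} users see the feature")
```

Because the bucket depends only on the feature and user, a user who sees the feature at 10% keeps seeing it at 25% or 50%, so widening the rollout never flips anyone back and forth while the teams compare metrics.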

Automation and Infrastructure as Code (IaC)

The DevOps Engineer’s role is heavily reliant on automation and the practice of Infrastructure as Code (IaC). These principles are fundamental to achieving the agility, speed, and reliability that define successful DevOps implementations. Automation streamlines repetitive tasks, reduces human error, and allows for faster deployment cycles. IaC complements automation by treating infrastructure the same way as application code, enabling version control, collaboration, and automated provisioning.

This section will delve into the specifics of automation and IaC, demonstrating their significance in a DevOps environment.

Automation in the DevOps Workflow

Automation is the cornerstone of efficient DevOps practices. It empowers DevOps engineers to manage complex systems with greater ease and consistency. By automating tasks, engineers can significantly reduce manual effort, minimize errors, and accelerate the software development lifecycle. This leads to faster release cycles, improved stability, and increased productivity. The benefits of automation are numerous and impact various aspects of the DevOps workflow:

- Faster Deployments: Automated deployment pipelines allow for rapid and consistent application releases. This reduces the time required to deploy changes and updates to production environments.

- Reduced Errors: Automation minimizes the potential for human error during repetitive tasks, such as configuration and deployment.

- Improved Consistency: Automated processes ensure that infrastructure and application configurations are consistent across different environments (development, testing, production).

- Increased Efficiency: Automation frees up DevOps engineers to focus on more strategic tasks, such as improving system performance and designing new features.

- Enhanced Scalability: Automated infrastructure provisioning allows for scaling resources up or down quickly and efficiently, based on demand.
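Much of the consistency benefit comes from writing automation that is idempotent: running it once or ten times leaves the system in the same state, and it reports whether anything actually changed. The sketch below applies this idea to a simple `key=value` configuration file; the file name and setting are hypothetical, and tools such as Ansible provide this behavior through their own modules.

```python
import tempfile
from pathlib import Path


def ensure_setting(path: Path, key: str, value: str) -> bool:
    """Ensure `key=value` is present in a simple key=value config file.

    Returns True if the file was changed, False if it was already correct.
    """
    lines = path.read_text().splitlines() if path.exists() else []
    desired = f"{key}={value}"
    new_lines = []
    found = False
    for line in lines:
        if line.split("=", 1)[0].strip() == key:
            found = True
            new_lines.append(desired)  # overwrite an existing, possibly stale value
        else:
            new_lines.append(line)
    if not found:
        new_lines.append(desired)
    if new_lines == lines:
        return False  # already in the desired state: do nothing
    path.write_text("\n".join(new_lines) + "\n")
    return True


with tempfile.TemporaryDirectory() as tmp:
    cfg = Path(tmp) / "app.conf"
    print(ensure_setting(cfg, "max_connections", "200"))  # True (file created)
    print(ensure_setting(cfg, "max_connections", "200"))  # False (no change)
```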

Common IaC Tools and Their Application

Infrastructure as Code (IaC) represents a paradigm shift in infrastructure management. It allows engineers to define and manage infrastructure resources (servers, networks, databases, etc.) using code. This approach offers significant advantages, including version control, repeatability, and automated provisioning. Several powerful tools facilitate IaC implementation, each with its strengths and specific use cases. Here’s an overview of some popular IaC tools and their applications:

- Terraform: Terraform is a widely used IaC tool developed by HashiCorp. It supports a declarative approach, allowing engineers to define the desired state of the infrastructure. Terraform uses providers to interact with various cloud platforms (AWS, Azure, Google Cloud) and other services. It is known for its ability to manage complex infrastructure environments. For example, a DevOps Engineer might use Terraform to define and provision a multi-tier application stack across AWS, including virtual machines, databases, and load balancers.

- Ansible: Ansible is an open-source automation engine that can configure systems, deploy software, and orchestrate tasks. It uses a simple, human-readable language (YAML) to describe configurations. Ansible is particularly well-suited for configuration management and orchestration. A DevOps Engineer could use Ansible to automate the installation and configuration of software on existing servers, ensuring consistency across the environment.

- AWS CloudFormation: AWS CloudFormation is a service provided by Amazon Web Services (AWS) that allows users to define and manage AWS resources as code. It uses JSON or YAML templates to describe the infrastructure. CloudFormation is tightly integrated with AWS services, making it a natural choice for managing infrastructure within the AWS ecosystem. For instance, a DevOps Engineer could use CloudFormation to create and manage an entire AWS environment, including virtual private clouds (VPCs), subnets, and security groups.

- Azure Resource Manager (ARM) Templates: Azure Resource Manager (ARM) templates are used to define and deploy Azure resources declaratively. These templates, written in JSON, specify the resources needed for an application. They are similar to AWS CloudFormation but specifically for the Azure platform. A DevOps Engineer working with Azure might use ARM templates to deploy a complete application stack, including virtual machines, storage accounts, and databases.

- Google Cloud Deployment Manager: Google Cloud Deployment Manager is Google Cloud’s equivalent to CloudFormation and ARM templates. It enables the creation and management of Google Cloud resources using declarative YAML configurations, which can reference templates written in Jinja2 or Python. DevOps Engineers on Google Cloud Platform (GCP) leverage Deployment Manager to provision and manage their infrastructure.
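The declarative model these tools share can be illustrated with a toy planner that compares a desired state with the actual state and derives the create, update, and delete actions, similar in spirit to what `terraform plan` reports. This is a simplified sketch: the resource names and attribute dictionaries are hypothetical, and real tools also track dependencies and provider-specific attributes, and may replace a resource rather than update it in place.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Return (action, resource_name) pairs needed to reach the desired state."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))  # exists but is no longer declared
    return actions


desired = {
    "vm.web": {"instance_type": "t2.micro"},
    "sg.web": {"port": "443"},
}
actual = {
    "vm.web": {"instance_type": "t2.nano"},
    "vm.old": {"instance_type": "t2.micro"},
}
print(plan(desired, actual))
# [('create', 'sg.web'), ('update', 'vm.web'), ('delete', 'vm.old')]
```

Running the planner again once the actual state matches the desired state yields an empty list, which is what makes declarative provisioning safe to repeat.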

Implementing a Basic IaC Pipeline with Terraform

Terraform provides a practical and versatile solution for implementing IaC. This step-by-step guide demonstrates how to create a basic IaC pipeline using Terraform to provision a simple virtual machine on a cloud platform (for illustrative purposes, we will use AWS).

- Prerequisites:

- An AWS account with appropriate permissions.

- Terraform installed on your local machine. You can download it from the official Terraform website and follow the installation instructions for your operating system.

- AWS CLI (Command Line Interface) installed and configured with your AWS credentials.

- Project Setup:

- Create a new directory for your Terraform project (e.g., `terraform-vm`).

- Navigate to the new directory in your terminal.

- Create a file named `main.tf`. This file will contain your Terraform configuration.

- Configuration (main.tf):

Open `main.tf` in a text editor and add the following configuration. This example provisions an EC2 instance (virtual machine) on AWS.

Replace `"YOUR_AWS_REGION"` with your desired AWS region (e.g., “us-east-1”). Replace `"ami-xxxxxxxxxxxxxxxxx"` with a valid AMI ID for your chosen region. You can find AMI IDs in the AWS console.

```hcl
# Configure the AWS provider for the target region.
provider "aws" {
  region = "YOUR_AWS_REGION"
}

# A single EC2 instance managed by Terraform.
resource "aws_instance" "example" {
  ami           = "ami-xxxxxxxxxxxxxxxxx"
  instance_type = "t2.micro"

  tags = {
    Name = "Terraform-Managed-VM"
  }
}
```

- Initialization:

In your terminal, navigate to the project directory and run the following command to initialize the Terraform project. This downloads the necessary provider plugins.

```shell
terraform init
```

- Planning:

Run the following command to create an execution plan. This shows you what Terraform will do (create, modify, or delete resources) based on your configuration.

```shell
terraform plan
```

- Applying:

If the plan looks correct, run the following command to apply the configuration and create the EC2 instance. Terraform will prompt you to confirm the action.

```shell
terraform apply
```

Type `yes` and press Enter to confirm.

- Verification:

Once the `apply` command completes, Terraform prints a summary of the resources it added, changed, or destroyed. Verify that the EC2 instance has been created in your AWS console, or run `terraform show` to inspect the resources recorded in the state.

- Destroying the Infrastructure:

To destroy the resources created by Terraform, run the following command:

```shell
terraform destroy
```

Type `yes` and press Enter to confirm. This will delete the EC2 instance and any other resources managed by this Terraform configuration.

Important Considerations:

- State Management: Terraform stores the state of your infrastructure in a state file (terraform.tfstate). This file is crucial for managing your infrastructure. For production environments, it’s recommended to store the state file remotely (e.g., in an AWS S3 bucket) to enable collaboration and prevent data loss.

- Version Control: Always store your Terraform configuration files in a version control system (e.g., Git). This allows you to track changes, collaborate with others, and revert to previous versions if necessary.

- Security: Be mindful of security best practices when writing Terraform configurations. Avoid hardcoding sensitive information (e.g., access keys). Use environment variables or secrets management tools to handle sensitive data.

- Modules: Use Terraform modules to encapsulate reusable infrastructure components. This promotes code reuse and simplifies complex configurations.

Continuous Integration and Continuous Deployment (CI/CD)

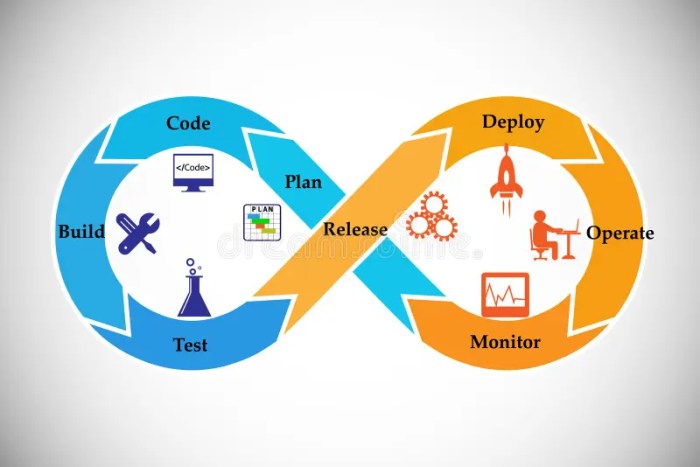

CI/CD is a cornerstone of modern DevOps practices, enabling faster and more reliable software releases. It’s a methodology focused on automating the software development lifecycle, from code integration to deployment, ensuring that changes are integrated frequently and delivered to users rapidly. This approach fosters a culture of continuous improvement and feedback, leading to higher quality software and increased developer productivity.

Principles of CI/CD Implementation

A DevOps Engineer implements CI/CD by establishing a streamlined workflow that automates the build, test, and deployment processes. This involves integrating code changes frequently, automating testing at various stages, and automating the deployment process to different environments. The core principles are centered around automation, frequent integration, and continuous feedback.

- Continuous Integration (CI): CI focuses on integrating code changes from multiple developers into a shared repository frequently. This typically involves automated builds and tests triggered by code commits. The goal is to identify and resolve integration issues early and often.

For example, a developer commits code to a Git repository. The CI system, such as Jenkins or GitLab CI, automatically detects the change and triggers a build process. This process includes compiling the code, running unit tests, and potentially performing static code analysis. If the build fails or tests fail, the developer receives immediate feedback, allowing them to address the issues promptly.

- Continuous Delivery (CD): CD builds upon CI by automating the release process, making software releases more predictable and reliable. Code changes that pass the CI stage are automatically prepared for release. This can involve packaging the application, preparing configuration files, and performing other necessary steps.

For example, after a successful CI build, the application package might be automatically deployed to a staging environment for further testing. This could involve integration tests, user acceptance tests (UAT), and performance testing. If these tests pass, the application is ready for deployment to production.

- Continuous Deployment (CD): Continuous deployment takes continuous delivery a step further by automating the release of code changes to production. With continuous deployment, every code change that passes the CI/CD pipeline is automatically released to the production environment. This requires a high degree of automation and a robust testing strategy.

For example, after successful testing in the staging environment, the application is automatically deployed to production. This might involve a blue/green deployment strategy, where the new version of the application is deployed alongside the existing version and traffic is switched to it once it has been verified, allowing for a smooth transition and a quick rollback if necessary. The key difference between Continuous Delivery and Continuous Deployment is whether the release to production is automated: continuous delivery keeps a manual approval step before production, while continuous deployment releases automatically.
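The fast-feedback principle behind CI/CD can be modeled as an ordered list of stages that stops at the first failure, so later stages (including any production deployment) never run on a broken build. This is a minimal sketch: the stage names and the lambda stand-ins are hypothetical, and in a real pipeline each stage would invoke a build tool, test runner, or deployment script while the CI server (Jenkins, GitLab CI, CircleCI) handles orchestration.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop at the first failure so feedback is immediate."""
    completed: List[str] = []
    for name, step in stages:
        print(f"[stage] {name} ...")
        if not step():
            print(f"[stage] {name} FAILED, later stages skipped")
            return False, completed
        completed.append(name)
    return True, completed


stages: List[Stage] = [
    ("build", lambda: True),
    ("unit-tests", lambda: True),
    ("deploy-staging", lambda: True),
    ("integration-tests", lambda: False),  # simulated failure
    ("deploy-production", lambda: True),   # never reached
]
ok, done = run_pipeline(stages)
print(ok, done)  # False ['build', 'unit-tests', 'deploy-staging']
```

In a continuous delivery setup, the `deploy-production` stage would additionally wait for a manual approval; in continuous deployment it runs as soon as every earlier stage passes.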

Examples of CI/CD Pipelines

CI/CD pipelines vary depending on the application type, infrastructure, and specific requirements. Here are examples for different application types:

- Web Application Pipeline:

For a web application, the pipeline might include:

- Code commit to Git repository.

- Automated build using a build tool (e.g., Maven, Gradle, npm).

- Unit tests and integration tests.

- Static code analysis.

- Containerization of the application (e.g., Docker).

- Deployment to a staging environment.

- User acceptance testing (UAT).

- Deployment to production environment, possibly using a blue/green deployment strategy.

- Mobile Application Pipeline:

A mobile application pipeline might include:

- Code commit to Git repository.

- Automated build for different platforms (e.g., iOS, Android).

- Unit tests, UI tests, and integration tests.

- Code signing.

- Deployment to a testing distribution platform (e.g., TestFlight, Firebase App Distribution).

- Release to app stores (e.g., Apple App Store, Google Play Store).

- Microservices Pipeline:

For microservices, the pipeline might include:

- Code commit to a Git repository for each microservice.

- Automated build and packaging of each microservice.

- Unit tests, integration tests, and end-to-end tests.

- Containerization of each microservice (e.g., Docker).

- Deployment to a container orchestration platform (e.g., Kubernetes).

- Monitoring and logging setup.
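The blue/green strategy mentioned in the web application pipeline reduces to a single routing decision: two environments run side by side, and the router sends all traffic to one of them. The toy model below assumes a hypothetical health-check callback; in practice the switch is made in a load balancer, a DNS record, or a Kubernetes Service selector.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreenRouter:
    """Toy router that sends all traffic to one of two environments."""
    versions: Dict[str, str] = field(default_factory=lambda: {"blue": "v1", "green": ""})
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version: str, healthy: Callable[[str], bool]) -> bool:
        """Deploy to the idle environment; switch traffic only if it is healthy."""
        target = self.idle
        self.versions[target] = version
        if not healthy(target):
            return False  # live environment untouched, nothing to roll back
        self.live = target
        return True

    def rollback(self) -> None:
        """Point traffic back at the previous environment, which is still running."""
        self.live = self.idle


router = BlueGreenRouter()
router.deploy("v2", healthy=lambda env: True)
print(router.live, router.versions[router.live])  # green v2
router.rollback()
print(router.live, router.versions[router.live])  # blue v1
```

Rollback is fast because the previous version is never torn down during the switch; the cost is running two full environments for the duration of the release.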

Comparison of CI/CD Tools

Several tools are available to implement CI/CD pipelines, each with its strengths and weaknesses. The choice of tool depends on the specific project needs, team expertise, and budget.

| Feature | Jenkins | GitLab CI | CircleCI |
|---|---|---|---|
| Ease of Setup | Requires initial setup and configuration; can be complex. | Integrated with GitLab; relatively easy to set up with a `.gitlab-ci.yml` file. | Easy to set up, especially for projects hosted on GitHub or GitLab. |
| Flexibility | Highly flexible with a vast plugin ecosystem. | Good flexibility; configuration via YAML files. | Good flexibility; supports various languages and platforms. |
| Scalability | Highly scalable with distributed builds using a controller/agent architecture (formerly called master/slave). | Scalable, particularly within the GitLab ecosystem. | Scalable; offers parallel builds and caching. |
| Cost | Open-source; free, but requires infrastructure to run. | Free for basic use; paid plans for more features and resources. | Free for limited use; paid plans for more features and resources. |
| Community Support | Large and active community. | Good community support, integrated with GitLab. | Strong community support. |

Monitoring and Logging

Monitoring and logging are fundamental pillars of a successful DevOps implementation. They provide invaluable insights into the health and performance of applications and infrastructure, enabling proactive identification and resolution of issues. Effective monitoring and logging practices are crucial for maintaining system stability, optimizing performance, and ensuring a positive user experience. They empower DevOps engineers to make data-driven decisions and continuously improve the overall system.

Importance of Monitoring and Logging in a DevOps Environment

The implementation of robust monitoring and logging practices is crucial for several key reasons within a DevOps environment. These practices directly contribute to the efficiency, reliability, and responsiveness of the system.

- Proactive Issue Detection: Monitoring allows for the real-time tracking of system metrics, enabling early detection of potential problems before they impact users. For example, if CPU usage spikes unexpectedly, alerts can be triggered to investigate the cause.

- Faster Troubleshooting: Logging provides detailed records of events, errors, and user actions, which are essential for pinpointing the root cause of issues. This accelerates the troubleshooting process and reduces downtime. For instance, if a service fails, logs can reveal the exact point of failure and the underlying reason.

- Performance Optimization: Monitoring and logging data can be used to identify performance bottlenecks and areas for optimization. By analyzing metrics such as response times and resource utilization, DevOps engineers can fine-tune applications and infrastructure for optimal performance.

- Improved User Experience: By proactively identifying and resolving issues, monitoring and logging contribute to a more stable and reliable system, ultimately improving the user experience. A smooth and responsive application leads to greater user satisfaction.

- Data-Driven Decision Making: Monitoring and logging data provide valuable insights that can be used to inform decisions about resource allocation, capacity planning, and application design. This data-driven approach helps optimize resource utilization and ensures scalability.

- Security and Compliance: Logging plays a critical role in security monitoring and compliance. It provides an audit trail of system activity, enabling the detection of security breaches and ensuring adherence to regulatory requirements.
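The CPU-spike example can be made concrete with a threshold rule that fires only when a metric stays above a limit for several consecutive samples, similar in spirit to the `for` duration in Prometheus alerting rules. The threshold, sample count, and readings below are hypothetical.

```python
from typing import Iterable, List


def alert_points(samples: Iterable[float], threshold: float, consecutive: int) -> List[int]:
    """Return sample indices at which an alert starts firing.

    An alert fires when `consecutive` samples in a row exceed `threshold`,
    which filters out brief spikes that resolve on their own.
    """
    fired: List[int] = []
    streak = 0
    for i, value in enumerate(samples):
        streak = streak + 1 if value > threshold else 0
        if streak == consecutive:
            fired.append(i)
    return fired


cpu = [35, 40, 95, 42, 88, 91, 93, 97, 60, 92]
print(alert_points(cpu, threshold=85, consecutive=3))  # [6]
```

The single spike at index 2 is ignored, while the sustained run starting at index 4 triggers one alert, which keeps on-call engineers from being paged for transient noise.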

Tools and Techniques for Monitoring Application Performance and Infrastructure Health

A wide range of tools and techniques are employed to monitor application performance and infrastructure health in a DevOps environment. These tools capture various metrics and provide insights into the system’s behavior.

- Application Performance Monitoring (APM) Tools: APM tools, such as New Relic, Dynatrace, and AppDynamics, provide detailed insights into application performance, including transaction tracing, error rates, and response times. They help identify performance bottlenecks and pinpoint the root cause of issues within the application code. These tools often feature dashboards and alerts to notify teams of performance degradations.

- Infrastructure Monitoring Tools: Infrastructure monitoring tools, such as Prometheus and Nagios (often paired with Grafana for dashboards), monitor the health and performance of infrastructure components, including servers, networks, and databases. They collect metrics like CPU usage, memory utilization, disk I/O, and network latency, and raise alerts when thresholds are exceeded, indicating potential problems.

- Log Management Tools: Log management tools, such as the ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, and Graylog, centralize and analyze logs from various sources. They allow DevOps engineers to search, filter, and visualize logs to identify patterns, troubleshoot issues, and monitor system behavior. These tools often provide features for log aggregation, indexing, and alerting.

- Synthetic Monitoring: Synthetic monitoring simulates user interactions to proactively test the availability and performance of applications. Tools like Pingdom and UptimeRobot simulate user actions from various locations to identify potential issues before users are affected. This can include checking website uptime, API response times, and overall application performance.

- Real User Monitoring (RUM): RUM tools capture performance data from real user interactions with the application. They provide insights into the user experience, including page load times, errors, and session duration. Tools like Google Analytics and Sentry offer RUM capabilities, enabling teams to understand how users are experiencing the application in real-time.

- Container Monitoring: Container monitoring tools, such as cAdvisor, kube-state-metrics, and the Kubernetes Metrics Server, provide insights into the health and performance of containerized applications. They track container resource usage, health-check results, and the state of orchestrated workloads. This lets teams monitor container performance and manage container deployments effectively.
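Most of the tools above collect a metric and compare it against a threshold. The sketch below illustrates this. It computes a nearest-rank 95th-percentile response time from raw samples and reports whether the result breaches a limit. The 500 ms threshold and the function names are hypothetical. Real tools such as Prometheus compute percentiles differently, for example from histogram buckets.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples in window")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_alert(samples_ms: Sequence[float], threshold_ms: float = 500.0) -> bool:
    """True when p95 latency in the window exceeds the (hypothetical) threshold."""
    return percentile(samples_ms, 95) > threshold_ms


if __name__ == "__main__":
    window = [120.0] * 94 + [900.0] * 6  # 6% of requests are slow
    print(percentile(window, 95), latency_alert(window))  # 900.0 True
```

Alerting on a high percentile rather than the mean is a deliberate choice. In the sample window the average is only 166.8 ms, which would hide the slow tail that users actually feel.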

Scenario: Troubleshooting a Production Issue Using Monitoring Data

Consider a scenario where a DevOps Engineer is alerted to a sudden increase in error rates on a production e-commerce website. The engineer uses monitoring data to diagnose and resolve the issue.

- Alert Received: An alert from the APM tool (e.g., New Relic) indicates a significant increase in error rates for the “checkout” service. The alert includes details such as the affected service, the time the error rate spiked, and the severity of the issue.

- Data Analysis: The DevOps Engineer accesses the APM tool’s dashboard to analyze the data. The dashboard displays the following information:

  - Error Rate Graph: A graph showing the error rate for the “checkout” service, which has recently spiked dramatically.

  - Transaction Traces: Detailed transaction traces for the “checkout” service, highlighting the slowest transactions and the errors encountered.

  - Database Performance Metrics: Metrics showing increased database query times related to the checkout process.

- Root Cause Identification: Based on the data, the engineer identifies the following:

  - The errors are primarily related to database connection timeouts.

  - Slow database queries are causing the timeouts.

  - The increased database query times coincide with a recent deployment of a new feature.

- Troubleshooting Steps: The DevOps Engineer takes the following steps:

  - Roll Back the Deployment: Because the issue appears to be related to the new feature deployment, the engineer initiates a rollback to the previous stable version of the “checkout” service through the CI/CD pipeline.

  - Database Optimization: In parallel, the engineer investigates the database queries, identifies an inefficient query, and optimizes it to improve performance.

  - Monitoring and Verification: After the rollback and query optimization, the engineer monitors the error rates and database performance metrics in real time. The APM tool shows that the error rates have decreased and database query times have improved.

- Resolution and Documentation: The issue is resolved, and the website is functioning normally. The engineer documents the incident, including the root cause, the steps taken for resolution, and any preventive measures implemented, so that similar incidents can be avoided. The engineer also schedules a post-mortem to analyze the incident in detail and identify further improvements.
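The root-cause step in this scenario depends on noticing that the error spike coincides with a deployment. The sketch below shows that correlation under stated assumptions. Error rates are assumed to arrive as (timestamp, rate) pairs, and deployment times are assumed to be available from the CI/CD system. The 3× baseline factor, the 30-minute window, the data shapes and the function names are all hypothetical.

```python
from bisect import bisect_right
from typing import Optional, Sequence, Tuple


def first_spike(series: Sequence[Tuple[int, float]], baseline: float,
                factor: float = 3.0) -> Optional[int]:
    """Return the timestamp of the first sample whose error rate exceeds
    baseline * factor, or None if the series never spikes."""
    for ts, rate in series:
        if rate > baseline * factor:
            return ts
    return None


def suspect_deployment(deploy_times: Sequence[int], spike_ts: int,
                       max_gap: int = 1800) -> Optional[int]:
    """Return the latest deployment at or before the spike if it happened
    within max_gap seconds of it; deploy_times must be sorted ascending."""
    idx = bisect_right(deploy_times, spike_ts)
    if idx == 0:
        return None
    candidate = deploy_times[idx - 1]
    return candidate if spike_ts - candidate <= max_gap else None


if __name__ == "__main__":
    errors = [(1000, 0.5), (1060, 0.6), (1120, 4.2), (1180, 5.1)]  # % of requests failing
    deploys = [200, 1090]                                          # deployment timestamps
    spike = first_spike(errors, baseline=0.5)
    print(spike, suspect_deployment(deploys, spike))  # 1120 1090
```

A match like this is only a lead, not proof of causation. It tells the engineer which change to inspect (and possibly roll back) first.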

Security and Compliance

A DevOps Engineer plays a crucial role in embedding security and compliance throughout the software development lifecycle. They bridge the gap between development, operations, and security teams, ensuring that security is not an afterthought but an integral part of the process. This proactive approach helps organizations protect their data, meet regulatory requirements, and maintain customer trust.

Ensuring Security and Compliance

DevOps Engineers are responsible for integrating security practices into every stage of the CI/CD pipeline. This includes automating security checks, implementing security policies, and ensuring that infrastructure and applications are secure by design. Their focus is on shifting security left, which means addressing security concerns early in the development process, leading to faster remediation and reduced risk. This involves close collaboration with security teams to understand vulnerabilities, define security requirements, and implement security controls.

They also monitor systems and applications for security threats and ensure compliance with relevant regulations and industry standards.

Security Best Practices Implementation

DevOps Engineers implement various security best practices to safeguard applications and infrastructure. These practices are often automated and integrated into the CI/CD pipeline to ensure consistency and efficiency. Some key examples include:

- Automated Security Scanning: Implementing automated vulnerability scanning tools to identify weaknesses before deployment. Examples include OWASP ZAP, which probes a running web application in a test environment, and Nessus, which scans hosts and network services. These scans can be integrated into the CI/CD pipeline to run automatically on every code commit or build. For example, a scan could be configured to fail the build if a critical vulnerability is detected, preventing the deployment of vulnerable code.

- Infrastructure as Code (IaC) Security: Using IaC tools (e.g., Terraform, CloudFormation) to define infrastructure configurations and enforce security policies. This ensures that infrastructure is provisioned consistently and securely. For example, security configurations, like enabling encryption at rest for data storage, can be codified in the IaC templates and applied consistently across all environments.

- Secrets Management: Implementing secure methods for managing secrets (e.g., API keys, passwords) using tools like HashiCorp Vault or AWS Secrets Manager. This prevents hardcoding secrets in code or configuration files. For instance, rather than storing a database password directly in a configuration file, a DevOps Engineer would store the password in a secrets management system and then use an automated process to retrieve the secret at runtime.

- Access Control and Identity Management: Implementing robust access controls using tools like AWS IAM, Azure Active Directory, or Google Cloud IAM. This involves defining roles and permissions to ensure that users and services have only the necessary access to resources. For example, using the principle of least privilege, a DevOps Engineer can grant a specific service only the minimum permissions required to perform its function, reducing the potential impact of a security breach.

- Security Auditing and Logging: Implementing comprehensive logging and auditing to track user activity, system events, and security incidents. This data is crucial for identifying security breaches and investigating incidents. For example, logs from various sources (applications, servers, network devices) can be aggregated and analyzed using a SIEM (Security Information and Event Management) tool to detect suspicious activity.

- Regular Security Updates and Patching: Automating the process of applying security patches and updates to operating systems, applications, and libraries. This helps to address known vulnerabilities and protect against exploits. For example, a DevOps Engineer might use a configuration management tool like Ansible to automate the patching of servers on a regular schedule.

- Container Security: Securing containerized applications using tools like Docker Bench for Security or by integrating security scanning into the container build process. This includes scanning images for vulnerabilities and enforcing security best practices within the container environment. For example, the DevOps Engineer can incorporate a container image scanner as part of the CI/CD pipeline, so that every new container image is checked for vulnerabilities.
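The "fail the build on a critical vulnerability" policy can be expressed as a small gate step in the pipeline. The sketch below assumes a scanner has already written its findings as a JSON list of objects with `id` and `severity` fields. That report format is hypothetical. Each real scanner has its own schema, so a real gate would need to map fields accordingly.

```python
import json
import sys
from typing import Iterable, List

# Severities that fail the build; add "HIGH" for a stricter policy.
BLOCKING = frozenset({"CRITICAL"})


def blocking_findings(findings: Iterable[dict], blocking=BLOCKING) -> List[str]:
    """Return IDs of findings whose severity (case-insensitive) is blocking."""
    return [f["id"] for f in findings
            if str(f.get("severity", "")).upper() in blocking]


def gate(report_json: str) -> int:
    """Return 0 to let the pipeline continue, 1 to fail the build."""
    findings = json.loads(report_json)  # hypothetical format: list of {"id", "severity"}
    blocked = blocking_findings(findings)
    for vuln_id in blocked:
        print(f"blocking vulnerability: {vuln_id}", file=sys.stderr)
    return 1 if blocked else 0


if __name__ == "__main__":
    sample = ('[{"id": "VULN-1", "severity": "low"},'
              ' {"id": "VULN-2", "severity": "critical"}]')
    print(gate(sample))  # 1 (and VULN-2 is reported on stderr)
```

In a CI job, the last line would be `sys.exit(gate(report_text))` instead. The non-zero exit code then stops the pipeline before the deploy stage.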

Common Security Vulnerabilities and Mitigation Strategies

DevOps Engineers actively mitigate common security vulnerabilities by implementing various strategies. Here’s a list of common vulnerabilities and how DevOps Engineers address them:

- SQL Injection: This vulnerability allows attackers to manipulate database queries.

  - Mitigation: Use parameterized queries or prepared statements, and validate and sanitize input to prevent malicious code from being injected into queries.

- Cross-Site Scripting (XSS): This vulnerability allows attackers to inject malicious scripts into websites viewed by other users.

  - Mitigation: Encode output so that injected scripts are not executed, use a Content Security Policy (CSP) to control the resources the browser is allowed to load, and validate and sanitize user input.

- Cross-Site Request Forgery (CSRF): This vulnerability tricks users into performing unwanted actions on a web application where they are currently authenticated.

  - Mitigation: Use anti-CSRF tokens, set the SameSite attribute on cookies, and verify the Origin or Referer header.

- Broken Authentication and Session Management: This vulnerability involves weaknesses in how a system handles user authentication and session management.

  - Mitigation: Enforce strong password policies, use multi-factor authentication (MFA), and generate, store, and expire session IDs securely.

- Security Misconfiguration: This involves improperly configured systems, applications, or services.

  - Mitigation: Use Infrastructure as Code (IaC) to ensure consistent and secure configurations, regularly audit configurations, and follow security hardening guidelines.

- Sensitive Data Exposure: This involves the exposure of sensitive data, such as passwords or API keys.

  - Mitigation: Encrypt sensitive data at rest and in transit, use secrets management tools, and enforce access controls.

- Vulnerable Components: This involves the use of outdated or vulnerable software components.

  - Mitigation: Regularly update software dependencies, and use a Software Composition Analysis (SCA) tool to identify known vulnerabilities in them.

- Insufficient Logging and Monitoring: This makes it difficult to detect and respond to security incidents.

  - Mitigation: Implement comprehensive logging and monitoring, use a Security Information and Event Management (SIEM) system to analyze logs, and establish incident response procedures.
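The first mitigation is easiest to see side by side. The sketch below uses Python's built-in `sqlite3` module to contrast string-built SQL with a parameterized query. The `users` table and its rows are invented for the example. The same principle applies to other database drivers, although their placeholder syntax may differ.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "viewer")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is spliced directly into the SQL text.
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the driver binds `name` as a value; it is never parsed as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR matches every row
    print(len(find_user_safe(conn, payload)))    # 0: treated as a literal name
```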

Cloud Computing and DevOps

Cloud computing has profoundly reshaped the landscape of software development and IT operations, fundamentally altering the responsibilities and skill sets required of a DevOps Engineer. The shift towards cloud platforms has enabled unprecedented levels of scalability, agility, and cost-efficiency, while simultaneously introducing new complexities that DevOps professionals must navigate. This section will explore the impact of cloud platforms on the DevOps role, examining cloud-specific services, tools, and practical deployment strategies.

Impact of Cloud Platforms on the DevOps Engineer’s Role

The adoption of cloud platforms has significantly transformed the DevOps Engineer’s role, expanding its scope and emphasizing cloud-native skills. Cloud environments provide a wealth of services that streamline various aspects of the software development lifecycle, including infrastructure provisioning, application deployment, and monitoring. The core impact includes:

- Infrastructure as Code (IaC) becomes paramount: Cloud platforms heavily rely on IaC for managing infrastructure. DevOps Engineers are expected to write code (e.g., using Terraform, AWS CloudFormation, Azure Resource Manager) to define and provision cloud resources. This enables automated, repeatable, and version-controlled infrastructure deployments.

- Increased focus on automation: Cloud platforms facilitate automation at every stage, from resource provisioning to application deployment and scaling. DevOps Engineers leverage automation tools and scripting to optimize workflows and reduce manual intervention.

- Enhanced monitoring and observability: Cloud providers offer comprehensive monitoring and logging services. DevOps Engineers use these tools to gain deep insights into application performance, identify issues, and ensure optimal system health.

- Greater emphasis on security: Cloud security is a shared responsibility. DevOps Engineers must understand cloud security best practices, implement security controls, and ensure compliance with industry regulations.

- Expanded skillset requirements: DevOps Engineers need to be proficient in cloud-specific services and tools, including containerization, orchestration, serverless computing, and various platform-specific services.

Cloud-Specific Services and Tools Used by DevOps Engineers

Cloud platforms offer a diverse range of services and tools that DevOps Engineers utilize to build, deploy, and manage applications. The specific services and tools employed depend on the chosen cloud provider (e.g., AWS, Azure, GCP) and the application’s requirements. Some key categories and examples include:

- Compute Services: These services provide the infrastructure for running applications.

  - AWS: Amazon Elastic Compute Cloud (EC2), AWS Lambda, Amazon Elastic Container Service (ECS), Amazon Elastic Kubernetes Service (EKS).

  - Azure: Azure Virtual Machines, Azure Functions, Azure Container Instances, Azure Kubernetes Service (AKS).

  - GCP: Google Compute Engine, Google Cloud Functions, Google Kubernetes Engine (GKE), Cloud Run.

- Storage Services: These services offer various storage options for data and application artifacts.

  - AWS: Amazon Simple Storage Service (S3), Amazon Elastic Block Store (EBS), Amazon Elastic File System (EFS).

  - Azure: Azure Blob Storage, Azure Disk Storage, Azure Files.

  - GCP: Google Cloud Storage, Persistent Disk, Cloud Filestore.

- Networking Services: These services enable connectivity and communication within and outside the cloud environment.

  - AWS: Amazon Virtual Private Cloud (VPC), Amazon Route 53, Elastic Load Balancing.

  - Azure: Azure Virtual Network, Azure DNS, Azure Load Balancer.

  - GCP: Google Virtual Private Cloud (VPC), Cloud DNS, Cloud Load Balancing.

- Database Services: These services provide managed database solutions.

  - AWS: Amazon Relational Database Service (RDS), Amazon DynamoDB, Amazon Aurora.

  - Azure: Azure SQL Database, Azure Cosmos DB, Azure Database for PostgreSQL.

  - GCP: Cloud SQL, Cloud Spanner, Cloud Datastore.

- Containerization and Orchestration: These tools manage containerized applications.

  - Docker: A platform for building, shipping, and running containers.

  - Kubernetes: An open-source container orchestration platform.

  - AWS: ECS, EKS.

  - Azure: AKS.

  - GCP: GKE.

- CI/CD Tools: These tools automate the software delivery pipeline.

  - AWS: AWS CodePipeline, AWS CodeBuild, AWS CodeDeploy.

  - Azure: Azure DevOps.

  - GCP: Cloud Build, Cloud Deploy.

  - Jenkins: A popular open-source CI/CD tool.

  - GitLab CI/CD: Integrated CI/CD features within GitLab.

- Monitoring and Logging Tools: These tools provide visibility into application performance and system health.

  - AWS: Amazon CloudWatch, AWS X-Ray.

  - Azure: Azure Monitor, Azure Application Insights.

  - GCP: Cloud Monitoring, Cloud Logging.

  - Prometheus: An open-source monitoring system.

  - Grafana: A data visualization and monitoring tool.

  - ELK Stack (Elasticsearch, Logstash, Kibana): A popular logging and analytics stack.

Deploying an Application to a Cloud Platform Using IaC

Deploying an application to a cloud platform using IaC involves defining the infrastructure required for the application in code, automating the provisioning process, and ensuring repeatability and consistency. This example demonstrates deploying a simple web application to AWS using Terraform.

Step 1: Define Infrastructure as Code (Terraform Configuration)

Create a Terraform configuration file (e.g., `main.tf`) to define the necessary AWS resources:

```terraform
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
  }
}

provider "aws" {
  region = "us-east-1" # Replace with your desired region
}

resource "aws_instance" "example" {
  ami           = "ami-0c55b84c255c0023a" # Replace with a valid AMI for your region
  instance_type = "t2.micro"

  tags = {
    Name = "example-instance"
  }
}
```

This configuration defines an AWS EC2 instance. The `ami` attribute specifies the Amazon Machine Image (AMI) to use (replace it with a valid AMI for your region), and the `instance_type` attribute specifies the instance size.

Step 2: Initialize Terraform

Run the following command to initialize Terraform:

```bash
terraform init
```

This command downloads the necessary provider plugins.

Step 3: Plan the Deployment

Run the following command to create an execution plan:

```bash
terraform plan
```

This command shows the changes Terraform will make to your infrastructure.

Step 4: Apply the Configuration

Run the following command to apply the configuration and provision the resources:

```bash
terraform apply
```

Terraform will prompt you to confirm the changes. Type `yes` and press Enter to proceed.

Step 5: Verify the Deployment

After the deployment completes, verify that the EC2 instance has been created in the AWS Management Console or using the AWS CLI.

Step 6: Deploy the Application (e.g., Using Ansible or Docker)

Once the infrastructure is provisioned, deploy the application to the EC2 instance.

This can be done using various methods, such as:

- Ansible: Use Ansible playbooks to install dependencies, copy application files, and start the application.

- Docker: Build a Docker image containing the application and deploy it to the EC2 instance using Docker Compose or a similar tool.

Step 7: Automate with CI/CD

Integrate the IaC and application deployment steps into a CI/CD pipeline (e.g., using AWS CodePipeline, Azure DevOps, or Jenkins) to automate the entire process. This enables continuous integration and continuous deployment of the application.

This example demonstrates a simplified deployment. In a real-world scenario, the IaC configuration would be more complex, including resources like VPCs, subnets, security groups, load balancers, and databases.
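Automating Step 7 means running the Terraform commands non-interactively inside a pipeline. One way to sketch this in Python is to build the command sequence explicitly and run each step with `subprocess`, stopping at the first failure. Saving the plan with `-out` and then applying that saved plan file means the pipeline applies exactly what was planned. Applying a saved plan also does not prompt for confirmation. The working directory and the plan file name are placeholders. The sketch assumes the `terraform` binary is on the `PATH` and that cloud credentials come from the CI environment.

```python
import subprocess
from typing import List, Sequence


def terraform_commands(plan_file: str = "tfplan") -> List[List[str]]:
    """Return the non-interactive init/plan/apply sequence for a CI job."""
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying the saved plan applies exactly what was planned, without a prompt.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_pipeline(workdir: str, commands: Sequence[Sequence[str]]) -> None:
    """Run each command in workdir; check=True raises CalledProcessError on failure."""
    for cmd in commands:
        print("+", " ".join(cmd))
        subprocess.run(list(cmd), cwd=workdir, check=True)


if __name__ == "__main__":
    # Only print the steps here; a real CI job would call run_pipeline("infra", ...).
    for cmd in terraform_commands():
        print(" ".join(cmd))
```

Splitting plan and apply also leaves room for a manual approval stage between them, which many teams require for production changes.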

Containerization and Orchestration

Containerization and orchestration are pivotal aspects of modern DevOps practices, enabling efficient application deployment, scaling, and management. They contribute significantly to achieving the agility and scalability that are hallmarks of a successful DevOps implementation. These technologies streamline the software development lifecycle, allowing for faster releases and improved resource utilization.

Containerization Technologies in DevOps

Containerization technologies, such as Docker, provide a lightweight and portable way to package and run applications. Containers encapsulate an application and its dependencies into a single unit, ensuring that the application runs consistently across different environments.

- Isolation: Containers isolate applications from each other and the underlying infrastructure. This isolation prevents conflicts and ensures that applications can run independently without interfering with each other.

- Portability: Containers are designed to be portable, meaning an image can run on any system with a container runtime and a compatible operating system kernel and CPU architecture. This portability simplifies deployment across various environments, including development, testing, and production.

- Efficiency: Containers are lightweight because they share the host operating system’s kernel. This efficiency allows for faster startup times and reduced resource consumption compared to virtual machines.

- Consistency: Containerization ensures that applications run consistently, regardless of the underlying infrastructure. This consistency reduces the risk of “it works on my machine” issues and simplifies troubleshooting.

- Versioning: Container images are versioned with tags and identified by immutable digests, allowing easy rollback to a previous image if issues arise. This versioning simplifies updates and reduces downtime.

Container Orchestration Tools in DevOps

Container orchestration tools, such as Kubernetes, automate the deployment, scaling, and management of containerized applications. They provide a platform for managing container lifecycles, ensuring high availability, and optimizing resource utilization.

- Deployment Automation: Kubernetes automates the deployment of containerized applications, simplifying the process of releasing new versions and updates.

- Scaling: Kubernetes can scale applications up or down based on demand, for example with a HorizontalPodAutoscaler that adjusts replica counts from CPU utilization or other metrics, ensuring that resources are used efficiently.

- Service Discovery: Kubernetes provides service discovery, allowing containers to find and communicate with each other.

- Health Monitoring: Kubernetes monitors the health of containers (for example, through liveness probes) and automatically restarts unhealthy containers.

- Load Balancing: Kubernetes distributes traffic across multiple container instances, ensuring high availability and performance.

- Rolling Updates: Kubernetes supports rolling updates, which allow for the gradual deployment of new versions of an application without downtime.
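Demand-based scaling is usually handled by the Horizontal Pod Autoscaler. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below reproduces that arithmetic, including the default 10% tolerance (within which no change is made) and min/max bounds. It deliberately ignores the real controller's stabilization windows, pod-readiness handling and multi-metric logic. Treat it as an illustration of the formula, not the controller's implementation. The function name and defaults are assumptions.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the HPA core formula for a single metric."""
    if current <= 0 or target_metric <= 0:
        raise ValueError("current replicas and target metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current
    else:
        desired = math.ceil(current * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: 4 * 1.5 = 6 pods.
    print(desired_replicas(4, 90, 60))  # 6
```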

Architecture of a Kubernetes Cluster

A Kubernetes cluster is a collection of worker machines, called nodes, that run containerized applications. These nodes are managed by a control plane, which is responsible for the overall orchestration of the cluster. The architecture of a Kubernetes cluster typically includes the following components:

Control Plane:

- kube-apiserver: The central management point for the cluster. It exposes the Kubernetes API and is the front end for all control plane operations.

- etcd: A distributed key-value store that stores the configuration data for the cluster.

- kube-scheduler: Schedules pods onto nodes based on resource requirements and other constraints.

- kube-controller-manager: Runs controllers that manage various aspects of the cluster, such as replication, deployments, and service accounts.

- cloud-controller-manager: (Optional) Integrates with cloud providers to manage resources such as load balancers and storage volumes.

Nodes (Worker Machines):

- kubelet: An agent that runs on each node and communicates with the control plane. It is responsible for managing the pods and containers running on the node.

- kube-proxy: A network proxy that enables communication between pods and services.

- Container Runtime: Software responsible for running containers (e.g., containerd or CRI-O; Docker Engine can be used through the cri-dockerd adapter).

Pod: The smallest deployable unit in Kubernetes. A pod can contain one or more containers that share storage and network resources.

Service: An abstraction that defines a logical set of pods and a policy by which to access them. Services provide a stable IP address and DNS name for accessing pods.

Diagram Description:

The diagram illustrates a Kubernetes cluster architecture. At the center is the Control Plane, consisting of key components: kube-apiserver (acting as the central API), etcd (the distributed key-value store), kube-scheduler (scheduling pods), kube-controller-manager (managing cluster aspects), and optionally, cloud-controller-manager (for cloud integrations). The Control Plane manages the cluster and its resources. Connecting to the Control Plane are the Nodes (worker machines).

Each Node has kubelet (managing pods), kube-proxy (for network communication), and a Container Runtime (like Docker) to run containers. Pods, the smallest deployable units, reside on the Nodes and contain one or more containers. Services, which provide stable access points, are also shown, representing how applications are exposed within the cluster. Arrows indicate the flow of information and control between the Control Plane and the Nodes, emphasizing the dynamic interaction of the cluster components.
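The kube-scheduler's role can be pictured as two phases. It first filters out nodes that cannot fit the pod, then scores the remaining ones. The following toy sketch looks only at CPU and memory requests and uses a made-up "most CPU left over" score. The real scheduler runs many configurable filter and score plugins, so the classes and scoring rule here are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unreserved CPU in millicores
    free_mem_mi: int  # unreserved memory in MiB


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None (the pod would stay Pending)."""
    # Filter phase: drop nodes that cannot satisfy the pod's requests.
    feasible = [n for n in nodes
                if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi]
    if not feasible:
        return None
    # Score phase (toy rule): most CPU left over wins, memory breaks ties.
    best = max(feasible, key=lambda n: (n.free_cpu_m - pod.cpu_m,
                                        n.free_mem_mi - pod.mem_mi))
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 500, 2048), Node("node-b", 2000, 1024),
             Node("node-c", 1500, 4096)]
    print(schedule(PodRequest(cpu_m=1000, mem_mi=512), nodes))  # node-b
    print(schedule(PodRequest(cpu_m=8000, mem_mi=512), nodes))  # None
```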

Performance Optimization and Tuning

A DevOps Engineer plays a crucial role in ensuring applications perform optimally. This involves a proactive approach to identify and resolve performance bottlenecks, leading to improved user experience, reduced operational costs, and enhanced system reliability. The goal is to make applications run faster, more efficiently, and with minimal resource consumption.

Methods for Optimizing Application Performance

DevOps Engineers employ a variety of methods to optimize application performance, covering different stages of the application lifecycle. These methods are often implemented in conjunction with each other for a holistic approach.

- Monitoring and Profiling: Continuous monitoring of application performance metrics is essential. Profiling tools help identify code sections or database queries that consume excessive resources. This includes tracking CPU usage, memory consumption, disk I/O, network latency, and response times.

- Code Optimization: Analyzing and optimizing the application’s codebase is a critical aspect. This can involve refactoring inefficient code, optimizing algorithms, and minimizing resource-intensive operations.

- Database Optimization: Optimizing database performance is vital for many applications. This includes optimizing database queries, indexing tables appropriately, and tuning database server configurations.

- Caching: Implementing caching mechanisms, such as caching frequently accessed data in memory, can significantly reduce response times and improve overall performance. This can be applied at different levels, including server-side caching, client-side caching, and content delivery networks (CDNs).

- Load Balancing: Distributing traffic across multiple servers using load balancers prevents any single server from becoming overloaded, ensuring high availability and responsiveness.

- Infrastructure Optimization: Optimizing the underlying infrastructure, including server hardware, network configurations, and cloud resources, is also important. This can involve scaling resources dynamically based on demand and selecting the appropriate instance types.

- Continuous Performance Testing: Integrating performance testing into the CI/CD pipeline allows for the early detection of performance issues. This includes load testing, stress testing, and soak testing.
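Server-side caching can be illustrated with a small time-to-live (TTL) cache in front of an expensive lookup. This is a minimal in-process sketch. The `ttl_cache` decorator and `load_product` function are hypothetical. The injectable `clock` exists only to make the behavior easy to test. Production systems usually use a shared cache so that every application instance benefits.

```python
import functools
import time
from typing import Any, Callable, Dict, Tuple


def ttl_cache(ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
    """Cache a function's results per positional-argument tuple for ttl_seconds."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        store: Dict[Tuple[Any, ...], Tuple[float, Any]] = {}

        @functools.wraps(func)
        def wrapper(*args: Any) -> Any:
            now = clock()
            hit = store.get(args)
            if hit is not None and now - hit[0] < ttl_seconds:
                return hit[1]            # fresh entry: skip the slow call
            value = func(*args)          # miss or expired entry: recompute
            store[args] = (now, value)
            return value

        return wrapper
    return decorator


if __name__ == "__main__":
    calls = []
    fake_now = [0.0]

    @ttl_cache(ttl_seconds=30, clock=lambda: fake_now[0])
    def load_product(product_id: int) -> str:
        calls.append(product_id)         # stands in for a slow database query
        return f"product-{product_id}"

    load_product(1)
    load_product(1)                      # served from the cache
    fake_now[0] = 31.0
    load_product(1)                      # entry expired, so the query runs again
    print(len(calls))                    # 2
```

The TTL bounds how stale a cached answer can be. Choosing it is a trade-off between response time and data freshness.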

Performance Tuning Techniques for Different Applications

Different types of applications require specific performance tuning techniques based on their architecture and functionalities. The DevOps Engineer tailors the approach to match the application’s characteristics.

- Web Applications:

  - Frontend Optimization: Minifying and compressing JavaScript and CSS files, optimizing images, and leveraging browser caching can significantly improve frontend performance.

  - Backend Optimization: Optimizing database queries, caching frequently accessed data, and using efficient web server configurations are crucial.

  - Content Delivery Networks (CDNs): Utilizing CDNs to distribute static content geographically closer to users reduces latency.

- Database Applications:

  - Query Optimization: Analyzing and optimizing database queries, using appropriate indexes, and avoiding full table scans are vital.

  - Connection Pooling: Implementing connection pooling to reuse database connections reduces the overhead of establishing new connections.

  - Database Server Tuning: Tuning database server configurations, such as buffer pool size and query cache settings, based on the application’s workload.

- Microservices Applications:

  - Service Discovery and Load Balancing: Efficient service discovery and load balancing are critical for managing traffic across microservices.

  - Inter-Service Communication Optimization: Optimizing the communication between microservices, such as using efficient protocols like gRPC and minimizing data transfer.

  - Resource Management: Ensuring each microservice has sufficient resources (CPU, memory) allocated to handle its workload.

- Batch Processing Applications:

  - Parallel Processing: Utilizing parallel processing techniques to process large datasets concurrently.

  - Resource Allocation: Optimizing resource allocation (CPU, memory, disk I/O) for batch jobs.

  - Job Scheduling Optimization: Optimizing job scheduling to ensure efficient resource utilization.
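Connection pooling, listed under database applications, means opening a fixed number of connections once and lending them out. The sketch below builds a tiny pool on `queue.Queue` around `sqlite3`. The `ConnectionPool` class is hypothetical. In practice one would normally rely on the pool built into a database driver or ORM rather than writing one.

```python
import queue
import sqlite3
from contextlib import contextmanager
from typing import Callable, Iterator


class ConnectionPool:
    """A fixed-size pool that reuses connections instead of reopening them."""

    def __init__(self, factory: Callable[[], sqlite3.Connection], size: int = 4):
        self._idle: "queue.Queue[sqlite3.Connection]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())  # pay the connection cost once, up front

    @contextmanager
    def connection(self, timeout: float = 5.0) -> Iterator[sqlite3.Connection]:
        # Blocks until a connection is free; raises queue.Empty after `timeout`.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)       # return it for reuse instead of closing it

    def idle_count(self) -> int:
        return self._idle.qsize()


if __name__ == "__main__":
    # check_same_thread=False allows pooled connections to be used from other threads.
    pool = ConnectionPool(lambda: sqlite3.connect(":memory:", check_same_thread=False), size=2)
    with pool.connection() as conn:
        print(conn.execute("SELECT 1").fetchone())  # (1,)
    print(pool.idle_count())                         # 2
```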

Scenario: Identifying and Resolving a Performance Bottleneck

Consider an e-commerce website experiencing slow page load times during peak hours. A DevOps Engineer investigates and resolves the performance bottleneck.

- Monitoring and Alerting: The DevOps Engineer uses monitoring tools (e.g., Prometheus, Grafana) to track key performance indicators (KPIs) such as response times, error rates, and CPU utilization. Alerts are configured to trigger when response times exceed a certain threshold.

- Identifying the Bottleneck: When the alerts are triggered, the DevOps Engineer investigates the issue. Examining the monitoring dashboards reveals that database query response times are significantly high during peak hours. The CPU utilization on the database server is also nearing its capacity.

- Profiling the Application: The DevOps Engineer uses a profiling tool (e.g., New Relic, Datadog) to analyze the application’s code and identify slow database queries. The profiling tool reveals that a specific query related to displaying product recommendations is particularly slow.

- Database Query Optimization: The DevOps Engineer analyzes the slow query and identifies that it is missing an index on a frequently used column. They add the appropriate index to the database table.

  For example, the original query `SELECT * FROM products WHERE category_id = 123;` could be optimized by adding an index on the `category_id` column.

- Database Server Tuning: The DevOps Engineer also tunes the database server configuration to optimize performance. This includes increasing the buffer pool size to cache more data in memory and, where the database engine still provides a query cache, adjusting its settings (MySQL 8.0, for example, removed the query cache).

- Load Testing and Validation: After implementing the changes, the DevOps Engineer performs load testing to validate the improvements. They use a load testing tool (e.g., JMeter, LoadRunner) to simulate a high volume of traffic and measure the response times. The load testing results show a significant improvement in response times, confirming the effectiveness of the optimization.

- Deployment and Monitoring: The optimized code and database changes are deployed to production. The DevOps Engineer continues to monitor the application’s performance using the monitoring tools to ensure the improvements are sustained and to detect any new performance issues.
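The effect of the missing index can be reproduced on a small scale with SQLite's `EXPLAIN QUERY PLAN`. It reports whether a query scans the whole table or searches an index. This is only an analogy. The scenario does not name its database engine, the table here is a throwaway in-memory one, and the index name `idx_products_category_id` is made up. On other engines the equivalent check is their own `EXPLAIN` output.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's query-plan detail text as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable plan detail.
    return " | ".join(row[-1] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, category_id INTEGER, name TEXT)")
query = "SELECT * FROM products WHERE category_id = ?"

before = plan(conn, query, (123,))
conn.execute("CREATE INDEX idx_products_category_id ON products (category_id)")
after = plan(conn, query, (123,))

print(before)  # a full-table "SCAN ..." line (exact wording varies by SQLite version)
print(after)   # a "SEARCH ... USING INDEX idx_products_category_id ..." line
```

Checking the plan before and after a change like this, and then confirming with a load test as the scenario does, separates a real improvement from a coincidental one.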

Disaster Recovery and Business Continuity

A DevOps Engineer plays a critical role in ensuring the resilience and availability of systems. This involves proactive planning and implementation to minimize downtime and data loss in the event of unforeseen circumstances, such as natural disasters, hardware failures, or cyberattacks. Disaster recovery (DR) and business continuity (BC) are integral parts of a robust DevOps strategy.

Importance of Disaster Recovery and Business Continuity Planning

Disaster recovery and business continuity planning are paramount for safeguarding business operations. They provide a framework for maintaining critical services during disruptive events. The absence of such planning can lead to significant financial losses, reputational damage, and legal repercussions.

Strategies for Business Continuity

A DevOps Engineer employs several strategies to ensure business continuity. These strategies are designed to mitigate risks and enable rapid recovery.

- Redundancy: Implementing redundant systems and components, such as servers, databases, and network infrastructure, to eliminate single points of failure. This ensures that if one component fails, another can take over seamlessly. For example, using a load balancer to distribute traffic across multiple servers.

- Backup and Recovery: Establishing robust backup and recovery procedures for all critical data and applications. This includes regular backups, both on-site and off-site, and well-defined recovery processes. Consider implementing an automated backup solution that backs up data to a secure, off-site location, such as Amazon S3 or Azure Blob Storage.

- High Availability: Designing systems for high availability, meaning they stay operational with minimal downtime. This often involves techniques like clustering, failover mechanisms, and automated health checks. For instance, a database cluster can be configured to fail over automatically to a standby replica if the primary database fails.

- Geographic Redundancy: Deploying infrastructure across multiple geographic regions to protect against regional outages. This strategy involves replicating data and applications to different locations. A company might replicate its application and data to a different AWS region to ensure availability if one region experiences an outage.

- Automated Failover: Implementing mechanisms that automatically switch to backup systems or redundant resources when a failure occurs. This minimizes downtime and reduces the need for manual intervention. For example, Kubernetes restarts failed containers and, for workloads managed by controllers such as Deployments, replaces pods from a failed node with new pods on healthy nodes.

- Regular Testing and Drills: Conducting regular disaster recovery drills and tests to validate recovery plans and identify areas for improvement. Simulating a range of failure scenarios helps ensure the team is prepared to respond effectively.
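The health checks and automated failover described above can be sketched as a small controller that routes traffic to the highest-priority healthy endpoint. This is a minimal, hypothetical illustration: `FailoverController`, the endpoint names, and the three-failure threshold are assumptions, and in practice this logic usually lives in a load balancer, DNS failover service, or database cluster manager rather than in custom code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class FailoverController:
    """Route traffic to the highest-priority endpoint that is currently healthy.

    Hypothetical sketch: an endpoint counts as down only after
    `failure_threshold` consecutive failed health checks.
    """

    endpoints: List[str]             # priority order: primary first, then standbys
    check: Callable[[str], bool]     # health check; True means healthy
    failure_threshold: int = 3
    failures: Dict[str, int] = field(default_factory=dict)

    def run_checks(self) -> None:
        """Probe every endpoint once and update its consecutive-failure count."""
        for ep in self.endpoints:
            if self.check(ep):
                self.failures[ep] = 0
            else:
                self.failures[ep] = self.failures.get(ep, 0) + 1

    def active(self) -> Optional[str]:
        """Return the endpoint that should receive traffic, or None if all are down."""
        for ep in self.endpoints:
            if self.failures.get(ep, 0) < self.failure_threshold:
                return ep
        return None


if __name__ == "__main__":
    # Simulated health state; a real check would probe an HTTP endpoint or a database.
    healthy = {"db-primary": True, "db-standby": True}
    ctl = FailoverController(["db-primary", "db-standby"], check=lambda ep: healthy[ep])
    ctl.run_checks()
    print(ctl.active())  # db-primary
    healthy["db-primary"] = False
    for _ in range(3):
        ctl.run_checks()
    print(ctl.active())  # db-standby
```

Requiring several consecutive failures avoids failing over on a single dropped probe. Note that this sketch fails back as soon as the primary passes one check; production setups often gate failback behind a manual decision instead.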

Disaster Recovery Scenario Plan

A comprehensive disaster recovery plan is essential for outlining the steps to be taken in the event of a disaster. Here is a sample plan for a hypothetical e-commerce application.

- Assessment and Declaration: The first step involves assessing the impact of the disaster and declaring a disaster scenario. This typically relies on monitoring dashboards and alerts to detect failures, and on predefined communication protocols to notify the relevant teams.

- Communication: Establish clear communication channels for internal and external stakeholders. This includes notifying the relevant teams, customers, and partners about the situation and providing updates on the recovery progress.

- Failover Initiation: Initiate the failover process to the backup infrastructure. This involves activating redundant systems and services. If the primary database fails, initiate failover to the standby database.

- Data Restoration: Restore data from backups to the backup infrastructure so that the recovered application has the data it needs. For example, restore the latest database backups from a secure storage location.

- Application Recovery: Bring the application back online in the backup environment. This might involve restarting application servers and configuring network settings.

- Testing and Validation: Thoroughly test the recovered application to ensure functionality and data integrity. This includes verifying all core functionalities, such as order processing, payment gateway integration, and user account management.

- Monitoring and Stabilization: Continuously monitor the application and infrastructure for stability and performance. Address any issues that arise during the recovery process.

- Failback (Optional): Once the primary infrastructure is restored, plan and carry out a controlled failback to it.
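The plan above is an ordered sequence in which each step depends on the previous one succeeding. A minimal, hypothetical Python sketch of running such a runbook in order and halting at the first failure is shown here; the step names mirror the plan, while `run_runbook` and the placeholder lambdas are assumptions standing in for real automation such as Terraform runs, Ansible playbooks, or scripts.

```python
from typing import Callable, List, Tuple

# A step is a (name, action) pair; the action returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_runbook(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run recovery steps in order, halting at the first step that fails."""
    log: List[str] = []
    for name, action in steps:
        try:
            ok = action()
        except Exception as exc:  # treat an unexpected error as a failed step
            log.append(f"{name}: ERROR ({exc})")
            return False, log
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            return False, log
    return True, log


if __name__ == "__main__":
    # Hypothetical placeholders; each would wrap real recovery automation.
    plan: List[Step] = [
        ("failover_initiation", lambda: True),
        ("data_restoration", lambda: True),
        ("application_recovery", lambda: True),
        ("testing_and_validation", lambda: True),
    ]
    success, entries = run_runbook(plan)
    print("\n".join(entries))
```

Halting on failure prevents, for example, bringing the application online before data restoration has succeeded, and the returned log can be shared through the incident's communication channels.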

Tools Used:

- Cloud Provider Services: Use cloud provider services such as Amazon Route 53 for DNS failover, Amazon S3 for backup storage, and Amazon EC2 for standby compute capacity in the recovery environment.

- Infrastructure as Code and Configuration Management Tools: Use tools like Terraform (provisioning) and Ansible (configuration management) to automate infrastructure provisioning and configuration in the backup environment.

- Monitoring Tools: Implement monitoring tools such as Prometheus and Grafana to track system health and performance, and receive alerts during a disaster.

- Backup and Recovery Software: Employ backup and recovery solutions such as Veeam or Commvault for data protection and restoration.

- CI/CD Pipeline: Leverage the CI/CD pipeline to deploy updated application code and configurations to the backup environment.
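Monitoring can also confirm that backups are actually being written. The sketch below checks whether the newest backup is younger than a maximum age. It assumes the backup timestamps have already been fetched as timezone-aware datetimes (for example, from the last-modified times of objects in a backup bucket); the function names and the 24-hour threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional


def latest_backup_age(timestamps: Iterable[datetime], now: Optional[datetime] = None) -> Optional[timedelta]:
    """Return the age of the newest backup, or None if there are no backups.

    All datetimes must be timezone-aware; mixing naive and aware values raises TypeError.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    newest = max(timestamps, default=None)
    return None if newest is None else now - newest


def backup_is_fresh(timestamps: Iterable[datetime], max_age: timedelta, now: Optional[datetime] = None) -> bool:
    """True if at least one backup exists and the newest is no older than max_age."""
    age = latest_backup_age(timestamps, now)
    return age is not None and age <= max_age


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    backups = [
        datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc),
        datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc),
    ]
    print(latest_backup_age(backups, now))  # 6:00:00
    print(backup_is_fresh(backups, timedelta(hours=24), now))  # True
```

A check like this can run on a schedule and raise an alert through the monitoring stack when it returns False, so a silently failing backup job is caught before it is needed.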

Evolving Trends and Future of the Role

The DevOps Engineer role is dynamic, constantly adapting to technological advancements and shifting industry demands. Understanding the emerging trends and anticipating future requirements is crucial for DevOps professionals to remain relevant and effective. This section delves into the evolving landscape, examining key influences and outlining the skills necessary for success in the years to come.

Emerging Trends Shaping the Future of the DevOps Engineer Role

Several trends are significantly influencing the evolution of the DevOps Engineer role. These changes necessitate continuous learning and adaptation to leverage new technologies and methodologies effectively.

- Increased Focus on Security: Security is becoming an integral part of the DevOps lifecycle, giving rise to the concept of “DevSecOps.” This involves integrating security practices into every stage, from development to deployment and monitoring. DevOps Engineers will increasingly be responsible for implementing security automation, vulnerability scanning, and compliance checks. For example, organizations are adopting static and dynamic application security testing (SAST and DAST) and automated penetration testing to identify and mitigate security risks throughout the development pipeline.

- Rise of Serverless Computing: Serverless computing, which allows developers to build and run applications without managing servers, is gaining traction. DevOps Engineers will need to understand and manage serverless architectures, including the configuration and monitoring of serverless functions and event-driven systems. This involves mastering technologies like AWS Lambda, Azure Functions, and Google Cloud Functions, and adapting CI/CD pipelines to accommodate serverless deployments.

- Expansion of Infrastructure as Code (IaC): IaC continues to mature, with a broader range of tools and platforms available. DevOps Engineers will need to deepen their expertise in IaC tools like Terraform, Ansible, and CloudFormation, as well as embrace best practices for version control, testing, and modularization of infrastructure code. The ability to manage infrastructure as code is essential for achieving automation, consistency, and scalability.

- Growing Importance of Observability: Observability, encompassing monitoring, logging, and tracing, is becoming increasingly critical for understanding the behavior of complex, distributed systems. DevOps Engineers will need to leverage advanced observability tools and techniques to gain deeper insights into application performance, identify bottlenecks, and troubleshoot issues effectively. This includes using tools like Prometheus, Grafana, and Jaeger.

- Greater Adoption of AI and Machine Learning: Artificial intelligence (AI) and machine learning (ML) are being integrated into DevOps practices to automate tasks, improve efficiency, and enhance decision-making. DevOps Engineers will need to understand how to apply AI/ML techniques to areas like anomaly detection, performance prediction, and automated incident response. For example, AI-powered tools can analyze log data to identify potential issues and trigger automated remediation actions.
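As a small illustration of the automated compliance checks mentioned under DevSecOps, the following sketch scans text for two well-known secret formats: long-term AWS access key IDs (which begin with `AKIA`) and PEM private key headers. The rule set, `scan_text`, and the command-line wrapper are hypothetical and deliberately minimal; real pipelines typically rely on dedicated scanners with maintained rule sets.

```python
import re
import sys
from typing import List, Tuple

# Illustrative patterns only; a real pipeline would use a maintained rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a secret pattern."""
    findings: List[Tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


if __name__ == "__main__":
    # Scan files passed on the command line; exit non-zero so a CI job fails on findings.
    found = False
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="ignore") as fh:
            for lineno, rule in scan_text(fh.read()):
                print(f"{path}:{lineno}: possible {rule}")
                found = True
    if found:
        sys.exit(1)
```

Failing the pipeline on a match, rather than only logging it, is what turns a scan like this into an enforced check.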

Impact of Technologies like Serverless Computing and AI on DevOps Practices

The adoption of serverless computing and AI is fundamentally reshaping DevOps practices. These technologies offer new opportunities for automation, scalability, and efficiency, but also present new challenges.

- Serverless Computing: Serverless architectures reduce the operational overhead of managing servers, allowing DevOps Engineers to focus on application development and deployment. CI/CD pipelines need to be adapted to deploy and manage serverless functions and services, and monitoring and logging strategies must evolve to observe and troubleshoot short-lived, event-driven functions. For example, a CI/CD pipeline can deploy individual functions independently, which shortens deployments and allows faster iteration cycles.

- AI and Machine Learning: AI and ML are increasingly used to assist with tasks such as code review, testing, and deployment, reducing manual effort. AI-powered tools can also analyze log data, predict performance issues, and automate incident response. DevOps Engineers are tasked with integrating AI/ML tools into CI/CD pipelines, configuring and managing these tools, and interpreting the insights they provide. Using AI to detect anomalies in application behavior enables proactive responses, often before users are affected.
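Anomaly detection is easier to reason about starting from its simplest form. The sketch below flags metric values (for example, response times) that sit more than a few standard deviations from their trailing average. This is a plain statistical baseline, not an AI/ML model, and `rolling_zscore_anomalies`, the window size, and the threshold are assumptions for illustration.

```python
import statistics
from typing import List, Sequence


def rolling_zscore_anomalies(values: Sequence[float], window: int = 20, threshold: float = 3.0) -> List[int]:
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` population standard deviations."""
    anomalies: List[int] = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: any change at all is unusual.
            if values[i] != mean:
                anomalies.append(i)
            continue
        if abs(values[i] - mean) / stdev > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical latency samples (ms): steady traffic, then a sudden spike.
    latencies = [100.0, 102.0] * 15 + [400.0] + [101.0] * 5
    print(rolling_zscore_anomalies(latencies, window=10))  # [30]
```

ML-based tools aim to improve on baselines like this one, for example by learning daily or weekly traffic patterns that a fixed trailing window ignores.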

Skills and Knowledge for DevOps Engineers in the Coming Years

To thrive in the evolving DevOps landscape, engineers must possess a combination of technical skills, soft skills, and a continuous learning mindset.

- Cloud Computing Expertise: Deep knowledge of cloud platforms (AWS, Azure, GCP) is essential. This includes understanding cloud services, infrastructure management, and cloud-native architectures. Certification in cloud platforms is highly valuable.

- Infrastructure as Code Proficiency: Strong skills in IaC tools like Terraform, Ansible, and CloudFormation are crucial for automating infrastructure provisioning and management. Proficiency in scripting languages (Python, Bash) is also beneficial.

- Containerization and Orchestration Skills: Expertise in container technologies (Docker) and orchestration platforms (Kubernetes) is critical for deploying and managing containerized applications.

- CI/CD Pipeline Management: Ability to design, implement, and manage CI/CD pipelines using tools like Jenkins, GitLab CI, and CircleCI.

- Observability and Monitoring Tools: Familiarity with monitoring and logging tools (Prometheus, Grafana, ELK stack, Datadog) is crucial for understanding system behavior and troubleshooting issues.

- Security Awareness and Practices: Understanding of security best practices, including implementing security automation, vulnerability scanning, and compliance checks. Knowledge of DevSecOps principles is increasingly important.

- Soft Skills: Excellent communication, collaboration, and problem-solving skills are essential for working effectively in cross-functional teams. Adaptability and a continuous learning mindset are crucial for staying current with the latest technologies and trends.

Final Conclusion

The role of a DevOps Engineer is pivotal in today’s fast-paced software landscape. DevOps Engineers drive efficiency, safeguard reliability, and enable faster innovation. From infrastructure as code to continuous deployment, their expertise shapes how software is built and delivered. As technology continues to advance, the role will remain indispensable, driven by automation and close collaboration between teams.

Popular Questions

What is the typical salary range for a DevOps Engineer?

Salary ranges vary significantly with experience, location, and company size. In general, DevOps Engineers command competitive salaries, reflecting the high demand for their specialized skills.

What are the most important programming languages for a DevOps Engineer?

While not always mandatory, proficiency in scripting languages like Python, Bash, or Ruby is highly valuable for automating tasks and managing infrastructure.

How does a DevOps Engineer stay up-to-date with the latest technologies?

Continuous learning is key. DevOps Engineers often engage in online courses, attend conferences, read industry blogs, and experiment with new tools and technologies to stay current.

What’s the difference between a DevOps Engineer and a System Administrator?

While there’s overlap, a DevOps Engineer typically focuses on automation, CI/CD, and collaboration between development and operations. System Administrators are often more focused on the day-to-day maintenance and management of the infrastructure.